
Abstract

The hairline is an important feature of the human head. Hairline extraction has considerable research significance and application value in face perception systems, ergonomics, plastic surgery, and related fields. This paper proposes a method that extracts the hairline directly from a 3D color point cloud of the head. First, the point cloud is transformed into a face coordinate system built from human facial features. Second, the boundary points of the dark regions are extracted by layering and sorting the points, exploiting the abrupt change in RGB values near the hairline. Finally, noise points among the boundary points are filtered out using prior knowledge of the human face, and the hairline is fitted to the remaining boundary points. Experiments on several real 3D color point clouds of human heads demonstrate the effectiveness of the proposed method.

Key words: hairline; layering; sorting; color point cloud

Overview

As the hairline is an important feature of the human head, hairline extraction has great research significance and wide applications, such as face perception systems, plastic surgery, 3D film and television, dress-up games, and custom wig fitting. With the development of technology for acquiring 3D point cloud models, 3D hairline extraction, which allows the characteristics of the hairline to be analyzed qualitatively and quantitatively, has gradually become a research hotspot. Based on the 3D color point cloud of the human head, a direct 3D hairline extraction method is proposed. First, the point cloud is transformed into a face coordinate system built from human facial features. Second, the dark parts of the head, including the eyes, eyebrows, and hair, are extracted using a gray threshold T1 that separates hair color from skin color and is computed with the Otsu algorithm. Third, the boundary points of the dark parts are picked out: the dark parts are divided into layers by Y value, and the points in each layer are sorted by X value. For each layer, the difference $d_{j,j+1}$ in X between consecutive points $p_j$ and $p_{j+1}$ is calculated for every index j, and both points are selected if the difference exceeds a threshold T2. Visiting all layers in this way yields the boundary points. Fourth, the 3D hairline points are obtained by filtering out noise points: based on the prior knowledge that, at the same height, the eyes and eyebrows lie in front of the hairline, the boundary points of the eyes and eyebrows are deleted, and the remaining points are the 3D hairline points. Finally, the 3D hairline points are fitted to obtain the 3D hairline curve.

To speed up fitting, the hairline points are first simplified with a bounding-box method that largely preserves the shape of the hairline, and the simplified points are then fitted with a cubic B-spline curve. Several real 3D color point clouds of human heads were used to extract 3D hairlines. The experimental results show that the proposed method is feasible and effective; moreover, compared with 2D hairline extraction algorithms, it captures more information about the hairline.


1. Introduction

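The hairline is an important feature of the human head, and its extraction has considerable research significance and application value in face perception systems, ergonomics, reverse engineering, plastic surgery, and related fields. In 3D film and television and in dress-up games, 3D virtual hair is a popular topic, and extracting the 3D hairline makes simulated virtual hair more realistic [1]. In wig manufacturing and retail, 3D hairline extraction enables custom-made hairpieces. In plastic surgery, hairline extraction helps determine a hairline adjustment plan: with a 3D point cloud model, patients can preview the hairline before and after adjustment and give their opinions to the doctor, and the doctor can compare the position and shape of the original and designed hairlines, estimate the recipient area, and calculate the number of hair follicles required [2], thereby judging the difficulty of the operation and drawing up a reasonable surgical plan. In addition, storing color head point clouds in a database enables data sharing and makes it easier to collect large amounts of data from different ethnic groups. Extracting the 3D hairline and analyzing it qualitatively and quantitatively reveals more, and more specific, characteristics of the hairline, which is of great research value for face perception, ergonomics, and related fields.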

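Research on extracting facial features from 3D models [3-5] has focused mainly on facial feature points and local feature regions. Feature lines such as the hairline have received less attention, and the existing work on them is almost entirely based on 2D images; common methods rely on prior knowledge of the face, skin-color models, or color snake models [6-9]. A 2D hairline can describe hairline characteristics qualitatively but not quantitatively: it contains only the left-right width and the up-down height of the hairline, and recovering the front-back depth requires additional processing. 2D hairlines are therefore mainly suited to realistic 3D face modeling and to 2D face recognition and tracking.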

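This paper proposes a method that extracts the 3D hairline directly from a 3D color point cloud. Exploiting the large change in RGB values near the hairline and using human facial features, the method extracts hairline points by layer-by-layer sorting analysis and fits them to obtain the hairline. The accuracy of the extraction is assessed by comparison with a hairline extracted from a 2D image. Experiments on several real color point clouds verify the effectiveness and stability of the proposed method.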

2. 3D hairline extraction

2.1 Face coordinate system

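A 3D point cloud model consists of points on the surface of an object, i.e., the 3D coordinates of surface points expressed in a common coordinate system, and can be shown on a display device. Point clouds of physical objects can be acquired with 3D imaging devices such as laser 3D scanners. Because different devices define different world coordinate systems, and the object may be in different poses, the resulting point clouds occupy different positions in their coordinate systems.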

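To establish a common reference, a new face coordinate system is built and the point cloud is transformed into it, so that when the face coordinate system coincides with the world (ground) coordinate system, the head point cloud faces straight ahead. With the head facing forward, three non-collinear points lying in the same horizontal plane are selected as reference points for building the face coordinate system.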

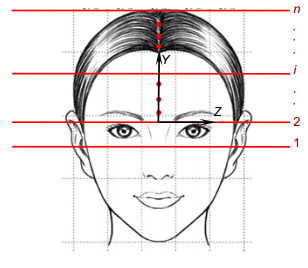

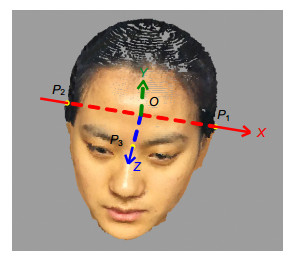

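From human facial features, when the head faces straight ahead, the highest points of the left and right ears and the glabella lie approximately in the same horizontal plane. As shown in Fig. 1, the points P1 and P2 near the highest points of the left and right ears and the glabella point P3 are used as references [10] to build the face coordinate system: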

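1) The line through P2 and P1 is the X axis, with the model's left as the positive direction;

2) The foot of the perpendicular from P3 to line P1P2 is the origin O, and line OP3 is the Z axis, with the model's front as the positive direction;

3) The Y axis follows from the right-hand rule, with the model's upward direction as positive.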

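The new face coordinate system generally does not coincide with the original one; translating and rotating the coordinate system yields the head point cloud expressed in the face coordinate system.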
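The construction in steps 1) to 3) and the subsequent translation and rotation can be sketched in plain Python as below. This is a minimal illustration under stated assumptions, not the authors' code: P1, P2, and P3 are assumed to be already available as (x, y, z) tuples (how they are picked is outside the sketch), P1 is assumed to be the point near the left ear, and the names `face_frame` and `to_face_coords` are hypothetical. If P1 and P2 are swapped, X flips and the right-hand rule makes Y point downward.

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def unit(a):
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)

def face_frame(p1, p2, p3):
    """Build the face coordinate system (hypothetical helper).

    p1, p2: points near the tops of the left and right ears (assumed order);
    p3: glabella. Returns (origin, x_axis, y_axis, z_axis) in the original frame.
    """
    x_axis = unit(sub(p1, p2))          # step 1: X along P2 -> P1, towards the model's left
    t = dot(sub(p3, p2), x_axis)        # distance from P2 to the foot of the perpendicular
    origin = (p2[0] + t * x_axis[0], p2[1] + t * x_axis[1], p2[2] + t * x_axis[2])
    z_axis = unit(sub(p3, origin))      # step 2: Z from O towards the glabella (front)
    y_axis = cross(z_axis, x_axis)      # step 3: right-hand rule, so X x Y = Z
    return origin, x_axis, y_axis, z_axis

def to_face_coords(points, frame):
    """Translate by -O, then rotate: each new coordinate is a dot product with an axis."""
    origin, x_axis, y_axis, z_axis = frame
    result = []
    for p in points:
        d = sub(p, origin)
        result.append((dot(d, x_axis), dot(d, y_axis), dot(d, z_axis)))
    return result
```

Because the three axes are orthonormal, the rotation reduces to projecting each translated point onto them, so no explicit rotation matrix or angle is needed.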

2.2 3D hairline extraction

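According to relevant surveys, the facial appearance of Chinese people has the following main characteristics: a medium skin tone, light yellow to brownish yellow; hair that is fairly black, coarse, and straight; and eyes that are mostly dark brown. Hair and eyes are therefore darker than skin, and the color changes abruptly at their boundaries, i.e., the RGB values change abruptly. Consequently, from the color point cloud of the head one can extract the parts where the RGB values change abruptly, i.e., the boundaries of the dark regions of the head, which mainly comprise the hairline and the boundaries of the eyebrows and eyes. Removing the eyebrow and eye boundaries using prior knowledge of the face then leaves the hairline.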

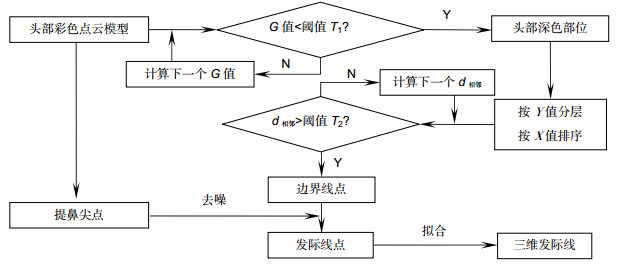

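Fig. 2 shows the flowchart of the 3D hairline extraction algorithm. For the color head point cloud in the face coordinate system, the gray value G of each point is computed and the dark regions are extracted; the dark-region points are divided into layers along the vertical direction and sorted along the horizontal direction within each layer; for each layer, the X-coordinate difference between each pair of adjacent points is computed to extract the boundary points of the dark regions; the nose tip is located using the fact that it is the frontmost point of the face; noise points are removed based on the nose tip and prior knowledge of the face to obtain the hairline points; finally, the hairline points are fitted to obtain the 3D hairline.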

2.2.1 Extracting the dark regions

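The color point cloud of the head contains two main color classes, hair color and skin color, which can be separated adaptively by their gray-level characteristics. The most commonly used adaptive threshold segmentation method is the Otsu algorithm [11, 12]: a gray-level histogram of the point cloud is built, and the histogram is searched for the gray level that maximizes the between-class variance; that gray level is the optimal gray threshold T1 of the point cloud. Since hair is darker than skin, all points whose gray value is below T1 are selected, which extracts the dark regions, mainly the scalp hair, eyebrows, and eyes. The gray value of each point is computed with the averaging method: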

$$G = \frac{r + g + b}{3} \tag{1}$$

where G is the gray value and (r, g, b) is the color of the point.
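Eq. (1) and the Otsu step can be sketched as follows. This is an illustrative sketch, not the authors' implementation: it assumes 8-bit integer colors, truncates G to an integer level from 0 to 255 to build the histogram, and the helper names are hypothetical. Otsu's threshold t splits the levels into {G ≤ t} and {G > t}, so the sketch takes the dark class as G ≤ T1, whereas the text states G < T1; the two differ by at most one gray level.

```python
def gray_levels(colors):
    """Eq. (1): G = (r + g + b) / 3, truncated to an integer level for the histogram."""
    return [(r + g + b) // 3 for r, g, b in colors]

def otsu_threshold(levels, n_levels=256):
    """Return the level t maximizing the between-class variance of {<= t} and {> t}."""
    hist = [0] * n_levels
    for g in levels:
        hist[g] += 1
    total = len(levels)
    sum_all = sum(i * h for i, h in enumerate(hist))
    w0 = 0      # number of points in the dark class (levels <= t)
    sum0 = 0    # sum of their gray levels
    best_t, best_score = 0, -1.0
    for t in range(n_levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # w0 * w1 * (mu0 - mu1)^2 is proportional to the between-class variance
        score = w0 * w1 * (mu0 - mu1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t

def select_dark(points, colors):
    """Keep points in the darker Otsu class (scalp hair, eyebrows, eyes)."""
    levels = gray_levels(colors)
    t1 = otsu_threshold(levels)
    dark = [p for p, g in zip(points, levels) if g <= t1]
    return t1, dark
```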

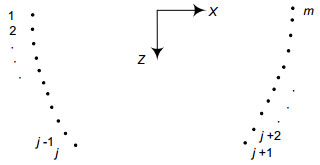

2.2.2 Extracting boundary points

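The extracted dark regions are divided into n layers by Y value, as shown in Fig. 3. The points in each layer are sorted by X value in ascending order; Fig. 4 illustrates the sorting of the m points in layer i. As the figure shows, the X difference d between most adjacent points is small; only the difference $d_{j,j+1}$ between points j and j+1 is large, and points j and j+1 are exactly the points at the boundary. Accordingly, the X difference between each pair of adjacent points is computed, and if it exceeds a threshold T2, both points are regarded as boundary points of the dark region.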
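The layering and gap test can be sketched as below, assuming the dark points are (x, y, z) tuples in the face coordinate system and that `layer_thickness` and `t2` use the same unit as the coordinates. The function name is hypothetical, and layers are assumed to be fixed-width bins on Y starting from Y = 0.

```python
import math
from collections import defaultdict

def boundary_points(dark_points, layer_thickness, t2):
    """Return dark-region boundary points found by layering on Y and sorting on X."""
    layers = defaultdict(list)
    for p in dark_points:
        layers[math.floor(p[1] / layer_thickness)].append(p)   # layer index from Y
    boundary = []
    seen = set()
    for key in sorted(layers):
        row = sorted(layers[key], key=lambda p: p[0])          # ascending X within a layer
        for a, b in zip(row, row[1:]):
            if b[0] - a[0] > t2:                               # d_{j,j+1} exceeds T2
                for p in (a, b):
                    if p not in seen:                          # a point can border two gaps
                        seen.add(p)
                        boundary.append(p)
    return boundary
```

For a layer crossing the forehead, the dark points split into a left and a right group separated by bare skin, so the two points on either side of that gap are the ones reported.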

2.2.3 Extracting hairline points

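The nose tip is the frontmost point of the face, i.e., in the face coordinate system it has the largest Z value. Compared with the hairline at the same height, the eyebrows and eyes lie further forward. The nose tip is therefore extracted first, and, taking it as a reference, the extracted eyebrow and eye parts are removed according to statistical values of facial dimensions [13, 14], which yields the hairline points.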
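The nose-tip step and the position-based removal can be sketched as below. The text does not give the statistical values from [13, 14] or the exact removal rule, so the sketch assumes a simple hypothetical rule: a boundary point is discarded as eyebrow or eye if it lies less than `max_height` above the nose tip (in Y) and less than `max_depth` behind it (in Z). Both parameters and both function names are placeholders to be set from facial statistics, not values from the paper.

```python
def nose_tip(points):
    """The nose tip is taken as the point with the largest Z in the face coordinate system."""
    return max(points, key=lambda p: p[2])

def hairline_points(boundary, head_points, max_height, max_depth):
    """Drop boundary points that are both low and far forward (hypothetical eyebrow/eye rule)."""
    tip = nose_tip(head_points)
    kept = []
    for x, y, z in boundary:
        low = y - tip[1] < max_height      # not far enough above the nose tip
        forward = tip[2] - z < max_depth   # not far enough behind the nose tip
        if not (low and forward):
            kept.append((x, y, z))
    return kept
```

Temple hairline points can sit at eye height yet survive, because they lie well behind the nose tip; this is the "same height, further forward" criterion from the text.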

2.2.4 Fitting the hairline

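Owing to imperfections in the model or the algorithm, the extracted hairline points often contain redundant data, which lowers computational efficiency and can even increase the error. The hairline points are therefore simplified before fitting, while preserving their original characteristics. Following the conventional bounding-box method [15], the points are enclosed in spatial bounding boxes of a fixed size; for each box, the averages of the coordinates of all points inside it along each axis replace those points, i.e., each box is represented by the centroid of its points.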
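A minimal sketch of this centroid-per-box simplification follows. It assumes an axis-aligned grid of cubic boxes anchored at the origin of the face coordinate system (the text does not say how the boxes are placed), with `box_size` as the box edge length; the function name is hypothetical. The output is ordered by box index, not along the hairline.

```python
import math
from collections import defaultdict

def box_centroids(points, box_size):
    """Replace all points inside each cubic box of edge box_size with their centroid."""
    boxes = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / box_size) for c in p)   # integer index of the enclosing box
        boxes[key].append(p)
    centroids = []
    for key in sorted(boxes):
        members = boxes[key]
        n = len(members)
        centroids.append(tuple(sum(p[i] for p in members) / n for i in range(3)))
    return centroids
```

Larger boxes remove more redundancy but also flatten small bends of the hairline, which is the trade-off behind choosing the box size.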

3. Experimental results and analysis

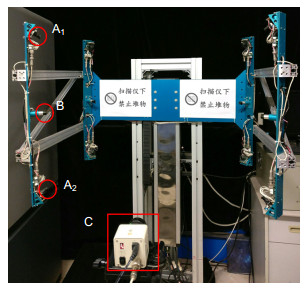

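In the experiments, 3D color point clouds of human heads were acquired with a laser 3D scanner developed in our laboratory. The scanner comprises two independent systems, a 3D system and a color system, as shown in Fig. 5, where A1 and A2 are two monochrome CCDs, B is a line laser, and C is a color CCD. The 3D system is based on laser triangulation: a one-dimensional vertical motion rail moves the scanning module (the laser and the monochrome CCDs) up and down, and the data processing module yields the 3D coordinates of the head. The color system uses the color CCD to capture a 2D color image of the head, i.e., the color information. A pre-calibrated mapping between the 3D coordinates and the 2D color image is then used to obtain the 3D color point cloud. The scanner produces dense point clouds with a resolution of about 1 mm in X and Z and about 2 mm in Y.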
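The text does not state the form of the pre-calibrated mapping between 3D coordinates and the color image. A common choice, assumed here purely for illustration, is a pinhole model given by a 3×4 projection matrix `P`; the sketch projects each 3D point into the color image and takes the nearest pixel's color. `P`, the row-major `image` layout (a list of rows of (r, g, b) tuples), and the function name are all assumptions.

```python
def colorize(points, P, image):
    """Attach the nearest-pixel (r, g, b) color to each 3D point (hypothetical helper).

    P: 3x4 projection matrix as a list of 3 rows (assumed pinhole model).
    Points that project behind the camera or outside the image are skipped.
    """
    height, width = len(image), len(image[0])
    colored = []
    for x, y, z in points:
        # homogeneous image coordinates (u*w, v*w, w)
        u_h, v_h, w = (row[0] * x + row[1] * y + row[2] * z + row[3] for row in P)
        if w <= 0:
            continue                           # behind the camera: no valid projection
        col, row_idx = round(u_h / w), round(v_h / w)
        if 0 <= row_idx < height and 0 <= col < width:
            colored.append(((x, y, z), image[row_idx][col]))
    return colored
```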

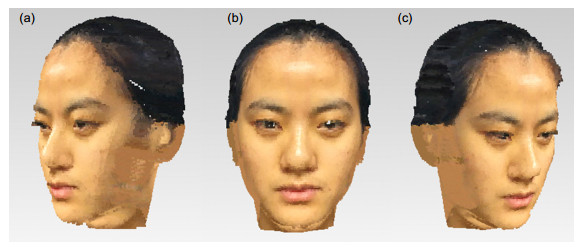

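Fig. 6 shows the color point cloud of a real human head acquired with the scanner in Fig. 5; Figs. 6(a), 6(b), and 6(c) show it viewed from the front left, the front, and the front right, respectively.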

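The point cloud was preprocessed (data simplification and compression, denoising, and hole filling) with point cloud processing software developed in our laboratory and the commercial software Geomagic, yielding a smooth color point cloud of the head.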

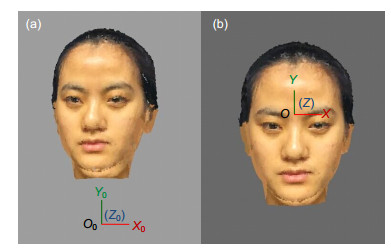

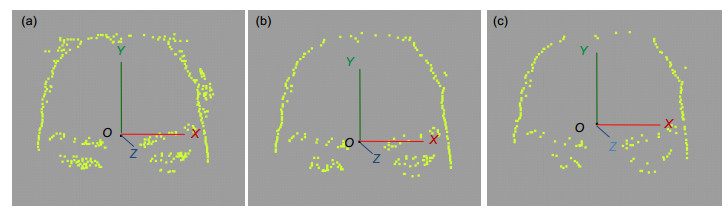

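The whole extraction process is illustrated with the acquired point cloud. The face coordinate system is established and the point cloud is transformed into it, as shown in Fig. 7: Fig. 7(a) shows the point cloud in the original coordinate system X₀Y₀Z₀-O₀, and Fig. 7(b) shows it in the new coordinate system XYZ-O.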

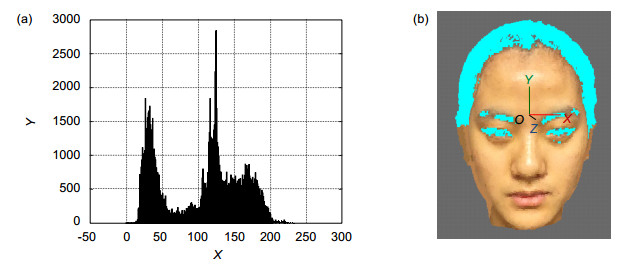

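1) Extracting the dark regions: the threshold T1 is computed for the color point cloud, and the points whose gray value is below T1 are selected, which extracts the dark parts such as the scalp hair, eyebrows, and eyes. T1 represents the gray value at the boundary between hair color and skin color, and its choice directly affects the accuracy of the extracted hairline, so the optimal threshold is obtained adaptively with Otsu's method. A gray-level histogram of the point cloud is built and the between-class variance is maximized; the corresponding gray value is 100, i.e., T1 = 100. Fig. 8 shows the result: Fig. 8(a) is the gray-level histogram, and in Fig. 8(b) the extracted dark regions are shown in cyan;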

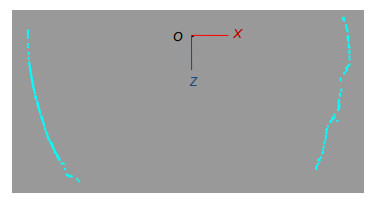

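2) Sorting the point cloud: the dark-region points are divided into layers by Y value, with a layer thickness equal to the scanner's resolution in Y, and the points in each layer are sorted by X value in ascending order. Fig. 9 shows the distribution of the scalp-hair points in one layer;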

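3) Selecting boundary points: as Fig. 9 shows, within a layer the boundary points on the two sides are far apart horizontally, i.e., their X difference is large. The X difference between each pair of adjacent points is compared, and when it exceeds the threshold T2, both points are regarded as boundary points. T2 is the threshold on the horizontal gap between the boundary points on the two sides of a layer. Based on human facial features, T2 is best set between 3 mm and 6 mm: too small a value produces stray points, and too large a value loses data. For a specific model, the best value is chosen through repeated trials. Fig. 10 shows the boundaries extracted with different values of T2: in Figs. 10(a), 10(b), and 10(c), T2 is 3 mm, 4 mm, and 6 mm, respectively. Fig. 10(b) gives the best result, with neither missing data nor excessive noise points, so T2 = 4 mm is used in this case;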

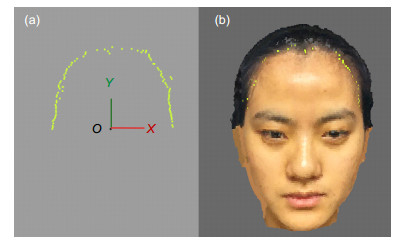

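4) Removing noise: as Fig. 10 shows, besides the boundary points of the scalp hair, the extracted boundary points also include those of the eyebrows and eyes. According to facial morphology, at the same height the eyebrows and eyes lie further forward than the hairline. The nose tip is located as the extreme (maximum-Z) point, and, using experimental statistics, noise points are removed according to their position relative to the nose tip, yielding the hairline points shown in Fig. 11: Fig. 11(a) shows the hairline points, and Fig. 11(b) shows their position on the head model.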

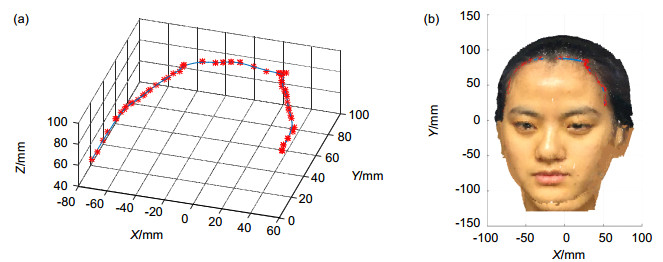

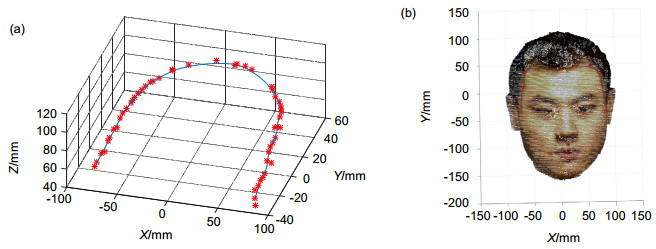

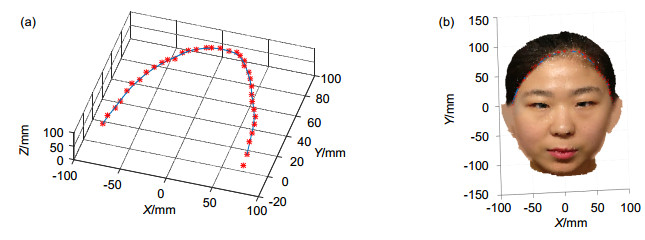

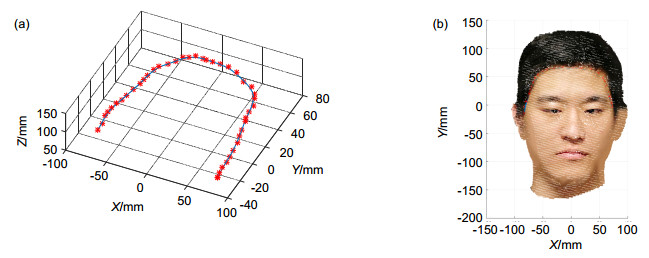

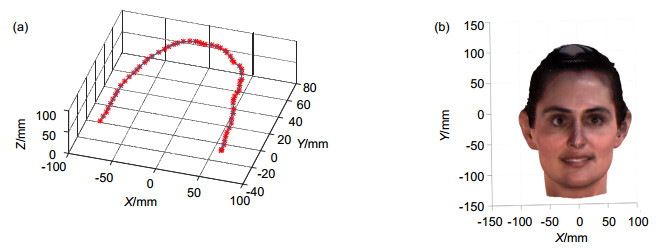

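5) Fitting: the hairline points are simplified with an improved bounding-box method using 8 mm × 8 mm × 8 mm boxes, and the hairline is fitted with a cubic B-spline curve, as shown in Fig. 12: Fig. 12(a) shows the fitted 3D hairline, and Fig. 12(b) shows its position on the head model. X, Y, and Z are the 3D coordinates of the hairline points, in mm.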
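The text does not say how the cubic B-spline is fitted (interpolation, least squares, or control-point approximation). As one simple illustration, assumed here rather than taken from the paper, the sketch orders the simplified points by their angle around the Y axis, uses them directly as control points of a uniform cubic B-spline, and samples the curve. Repeating each end point three times makes the curve start and end at the first and last points; the interior points are approximated rather than interpolated. Both function names are hypothetical.

```python
import math

def order_along_hairline(points):
    """Hypothetical ordering: sort by angle atan2(x, z) around Y, from the left (+X) to the right."""
    return sorted(points, key=lambda p: math.atan2(p[0], p[2]), reverse=True)

def cubic_bspline(control, samples_per_segment=10):
    """Sample a uniform cubic B-spline; tripled end points clamp the curve to its ends."""
    pts = [control[0]] * 2 + list(control) + [control[-1]] * 2
    curve = []
    n_segments = len(pts) - 3
    for i in range(n_segments):
        p0, p1, p2, p3 = pts[i:i + 4]
        last = i == n_segments - 1
        steps = samples_per_segment + 1 if last else samples_per_segment  # include t = 1 once
        for s in range(steps):
            t = s / samples_per_segment
            # uniform cubic B-spline basis functions (they sum to 1 for every t)
            b0 = (1 - t) ** 3 / 6
            b1 = (3 * t**3 - 6 * t**2 + 4) / 6
            b2 = (-3 * t**3 + 3 * t**2 + 3 * t + 1) / 6
            b3 = t**3 / 6
            curve.append(tuple(b0 * a + b1 * b + b2 * c + b3 * d
                               for a, b, c, d in zip(p0, p1, p2, p3)))
    return curve
```

A typical call is `cubic_bspline(order_along_hairline(simplified_points))`, where `simplified_points` stands for the box centroids. A least-squares fit would instead solve for control points that minimize the distance to the data, at the cost of a linear solve.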

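As the figure shows, the 3D hairline extracted by the algorithm is fairly smooth, and its position on the head model is broadly consistent with the actual hairline.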

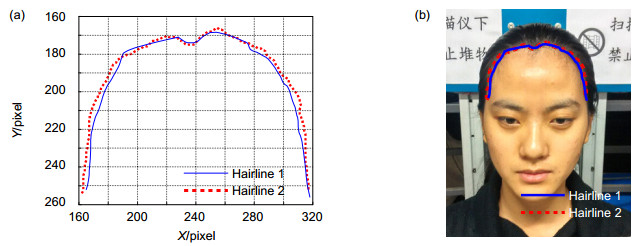

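Because the hairline extraction methods found in the literature are all based on 2D images, the proposed method was compared with them by projecting the extracted 3D hairline onto the XY image plane to obtain the projected hairline hairline1. The 2D hairline hairline2 was extracted directly from the 2D color image using the elliptical skin-color model [18]. Fig. 13 compares hairline1 and hairline2: Fig. 13(a) shows the fitted hairlines, where the solid line is the projection hairline1, the dashed line is hairline2, and X and Y are 2D pixel coordinates in pixels; Fig. 13(b) shows the 2D hairlines on the corresponding color photograph of the head.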

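Fig. 13 shows that the projected 2D hairline and the directly extracted 2D hairline follow the same overall trend, and their positions on the photograph agree with the actual hairline, indicating that the extracted hairline is basically accurate and the proposed method is effective.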

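Three further color point clouds (two men and one woman) were acquired with our laboratory's laser 3D scanner, and their 3D hairlines were extracted directly with the proposed method. Figs. 14, 15, and 16 show the results for models 1, 2, and 3, respectively: (a) the fitted 3D hairline and (b) its position on the head model. The thresholds were T1 = 41 and T2 = 4 mm for model 1, T1 = 72 and T2 = 4 mm for model 2, and T1 = 72 and T2 = 4 mm for model 3.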

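To further verify the effectiveness and stability of the proposed method, an open 3D color point cloud [19] was downloaded from the Cyberware website, denoted model 4, and its 3D hairline was extracted directly with the proposed method. This model was acquired with Cyberware's 3030 color 3D scanner, with a resolution of about 1 mm in X, Y, and Z. Fig. 17 shows the result for model 4: Fig. 17(a) shows the fitted 3D hairline, and Fig. 17(b) shows its position on the head model. Here T1 = 58 and T2 = 4 mm.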

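These figures show that, for different 3D color point clouds, the proposed method extracts the 3D hairline fairly accurately and its position on the head model is broadly consistent with the actual hairline, indicating that the method is stable and effective.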

4. Conclusion

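A method for extracting the 3D hairline directly from a color point cloud of the human head has been proposed. The algorithm is simple, easy to implement, and produces a fairly smooth hairline. According to an analysis of facial features across the world's populations [20], the vast majority of people have hair that differs markedly from their skin in color and is darker than it, so the proposed 3D extraction method can extract the 3D hairline quickly and accurately and is widely applicable.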


References

[1] Liu Haizhou. Research and application on reusability of 3D hair[D]. Chengdu: Southwest Jiaotong University, 2014: 1–5. (in Chinese)

[2] Li Penglong. The clinical effect of reengineering hairline with autologous hair transplantation[J]. Health Way, 2015, 14(9): 79. (in Chinese) http://www.cqvip.com/QK/88424X/201509/74759076504849534857485648.html

[3] Smeets D, Keustermans J, Vandermeulen D, et al. meshSIFT: Local surface features for 3D face recognition under expression variations and partial data[J]. Computer Vision and Image Understanding, 2013, 117(2): 158–169. doi: 10.1016/j.cviu.2012.10.002

[4] Lee J, Ku B, Da Silveira A C, et al. Three-dimensional analysis of facial asymmetry of healthy Hispanic Caucasian children[C]. Proceedings of the 3rd International Conference on 3D Body Scanning Technologies, Lugano, Switzerland, 2012: 133–138.

[5] Zou L, Hao P, McCarthy M. Establishment of reference frame for sequential facial biometrics[C]. Proceedings of the 5th International Conference on 3D Body Scanning Technologies, Lugano, Switzerland, 2014: 40–45.

[6] Goto T, Lee W S, Magnenat-Thalmann N. Facial feature extraction for quick 3D face modeling[J]. Signal Processing: Image Communication, 2002, 17(3): 243–259. doi: 10.1016/S0923-5965(01)00021-2

[7] Liu Gang, Shen Yehu, Hu Jingjun, et al. Face contour extraction algorithm based on skin luma and depth information[J]. Journal of Southern Yangtze University (Natural Science Edition), 2006, 5(5): 513–517. (in Chinese) http://www.wanfangdata.com.cn/details/detail.do?_type=perio&id=jiangndxxb200605003

[8] Xu Congdong, Luo Jiarong, Shu Shuangbao. Face recognition based on RBPNN of Mahalanobis distance map for skin color information[J]. Opto-Electronic Engineering, 2008, 35(3): 131–135. (in Chinese) http://www.cqvip.com/QK/90982A/200803/26707708.html

[9] Seo K H, Kim W, Oh C, et al. Face detection and facial feature extraction using color snake[C]// Proceedings of the 2002 IEEE International Symposium on Industrial Electronics, L'Aquila, Italy, 2002: 457–462.

[10] Othman A, El Ghoul O. A novel approach for 3D head segmentation and facial feature points extraction[C]// Proceedings of the 2013 International Conference on Electrical Engineering and Software Applications (ICEESA). Hammamet, 2013: 1–6.

[11] Tang Lulu, Zhang Qican, Hu Song. An improved algorithm for Canny edge detection with adaptive threshold[J]. Opto-Electronic Engineering, 2011, 38(5): 127–132. (in Chinese) http://www.docin.com/p-484167071.html

[12] Zhu Lijun, Yuan Weiqi. An eyelash extraction method based on improved ant colony algorithm[J]. Opto-Electronic Engineering, 2016, 43(6): 44–50. (in Chinese) http://www.wanfangdata.com.cn/details/detail.do?_type=perio&id=gdgc201606008

[13] Lv Daozhong, Wan Rongchun. The research about the specificity of comprehensive measurement features on face[J]. Journal of Jiangsu Public Security College, 2000, 14(2): 117–121. (in Chinese)

[14] Horprasert T, Yacoob Y, Davis L S. Computing 3-D head orientation from a monocular image sequence[C]// Proceedings of the 2nd International Conference on Automatic Face and Gesture Recognition. Killington, VT, 1996: 242–247.

[15] Zhang Mengze. A 3D data reduction and smoothing algorithm for point clouds[D]. Qingdao: Ocean University of China, 2014: 18–20. (in Chinese)

[16] Chougule V N, Mulay A V, Ahuja B B. Methodologies for development of patient specific bone models from human body CT scans[J]. Journal of the Institution of Engineers (India): Series C, 2016. doi: 10.1007/s40032-016-0301-6. (in press)

[17] Mittal R C, Rohila R. Numerical simulation of reaction-diffusion systems by modified cubic B-spline differential quadrature method[J]. Chaos, Solitons & Fractals, 2016, 92: 9–19. https://www.sciencedirect.com/science/article/pii/S0960077916302594

[18] Yuan Min, Yao Heng, Liu Jian. Dynamic gesture segmentation combining three-frame difference method and skin-color elliptic boundary model[J]. Opto-Electronic Engineering, 2016, 43(6): 51–56. (in Chinese) http://www.cqvip.com/QK/90982A/201606/669164765.html

[19] Addleman F. Cyberware: Head & Face Color 3D Samples[DB/OL]. [2015-12-02]. http://cyberware.com/.

[20] Li Hongchen, Yang Xiuyan. The comparison in the characteristics of the four races[J]. Biology Teaching, 2012, 37(5): 54. (in Chinese) http://d.wanfangdata.com.cn/Periodical_swxjx201205026.aspx
