Dual view fusion detection method for event camera detection of unmanned aerial vehicles

-

摘要

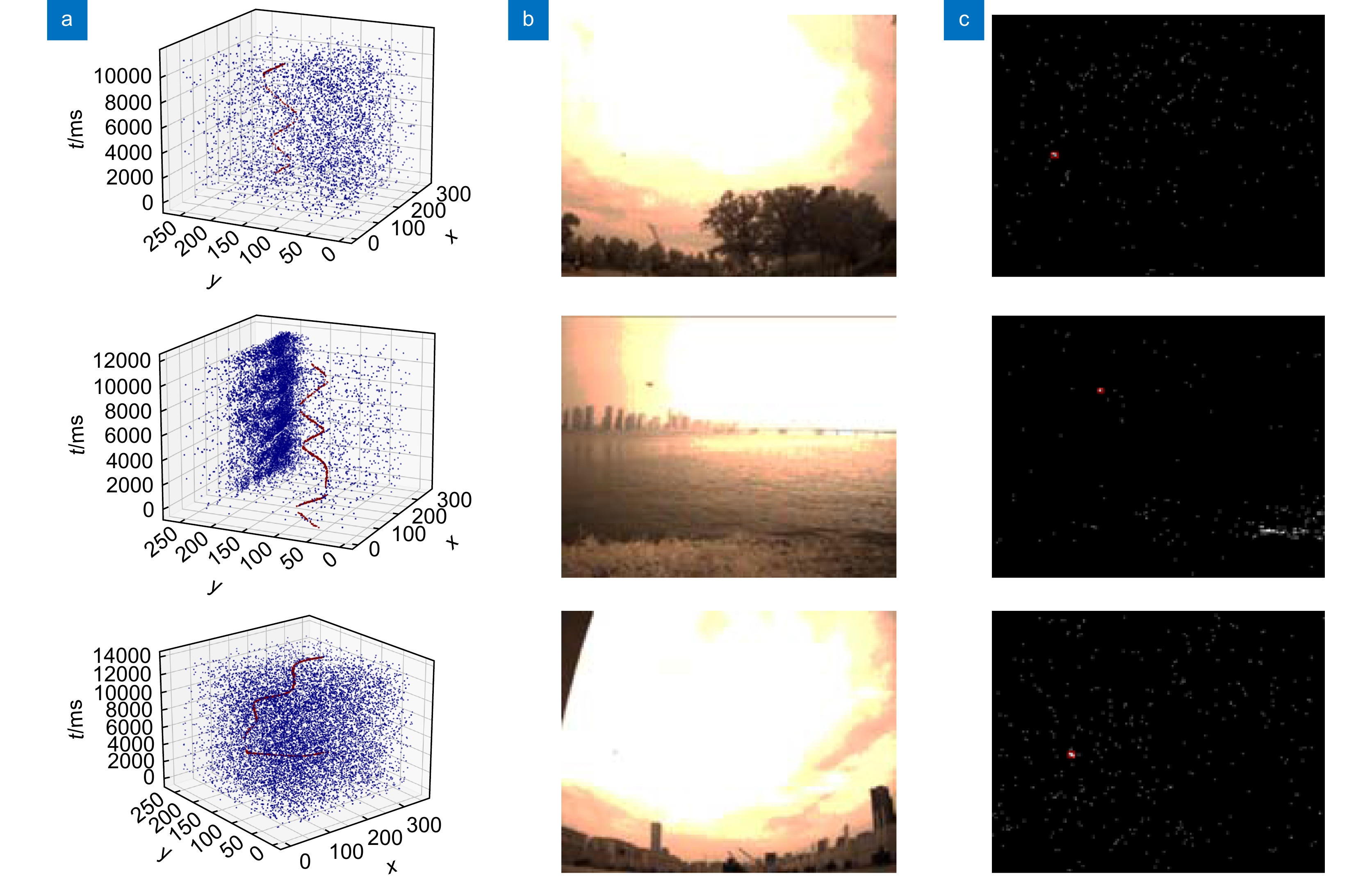

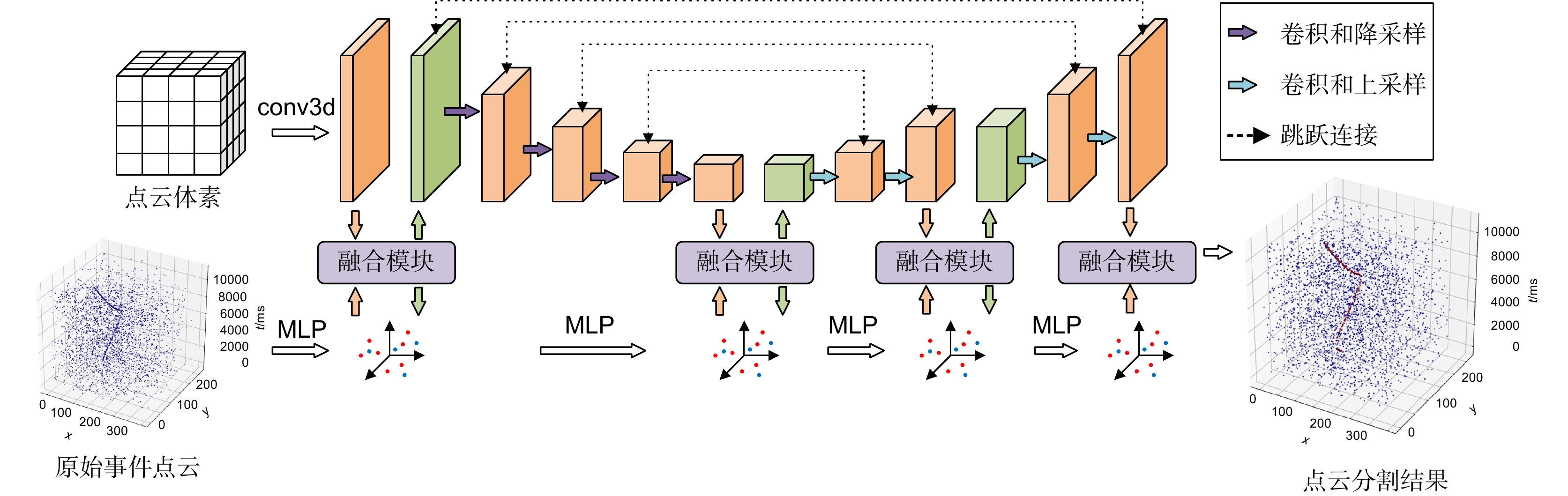

随着低空无人机的广泛应用,实时检测此类低慢小目标对维护公共安全至关重要。传统相机以固定曝光时间成像获得图像帧,在光照变化时难以自适应,导致在强光照等场景下存在探测盲区。事件相机作为一种新型的神经形态传感器,逐像素感知外部亮度变化差异,在复杂光照条件下依然可以生成高频稀疏的事件数据。针对基于图像的检测方法难以适应事件相机稀疏不规则数据的难题,本文将二维目标检测任务建模为三维时空点云中的语义分割任务,提出了一种基于双视图融合的无人机目标分割模型。基于事件相机采集真实的无人机探测数据集,实验结果表明,所提方法在保证实时性的前提下具有最优的检测性能,实现了无人机目标的稳定检测。

Abstract

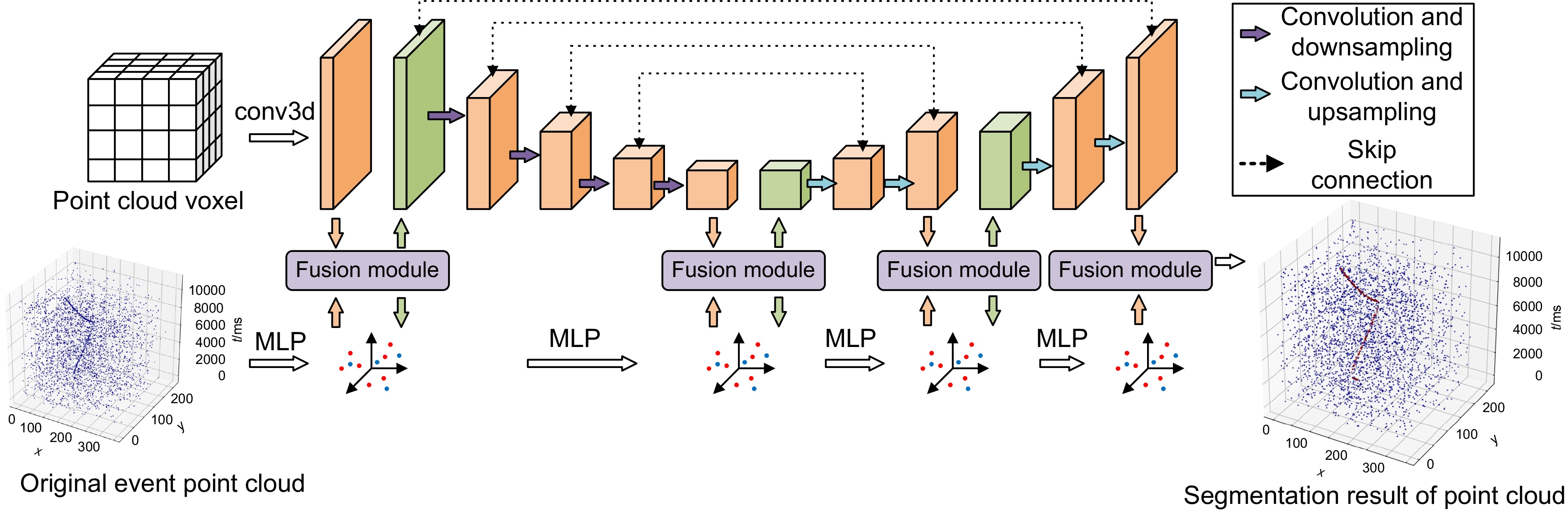

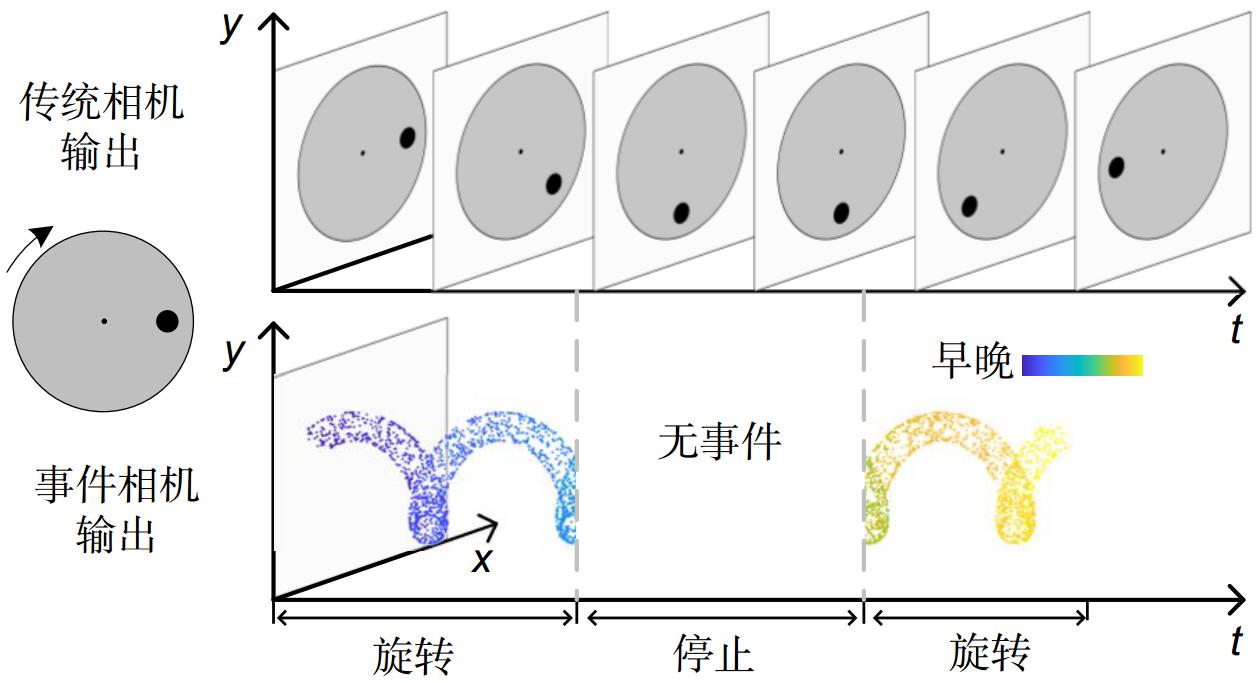

With the widespread use of low-altitude drones, real-time detection of such low, slow, and small targets is crucial for maintaining public safety. Conventional cameras capture image frames with a fixed exposure time and adapt poorly to changing illumination, which leaves detection blind spots in scenes such as strong light. Event cameras, a new type of neuromorphic sensor, sense brightness changes independently at each pixel and can still generate high-frequency, sparse event data under complex lighting conditions. Because image-based detection methods adapt poorly to the sparse, irregular data produced by event cameras, this paper models the two-dimensional object detection task as a semantic segmentation task in a three-dimensional spatiotemporal point cloud and proposes a drone segmentation model based on dual-view fusion. A real-world drone detection dataset was collected with an event camera, and experimental results on it show that the proposed method achieves the best detection performance among the compared methods while maintaining real-time operation, enabling stable detection of drone targets.


Overview

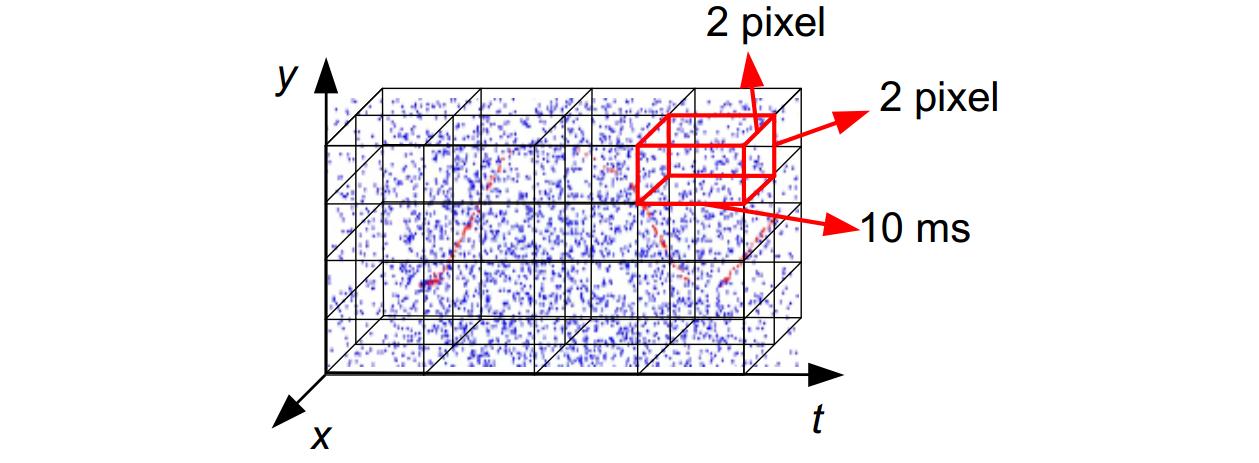

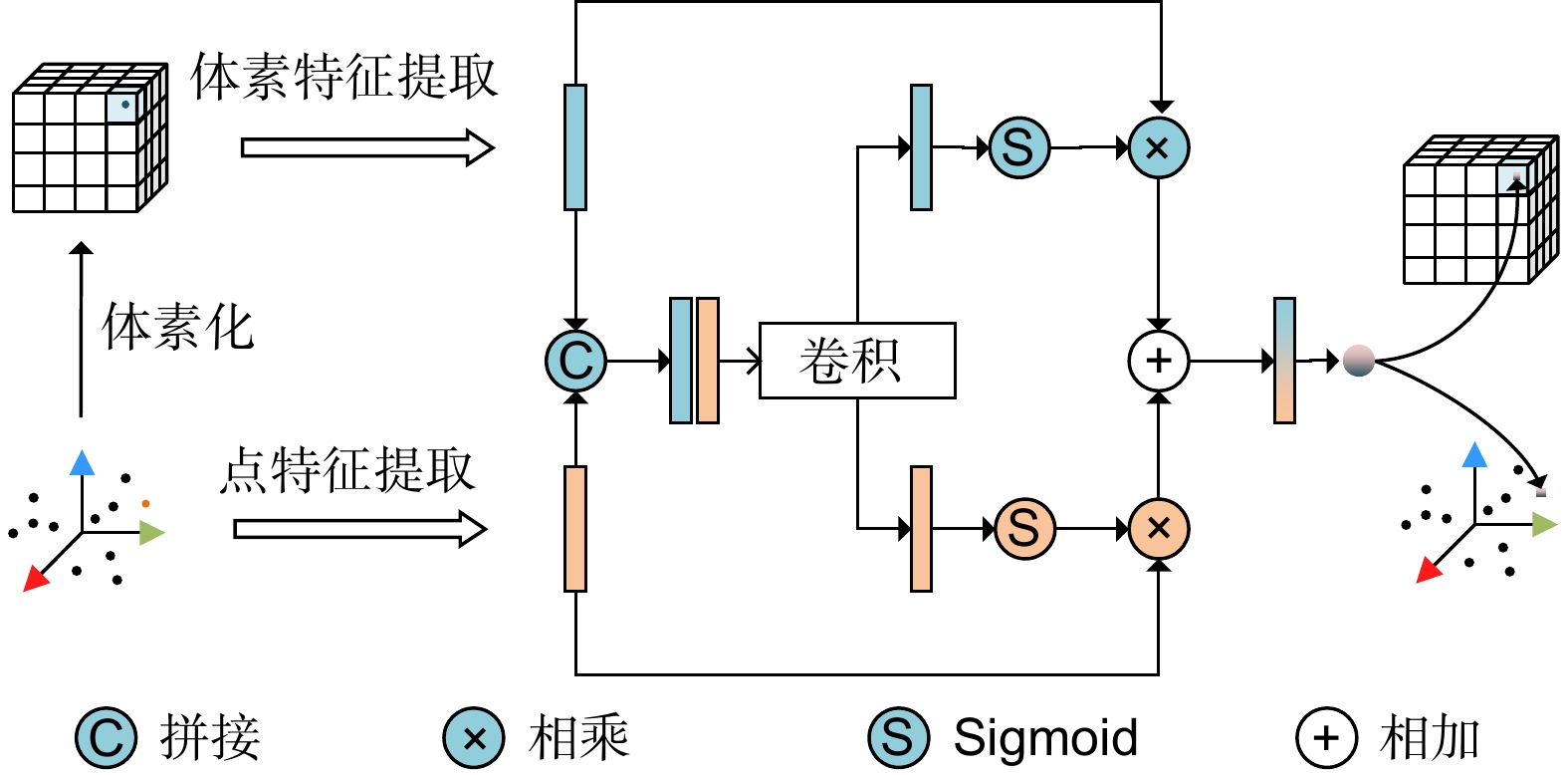

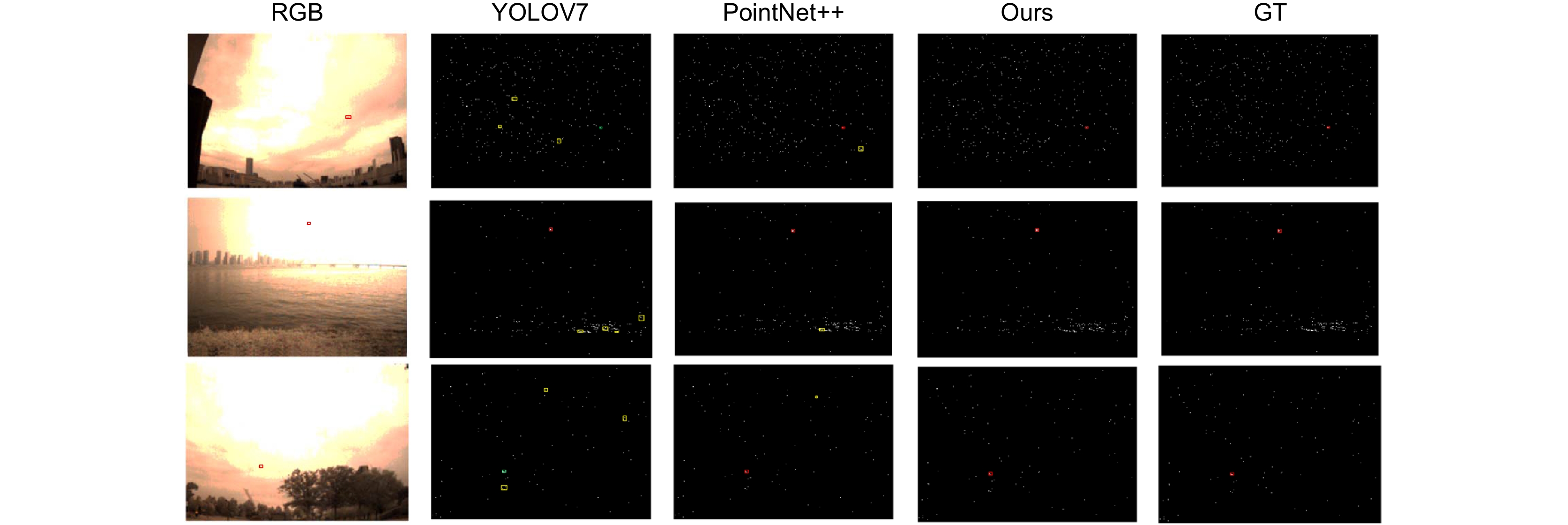

With the proliferation of UAVs across industries, concerns over airspace management, public safety, and privacy have escalated. Traditional detection methods, such as acoustic, radio, and radar detection, are often costly and complex, which limits their applicability. In contrast, machine-vision techniques, particularly those based on event cameras, offer a cost-effective and adaptable solution. Event cameras, also known as dynamic vision sensors (DVS), capture visual information as asynchronous events, each containing the pixel location, timestamp, and polarity of an intensity change. This address-event representation (AER) mechanism enables efficient processing of high-speed, high-dynamic-range visual data.

Traditional cameras capture image frames with a fixed exposure time, which makes it difficult to adapt to changes in lighting and leaves detection blind spots in strong light and similar scenes. Event cameras perform well in these situations. However, existing object detection algorithms are designed for synchronous data such as image frames and cannot directly handle asynchronous data such as event streams.

The proposed method treats UAV detection as a 3D point cloud segmentation task, leveraging the unique properties of event streams. This paper introduces a dual-branch network, PVNet, which integrates a voxel feature extraction branch and a point feature extraction branch. The voxel branch, which resembles a U-Net architecture, extracts contextual information through downsampling and upsampling stages. The point branch uses multi-layer perceptrons (MLPs) to extract point-wise features. The two branches interact and fuse at multiple stages, enhancing the feature representation. A key innovation is the gated fusion mechanism, which selectively aggregates features from both branches and mitigates the impact of non-informative features. Ablation studies show that this approach outperforms fusion by simple addition or concatenation.
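To make the point-cloud view of an event stream concrete, the following minimal sketch turns AER events (pixel location, timestamp, polarity) into normalized (x, y, t) points within one time window. The `Event` type, the `events_to_points` helper, the 346 × 260 sensor size, and the 20 ms window are illustrative assumptions, not details taken from the paper.

```python
from typing import NamedTuple


class Event(NamedTuple):
    x: int         # pixel column
    y: int         # pixel row
    t_us: int      # timestamp in microseconds
    polarity: int  # +1 for a brightness increase, -1 for a decrease


def events_to_points(events, t_start_us, window_us, width, height):
    """Return normalized (x, y, t) points for the events inside one time window."""
    points = []
    for ev in events:
        if t_start_us <= ev.t_us < t_start_us + window_us:
            points.append((
                ev.x / (width - 1),                  # x scaled to [0, 1]
                ev.y / (height - 1),                 # y scaled to [0, 1]
                (ev.t_us - t_start_us) / window_us,  # t scaled to [0, 1)
            ))
    return points


# Hypothetical events; the sensor size and window length are assumptions.
events = [
    Event(10, 20, 1_000, +1),
    Event(11, 20, 5_000, -1),
    Event(200, 100, 25_000, +1),  # falls outside the 20 ms window
]
cloud = events_to_points(events, t_start_us=0, window_us=20_000, width=346, height=260)
print(len(cloud), cloud[1])
```

Each event becomes one point in a space-time volume, so a segmentation network can assign a label to every event rather than to every pixel of a frame.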
The method is evaluated on a custom dataset, Ev-UAV, containing 60 event camera recordings of UAV flights under various conditions. Evaluation metrics include intersection over union (IoU) for segmentation accuracy and mean average precision (mAP) for detection performance. Compared with frame-based methods such as YOLOv7, SSD, and Fast R-CNN, event-based methods such as RVT and SAST, and point cloud-based methods such as PointNet and PointNet++, the proposed PVNet achieves superior performance, with an mAP of 69.5% and an IoU of 70.3%, while maintaining low latency. By modelling UAV detection as a 3D point cloud segmentation task and leveraging the strengths of event cameras, PVNet effectively addresses the challenge of detecting small, feature-scarce UAVs in event data. The results demonstrate the potential of event cameras and of the proposed fusion mechanism for real-time, accurate UAV detection in complex environments.
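As a reminder of how the IoU metric mentioned above is computed for a segmentation result, here is a small sketch over per-event binary labels. The `segmentation_iou` helper and the label convention (1 for a UAV event, 0 for background) are assumptions made for illustration; the paper's exact evaluation protocol is not described in this section.

```python
def segmentation_iou(pred, target):
    """IoU between two binary label sequences (1 = UAV event, 0 = background)."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    # Events labelled UAV in both sequences.
    intersection = sum(1 for p, t in zip(pred, target) if p == 1 and t == 1)
    # Events labelled UAV in at least one sequence.
    union = sum(1 for p, t in zip(pred, target) if p == 1 or t == 1)
    # Convention assumed here: two empty masks count as perfect agreement.
    return 1.0 if union == 0 else intersection / union


pred = [1, 1, 0, 0, 1, 0]    # hypothetical predicted labels
target = [1, 0, 0, 0, 1, 1]  # hypothetical ground-truth labels
iou = segmentation_iou(pred, target)
print(iou)
```

In this toy case two events are labelled UAV in both sequences and four in at least one, so the IoU is 2 / 4.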

Table 1. Experimental results of different algorithms on the Ev-UAV dataset

| Method | Framework | $P_{\mathrm{d}}$/% | $P_{\mathrm{f}}$/% | AP/% | IoU/% | Inference time/ms |
|---|---|---|---|---|---|---|
| SSD | Frame | 88.3 (+8.2) | 13.3 (−10.1) | 62.7 (+6.8) | - | 18.7 |
| Fast R-CNN | Frame | 88.7 (+7.8) | 13.5 (−10.3) | 63.2 (+6.3) | - | 19.5 |
| YOLOv7 | Frame | 89.8 (+6.7) | 10.3 (−7.1) | 65.3 (+4.2) | - | 16.5 |
| RVT | Frame | 90.3 (+6.2) | 10.1 (−6.9) | 65.7 (+3.8) | - | 10.5 |
| SAST | Frame | 90.1 (+6.4) | 10.5 (−7.3) | 65.5 (+4.0) | - | 13.2 |
| DNA-Net | Frame | 90.5 (+6.0) | 9.91 (−6.7) | 65.9 (+3.6) | - | 20.3 |
| OSCAR | Frame | 90.3 (+6.2) | 10.2 (−7.0) | 66.3 (+3.2) | - | 25.3 |
| PointNet | Point cloud | 91.3 (+5.2) | 8.35 (−5.1) | 66.1 (+3.4) | 66.7 (+3.6) | 19.8 |
| PointNet++ | Point cloud | 93.5 (+3.0) | 7.33 (−4.1) | 67.3 (+2.2) | 67.5 (+2.8) | 25.3 |
| Ours | Point cloud | 96.5 | 3.21 | 69.5 | 70.3 | 15.2 |

Values in parentheses give the difference between the proposed method and the method in that row.

Table 2. The impact of different views. V and V+ denote different voxel resolutions: V is 4 pixel × 4 pixel × 20 ms, and V+ is 2 pixel × 2 pixel × 10 ms

| Method | $P_{\mathrm{d}}$/% | $P_{\mathrm{f}}$/% | AP/% | IoU/% | Inference time/ms |
|---|---|---|---|---|---|
| P | 89.6 (+6.9) | 9.86 (−6.65) | 66.3 (+3.2) | 63.2 (+7.1) | 7.2 |
| V | 90.6 (+5.9) | 8.98 (−5.77) | 65.7 (+3.8) | 63.8 (+6.5) | 10.2 |
| V+ | 92.5 (+4.0) | 5.33 (−2.12) | 66.8 (+2.7) | 64.7 (+5.6) | 12.3 |
| PV | 94.3 (+2.2) | 4.32 (−1.11) | 67.2 (+2.3) | 69.2 (+1.1) | 19.2 |
| PV+ | 96.5 | 3.21 | 69.5 | 70.3 | 20.3 |
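The two voxel resolutions in Table 2 can be illustrated with a short sketch that bins events into space-time voxels by integer division. The `voxelize` helper and the sample events are hypothetical; only the bin sizes (4 pixel × 4 pixel × 20 ms for V, 2 pixel × 2 pixel × 10 ms for V+) come from the table caption, and the paper's actual voxelization code is not shown in this section.

```python
from collections import defaultdict


def voxelize(events, pixel_bin, time_bin_us):
    """Group (x, y, t_us) events by voxel index (x // bin, y // bin, t // bin)."""
    voxels = defaultdict(list)
    for x, y, t_us in events:
        key = (x // pixel_bin, y // pixel_bin, t_us // time_bin_us)
        voxels[key].append((x, y, t_us))
    return dict(voxels)


# Hypothetical events as (x, y, timestamp in microseconds).
events = [(0, 0, 0), (3, 3, 19_999), (2, 1, 12_000), (4, 0, 5_000)]

coarse = voxelize(events, pixel_bin=4, time_bin_us=20_000)  # resolution V
fine = voxelize(events, pixel_bin=2, time_bin_us=10_000)    # resolution V+

print(len(coarse), len(fine))
```

With the coarse grid, three of the four sample events share one voxel, whereas the fine grid keeps all four apart. This is the usual trade-off: finer voxels preserve more detail of small targets at the cost of more voxels to process, which is consistent with the longer inference times reported for V+ and PV+.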

Table 3. The effectiveness of different fusion methods

| Method | $P_{\mathrm{d}}$/% | $P_{\mathrm{f}}$/% | AP/% | IoU/% |
|---|---|---|---|---|
| Add | 90.2 (+6.3) | 8.63 (−5.42) | 67.3 (+2.2) | 66.8 (+3.7) |
| Multi | 91.8 (+4.7) | 7.68 (−4.47) | 66.9 (+2.6) | 64.2 (+6.1) |
| Concat | 93.8 (+2.7) | 6.38 (−3.17) | 67.6 (+1.9) | 65.3 (+5.0) |
| Ours | 96.5 | 3.21 | 69.5 | 70.3 |
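The fusion variants compared in Table 3 can be sketched as follows for a single event with a point-branch feature vector and a voxel-branch feature vector. The gated variant is only one plausible formulation, assumed here for illustration: a per-channel sigmoid gate that blends the two features. The function names, the gate parameters `w_p`, `w_v`, and `bias`, and the feature values are all hypothetical; the paper's actual gate design is not given in this section.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def fuse_add(p, v):
    """Element-wise addition of point and voxel features."""
    return [a + b for a, b in zip(p, v)]


def fuse_multiply(p, v):
    """Element-wise multiplication of point and voxel features."""
    return [a * b for a, b in zip(p, v)]


def fuse_concat(p, v):
    """Channel-wise concatenation; doubles the feature dimension."""
    return list(p) + list(v)


def fuse_gated(p, v, w_p, w_v, bias):
    """Assumed gate: g = sigmoid(w_p*p + w_v*v + bias); output = g*p + (1-g)*v."""
    fused = []
    for a, b, wp, wv, c in zip(p, v, w_p, w_v, bias):
        g = sigmoid(wp * a + wv * b + c)  # gate value in (0, 1) per channel
        fused.append(g * a + (1.0 - g) * b)
    return fused


point_feat = [0.8, 0.1]  # hypothetical point-branch features
voxel_feat = [0.2, 0.9]  # hypothetical voxel-branch features

# With zero weights and bias the gate is 0.5, so both branches contribute equally.
gated = fuse_gated(point_feat, voxel_feat, w_p=[0.0, 0.0], w_v=[0.0, 0.0], bias=[0.0, 0.0])
print(gated)
```

In a trained network the gate parameters would be learned, which lets the model down-weight whichever branch is uninformative for a given channel. Addition, multiplication, and concatenation treat both branches the same way regardless of content.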
