Camera-aware unsupervised person re-identification method guided by pseudo-label refinement

Abstract

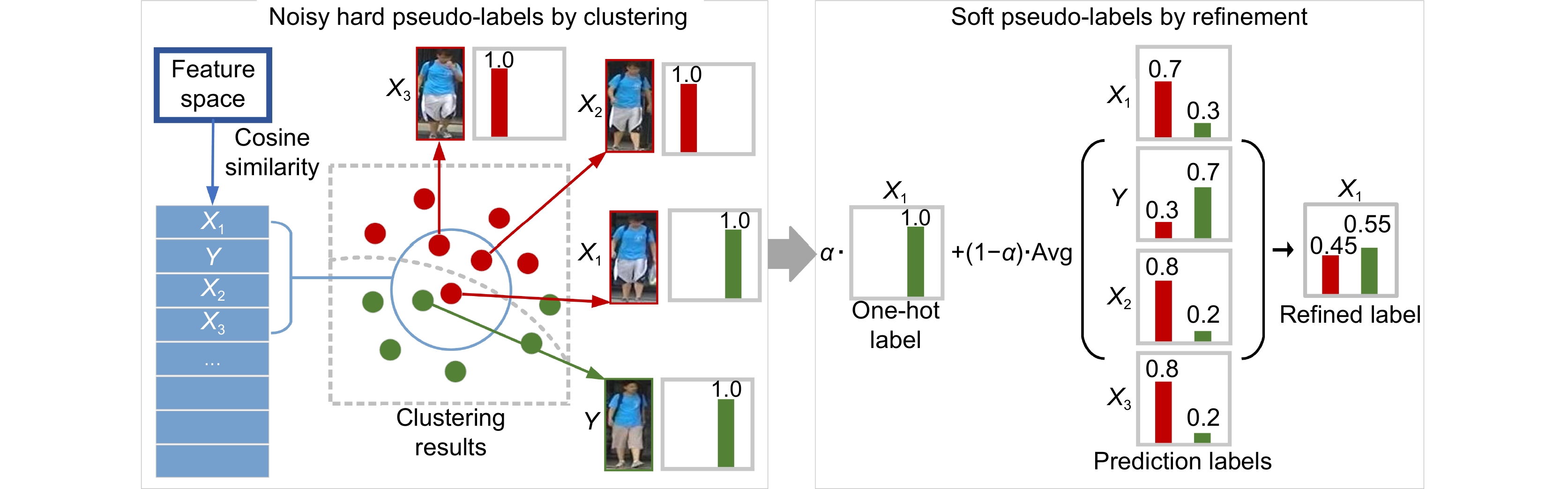

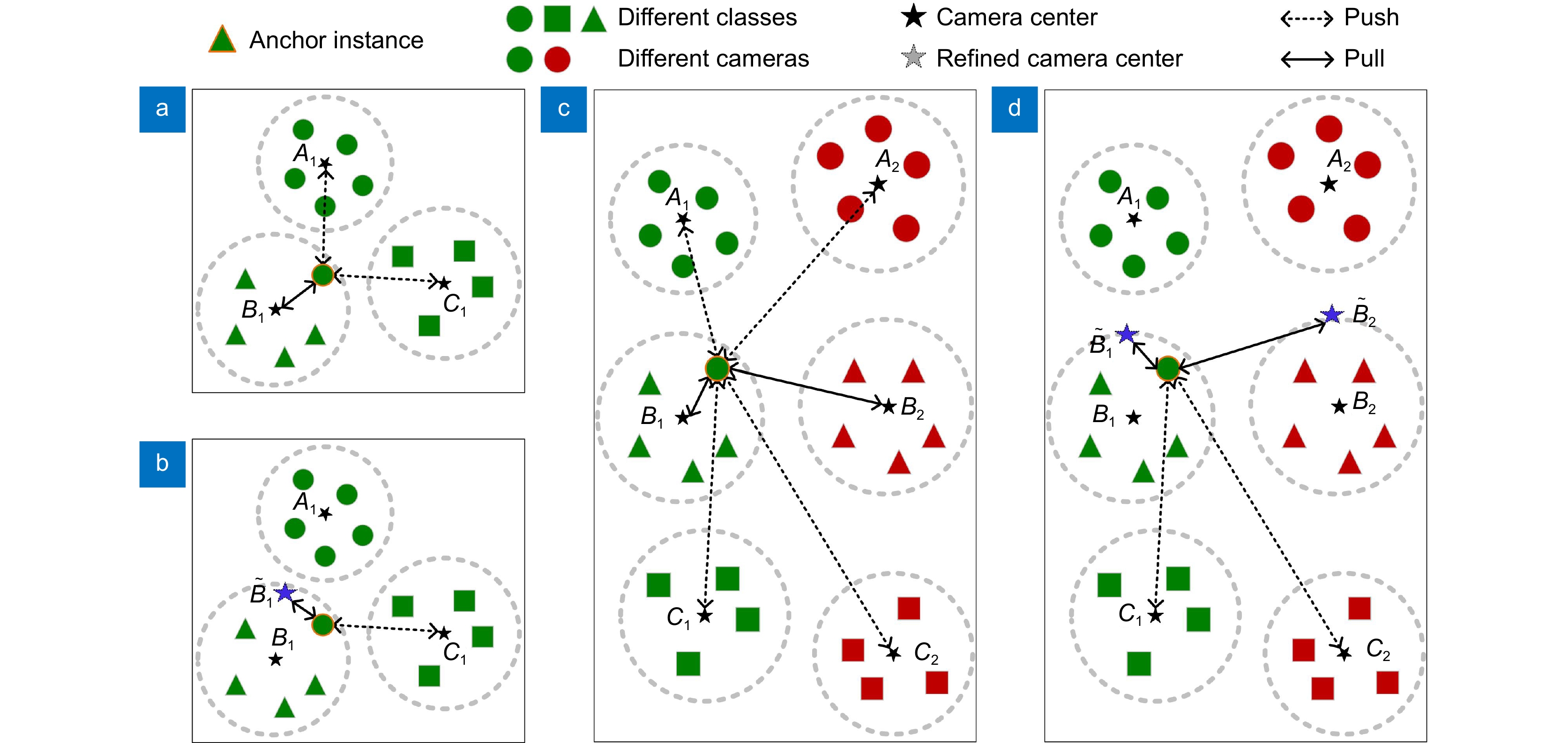

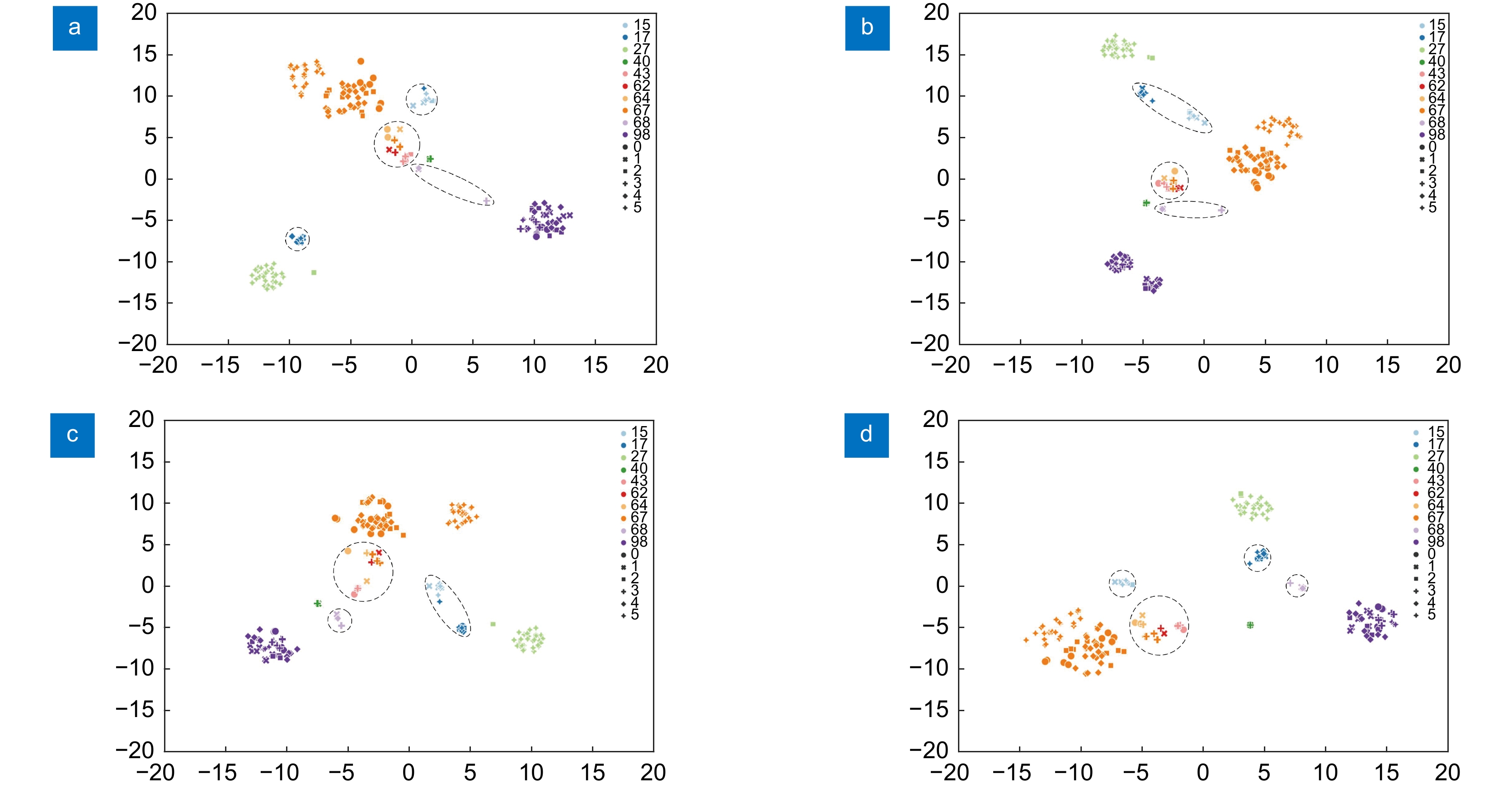

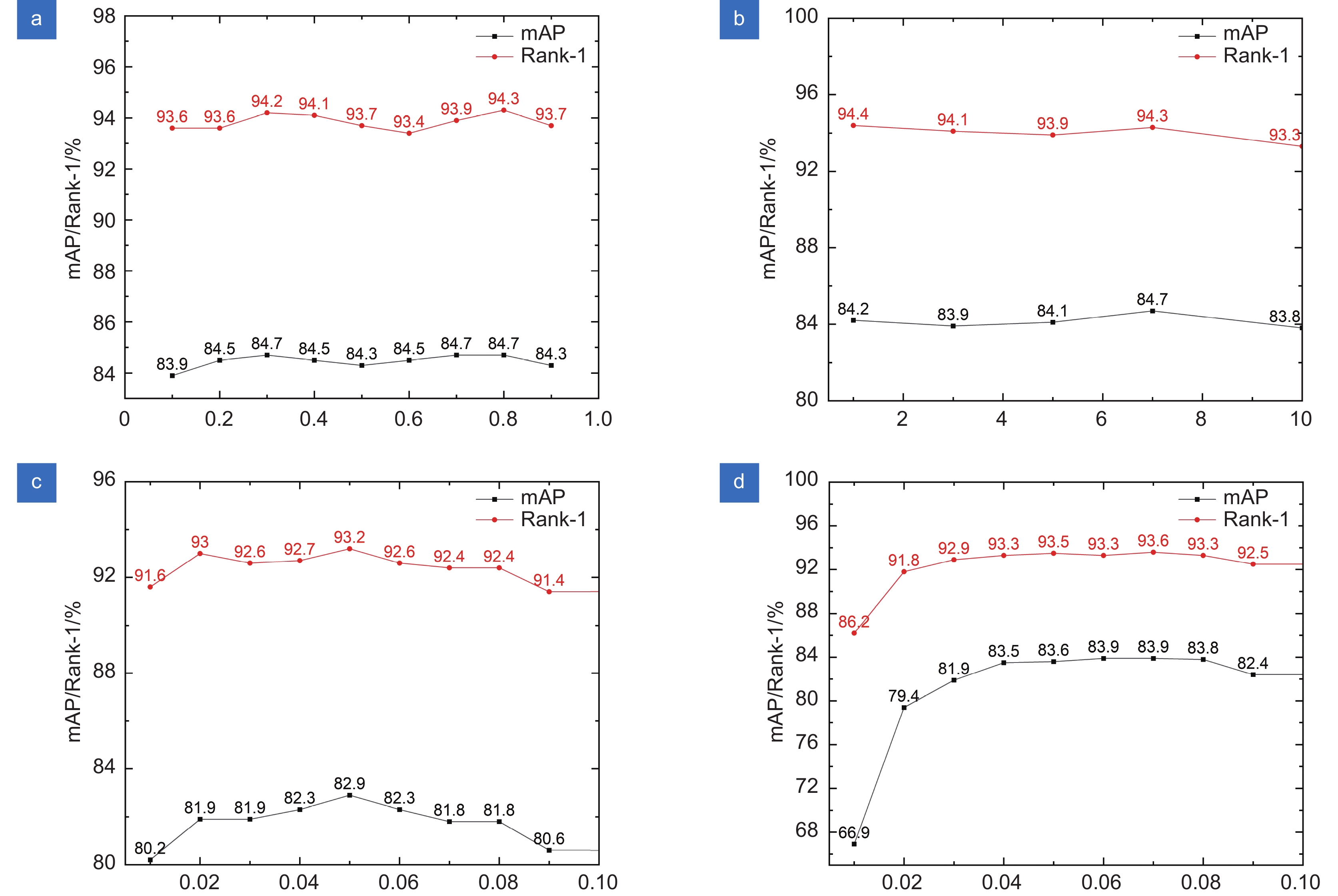

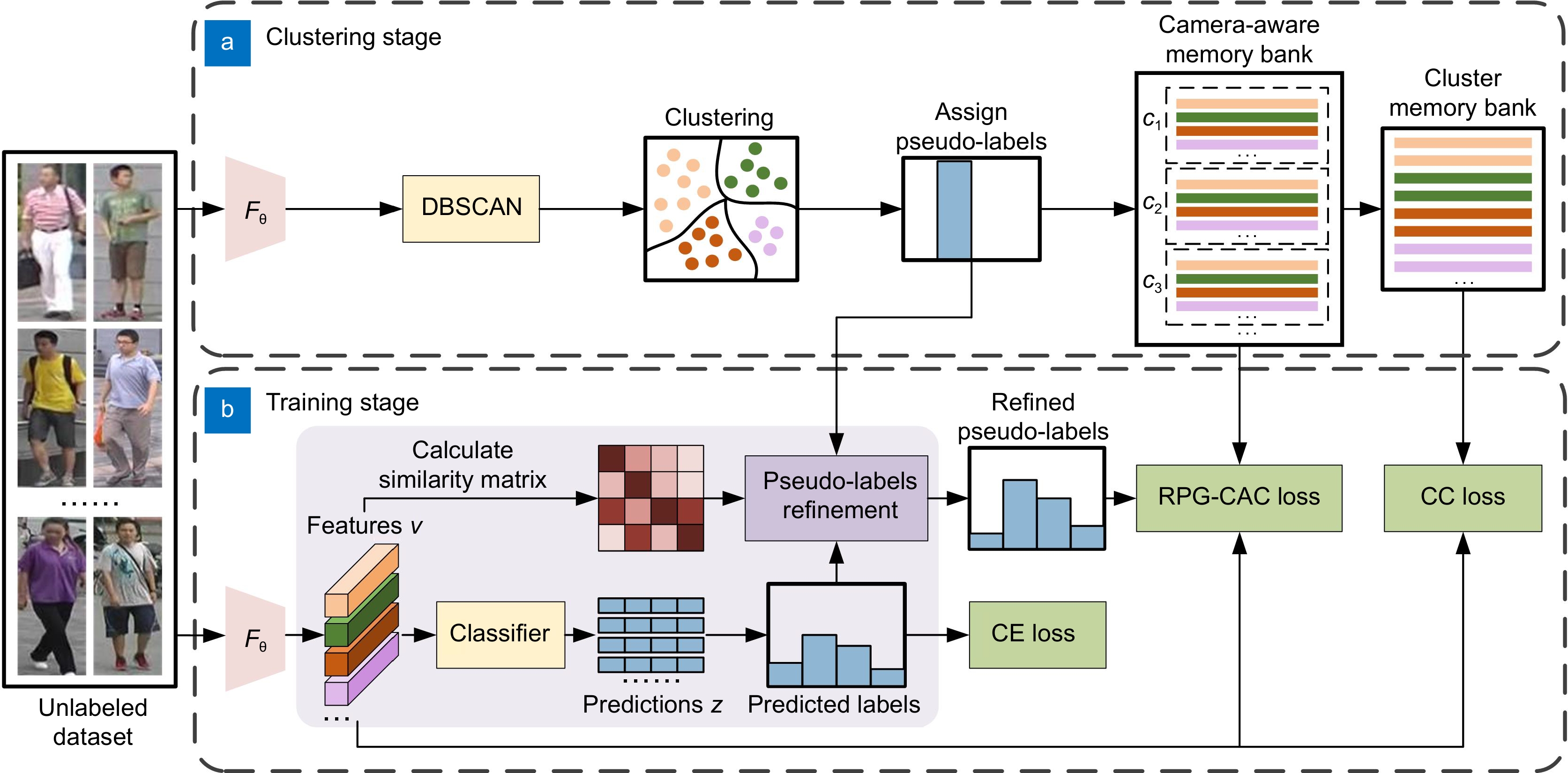

Unsupervised person re-identification has attracted increasing attention because of its broad practical applications. Most clustering-based contrastive learning methods treat each cluster as a pseudo-identity class and overlook the intra-class variance caused by differences in camera style. Some methods introduce camera-aware contrastive learning by partitioning each cluster into multiple sub-clusters according to camera view, but they are easily misled by noisy pseudo-labels. To address this issue, we first refine the pseudo-labels using the similarity between instances in feature space: each refined label is a weighted combination of the labels predicted for the instance's nearest neighbors and its original clustering result. We then use the refined pseudo-labels to dynamically associate each instance with the class centers it may belong to, while eliminating potential false negative samples. This improves the selection of positive and negative samples in camera-aware contrastive learning and mitigates the misleading effect of noisy pseudo-labels on the contrastive learning task. The method achieves mAP/Rank-1 of 85.2%/94.4%, 44.3%/74.1%, and 88.7%/95.9% on the Market-1501, MSMT17, and PersonX datasets, respectively.
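The label-refinement step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes cosine similarity on L2-normalized features, takes each instance's "predicted label" to be a temperature-scaled softmax over its similarities to the cluster centroids, and averages the predictions of the k nearest neighbors uniformly. The function `refine_pseudo_labels` and its parameters `k`, `alpha`, and `temp` are hypothetical names chosen for illustration.

```python
import math


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def refine_pseudo_labels(features, labels, num_classes, k=5, alpha=0.5, temp=0.05):
    """Hypothetical sketch: refined = alpha * one_hot(cluster label)
    + (1 - alpha) * mean predicted distribution of the k nearest neighbors.
    Assumes every class in range(num_classes) has at least one member."""
    feats = [l2_normalize(f) for f in features]

    # Cluster centroids computed from the (possibly noisy) hard pseudo-labels.
    centroids = []
    for c in range(num_classes):
        members = [f for f, y in zip(feats, labels) if y == c]
        centroids.append(l2_normalize([sum(col) / len(members) for col in zip(*members)]))

    # Assumed "predicted label": softmax over cosine similarities to the centroids.
    preds = [softmax([dot(f, cen) / temp for cen in centroids]) for f in feats]

    refined = []
    for i, f in enumerate(feats):
        # Neighborhood set: the k most similar other instances.
        sims = sorted(((dot(f, g), j) for j, g in enumerate(feats) if j != i), reverse=True)
        neighbors = [j for _, j in sims[:k]]
        neigh_pred = [sum(preds[j][c] for j in neighbors) / len(neighbors) for c in range(num_classes)]
        one_hot = [1.0 if c == labels[i] else 0.0 for c in range(num_classes)]
        refined.append([alpha * o + (1 - alpha) * p for o, p in zip(one_hot, neigh_pred)])
    return refined


# Toy example: two clusters in 2-D; instance 3 is assumed to be mislabeled.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
noisy_labels = [0, 0, 1, 0]
soft = refine_pseudo_labels(features, noisy_labels, num_classes=2, k=1, alpha=0.5)
for row in soft:
    print([round(p, 3) for p in row])
```

In the toy example, instance 3 lies next to instance 2 but carries the label of cluster 0; its refined label splits the probability mass roughly evenly between the two clusters, while the correctly labeled instances keep almost all of their mass on their own cluster.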


Overview

Unsupervised person re-identification has received increasing attention due to its wide range of practical applications. Most clustering-based contrastive learning methods treat each cluster as a pseudo-identity class and focus on enlarging inter-class differences, while ignoring the intra-class differences caused by variations in viewpoint, lighting, and background across cameras. These variations make it difficult for clustering algorithms to group all samples of the same identity into the same cluster, which inevitably produces noisy pseudo-labels. Some methods introduce camera-aware contrastive learning, which divides each cluster into multiple sub-clusters according to camera view and computes the intra-camera and inter-camera contrastive losses separately. However, noise in the pseudo-labels can corrupt the selection of positive and negative samples in camera-aware contrastive learning and thus mislead the model. To address this issue, this paper proposes a camera-aware unsupervised person re-identification method guided by refined pseudo-labels. A neighborhood set is first determined for each training instance from the similarity between instances in feature space. The one-hot pseudo-labels are then refined by a weighted combination of the labels predicted for the samples in the neighborhood and the original clustering results. The idea is to encourage the model not only to pull each sample toward its own cluster center but also to associate it with nearby samples that may share its identity. This makes the model more robust to noisy labels and reduces the risk of overfitting. Building on this, the paper further proposes camera-aware contrastive learning guided by the refined pseudo-labels.
By leveraging the class probability distribution in each instance's refined pseudo-label, the model dynamically associates the instance with the class centers it may belong to, instead of relying on a single class center as the positive sample. In addition, potential false positive and false negative samples are filtered out. This improves the selection of positive and negative samples in camera-aware contrastive learning and mitigates the influence of noisy pseudo-labels on the contrastive learning task. The proposed method was validated on three large-scale public datasets. It improves significantly over the baseline and achieves mAP/Rank-1 of 85.2%/94.4%, 44.3%/74.1%, and 88.7%/95.9% on Market-1501, MSMT17, and PersonX, respectively, outperforming current state-of-the-art methods on MSMT17 and PersonX and remaining competitive on Market-1501.
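The positive and negative selection described above can be sketched as a soft-target contrastive loss over camera-aware proxies. This is an illustrative sketch under our own assumptions, not the formulation used in the paper: each proxy is the re-normalized mean feature of one (pseudo-class, camera) sub-cluster; a class whose refined probability is at least `pos_thresh` contributes soft positives weighted by that probability, a class at or below `neg_thresh` contributes negatives, and classes in between are dropped from the denominator as possible false negatives. The names `camera_proxies`, `refined_camera_loss`, `pos_thresh`, `neg_thresh`, and `temp` are hypothetical.

```python
import math
from collections import defaultdict


def l2_normalize(v):
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def camera_proxies(features, labels, cams):
    """One proxy per (pseudo-class, camera) sub-cluster: the re-normalized mean feature."""
    groups = defaultdict(list)
    for f, y, c in zip(features, labels, cams):
        groups[(y, c)].append(l2_normalize(f))
    return {key: l2_normalize([sum(col) / len(fs) for col in zip(*fs)])
            for key, fs in groups.items()}


def refined_camera_loss(feat, cam, soft_label, proxies, intra=True,
                        pos_thresh=0.3, neg_thresh=0.05, temp=0.07):
    """Hypothetical soft-target contrastive loss over camera-aware proxies.

    intra=True contrasts only with proxies from the anchor's own camera;
    intra=False contrasts with proxies from all cameras."""
    feat = l2_normalize(feat)
    kept, weights = [], []
    for (y, c), proxy in proxies.items():
        if intra and c != cam:
            continue
        p = soft_label[y]
        if p >= pos_thresh:      # plausible class: soft positive weighted by p
            kept.append(proxy)
            weights.append(p)
        elif p <= neg_thresh:    # confident negative
            kept.append(proxy)
            weights.append(0.0)
        # Classes with neg_thresh < p < pos_thresh are dropped as possible false negatives.
    total = sum(weights)
    if total == 0.0:
        return 0.0  # no positive proxy available for this anchor
    logits = [sum(a * b for a, b in zip(feat, q)) / temp for q in kept]
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    # Cross-entropy between the normalized soft targets and the softmax over kept proxies.
    return -sum(w / total * (z - log_z) for w, z in zip(weights, logits))


# Toy data: two pseudo-classes, each observed by cameras 0 and 1.
features = [[1.0, 0.0], [0.9, 0.2], [0.0, 1.0], [0.2, 0.9]]
labels = [0, 0, 1, 1]
cams = [0, 1, 0, 1]
proxies = camera_proxies(features, labels, cams)

soft = [0.97, 0.03]  # a refined label that favors class 0
loss_good = refined_camera_loss([1.0, 0.0], 0, soft, proxies, intra=True)
loss_bad = refined_camera_loss([0.0, 1.0], 0, soft, proxies, intra=True)
loss_inter = refined_camera_loss([0.9, 0.2], 1, soft, proxies, intra=False)
print(loss_good, loss_bad, loss_inter)
```

Switching `intra` between `True` and `False` gives intra-camera and inter-camera variants of the same loss. In the toy run, an anchor aligned with its favored class incurs a much smaller loss than one aligned with the other class. The two-threshold rule is only one simple way to realize the filtering; the paper's actual criterion may differ.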

Table 1. Comparison between our method and state-of-the-art methods

| Methods | Venue | Market-1501 mAP/% | Market-1501 Rank-1/% | MSMT17 mAP/% | MSMT17 Rank-1/% | PersonX mAP/% | PersonX Rank-1/% |
|---|---|---|---|---|---|---|---|
| *Unsupervised domain adaptation (UDA)* | | | | | | | |
| ECN[2] | CVPR’19 | 43.0 | 75.1 | 10.2 | 30.2 | - | - |
| SPCL[3] | NeurIPS’20 | 77.5 | 89.7 | 26.8 | 53.7 | 78.5 | 91.1 |
| MEB-Net[13] | ECCV’20 | 76.0 | 89.9 | - | - | - | - |
| MMT[12] | ICLR’20 | 71.2 | 87.7 | 23.5 | 50.0 | 78.9 | 90.6 |
| GLT[31] | CVPR’21 | 79.5 | 92.2 | 27.7 | 59.5 | - | - |
| MCL[32] | JBUAA’22 | 80.6 | 93.2 | 28.5 | 58.5 | - | - |
| CACHE[33] | TCSVT’22 | 83.1 | 93.4 | 31.3 | 58.0 | - | - |
| CIFL[20] | TMM’22 | 83.3 | 93.9 | 39.0 | 70.5 | - | - |
| MCRN[16] | AAAI’22 | 83.8 | 93.8 | 35.7 | 67.5 | - | - |
| IICM[34] | JCRD’23 | 74.9 | 89.0 | 27.2 | 52.3 | - | - |
| NPSS[21] | TIFS’23 | 84.6 | 94.1 | 38.9 | 69.4 | - | - |
| CaCL[11] | ICCV’23 | 84.7 | 93.8 | 40.3 | 70.0 | - | - |
| *Unsupervised learning (USL)* | | | | | | | |
| SPCL[3] | NeurIPS’20 | 73.1 | 88.1 | 19.1 | 42.3 | 72.3 | 88.1 |
| MetaCam[7] | CVPR’21 | 61.7 | 83.9 | 15.5 | 35.2 | - | - |
| IICS[8] | CVPR’21 | 72.9 | 89.5 | 26.9 | 56.4 | - | - |
| RLCC[14] | CVPR’21 | 77.7 | 90.8 | 27.9 | 56.5 | - | - |
| CAP[9] | AAAI’21 | 79.2 | 91.4 | 36.9 | 67.4 | - | - |
| ICE[17] | ICCV’21 | 82.3 | 93.8 | 38.9 | 70.2 | - | - |
| CACHE[33] | TCSVT’22 | 81.0 | 92.0 | 31.8 | 58.2 | - | - |
| CIFL[20] | TMM’22 | 82.4 | 93.9 | 38.8 | 70.1 | - | - |
| GRACL[35] | TCSVT’22 | 83.7 | 93.2 | 34.6 | 64.0 | 87.9 | 95.3 |
| PPLR[15] | CVPR’22 | 84.4 | 94.3 | 42.2 | 73.3 | - | - |
| CA-UReID[10] | ICME’22 | 84.5 | 94.1 | - | - | - | - |
| NPSS[21] | TIFS’23 | 82.3 | 94.0 | 36.7 | 68.8 | - | - |
| LRMGFS[36] | JEMI’23 | 83.3 | 93.3 | 27.4 | 58.4 | - | - |
| PLRIS[22] | ICIP’23 | 83.2 | 93.1 | 43.3 | 71.5 | - | - |
| AdaMG[37] | TCSVT’23 | 84.6 | 93.9 | 38.0 | 66.3 | 87.6 | 95.0 |
| LP[18] | TIP’23 | 85.8 | 94.5 | 39.5 | 67.9 | - | - |
| DCCT[19] | TCSVT’23 | 86.3 | 94.4 | 41.8 | 68.7 | 87.6 | 95.0 |
| CC[4] | CoRR’21 | 82.1 | 92.3 | 27.6 | 56.0 | 84.7 | 94.4 |
| Ours | - | 85.2 | 94.4 | 44.3 | 74.1 | 88.7 | 95.9 |

Table 2. Results of ablation studies on Market-1501 and PersonX

| Model | $\widetilde{\mathrm{L}}_{\mathrm{ce}}$ | $\widetilde{\mathrm{L}}_{\mathrm{intra}}$ | $\widetilde{\mathrm{L}}_{\mathrm{inter}}$ | Market-1501 mAP/% | Market-1501 Rank-1/% | Market-1501 Rank-5/% | Market-1501 Rank-10/% | PersonX mAP/% | PersonX Rank-1/% | PersonX Rank-5/% | PersonX Rank-10/% |
|---|---|---|---|---|---|---|---|---|---|---|---|
| M1 (CC[4]) | - | - | - | 82.1 | 92.3 | 96.7 | 97.9 | 84.7 | 94.4 | 98.3 | 99.3 |
| M2 | - | √ | - | 82.9 | 93.2 | 97.3 | 98.2 | 87.9 | 95.7 | 98.8 | 99.5 |
| M3 | - | - | √ | 83.9 | 93.6 | 97.5 | 98.3 | 87.3 | 95.9 | 98.8 | 99.4 |
| M4 | - | √ | √ | 84.1 | 93.6 | 97.6 | 98.5 | 88.5 | 95.8 | 98.9 | 99.6 |
| M5 | √ | - | - | 84.7 | 94.2 | 97.9 | 98.7 | 87.5 | 95.5 | 98.9 | 99.5 |
| M6 (Ours) | √ | √ | √ | 85.2 | 94.4 | 98.1 | 98.6 | 88.7 | 95.9 | 99.2 | 99.7 |

References

[1] Zhang X Y, Zhang B H, Lv X Q, et al. The joint discriminative and generative learning for person re-identification of deep dual attention[J]. Opto-Electron Eng, 2021, 48(5): 200388. doi: 10.12086/oee.2021.200388

[2] Zhong Z, Zheng L, Luo Z M, et al. Invariance matters: exemplar memory for domain adaptive person re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019: 598–607. https://doi.org/10.1109/CVPR.2019.00069.

[3] Ge Y X, Zhu F, Chen D P, et al. Self-paced contrastive learning with hybrid memory for domain adaptive object re-ID[C]//Proceedings of the 34th International Conference on Neural Information Processing Systems, 2020: 949. https://doi.org/10.5555/3495724.3496673.

[4] Dai Z Z, Wang G Y, Yuan W H, et al. Cluster contrast for unsupervised person re-identification[C]//Proceedings of the 16th Asian Conference on Computer Vision, 2023: 319–337. https://doi.org/10.1007/978-3-031-26351-4_20.

[5] Tian J J, Tang Q H, Li R, et al. A camera identity-guided distribution consistency method for unsupervised multi-target domain person re-identification[J]. ACM Trans Intell Syst Technol, 2021, 12(4): 38. doi: 10.1145/3454130

[6] Choi Y, Choi M, Kim M, et al. StarGAN: Unified generative adversarial networks for multi-domain image-to-image translation[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018: 8789–8797. https://doi.org/10.1109/CVPR.2018.00916.

[7] Yang F X, Zhong Z, Luo Z M, et al. Joint noise-tolerant learning and meta camera shift adaptation for unsupervised person re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021: 4853–4862. https://doi.org/10.1109/CVPR46437.2021.00482.

[8] Xuan S Y, Zhang S L. Intra-inter camera similarity for unsupervised person re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021: 11921–11930. https://doi.org/10.1109/CVPR46437.2021.01175.

[9] Wang M L, Lai B S, Huang J Q, et al. Camera-aware proxies for unsupervised person re-identification[C]//Proceedings of the AAAI Conference on Artificial Intelligence, 2021: 2764–2772. https://doi.org/10.1609/aaai.v35i4.16381.

[10] Li X, Liang T F, Jin Y, et al. Camera-aware style separation and contrastive learning for unsupervised person re-identification[C]//2022 IEEE International Conference on Multimedia and Expo, 2022: 1–6. https://doi.org/10.1109/ICME52920.2022.9859842.

[11] Lee G, Lee S, Kim D, et al. Camera-driven representation learning for unsupervised domain adaptive person re-identification[Z]. arXiv: 2308.11901, 2023. https://doi.org/10.48550/arXiv.2308.11901.

[12] Ge Y X, Chen D P, Li H S. Mutual mean-teaching: pseudo label refinery for unsupervised domain adaptation on person re-identification[C]//8th International Conference on Learning Representations, 2020.

[13] Zhai Y P, Ye Q X, Lu S J, et al. Multiple expert brainstorming for domain adaptive person re-identification[C]//16th European Conference on Computer Vision, 2020: 594–611. https://doi.org/10.1007/978-3-030-58571-6_35.

[14] Zhang X, Ge Y X, Qiao Y, et al. Refining pseudo labels with clustering consensus over generations for unsupervised object re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021: 3435–3444. https://doi.org/10.1109/CVPR46437.2021.00344.

[15] Cho Y, Kim W J, Hong S, et al. Part-based pseudo label refinement for unsupervised person re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022: 7298–7308. https://doi.org/10.1109/CVPR52688.2022.00716.

[16] Wu Y H, Huang T T, Yao H T, et al. Multi-centroid representation network for domain adaptive person re-ID[C]//Proceedings of the AAAI Conference on Artificial Intelligence, 2022: 2750–2758. https://doi.org/10.1609/aaai.v36i3.20178.

[17] Chen H, Lagadec B, Bremond F. ICE: inter-instance contrastive encoding for unsupervised person re-identification[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision, 2021: 14940–14949. https://doi.org/10.1109/ICCV48922.2021.01469.

[18] Lan L, Teng X, Zhang J, et al. Learning to purification for unsupervised person re-identification[J]. IEEE Trans Image Process, 2023, 32: 3338−3353. doi: 10.1109/TIP.2023.3278860

[19] Chen Z Q, Cui Z C, Zhang C, et al. Dual clustering co-teaching with consistent sample mining for unsupervised person re-identification[J]. IEEE Trans Circuits Syst Video Technol, 2023, 33(10): 5908−5920. doi: 10.1109/TCSVT.2023.3261898

[20] Pang Z Q, Zhao L L, Liu Q Y, et al. Camera invariant feature learning for unsupervised person re-identification[J]. IEEE Trans Multimed, 2023, 25: 6171−6182. doi: 10.1109/TMM.2022.3206662

[21] Wang H J, Yang M, Liu J L, et al. Pseudo-label noise prevention, suppression and softening for unsupervised person re-identification[J]. IEEE Trans Inf Forensics Secur, 2023, 18: 3222−3237. doi: 10.1109/TIFS.2023.3277694

[22] Li P N, Wu K Y, Zhou S P, et al. Pseudo labels refinement with intra-camera similarity for unsupervised person re-identification[C]//2023 IEEE International Conference on Image Processing, 2023: 366–370. https://doi.org/10.1109/ICIP49359.2023.10222317.

[23] Zheng L, Shen L Y, Tian L, et al. Scalable person re-identification: a benchmark[C]//Proceedings of the 2015 IEEE International Conference on Computer Vision, 2015: 1116–1124. https://doi.org/10.1109/ICCV.2015.133.

[24] Wei L H, Zhang S L, Gao W, et al. Person transfer GAN to bridge domain gap for person re-identification[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2018: 79–88. https://doi.org/10.1109/CVPR.2018.00016.

[25] Sun X X, Zheng L. Dissecting person re-identification from the viewpoint of viewpoint[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019: 608–617. https://doi.org/10.1109/CVPR.2019.00070.

[26] Ester M, Kriegel H P, Sander J, et al. A density-based algorithm for discovering clusters in large spatial databases with noise[C]//Proceedings of the Second International Conference on Knowledge Discovery and Data Mining, 1996: 226–231. https://doi.org/10.5555/3001460.3001507.

[27] Zhou D Y, Bousquet O, Lal T N, et al. Learning with local and global consistency[C]//Proceedings of the 16th International Conference on Neural Information Processing Systems, 2003: 321–328. https://doi.org/10.5555/2981345.2981386.

[28] Deng J, Dong W, Socher R, et al. ImageNet: a large-scale hierarchical image database[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2009: 248–255. https://doi.org/10.1109/CVPR.2009.5206848.

[29] He K M, Zhang X Y, Ren S Q, et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016: 770–778. https://doi.org/10.1109/CVPR.2016.90.

[30] Zhong Z, Zheng L, Cao D L, et al. Re-ranking person re-identification with k-reciprocal encoding[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2017: 3652–3661. https://doi.org/10.1109/CVPR.2017.389.

[31] Zheng K C, Liu W, He L X, et al. Group-aware label transfer for domain adaptive person re-identification[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021: 5306–5315. https://doi.org/10.1109/CVPR46437.2021.00527.

[32] Li H, Zhang X W, Zhao X P, et al. Multi-label cooperative learning for cross domain person re-identification[J]. J Beijing Univ Aeronaut Astronaut, 2022, 48(8): 1534−1542. doi: 10.13700/j.bh.1001-5965.2021.0600

[33] Liu Y X, Ge H W, Sun L, et al. Complementary attention-driven contrastive learning with hard-sample exploring for unsupervised domain adaptive person re-ID[J]. IEEE Trans Circuits Syst Video Technol, 2023, 33(1): 326−341. doi: 10.1109/TCSVT.2022.3200671

[34] Chen L W, Ye F, Huang T Q, et al. An unsupervised person re-identification method based on intra-/inter-camera merger[J]. J Comput Res Dev, 2023, 60(2): 415−425. doi: 10.7544/issn1000-1239.202110732

[35] Zhang H W, Zhang G Q, Chen Y H, et al. Global relation-aware contrast learning for unsupervised person re-identification[J]. IEEE Trans Circuits Syst Video Technol, 2022, 32(12): 8599−8610. doi: 10.1109/TCSVT.2022.3194084

[36] Qian Y P, Wang F S, Xiong L. Unsupervised person re-identification method based on local refinement multi-branch and global feature sharing[J]. J Electron Meas Instrum, 2023, 37(1): 106−115. doi: 10.13382/j.jemi.B2205837

[37] Peng J J, Jiang G Q, Wang H B. Adaptive memorization with group labels for unsupervised person re-identification[J]. IEEE Trans Circuits Syst Video Technol, 2023, 33(10): 5802−5813. doi: 10.1109/TCSVT.2023.3258917

[38] Wang F, Liu H P. Understanding the behaviour of contrastive loss[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021: 2495–2504. https://doi.org/10.1109/CVPR46437.2021.00252.
