-

摘要

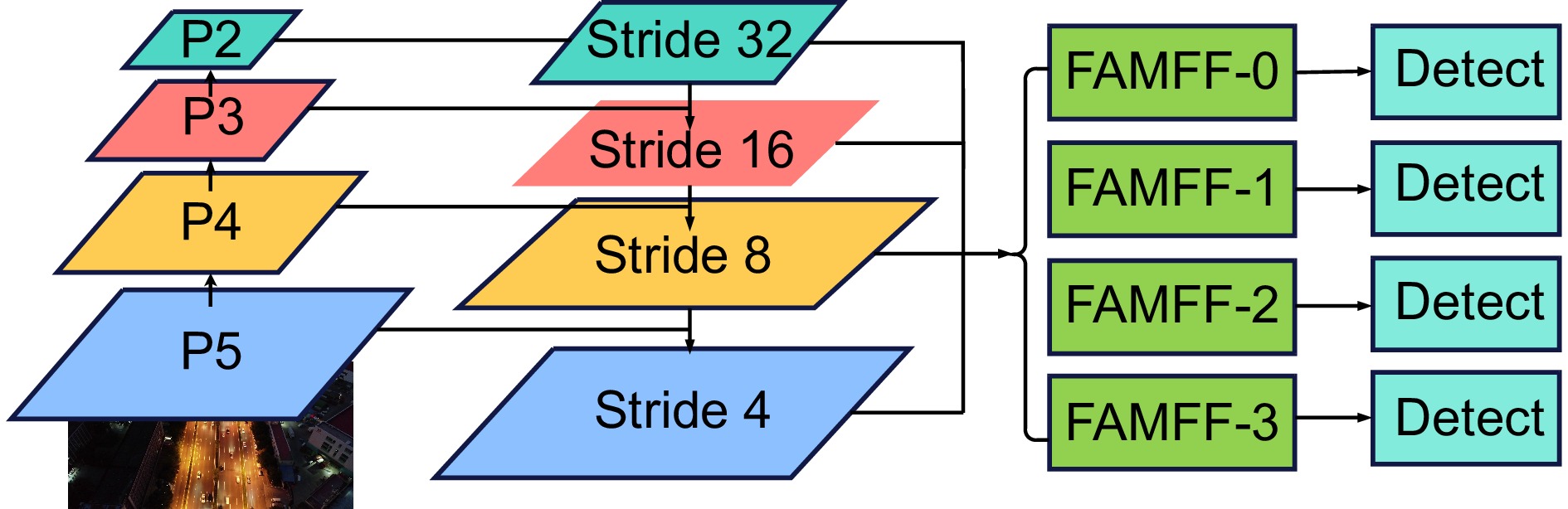

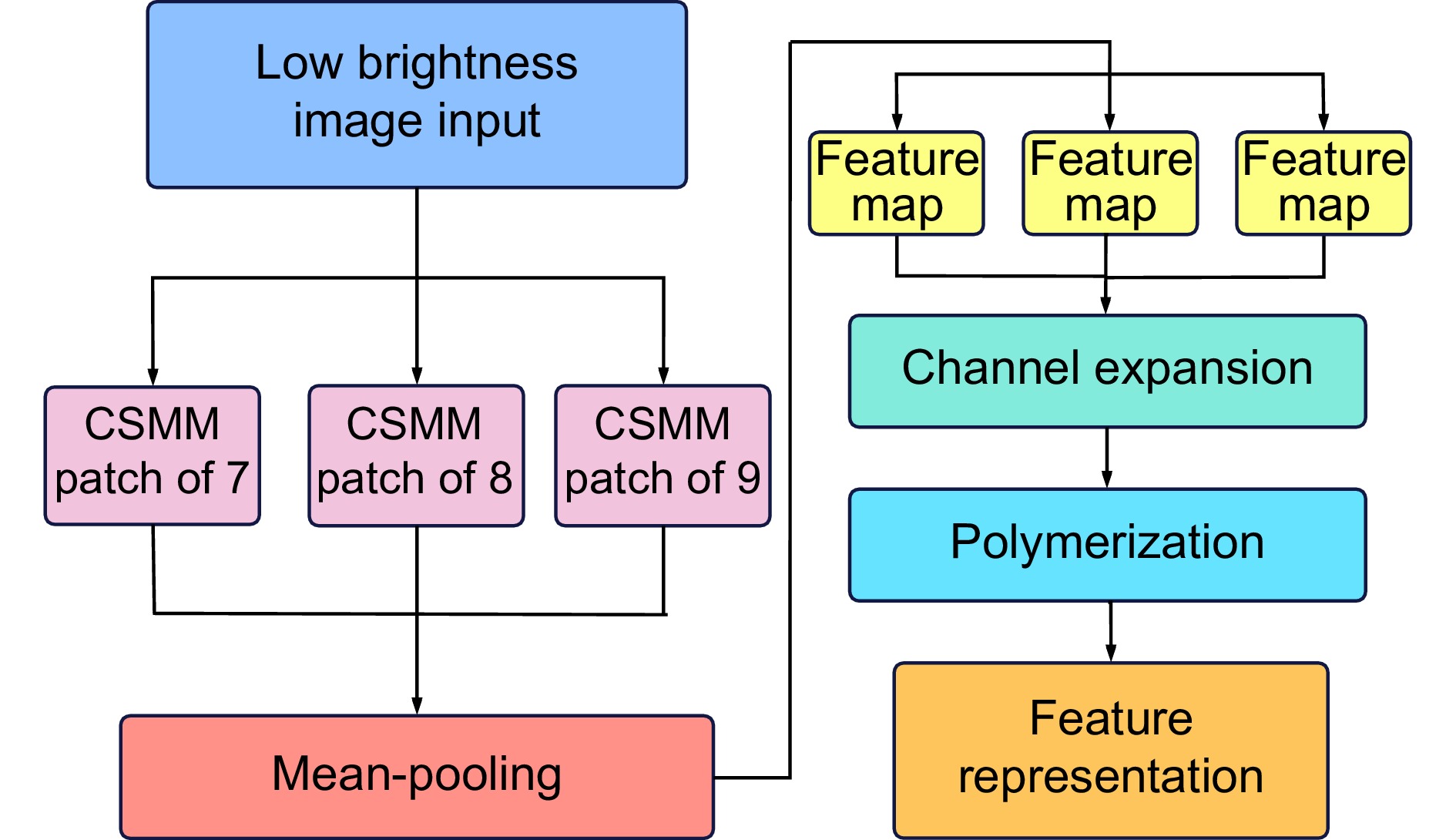

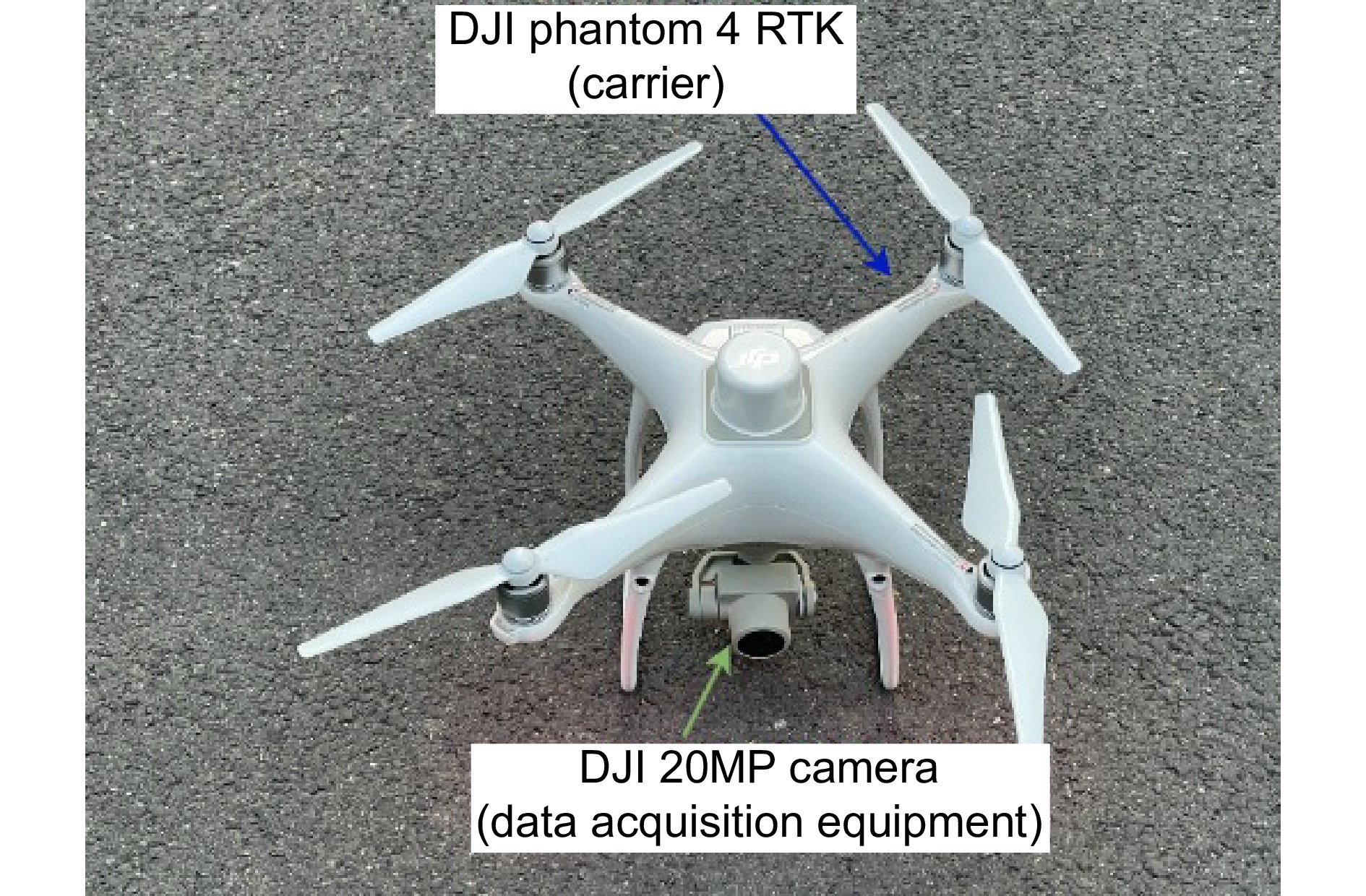

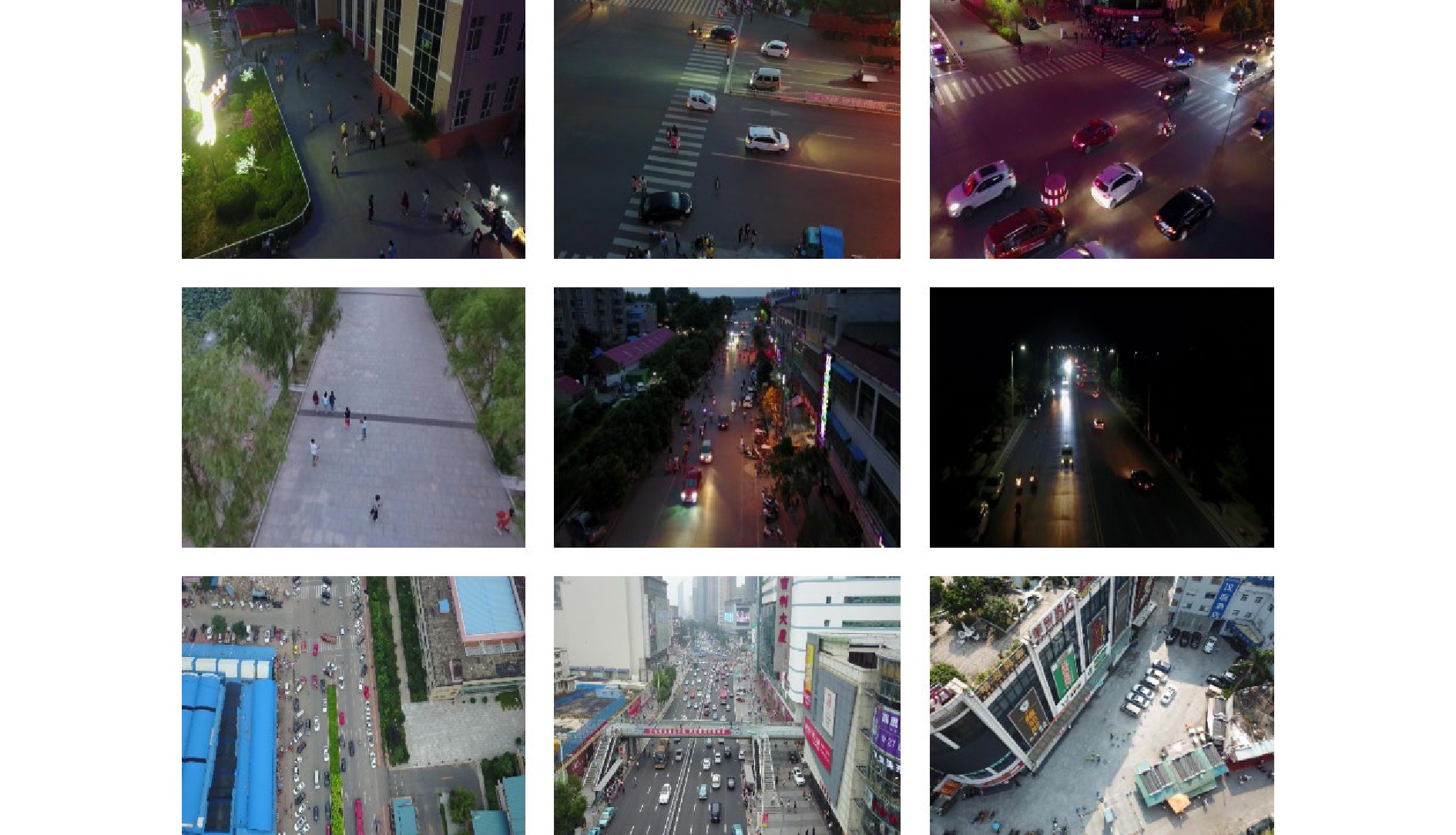

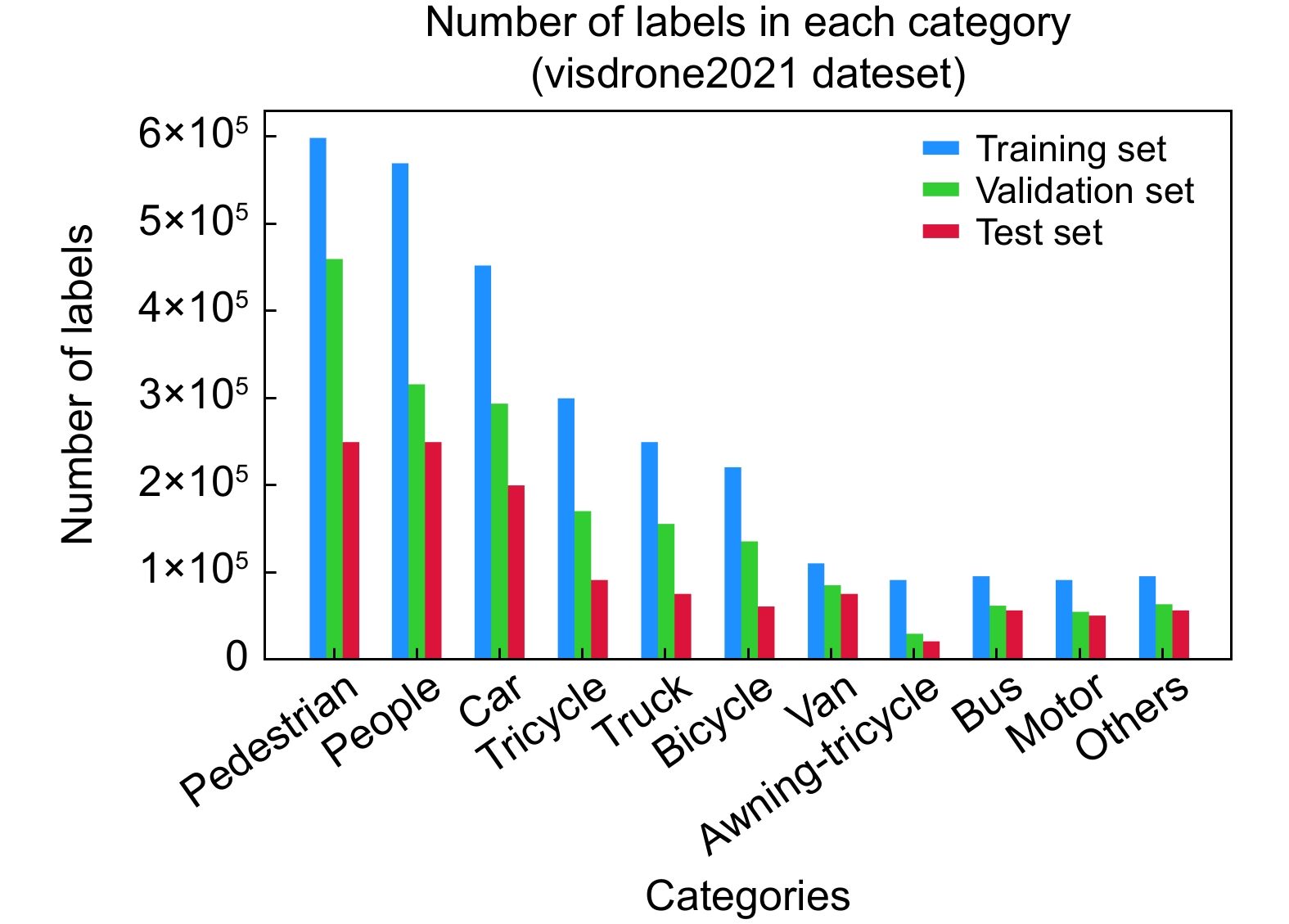

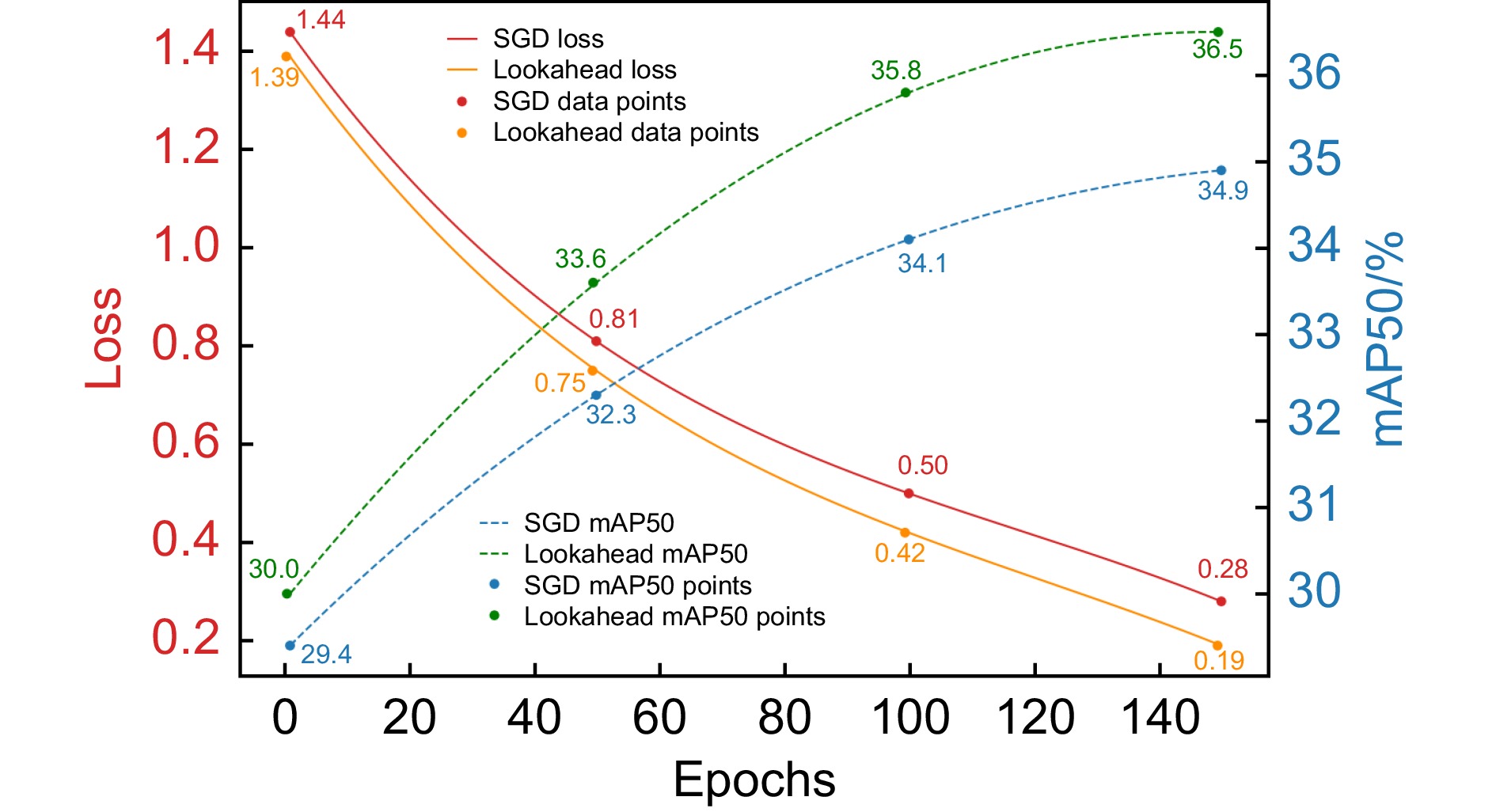

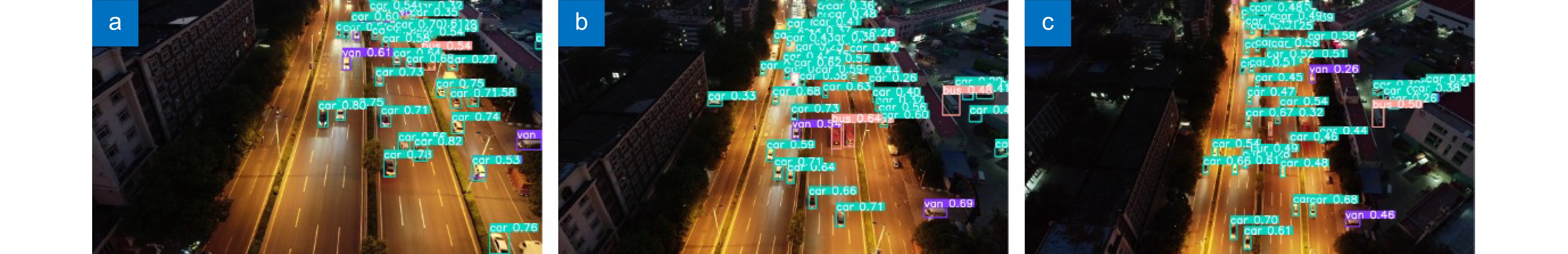

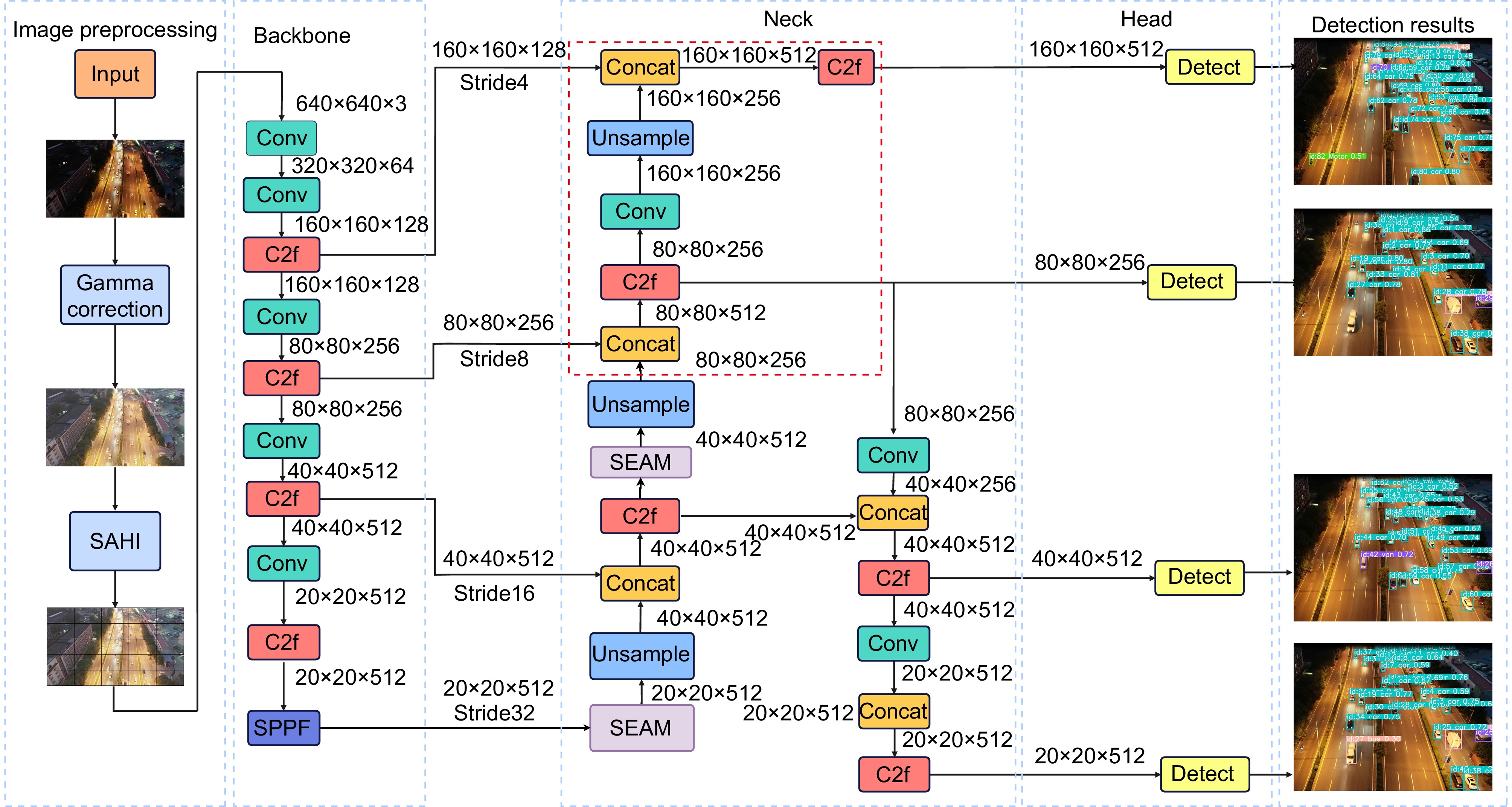

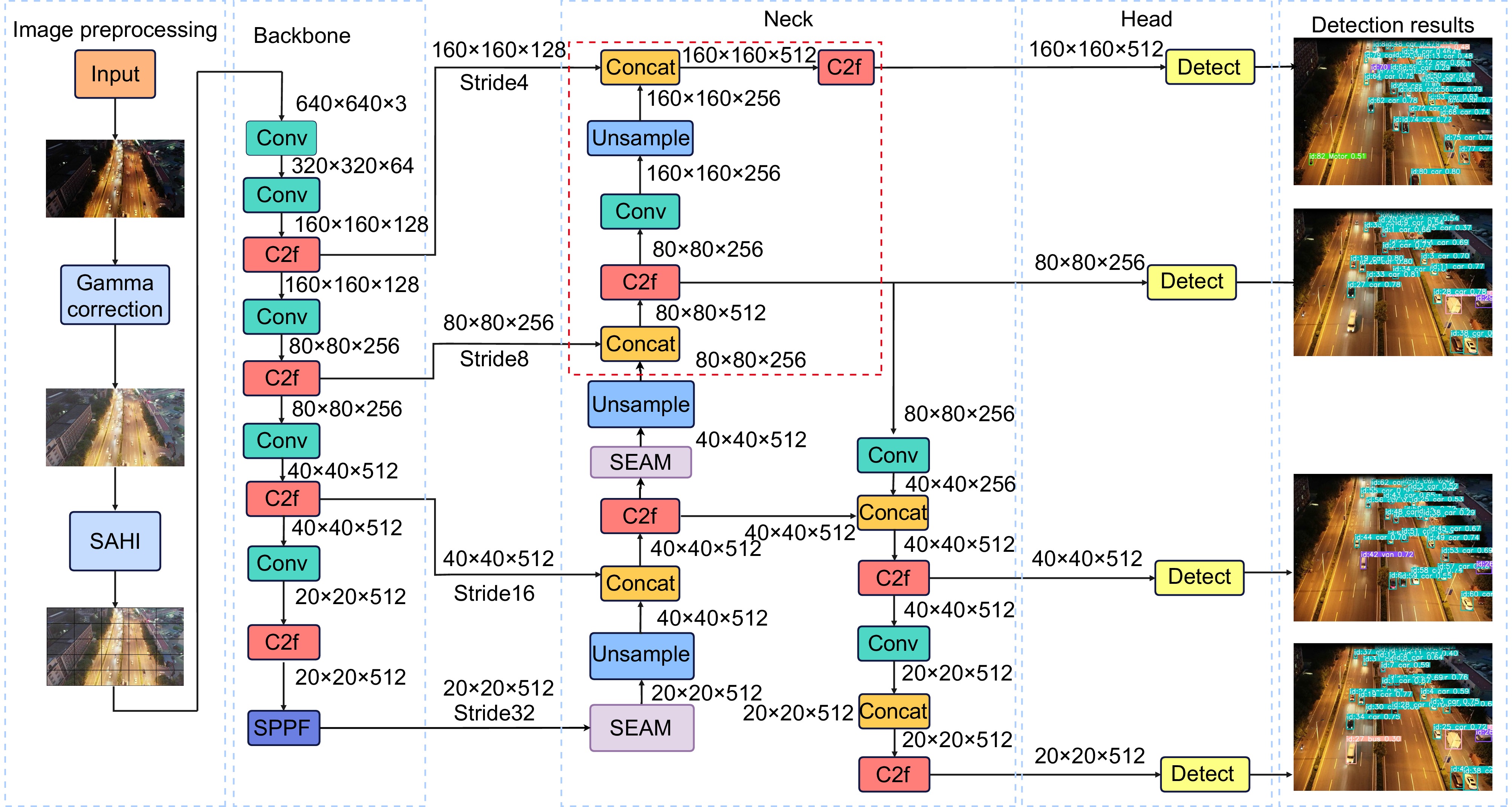

针对昏暗场景中背景复杂导致目标边缘模糊、小目标易被遮挡和误检漏检的问题,提出一种改进的YOLOv8s算法—YOLOv8-GAIS。采用四头自适应多维特征融合(four-head adaptive multi-dimensional feature fusion,FAMFF)策略,过滤空间中的冲突信息。添加小目标检测头,解决航拍视角下目标尺度变化大问题。引入空间增强注意力机制(spatially enhanced attention mechanism,SEAM),增强网络对昏暗和遮挡部分的捕捉能力。采用更关注核心部分的InnerSIoU损失函数,提升被遮挡目标的检测性能。采集实地场景扩充VisDrone2021数据集,应用Gamma和SAHI (slicing aided hyper inference)算法进行预处理,平衡低照度场景中不同目标种类的数量,优化模型的泛化能力和检测精度。通过对比实验,改进模型相对于初始模型,参数量减少1.53 MB,mAP50提高6.9%,mAP50-95增加5.6%,模型计算量减少7.2 GFLOPs。在天津市津南区大沽南路进行实地实验,探究无人机(UAV)最适宜的图像采集高度,实验结果表明,当飞行高度设置为60 m时,该模型的检测精度mAP50最高为77.8%。

-

关键词:

- 目标检测 /

- 低照度图像 /

- 四头自适应多维特征融合策略 /

- 无人机 /

- YOLOv8

Abstract

To address the issue of complex backgrounds in dim scenes, which cause object edge blurring and obscure small objects, leading to misdetection and omission, an improved YOLOv8-GAIS algorithm is proposed. The FAMFF (four-head adaptive multi-dimensional feature fusion) strategy is designed to achieve spatial filtering of conflicting information. A small object detection head is incorporated to address the issue of large object scale variation in aerial views. The SEAM (spatially enhanced attention mechanism) is introduced to enhance the network's attention and capture ability for occluded parts in low illumination situations. The InnerSIoU loss function is adopted to emphasize the core regions, thereby improving the detection performance of occluded objects. Field scenes are collected to expand the VisDrone2021 dataset, and the Gamma and SAHI (slicing aided hyper inference) algorithms are applied for preprocessing. This helps balance the distribution of different object types in low-illumination scenarios, optimizing the model's generalization ability and detection accuracy. Comparative experiments show that the improved model reduces the number of parameters by 1.53 MB, and increases mAP50 by 6.9%, mAP50-95 by 5.6%, and model computation by 7.2 GFLOPs compared to the baseline model. In addition, field experiments were conducted in Dagu South Road, Jinnan District, Tianjin City, China, to determine the optimal altitude for image acquisition by UAVs. The results show that, at a flight altitude of 60 m, the model achieves the detection accuracy of 77.8% mAP50.
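The abstract names Gamma correction and SAHI-style slicing as preprocessing steps but gives no parameters. The following is a minimal sketch of what such steps could look like, assuming 8-bit input images; the `gamma=0.5` default, the 640-pixel tile size, the 20% overlap, and both function names are illustrative assumptions, and the tiling is written by hand rather than taken from the SAHI library's API.

```python
import numpy as np


def gamma_correct(image: np.ndarray, gamma: float = 0.5) -> np.ndarray:
    """Apply gamma correction to a uint8 image (gamma < 1 brightens)."""
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    # Lookup table implementing out = 255 * (in / 255) ** gamma.
    lut = np.round(255.0 * (np.arange(256) / 255.0) ** gamma).astype(np.uint8)
    return lut[image]


def slice_boxes(height: int, width: int, tile: int = 640, overlap: float = 0.2):
    """Return (x0, y0, x1, y1) tiles that cover the image with overlap."""
    step = max(1, int(tile * (1.0 - overlap)))

    def starts(size: int):
        if size <= tile:
            return [0]
        offsets = list(range(0, size - tile, step))
        offsets.append(size - tile)  # last tile sits flush with the border
        return offsets

    return [
        (x, y, min(x + tile, width), min(y + tile, height))
        for y in starts(height)
        for x in starts(width)
    ]


if __name__ == "__main__":
    dark = np.full((1000, 1000, 3), 40, dtype=np.uint8)  # synthetic dim frame
    bright = gamma_correct(dark, gamma=0.5)
    tiles = slice_boxes(*bright.shape[:2])
    print(bright.mean() > dark.mean(), len(tiles))
```

Each tile could then be passed to the detector separately and the per-tile boxes shifted back by `(x0, y0)` before merging; how the paper merges them is not stated in this section.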


Overview

With the rapid growth of processor computing power and continued advances in deep learning algorithms, deep learning-based computer vision has become a key component of object detection. In traffic supervision, a UAV remote sensing platform equipped with advanced sensors and deep learning algorithms enables intelligent vehicle detection. This approach provides technical support for building a new system for rapid response to, and accurate determination of, traffic accidents. Traditional object detection algorithms depend primarily on extracting distinctive features from the input data. They require stable lighting and minimal interference during data acquisition, which limits their effectiveness in dynamic scenes where lighting, shadows, and weather changes affect detection accuracy. This paper therefore proposes YOLOv8-GAIS, an improved object detection algorithm, for effective detection on urban expressways in low-brightness scenes. The FAMFF (four-head adaptive multi-dimensional feature fusion) strategy is designed to filter spatially conflicting information. A small object detection head is added to improve performance in aerial views. The SEAM (spatially enhanced attention mechanism) is incorporated to strengthen feature extraction in low-brightness environments and for occluded parts, so that the network can better detect parts of objects that would otherwise be missed due to occlusion. In addition, the InnerSIoU loss function gives greater weight to the central areas of objects, which improves the detection of occluded objects.
To improve the generalization ability of the algorithm, the VisDrone2021 dataset is expanded with scenes collected in the field. Comparative experiments show several improvements over the baseline: the parameter size falls by 1.53 MB, mAP50 rises by 6.9 percentage points, and mAP50-95 rises by 5.6 percentage points. The model's computation is also reduced by 7.2 GFLOPs, which improves efficiency without compromising detection accuracy. To further assess its effectiveness, field experiments were conducted along Dagu South Road in Jinnan District, Tianjin, China, to determine the optimal UAV flight altitude for image acquisition. At a flight altitude of 60 m, the model reaches its highest detection accuracy, 77.8% mAP50. These results indicate that the model performs well in real-world scenarios and is robust and adaptable to complex environments.
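The overview describes InnerSIoU as giving more weight to the central area of each object. One common way to express that idea is an "inner" IoU, where both boxes are shrunk about their own centers by a fixed ratio before IoU is computed. The sketch below assumes that formulation; the `ratio=0.75` default and the function names are illustrative, the SIoU angle, distance, and shape terms are omitted, and the way the paper combines these pieces is not given in this section.

```python
def iou_xyxy(a, b):
    """Plain IoU of two boxes given as (x0, y0, x1, y1)."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix1 - ix0) * max(0.0, iy1 - iy0)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def scale_about_center(box, ratio):
    """Scale a box's width and height by `ratio`, keeping its center fixed."""
    cx, cy = (box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0
    half_w = (box[2] - box[0]) * ratio / 2.0
    half_h = (box[3] - box[1]) * ratio / 2.0
    return (cx - half_w, cy - half_h, cx + half_w, cy + half_h)


def inner_iou(pred, target, ratio=0.75):
    """IoU computed on center-scaled auxiliary boxes (assumed formulation)."""
    return iou_xyxy(scale_about_center(pred, ratio),
                    scale_about_center(target, ratio))


if __name__ == "__main__":
    pred, target = (0, 0, 10, 10), (5, 0, 15, 10)
    print(iou_xyxy(pred, target), inner_iou(pred, target, ratio=0.5))
```

With a ratio below 1, two boxes that overlap only at their edges score lower than under plain IoU, so the measure rewards agreement on the box cores rather than on the periphery.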

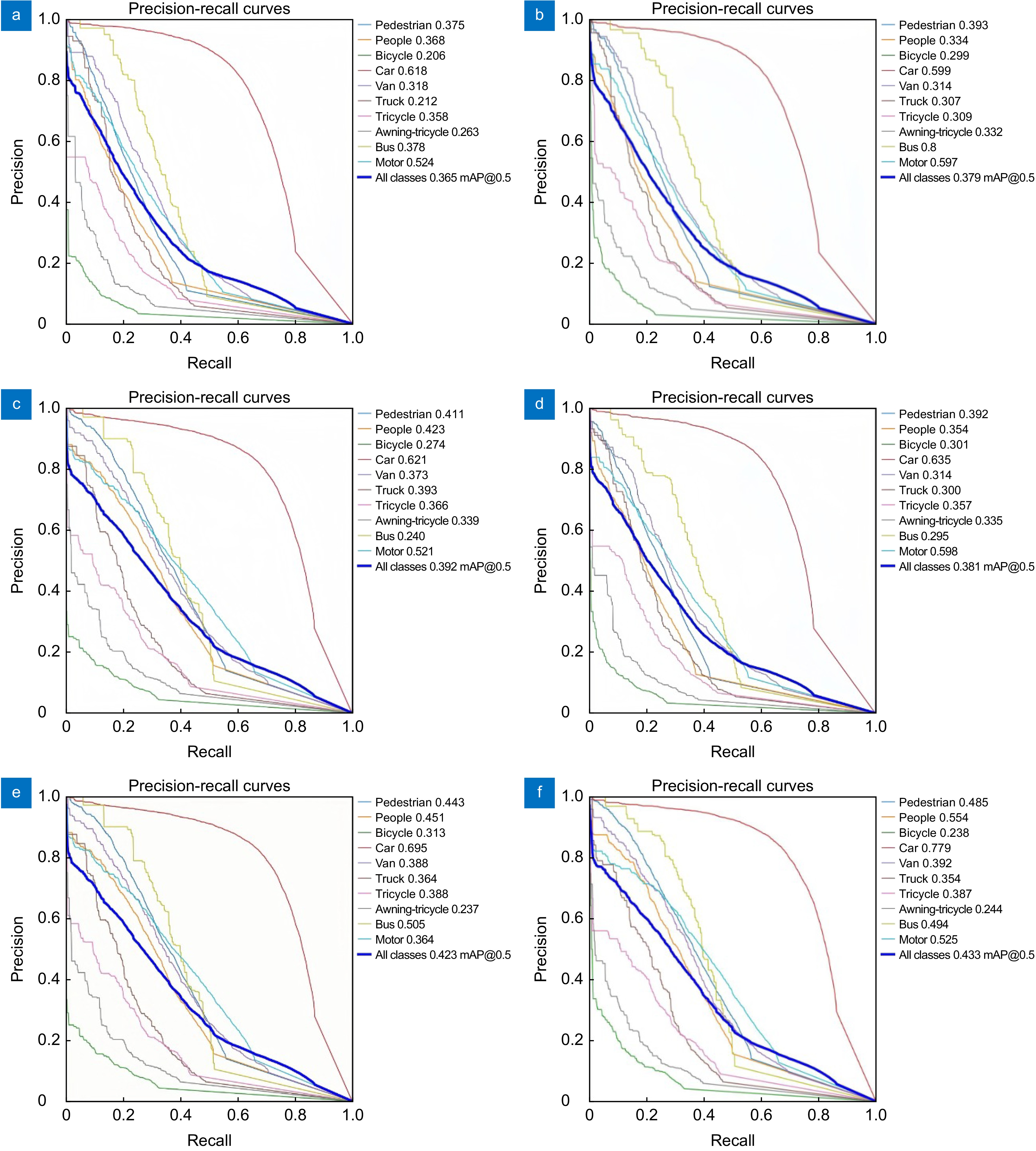

Figure 10. Precision-recall curves. (a) YOLOv8s; (b) Image enhancement; (c) Image enhancement and AirNet; (d) Image enhancement, AirNet, and SEAM; (e) Image enhancement, AirNet, SEAM, and FAMFF; (f) Image enhancement, AirNet, SEAM, FAMFF, and InnerSIoU

Table 1. Comparison results of different models; per-class columns are mAP50/%

| Model | Resolution | mAP50/% | Pedestrian | People | Bicycle | Car | Three-box van | Truck | Tricycle | Awning-tricycle | Bus | Motor |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RetinaNet[10] | / | 35.9 | 27.7 | 13.5 | 34.4 | 54.4 | 30.6 | 24.7 | 25.1 | 20.1 | 39.9 | 25.4 |
| CornerNet[10] | / | 28.1 | 29.2 | 18.7 | 12.8 | 60.3 | 33.3 | 23.9 | 19.0 | 12.6 | 35.9 | 25.1 |
| CenterNet[25] | / | 26.6 | 23.1 | 21.1 | 15.4 | 60.6 | 24.5 | 21.1 | 20.3 | 17.4 | 38.3 | 24.4 |
| Faster R-CNN | / | 33.6 | / | / | / | / | / | / | / | / | / | / |
| YOLOv8s | 640×640 | 36.5 | 37.5 | 36.8 | 20.6 | 61.8 | 31.8 | 22.2 | 45.8 | 26.3 | 37.8 | 52.4 |
| YOLOv5s | 864×864 | 40.3 | 47.7 | 49.0 | 26.4 | 56.9 | 39.9 | 35.2 | 35.4 | 25.5 | 36.5 | 50.2 |
| YOLOv8-GAIS | 640×640 | 43.2 | 48.5 | 55.4 | 23.8 | 77.9 | 39.2 | 35.4 | 38.7 | 24.4 | 49.4 | 52.5 |

Table 2. Results of comparative experiments on different attention mechanisms

| Attention mechanism | Param/MB | GFLOPs | mAP50/% |
|---|---|---|---|
| YOLOv8s | 11.23 | 28.5 | 36.5 |
| YOLOv8s-CBAM | 7.10 | 24.1 | 36.8 |
| YOLOv8s-SE | 7.05 | 24.0 | 37.1 |
| YOLOv8s-SimAM | 7.15 | 23.6 | 37.3 |
| YOLOv8s-ECA | 7.40 | 23.9 | 37.8 |
| YOLOv8s-SEAM | 7.30 | 23.9 | 38.1 |

Table 3. Results of ablation experiments

| YOLOv8s | Data enhancement | AirNet | SEAM | FAMFF | InnerSIoU | Param/MB | GFLOPs | mAP50/% | mAP50-95/% | Precision/% | Recall/% |
|---|---|---|---|---|---|---|---|---|---|---|---|
| √ | | | | | | 11.23 | 28.5 | 36.5 | 16.8 | 40.8 | 33.3 |
| √ | √ | | | | | 11.23 | 26.3 | 37.9 | 18.5 | 40.4 | 35.9 |
| √ | √ | √ | | | | 12.50 | 23.9 | 39.3 | 18.7 | 41.9 | 35.6 |
| √ | √ | √ | √ | | | 7.30 | 20.3 | 38.1 | 18.5 | 41.0 | 35.7 |
| √ | √ | √ | √ | √ | | 8.52 | 21.7 | 42.3 | 21.6 | 43.6 | 34.3 |
| √ | √ | √ | √ | √ | √ | 9.70 | 21.3 | 43.4 | 22.4 | 45.7 | 35.1 |
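The headline improvements quoted in the abstract can be checked directly against the first and last rows of the ablation table. The short sketch below does that arithmetic; the dictionary keys are illustrative names, and the values are copied from Table 3.

```python
# First row of Table 3 (YOLOv8s baseline) and last row (all modules enabled).
baseline = {"params_mb": 11.23, "gflops": 28.5, "map50": 36.5, "map50_95": 16.8}
final = {"params_mb": 9.70, "gflops": 21.3, "map50": 43.4, "map50_95": 22.4}

# Signed change per metric: negative means the final model is smaller or cheaper.
deltas = {key: round(final[key] - baseline[key], 2) for key in baseline}

for key, value in deltas.items():
    print(f"{key}: {value:+.2f}")
```

The differences are absolute: the mAP gains are percentage points, not relative percentages, and they match the 1.53 MB, 7.2 GFLOPs, 6.9, and 5.6 figures reported in the abstract.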

Table 4. Comparison of UAV detection results at different flight altitudes (mAP50/%)

| Flight altitude/m | Awning-tricycle | Car | Truck |
|---|---|---|---|
| 30 | 39.7 | 77.1 | 36.8 |
| 60 | 38.0 | 77.8 | 40.2 |
| 100 | 27.1 | 62.6 | 25.6 |
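One simple way to compare the altitudes in Table 4 is to average the three per-class mAP50 values at each height. The sketch below does so; the unweighted mean is an assumption made here for illustration and is not stated to be the paper's selection criterion.

```python
# Per-class mAP50 (%) by flight altitude in metres, copied from Table 4.
table4 = {
    30: {"awning_tricycle": 39.7, "car": 77.1, "truck": 36.8},
    60: {"awning_tricycle": 38.0, "car": 77.8, "truck": 40.2},
    100: {"awning_tricycle": 27.1, "car": 62.6, "truck": 25.6},
}

# Unweighted mean over the three listed classes (illustrative criterion).
mean_by_altitude = {
    altitude: sum(scores.values()) / len(scores)
    for altitude, scores in table4.items()
}
best_altitude = max(mean_by_altitude, key=mean_by_altitude.get)

for altitude, mean in mean_by_altitude.items():
    print(f"{altitude} m: mean mAP50 = {mean:.2f}%")
print("best altitude:", best_altitude, "m")
```

Under this criterion 60 m comes out ahead of 30 m by a small margin, while 100 m is clearly worse for all three classes.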

References

[1] Liang L M, Chen K Q, Wang C B, et al. Remote sensing image detection algorithm integrating visual center mechanism and parallel patch perception[J]. Opto-Electron Eng, 2024, 51(7): 240099. doi: 10.12086/oee.2024.240099

[2] Zhang W Z, Wu C Y, Man W D, et al. Application of dual-channel adversarial network in building segmentation[J]. Comput Eng, 2024, 50(11): 297−307. doi: 10.19678/j.issn.1000-3428.0068527

[3] Zhao T F, Wang Y Q, Guo J H, et al. The method of wireless ultraviolet relay cooperation UAV assembly formation[J]. Laser Technol, 2024, 48(4): 477−483. doi: 10.7510/jgjs.issn.1001-3806.2024.04.004

[4] Girshick R, Donahue J, Darrell T, et al. Rich feature hierarchies for accurate object detection and semantic segmentation[C]//Proceedings of 2014 IEEE Conference on Computer Vision and Pattern Recognition, 2014: 580–587. https://doi.org/10.1109/CVPR.2014.81.

[5] Ren S Q, He K M, Girshick R, et al. Faster R-CNN: towards real-time object detection with region proposal networks[J]. IEEE Trans Pattern Anal Mach Intell, 2017, 39(6): 1137−1149. doi: 10.1109/TPAMI.2016.2577031

[6] He K M, Gkioxari G, Dollár P, et al. Mask R-CNN[C]//Proceedings of 2017 IEEE International Conference on Computer Vision, 2017: 2980–2988. https://doi.org/10.1109/ICCV.2017.322.

[7] Redmon J, Farhadi A. YOLOv3: an incremental improvement[Z]. arXiv: 1804.02767, 2018. https://arxiv.org/abs/1804.02767.

[8] Zhang Y, Guo Z Y, Wu J Q, et al. Real-time vehicle detection based on improved YOLO v5[J]. Sustainability, 2022, 14(19): 12274. doi: 10.3390/su141912274

[9] Liu W, Anguelov D, Erhan D, et al. SSD: single shot MultiBox detector[C]//Proceedings of the 14th European Conference on Computer Vision–ECCV 2016, 2016: 21–37. https://doi.org/10.1007/978-3-319-46448-0_2.

[10] Liu J L, Liu X F, Chen Q, et al. A traffic parameter extraction model using small vehicle detection and tracking in low-brightness aerial images[J]. Sustainability, 2023, 15(11): 8505. doi: 10.3390/su15118505

[11] Qu L G, Zhang X, Lu Z B, et al. A traffic sign recognition method based on improved YOLOv5[J]. Opto-Electron Eng, 2024, 51(6): 240055.

[12] Liu Y, Lyu F S, Li X K, et al. Remote sensing image target detection algorithm based on YOLOv8-LGA[J]. J Optoelectron Laser, 2024. https://link.cnki.net/urlid/12.1182.o4.20240709.1518.016.

[13] Golcarenarenji G, Martinez-Alpiste I, Wang Q, et al. Illumination-aware image fusion for around-the-clock human detection in adverse environments from unmanned aerial vehicle[J]. Expert Syst Appl, 2022, 204: 117413. doi: 10.1016/j.eswa.2022.117413

[14] Talaat A S, El-Sappagh S. Enhanced aerial vehicle system techniques for detection and tracking in fog, sandstorm, and snow conditions[J]. J Supercomput, 2023, 79(14): 15868−15893. doi: 10.1007/s11227-023-05245-9

[15] Zhang R M, Xiao Y F, Jia Z N, et al. Improved YOLOv7 algorithm for target detection in complex environments from UAV perspective[J]. Opto-Electron Eng, 2024, 51(5): 240051. doi: 10.12086/oee.2024.240051

[16] Wu W T, Liu H, Li L L, et al. Application of local fully Convolutional Neural Network combined with YOLO v5 algorithm in small target detection of remote sensing image[J]. PLoS One, 2021, 16(10): e0259283. doi: 10.1371/journal.pone.0259283

[17] Pan W, Wei C, Qian C Y, et al. Improved YOLOv8s model for small object detection from perspective of drones[J]. Comput Eng Appl, 2024, 60(9): 142−150. doi: 10.3778/j.issn.1002-8331.2312-0043

[18] Fan B G, Wang S Q, Chen K Y. Small target detection model for aerial photography UAV based on improved YOLOv8[J]. J Comput Appl, 2024. https://link.cnki.net/urlid/51.1307.tp.20241017.1040.004.

[19] Zhang Z J, Liu X F, Liu J L. Reconstruction of low-brightness traffic accident scene based on UAV-borne LiDAR and empirical research[J]. Foreign Electron Meas Technol, 2024, 43(2): 174−182. doi: 10.19652/j.cnki.femt.2305456

[20] Zhang Z J, Liu X F, Chen Q. Improving accident scene monitoring with multisource data fusion under low-brightness and occlusion conditions[J]. IEEE Access, 2024, 12: 136026−136040. doi: 10.1109/ACCESS.2024.3438158

[21] Liu J B, Shan D H, Guo Z Y, et al. An approach for extracting traffic parameters from UAV video and verification[J]. J Highw Transp Res Dev, 2021, 38(8): 149−158. doi: 10.3969/j.issn.1002-0268.2021.08.020

[22] Li B Y, Liu X, Hu P, et al. All-in-one image restoration for unknown corruption[C]//Proceedings of 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022: 17431–17441. https://doi.org/10.1109/CVPR52688.2022.0169.

[23] Cao Y R, He Z J, Wang L J, et al. VisDrone-DET2021: the vision meets drone object detection challenge results[C]//Proceedings of 2021 IEEE/CVF International Conference on Computer Vision, 2021: 2847–2854. https://doi.org/10.1109/ICCVW54120.2021.00319.

[24] Zhang M R, Lucas J, Hinton G, et al. Lookahead optimizer: k steps forward, 1 step back[C]//Proceedings of the 33rd International Conference on Neural Information Processing Systems, 2019: 861.

[25] Pailla D R, Kollerathu V, Chennamsetty S S. Object detection on aerial imagery using CenterNet[Z]. arXiv: 1908.08244, 2019. https://arxiv.org/abs/1908.08244.
