

Abstract

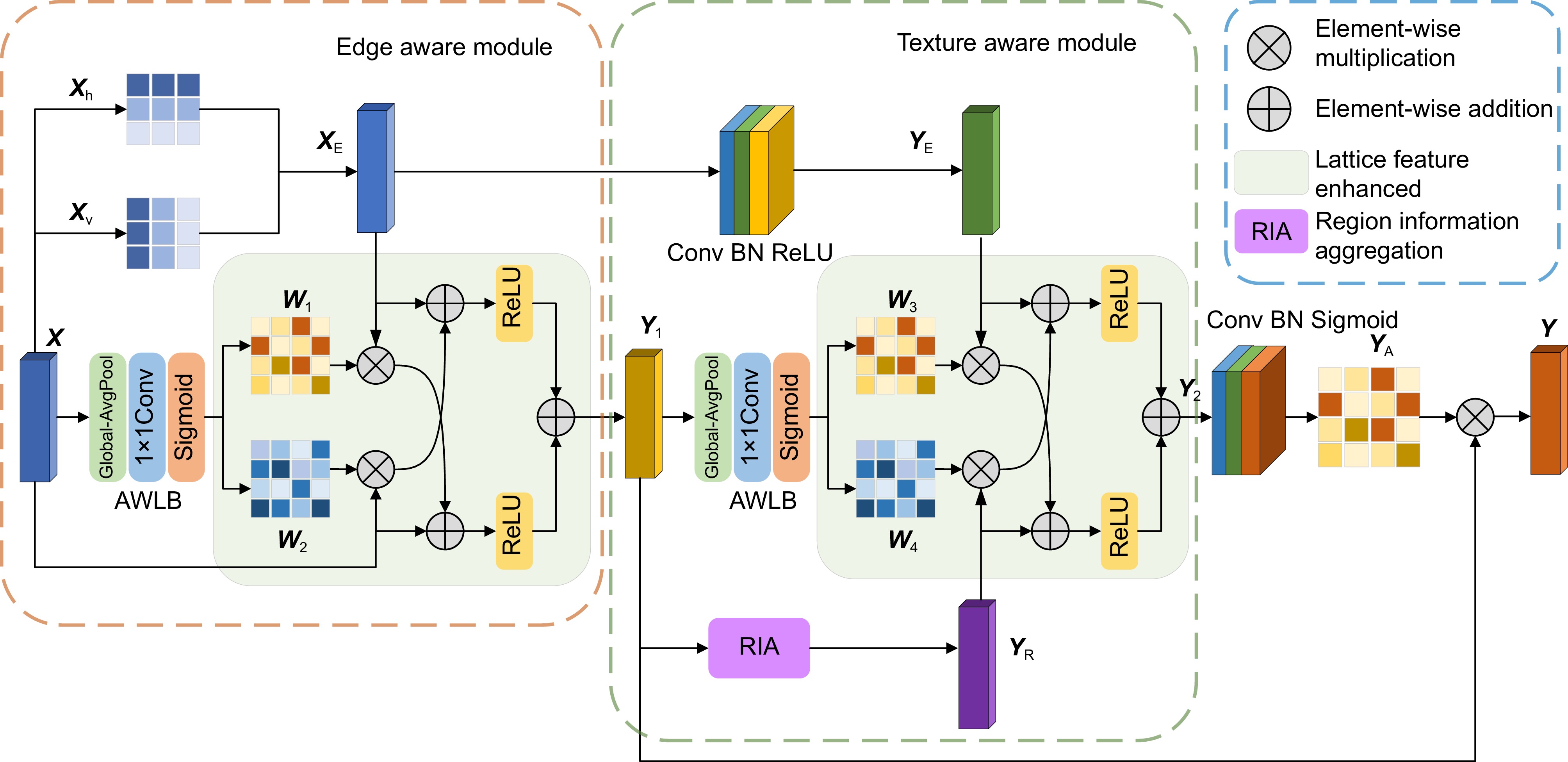

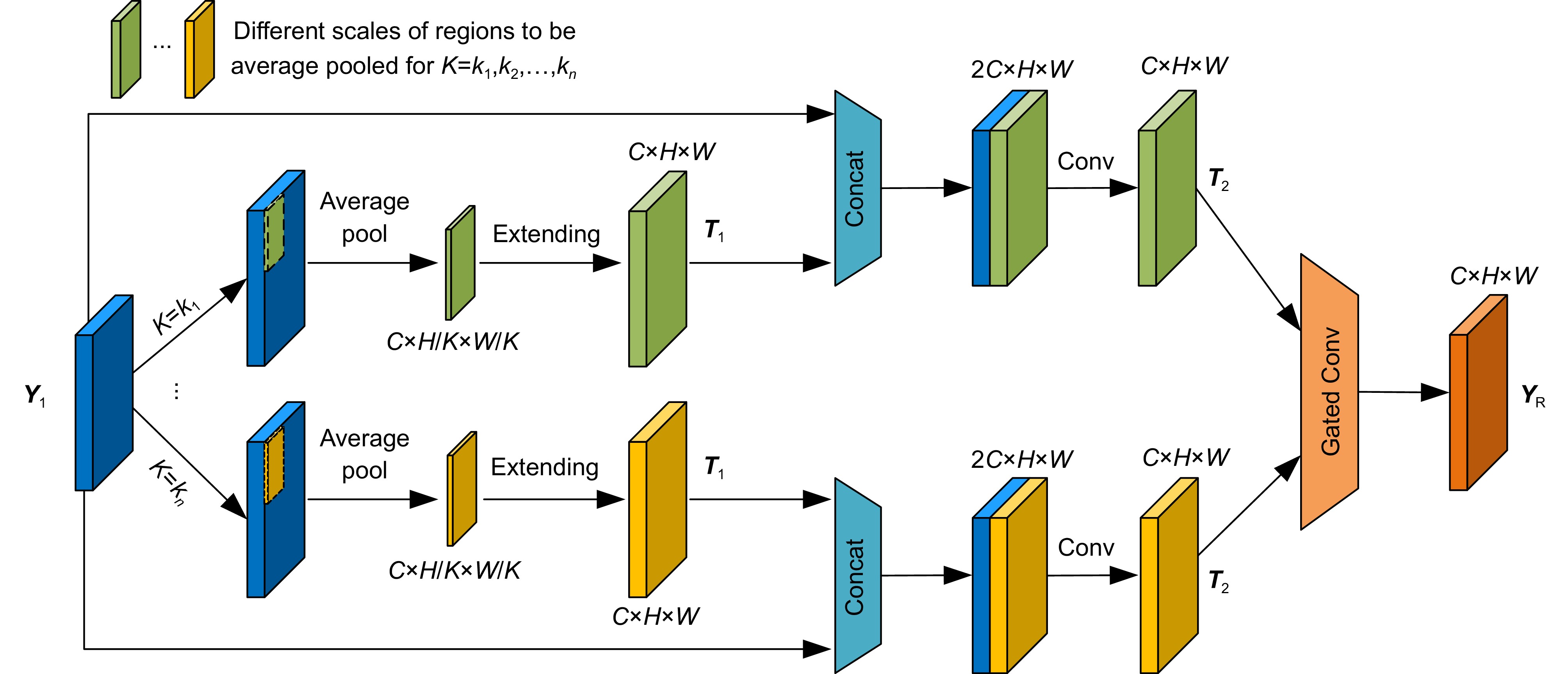

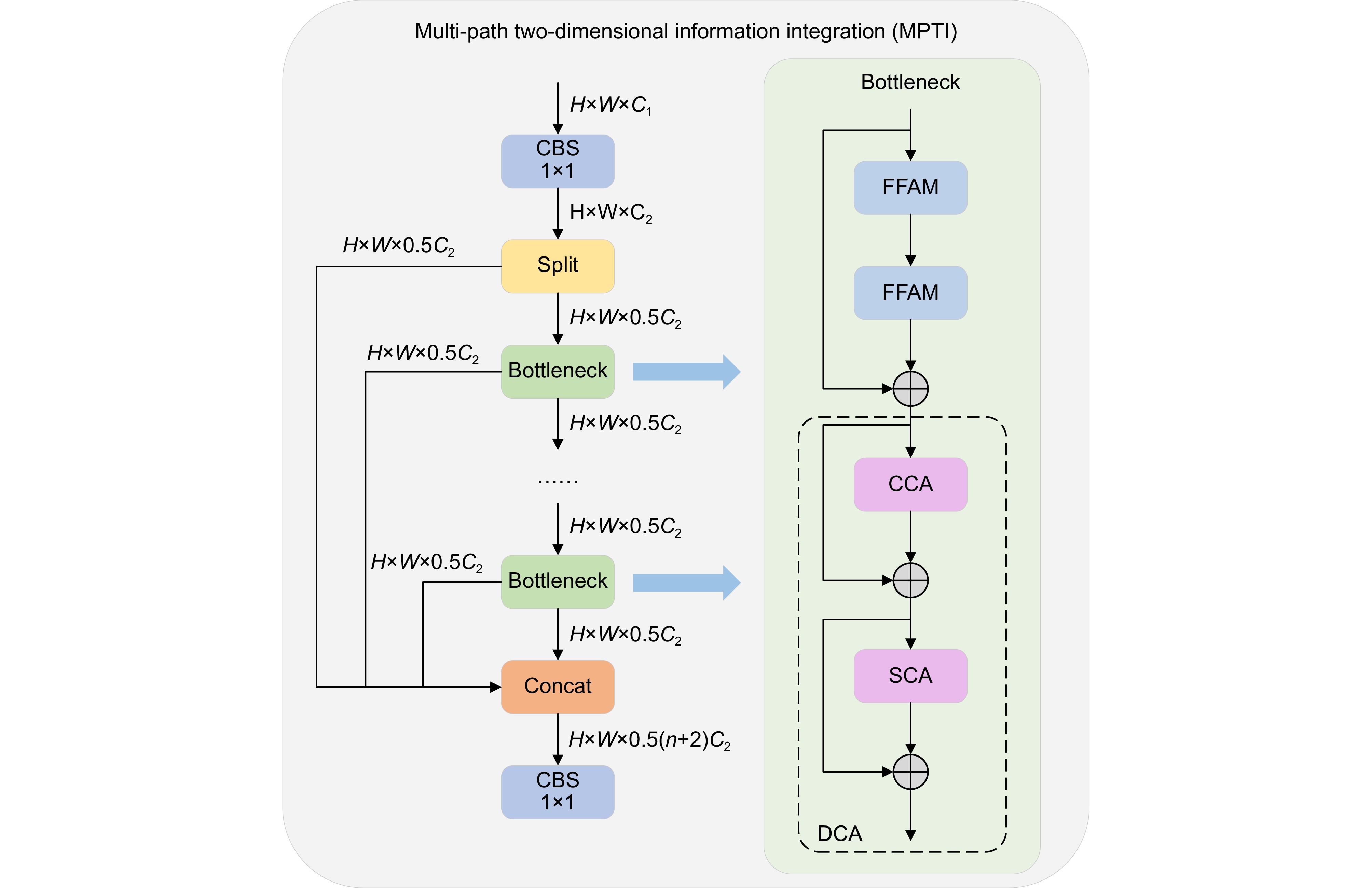

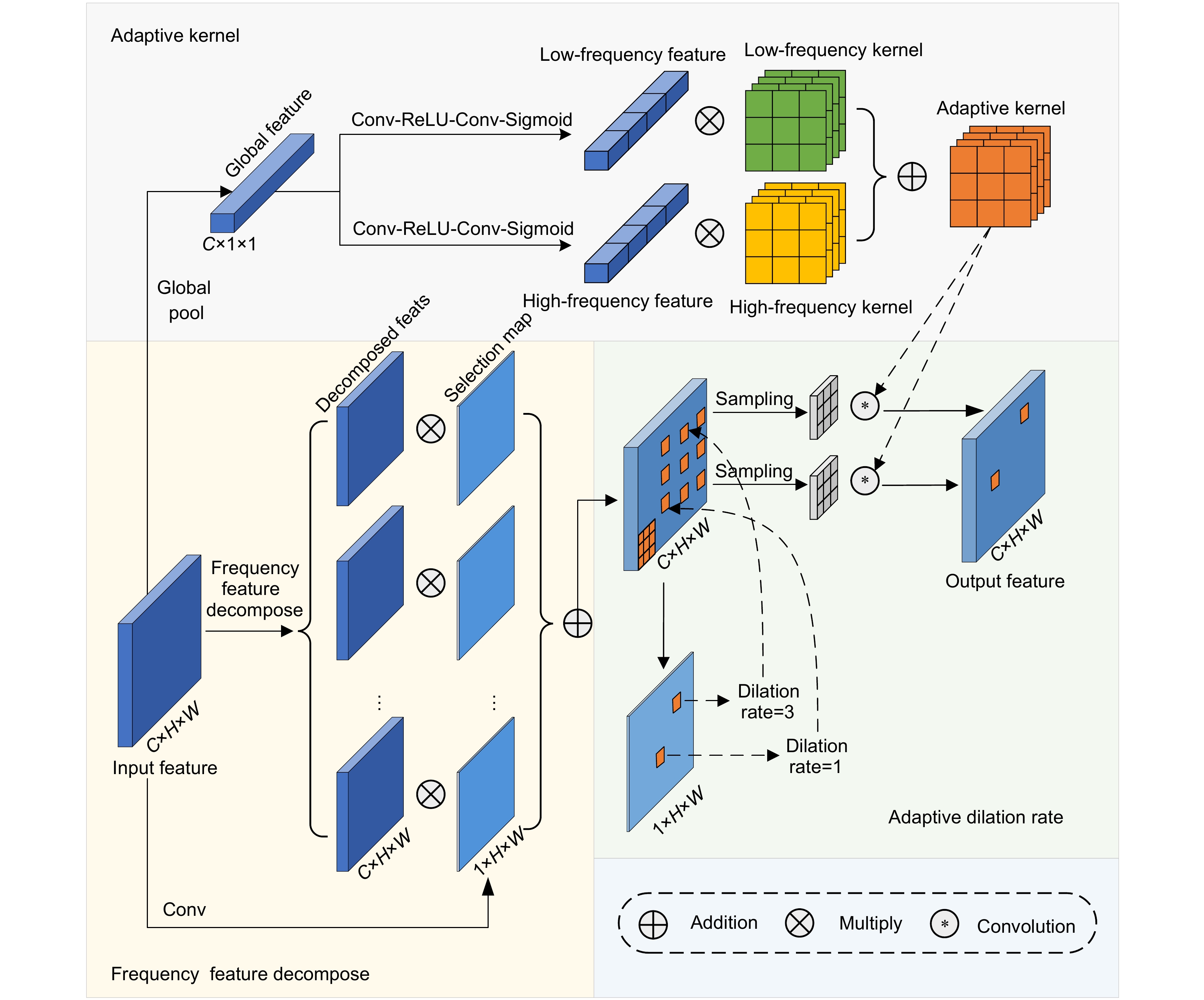

To address missed and false detections in X-ray security images, which stem from the high proportion of overlapping and occluded samples, the difficulty of extracting key features, and heavy background noise, an adaptive panoramic focusing algorithm for contraband detection in X-ray images is proposed. First, a foreground feature awareness module is designed that strengthens the edge structure and texture details of foreground targets. This lets contraband be separated accurately from background noise and makes the feature representation more accurate and complete. Next, a multi-path two-dimensional information integration module is built by combining a multi-branch structure with a dual cross-attention mechanism. It optimizes feature interaction and fusion along the channel and spatial dimensions, strengthening key-feature extraction while effectively suppressing background interference. Finally, a panoramic dynamic focus detection head is constructed that adjusts the receptive field dynamically through frequency-adaptive dilated convolutions, matching the feature frequency distribution of small contraband targets and thereby improving small-target recognition. Trained and tested on the public SIXray and OPIXray datasets, the model reaches mAP@0.5 values of 93.3% and 92.5%, respectively, outperforming the compared algorithms. The experimental results show that the proposed model substantially reduces missed and false detections of contraband in X-ray images, with high accuracy and robustness.
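The abstract states that the foreground feature awareness module strengthens edge structure so that contraband stands out from background noise, but its internal design is not given in this section. The sketch below illustrates only that general idea under an explicit assumption: a fixed 3×3 Sobel operator extracts edge magnitude, and a single-channel map is re-weighted by `1 + alpha * normalized_edge`. The functions `sobel_magnitude` and `edge_reweight` and this gating form are hypothetical, not the paper's module.

```python
import math

# 3x3 Sobel kernels for horizontal (x) and vertical (y) gradients.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img):
    """Gradient magnitude of a 2D list, using replicate padding at the borders.

    Computed as a cross-correlation; flipping the kernels would only change
    the sign of gx and gy, not the magnitude.
    """
    h, w = len(img), len(img[0])

    def px(r, c):
        # Replicate padding: clamp coordinates into the image.
        return img[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            gx = sum(SOBEL_X[i][j] * px(r + i - 1, c + j - 1) for i in range(3) for j in range(3))
            gy = sum(SOBEL_Y[i][j] * px(r + i - 1, c + j - 1) for i in range(3) for j in range(3))
            out[r][c] = math.hypot(gx, gy)
    return out


def edge_reweight(feat, alpha=1.0):
    """Hypothetical edge gating: scale each value by 1 + alpha * (edge / max edge)."""
    mag = sobel_magnitude(feat)
    peak = max(max(row) for row in mag) or 1.0  # avoid division by zero on flat maps
    return [
        [f * (1.0 + alpha * m / peak) for f, m in zip(frow, mrow)]
        for frow, mrow in zip(feat, mag)
    ]


if __name__ == "__main__":
    step = [[0, 0, 0, 1, 1, 1] for _ in range(5)]  # vertical step edge
    print(sobel_magnitude(step)[2])  # [0.0, 0.0, 4.0, 4.0, 0.0, 0.0]
```

In a detector this weighting would act on learned multi-channel feature maps rather than raw pixels; the point here is only that values on strong edges are amplified while flat regions are left unchanged.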


Overview

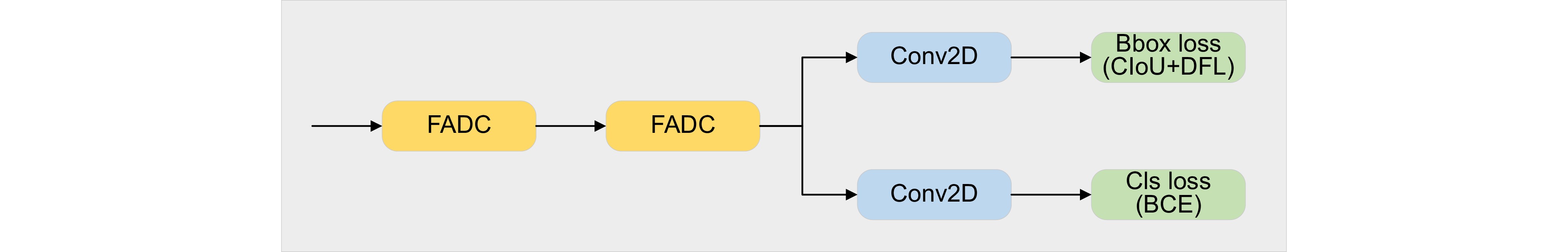

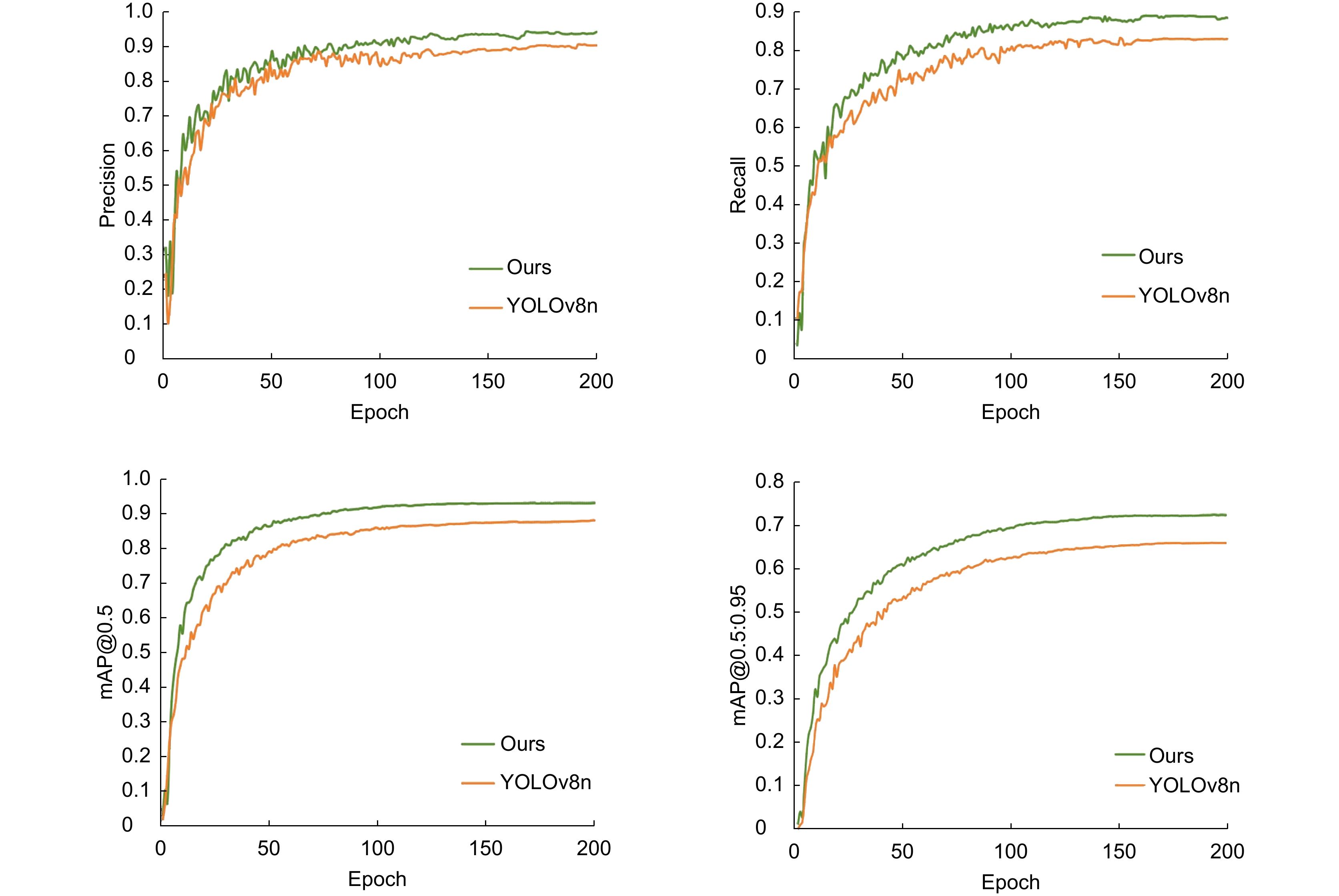

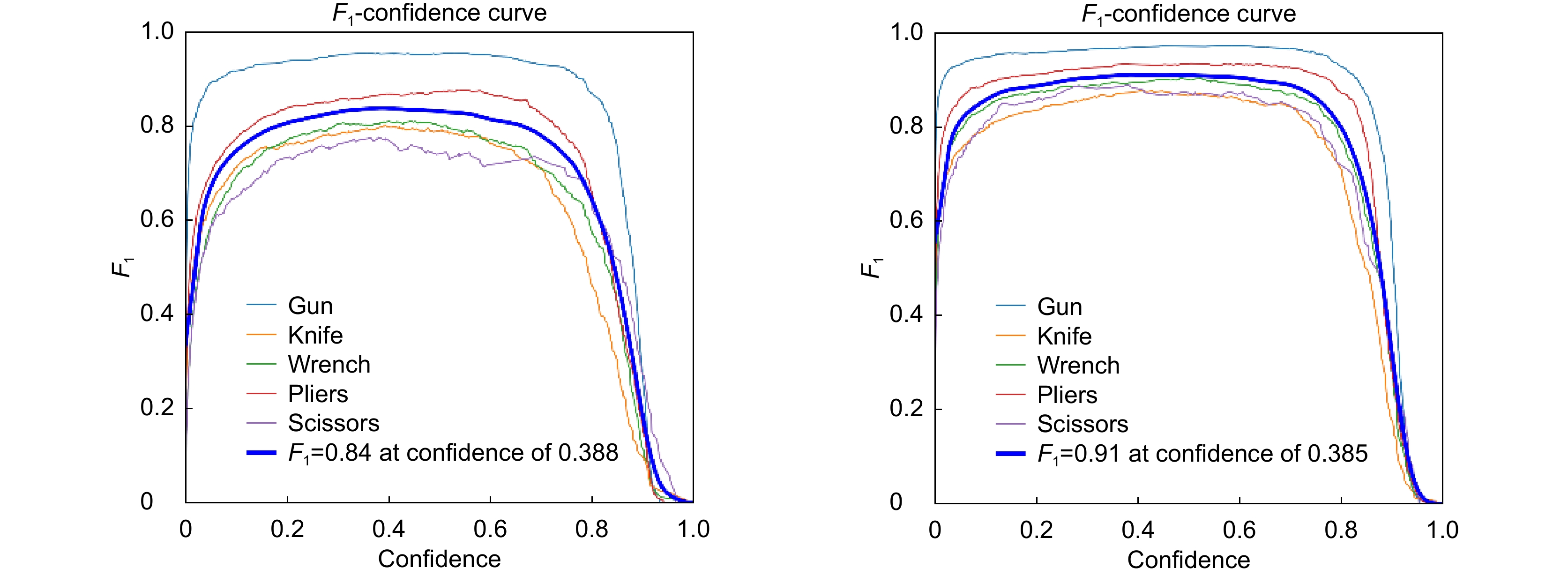

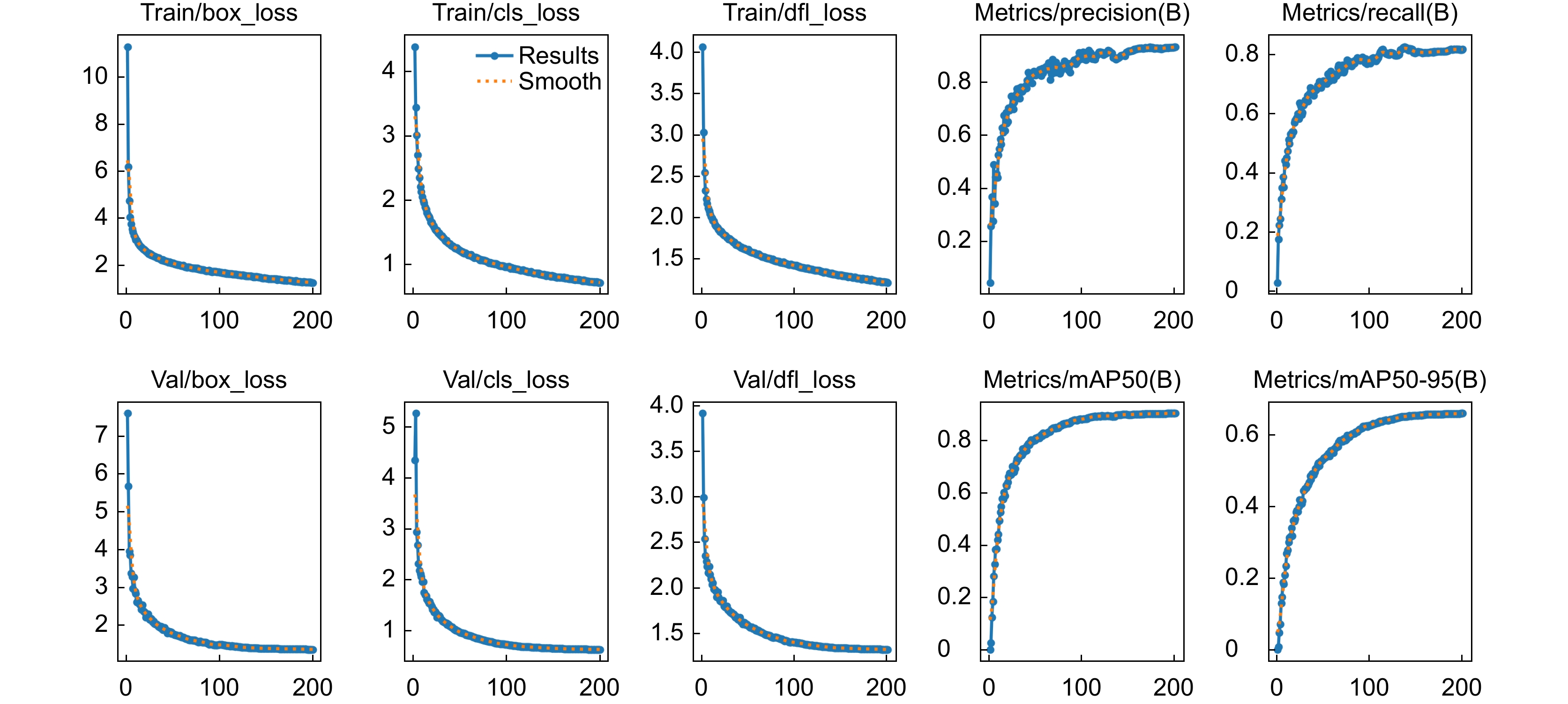

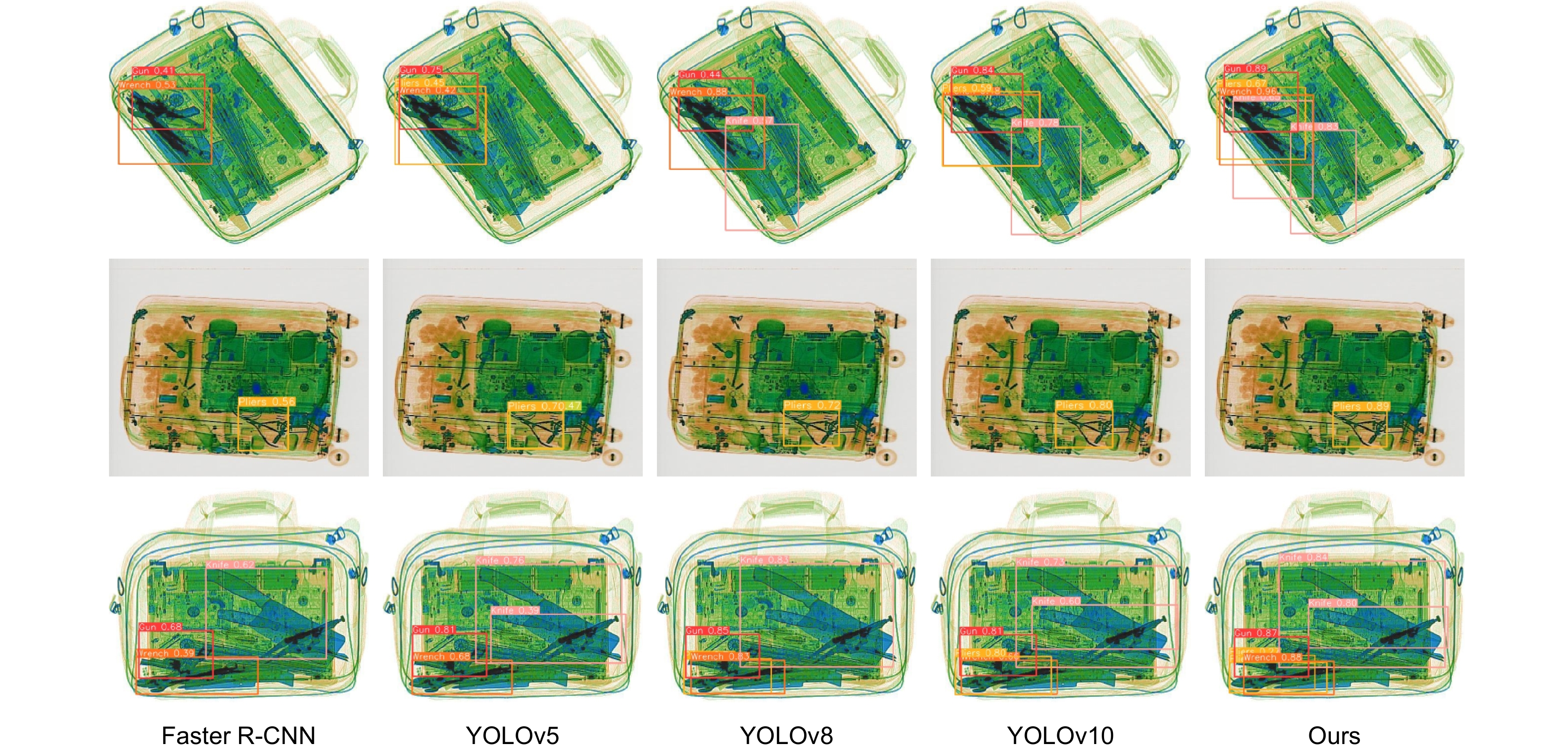

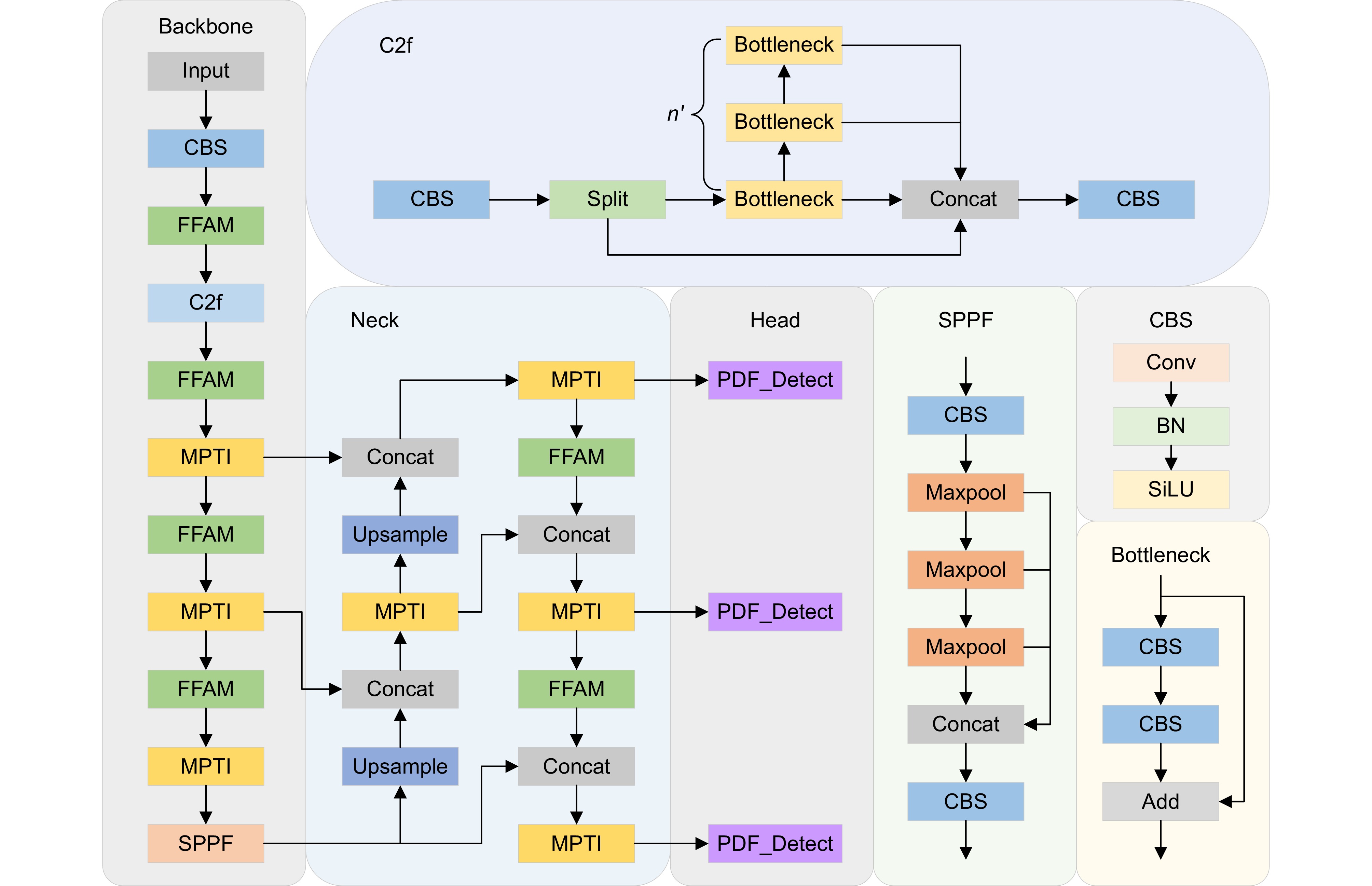

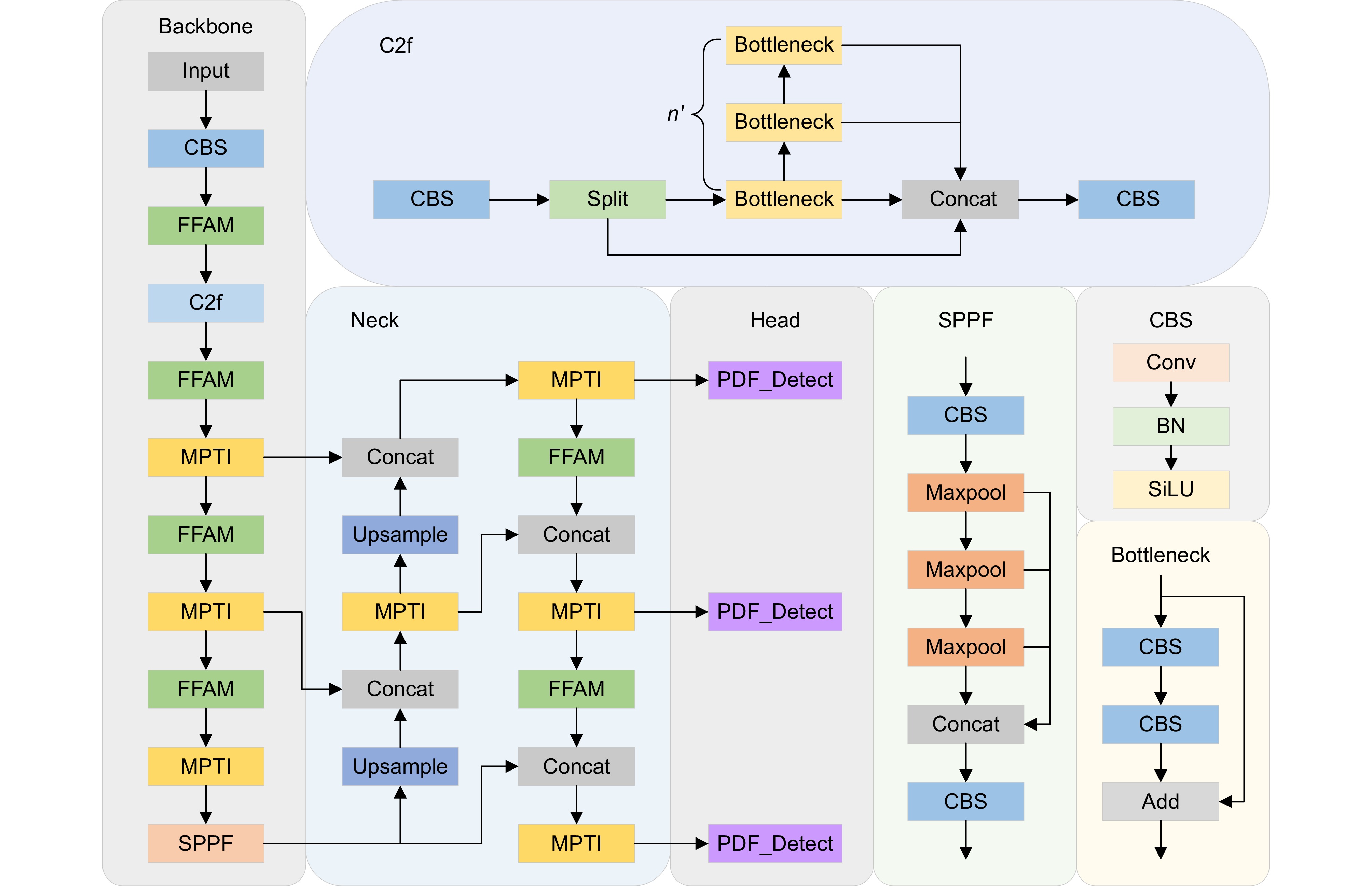

X-ray detection of prohibited items plays a crucial role in public transportation, logistics, and customs inspection. As a key technology in image processing and object detection, its primary task is to identify the category and location of prohibited items accurately against complex backgrounds, safeguarding people, property, and goods in transit. Unlike natural images, images of prohibited items are produced by X-ray imaging, and the targets vary widely in category and shape. These images also suffer from target stacking, occlusion, low contrast, and cluttered backgrounds, which make the correct targets hard to identify and lead to missed and false detections. Precise identification of prohibited items and higher detection efficiency have therefore become central challenges in current research. To address target overlap and occlusion, the difficulty of extracting key features, and missed detection of small contraband, this paper proposes an adaptive panoramic focus X-ray contraband detection algorithm built on the YOLOv8n model, with three new components aimed at detection accuracy and efficiency. First, a foreground feature awareness module (FFAM) substantially strengthens the model's representation of foreground-target features, enabling accurate identification of contraband in overlapping and occluded scenes. Second, a multi-path two-dimensional information integration (MPTI) module optimizes the interaction and integration of multi-scale features across the channel and spatial dimensions, extracting more comprehensive contextual information and improving the model's recognition of key features.
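MPTI is described as pairing a multi-branch structure with dual cross-attention over the channel and spatial dimensions. As a hedged illustration of what cross-attention along each dimension computes, the sketch below takes feature maps from two hypothetical branches (each C × N, with N flattened positions) and applies scaled dot-product cross-attention first with channels as tokens, then with positions as tokens. It deliberately omits the learned query/key/value projections, normalization, residual paths, and multi-scale token construction a real module would include, so it shows the mechanism, not MPTI itself; all names are illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def transpose(mat):
    return [list(col) for col in zip(*mat)]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query token attends over the key/value tokens."""
    d = len(keys[0])
    out = []
    for q in queries:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys])
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out


def channel_then_spatial(x, y):
    """Cross-attention of branch x onto branch y, first over channels, then over positions.

    x, y: C x N lists (C channels, N flattened spatial positions).
    Channel step: C tokens of length N, giving a C x C attention map.
    Spatial step: N tokens of length C, giving an N x N attention map.
    """
    xc = cross_attention(x, y, y)                                    # C x N
    xs = cross_attention(transpose(xc), transpose(y), transpose(y))  # N x C
    return transpose(xs)                                             # back to C x N
```

The channel-then-spatial order is one arbitrary choice; running the two attentions in parallel and fusing their outputs is equally plausible, and this section does not say which arrangement MPTI uses.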
Finally, a panoramic dynamic focus detection head (PDF_Detect) is introduced. By combining frequency-adaptive dilated convolutions with a dynamic focusing mechanism, it lets the model select an appropriate receptive field size according to the frequency distribution of features. This sharpens the model's focus on small contraband targets, improving small-target detection and reducing both missed and false detections in complex scenes. Experiments were conducted on the public SIXray and OPIXray datasets. The proposed method achieves mAP@0.5 values of 93.3% and 92.5%, improvements of 3.6 and 2.8 percentage points over the baseline model, respectively, and outperforms the other compared algorithms. These results show that the algorithm substantially reduces missed and false detections of contraband in X-ray images, with high accuracy and robustness.
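PDF_Detect is said to choose its receptive field from the frequency distribution of features via frequency-adaptive dilated convolution. The 1D sketch below illustrates that idea under simplifying assumptions: it measures the share of high-frequency energy in a local window with a naive DFT, assigns a small dilation where that share is high (fine detail, as around small objects) and a large dilation where the signal is smooth, then applies a 3-tap dilated convolution with the chosen rate at each position. The window size, the threshold rule, the rate set `(4, 2, 1)`, and the kernel weights are hypothetical choices, not values from the paper.

```python
import cmath


def high_freq_ratio(window):
    """Share of non-DC spectral energy in the upper half of the positive DFT bins."""
    n = len(window)
    energy = []
    for k in range(1, n // 2 + 1):  # positive frequencies; the DC bin k=0 is skipped
        s = sum(window[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        energy.append(abs(s) ** 2)
    split = len(energy) // 2
    low, high = sum(energy[:split]), sum(energy[split:])
    total = low + high
    # Tolerance absorbs floating-point round-off for (near-)constant windows.
    return 0.0 if total < 1e-9 else high / total


def pick_dilation(ratio, rates=(4, 2, 1)):
    """Map a high-frequency ratio in [0, 1] to a rate: smooth -> large, detailed -> small."""
    return rates[min(int(ratio * len(rates)), len(rates) - 1)]


def adaptive_dilated_conv1d(x, weights=(0.25, 0.5, 0.25), win=8):
    """3-tap convolution whose dilation is chosen per position from the local spectrum.

    Returns the filtered signal and the dilation rate used at each position.
    """
    n = len(x)

    def at(i):
        # Replicate padding at both ends.
        return x[min(max(i, 0), n - 1)]

    out, rates = [], []
    for i in range(n):
        window = [at(j) for j in range(i - win // 2, i + win // 2)]
        d = pick_dilation(high_freq_ratio(window))
        rates.append(d)
        # Taps at i - d, i, i + d.
        out.append(sum(w * at(i + (k - 1) * d) for k, w in enumerate(weights)))
    return out, rates
```

For example, `adaptive_dilated_conv1d([2.0] * 16)` uses rate 4 everywhere because a constant signal has no non-DC energy, whereas positions inside an alternating ±1 segment get rate 1. A learned module would predict the rates from features on 2D maps instead of using a fixed rule like this one.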


Table 1. Ablation experiment results of the improved algorithm on the SIXray dataset

| No. | YOLOv8n | A | B | C | P/% | R/% | mAP@0.5/% | mAP@0.5:0.95/% | Params/M | GFLOPs |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | √ | × | × | × | 91.3 | 84.2 | 89.7 | 66.1 | 3.01 | 8.1 |
| 2 | √ | √ | × | × | 93.8 | 85.4 | 91.8 | 68.6 | 4.17 | 11.3 |
| 3 | √ | × | √ | × | 92.0 | 84.0 | 90.6 | 66.2 | 2.48 | 6.2 |
| 4 | √ | × | × | √ | 91.8 | 84.9 | 91.3 | 66.5 | 2.78 | 7.7 |
| 5 | √ | √ | √ | × | 94.1 | 86.3 | 92.2 | 69.4 | 2.86 | 7.8 |
| 6 | √ | √ | × | √ | 94.7 | 86.7 | 92.6 | 70.7 | 3.59 | 9.4 |
| 7 | √ | × | √ | √ | 94.3 | 87.3 | 91.9 | 68.6 | 2.65 | 6.8 |
| 8 | √ | √ | √ | √ | 94.6 | 88.8 | 93.3 | 72.4 | 2.92 | 7.9 |
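The ablation gains in Table 1 are easiest to read as absolute differences from the YOLOv8n baseline (row 1). The short script below recomputes those differences from the tabulated values; mAP changes are in percentage points and parameter changes in millions. The dictionary keys are only labels for which of A, B, and C are enabled in each row.

```python
# (mAP@0.5 %, mAP@0.5:0.95 %, Params M, GFLOPs) transcribed from Table 1,
# keyed by the modules enabled on top of YOLOv8n.
TABLE1 = {
    "baseline": (89.7, 66.1, 3.01, 8.1),
    "A": (91.8, 68.6, 4.17, 11.3),
    "B": (90.6, 66.2, 2.48, 6.2),
    "C": (91.3, 66.5, 2.78, 7.7),
    "A+B": (92.2, 69.4, 2.86, 7.8),
    "A+C": (92.6, 70.7, 3.59, 9.4),
    "B+C": (91.9, 68.6, 2.65, 6.8),
    "A+B+C": (93.3, 72.4, 2.92, 7.9),
}


def deltas_vs_baseline(table):
    """Absolute change of every metric relative to the baseline row, rounded to 2 decimals."""
    base = table["baseline"]
    return {
        name: tuple(round(v - b, 2) for v, b in zip(row, base))
        for name, row in table.items()
        if name != "baseline"
    }


for name, d in deltas_vs_baseline(TABLE1).items():
    print(f"{name:6s} mAP@0.5 {d[0]:+.1f} pp  mAP@0.5:0.95 {d[1]:+.1f} pp  "
          f"Params {d[2]:+.2f} M  GFLOPs {d[3]:+.1f}")
```

Module A alone is the most expensive configuration (+1.16 M parameters, +3.2 GFLOPs), while the full model ends slightly below the baseline on both counts (-0.09 M, -0.2 GFLOPs) with a 3.6-point mAP@0.5 gain.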

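Table 2. Per-category AP comparison in the ablation experiments on the SIXray dataset

| No. | YOLOv8n | A | B | C | GU AP/% | KN AP/% | WR AP/% | PL AP/% | SC AP/% | mAP@0.5/% |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | √ | × | × | × | 97.8 | 86.5 | 87.6 | 94.5 | 84.8 | 89.7 |
| 2 | √ | √ | × | × | 98.5 | 87.8 | 90.5 | 95.3 | 87.4 | 91.8 |
| 3 | √ | × | √ | × | 98.2 | 86.9 | 88.6 | 95.1 | 85.2 | 90.6 |
| 4 | √ | × | × | √ | 98.4 | 87.4 | 88.4 | 94.6 | 87.9 | 91.3 |
| 5 | √ | √ | √ | × | 99.2 | 88.4 | 90.7 | 96.0 | 86.5 | 92.2 |
| 6 | √ | √ | × | √ | 99.1 | 88.2 | 92.1 | 95.8 | 86.8 | 92.6 |
| 7 | √ | × | √ | √ | 98.7 | 86.8 | 92.6 | 95.6 | 85.8 | 91.9 |
| 8 | √ | √ | √ | √ | 99.4 | 89.0 | 93.5 | 96.4 | 87.1 | 93.3 |

GU, KN, WR, PL, and SC denote the SIXray categories gun, knife, wrench, pliers, and scissors.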
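Subtracting row 1 from row 8 of Table 2 shows where the full model gains most over the YOLOv8n baseline. The snippet below does this per category, with values transcribed from the table; differences are in percentage points.

```python
CATEGORIES = ("GU", "KN", "WR", "PL", "SC")
BASELINE_AP = (97.8, 86.5, 87.6, 94.5, 84.8)  # row 1: YOLOv8n only
FULL_AP = (99.4, 89.0, 93.5, 96.4, 87.1)      # row 8: YOLOv8n + A + B + C

# Per-category AP gain, rounded to one decimal like the table.
gains = {c: round(f - b, 1) for c, b, f in zip(CATEGORIES, BASELINE_AP, FULL_AP)}
largest = max(gains, key=gains.get)

print(gains)
print(f"largest gain: {largest} (+{gains[largest]} pp)")
```

The wrench class (WR) improves the most, by 5.9 points, while the already near-saturated gun class (GU) gains 1.6.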

Table 3. Comparison experiment results on the SIXray dataset

| Model | GU AP/% | KN AP/% | WR AP/% | PL AP/% | SC AP/% | P/% | R/% | mAP@0.5/% | FPS | Params/M | GFLOPs |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SSD | 91.6 | 74.8 | 69.8 | 80.9 | 83.4 | 83.5 | 70.8 | 79.6 | 60.4 | 26.28 | 62.7 |
| Faster R-CNN | 89.2 | 79.4 | 80.1 | 86.4 | 84.2 | 87.9 | 75.1 | 85.5 | 31 | 136.72 | 369.8 |
| YOLOv5n | 98.7 | 88.2 | 82.6 | 90.5 | 79.7 | 92.7 | 78.7 | 87.9 | 101 | 2.50 | 7.1 |
| YOLOv6 | 97.1 | 83.7 | 87.0 | 92.2 | 81.1 | 87.4 | 82.1 | 88.2 | 121.6 | 4.23 | 11.8 |
| YOLOv7-Tiny | 97.7 | 82.6 | 85.0 | 89.8 | 78.7 | 86.6 | 81.3 | 86.7 | 94.5 | 6.02 | 13.2 |
| YOLOv8n | 97.8 | 86.5 | 87.6 | 94.5 | 84.8 | 91.3 | 84.2 | 89.7 | 108.6 | 3.01 | 8.1 |
| YOLOv9 | 98.4 | 86.6 | 88.0 | 92.9 | 84.8 | 91.4 | 82.5 | 90.1 | 111.5 | 2.27 | 8.2 |
| YOLOv10n | 98.3 | 87.5 | 90.2 | 95.8 | 86.0 | 90.1 | 85.7 | 91.4 | 116.3 | 2.71 | 8.4 |
| Ours | 99.4 | 89.0 | 93.5 | 96.4 | 87.1 | 94.6 | 88.8 | 93.3 | 125.9 | 2.92 | 7.9 |
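All comparison tables report mAP@0.5, the mean over categories of the average precision (AP) obtained when a detection counts as correct only if its IoU with a ground-truth box is at least 0.5; mAP@0.5:0.95 additionally averages AP over IoU thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below shows one common way to compute AP for a single category: greedy matching of score-sorted detections, then all-point interpolation of the precision-recall curve. The exact evaluation toolkit and interpolation used for these tables are not stated here, so this protocol is an assumption.

```python
def iou(a, b):
    """Intersection over union of two boxes in (x1, y1, x2, y2) format."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truth, thr=0.5):
    """AP for one category.

    detections: list of (image_id, score, box); ground_truth: dict image_id -> list of boxes.
    Each detection, in descending score order, is matched to its highest-IoU ground-truth
    box; it is a true positive only if IoU >= thr and that box is not already matched.
    """
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    matched = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    hits = []
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best, best_j = 0.0, -1
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best:
                best, best_j = overlap, j
        if best >= thr and not matched[img][best_j]:
            matched[img][best_j] = True
            hits.append(1)
        else:
            hits.append(0)  # false positive: IoU too low or box already matched
    precisions, recalls, tp = [], [], 0
    for i, h in enumerate(hits, start=1):
        tp += h
        precisions.append(tp / i)
        recalls.append(tp / n_gt if n_gt else 0.0)
    # All-point interpolation: precision at each recall level is the maximum
    # precision at any equal or higher recall; integrate over recall steps.
    ap, prev_recall = 0.0, 0.0
    for i, r in enumerate(recalls):
        ap += (r - prev_recall) * max(precisions[i:])
        prev_recall = r
    return ap
```

mAP@0.5 is then the mean of `average_precision(...)` over the five categories of each dataset.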

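Table 4. Comparison experiment results on the OPIXray dataset

| Model | ST AP/% | FO AP/% | SC AP/% | UT AP/% | MU AP/% | P/% | R/% | mAP@0.5/% | FPS | Params/M | GFLOPs |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SSD | 33.5 | 73.4 | 89.5 | 64.1 | 80.8 | 70.5 | 62.8 | 65.7 | 53.2 | 26.28 | 62.7 |
| Faster R-CNN | 68.3 | 88.7 | 90.0 | 82.5 | 89.4 | 89.0 | 82.7 | 84.8 | 25.4 | 136.72 | 369.8 |
| YOLOv5n | 72.6 | 92.4 | 98.5 | 85.1 | 93.3 | 87.8 | 83.9 | 88.4 | 109.8 | 2.50 | 7.1 |
| YOLOv6 | 78.6 | 92.0 | 98.3 | 87.1 | 94.6 | 89.2 | 86.1 | 90.1 | 119.5 | 4.23 | 11.8 |
| YOLOv7-Tiny | 65.7 | 91.6 | 97.9 | 84.0 | 84.0 | 90.7 | 81.4 | 86.4 | 102.9 | 6.02 | 13.2 |
| YOLOv8n | 76.9 | 94.3 | 98.0 | 85.3 | 93.8 | 90.5 | 85.5 | 89.7 | 116.8 | 3.01 | 8.1 |
| YOLOv9 | 75.0 | 91.6 | 98.6 | 83.0 | 93.5 | 88.5 | 85.2 | 88.3 | 111.7 | 2.27 | 8.2 |
| YOLOv10n | 68.9 | 93.8 | 97.4 | 81.8 | 93.4 | 88.3 | 81.5 | 84.9 | 108.5 | 2.71 | 8.4 |
| Ours | 79.4 | 95.9 | 99.2 | 88.5 | 96.1 | 93.2 | 89.4 | 92.5 | 123.8 | 2.92 | 7.9 |

ST, FO, SC, UT, and MU denote the OPIXray categories straight knife, folding knife, scissors, utility knife, and multi-tool knife.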
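The overview quotes improvements of 3.6 and 2.8 over the baseline; these are absolute mAP@0.5 differences between the YOLOv8n and Ours rows of Tables 3 and 4. The snippet below recomputes them, together with the relative change and the FPS difference, using only values transcribed from the two tables.

```python
# (mAP@0.5 %, FPS) transcribed from Tables 3 (SIXray) and 4 (OPIXray)
RESULTS = {
    "SIXray": {"YOLOv8n": (89.7, 108.6), "Ours": (93.3, 125.9)},
    "OPIXray": {"YOLOv8n": (89.7, 116.8), "Ours": (92.5, 123.8)},
}


def compare(dataset):
    """Absolute (percentage-point) and relative mAP@0.5 gain, plus FPS gain, vs. YOLOv8n."""
    base, ours = RESULTS[dataset]["YOLOv8n"], RESULTS[dataset]["Ours"]
    return {
        "mAP@0.5 gain (pp)": round(ours[0] - base[0], 1),
        "relative mAP gain (%)": round(100 * (ours[0] - base[0]) / base[0], 1),
        "FPS gain": round(ours[1] - base[1], 1),
    }


for name in RESULTS:
    print(name, compare(name))
```

Relative to the baseline score, the SIXray gain is about 4.0%, so the quoted 3.6 is best read as percentage points. The proposed model is also faster than YOLOv8n on both datasets (+17.3 and +7.0 FPS).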

