
摘要

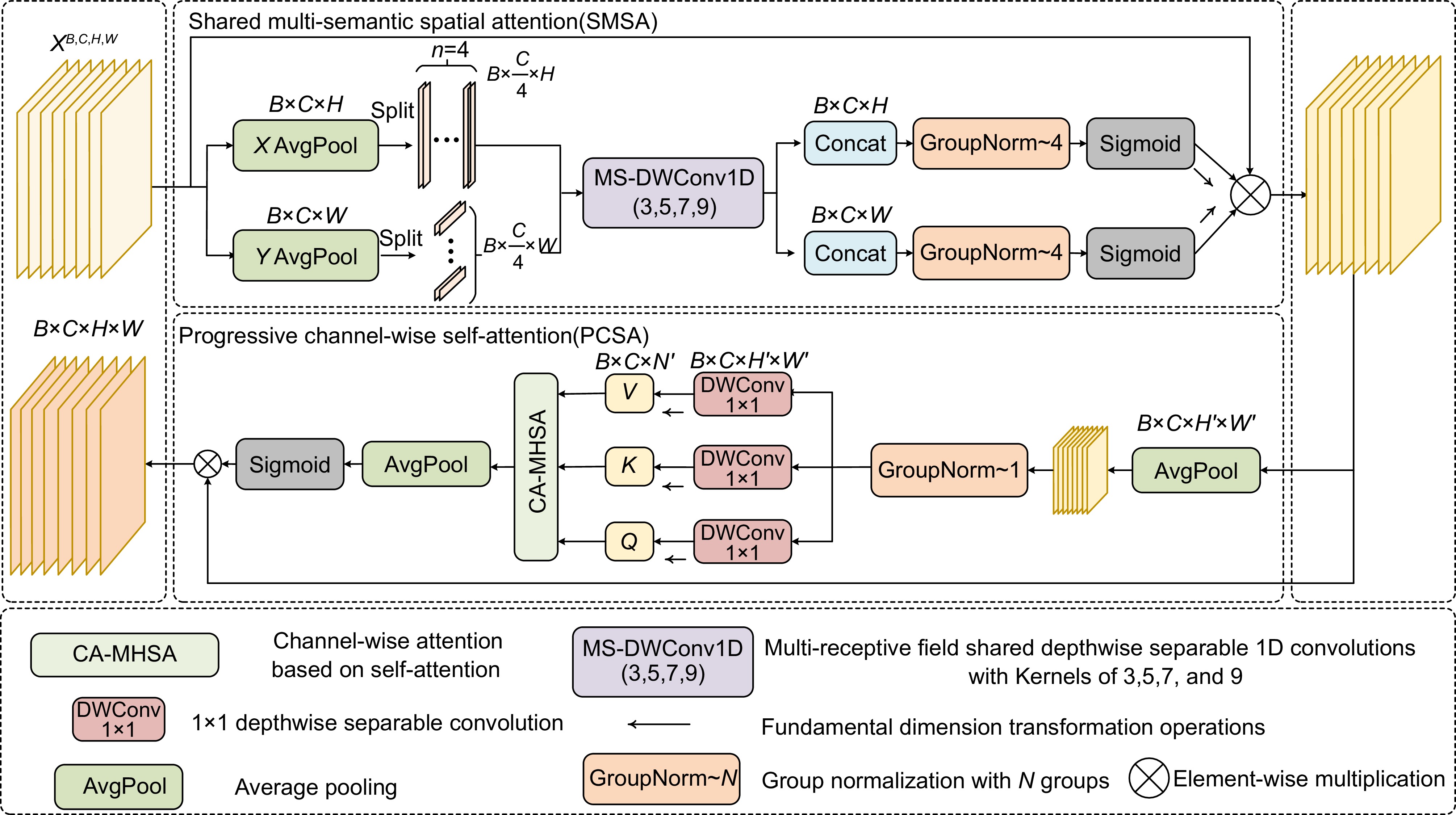

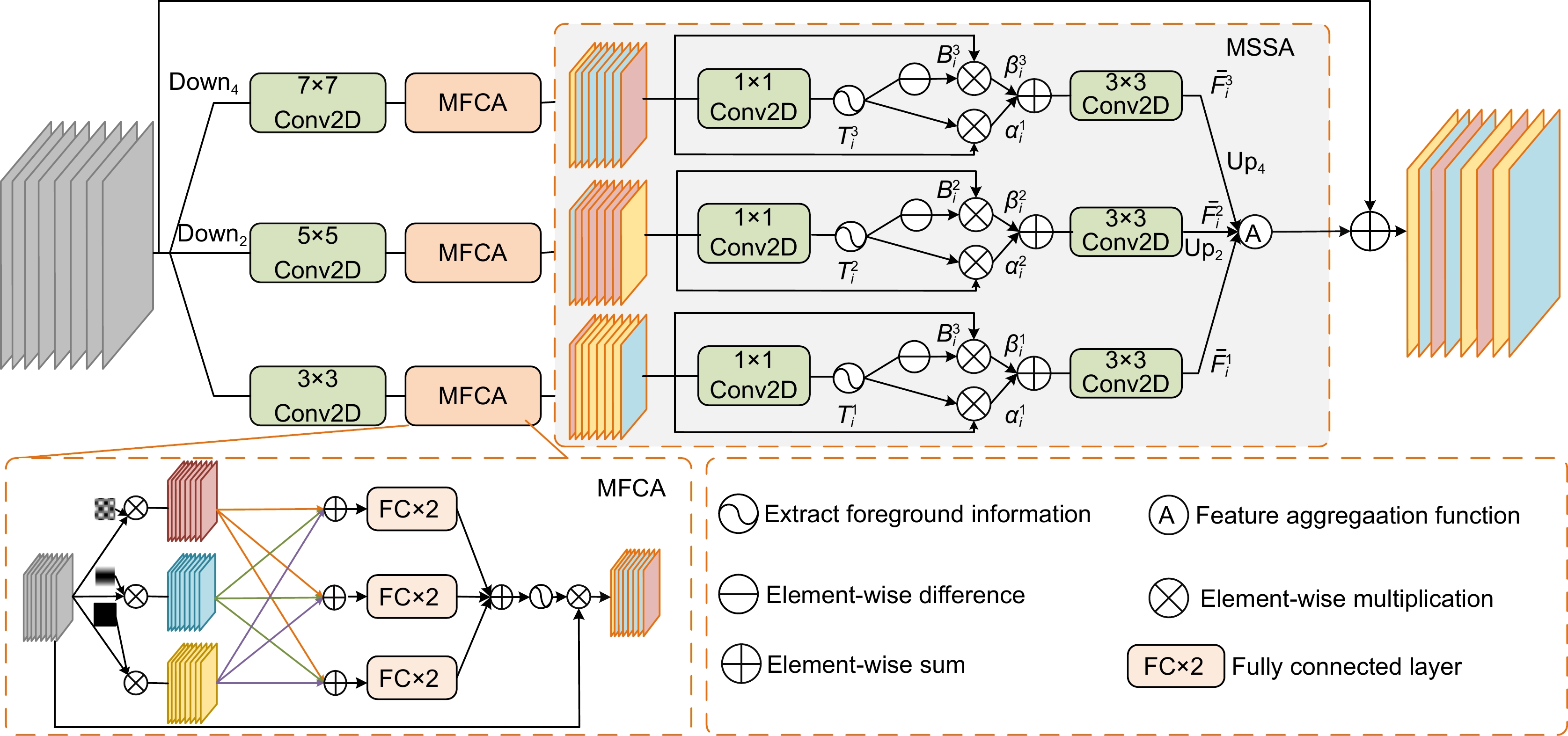

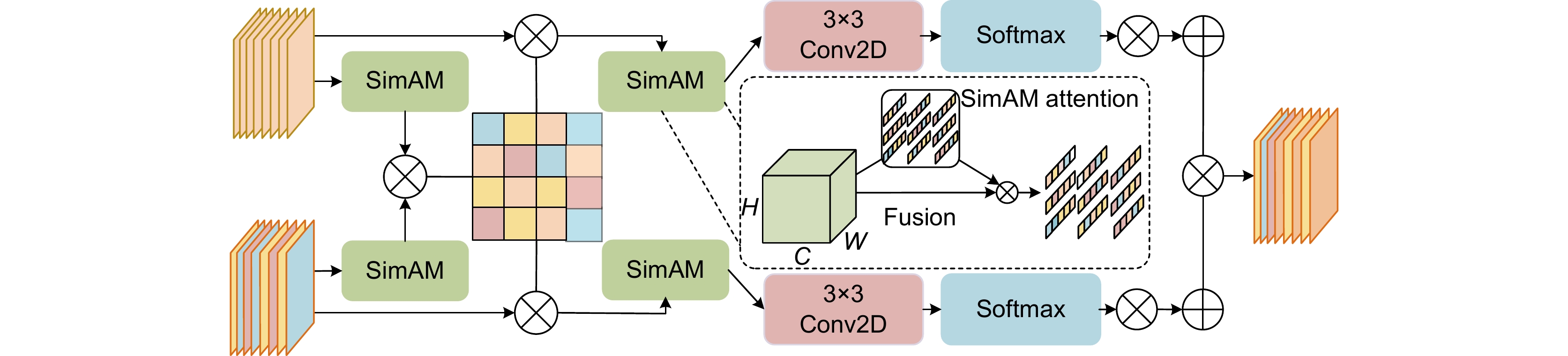

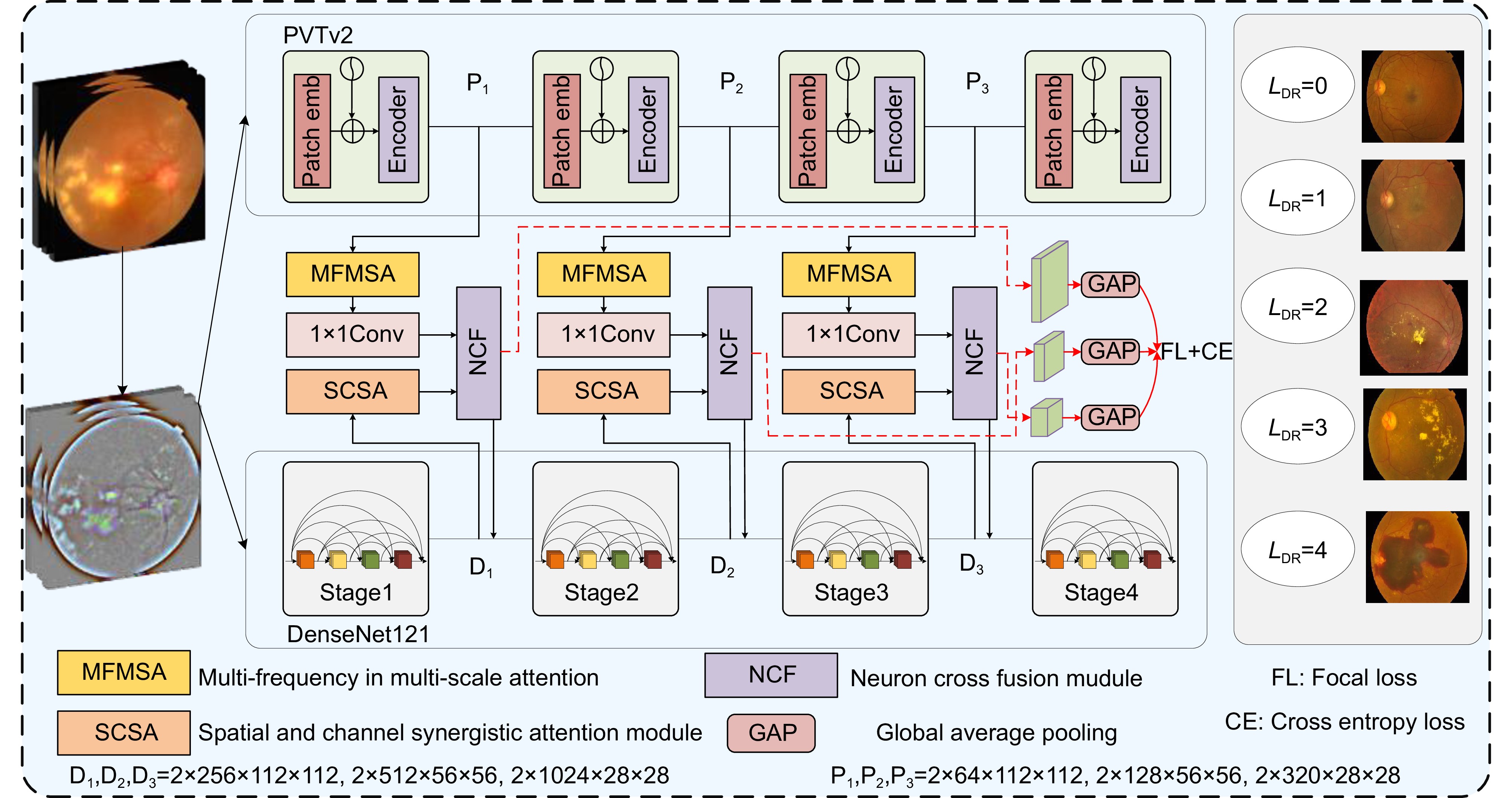

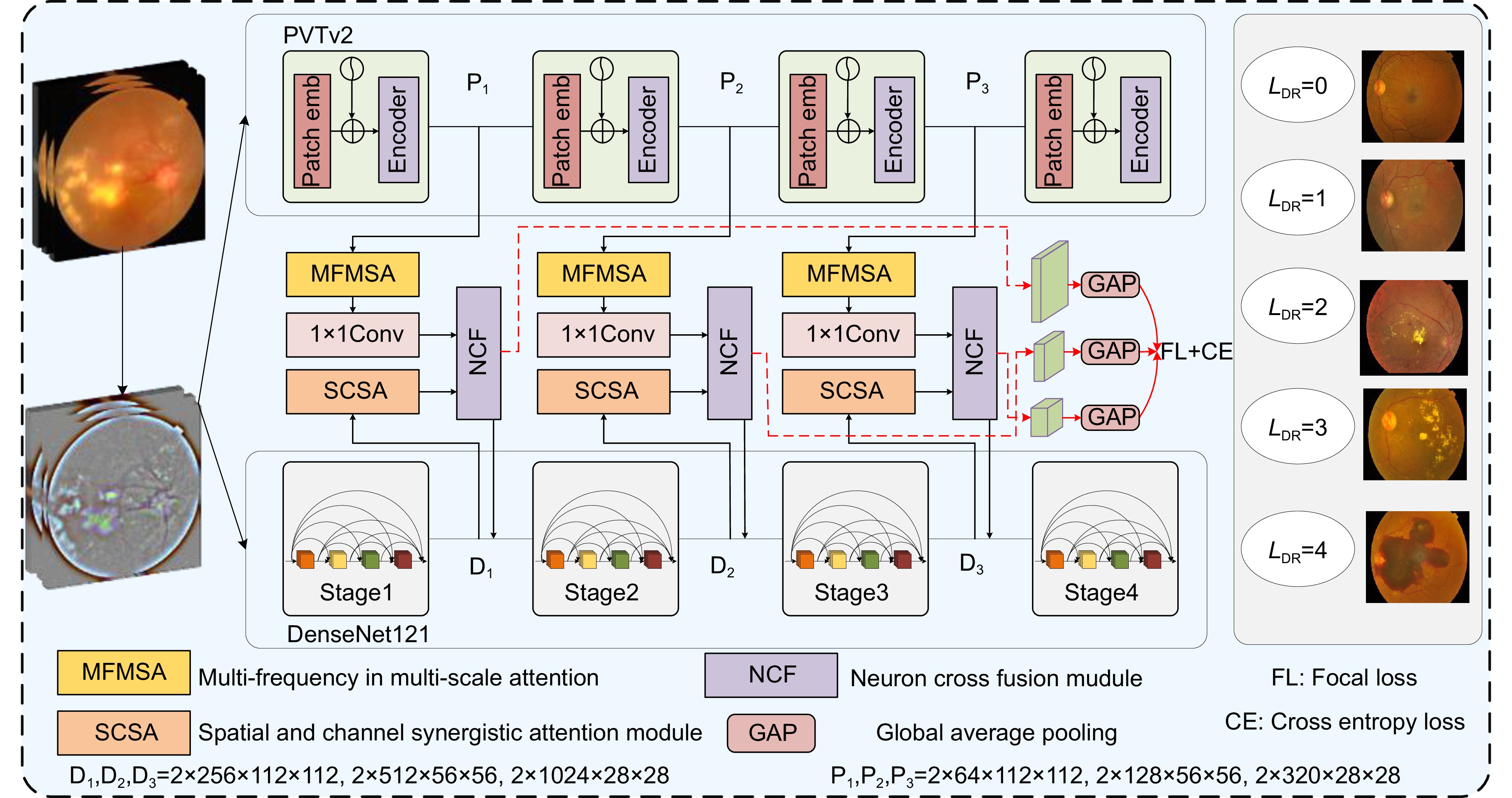

针对视网膜眼底病变图像数据集类间分布不均和病灶区域识别困难的问题,提出一种融合金字塔视觉变压器(pyramid vision transformer v2, PVTv2)和DenseNet121双注意力视网膜病变分级算法。首先,该算法经由PVTv2和DenseNet121组成的双分支网络,对视网膜图像的全局和局部信息进行初步提取;其次,在PVTv2和DenseNet121输出处分别采用空间通道协同注意力模块和多频率多尺度模块,优化局部特征细节,突显微小病灶特征,增强模型对复杂微小病变特征敏感性和病灶的定位感知;再次设计神经元交叉融合模块,建立病灶区域宏观布局和微观纹理信息之间的远程依赖关系,进而提高视网膜病变分级准确率;最后,利用混合损失函数缓解样本分布不均所导致的各等级之间模型关注度不平衡情况。在IDRID和APTOS 2019数据集上进行实验验证,其二次加权系数分别为90.68%和90.35%,IDRID数据集上的准确率和APTOS 2019数据集ROC曲线下方面积分别为80.58%和93.22%。实验结果表明,所提算法在视网膜病变分级领域具有一定应用价值。


Keywords:

- retinopathy grading

- spatial-channel synergistic attention module

- multi-frequency multi-scale attention module

- neuron cross-fusion module

Abstract

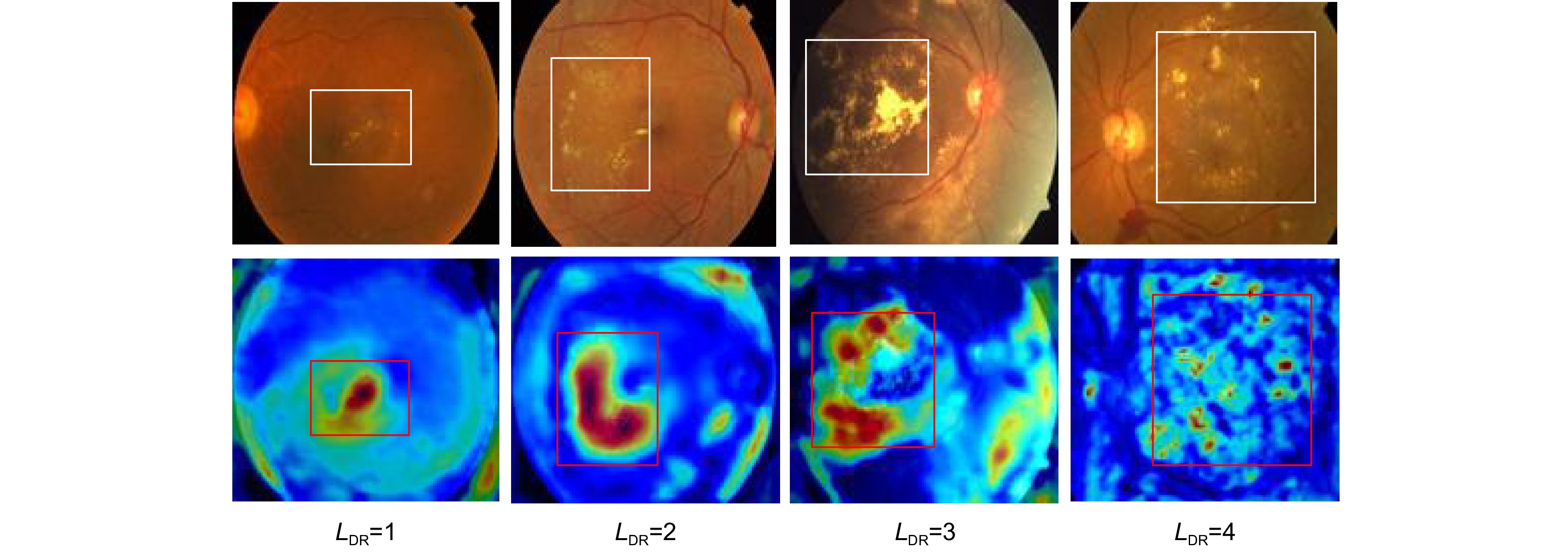

To address the uneven inter-class distribution of retinal fundus image datasets and the difficulty of recognizing lesion areas, this paper proposes a dual-attention retinal disease grading algorithm that fuses the pyramid vision transformer v2 (PVTv2) and DenseNet121. First, retinal images pass through a dual-branch network of PVTv2 and DenseNet121, which extracts global and local information. Next, a spatial-channel synergistic attention module and a multi-frequency multi-scale attention module are applied at the outputs of PVTv2 and DenseNet121, respectively. These modules refine local feature details, highlight subtle lesion features, and enhance the model's sensitivity to complex micro-lesions and its spatial perception of lesion areas. Subsequently, a neuron cross-fusion module is designed to establish long-range dependencies between the macroscopic layout and microscopic texture information of lesion areas, thereby improving the accuracy of retinal disease grading. Finally, a hybrid loss function is employed to mitigate the imbalance in model attention across grades caused by uneven sample distribution. Experimental validation on the IDRID and APTOS 2019 datasets yields quadratic weighted kappa scores of 90.68% and 90.35%, respectively. The accuracy on the IDRID dataset and the area under the ROC curve on the APTOS 2019 dataset are 80.58% and 93.22%, respectively. The experimental results demonstrate that the proposed algorithm holds significant potential for application in retinal disease grading.


Overview

Diabetic retinopathy (DR) is a retinal disease caused by microvascular leakage and obstruction resulting from chronic diabetes, and delayed treatment can lead to irreversible vision impairment. The number of diabetic patients is increasing year by year, and retinal fundus lesions are complex and diverse, which makes accurate diagnosis difficult. Although retinal imaging can reveal structural changes in the retina, screening for ocular lesions remains time-consuming and labor-intensive even for experienced clinicians. Developing an automated DR grading algorithm is therefore of great significance for clinical diagnosis. In recent years, deep learning has made significant progress in DR grading, especially through the widespread application of convolutional neural networks (CNNs) in image processing. CNNs automatically extract multi-level features from images, which improves the accuracy of retinal disease detection, gives ophthalmologists more efficient diagnostic tools, and promotes the use of intelligent diagnostic systems in clinical settings. However, shortcomings remain in the retinal disease grading task: class distributions in datasets are imbalanced, lesion features in retinal images are often small and irregular in shape and therefore hard to identify, and it is difficult to capture macro and micro features at the same time.

To address these issues, this paper proposes a retinal disease grading algorithm that integrates PVTv2 and DenseNet121 with dual attention mechanisms. The algorithm first uses a dual-branch network consisting of PVTv2 and DenseNet121 to extract global and local information from retinal images. Then, a spatial-channel synergistic attention module and a multi-frequency multi-scale module are applied at the outputs of PVTv2 and DenseNet121, respectively, to refine local feature details, highlight micro-lesion features, and improve the model's sensitivity to complex micro-lesion characteristics and its ability to locate lesions. Furthermore, a neuron cross-fusion module is designed to establish long-range dependencies between the macroscopic lesion layout and microscopic texture information, thus improving grading accuracy. Finally, a hybrid loss function is used to mitigate the imbalance in model attention across grades caused by uneven sample distribution. The algorithm is validated experimentally on the IDRID and APTOS 2019 datasets. On the IDRID dataset, the quadratic weighted kappa, accuracy, sensitivity, and specificity are 90.68%, 80.58%, 95.65%, and 97.06%, respectively. On the APTOS 2019 dataset, the quadratic weighted kappa, accuracy, sensitivity, and area under the ROC curve are 90.35%, 84.83%, 87.94%, and 93.22%, respectively. The experimental results show that the proposed algorithm has significant application value in retinal disease grading and provides a new approach for intelligent grading and clinical diagnosis assistance for retinal diseases.
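The agreement metric reported above is the quadratic weighted kappa (QWK), which penalizes a prediction by the squared distance between the predicted and true grade. The following is a minimal sketch of the standard definition in plain Python; the function name and the handling of the degenerate single-class case are illustrative choices, not taken from the paper.

```python
def quadratic_weighted_kappa(y_true, y_pred, num_classes):
    """Cohen's kappa with quadratic disagreement weights (standard definition)."""
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("inputs must be non-empty and of equal length")

    # Observed confusion matrix: observed[i][j] counts true grade i, predicted grade j.
    observed = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        observed[t][p] += 1

    # Marginal histograms of the true and predicted grades.
    true_hist = [sum(row) for row in observed]
    pred_hist = [sum(observed[i][j] for i in range(num_classes))
                 for j in range(num_classes)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(num_classes):
        for j in range(num_classes):
            # Quadratic weight: 0 on the diagonal, 1 for the largest grade gap.
            w = (i - j) ** 2 / (num_classes - 1) ** 2
            weighted_observed += w * observed[i][j]
            # Expected count under independence of the two marginals.
            weighted_expected += w * true_hist[i] * pred_hist[j] / n

    if weighted_expected == 0.0:
        # Degenerate case: all labels and predictions fall in one grade.
        # Treated here as perfect agreement (an illustrative choice).
        return 1.0
    return 1.0 - weighted_observed / weighted_expected
```

For five DR grades, `quadratic_weighted_kappa(labels, predictions, 5)` returns a value in [-1, 1]; the paper reports the metric as a percentage.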


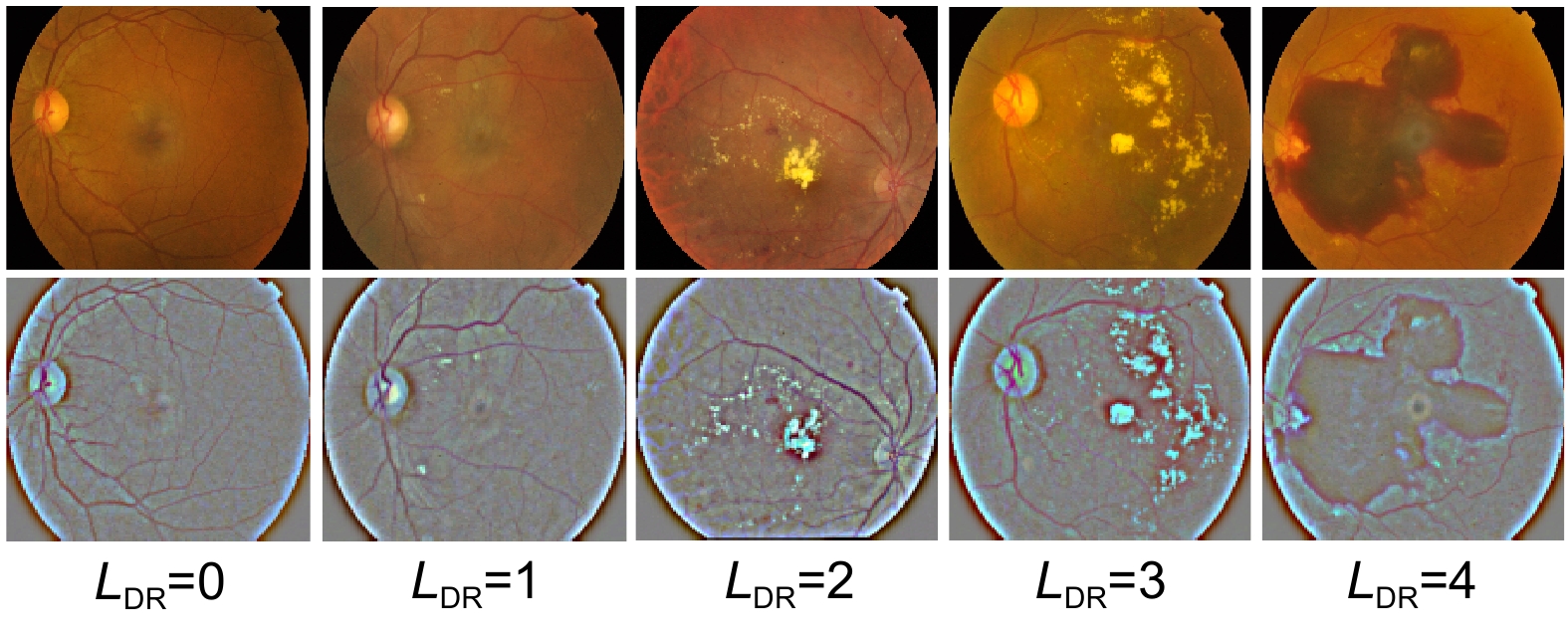

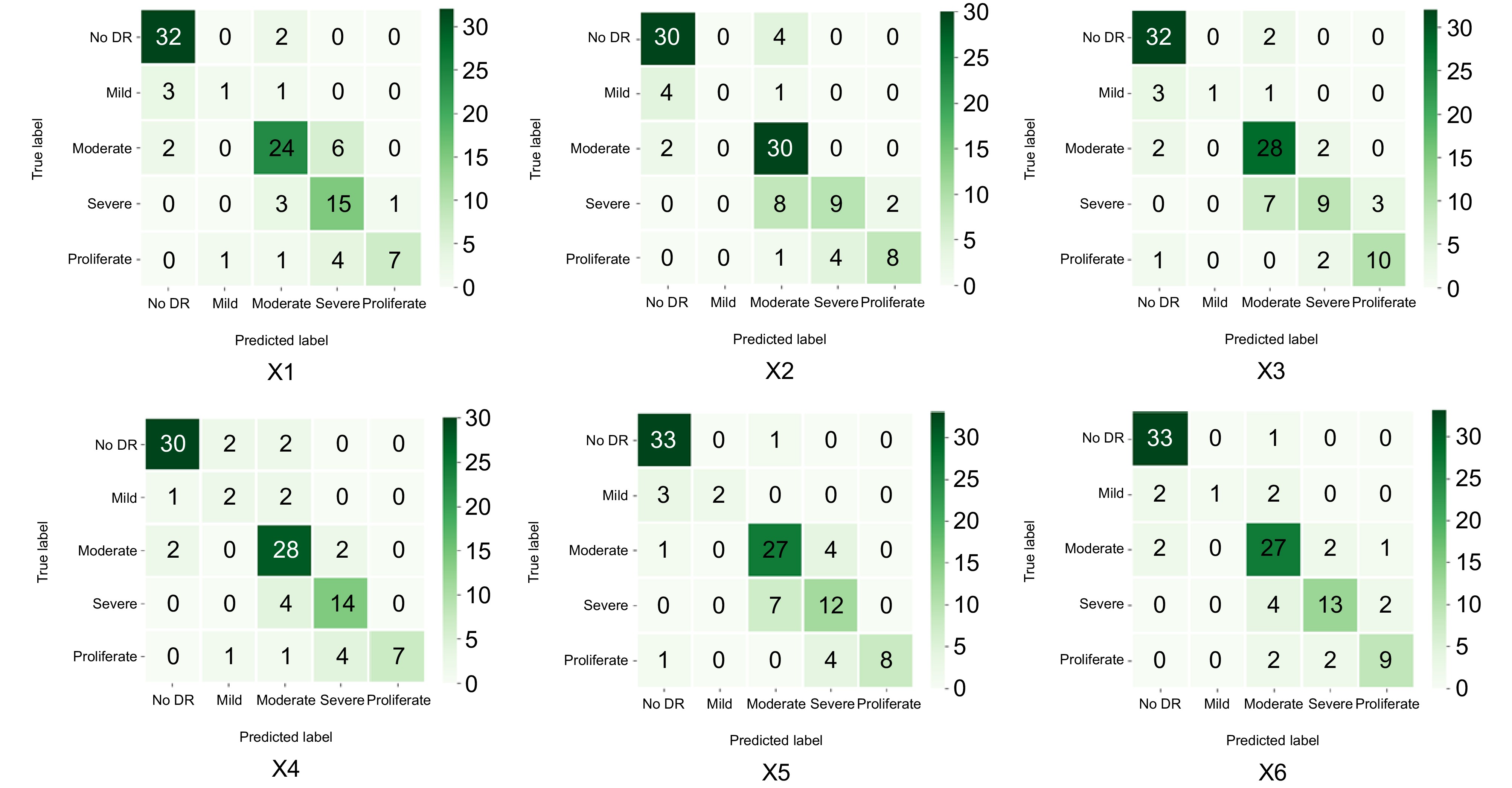


Table 1. The class distribution characteristics of the IDRID and APTOS 2019 datasets

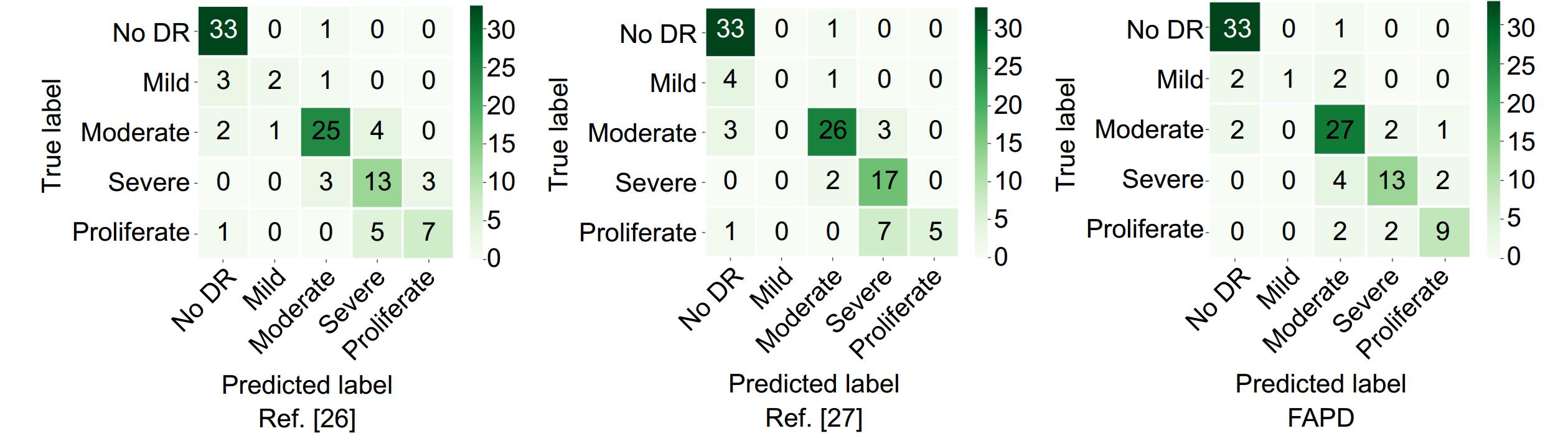

| Dataset | LDR=0 | LDR=1 | LDR=2 | LDR=3 | LDR=4 | Total |
|---|---|---|---|---|---|---|
| IDRID | 168 | 25 | 168 | 93 | 62 | 516 |
| APTOS 2019 | 1805 | 370 | 999 | 193 | 295 | 3662 |
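The class counts in Table 1 make the imbalance concrete: the largest class outnumbers the smallest by several times in both datasets. As an illustration only, the sketch below computes the imbalance ratio and inverse-frequency class weights from those counts. The source does not state how (or whether) class weights are derived, so the weighting scheme and the function names here are assumptions.

```python
# Per-grade image counts copied from Table 1 (grades 0 to 4).
IDRID_COUNTS = [168, 25, 168, 93, 62]
APTOS_COUNTS = [1805, 370, 999, 193, 295]


def imbalance_ratio(counts):
    """Ratio between the most and least populated classes."""
    if min(counts) <= 0:
        raise ValueError("every class needs at least one sample")
    return max(counts) / min(counts)


def inverse_frequency_weights(counts):
    """Assumed scheme: weight_k = total / (num_classes * count_k).

    Rare classes get weights above 1 and frequent classes below 1, so each
    class contributes equally to a weighted loss summed over the dataset.
    """
    if min(counts) <= 0:
        raise ValueError("every class needs at least one sample")
    total = sum(counts)
    num_classes = len(counts)
    return [total / (num_classes * c) for c in counts]


if __name__ == "__main__":
    for name, counts in (("IDRID", IDRID_COUNTS), ("APTOS 2019", APTOS_COUNTS)):
        weights = [round(w, 3) for w in inverse_frequency_weights(counts)]
        print(name, "ratio:", round(imbalance_ratio(counts), 2), "weights:", weights)
```

Weights of this kind could be passed to a class-weighted loss term so that errors on rare grades are not drowned out by the dominant grades.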

Table 2. Performance of different algorithms on the IDRID dataset


Table 3. Performance of different algorithms on the APTOS 2019 dataset

| Methods | Model | QWK/% | Acc/% | Se/% | AUC/% |
|---|---|---|---|---|---|
| Ref. [23] | Res2Net-50+DenseNet121 | 90.29 | 84.42 | 87.40 | 93.60 |
| Ref. [26] | CMAL-Net | 86.08 | 81.96 | 86.43 | 92.46 |
| Ref. [27] | FBSD | 86.34 | 84.28 | 85.32 | 92.78 |
| Ref. [28] | LA-NSVM | 75.64 | 84.31 | 66.16 | - |
| Ref. [29] | DenseNet201 | 78.37 | 85.93 | 69.72 | - |
| Ref. [30] | Ensemble voting | 77.78 | 85.28 | 86.00 | - |
| Ours | FAPD | 90.35 | 84.83 | 87.94 | 93.22 |

Table 4. Verification results of the generalization ability of FAPD on the EyePACS dataset


Table 5. Performance indicators of models with different weighting factors on the IDRID dataset

| $\alpha$ | QWK/% | Acc/% | Se/% | Sp/% |
|---|---|---|---|---|
| 0 | 88.21 | 78.64 | 91.27 | 88.23 |
| 0.1 | 84.52 | 74.39 | 92.75 | 85.29 |
| 0.2 | 89.13 | 80.58 | 94.20 | 97.06 |
| 0.3 | 88.97 | 77.67 | 95.65 | 94.11 |
| 0.4 | 86.32 | 79.61 | 91.30 | 94.12 |
| 0.5 | 90.55 | 78.64 | 92.75 | 96.25 |
| 0.6 | 90.85 | 79.61 | 95.65 | 94.11 |
| 0.7 | 88.69 | 76.69 | 89.85 | 91.48 |
| 0.8 | 91.34 | 78.64 | 94.20 | 94.20 |
| 0.9 | 90.68 | 80.58 | 95.65 | 97.06 |
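Table 5 varies a weighting factor α of the hybrid loss, but the formula itself is not given in this part of the text. The sketch below is therefore an assumption: it combines a class-weighted cross-entropy term and a focal-loss term (the two losses cited in the reference list) as α·L_wce + (1 − α)·L_focal. Which term α multiplies, the focusing parameter `gamma`, and all function names are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def hybrid_loss(logits, target, class_weights, alpha=0.9, gamma=2.0):
    """Assumed hybrid loss for one sample.

    loss = alpha * weighted_cross_entropy + (1 - alpha) * focal_loss
    alpha=0.9 and gamma=2.0 are placeholder defaults, not confirmed settings.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    # Probability assigned to the true grade, clamped to avoid log(0).
    p_t = max(softmax(logits)[target], 1e-12)
    cross_entropy = -math.log(p_t)
    # Class-weighted cross-entropy: rare grades get larger weights.
    weighted_ce = class_weights[target] * cross_entropy
    # Focal loss: down-weights samples the model already classifies well.
    focal = (1.0 - p_t) ** gamma * cross_entropy
    return alpha * weighted_ce + (1.0 - alpha) * focal
```

With α = 1 the sketch reduces to weighted cross-entropy and with α = 0 to focal loss, so sweeping α moves between correcting class imbalance through explicit weights and focusing on hard samples.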

Table 6. Performance of the model before and after adding Gaussian noise

| Model | QWK/% | Acc/% | Se/% | Sp/% |
|---|---|---|---|---|
| M1 | 89.90 | 79.61 | 94.20 | 94.12 |
| M2 | 90.68 | 80.58 | 95.65 | 97.06 |

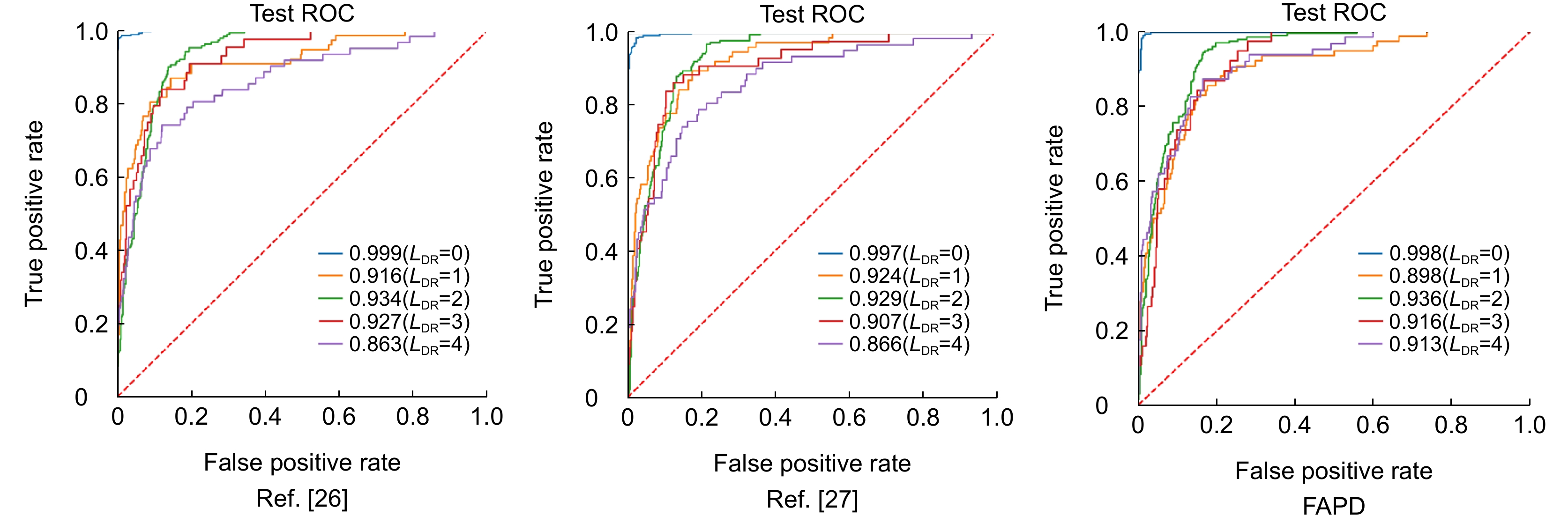

Table 7. Ablation results on the IDRID dataset

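| Model | QWK/% | Acc/% | Se/% | Sp/% |
|---|---|---|---|---|
| X1 | 88.25 | 76.69 | 92.75 | 94.11 |
| X2 | 87.88 | 74.75 | 91.30 | 88.24 |
| X3 | 87.75 | 77.67 | 91.30 | 94.12 |
| X4 | 88.21 | 78.64 | 95.65 | 88.23 |
| X5 | 89.37 | 79.62 | 92.75 | 97.06 |
| X6 | 90.68 | 80.58 | 95.65 | 97.06 |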

References

[1] Che H X, Cheng Y H, Jin H B, et al. Towards generalizable diabetic retinopathy grading in unseen domains[C]//Proceedings of the 26th International Conference on Medical Image Computing and Computer-Assisted Intervention, Vancouver, 2023: 430–440. https://doi.org/10.1007/978-3-031-43904-9_42.

[2] Sumathi K, Sendhil Kumar K S. A systematic review of fundus image analysis for diagnosing diabetic retinopathy[J]. Int J Intell Syst Appl Eng, 2024, 12(16s): 167−181.

[3] Huang J J, Fan J Y, He Y, et al. Physical compensation method for dispersion of multiple materials in swept source optical coherence tomography[J]. J Biophotonics, 2023, 16(10): e202300167. doi: 10.1002/jbio.202300167

[4] Ge X, Chen S, Lin K, et al. Deblurring, artifact-free optical coherence tomography with deconvolution-random phase modulation[J]. Opto-Electron Sci, 2024, 3(1): 230020. doi: 10.29026/oes.2024.230020

[5] Wang J, Zong Y, He Y, et al. Domain adaptation-based automated detection of retinal diseases from optical coherence tomography images[J]. Curr Eye Res, 2023, 48(9): 836−842. doi: 10.1080/02713683.2023.2212878

[6] Chen Y W, He Y, Ye H, et al. Unified deep learning model for predicting fundus fluorescein angiography image from fundus structure image[J]. J Innov Opt Health Sci, 2024, 17(3): 2450003. doi: 10.1142/S1793545824500032

[7] Xu X B, Liu D H, Huang G H, et al. Computer aided diagnosis of diabetic retinopathy based on multi-view joint learning[J]. Comput Biol Med, 2024, 174: 108428. doi: 10.1016/j.compbiomed.2024.108428

[8] Yang J W, Huang J J, He Y, et al. Image quality optimization of line-focused spectral domain optical coherence tomography with subsection dispersion compensation[J]. Opto-Electron Eng, 2024, 51(6): 240042. doi: 10.12086/oee.2024.240042

[9] Yue G H, Li Y, Zhou T W, et al. Attention-driven cascaded network for diabetic retinopathy grading from fundus images[J]. Biomed Signal Process Control, 2023, 80: 104370. doi: 10.1016/j.bspc.2022.104370

[10] Khanna M, Singh L K, Thawkar S, et al. Deep learning based computer-aided automatic prediction and grading system for diabetic retinopathy[J]. Multimed Tools Appl, 2023, 82(25): 39255−39302. doi: 10.1007/s11042-023-14970-5

[11] Durai D B J, Jaya T. Automatic severity grade classification of diabetic retinopathy using deformable ladder Bi attention U-net and deep adaptive CNN[J]. Med Biol Eng Comput, 2023, 61(8): 2091−2113. doi: 10.1007/s11517-023-02860-9

[12] Wang Y P, Wang L J, Guo Z Q, et al. A graph convolutional network with dynamic weight fusion of multi-scale local features for diabetic retinopathy grading[J]. Sci Rep, 2024, 14(1): 5791. doi: 10.1038/s41598-024-56389-4

[13] Ouyang J H, Guo Z Q, Liu S G. Dual-branch hybrid attention decision net for diabetic retinopathy classification[J]. J Jilin Univ (Eng Technol Ed), 2022, 52(3): 648−656. doi: 10.13229/j.cnki.jdxbgxb20200813

[14] Vij R, Arora S. A novel deep transfer learning based computerized diagnostic systems for Multi-class imbalanced diabetic retinopathy severity classification[J]. Multimed Tools Appl, 2023, 82(22): 34847−34884. doi: 10.1007/s11042-023-14963-4

[15] Wang W H, Xie E Z, Li X, et al. PVT v2: improved baselines with pyramid vision transformer[J]. Comput Vis Media, 2022, 8(3): 415−424. doi: 10.1007/s41095-022-0274-8

[16] Vellaichamy A S, Swaminathan A, Varun C, et al. Multiple plant leaf disease classification using densenet-121 architecture[J]. Int J Electr Eng Technol, 2021, 12(5): 38−57. doi: 10.34218/IJEET.12.5.2021.005

[17] Si Y Z, Xu H Y, Zhu X Z, et al. SCSA: exploring the synergistic effects between spatial and channel attention[J]. arXiv: 2407.05128, 2024. https://doi.org/10.48550/arXiv.2407.05128

[18] Nam J H, Syazwany N S, Kim S J, et al. Modality-agnostic domain generalizable medical image segmentation by multi-frequency in multi-scale attention[C]//Proceedings of 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 2024: 11480–11491. https://doi.org/10.1109/CVPR52733.2024.01091.

[19] Zhou H, Luo F L, Zhuang H P, et al. Attention multihop graph and multiscale convolutional fusion network for hyperspectral image classification[J]. IEEE Trans Geosci Remote Sens, 2023, 61: 5508614. doi: 10.1109/TGRS.2023.3265879

[20] Yang L X, Zhang R Y, Li L D, et al. SimAM: a simple, parameter-free attention module for convolutional neural networks[C]//Proceedings of the 38th International Conference on Machine Learning, Oxford, UK, 2021: 11863–11874.

[21] Rezaei-Dastjerdehei M R, Mijani A, Fatemizadeh E. Addressing imbalance in multi-label classification using weighted cross entropy loss function[C]//Proceedings of the 2020 27th National and 5th International Iranian Conference on Biomedical Engineering, Tehran, Iran, 2020: 333–338. https://doi.org/10.1109/ICBME51989.2020.9319440.

[22] Mukhoti J, Kulharia V, Sanyal A, et al. Calibrating deep neural networks using focal loss[C]//Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 2020: 1282.

[23] Liang L M, Jin J X, Feng Y, et al. Retinal lesions graded algorithm that integrates coordinate perception and hybrid extraction[J]. Opto-Electron Eng, 2024, 51(1): 230276. doi: 10.12086/oee.2024.230276

[24] Shi L, Wang B, Zhang J X. A multi-stage transfer learning framework for diabetic retinopathy grading on small data[C]//Proceedings of IEEE International Conference on Communications, Rome, Italy, 2023: 3388–3393. https://doi.org/10.1109/ICC45041.2023.10279479.

[25] Bhardwaj C, Jain S, Sood M. Transfer learning based robust automatic detection system for diabetic retinopathy grading[J]. Neural Comput Appl, 2021, 33(20): 13999−14019. doi: 10.1007/s00521-021-06042-2

[26] Liu D C, Zhao L J, Wang Y, et al. Learn from each other to Classify better: cross-layer mutual attention learning for fine-grained visual classification[J]. Pattern Recogn, 2023, 140: 109550. doi: 10.1016/j.patcog.2023.109550

[27] Song J W, Yang R Y. Feature boosting, suppression, and diversification for fine-grained visual classification[C]//Proceedings of 2021 International Joint Conference on Neural Networks, Shenzhen, China, 2021: 1–8. https://doi.org/10.1109/IJCNN52387.2021.9534004.

[28] Shaik N S, Cherukuri T K. Lesion-aware attention with neural support vector machine for retinopathy diagnosis[J]. Mach Vis Appl, 2021, 32(6): 126. doi: 10.1007/s00138-021-01253-y

[29] Kobat S G, Baygin N, Yusufoglu E, et al. Automated diabetic retinopathy detection using horizontal and vertical patch division-based pre-trained DenseNET with digital fundus images[J]. Diagnostics, 2022, 12(8): 1975. doi: 10.3390/diagnostics12081975

[30] Oulhadj M, Riffi J, Chaimae K, et al. Diabetic retinopathy prediction based on deep learning and deformable registration[J]. Multimed Tools Appl, 2022, 81(20): 28709−28727. doi: 10.1007/s11042-022-12968-z
