Remote sensing image road extraction by integrating ResNeSt and multi-scale feature fusion


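Abstract (translated from the Chinese):

Road extraction from high-resolution remote sensing imagery suffers from discontinuous road edges, low accuracy on small-scale roads, and misclassification of target roads. To address these problems, this paper proposes ResT-UNet, a road extraction method for remote sensing imagery that combines ResNeSt with multi-scale feature fusion. The encoder of the U-shaped network is built with reference to the ResNeSt module, so that the early encoder extracts information more completely and the edges of segmented targets are more continuous. First, the Triplet Attention mechanism is introduced into the encoder to suppress useless feature information. Second, convolutional blocks replace the max pooling operations, increasing feature dimensionality and network depth and reducing the loss of road information. Finally, a multi-scale feature fusion (MSFF) module is used at the bridge between the encoder and decoder networks to capture long-range dependencies between regions and improve road segmentation. Experiments on the Massachusetts Roads and DeepGlobe datasets show that the method reaches IoU scores of 64.76% and 64.45%, respectively, which is 1.42 and 1.74 percentage points higher than the recent MINet model. This indicates that ResT-UNet effectively improves road extraction accuracy and offers a new approach to interpreting the semantic information of remote sensing images.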

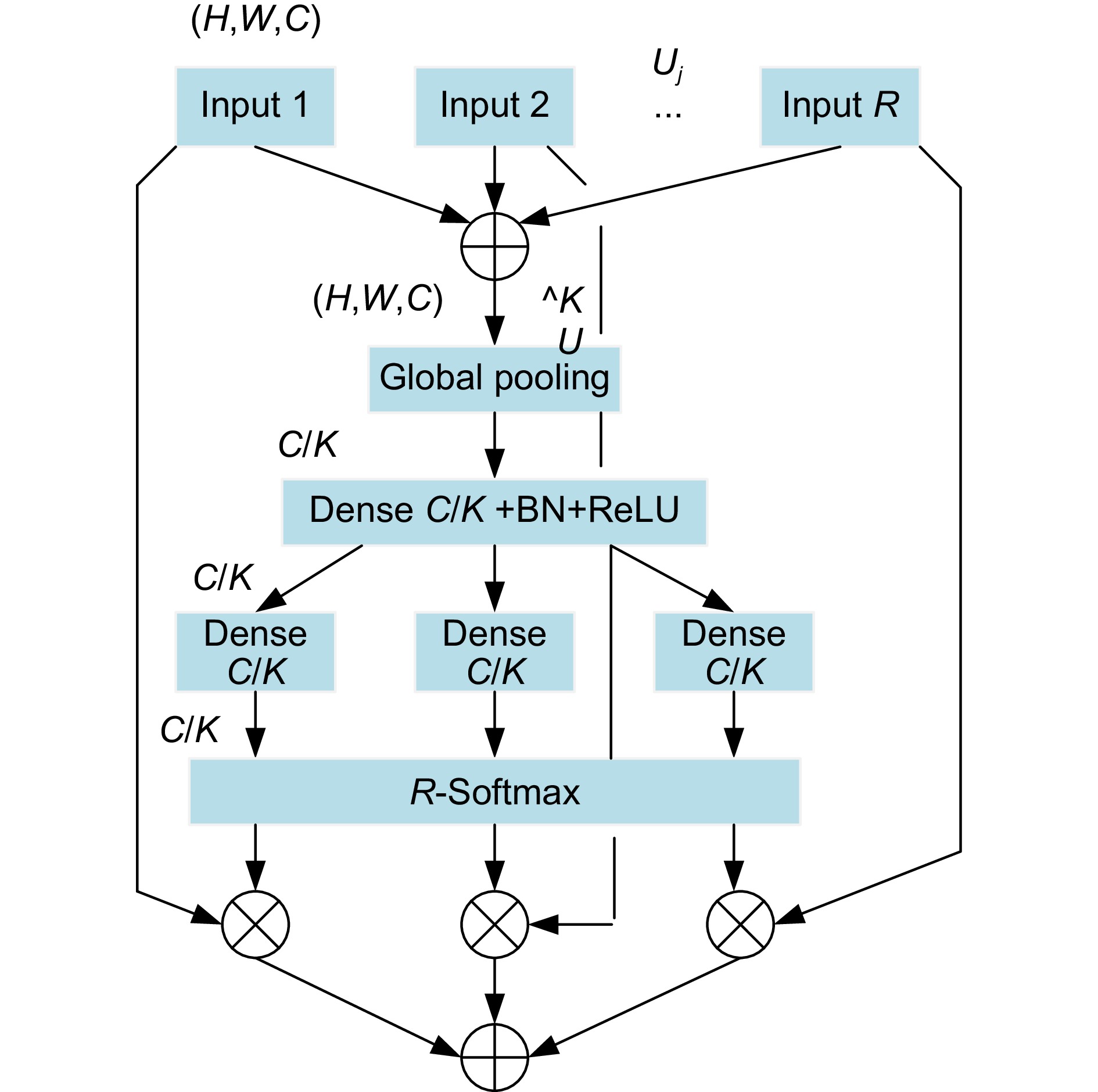

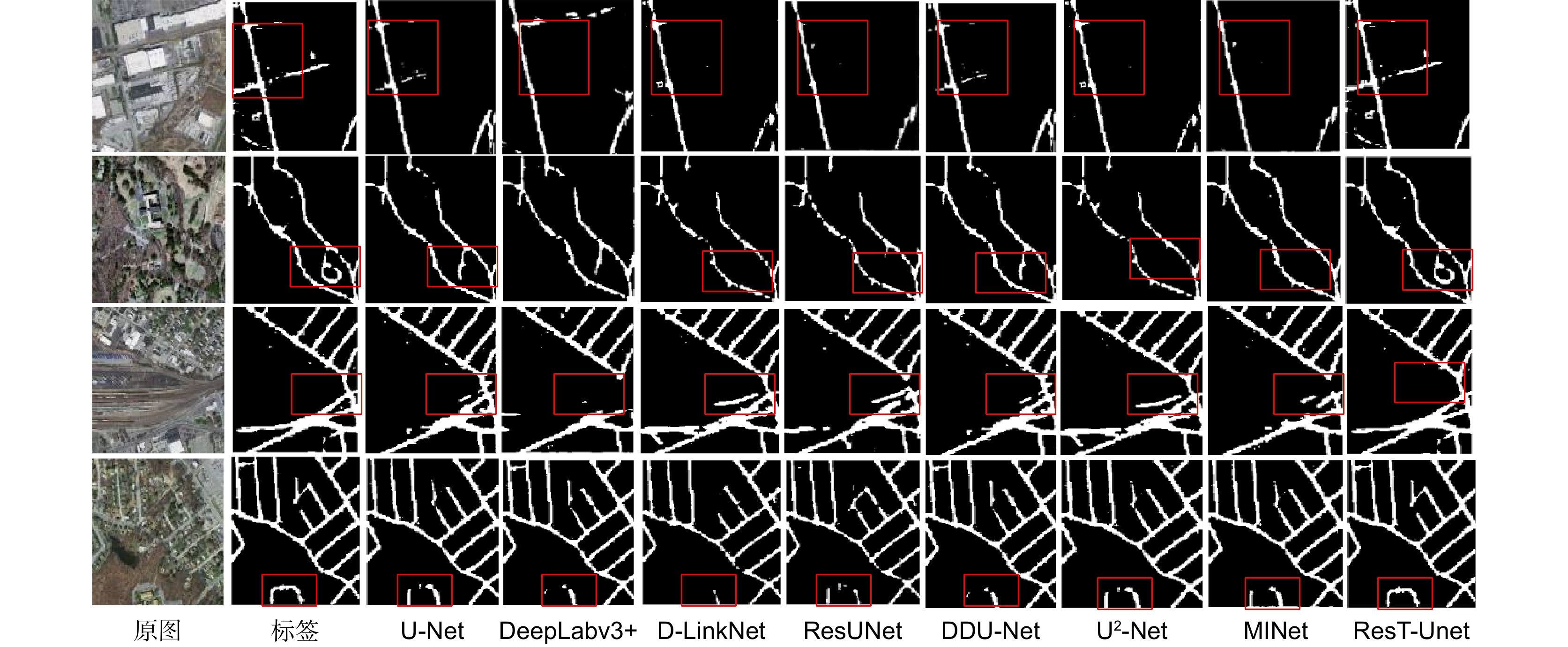

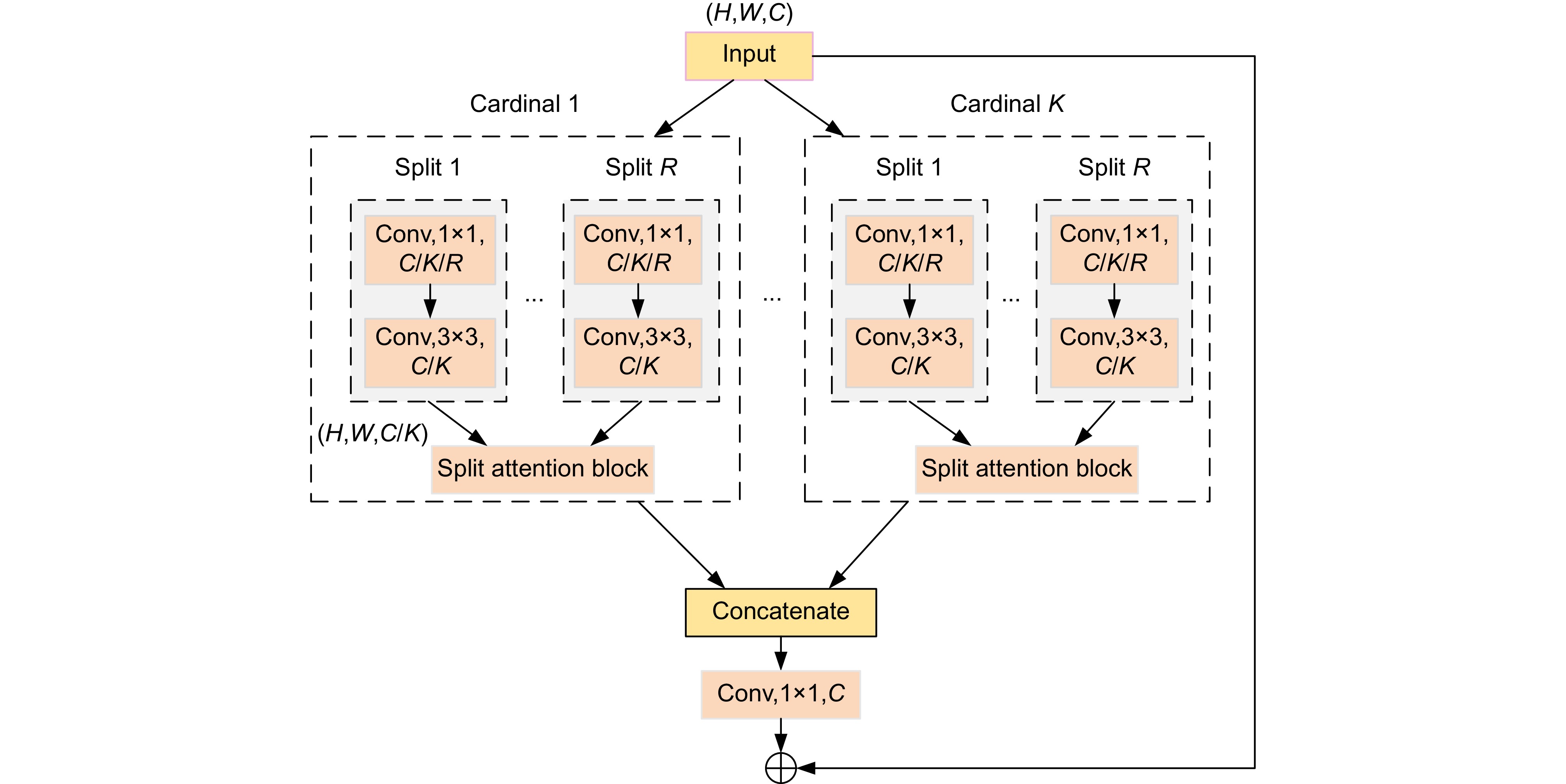

Abstract: To address discontinuous road edge segmentation, low accuracy in segmenting small-scale roads, and misclassification of target roads in high-resolution remote sensing imagery, this paper proposes ResT-UNet, a road extraction method that integrates ResNeSt and multi-scale feature fusion. With reference to the ResNeSt network module, a U-shaped network encoder is constructed so that the early encoder extracts information more completely and the segmented target edges are more continuous. First, Triplet Attention is introduced into the encoder to suppress useless feature information. Second, convolutional blocks replace the max pooling operations, increasing feature dimensionality and network depth while reducing the loss of road information. Finally, a multi-scale feature fusion (MSFF) module is placed at the bridge connection between the encoder and decoder networks to capture long-range dependencies between regions and improve road segmentation performance. Experiments were conducted on the Massachusetts Roads dataset and the DeepGlobe dataset. The results show that the proposed method achieves Intersection over Union (IoU) scores of 64.76% and 64.45%, respectively, on these datasets, which is 1.42 and 1.74 percentage points higher than the recent MINet model. These findings indicate that the ResT-UNet network effectively improves the extraction accuracy of roads in remote sensing imagery and provides a new approach for interpreting semantic information in remote sensing images.


Overview: Road extraction from high-resolution remote sensing imagery is critical for applications such as urban planning, autonomous driving, and road network updates. However, discontinuous road edges, low segmentation accuracy for small roads, and misclassification remain open challenges. This paper proposes ResT-UNet, a method that integrates the ResNeSt network and multi-scale feature fusion to address these challenges by improving feature extraction and preserving road details.

The ResT-UNet architecture builds upon the U-Net model, which is widely used in semantic segmentation. The first modification replaces U-Net's initial convolution layer with a ResNeSt block, which strengthens feature extraction and yields more continuous road edges. A triplet attention mechanism is introduced in the encoder to suppress irrelevant features and focus on road-related information; by strengthening spatial and channel relationships, it improves the capture of fine road details. ResT-UNet also replaces max pooling with convolutional blocks to retain more spatial information and reduce the loss of road features. Finally, a multi-scale feature fusion (MSFF) module is added between the encoder and decoder, enabling the network to capture long-range dependencies and fuse features from different scales, which improves road detection in complex environments.

The method was evaluated on the Massachusetts Roads and DeepGlobe datasets. ResT-UNet outperformed the MINet model, achieving intersection over union (IoU) scores of 64.76% and 64.45%, respectively, which is 1.42 and 1.74 percentage points higher. These results indicate that ResT-UNet improves road extraction accuracy, especially for complex road boundaries and small-scale features. In conclusion, the integration of the ResNeSt block, triplet attention, and multi-scale feature fusion makes the model suitable for applications in autonomous driving, urban planning, and geographic information systems. Future work will focus on further optimization and application to more complex datasets.
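The motivation for replacing max pooling with a convolutional block can be illustrated on a toy example. The following is a minimal sketch in plain Python, not the paper's implementation: the 2x2 kernel size, the stride of 2, the kernel weights, and the helper names `max_pool_2x2` and `strided_conv_2x2` are all assumptions chosen for illustration. It shows that two patches whose thin "road" lies in different columns collapse to the same output under max pooling, while a strided convolution with distinct weights keeps them apart.

```python
from typing import List

Grid = List[List[float]]


def max_pool_2x2(x: Grid) -> Grid:
    """2x2 max pooling with stride 2: keeps only the largest value per window."""
    return [
        [max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
         for j in range(0, len(x[0]) - 1, 2)]
        for i in range(0, len(x) - 1, 2)
    ]


def strided_conv_2x2(x: Grid, kernel: Grid) -> Grid:
    """2x2 convolution with stride 2: a weighted sum of all four values per window."""
    return [
        [sum(kernel[di][dj] * x[i + di][j + dj]
             for di in range(2) for dj in range(2))
         for j in range(0, len(x[0]) - 1, 2)]
        for i in range(0, len(x) - 1, 2)
    ]


# Two 4x4 patches containing a one-pixel-wide vertical road (value 1.0),
# located in column 0 and column 1 respectively.
road_left = [[1.0, 0.0, 0.0, 0.0] for _ in range(4)]
road_right = [[0.0, 1.0, 0.0, 0.0] for _ in range(4)]

# Hypothetical weights; in a network these would be learned.
kernel = [[1.0, 2.0], [3.0, 4.0]]

pooled_left = max_pool_2x2(road_left)
pooled_right = max_pool_2x2(road_right)
conv_left = strided_conv_2x2(road_left, kernel)
conv_right = strided_conv_2x2(road_right, kernel)

print("max pool :", pooled_left, pooled_right)  # identical outputs
print("conv     :", conv_left, conv_right)      # different outputs
```

Both operations halve the spatial resolution, but only the convolution's output depends on where inside each window the road pixel sits, which is one way to read the claim that convolutional downsampling reduces the loss of road information.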


Table 1. Comparison of experimental results of different improvements (Massachusetts)

| Method | OA/% | F1/% | IoU/% | mIoU/% |
| --- | --- | --- | --- | --- |
| U-Net | 97.71 | 87.46 | 62.37 | 80.01 |
| ResNeSt block+U-Net | 97.79 | 87.51 | 62.79 | 80.36 |
| Att+U-Net | 97.76 | 87.49 | 62.41 | 80.15 |
| Transition+U-Net | 97.73 | 87.48 | 62.39 | 80.10 |
| U-Net+MSFF | 97.85 | 87.67 | 62.76 | 80.59 |
| ResNeSt block+Att+U-Net | 97.89 | 87.83 | 63.11 | 80.68 |
| ResNeSt block+Att+Transition+U-Net | 97.91 | 87.91 | 63.57 | 80.74 |
| ResNeSt block+Att+Transition+U-Net+MSFF | 98.09 | 88.83 | 64.76 | 81.94 |
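For readers unfamiliar with the column headers, the sketch below computes overall accuracy (OA), F1, IoU, and mIoU from the pixel counts of a binary road/background confusion matrix, using the standard textbook definitions. The function name `segmentation_metrics` and the example counts are hypothetical, and the exact averaging convention behind the tabulated values is not stated in this section, so the output is not expected to reproduce any table row.

```python
def segmentation_metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Standard binary segmentation metrics, with road as the positive class.

    Assumes every denominator is non-zero (both classes occur in the data).
    """
    total = tp + fp + fn + tn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    iou_road = tp / (tp + fp + fn)        # intersection over union, road class
    iou_background = tn / (tn + fp + fn)  # same measure for the background class
    return {
        "OA": (tp + tn) / total,
        "F1": 2 * precision * recall / (precision + recall),
        "IoU": iou_road,
        "mIoU": (iou_road + iou_background) / 2,
    }


# Hypothetical pixel counts, chosen only to make the arithmetic easy to follow.
metrics = segmentation_metrics(tp=60, fp=20, fn=20, tn=900)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.2f}%")
```

Because road pixels are a small fraction of most scenes, OA is dominated by the background class, which is why the tables report IoU for the road class alongside it.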

Table 2. Comparison of experimental results of different improvements (DeepGlobe)

| Method | OA/% | F1/% | IoU/% | mIoU/% |
| --- | --- | --- | --- | --- |
| U-Net | 97.07 | 86.12 | 61.98 | 79.82 |
| ResNeSt block+U-Net | 97.13 | 86.27 | 62.08 | 80.01 |
| Att+U-Net | 97.32 | 86.41 | 62.34 | 80.13 |
| Transition+U-Net | 97.11 | 86.03 | 61.13 | 79.69 |
| U-Net+MSFF | 97.73 | 86.91 | 62.60 | 80.61 |
| ResNeSt block+Att+U-Net | 97.81 | 87.29 | 63.07 | 80.94 |
| ResNeSt block+Att+Transition+U-Net | 97.93 | 87.55 | 63.41 | 81.01 |
| ResNeSt block+Att+Transition+U-Net+MSFF | 98.05 | 88.01 | 64.45 | 81.35 |

Table 3. Comparison of road extraction results from different networks (Massachusetts)

| Method | OA/% | F1/% | IoU/% | mIoU/% |
| --- | --- | --- | --- | --- |
| U-Net | 97.71 | 87.46 | 62.37 | 80.01 |
| DDUNet | 97.62 | 87.13 | 61.67 | 79.63 |
| ResUNet | 97.72 | 87.88 | 62.43 | 80.16 |
| DeepLabV3+ | 97.62 | 87.48 | 61.62 | 79.71 |
| D-LinkNet | 97.83 | 87.91 | 63.11 | 80.83 |
| U2-Net | 97.65 | 87.16 | 61.85 | 79.65 |
| MINet | 97.88 | 88.10 | 63.34 | 80.37 |
| ResT-UNet | 98.09 | 88.83 | 64.76 | 81.94 |

Table 4. Comparison of road extraction results from different networks (DeepGlobe)

| Method | OA/% | F1/% | IoU/% | mIoU/% |
| --- | --- | --- | --- | --- |
| U-Net | 97.07 | 86.12 | 61.98 | 79.82 |
| DDUNet | 97.23 | 86.87 | 62.18 | 80.12 |
| ResUNet | 97.11 | 86.28 | 62.10 | 79.97 |
| DeepLabV3+ | 96.13 | 85.84 | 61.02 | 78.94 |
| D-LinkNet | 97.52 | 86.57 | 63.07 | 80.76 |
| U2-Net | 97.43 | 86.18 | 62.84 | 80.56 |
| MINet | 97.48 | 86.88 | 62.71 | 80.41 |
| ResT-UNet | 98.05 | 88.01 | 64.45 | 81.35 |
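The improvement over MINet quoted in the abstract can be checked directly against the IoU columns of Tables 3 and 4. The short sketch below does that arithmetic; the variable names are illustrative, and the values are copied from the tables.

```python
# IoU (%) for MINet and ResT-UNet, copied from Table 3 and Table 4.
iou = {
    "Massachusetts": {"MINet": 63.34, "ResT-UNet": 64.76},
    "DeepGlobe": {"MINet": 62.71, "ResT-UNet": 64.45},
}

# Absolute gain in percentage points, rounded to the tables' two decimals.
gains = {
    dataset: round(scores["ResT-UNet"] - scores["MINet"], 2)
    for dataset, scores in iou.items()
}

for dataset, gain in gains.items():
    print(f"{dataset}: +{gain:.2f} percentage points IoU over MINet")
```

The differences are absolute gains in percentage points, not relative percentages of the MINet score.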

Table 5. Complexity of different algorithms

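| Algorithm | U-Net | DeepLabv3+ | D-LinkNet | ResUNet | U2-Net | DDUNet | MINet | ResT-UNet |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| FLOPs/M | 25947.00 | 26474.89 | 120408.56 | 41837.93 | 157679.07 | 77489.09 | 384409.60 | 54994.39 |
| Params/M | 24.73 | 5.83 | 213.87 | 22.60 | 44.17 | 65.84 | 47.56 | 51.13 |
| Latency/s | 3.78 | 3.49 | 4.79 | 3.71 | 5.00 | 4.13 | 6.45 | 3.57 |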
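To put the complexity figures in perspective, the sketch below ranks the networks by latency and expresses each network's FLOPs relative to ResT-UNet. It only rearranges the numbers from Table 5; the dictionary layout and variable names are illustrative, and the "/M" suffix in the header is read here as "millions".

```python
# (FLOPs in millions, parameters in millions, latency in seconds), from Table 5.
complexity = {
    "U-Net": (25947.00, 24.73, 3.78),
    "DeepLabv3+": (26474.89, 5.83, 3.49),
    "D-LinkNet": (120408.56, 213.87, 4.79),
    "ResUNet": (41837.93, 22.60, 3.71),
    "U2-Net": (157679.07, 44.17, 5.00),
    "DDUNet": (77489.09, 65.84, 4.13),
    "MINet": (384409.60, 47.56, 6.45),
    "ResT-UNet": (54994.39, 51.13, 3.57),
}

# Networks ordered from lowest to highest latency.
by_latency = sorted(complexity, key=lambda name: complexity[name][2])

# FLOPs of every network as a multiple of ResT-UNet's FLOPs.
reference_flops = complexity["ResT-UNet"][0]
flops_ratio = {
    name: values[0] / reference_flops for name, values in complexity.items()
}

print("Latency ranking:", by_latency)
for name in by_latency:
    print(f"{name}: {flops_ratio[name]:.2f}x the FLOPs of ResT-UNet")
```

Read this way, ResT-UNet has the second-lowest latency in the table and needs roughly one seventh of MINet's FLOPs, although it is heavier than U-Net, DeepLabv3+, and ResUNet.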

