-

摘要

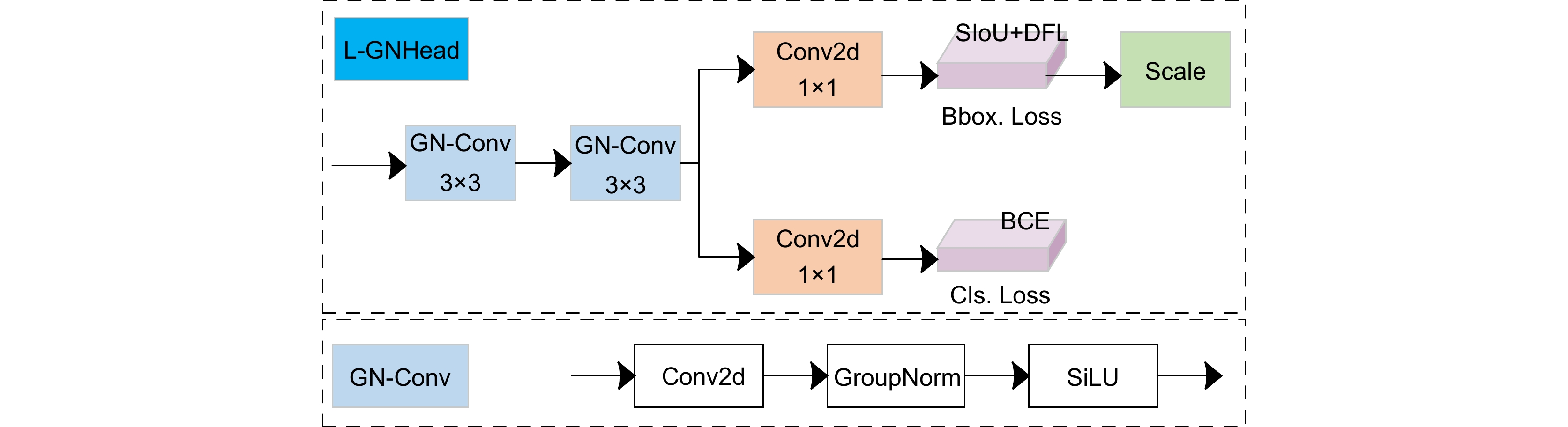

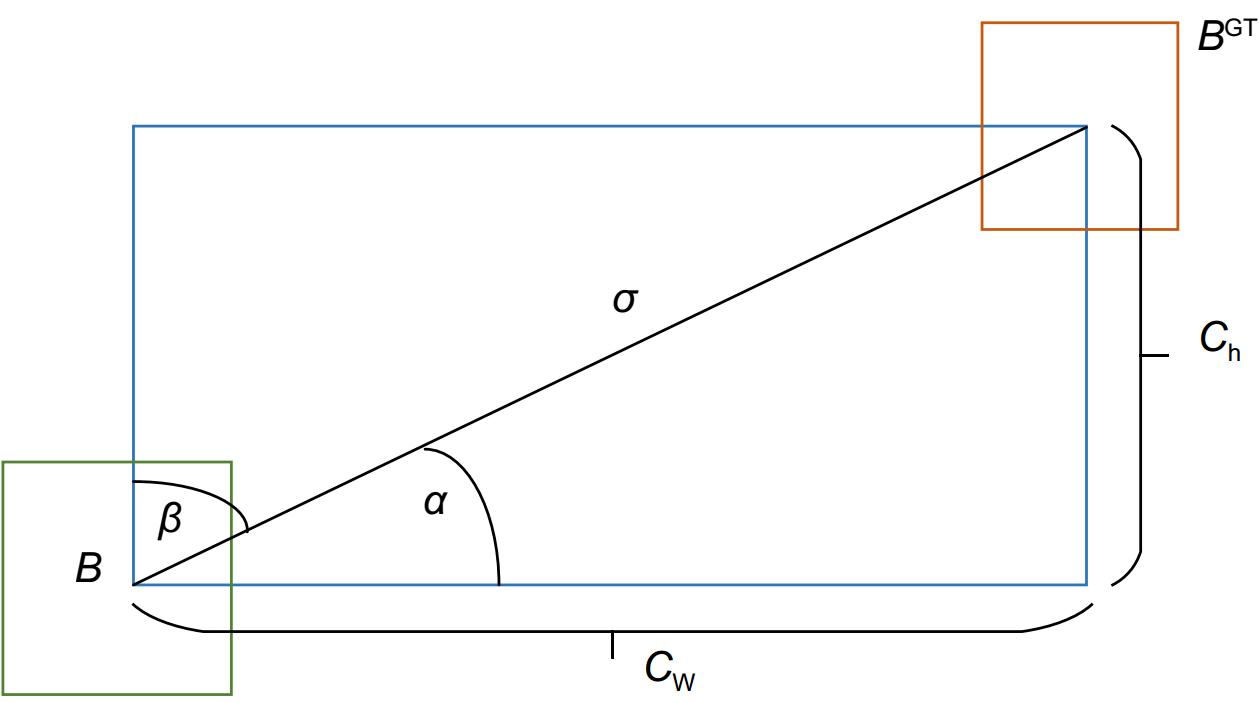

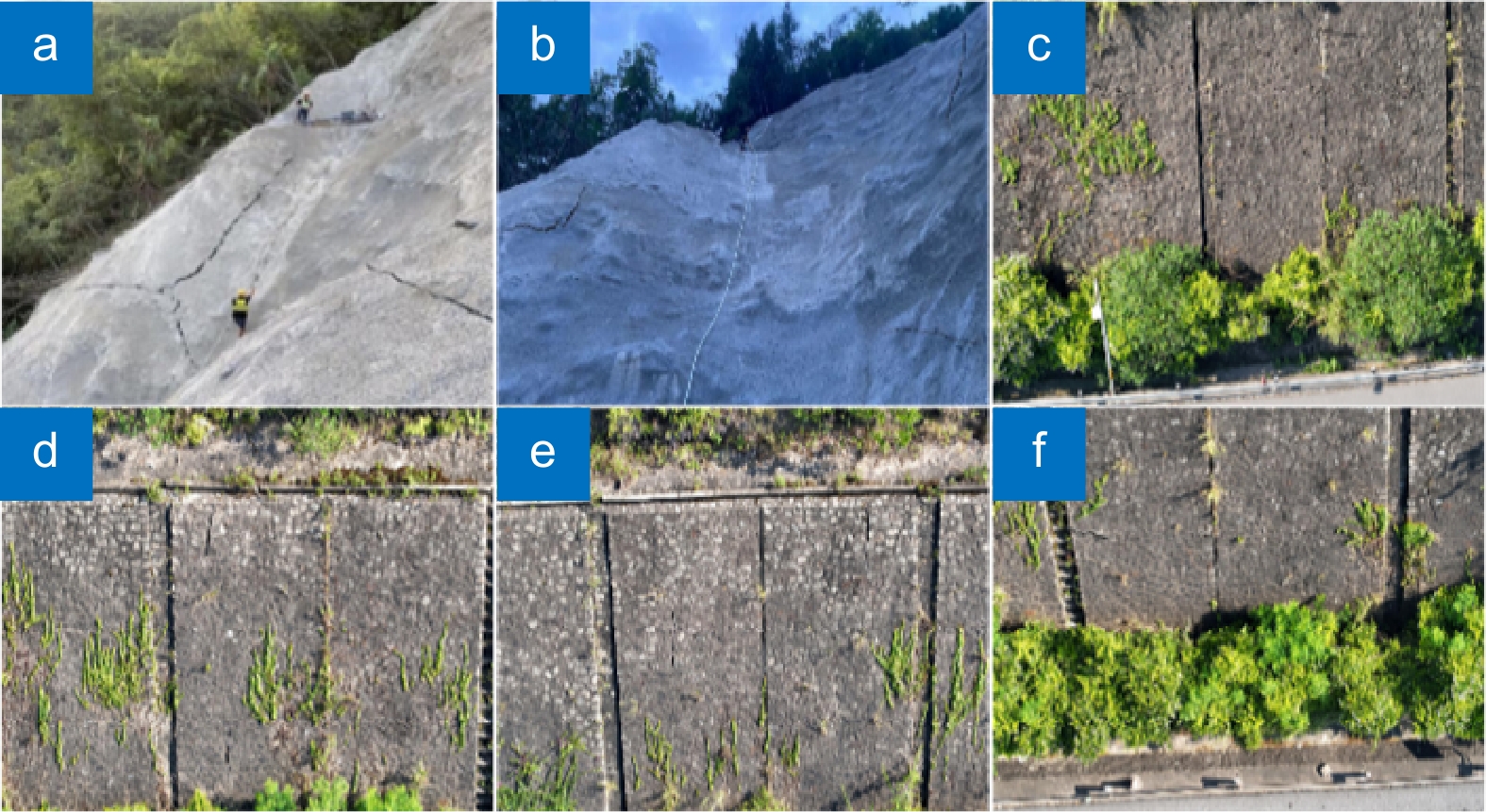

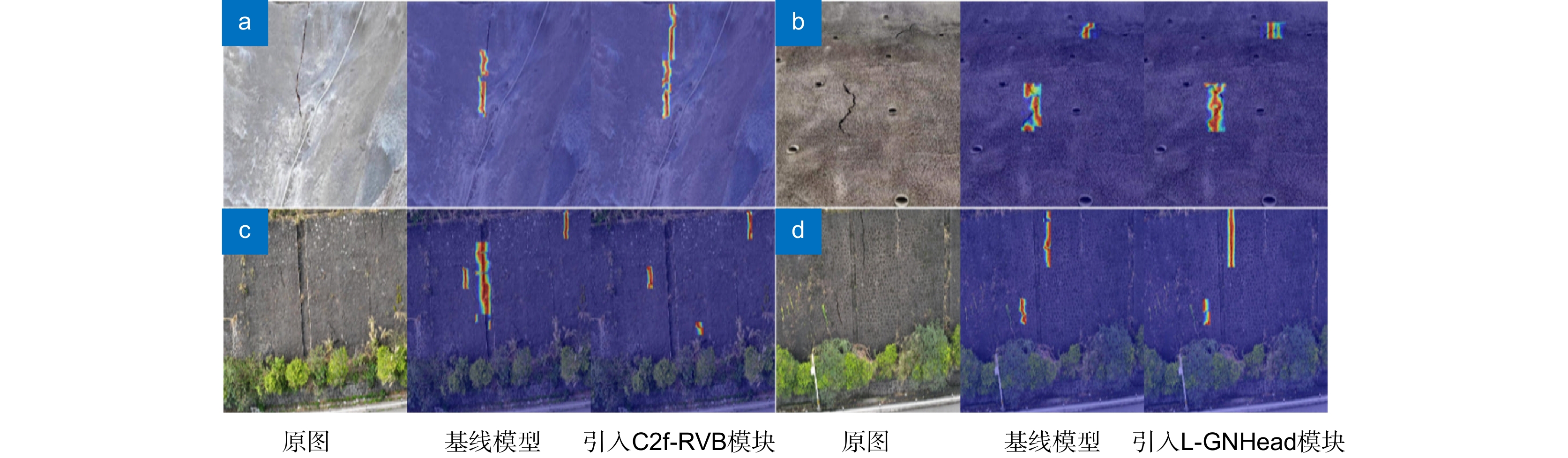

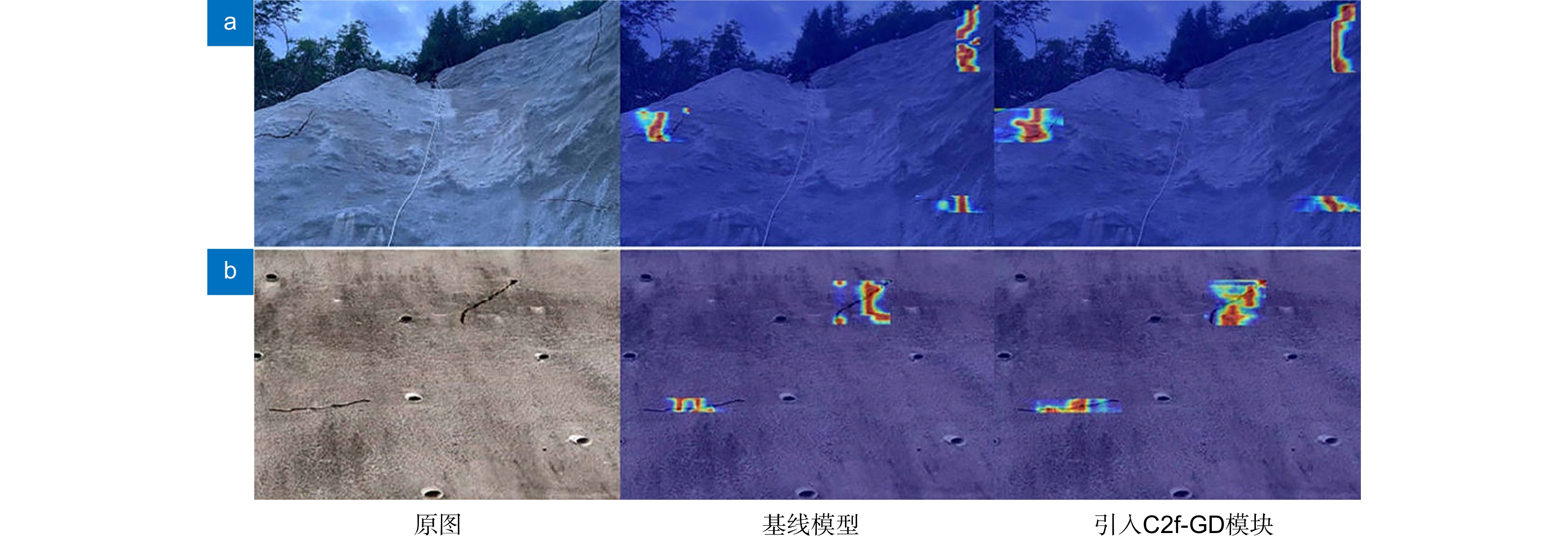

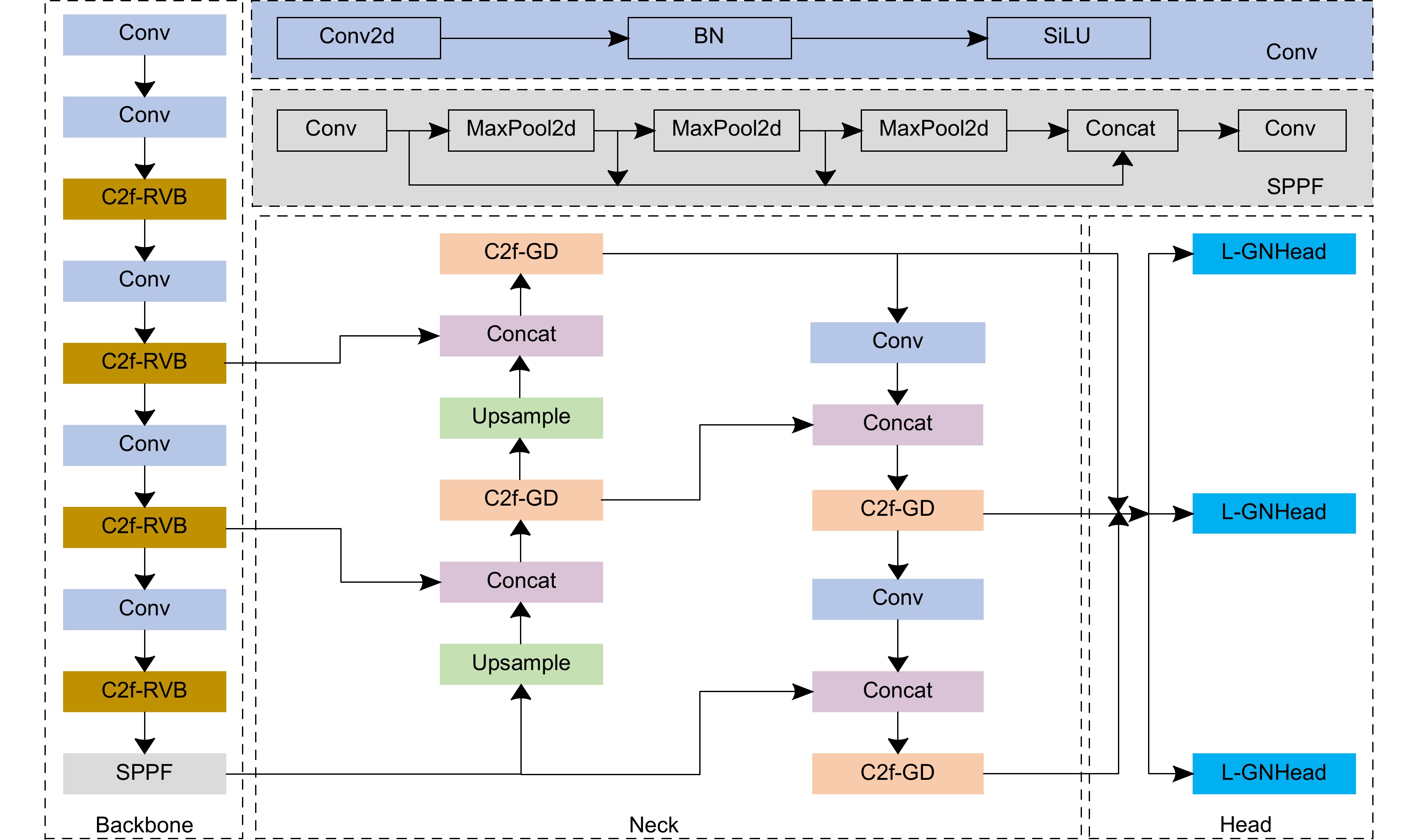

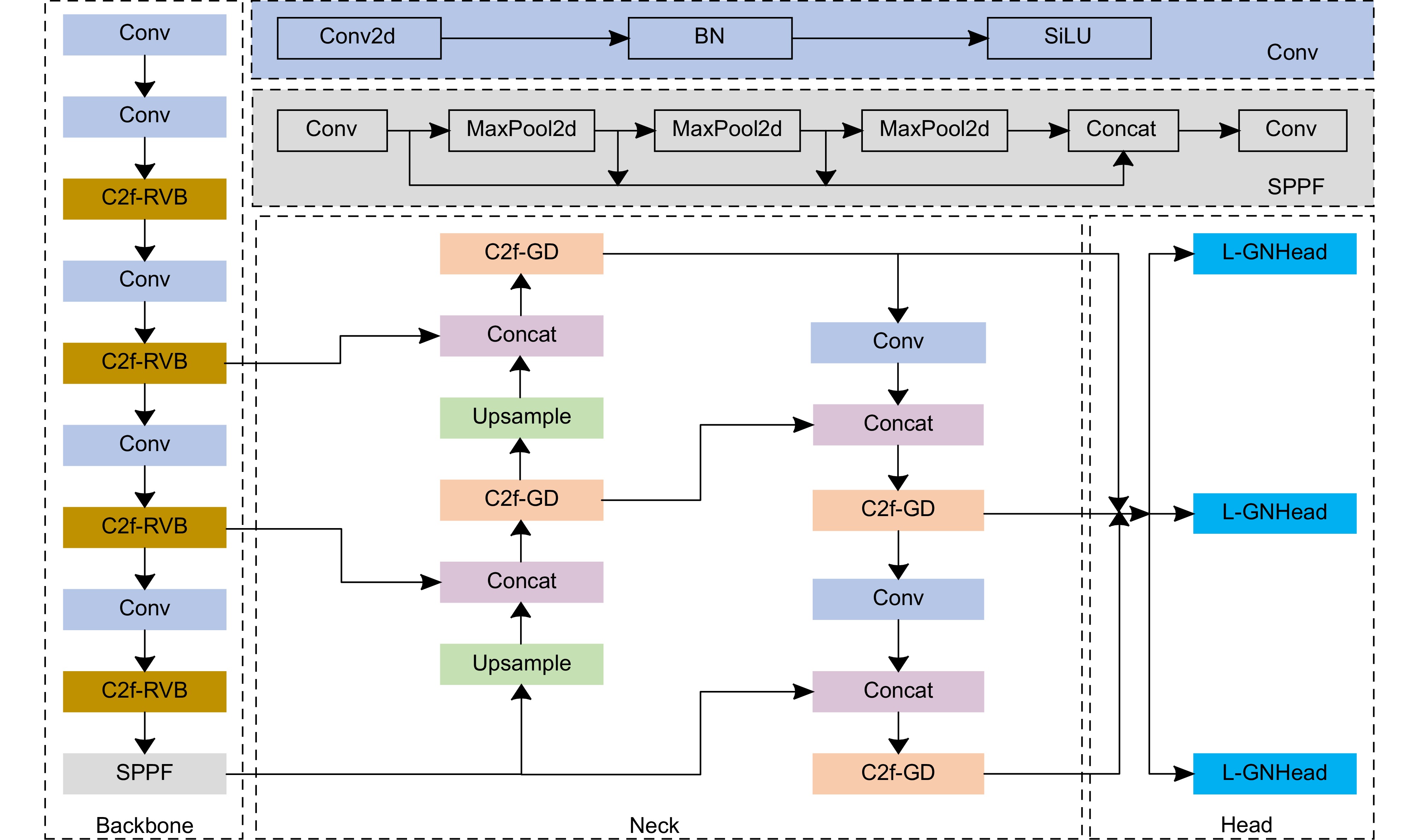

针对现有路基边坡裂缝检测算法中检测精度低、泛化能力弱等问题,提出了一种改进YOLOv8的路基边坡裂缝检测算法。首先,在主干网络中嵌入重参数化模块和轻量化模型的同时捕获裂缝细节与全局信息,提高模型的检测精度。其次,设计C2f-GD模块实现模型特征高效融合,增强模型的泛化能力。最后,设计轻量级检测头L-GNHead,提高对不同尺度的裂缝检测精度,同时采用SIoU损失函数加速模型收敛。在自建的路基边坡裂缝数据集上的实验结果表明,改进算法与原算法相比,mAP50和mAP50-95分别提升了3.3%和2.5%,参数量和计算量分别降低了46.6%和44.4%,速度提高了18 f/s。在数据集RDD2022的泛化性验证结果表明,改进算法不仅达到更高的检测精度,且检测速度更快。

Abstract

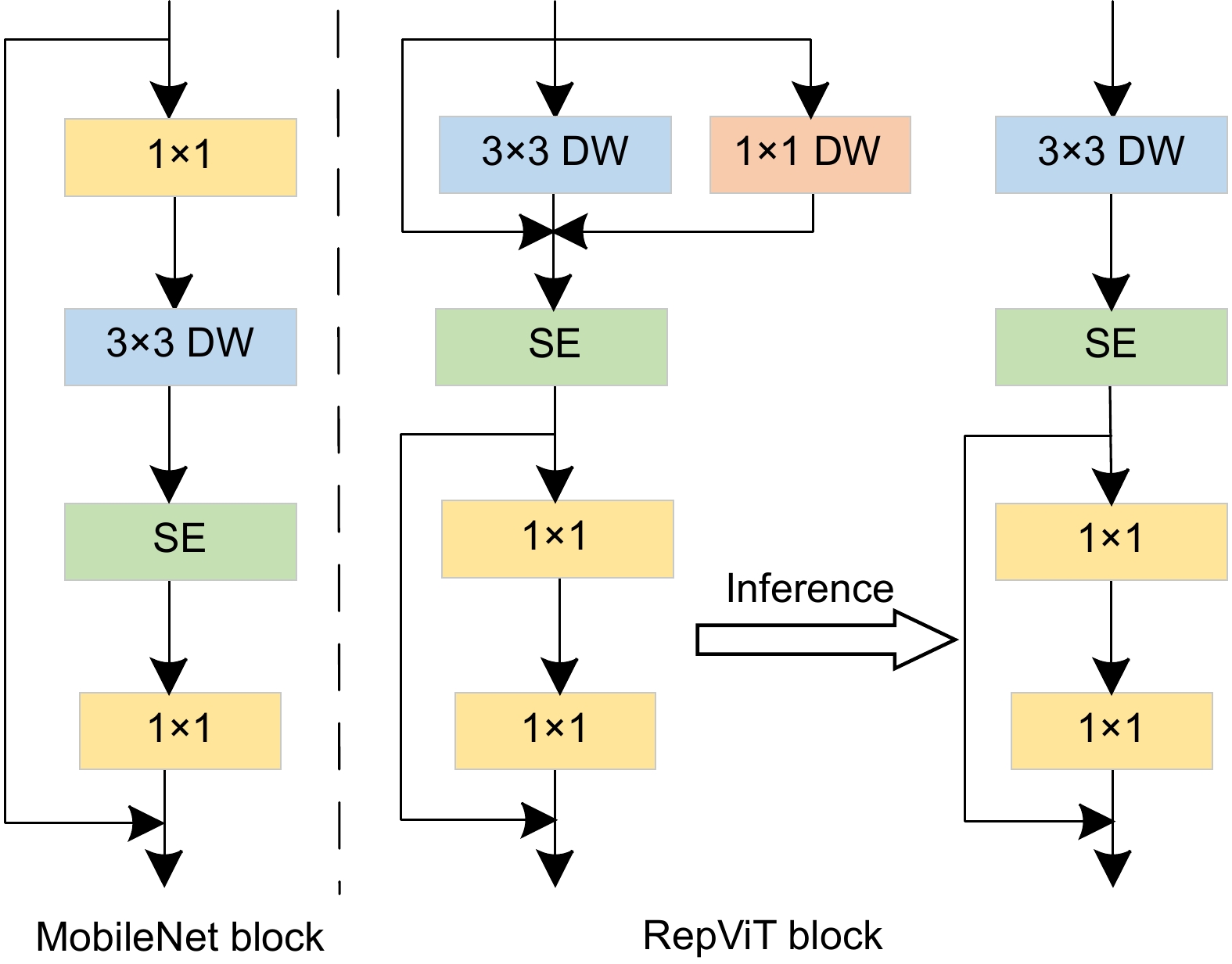

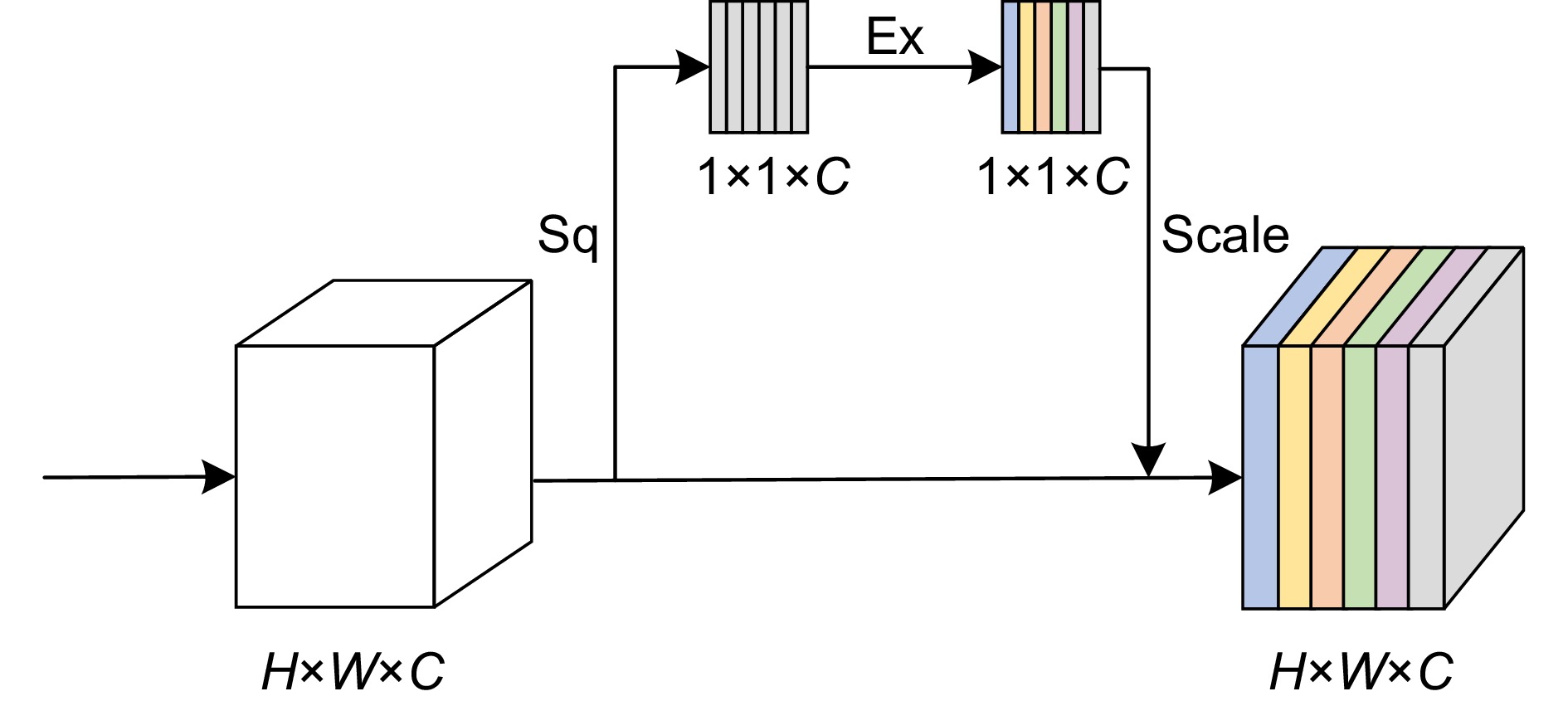

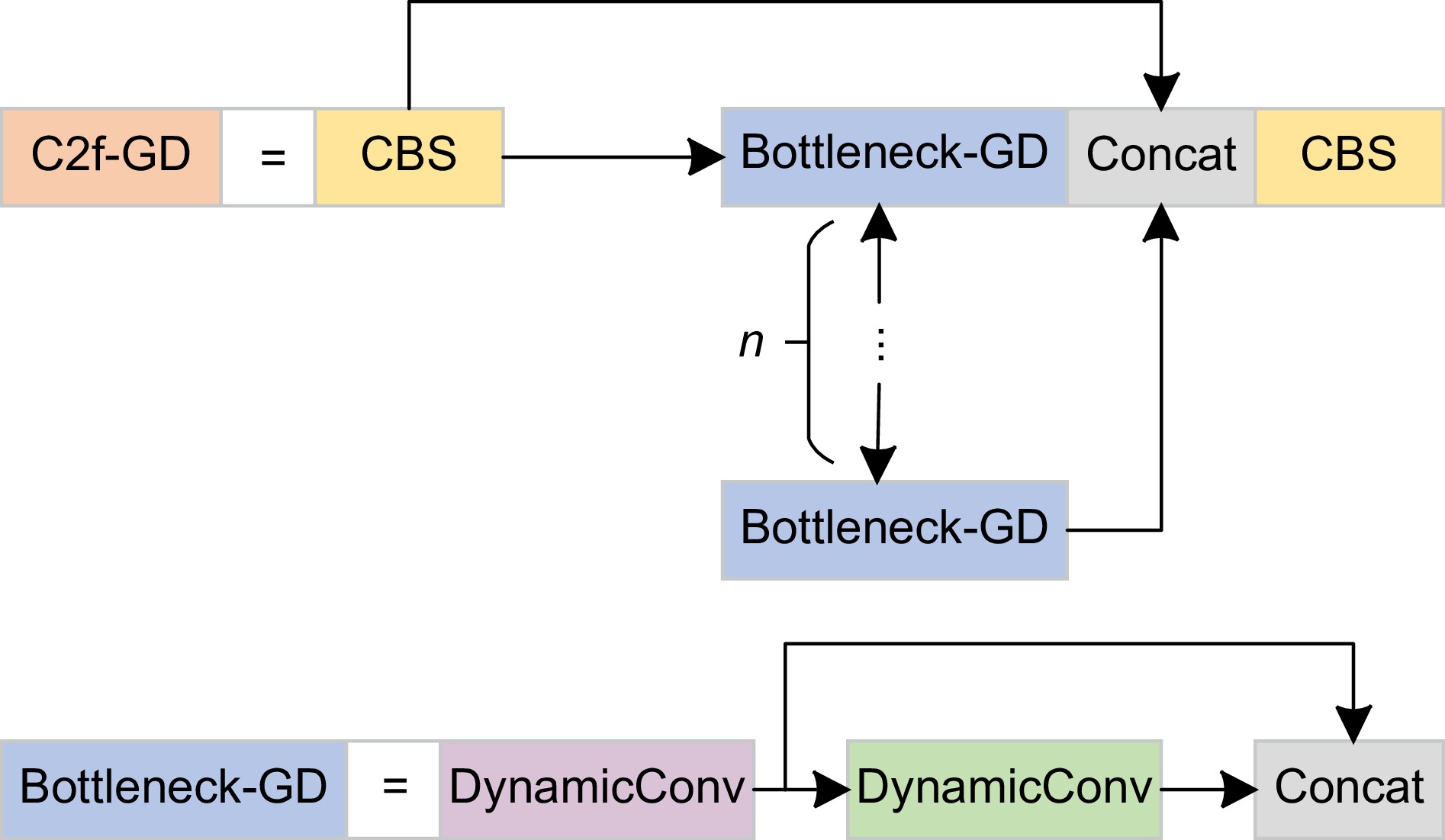

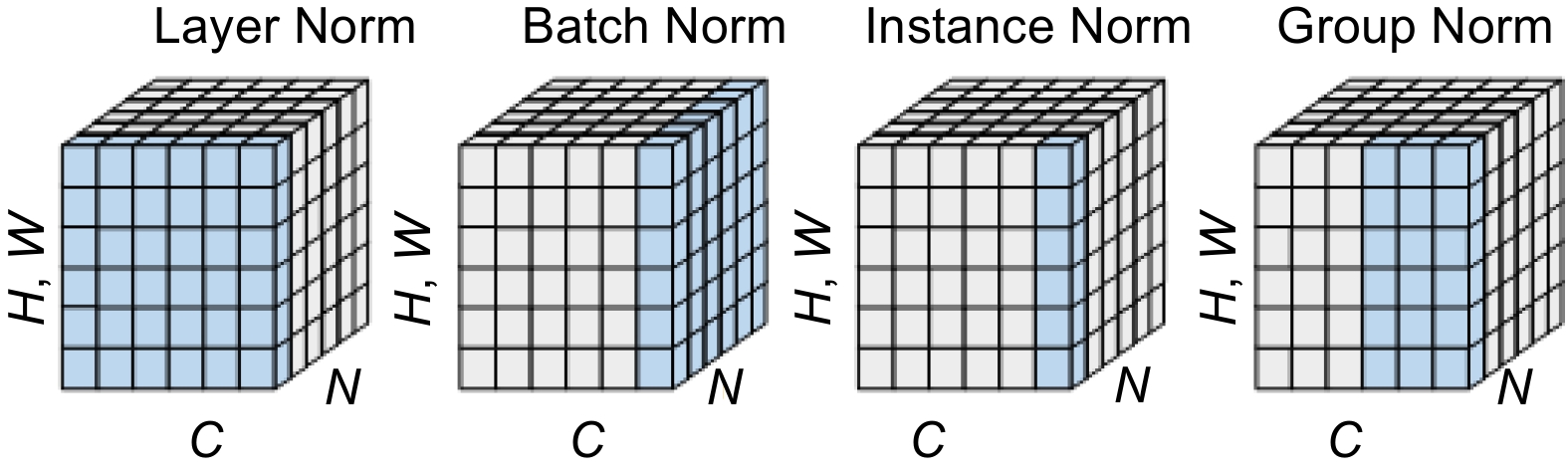

To address the low detection accuracy and weak generalization of existing roadbed slope crack detection algorithms, an improved YOLOv8 algorithm is proposed. First, a reparameterization module is embedded in the backbone network to lighten the model while capturing both crack details and global information, which improves detection accuracy. Second, a C2f-GD module is designed to fuse features efficiently and strengthen the generalization ability of the model. Finally, a lightweight detection head, L-GNHead, is designed to improve detection accuracy for cracks at different scales, and the SIoU loss function is used to accelerate model convergence. Experiments on a self-constructed roadbed slope crack dataset show that, compared with the original algorithm, the improved algorithm raises mAP50 and mAP50-95 by 3.3 and 2.5 percentage points, respectively, reduces the parameter count and computational cost by 46.6% and 44.4%, respectively, and increases detection speed by 18 frames/s. Generalization tests on the RDD2022 dataset show that the improved algorithm achieves both higher detection accuracy and faster detection speed.
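The reparameterization idea mentioned in the abstract can be illustrated with its most common building block: folding a batch-normalization layer into the preceding convolution for inference. The sketch below is a generic illustration under stated assumptions, not the paper's backbone module. It treats a 1×1 convolution as a per-pixel matrix multiply, and the helper names (`conv1x1`, `batch_norm`, `fuse_conv_bn`) and all numeric values are hypothetical.

```python
import math

def conv1x1(weight, bias, x):
    """Apply a 1x1 convolution to a single pixel: y = W x + b."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]

def batch_norm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode batch norm using stored running statistics."""
    return [g * (v - m) / math.sqrt(s + eps) + b
            for v, g, b, m, s in zip(y, gamma, beta, mean, var)]

def fuse_conv_bn(weight, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold BN into the conv: W' = W * gamma/std, b' = (b - mean) * gamma/std + beta."""
    scale = [g / math.sqrt(s + eps) for g, s in zip(gamma, var)]
    fused_w = [[w * k for w in row] for row, k in zip(weight, scale)]
    fused_b = [(b - m) * k + bt for b, m, k, bt in zip(bias, mean, scale, beta)]
    return fused_w, fused_b

# Hypothetical layer with 2 input and 2 output channels
weight = [[0.5, -1.0], [2.0, 0.25]]
bias = [0.1, -0.2]
gamma, beta = [1.5, 0.8], [0.0, 0.3]
mean, var = [0.2, -0.1], [4.0, 0.25]
x = [1.0, 2.0]

# Training-time structure: conv followed by BN
two_step = batch_norm(conv1x1(weight, bias, x), gamma, beta, mean, var)

# Inference-time structure: one fused conv
fused_w, fused_b = fuse_conv_bn(weight, bias, gamma, beta, mean, var)
one_step = conv1x1(fused_w, fused_b, x)

max_diff = max(abs(a - b) for a, b in zip(two_step, one_step))
print(f"max difference between the two forms: {max_diff:.2e}")
```

The two forms produce the same output up to floating-point error, which is why a reparameterized block can be trained with a richer structure and deployed as a single, cheaper layer.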

Key words:

- YOLOv8
- slope cracks
- reparameterization
- C2f-GD
- L-GNHead
- SIoU

Overview

China's road transportation network is being continuously optimized, and highway slope engineering, a key link in ensuring the safety and stability of roadbeds, is becoming increasingly important. Cracks are the initial signs of most highway slope diseases, and their growth, expansion, and evolution are direct manifestations of slope instability. Timely and accurate identification of roadbed slope cracks is therefore significant for real-time monitoring and early warning of highway slope disasters, as well as for keeping traffic smooth and safe. Traditional slope crack detection relies mainly on manual inspection, which is costly and inefficient. In recent years, deep-learning-based object detection algorithms have been able to identify cracks, but they tend to be limited to a single, simple scene. Because slope cracks vary in shape and appear against complex backgrounds and under varying lighting conditions, existing methods suffer from low detection accuracy, complex network models that struggle to meet real-time requirements, and poor generalization. To address these problems, this paper proposes a roadbed slope crack detection algorithm based on an improved YOLOv8. First, a reparameterization module is embedded in the backbone network to enhance the network's feature extraction ability and improve the detection accuracy of the model. Then, a lightweight C2f-GD module is built in the neck network to enhance the generalization ability of the model. In addition, a lightweight detection head, L-GNHead, is designed, which greatly reduces model complexity and improves detection accuracy for slope cracks at different scales. Finally, the SIoU loss function is used to accelerate model convergence and improve detection accuracy.

The experimental results show that, on the self-constructed slope crack dataset, the improved algorithm raises mAP50 and mAP50-95 by 3.3 and 2.5 percentage points, respectively, effectively reducing missed and false detections of slope cracks. At the same time, the number of parameters and the computational cost of the model are reduced by 46.6% and 44.4%, respectively, and the detection speed is improved by 18 frames/s. In addition, generalization validation is conducted on the public dataset RDD2022, and the combined results show that the improved algorithm makes the model more lightweight and efficient, which helps promote deployment on edge devices. Future work will focus on deploying the model on mobile devices and on further study based on its actual detection performance, to better meet the needs of high-accuracy, real-time applications.
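The headline figures above can be recomputed from the first and last rows of Table 1 (YOLOv8n and YOLOv8n_8). The short check below is only an arithmetic sketch; the dictionary keys and the helper `relative_reduction` are illustrative names, not part of the paper. With the rounded values printed in the table, the parameter reduction comes out at about 46.7%, close to the reported 46.6%; the small gap presumably reflects rounding of the parameter counts.

```python
def relative_reduction(baseline, improved):
    """Percentage reduction of `improved` relative to `baseline`."""
    return (baseline - improved) / baseline * 100.0

# Values copied from Table 1 (rows YOLOv8n and YOLOv8n_8)
baseline = {"mAP50": 85.1, "mAP50-95": 43.2, "params_M": 3.00, "GFLOPs": 8.1, "FPS": 96.6}
improved = {"mAP50": 88.4, "mAP50-95": 45.7, "params_M": 1.60, "GFLOPs": 4.5, "FPS": 114.7}

# Accuracy and speed are reported as absolute differences
map50_gain = improved["mAP50"] - baseline["mAP50"]
map5095_gain = improved["mAP50-95"] - baseline["mAP50-95"]
fps_gain = improved["FPS"] - baseline["FPS"]

# Model size and compute are reported as relative reductions
params_reduction = relative_reduction(baseline["params_M"], improved["params_M"])
flops_reduction = relative_reduction(baseline["GFLOPs"], improved["GFLOPs"])

print(f"mAP50 gain: {map50_gain:.1f} points")
print(f"mAP50-95 gain: {map5095_gain:.1f} points")
print(f"Parameter reduction: {params_reduction:.1f}%")
print(f"GFLOPs reduction: {flops_reduction:.1f}%")
print(f"FPS gain: {fps_gain:.1f} frames/s")
```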


Table 1. Results of ablation experiments

| Model | √ marks (C2f-RVB / C2f-GD / L-GNHead / SIoU; column positions lost in extraction) | mAP50/% | mAP50-95/% | Params/M | GFLOPs | FPS |
|---|---|---|---|---|---|---|
| YOLOv8n | | 85.1 | 43.2 | 3.00 | 8.1 | 96.6 |
| YOLOv8n_1 | √ | 86.5 | 44.6 | 2.64 | 7.0 | 86.8 |
| YOLOv8n_2 | √ | 86.4 | 44.2 | 2.63 | 7.1 | 93.8 |
| YOLOv8n_3 | √ | 86.1 | 43.9 | 2.36 | 6.5 | 103.1 |
| YOLOv8n_4 | √ | 85.7 | 43.4 | 3.00 | 8.1 | 97.9 |
| YOLOv8n_5 | √ √ √ | 87.9 | 46.0 | 1.95 | 5.6 | 99.4 |
| YOLOv8n_6 | √ √ | 87.2 | 43.1 | 2.24 | 6.1 | 106.9 |
| YOLOv8n_7 | √ √ √ | 87.6 | 45.3 | 1.60 | 4.5 | 104.0 |
| YOLOv8n_8 | √ √ √ √ | 88.4 | 45.7 | 1.60 | 4.5 | 114.7 |

Table 2. Comparison of the experimental results

| Model | mAP50/% | mAP50-95/% | Params/M | GFLOPs | FPS |
|---|---|---|---|---|---|
| SSD | 69.2 | 34.6 | 63.82 | 124.5 | 96.6 |
| Faster-RCNN | 70.4 | 37.8 | 137.42 | 371.4 | 18.7 |
| RT-DETR-L[32] | 81.6 | 39.8 | 28.44 | 100.6 | 32.8 |
| YOLOv5n | 82.4 | 41.3 | 2.50 | 7.1 | 110.1 |
| YOLOv7tiny | 81.5 | 39.5 | 6.12 | 12.4 | 109.8 |
| YOLOv8n | 85.1 | 43.2 | 3.00 | 8.1 | 96.6 |
| YOLOv9s[33] | 87.9 | 44.6 | 7.33 | 26.8 | 64.7 |
| Ref. [34] | 88.7 | 44.9 | 89.1 | 15.4 | - |
| Ref. [35] | 87.8 | 45.5 | 33.4 | - | - |
| Ours | 88.4 | 45.7 | 1.60 | 4.5 | 114.7 |

Table 3. Comparison of SE at different positions

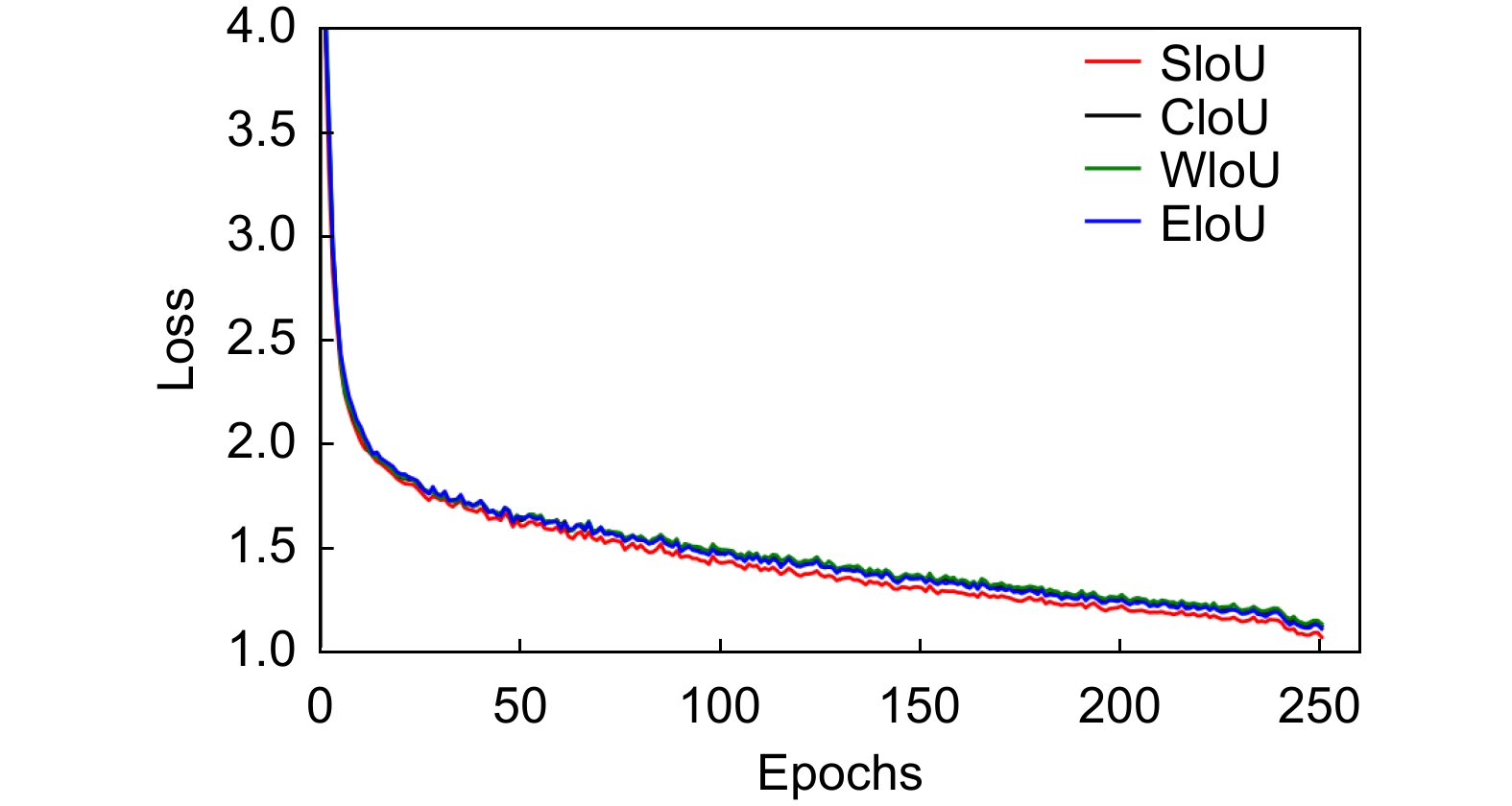

| Model / SE position | P/% | R/% | mAP50/% | Params/M |
|---|---|---|---|---|
| YOLOv8n | 90.2 | 83.0 | 85.1 | 3.00 |
| ① | 89.5 | 82.9 | 84.9 | 2.64 |
| ② | 88.5 | 81.7 | 82.1 | 2.64 |
| ③ | 88.4 | 81.1 | 85.8 | 2.64 |
| Ours | 91.0 | 83.5 | 86.5 | 2.64 |

Table 4. Comparison results of different loss functions

| Loss | P/% | R/% | mAP50/% | Params/M |
|---|---|---|---|---|
| CIoU | 90.2 | 83.0 | 85.1 | 3.0 |
| EIoU | 89.5 | 82.6 | 83.8 | 3.0 |
| WIoU | 89.9 | 84.1 | 84.2 | 3.0 |
| SIoU | 89.9 | 84.2 | 85.7 | 3.0 |
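For reference, the SIoU loss compared in Table 4 can be sketched for a single pair of boxes as follows. This is a minimal plain-Python illustration of the formulation in ref. [30] as it is commonly implemented (IoU term plus angle-aware distance cost and shape cost); it is not the training code used in the paper. The function name `siou_loss`, the `(x1, y1, x2, y2)` box format, the shape exponent `theta=4.0`, and the stabilizer `eps` are assumptions made for this example.

```python
import math

def siou_loss(box, gt, theta=4.0, eps=1e-9):
    """SIoU loss for a predicted box and a ground-truth box, both (x1, y1, x2, y2)."""
    # Widths, heights and centers of both boxes
    w, h = box[2] - box[0], box[3] - box[1]
    gw, gh = gt[2] - gt[0], gt[3] - gt[1]
    cx, cy = (box[0] + box[2]) / 2, (box[1] + box[3]) / 2
    gcx, gcy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2

    # Plain IoU
    iw = max(0.0, min(box[2], gt[2]) - max(box[0], gt[0]))
    ih = max(0.0, min(box[3], gt[3]) - max(box[1], gt[1]))
    inter = iw * ih
    iou = inter / (w * h + gw * gh - inter + eps)

    # Width and height of the smallest box enclosing both boxes
    cw = max(box[2], gt[2]) - min(box[0], gt[0])
    ch = max(box[3], gt[3]) - min(box[1], gt[1])

    # Angle cost: 0 when the centers are axis-aligned, 1 at 45 degrees
    dx, dy = gcx - cx, gcy - cy
    sigma = math.hypot(dx, dy) + eps
    sin_alpha = min(abs(dx), abs(dy)) / sigma
    angle_cost = math.sin(2.0 * math.asin(sin_alpha))

    # Distance cost, modulated by the angle cost through gamma
    gamma = 2.0 - angle_cost
    rho_x = (dx / (cw + eps)) ** 2
    rho_y = (dy / (ch + eps)) ** 2
    distance_cost = (1.0 - math.exp(-gamma * rho_x)) + (1.0 - math.exp(-gamma * rho_y))

    # Shape cost: penalizes mismatched width and height
    omega_w = abs(w - gw) / (max(w, gw) + eps)
    omega_h = abs(h - gh) / (max(h, gh) + eps)
    shape_cost = (1.0 - math.exp(-omega_w)) ** theta + (1.0 - math.exp(-omega_h)) ** theta

    return 1.0 - iou + (distance_cost + shape_cost) / 2.0

same = siou_loss((0.0, 0.0, 2.0, 2.0), (0.0, 0.0, 2.0, 2.0))
shifted = siou_loss((0.0, 0.0, 2.0, 2.0), (1.0, 0.0, 3.0, 2.0))
disjoint = siou_loss((0.0, 0.0, 1.0, 1.0), (3.0, 3.0, 4.0, 5.0))
print(same, shifted, disjoint)
```

The loss is near zero for identical boxes and grows as the boxes drift apart in position or shape; unlike plain IoU, it still distinguishes between non-overlapping boxes through the distance and shape terms.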

Table 5. Comparison of the experimental results on RDD2022 dataset

| Model | mAP50/% | mAP50-95/% | Params/M | GFLOPs | FPS |
|---|---|---|---|---|---|
| Faster-RCNN | 63.1 | 34.5 | 137.42 | 371.4 | 18.2 |
| RT-DETR-L[32] | 73.1 | 40.4 | 28.44 | 100.6 | 32.4 |
| YOLOv5n | 75.9 | 44.2 | 2.50 | 7.1 | 107.8 |
| YOLOv7tiny | 75.5 | 44.1 | 6.12 | 12.4 | 108.5 |
| YOLOv8n | 76.3 | 44.9 | 3.00 | 8.1 | 95.8 |
| YOLOv9s[33] | 77.9 | 44.6 | 7.33 | 26.8 | 65.2 |
| Ref. [34] | 78.4 | 45.1 | 89.1 | 15.4 | - |
| Ref. [35] | 78.1 | 44.5 | 33.4 | - | - |
| Ours | 78.9 | 44.7 | 1.60 | 4.5 | 111.6 |

References

[1] Phi T T, Kulatilake P H S W, Ankah M L Y, et al. Rock mass statistical homogeneity investigation along a highway corridor in Vietnam[J]. Eng Geol, 2021, 289: 106176. doi: 10.1016/j.enggeo.2021.106176

[2] Yan K, Zhang Z H. Automated asphalt highway pavement crack detection based on deformable single shot multi-box detector under a complex environment[J]. IEEE Access, 2021, 9: 150925−150938. doi: 10.1109/ACCESS.2021.3125703

[3] Zhang X. Highway crack material detection algorithm based on digital image processing technology[J]. J Phys Conf Ser, 2023, 2425(1): 012067. doi: 10.1088/1742-6596/2425/1/012067

[4] Weng X X, Huang Y C, Li Y N, et al. Unsupervised domain adaptation for crack detection[J]. Autom Constr, 2023, 153: 104939. doi: 10.1016/j.autcon.2023.104939

[5] Yang Y T, Mei G. Deep transfer learning approach for identifying slope surface cracks[J]. Appl Sci, 2021, 11(23): 11193. doi: 10.3390/app112311193

[6] Huang Z K, Chang D D, Yang X F, et al. A deep learning-based approach for crack damage detection using strain field[J]. Eng Fract Mech, 2023, 293: 109703. doi: 10.1016/j.engfracmech.2023.109703

[7] Zhu X K, Lyu S C, Wang X, et al. TPH-YOLOv5: improved YOLOv5 based on transformer prediction head for object detection on drone-captured scenarios[C]//Proceedings of 2021 IEEE/CVF International Conference on Computer Vision Workshops, 2021: 2778–2788. https://doi.org/10.1109/ICCVW54120.2021.00312.

[8] Li C Y, Li L L, Jiang H L, et al. YOLOv6: a single-stage object detection framework for industrial applications[Z]. arXiv: 2209.02976, 2022. https://arxiv.org/abs/2209.02976.

[9] Wang C Y, Bochkovskiy A, Liao H Y M. YOLOv7: trainable bag-of-freebies sets new state-of-the-art for real-time object detectors[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023: 7464–7475. https://doi.org/10.1109/CVPR52729.2023.00721.

[10] Gong L X, Huang X, Chao Y K, et al. Correction to: an enhanced SSD with feature cross-reinforcement for small-object detection[J]. Appl Intell, 2023, 53(18): 21483−21484. doi: 10.1007/s10489-023-04640-2

[11] Liu X, Mo S T, Zhang J, et al. PeleeNet_yolov3 surface crack identification with lightweight model[J]. J Harbin Inst Technol, 2023, 55(4): 81−89. doi: 10.11918/202112015

[12] Zhou Z, Zhang J J, Lu S P. Tunnel lining crack detection algorithm based on improved YOLOv4[J]. J China Railway Soc, 2023, 45(10): 162−170. doi: 10.3969/j.issn.1001-8360.2023.10.019

[13] Hu Q F, Wang P, Li S M, et al. Research on intelligent crack detection in a deep-cut canal slope in the Chinese South–North water transfer project[J]. Remote Sens, 2022, 14(21): 5384. doi: 10.3390/rs14215384

[14] Chen X, Peng D L, Gu Y. Real-time object detection for UAV images based on improved YOLOv5s[J]. Opto-Electron Eng, 2022, 49(3): 210372. doi: 10.12086/oee.2022.210372

[15] Zhao C, Shu X, Yan X, et al. RDD-YOLO: a modified YOLO for detection of steel surface defects[J]. Measurement, 2023, 214: 112776. doi: 10.1016/j.measurement.2023.112776

[16] Ni C S, Li L, Luo W T, et al. Disease detection of asphalt pavement based on improved YOLOv7[J]. Comput Eng Appl, 2023, 59(13): 305−316. doi: 10.3778/j.issn.1002-8331.2301-0098

[17] Liang L M, Long P W, Lu B H, et al. Improvement of GBS-YOLOv7t for steel surface defect detection[J]. Opto-Electron Eng, 2024, 51(5): 240044. doi: 10.12086/oee.2024.240044

[18] Xu X Q, Li Q, Li S E, et al. Crack width recognition of tunnel tube sheet based on YOLOv8 algorithm and 3D imaging[J]. Buildings, 2024, 14(2): 531. doi: 10.3390/buildings14020531

[19] Talaat F M, Zaineldin H. An improved fire detection approach based on YOLO-v8 for smart cities[J]. Neural Comput Appl, 2023, 35(28): 20939−20954. doi: 10.1007/s00521-023-08809-1

[20] Beyene D A, Tran D Q, Maru M B, et al. Unsupervised domain adaptation-based crack segmentation using transformer network[J]. J Build Eng, 2023, 80: 107889. doi: 10.1016/j.jobe.2023.107889

[21] Wang A, Chen H, Lin Z J, et al. RepViT: revisiting mobile CNN from ViT perspective[C]//Proceedings of 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024. https://doi.org/10.1109/CVPR52733.2024.01506.

[22] Howard A, Sandler M, Chen B, et al. Searching for MobileNetV3[C]//Proceedings of 2019 IEEE/CVF International Conference on Computer Vision, 2019: 1314–1324. https://doi.org/10.1109/ICCV.2019.00140.

[23] Hu J, Shen L, Albanie S, et al. Squeeze-and-excitation networks[J]. IEEE Trans Pattern Anal Mach Intell, 2020, 42(8): 2011−2023. doi: 10.1109/TPAMI.2019.2913372

[24] Han K, Wang Y H, Tian Q, et al. GhostNet: more features from cheap operations[C]//Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020: 1577–1586. https://doi.org/10.1109/CVPR42600.2020.00165.

[25] Chen Y P, Dai X Y, Liu M C, et al. Dynamic convolution: attention over convolution kernels[C]//Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020: 11030–11039. https://doi.org/10.1109/CVPR42600.2020.01104.

[26] Han K, Wang Y H, Guo J Y, et al. ParameterNet: parameters are all you need for large-scale visual pretraining of mobile networks[C]//Proceedings of 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024: 15751–15761. https://doi.org/10.1109/CVPR52733.2024.01491.

[27] Wu Y X, He K M. Group normalization[C]//Proceedings of the 15th European Conference on Computer Vision, 2018: 3–19. https://doi.org/10.1007/978-3-030-01261-8_1.

[28] Lin T Y, Dollár P, Girshick R, et al. Feature pyramid networks for object detection[C]//Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition, 2017: 936–944. https://doi.org/10.1109/CVPR.2017.106.

[29] Zheng Z H, Wang P, Liu W, et al. Distance-IoU loss: faster and better learning for bounding box regression[C]//Proceedings of the 34th AAAI Conference on Artificial Intelligence, 2020: 12993–13000. https://doi.org/10.1609/aaai.v34i07.6999.

[30] Gevorgyan Z. SIoU loss: more powerful learning for bounding box regression[Z]. arXiv: 2205.12740, 2022. https://arxiv.org/abs/2205.12740.

[31] Arya D, Maeda H, Ghosh S K, et al. RDD2022: a multi‐national image dataset for automatic road damage detection[Z]. arXiv: 2209.08538, 2022. https://arxiv.org/abs/2209.08538.

[32] Zhao Y, Lv W Y, Xu S L, et al. DETRs beat YOLOs on real-time object detection[C]//Proceedings of 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2024: 16965–16974. https://doi.org/10.1109/CVPR52733.2024.01605.

[33] Shi Y G, Li S K, Liu Z Y, et al. MTP-YOLO: you only look once based maritime tiny person detector for emergency rescue[J]. J Mar Sci Eng, 2024, 12(4): 669. doi: 10.3390/jmse12040669

[34] Woo S, Debnath S, Hu R H, et al. ConvNeXt V2: co-designing and scaling ConvNets with masked autoencoders[C]// Proceedings of 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023: 16133–16142. https://doi.org/10.1109/CVPR52729.2023.01548.

[35] Subramanyam A V, Singal N, Verma V K. Resource efficient perception for vision systems[Z]. arXiv: 2405.07166, 2024. https://arxiv.org/abs/2405.07166.

[36] Zhang Y F, Ren W Q, Zhang Z, et al. Focal and efficient IOU loss for accurate bounding box regression[J]. Neurocomputing, 2022, 506: 146−157. doi: 10.1016/j.neucom.2022.07.042

[37] Tong Z J, Chen Y H, Xu Z W, et al. Wise-IoU: bounding box regression loss with dynamic focusing mechanism[Z]. arXiv: 2301.10051, 2023. https://arxiv.org/abs/2301.10051.
