No-reference light field image quality assessment based on joint spatial-angular information

Abstract

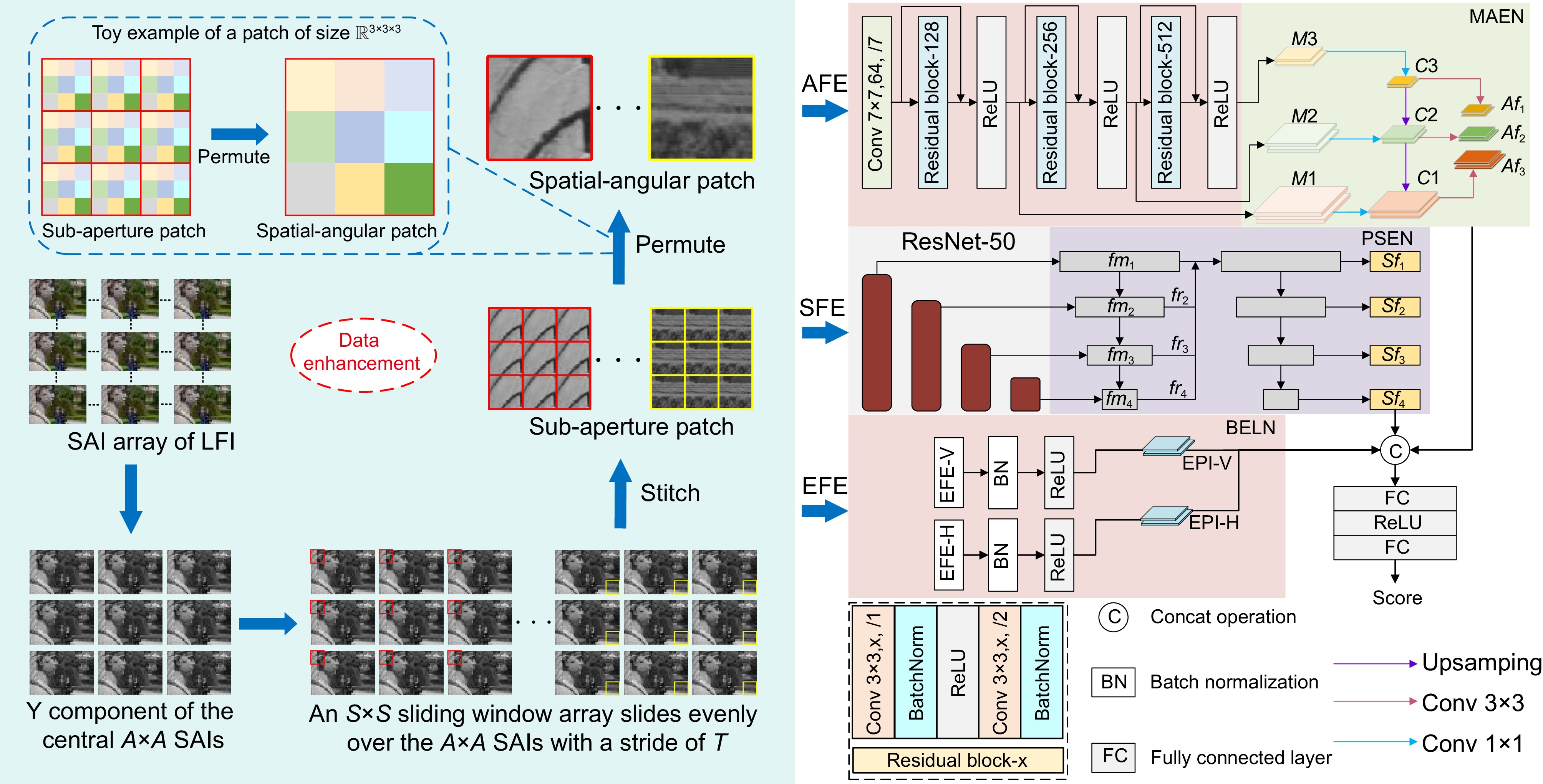

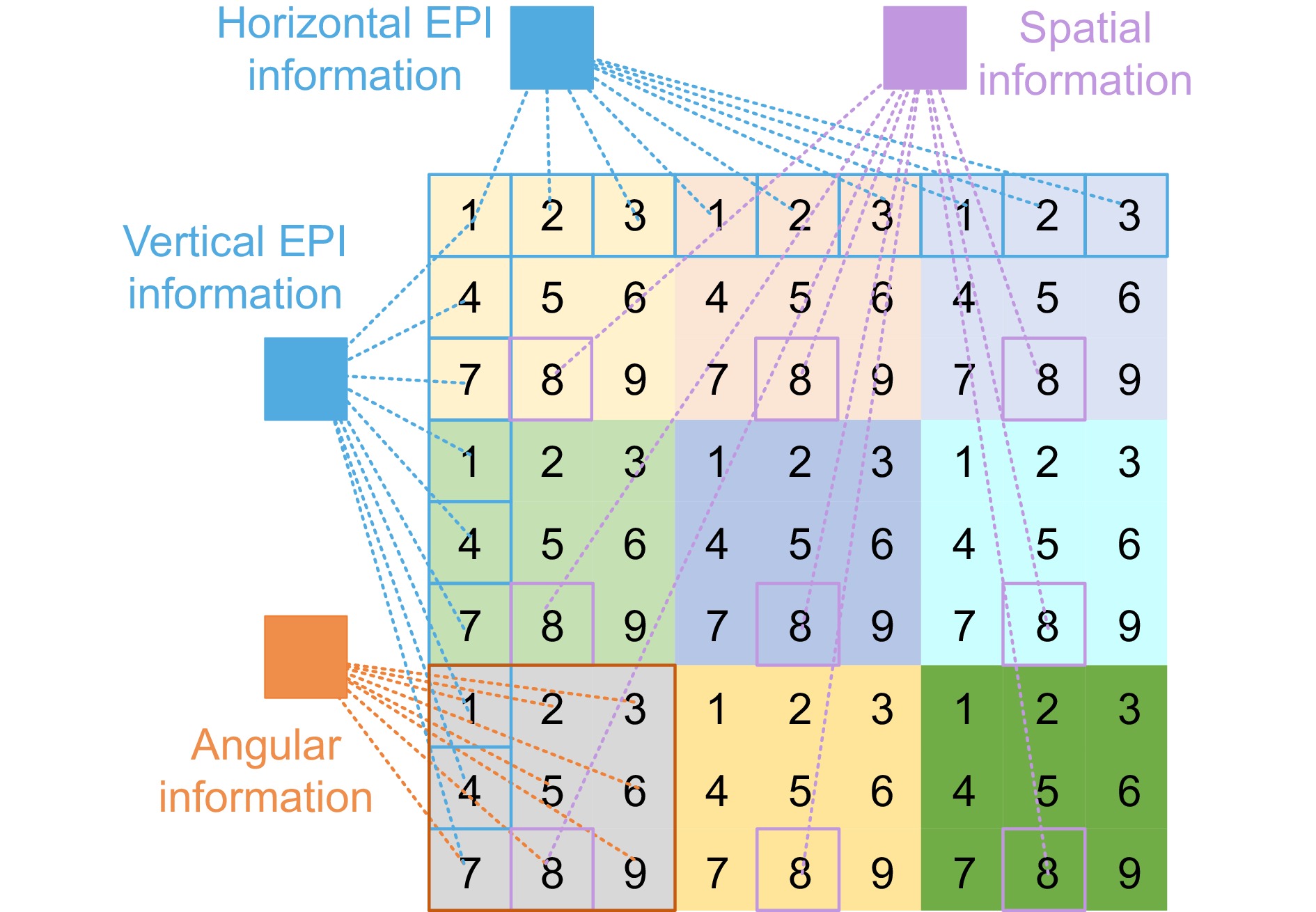

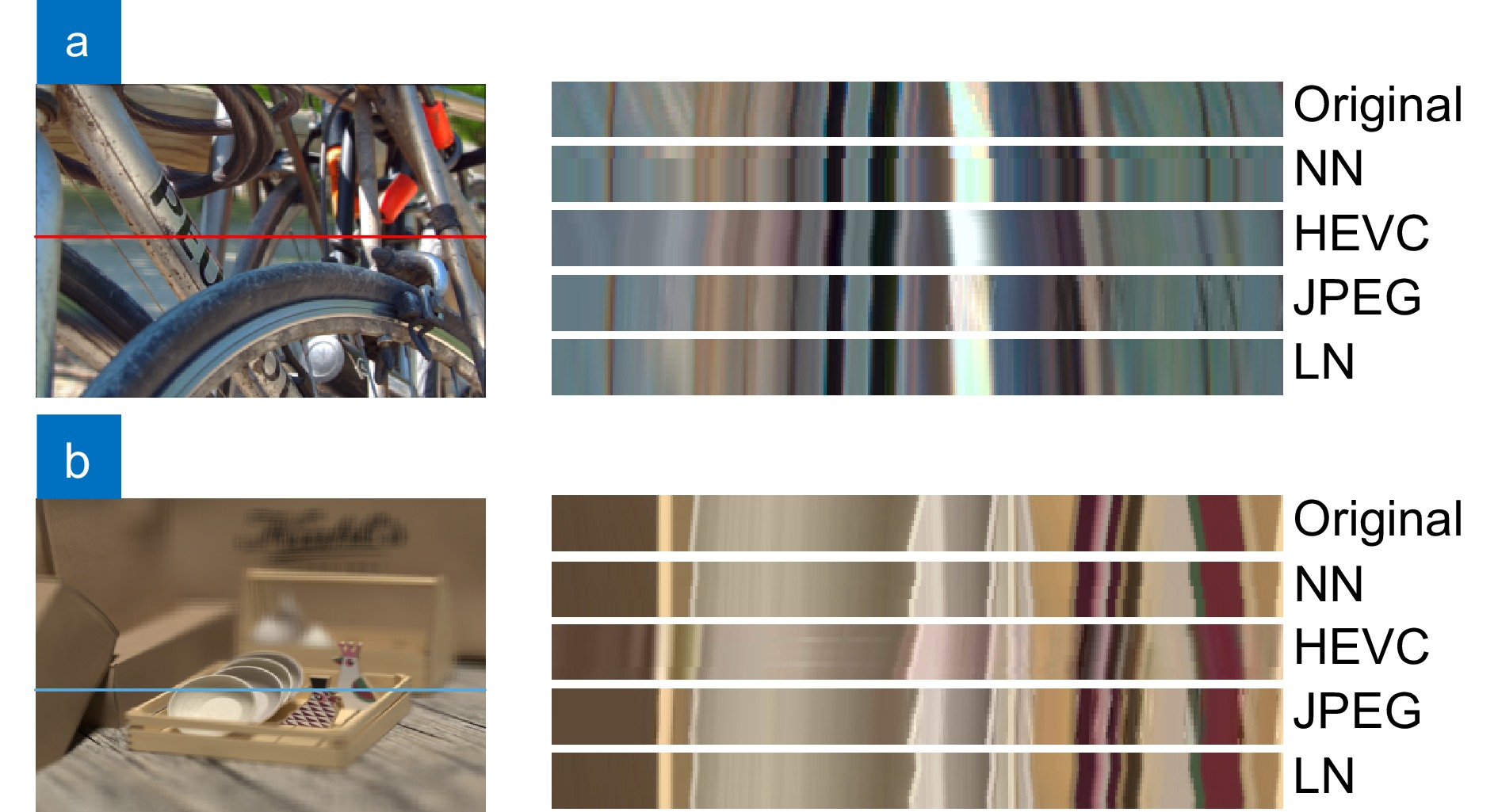

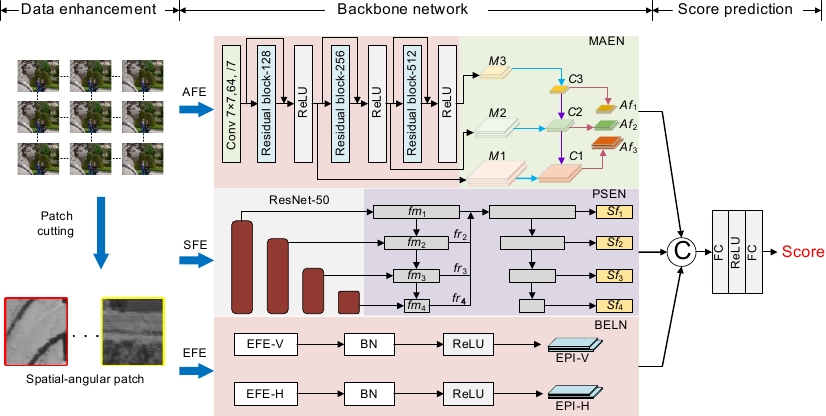

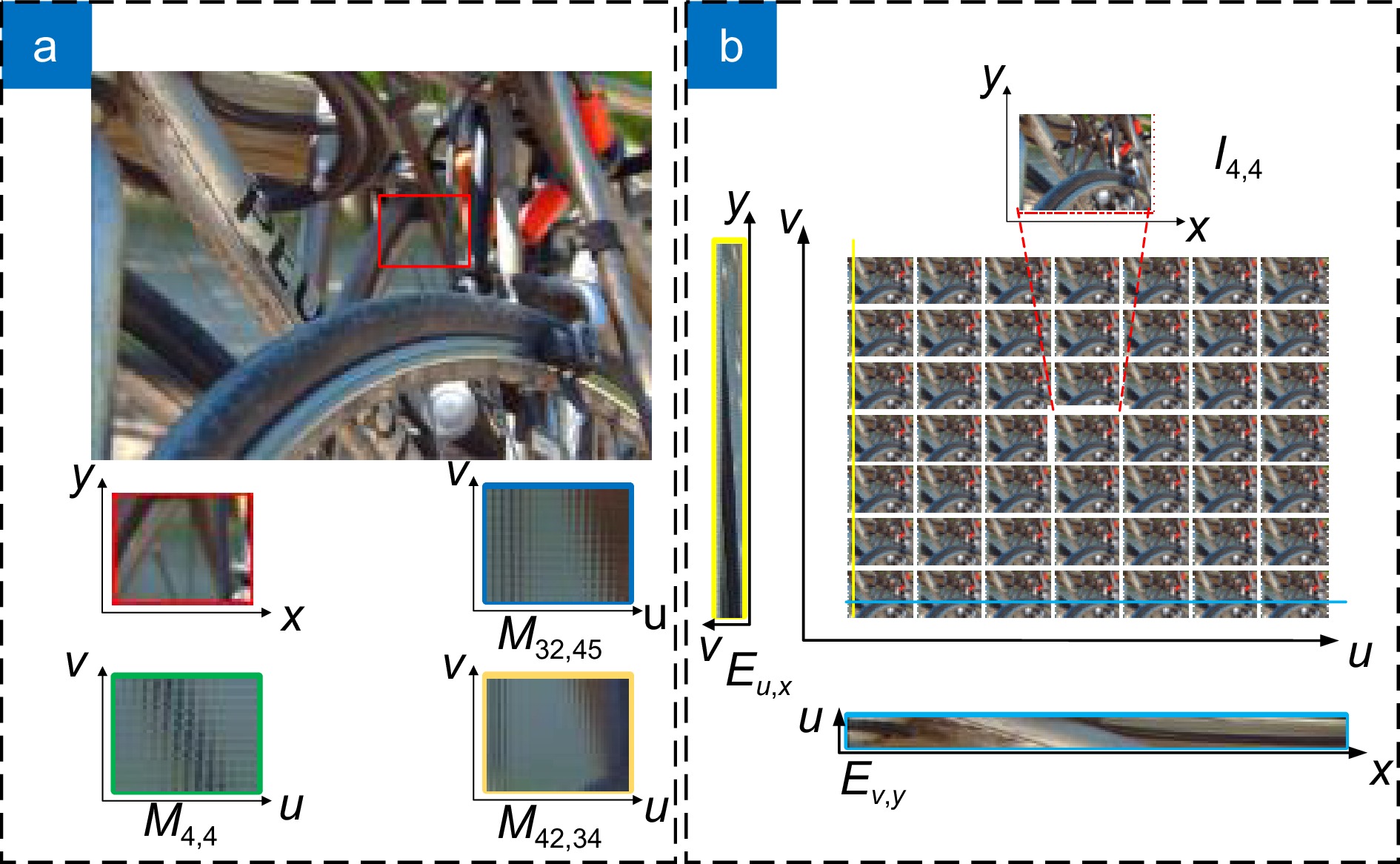

Light field images give users a more comprehensive and realistic visual experience by recording information from multiple viewpoints. However, distortions introduced during acquisition and visualization can severely degrade their visual quality, so evaluating light field image quality effectively remains a significant challenge. This paper proposes a deep-learning-based no-reference light field image quality assessment method that combines spatial-angular features with epipolar plane information. First, a spatial-angular feature extraction network is constructed; it captures multi-scale semantic information through multi-level connections and uses multi-scale fusion to extract both kinds of features effectively. Second, a bidirectional epipolar plane image feature learning network is proposed to assess the angular consistency of light field images. Finally, the image quality score is produced by cross-feature fusion followed by linear regression. Comparative experiments on three commonly used datasets show that the proposed method significantly outperforms classical 2D image and light field image quality assessment methods, and that its predictions are more consistent with subjective evaluation results.

Overview

Light field imaging, an emerging imaging medium, differs from traditional 2D and stereoscopic imaging in that it captures both the intensity of light in a scene and the direction of the light rays in free space. Because of this rich spatial and angular information, light field imaging is widely used in depth estimation, refocusing, and 3D reconstruction. However, during acquisition, compression, transmission, and reconstruction, light field images inevitably suffer various distortions that degrade image quality. Light field image quality assessment (LFIQA) therefore plays a crucial role in improving the quality of these images. Based on the characteristics of light field images, this paper proposes a deep-learning-based no-reference image quality assessment (NRIQA) scheme that integrates spatial-angular information with epipolar plane image (EPI) information. Specifically, the method estimates the overall quality of a distorted light field image from the perceptual quality of its image blocks. To simulate human visual perception, it uses two multi-scale feature extraction methods to relate local and global features, thereby capturing spatial and angular distortion information. Because angular properties are unique to light field images, a bidirectional EPI feature learning network is additionally designed to capture horizontal and vertical disparity information, so that distortions of angular consistency are better accounted for. Finally, the three kinds of image features are fused to predict the quality of the distorted image. Experiments on three publicly available LFIQA datasets show that the objective predictions of the proposed method are more consistent with subjective evaluation than those of the compared methods; on Win5-LID, for example, it reaches a PLCC of 0.8653 and an SROCC of 0.8451 (Table 1).
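The horizontal and vertical EPIs that the bidirectional network consumes can be illustrated with a minimal NumPy sketch. It assumes a light field stored as a 4D array indexed `(u, v, y, x)` (angular row, angular column, image row, image column). The array layout, the function names, and the synthetic shifted-view scene are illustrative assumptions, not the paper's implementation.

```python
import numpy as np


def synthetic_light_field(base, n_views, disparity):
    """Toy light field (assumption): view (u, v) is `base` circularly shifted
    by an integer `disparity` per view step away from the central view."""
    c = n_views // 2
    lf = np.empty((n_views, n_views) + base.shape, dtype=base.dtype)
    for u in range(n_views):
        for v in range(n_views):
            shift = (disparity * (u - c), disparity * (v - c))
            lf[u, v] = np.roll(base, shift=shift, axis=(0, 1))
    return lf


def horizontal_epi(lf, u, y):
    """Fix angular row u and image row y; result has shape (V, W)."""
    return lf[u, :, y, :]


def vertical_epi(lf, v, x):
    """Fix angular column v and image column x; result has shape (U, H)."""
    return lf[:, v, :, x]


# Hypothetical 8x12 single-channel image observed from 5x5 viewpoints.
base = np.arange(8 * 12).reshape(8, 12)
lf = synthetic_light_field(base, n_views=5, disparity=2)

h_epi = horizontal_epi(lf, u=2, y=3)  # stack of scanline y=3 across views v
v_epi = vertical_epi(lf, v=2, x=4)    # stack of column x=4 across views u
print(h_epi.shape, v_epi.shape)
```

In this toy scene each EPI row is the same scanline shifted by the per-view disparity, so a scene point traces a slanted line across the EPI. Distortions that break angular consistency would perturb such lines, which is why EPIs are a natural input for assessing it.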

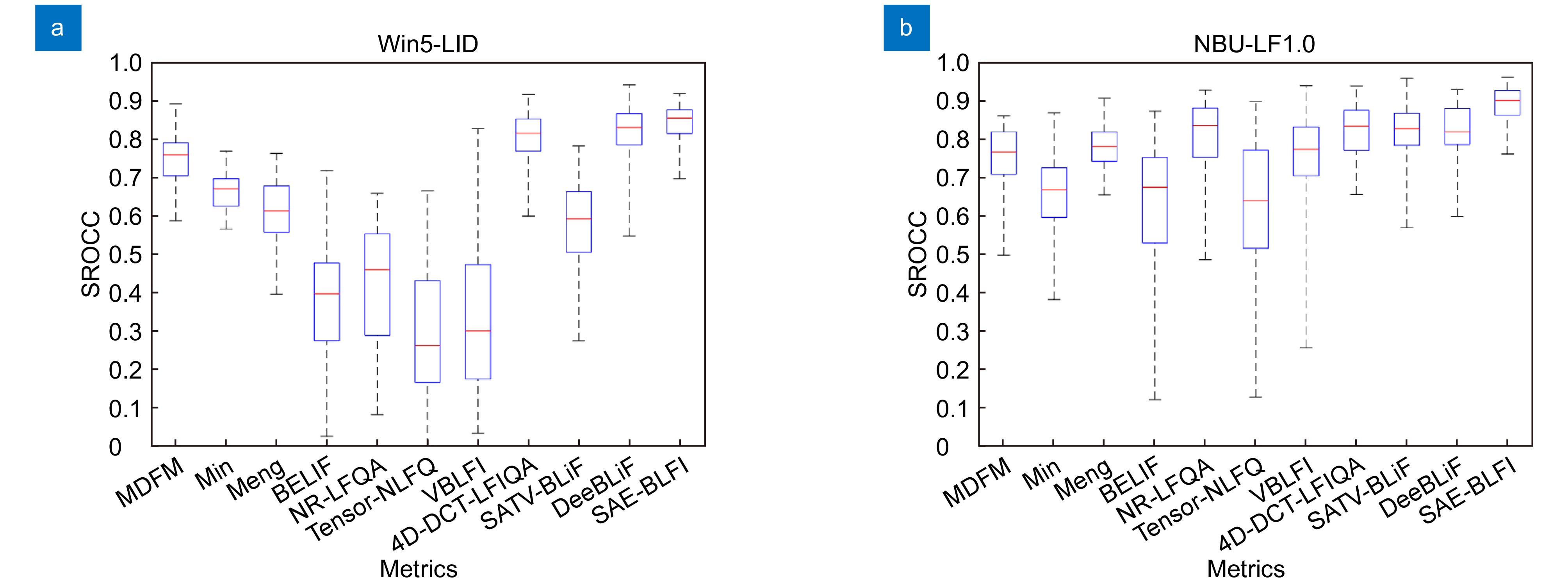

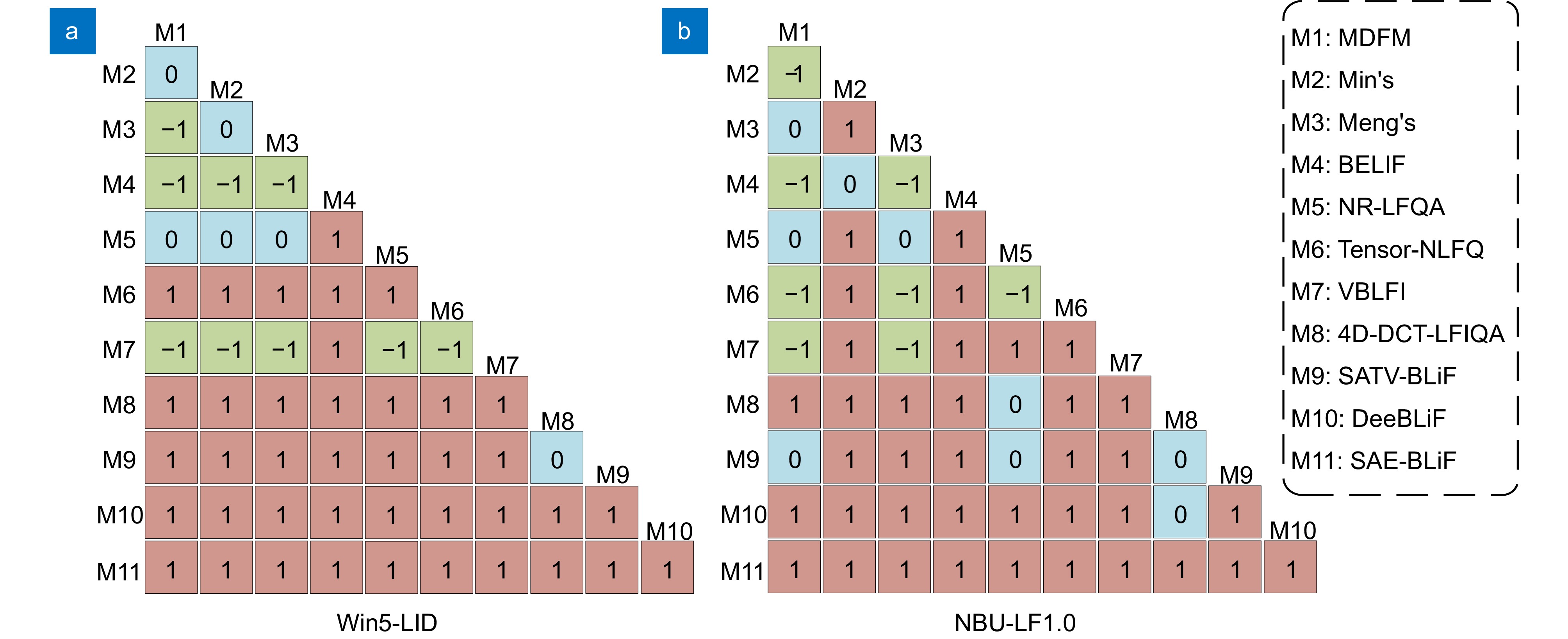

Table 1. Overall performance comparison of different methods on different LFI datasets

| Types | Methods | Win5-LID PLCC↑ | Win5-LID SROCC↑ | Win5-LID RMSE↓ | NBU-LF1.0 PLCC↑ | NBU-LF1.0 SROCC↑ | NBU-LF1.0 RMSE↓ | SHU PLCC↑ | SHU SROCC↑ | SHU RMSE↓ |
|---|---|---|---|---|---|---|---|---|---|---|
| NR 2D-IQA | BRISQUE[24] | 0.6217 | 0.4537 | 0.7604 | 0.4989 | 0.3871 | 0.7879 | 0.9011 | 0.8883 | 0.4591 |
| NR 2D-IQA | GLBP[25] | 0.5357 | 0.4150 | 0.8130 | 0.5056 | 0.3490 | 0.7647 | 0.7168 | 0.6565 | 0.7504 |
| FR LF-IQA | MDFM[5] | 0.7763 | 0.7471 | 0.6249 | 0.7888 | 0.7559 | 0.5649 | 0.8947 | 0.8908 | 0.4863 |
| FR LF-IQA | Min's[7] | 0.7281 | 0.6645 | 0.6874 | 0.7104 | 0.6579 | 0.6439 | 0.8497 | 0.8470 | 0.5757 |
| FR LF-IQA | Meng's[26] | 0.6983 | 0.6347 | 0.7203 | 0.8404 | 0.7825 | 0.4889 | 0.9279 | 0.9203 | 0.4039 |
| NR LF-IQA | BELIF[27] | 0.5751 | 0.5059 | 0.7865 | 0.7014 | 0.6389 | 0.6276 | 0.8967 | 0.8656 | 0.4803 |
| NR LF-IQA | NR-LFQA[8] | 0.7298 | 0.6979 | 0.6271 | 0.8528 | 0.8113 | 0.4658 | 0.9224 | 0.9229 | 0.4132 |
| NR LF-IQA | Tensor-NLFQ[12] | 0.5813 | 0.4885 | 0.7706 | 0.6884 | 0.6246 | 0.6305 | 0.9307 | 0.9061 | 0.3857 |
| NR LF-IQA | VBLFI[10] | 0.7213 | 0.6704 | 0.6843 | 0.8027 | 0.7539 | 0.5218 | 0.9235 | 0.8996 | 0.4064 |
| NR LF-IQA | 4D-DCT-LFIQA[2] | 0.8234 | 0.8074 | 0.5446 | 0.8395 | 0.8217 | 0.4871 | 0.9400 | 0.9320 | 0.3691 |
| NR LF-IQA | DeeBLiF[18] | 0.8427 | 0.8186 | 0.5160 | 0.8583 | 0.8229 | 0.4588 | 0.9548 | 0.9419 | 0.3185 |
| NR LF-IQA | SATV-BLiF[28] | 0.7933 | 0.7704 | 0.5842 | 0.8515 | 0.8237 | 0.4686 | 0.9332 | 0.9284 | 0.3897 |
| NR LF-IQA | Proposed | 0.8653 | 0.8451 | 0.4863 | 0.9108 | 0.8937 | 0.3658 | 0.9649 | 0.9547 | 0.2808 |

Table 2. SROCC values of different methods for different distortion types on the Win5-LID and NBU-LF1.0 datasets

| Types | Methods | Win5-LID HEVC | Win5-LID JPEG2K | Win5-LID LN | Win5-LID NN | NBU-LF1.0 NN | NBU-LF1.0 BI | NBU-LF1.0 EPICNN | NBU-LF1.0 MDR | NBU-LF1.0 VDSR | Hit count |
|---|---|---|---|---|---|---|---|---|---|---|---|
| NR 2D-IQA | BRISQUE[24] | 0.5641 | 0.7801 | 0.5222 | 0.2462 | 0.3435 | 0.4145 | 0.5795 | 0.4331 | 0.7937 | 0 |
| NR 2D-IQA | GLBP[25] | 0.7165 | 0.4853 | 0.4678 | 0.3011 | 0.3229 | 0.3995 | 0.4344 | 0.4478 | 0.7381 | 0 |
| FR LF-IQA | MDFM[5] | 0.7922 | 0.7669 | 0.6437 | 0.6692 | 0.8025 | 0.9089 | 0.7899 | 0.7386 | 0.8709 | 1 |
| FR LF-IQA | Min's[7] | 0.6997 | 0.6507 | 0.6159 | 0.6288 | 0.8156 | 0.8667 | 0.7361 | 0.7963 | 0.9376 | 1 |
| FR LF-IQA | Meng's[26] | 0.8886 | 0.6939 | 0.8459 | 0.8001 | 0.7429 | 0.9018 | 0.7997 | 0.5783 | 0.9225 | 2 |
| NR LF-IQA | BELIF[27] | 0.7666 | 0.6379 | 0.6097 | 0.5452 | 0.7680 | 0.7122 | 0.6874 | 0.6128 | 0.7989 | 0 |
| NR LF-IQA | NR-LFQA[8] | 0.7571 | 0.7338 | 0.6362 | 0.7026 | 0.8930 | 0.8807 | 0.7653 | 0.6111 | 0.8164 | 0 |
| NR LF-IQA | Tensor-NLFQ[12] | 0.6853 | 0.5799 | 0.5663 | 0.5897 | 0.6946 | 0.7203 | 0.5245 | 0.5417 | 0.8018 | 0 |
| NR LF-IQA | VBLFI[10] | 0.7141 | 0.7449 | 0.6908 | 0.7197 | 0.8316 | 0.8372 | 0.7195 | 0.4613 | 0.9134 | 0 |
| NR LF-IQA | 4D-DCT-LFIQA[2] | 0.8698 | 0.8946 | 0.8127 | 0.8235 | 0.9040 | 0.8719 | 0.7100 | 0.8095 | 0.8882 | 2 |
| NR LF-IQA | DeeBLiF[18] | 0.9648 | 0.8195 | 0.7928 | 0.8306 | 0.9184 | 0.8876 | 0.7248 | 0.6961 | 0.8857 | 3 |
| NR LF-IQA | SATV-BLiF[28] | 0.7918 | 0.8685 | 0.7566 | 0.8525 | 0.9282 | 0.9190 | 0.7722 | 0.6498 | 0.8617 | 2 |
| NR LF-IQA | Proposed | 0.9417 | 0.8955 | 0.8472 | 0.8742 | 0.9165 | 0.9153 | 0.7749 | 0.8443 | 0.9294 | 7 |

Table 3. Ablation experiments of different functional modules on Win5-LID and NBU-LF1.0 datasets

| Modules | Win5-LID PLCC | Win5-LID SROCC | Win5-LID RMSE | NBU-LF1.0 PLCC | NBU-LF1.0 SROCC | NBU-LF1.0 RMSE |
|---|---|---|---|---|---|---|
| SF | 0.8338 | 0.8179 | 0.5217 | 0.8853 | 0.8654 | 0.4104 |
| AF | 0.8195 | 0.7992 | 0.5322 | 0.8709 | 0.8566 | 0.4208 |
| EF | 0.7950 | 0.7992 | 0.5652 | 0.8637 | 0.8478 | 0.4176 |
| SF+AF | 0.8513 | 0.8285 | 0.5057 | 0.9057 | 0.8857 | 0.3845 |
| SF+AF+EF | 0.8653 | 0.8451 | 0.4863 | 0.9108 | 0.8937 | 0.3658 |

Table 4. Comparison of running time for different NR LF-IQA methods

Table 5. Results of training the model on the Win5-LID dataset and testing it on the NBU-LF1.0 and SHU datasets

| Methods | NBU-LF1.0 (NN) PLCC | NBU-LF1.0 (NN) SROCC | SHU (JPEG2000) PLCC | SHU (JPEG2000) SROCC |
|---|---|---|---|---|
| 4D-DCT-LFIQA | 0.7753 | 0.7040 | 0.7824 | 0.7967 |
| DeeBLiF | 0.8253 | 0.7265 | 0.7609 | 0.7252 |
| Proposed | 0.9082 | 0.8610 | 0.8821 | 0.8717 |
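The tables above report three criteria: PLCC (Pearson linear correlation, higher is better), SROCC (Spearman rank-order correlation, higher is better), and RMSE (lower is better). As a reminder of what each one measures, here is a minimal pure-Python sketch. The `mos` and `pred` values are made-up illustrative numbers, not data from the paper. Evaluation protocols in this field often fit a nonlinear (for example logistic) mapping between predictions and subjective scores before computing PLCC and RMSE; that step is omitted here, and this section does not state whether or how the paper applies it.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient (PLCC)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman rank-order correlation (SROCC): Pearson on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def rmse(pred, target):
    """Root-mean-square error between predictions and subjective scores."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred))


# Hypothetical subjective scores (MOS) and model predictions.
mos = [1.0, 2.0, 3.0, 4.0, 5.0]
pred = [1.2, 1.9, 3.5, 3.4, 4.8]

plcc = pearson(pred, mos)
srocc = spearman(pred, mos)
err = rmse(pred, mos)
print(f"PLCC={plcc:.4f}  SROCC={srocc:.4f}  RMSE={err:.4f}")
```

SROCC depends only on the ordering of the predictions, so it is unaffected by any monotonic rescaling of the scores, whereas PLCC and RMSE are sensitive to how the predictions are scaled.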

References

[1] Zuo C, Chen Q. Computational optical imaging: an overview[J]. Infrared Laser Eng, 2022, 51(2): 20220110. doi: 10.3788/IRLA20220110

[2] Xiang J J, Jiang G Y, Yu M, et al. No-reference light field image quality assessment using four-dimensional sparse transform[J]. IEEE Trans Multimedia, 2023, 25: 457–472. doi: 10.1109/TMM.2021.3127398

[3] Lv T Q, Wu Y C, Zhao X L. Light field image super-resolution network based on angular difference enhancement[J]. Opto-Electron Eng, 2023, 50(2): 220185. doi: 10.12086/oee.2023.220185

[4] Yu M, Liu C. Single exposure light field imaging based all-in-focus image reconstruction technology[J]. J Appl Opt, 2021, 42(1): 71–78. doi: 10.5768/JAO202142.0102004

[5] Tian Y, Zeng H Q, Xing L, et al. A multi-order derivative feature-based quality assessment model for light field image[J]. J Vis Commun Image Represent, 2018, 57: 212–217. doi: 10.1016/j.jvcir.2018.11.005

[6] Huang H L, Zeng H Q, Hou J H, et al. A spatial and geometry feature-based quality assessment model for the light field images[J]. IEEE Trans Image Process, 2022, 31: 3765–3779. doi: 10.1109/TIP.2022.3175619

[7] Min X K, Zhou J T, Zhai G T, et al. A metric for light field reconstruction, compression, and display quality evaluation[J]. IEEE Trans Image Process, 2020, 29: 3790–3804. doi: 10.1109/TIP.2020.2966081

[8] Shi L K, Zhou W, Chen Z B, et al. No-reference light field image quality assessment based on spatial-angular measurement[J]. IEEE Trans Circuits Syst Video Technol, 2020, 30(11): 4114–4128. doi: 10.1109/TCSVT.2019.2955011

[9] Luo Z Y, Zhou W, Shi L K, et al. No-reference light field image quality assessment based on micro-lens image[C]//2019 Picture Coding Symposium (PCS), 2019: 1–5. doi: 10.1109/PCS48520.2019.8954551

[10] Xiang J J, Yu M, Chen H, et al. VBLFI: visualization-based blind light field image quality assessment[C]//2020 IEEE International Conference on Multimedia and Expo (ICME), 2020: 1–6. doi: 10.1109/ICME46284.2020.9102963

[11] Lamichhane K, Battisti F, Paudyal P, et al. Exploiting saliency in quality assessment for light field images[C]//2021 Picture Coding Symposium (PCS), 2021: 1–5. doi: 10.1109/PCS50896.2021.9477451

[12] Zhou W, Shi L K, Chen Z B, et al. Tensor oriented no-reference light field image quality assessment[J]. IEEE Trans Image Process, 2020, 29: 4070–4084. doi: 10.1109/TIP.2020.2969777

[13] Xiang J J, Yu M, Jiang G Y, et al. Pseudo video and refocused images-based blind light field image quality assessment[J]. IEEE Trans Circuits Syst Video Technol, 2021, 31(7): 2575–2590. doi: 10.1109/TCSVT.2020.3030049

[14] Alamgeer S, Farias M C Q. No-Reference light field image quality assessment method based on a long-short term memory neural network[C]//2022 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), 2022: 1–6. doi: 10.1109/ICMEW56448.2022.9859419

[15] Qu Q, Chen X M, Chung V, et al. Light field image quality assessment with auxiliary learning based on depthwise and anglewise separable convolutions[J]. IEEE Trans Broadcast, 2021, 67(4): 837–850. doi: 10.1109/TBC.2021.3099737

[16] Qu Q, Chen X M, Chung Y Y, et al. LFACon: Introducing anglewise attention to no-reference quality assessment in light field space[J]. IEEE Trans Vis Comput Graph, 2023, 29(5): 2239–2248. doi: 10.1109/TVCG.2023.3247069

[17] Zhao P, Chen X M, Chung V, et al. DeLFIQE: a low-complexity deep learning-based light field image quality evaluator[J]. IEEE Trans Instrum Meas, 2021, 70: 5014811. doi: 10.1109/TIM.2021.3106113

[18] Zhang Z Y, Tian S S, Zou W B, et al. Deeblif: deep blind light field image quality assessment by extracting angular and spatial information[C]//2022 IEEE International Conference on Image Processing (ICIP), 2022: 2266–2270. doi: 10.1109/ICIP46576.2022.9897951

[19] He K M, Zhang X Y, Ren S Q, et al. Deep residual learning for image recognition[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 2016: 770–778. doi: 10.1109/CVPR.2016.90

[20] Lu S Y, Ding Y M, Liu M Z, et al. Multiscale feature extraction and fusion of image and text in VQA[J]. Int J Comput Intell Syst, 2023, 16(1): 54. doi: 10.1007/s44196-023-00233-6

[21] Shi L K, Zhao S Y, Zhou W, et al. Perceptual evaluation of light field image[C]//2018 25th IEEE International Conference on Image Processing (ICIP), 2018: 41–45. doi: 10.1109/ICIP.2018.8451077

[22] Huang Z J, Yu M, Jiang G Y, et al. Reconstruction distortion oriented light field image dataset for visual communication[C]//2019 International Symposium on Networks, Computers and Communications (ISNCC), 2019: 1–5. doi: 10.1109/ISNCC.2019.8909170

[23] Shan L, An P, Meng C L, et al. A no-reference image quality assessment metric by multiple characteristics of light field images[J]. IEEE Access, 2019, 7: 127217–127229. doi: 10.1109/ACCESS.2019.2940093

[24] Mittal A, Moorthy A K, Bovik A C. No-reference image quality assessment in the spatial domain[J]. IEEE Trans Image Process, 2012, 21(12): 4695–4708. doi: 10.1109/TIP.2012.2214050

[25] Li Q H, Lin W S, Fang Y M. No-reference quality assessment for multiply-distorted images in gradient domain[J]. IEEE Signal Process Lett, 2016, 23(4): 541–545. doi: 10.1109/LSP.2016.2537321

[26] Meng C L, An P, Huang X P, et al. Full reference light field image quality evaluation based on angular-spatial characteristic[J]. IEEE Signal Process Lett, 2020, 27: 525–529. doi: 10.1109/LSP.2020.2982060

[27] Shi L K, Zhao S Y, Chen Z B. Belif: blind quality evaluator of light field image with tensor structure variation index[C]//2019 IEEE International Conference on Image Processing (ICIP), 2019: 3781–3785. doi: 10.1109/ICIP.2019.8803559

[28] Zhang Z Y, Tian S S, Zou W B, et al. Blind quality assessment of light field image based on spatio-angular textural variation[C]//2023 IEEE International Conference on Image Processing (ICIP), 2023: 2385–2389. doi: 10.1109/ICIP49359.2023.10222216

[29] Rerabek M, Ebrahimi T. New light field image dataset[C]//8th International Conference on Quality of Multimedia Experience (QoMEX), 2016: 1–2.

[30] VQEG. Final Report From the Video Quality Experts Group on the Validation of Objective Models of Video Quality Assessment, 2000 [EB/OL]. http://www.vqeg.org.
