Retinopathy classification algorithm based on attention mechanism and multi-feature fusion



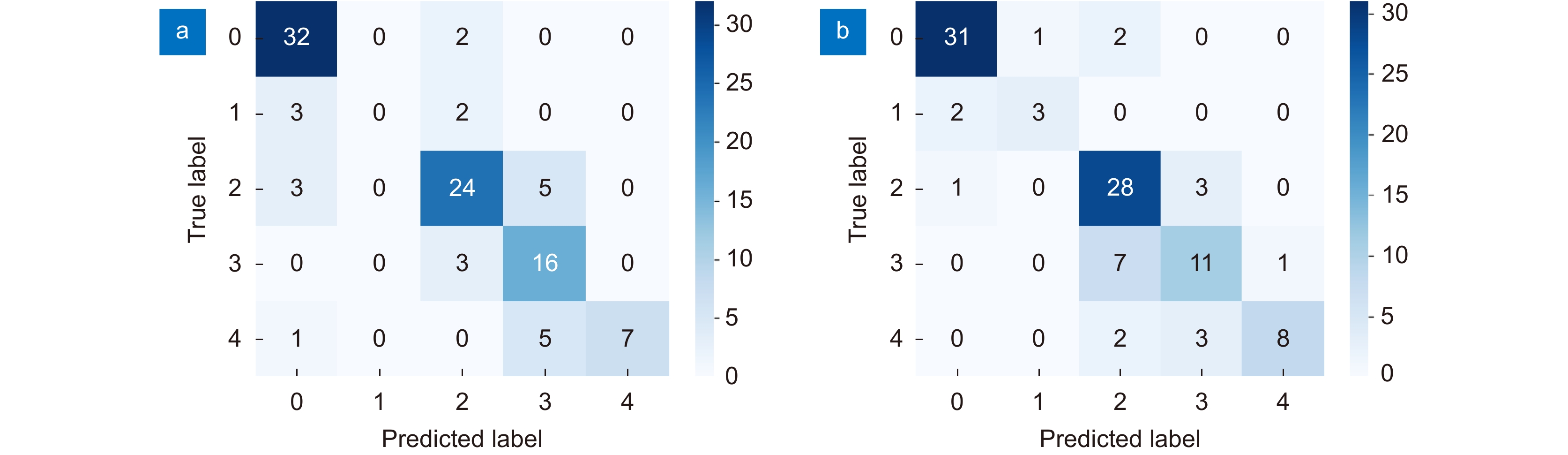

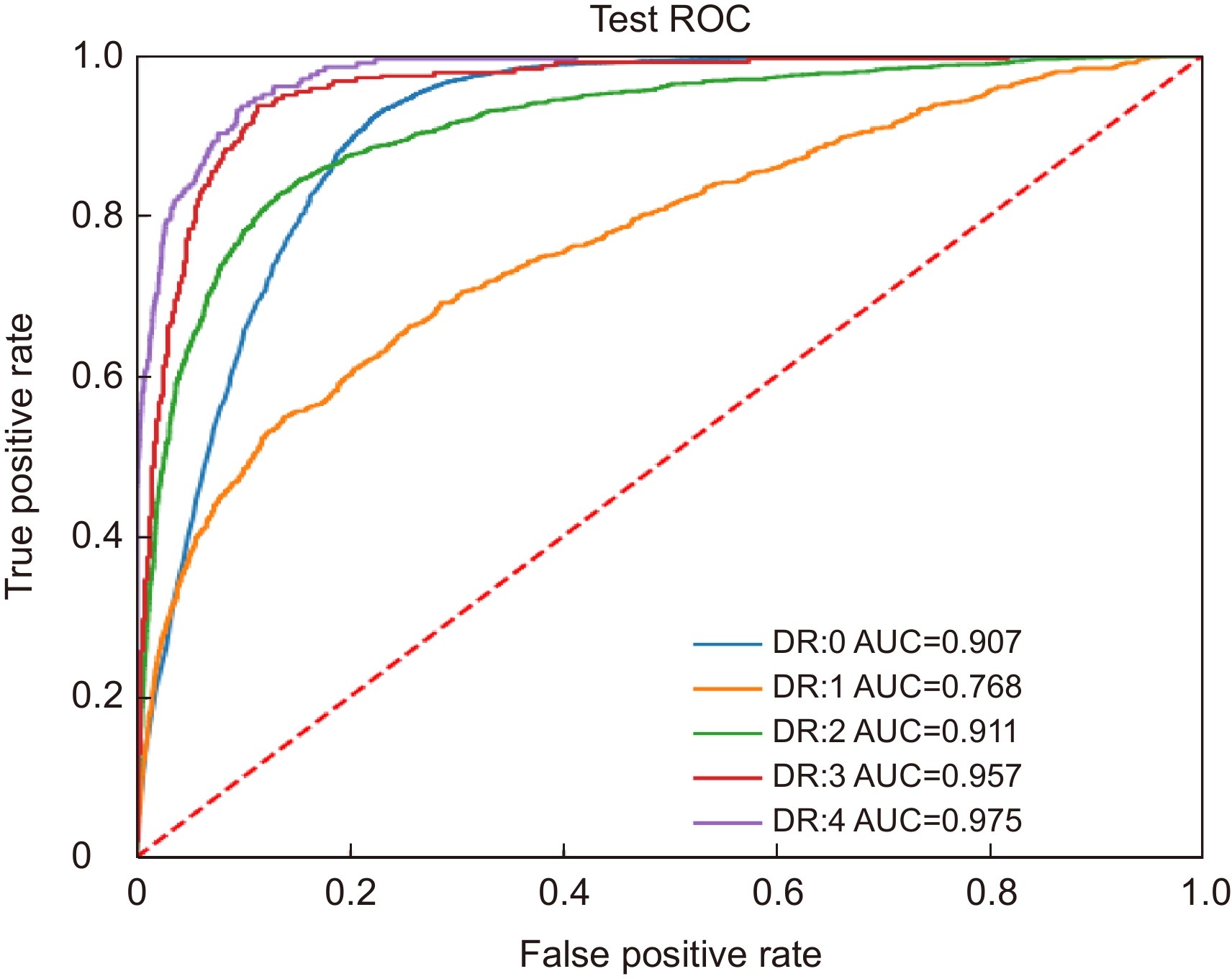

Abstract: Diabetic retinopathy (DR) is a common complication of diabetes mellitus that causes vision-affecting abnormalities of the retina. To address the difficulty of identifying lesion areas in retinal fundus images and the low efficiency of grading, this paper proposes an algorithm based on an attention mechanism and multi-feature fusion to diagnose and grade DR. First, morphological preprocessing such as Gaussian filtering is applied to the input image to improve the feature contrast of the fundus image. Second, a ResNeSt50 residual network serves as the backbone of the model, and a multi-scale feature enhancement module is introduced to enhance features in the lesion areas of retinopathy images and improve grading accuracy. Third, a graph feature fusion module fuses the enhanced local features output by the backbone. Finally, a weighted loss function combining center loss and focal loss further improves classification. On the Indian Diabetic Retinopathy Image Dataset (IDRID), the sensitivity and specificity were 95.65% and 91.17%, respectively, and the quadratic weighted kappa (QWK) was 90.38%. On the Kaggle competition dataset, the accuracy was 84.41% and the area under the receiver operating characteristic curve (AUC) was 90.36%. Experimental results show that the proposed algorithm has practical value for DR grading.
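The abstract names Gaussian filtering as the contrast-enhancement step but does not give the exact recipe. The sketch below assumes the widely used background-subtraction scheme for fundus photographs, out = α(I − G_σ * I) + 128, where G_σ * I is the Gaussian-blurred image. The function names and the values of `sigma` and `alpha` are illustrative assumptions, not the authors' implementation.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(round(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(channel: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of a 2-D array; edge pixels are replicated."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(channel, r, mode="edge")
    # Filter every row, then every column; 'valid' mode trims the padding back off.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def enhance_contrast(img: np.ndarray, sigma: float = 10.0, alpha: float = 4.0) -> np.ndarray:
    """Subtract the Gaussian-blurred background to boost local lesion contrast.

    img: H x W x C uint8 fundus image; returns a uint8 image of the same shape.
    sigma and alpha are assumed values, not parameters reported in the paper.
    """
    img_f = img.astype(np.float64)
    background = np.stack(
        [gaussian_blur(img_f[..., c], sigma) for c in range(img_f.shape[-1])], axis=-1
    )
    out = alpha * (img_f - background) + 128.0  # flat regions map to mid-gray
    return np.clip(np.rint(out), 0, 255).astype(np.uint8)
```

Flat background regions map to mid-gray. Small bright or dark structures, such as exudates or microaneurysms, are pushed toward the extremes. This is the contrast effect the preprocessing step aims for.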


Keywords:

- retinopathy grading

- multi-scale features

- attention mechanism

- graph feature fusion


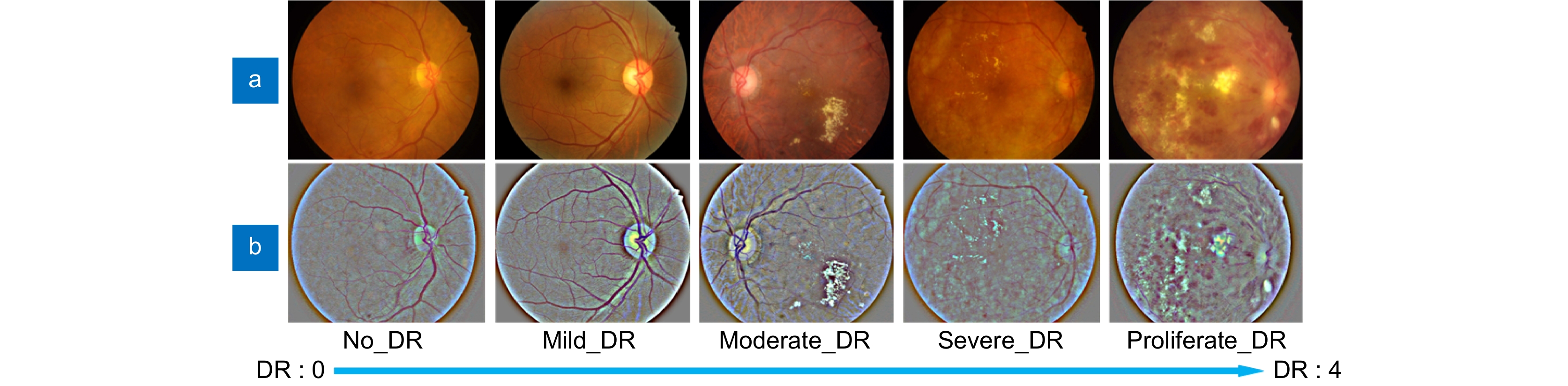

Overview: Diabetic retinopathy (DR) is a common complication of diabetes that impairs retinal visual function; if it is not detected and treated early, it can lead to blindness. In recent years, intelligent DR grading has been an active research topic in medical image processing. With the rapid development of deep learning, deep neural networks have been widely applied to DR image analysis. However, current intelligent DR grading still faces two limitations: ① in DR images, small lesions such as microaneurysms, hard exudates and hemorrhages differ little from the surrounding retinal background, so their features are insufficiently extracted; ② the class distribution of samples in public medical datasets is imbalanced.

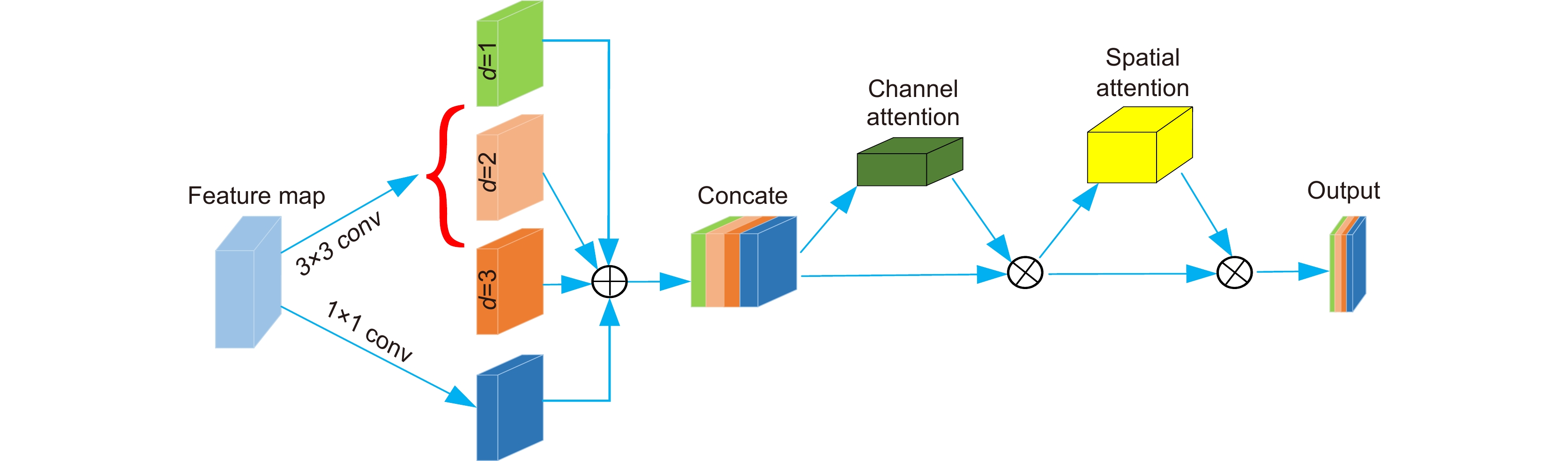

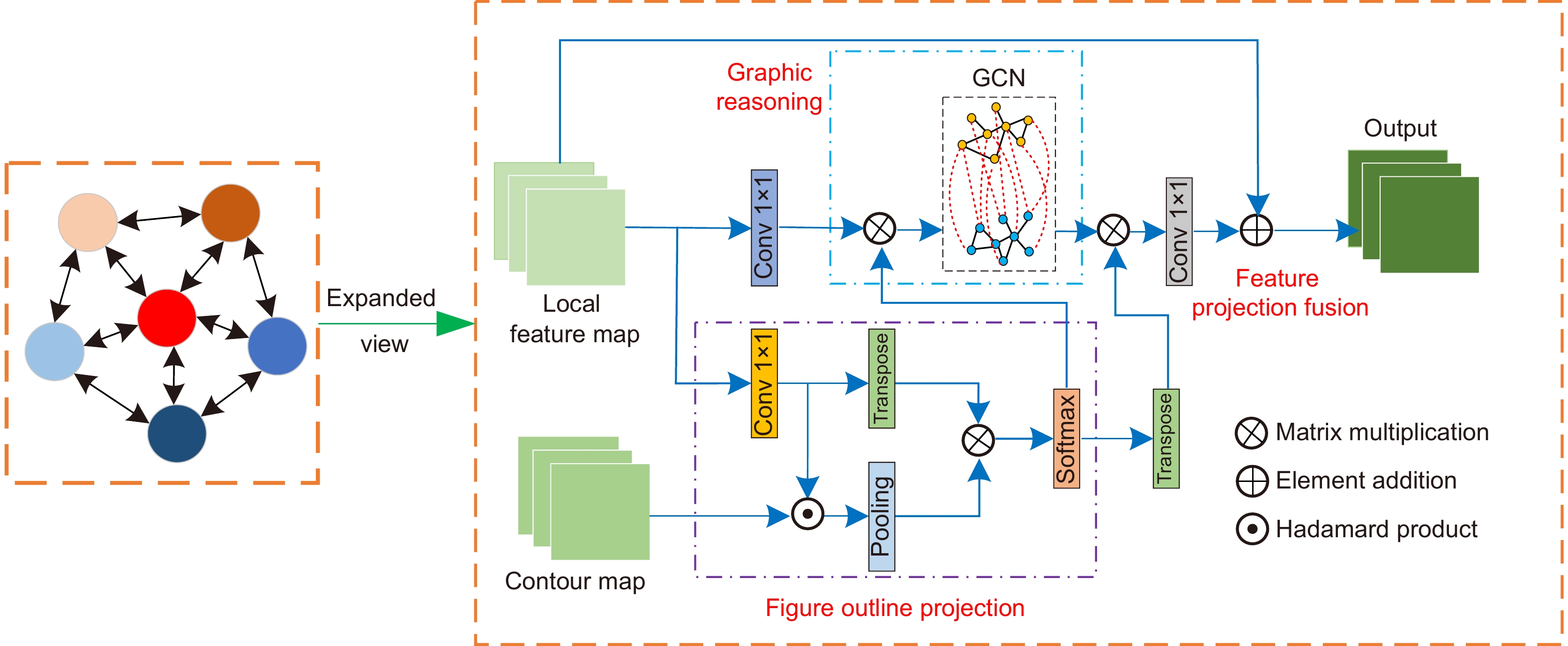

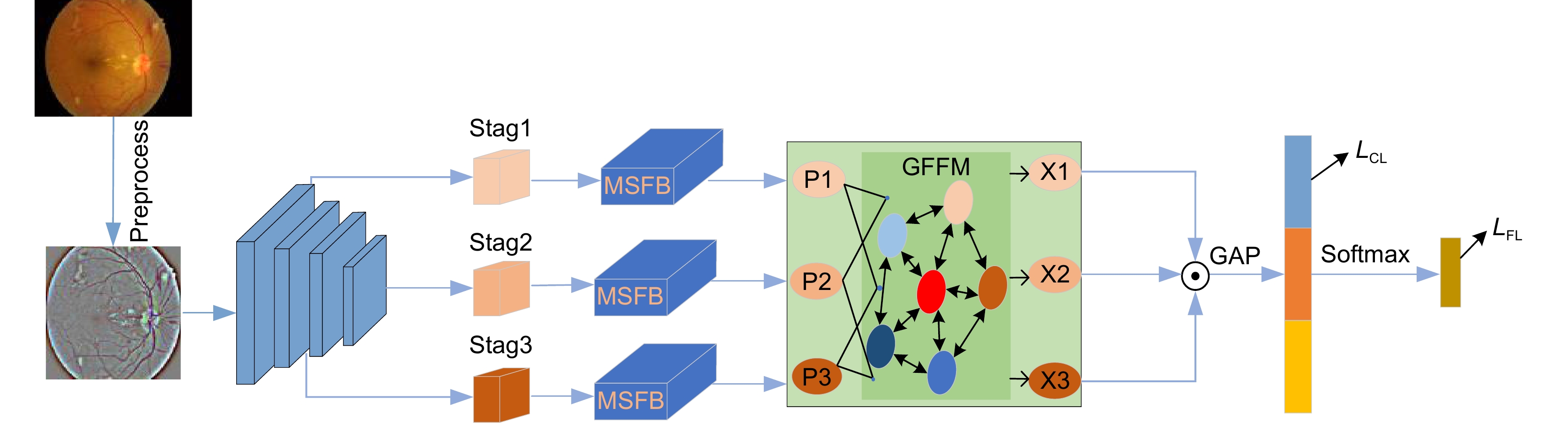

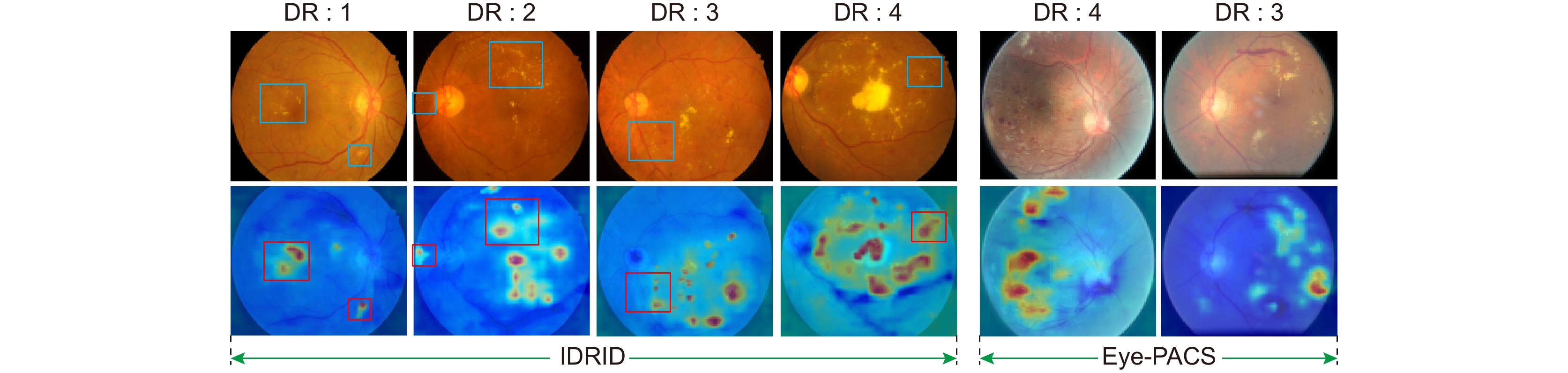

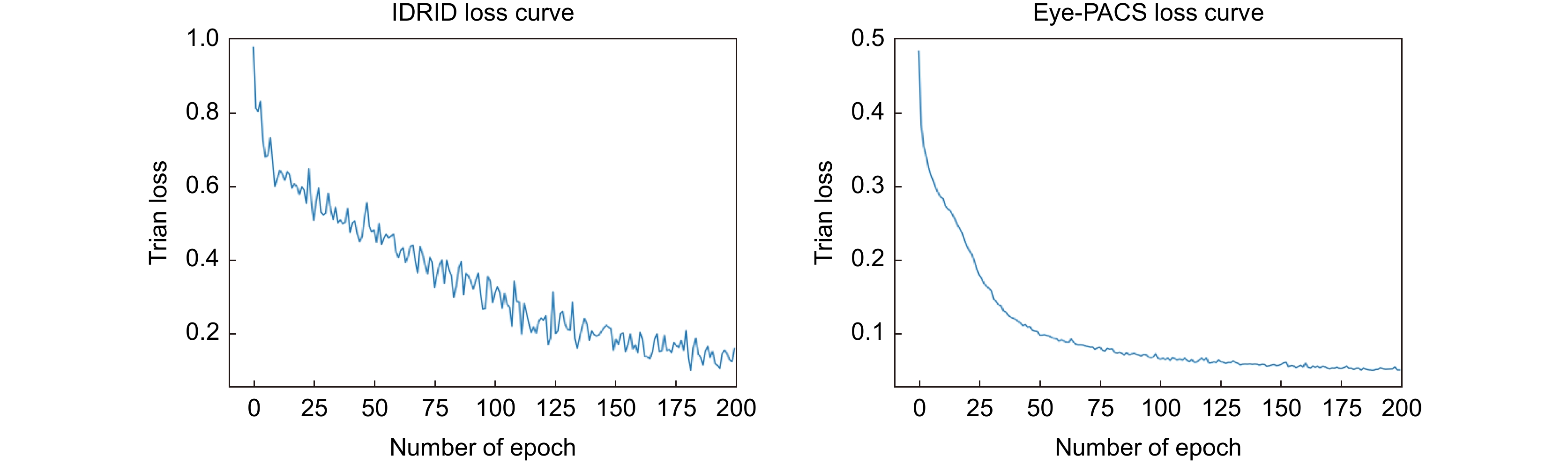

To address the difficulty of identifying lesion areas in retinal fundus images and the low efficiency of grading, this paper proposes an algorithm based on an attention mechanism and multi-feature fusion to diagnose and grade DR. The algorithm consists mainly of a ResNeSt backbone network, a multi-scale feature enhancement module (MSFB) and a graph feature fusion module (GFFM). It also uses a combined weighted loss function to alleviate both the imbalanced sample distribution and the small differences between classes. First, the MSFB enhances features in the lesion areas of retinopathy images, which improves classification accuracy and overall model performance. Next, the GFFM fuses the enhanced local features output by the backbone. Finally, a weighted loss function combining center loss and focal loss further improves classification. Although the method performs well on both datasets, it has shortcomings. For example, the model has a relatively large number of parameters, which makes the network more complex and increases training and testing time. The average time per epoch is 46 s on the IDRID dataset and 48 min on the Eye-PACS dataset.
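The combined loss described above pairs focal loss (Lin et al.) with center loss. Focal loss down-weights easy, majority-class samples. Center loss pulls features of the same grade toward a shared class center. The paper's exact weighting is not given here, so the sketch assumes a simple sum L = L_focal + λ·L_center. The values of `lam`, `gamma` and `alpha` are assumptions. This is only a NumPy forward pass: in training, one would use a framework's autograd and treat the class `centers` as learnable.

```python
import numpy as np


def softmax(logits: np.ndarray) -> np.ndarray:
    """Row-wise softmax with max-subtraction for numerical stability."""
    z = logits - logits.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def focal_loss(logits, labels, gamma=2.0, alpha=None):
    """Multi-class focal loss -alpha_t * (1 - p_t)^gamma * log(p_t), batch mean.

    alpha: optional per-class weights of shape (K,); None means all ones.
    With gamma = 0 and alpha = None this reduces to cross-entropy.
    """
    labels = np.asarray(labels)
    p = softmax(np.asarray(logits, dtype=np.float64))
    p_t = p[np.arange(len(labels)), labels]  # probability assigned to the true grade
    w = np.ones_like(p_t) if alpha is None else np.asarray(alpha, dtype=np.float64)[labels]
    return float(np.mean(-w * (1.0 - p_t) ** gamma * np.log(p_t + 1e-12)))


def center_loss(features, labels, centers):
    """0.5 * mean squared Euclidean distance between each feature and its class center."""
    diff = np.asarray(features) - np.asarray(centers)[np.asarray(labels)]
    return float(0.5 * np.mean(np.sum(diff ** 2, axis=1)))


def combined_loss(logits, features, labels, centers, lam=0.01, gamma=2.0, alpha=None):
    """Hypothetical weighting L = L_focal + lam * L_center (lam is an assumed value)."""
    return focal_loss(logits, labels, gamma, alpha) + lam * center_loss(features, labels, centers)
```

Setting `gamma=0` recovers plain cross-entropy, which makes the effect of the focusing term easy to check. Per-class `alpha` weights give a second, independent lever against class imbalance.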

On the IDRID dataset, the sensitivity and specificity were 95.65% and 91.17%, respectively, and the quadratic weighted kappa (QWK) was 90.38%. On the Kaggle competition dataset, the accuracy was 84.41% and the area under the receiver operating characteristic curve (AUC) was 90.36%. These results indicate that the proposed algorithm has practical value for DR grading. To address the shortcomings noted above, future work will focus on streamlining the network model while further improving its performance where possible.
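The reported grading metrics can be computed from predicted and true DR grades as sketched below. QWK is Cohen's kappa with quadratic disagreement weights: κ = 1 − Σ W_ij O_ij / Σ W_ij E_ij, where O is the confusion matrix, E is the outer product of its marginals divided by the sample count, and W_ij = (i − j)² / (K − 1)². The paper does not state how sensitivity and specificity are derived from the five grades. The hypothetical `threshold` parameter below therefore assumes a binary split at "grade ≥ threshold means diseased". AUC is omitted because it needs predicted scores rather than hard grades.

```python
import numpy as np


def quadratic_weighted_kappa(y_true, y_pred, num_classes: int) -> float:
    """Cohen's kappa with quadratic disagreement weights for ordinal grades 0..K-1."""
    y_true = np.asarray(y_true, dtype=int)
    y_pred = np.asarray(y_pred, dtype=int)
    observed = np.zeros((num_classes, num_classes))
    np.add.at(observed, (y_true, y_pred), 1)                  # confusion matrix O
    i, j = np.indices((num_classes, num_classes))
    weights = (i - j) ** 2 / (num_classes - 1) ** 2           # quadratic weights W
    # Expected matrix E under independence of true and predicted grades.
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0)) / observed.sum()
    return float(1.0 - (weights * observed).sum() / (weights * expected).sum())


def sensitivity_specificity(y_true, y_pred, threshold: int = 1):
    """Binary Se/Sp after treating grades >= threshold as 'diseased' (assumed rule)."""
    t = np.asarray(y_true) >= threshold
    p = np.asarray(y_pred) >= threshold
    tp, fn = np.sum(t & p), np.sum(t & ~p)
    tn, fp = np.sum(~t & ~p), np.sum(~t & p)
    return float(tp / (tp + fn)), float(tn / (tn + fp))
```

Quadratic weights penalize a prediction two grades off four times as heavily as one grade off. This is why QWK is preferred over plain accuracy for ordinal DR grading.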



Table 1. Performance of ablation experiments in the IDRID dataset

| Model | QWK/% | Acc/% | Se/% | Sp/% |
|---|---|---|---|---|
| M1 | 84.83 | 71.84 | 88.48 | 88.23 |
| M2 | 85.08 | 74.75 | 89.80 | 94.11 |
| M3 | 87.70 | 77.66 | 92.75 | 89.80 |
| M4 | 89.59 | 76.69 | 96.10 | 88.23 |
| AMMF | 90.38 | 78.64 | 95.65 | 91.17 |

Table 2. Performance of different models in the IDRID dataset


Table 3. Performance of different models in the Eye-PACS dataset


