
Abstract:

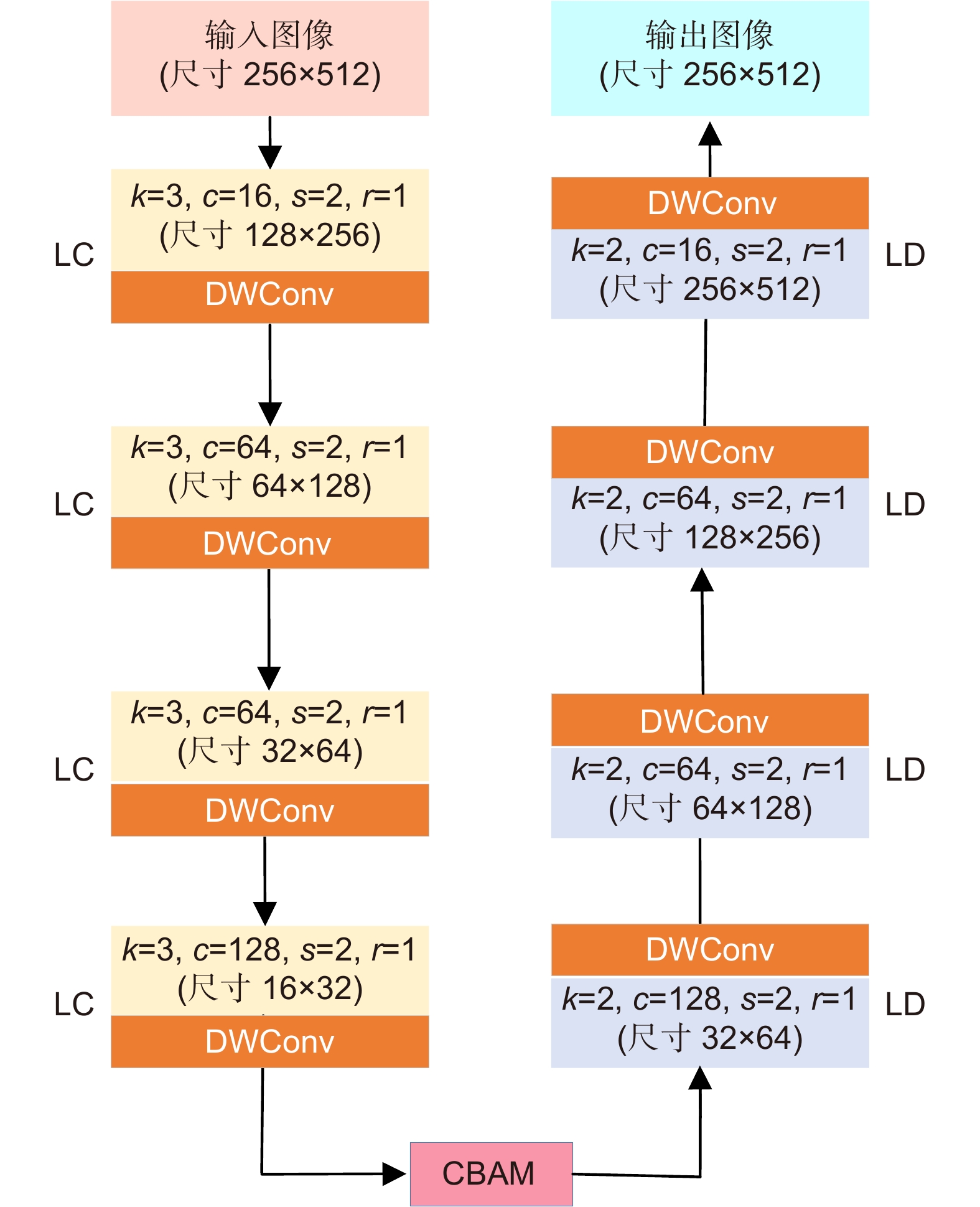

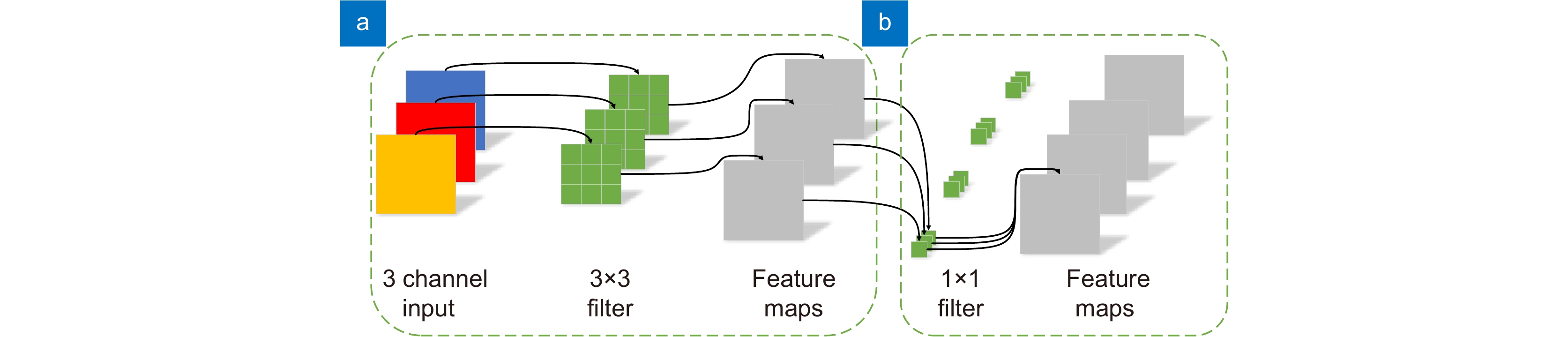

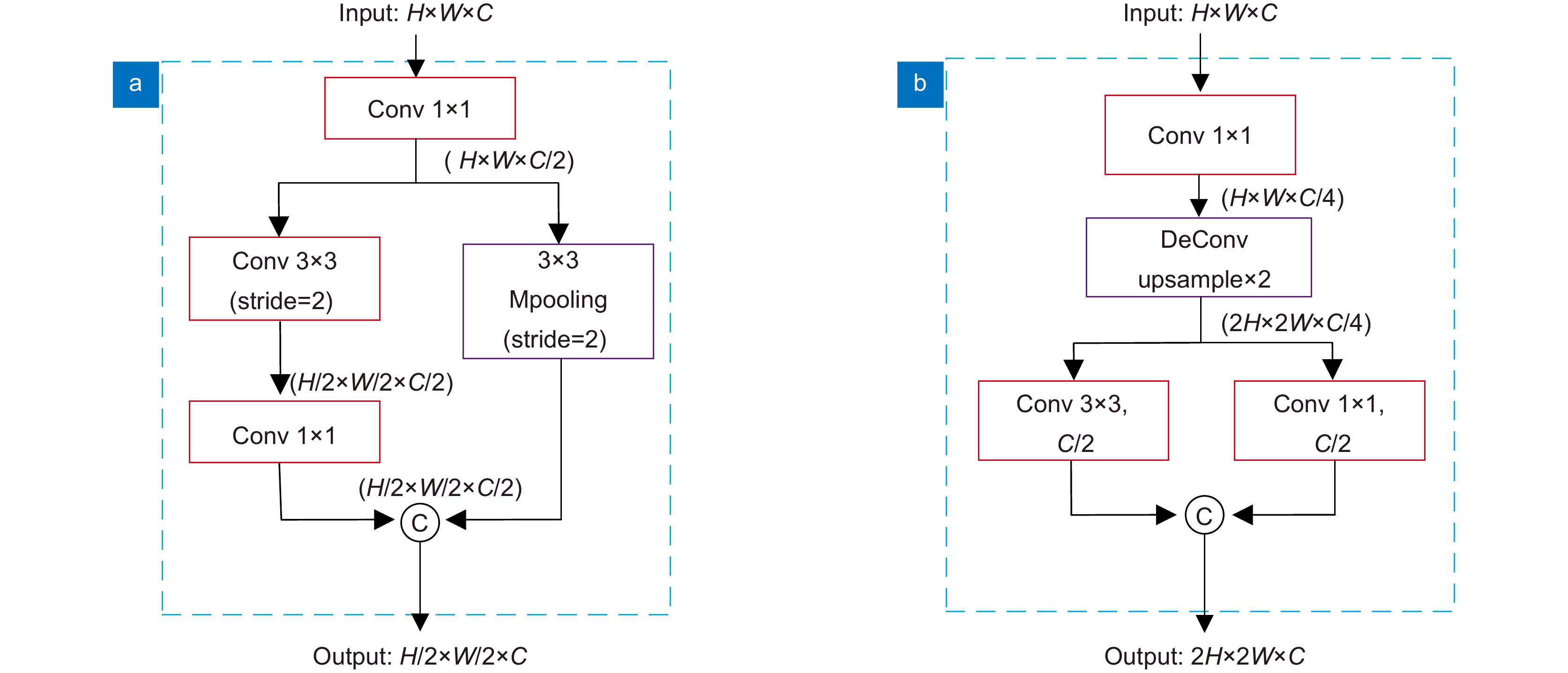

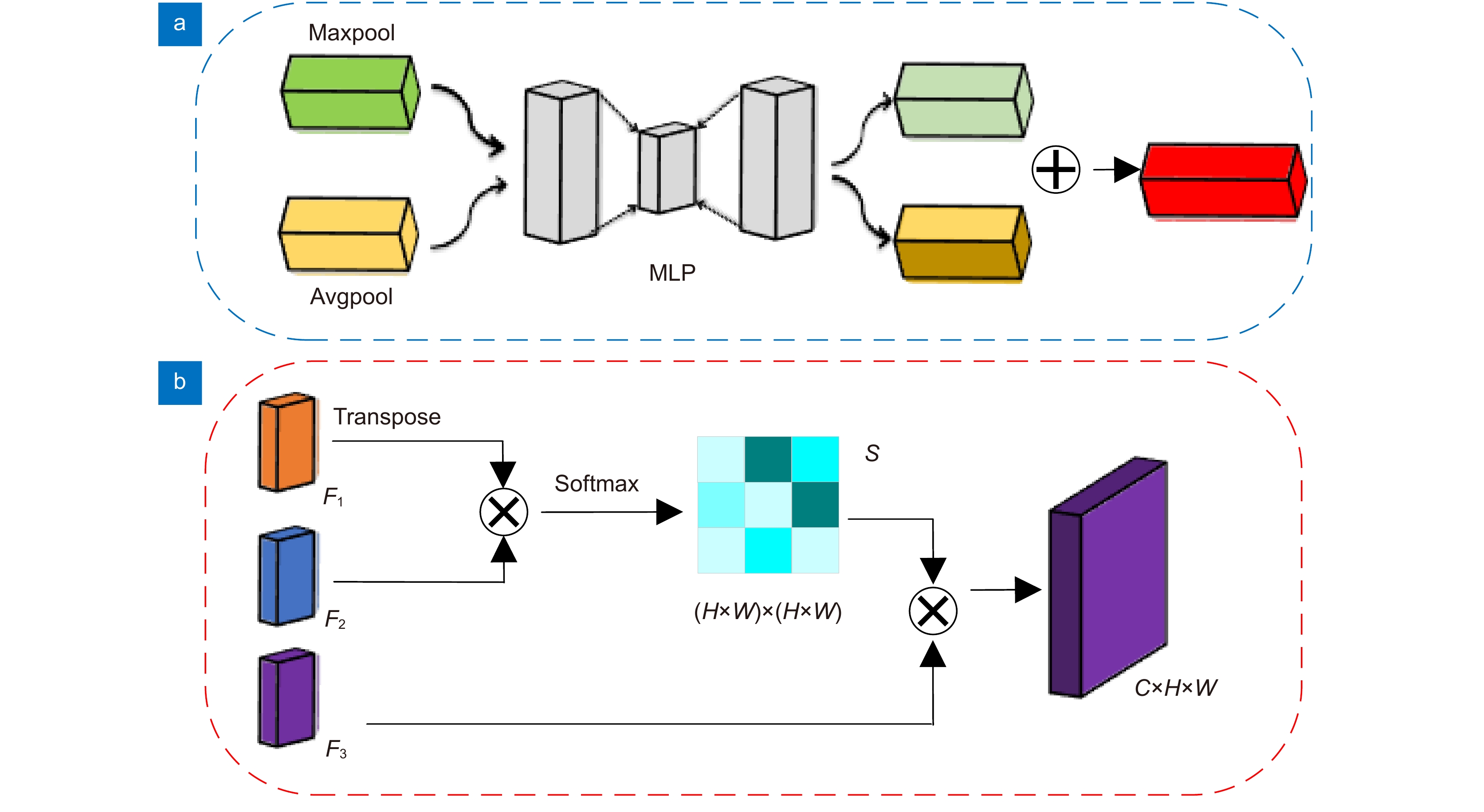

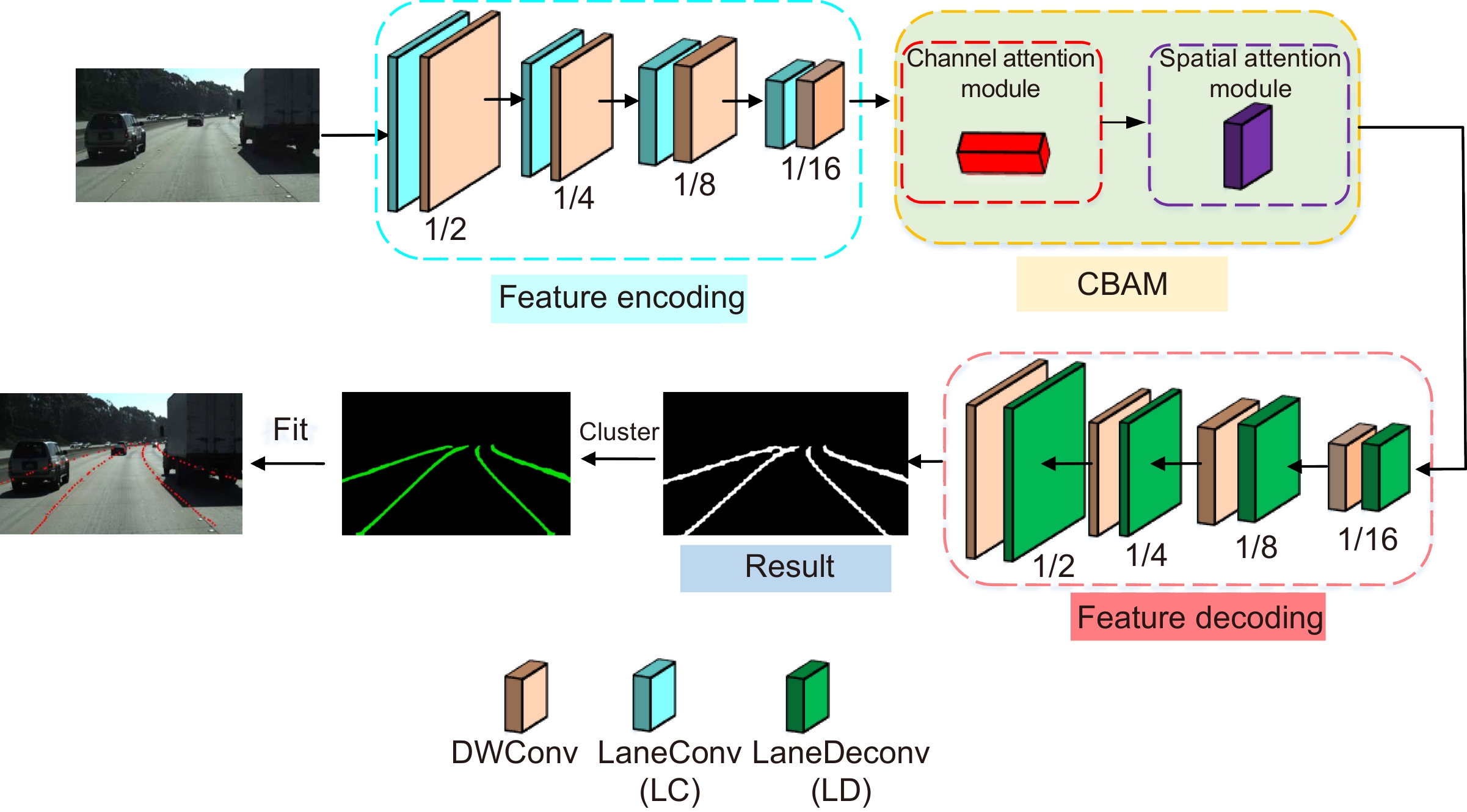

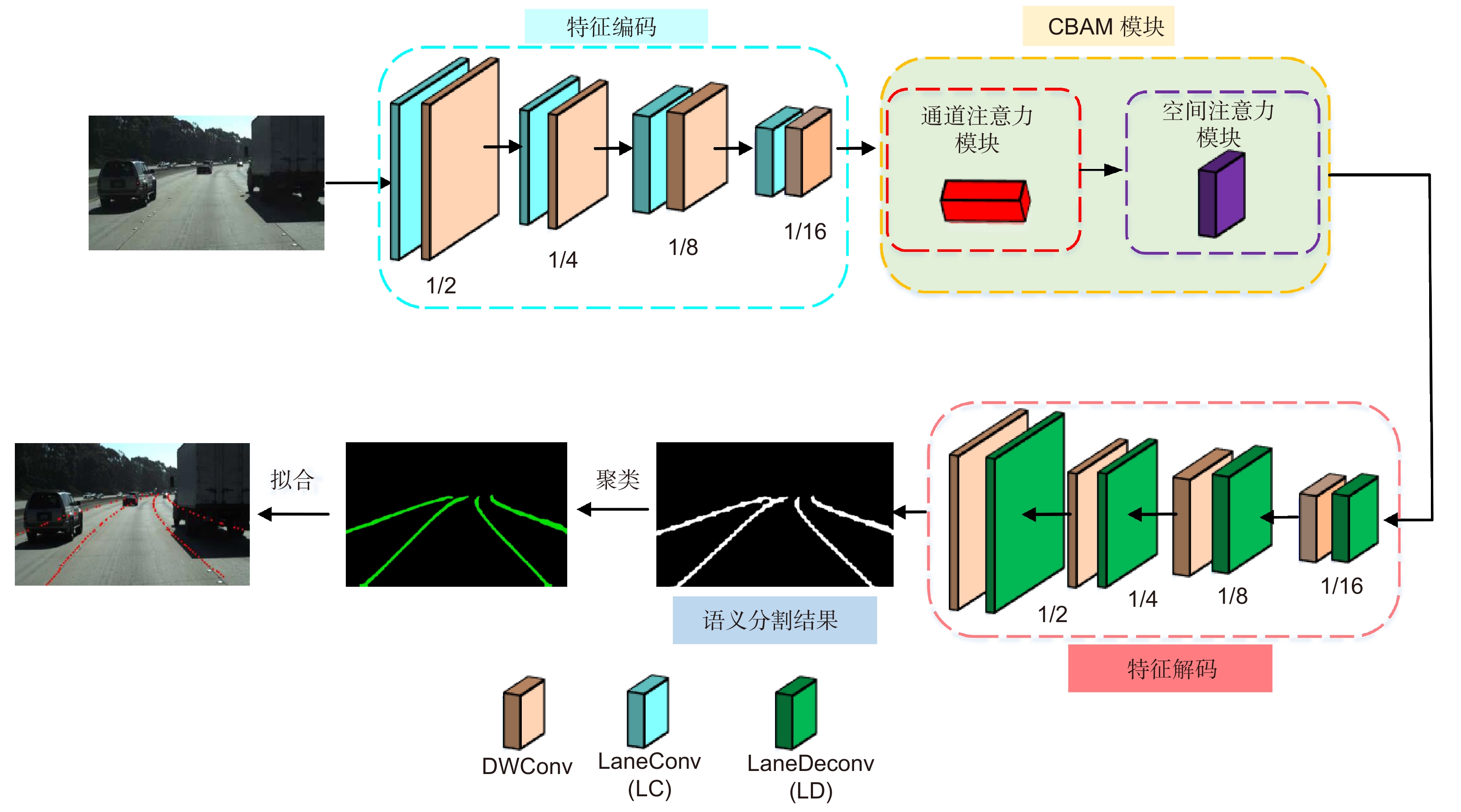

Lane line recognition is an important task in environment perception for autonomous driving. In recent years, deep learning methods based on convolutional neural networks have achieved good results in object detection and scene segmentation. Drawing on the idea of semantic segmentation, this paper designs a lightweight lane segmentation network with an encoder-decoder structure. To address the large computational cost of convolutional neural networks, depthwise separable convolution replaces ordinary convolution to reduce the amount of convolution computation. In addition, more efficient convolution structures, LaneConv and LaneDeconv, are proposed to further improve computational efficiency. To obtain a better feature representation of lane lines, a dual attention module (CBAM) that connects channel attention and spatial attention in series is introduced in the encoding stage to improve lane segmentation accuracy. Extensive experiments on the TuSimple lane dataset show that the proposed method significantly increases lane segmentation speed and achieves good segmentation quality and robustness under various conditions. Compared with existing lane segmentation models, it achieves similar or better segmentation accuracy at a noticeably higher speed.
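
The saving from replacing an ordinary convolution with a depthwise separable one can be checked with simple arithmetic. The sketch below counts weights and multiply-accumulate operations for a k×k standard convolution and for its depthwise separable counterpart (a k×k depthwise convolution followed by a 1×1 pointwise convolution). It assumes stride 1, "same" padding and no bias, and the layer size in the usage example is hypothetical. With k = 3 and equal input and output channels C, the standard count reduces to 9C² weights and 9HWC² operations, matching the 3×3 Conv row of Table 1.

```python
def standard_conv_cost(k, c_in, c_out, h, w):
    """Weights and multiply-accumulates of a k x k standard convolution
    (stride 1, 'same' padding so the output is h x w, bias ignored)."""
    params = k * k * c_in * c_out
    macs = params * h * w
    return params, macs


def depthwise_separable_cost(k, c_in, c_out, h, w):
    """Depthwise k x k conv (one filter per input channel) followed by a
    1 x 1 pointwise conv that mixes channels (bias ignored)."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    params = depthwise + pointwise
    macs = params * h * w
    return params, macs


if __name__ == "__main__":
    # Hypothetical layer size, chosen only for illustration.
    k, c, h, w = 3, 64, 128, 256
    std_p, std_m = standard_conv_cost(k, c, c, h, w)
    dsc_p, dsc_m = depthwise_separable_cost(k, c, c, h, w)
    print(std_p, dsc_p)              # 36864 4672
    print(round(std_m / dsc_m, 2))   # 7.89
```

The separable cost is (1/C_out + 1/k²) times the standard cost, so with a 3×3 kernel the saving approaches 9× as the channel count grows.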


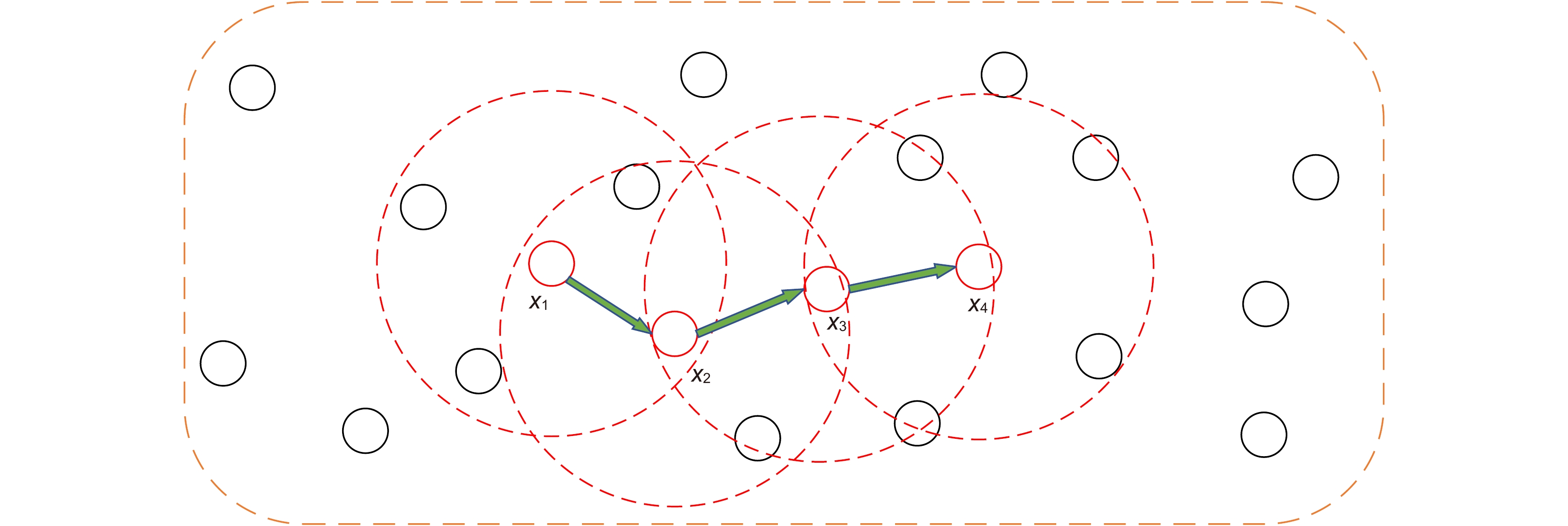

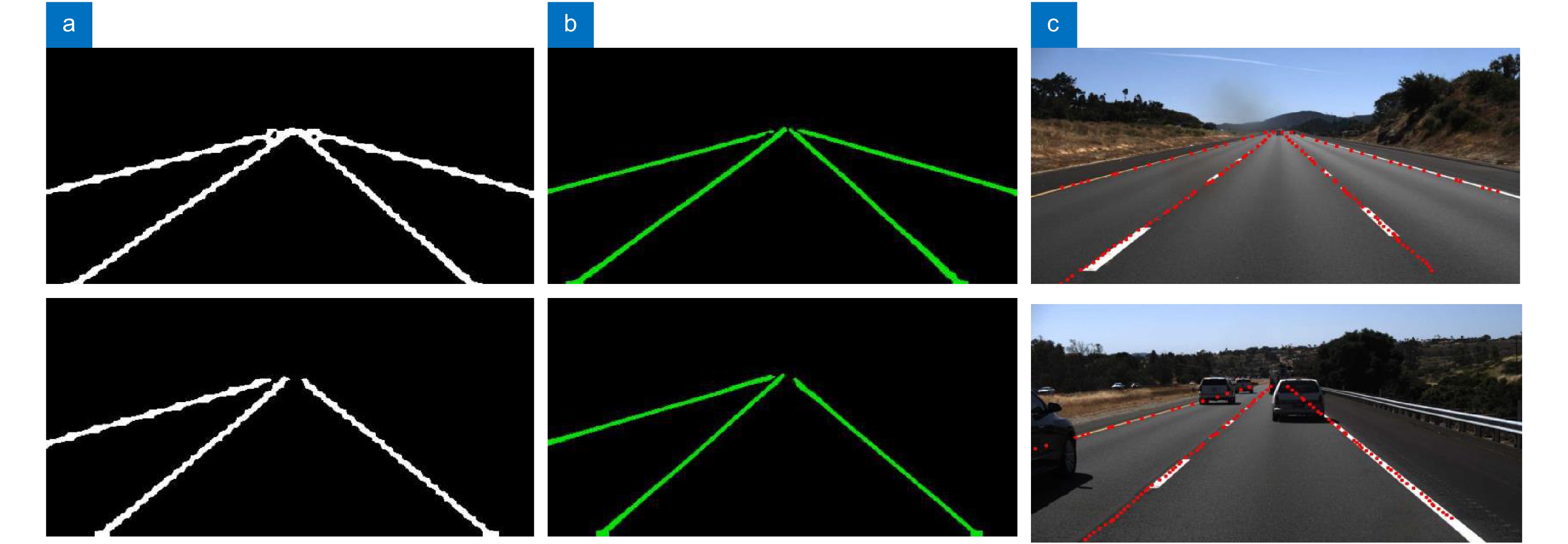

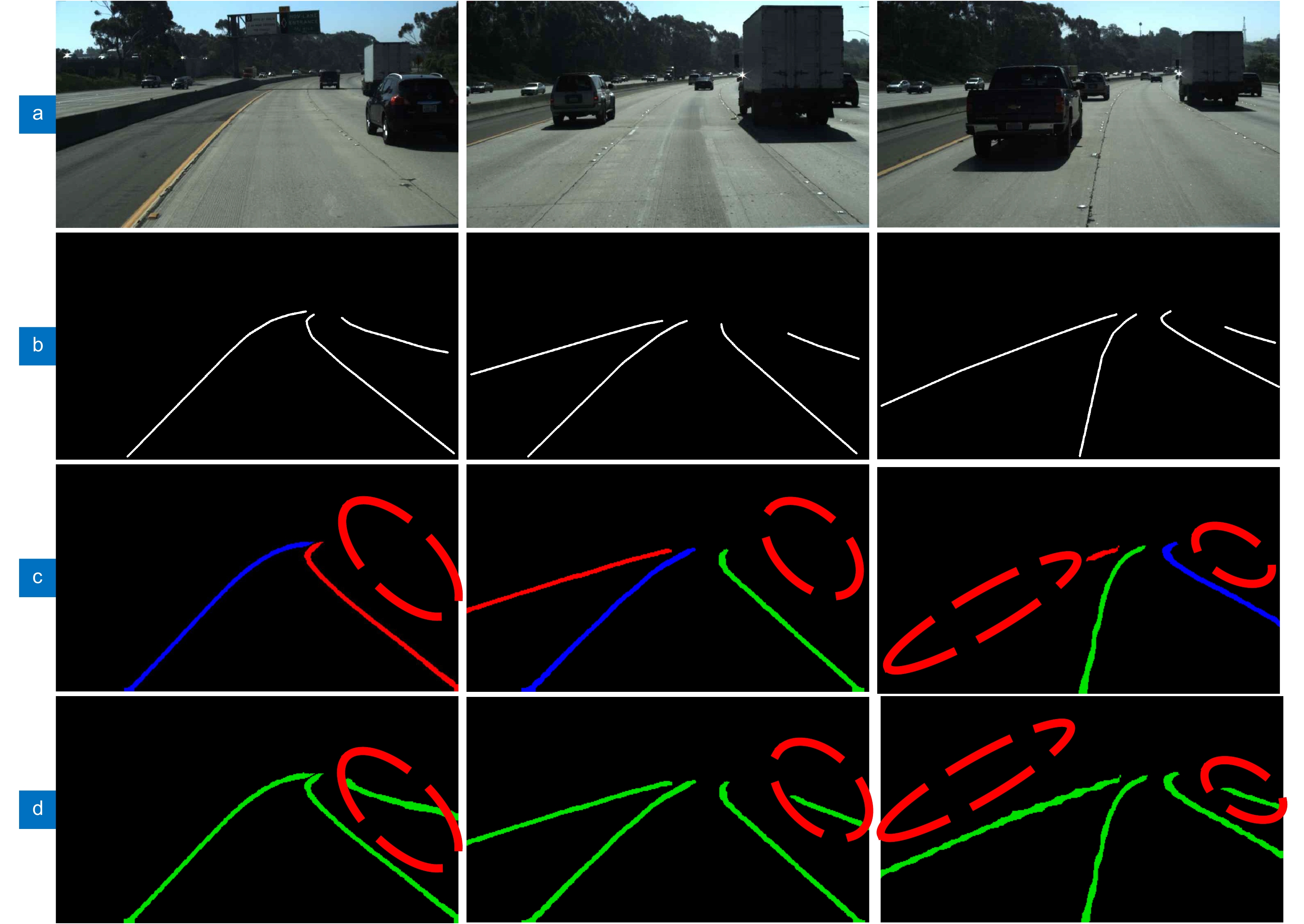

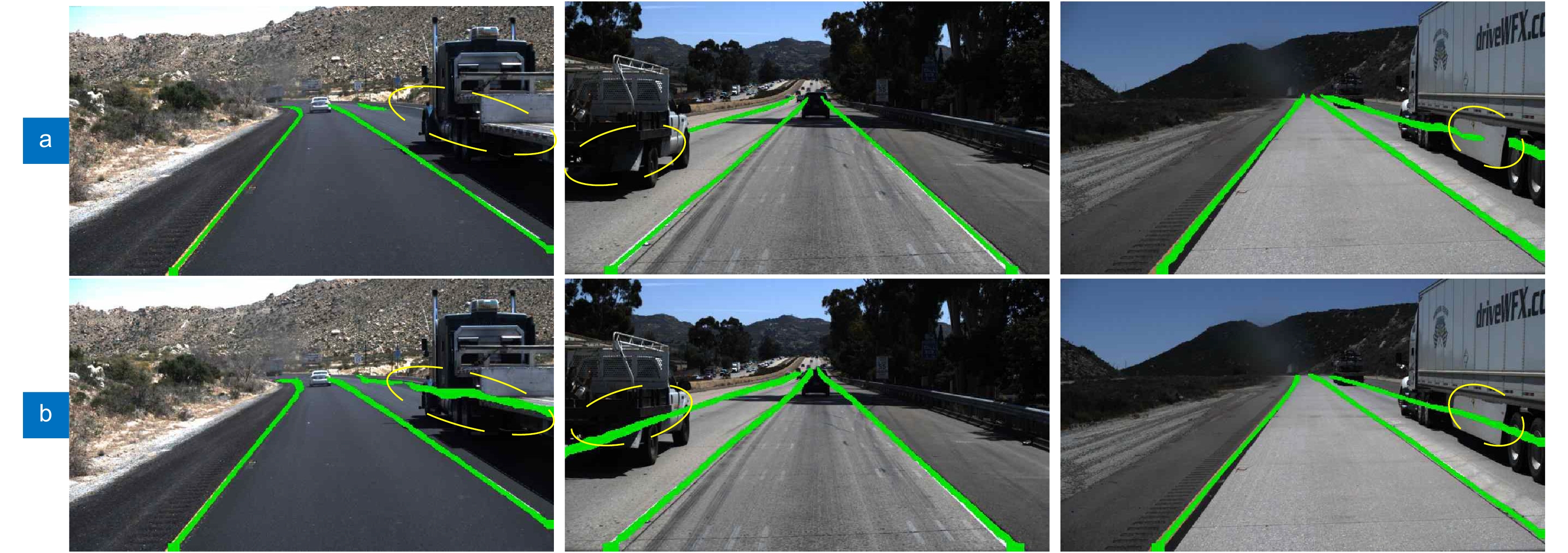

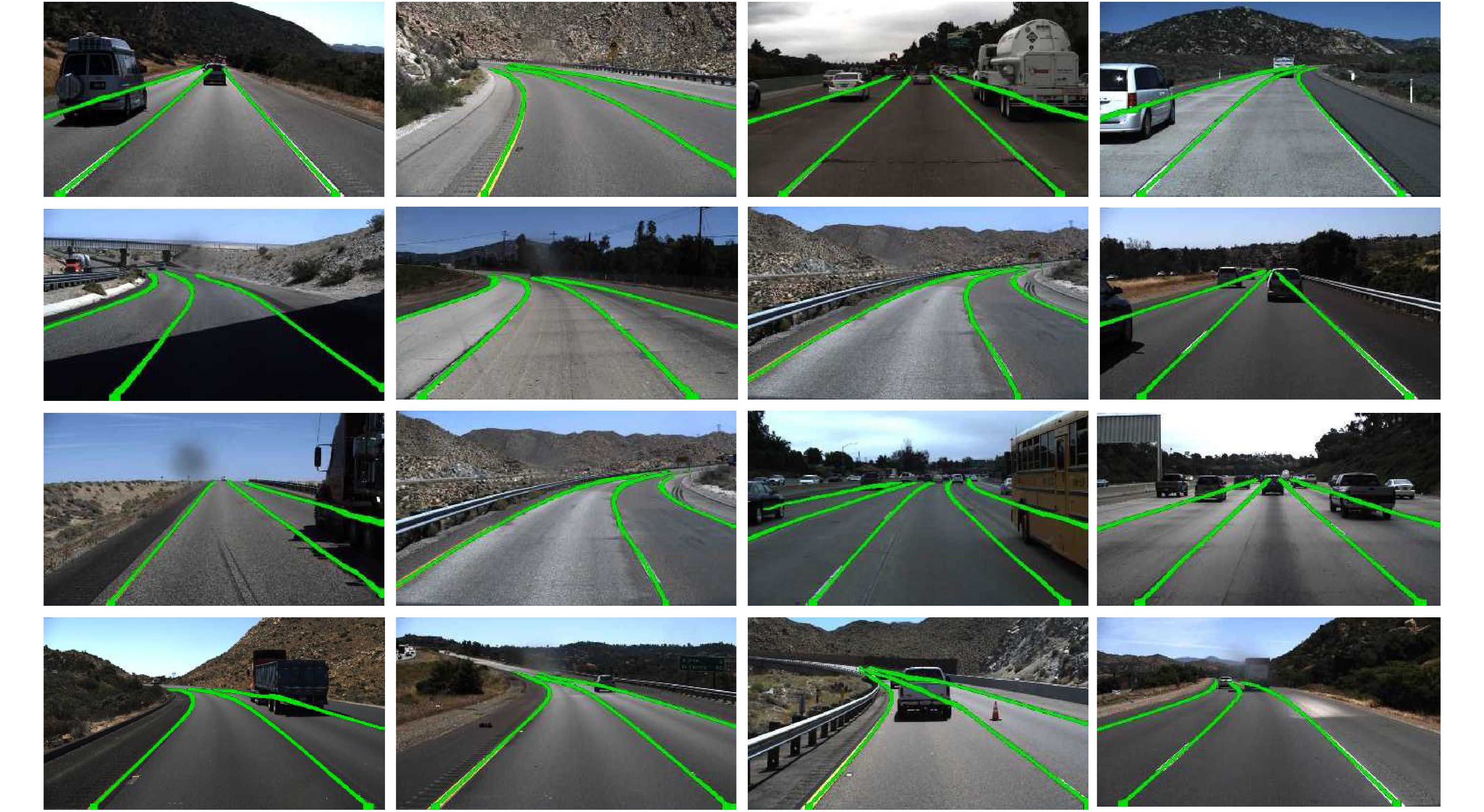

Overview: In recent years, the rapid development of neural networks has greatly improved the efficiency of lane detection. However, the large computational cost and high hardware requirements of convolutional neural networks have become a new obstacle to the development of lane detection. Deep-learning-based lane detection methods fall into two categories: detection-based methods and segmentation-based methods. Detection-based methods are fast and handle straight lanes well, but in complex environments with many curves they perform noticeably worse than segmentation-based methods. This paper adopts the segmentation-based approach, on the premise that lane detection performance can be improved by establishing global context correlations and by strengthening the effective expression of the feature channels that matter most for lanes. Attention mechanisms can significantly improve network performance: by imitating human visual processing, they strengthen attention to important information, allocate network resources more reasonably, and improve both the efficiency and the accuracy of detection. This paper therefore uses the CBAM module, in which channel attention and spatial attention are applied in series to obtain a better feature representation. Spatial attention learns the positional relationships between lane line pixels, while channel attention learns the importance of different feature channels. In addition, to address the heavy convolution computation and slow running speed of segmentation-based models, more efficient convolution structures are proposed: a new fast downsampling module, LaneConv, and a new fast upsampling module, LaneDeconv, together with depthwise separable convolution to further reduce computation.
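
The serial channel-then-spatial structure of CBAM can be sketched in plain Python. This follows the published CBAM design (a shared two-layer MLP over average- and max-pooled channel descriptors, then a 7×7 convolution over channel-wise average and max maps), but it is not the authors' implementation: biases are omitted, the weights are random placeholders, and the reduction ratio R and feature-map sizes in the usage example are hypothetical.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(m, v):
    """Plain matrix-vector product for nested lists."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def channel_attention(f, w0, w1):
    """Mc = sigmoid(MLP(AvgPool(F)) + MLP(MaxPool(F))) with a shared MLP (no biases)."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in f]
    mx = [max(map(max, ch)) for ch in f]

    def mlp(v):
        hidden = [max(0.0, x) for x in matvec(w0, v)]  # W0 then ReLU
        return matvec(w1, hidden)                       # W1

    return [sigmoid(a + b) for a, b in zip(mlp(avg), mlp(mx))]


def spatial_attention(f, kernel):
    """Ms = sigmoid(conv7x7([AvgPool_c(F); MaxPool_c(F)])) with zero padding."""
    c, h, w = len(f), len(f[0]), len(f[0][0])
    avg = [[sum(f[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(f[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)]
    pooled = (avg, mx)
    r = len(kernel[0]) // 2  # 3 for a 7x7 kernel, keeps the output h x w
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for p in range(2):
                for di in range(-r, r + 1):
                    for dj in range(-r, r + 1):
                        y, x = i + di, j + dj
                        if 0 <= y < h and 0 <= x < w:
                            s += kernel[p][di + r][dj + r] * pooled[p][y][x]
            out[i][j] = sigmoid(s)
    return out


def cbam(f, w0, w1, kernel):
    """Serial CBAM: reweight channels first, then spatial positions."""
    mc = channel_attention(f, w0, w1)
    f1 = [[[mc[k] * v for v in row] for row in ch] for k, ch in enumerate(f)]
    ms = spatial_attention(f1, kernel)
    return [[[ms[i][j] * v for j, v in enumerate(row)] for i, row in enumerate(ch)]
            for ch in f1]


if __name__ == "__main__":
    random.seed(0)
    C, H, W, R = 8, 6, 10, 2  # hypothetical sizes; R is the MLP reduction ratio
    feat = [[[random.gauss(0, 1) for _ in range(W)] for _ in range(H)] for _ in range(C)]
    w0 = [[random.gauss(0, 0.1) for _ in range(C)] for _ in range(C // R)]
    w1 = [[random.gauss(0, 0.1) for _ in range(C // R)] for _ in range(C)]
    kern = [[[random.gauss(0, 0.1) for _ in range(7)] for _ in range(7)] for _ in range(2)]
    out = cbam(feat, w0, w1, kern)
    print(len(out), len(out[0]), len(out[0][0]))  # 8 6 10
```

In a trained network the weights are learned and the operations run as batched tensor ops; the loops here only make the data flow explicit.
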
These modules are located in the encoding part of the network, and the decoding part outputs a binary segmentation result. The result is then clustered with DBSCAN to obtain individual lane lines. After clustering, instead of the complex post-processing used in other literature, this paper fits each lane line with a simple cubic polynomial, which further improves speed. As a result, the proposed model runs faster than most segmentation-based methods. Finally, extensive experiments on the TuSimple lane dataset show that the method is robust under various road conditions, especially under occlusion, and that it offers a combined advantage in detection accuracy and speed over existing models.
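
The clustering and fitting step can be sketched as follows. The paper does not give its DBSCAN parameters or the exact fitting form, so this sketch assumes that DBSCAN runs directly on the (x, y) coordinates of foreground pixels from the binary segmentation map and that each lane is fitted as x = f(y) with a cubic polynomial by ordinary least squares. The helper names, `eps`, `min_pts` and the synthetic lane pixels are hypothetical.

```python
def region_query(points, i, eps):
    """Indices of all points within distance eps of points[i] (brute force)."""
    xi, yi = points[i]
    return [j for j, (x, y) in enumerate(points)
            if (x - xi) ** 2 + (y - yi) ** 2 <= eps * eps]


def dbscan(points, eps, min_pts):
    """Label each point with a cluster id (0, 1, ...) or -1 for noise."""
    labels = [None] * len(points)  # None means not yet visited
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = region_query(points, i, eps)
        if len(seeds) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        labels[i] = cluster
        queue = list(seeds)
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins but is not expanded
            if labels[j] is not None:
                continue
            labels[j] = cluster
            neighbours = region_query(points, j, eps)
            if len(neighbours) >= min_pts:  # core point: keep growing
                queue.extend(neighbours)
        cluster += 1
    return labels


def fit_cubic(ys, xs):
    """Least-squares fit x = c0 + c1*t + c2*t^2 + c3*t^3 with t = (y - m) / s.

    Normalising y keeps the 4x4 normal equations well conditioned.
    Needs at least four distinct y values.
    """
    m = sum(ys) / len(ys)
    s = max(abs(y - m) for y in ys) or 1.0
    ts = [(y - m) / s for y in ys]
    a = [[sum(t ** (i + j) for t in ts) for j in range(4)] for i in range(4)]
    b = [sum(x * t ** i for t, x in zip(ts, xs)) for i in range(4)]
    for col in range(4):  # Gaussian elimination with partial pivoting
        piv = max(range(col, 4), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        b[col], b[piv] = b[piv], b[col]
        for r in range(col + 1, 4):
            factor = a[r][col] / a[col][col]
            for j in range(col, 4):
                a[r][j] -= factor * a[col][j]
            b[r] -= factor * b[col]
    coef = [0.0] * 4
    for r in range(3, -1, -1):  # back substitution
        coef[r] = (b[r] - sum(a[r][j] * coef[j] for j in range(r + 1, 4))) / a[r][r]
    return lambda y: sum(c * ((y - m) / s) ** k for k, c in enumerate(coef))


if __name__ == "__main__":
    # Synthetic lane pixels: a curved lane, a straight lane and one stray pixel.
    left = [(100 + 0.5 * y + 0.001 * y * y, y) for y in range(0, 200, 2)]
    right = [(400 - 0.3 * y, y) for y in range(0, 200, 2)]
    pixels = left + right + [(250.0, 50.0)]
    labels = dbscan(pixels, eps=5.0, min_pts=3)
    print(sorted(set(labels)))  # [-1, 0, 1]
    for lane in sorted(set(labels) - {-1}):
        pts = [p for p, lab in zip(pixels, labels) if lab == lane]
        fit = fit_cubic([y for _, y in pts], [x for x, _ in pts])
        print(lane, round(fit(100.0), 1))  # 0 160.0, then 1 370.0
```

The brute-force neighbour search is quadratic in the number of pixels; on full-resolution segmentation maps a spatial index or a library implementation would normally be used instead.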


Table 1. Comparison of parameters and computations

| Name | Parameters | Computations |
|---|---|---|
| 3×3 Conv | 9C² | 9HWC² |
| LaneConv | 3C² | 9HWC²/8 |
| 2×2 DeConv | 4C² | 4HWC² |
| LaneDeConv | 7C²/4 | 7HWC²/4 |
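
The savings implied by Table 1 can be expressed as exact ratios. The sketch below copies the table entries as multiples of C² (parameters) and HWC² (computations), assuming C denotes the channel count and H×W the feature-map size. It only reproduces the arithmetic of the table and does not model how LaneConv or LaneDeConv achieve those counts; the `reduction` helper is hypothetical.

```python
from fractions import Fraction

# Table 1 entries as multiples of C^2 (parameters) and of H*W*C^2 (computations).
TABLE1 = {
    "3x3 Conv": (Fraction(9), Fraction(9)),
    "LaneConv": (Fraction(3), Fraction(9, 8)),
    "2x2 DeConv": (Fraction(4), Fraction(4)),
    "LaneDeConv": (Fraction(7, 4), Fraction(7, 4)),
}


def reduction(baseline, proposed):
    """Factor by which `proposed` cuts parameters and computations vs `baseline`."""
    base_p, base_c = TABLE1[baseline]
    new_p, new_c = TABLE1[proposed]
    return base_p / new_p, base_c / new_c


if __name__ == "__main__":
    for base, new in [("3x3 Conv", "LaneConv"), ("2x2 DeConv", "LaneDeConv")]:
        p, c = reduction(base, new)
        print(f"{new} vs {base}: parameter ratio {str(p)} ({float(p):.2f}), "
              f"computation ratio {str(c)} ({float(c):.2f})")
```

On the table's figures, LaneConv needs exactly 3× fewer parameters and 8× fewer computations than a 3×3 convolution, and LaneDeConv needs 16/7 ≈ 2.29× fewer of both than a 2×2 deconvolution.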

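Table 2. Comparison results with other methods on the TuSimple dataset

| Method | Acc (%) | FP (%) | FN (%) | Speed (f/s) | mIoU (%) |
|---|---|---|---|---|---|
| *Detection-based methods* | | | | | |
| PointLaneNet | 96.34 | 4.67 | 5.18 | 71.0 | N/A |
| PolyLaneNet | 93.36 | 9.42 | 9.33 | 115.0 | N/A |
| *Segmentation-based methods* | | | | | |
| SCNN | 96.53 | 6.17 | 1.80 | 7.5 | 57.37 |
| VGG-LaneNet | 94.03 | 10.2 | 11.0 | 1.7 | 41.34 |
| LaneNet | 94.42 | 9.0 | 9.0 | 62.5 | 56.59 |
| Method 1 | 94.34 | 9.1 | 8.4 | 102.4 | 56.08 |
| Method 2 | 95.70 | 8.3 | 4.31 | 58.6 | 65.22 |
| Method 3 | 95.64 | 8.5 | 4.45 | 98.7 | 64.46 |

Note: N/A indicates that the corresponding paper did not report the value or that the project could not be reproduced. Method 1 adds the newly introduced convolution structures but not CBAM; Method 2 uses ordinary convolution structures with CBAM; Method 3 uses both CBAM and the newly introduced convolution structures.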
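
The ablation rows of Table 2 can be read with a few subtractions. The sketch below copies the Acc, speed and mIoU values and compares them pairwise: Method 1 against Method 3 isolates the effect of CBAM, and Method 2 against Method 3 the effect of the new convolution structures. The `compare` helper is hypothetical, and the comparison is a reading of the table rather than an analysis given by the authors.

```python
# Rows copied from Table 2: (Acc %, FP %, FN %, speed f/s, mIoU %).
ROWS = {
    "LaneNet": (94.42, 9.0, 9.0, 62.5, 56.59),
    "Method 1": (94.34, 9.1, 8.4, 102.4, 56.08),  # new convolutions, no CBAM
    "Method 2": (95.70, 8.3, 4.31, 58.6, 65.22),  # CBAM, ordinary convolutions
    "Method 3": (95.64, 8.5, 4.45, 98.7, 64.46),  # CBAM + new convolutions
}


def compare(a, b):
    """Change from a to b: Acc and mIoU in percentage points, speed as a ratio."""
    acc_a, _, _, fps_a, miou_a = ROWS[a]
    acc_b, _, _, fps_b, miou_b = ROWS[b]
    return round(acc_b - acc_a, 2), round(miou_b - miou_a, 2), round(fps_b / fps_a, 2)


if __name__ == "__main__":
    print("CBAM (M1 -> M3):", compare("Method 1", "Method 3"))        # (1.3, 8.38, 0.96)
    print("new convs (M2 -> M3):", compare("Method 2", "Method 3"))   # (-0.06, -0.76, 1.68)
    print("LaneNet -> M3:", compare("LaneNet", "Method 3"))           # (1.22, 7.87, 1.58)
    print("M3 ms/frame:", round(1000 / ROWS["Method 3"][3], 1))       # 10.1
```

On these numbers, adding CBAM costs about 4% of the frame rate for +1.30 points of accuracy and +8.38 points of mIoU, while the new convolution structures raise the frame rate about 1.68× at a cost of 0.06 points of accuracy and 0.76 points of mIoU.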

[1] Wang Y, Shen D G, Teoh E K. Lane detection using spline model[J]. Pattern Recognit Lett, 2000, 21(8): 677−689. doi: 10.1016/S0167-8655(00)00021-0

[2] Jiang R Y, Reinhard K, Tobi V, et al. Lane detection and tracking using a new lane model and distance transform[J]. Mach Vis Appl, 2011, 22(4): 721−737. doi: 10.1007/s00138-010-0307-7

[3] Zhu H Y, Yang F, Gao X Q, et al. A fast lane detection algorithm based on cascade Hough transform[J]. Comput Technol Dev, 2021, 31(1): 88−93 (in Chinese). doi: 10.3969/j.issn.1673-629X.2021.01.016

[4] Tian J, Liu S W, Zhong X Y, et al. LSD-based adaptive lane detection and tracking for ADAS in structured road environment[J]. Soft Comput, 2021, 25(7): 5709−5722. doi: 10.1007/s00500-020-05566-4

[5] Qin Z Q, Wang H Y, Li X. Ultra fast structure-aware deep lane detection[C]//16th European Conference on Computer Vision, 2020: 276–291.

[6] Chen Z P, Liu Q F, Lian C F. PointLaneNet: efficient end-to-end CNNs for accurate real-time lane detection[C]//2019 IEEE Intelligent Vehicles Symposium (IV), 2019: 2563–2568.

[7] Tabelini L, Berriel R, Paixão T M, et al. PolyLaneNet: lane estimation via deep polynomial regression[C]//2020 25th International Conference on Pattern Recognition (ICPR), 2021: 6150–6156.

[8] Ji G Q, Zheng Y C. Lane line detection system based on improved Yolo V3 algorithm[Z]. Research Square: 2021. https://doi.org/10.21203/rs.3.rs-961172/v1.

[9] Neven D, De Brabandere B, Georgoulis S, et al. Towards end-to-end lane detection: an instance segmentation approach[C]//2018 IEEE Intelligent Vehicles Symposium (IV), 2018: 286–291.

[10] Liu B, Liu H Z. Lane detection algorithm based on improved Enet network[J]. Comput Sci, 2020, 47(4): 142−149 (in Chinese). doi: 10.11896/jsjkx.190500021

[11] Tian J, Yuan J Z, Liu H Z. Instance segmentation based lane line detection and adaptive fitting algorithm[J]. J Comput Appl, 2020, 40(7): 1932−1937 (in Chinese). doi: 10.11772/j.issn.1001-9081.2019112030

[12] Pan X G, Shi J P, Luo P, et al. Spatial as deep: spatial CNN for traffic scene understanding[C]//Thirty-Second AAAI Conference on Artificial Intelligence, 2018: 7276–7283.

[13] Woo S, Park J, Lee J Y, et al. CBAM: convolutional block attention module[C]//Proceedings of the 15th European Conference on Computer Vision (ECCV), 2018: 3–19.

[14] Howard A G, Zhu M L, Chen B, et al. MobileNets: efficient convolutional neural networks for mobile vision applications[Z]. arXiv:1704.04861, 2017. http://www.arxiv.org/abs/1704.04861.

[15] Yu F, Koltun V. Multi-scale context aggregation by dilated convolutions[C]//4th International Conference on Learning Representations, 2016.

[16] Chollet F. Xception: deep learning with depthwise separable convolutions[C]//2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2017: 1800–1807.

[17] Szegedy C, Vanhoucke V, Ioffe S, et al. Rethinking the inception architecture for computer vision[C]//2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016: 2818–2826.

[18] Wu B C, Wan A, Yue X Y, et al. SqueezeSeg: convolutional neural nets with recurrent CRF for real-time road-object segmentation from 3D LiDAR point cloud[C]//2018 IEEE International Conference on Robotics and Automation (ICRA), 2018: 1887–1893.

[19] Ren S Q, He K M, Girshick R, et al. Faster R-CNN: towards real-time object detection with region proposal networks[J]. IEEE Trans Pattern Anal Mach Intell, 2017, 39(6): 1137−1149. doi: 10.1109/TPAMI.2016.2577031
