-

摘要:

与高质量可见光图像相比,红外图像在行人检测任务中往往存在较高的虚警率。其主要原因在于红外图像受成像分辨率及光谱特性限制,缺乏清晰的纹理特征,同时部分样本的特征质量较差,干扰网络的正常学习。本文提出基于多任务学习框架的红外行人检测算法,其在多尺度检测框架的基础上,做出以下改进:1) 引入显著性检测任务作为协同分支与目标检测网络构成多任务学习框架,以共同学习的方式侧面强化检测器对强显著区域及其边缘信息的关注。2) 通过将样本显著性强度引入分类损失函数,抑制噪声样本的学习权重。在公开KAIST数据集上的检测结果证实,本文的算法相较于基准算法RetinaNet能够降低对数平均丢失率(MR-2)4.43%。

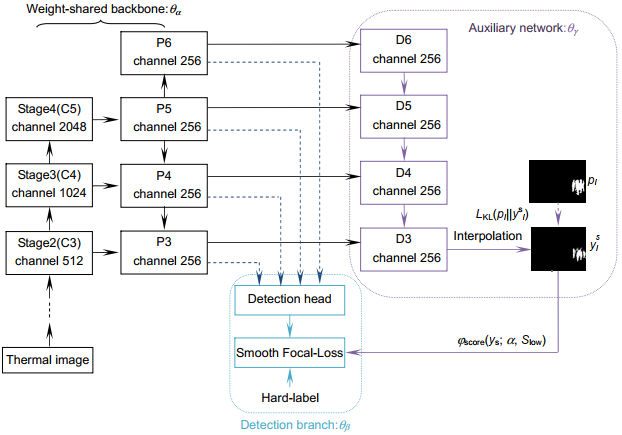

Abstract:Compared with high-quality RGB images, thermal images tend to have a higher false alarm rate in pedestrian detection tasks. The main reason is that thermal images are limited by imaging resolution and spectral characteristics, lacking clear texture features, while some samples have poor feature quality, which interferes with the network training. We propose a thermal pedestrian algorithm based on a multi-task learning framework, which makes the following improvements based on the multiscale detection framework. First, saliency detection tasks are introduced as an auxiliary branch with the target detection network to form a multitask learning framework, which side-step the detector's attention to illuminate salient regions and their edge information in a co-learning manner. Second, the learning weight of noisy samples is suppressed by introducing the saliency strength into the classification loss function. The detection results on the publicly available KAIST dataset confirm that our learning method can effectively reduce the log-average miss rate by 4.43% compared to the baseline, RetinaNet.

Key words:

- thermal pedestrian detection

- multi-task learning

- saliency detection

Overview: In recent years, pedestrian detection techniques based on visible-light images have developed rapidly. However, interference from lighting, smoke, and occlusion makes it difficult to achieve robust around-the-clock detection with these images alone. Thermal images, by contrast, capture the thermal radiation that the target emits in a specific wavelength band, so they are highly robust to ambient lighting and similar interference and are widely used in security and transportation. At present, detection performance on thermal images still needs to improve: it suffers from the poor quality of thermal images and from the interference of noisy samples with network learning.

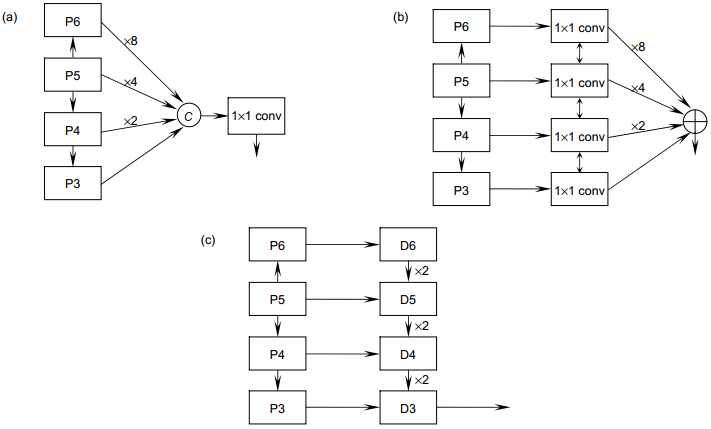

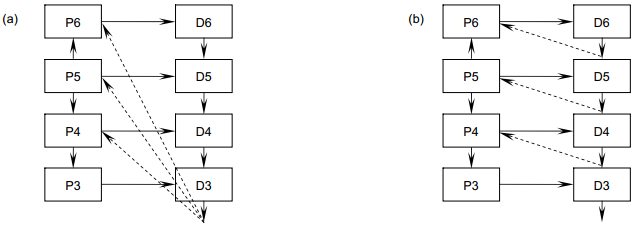

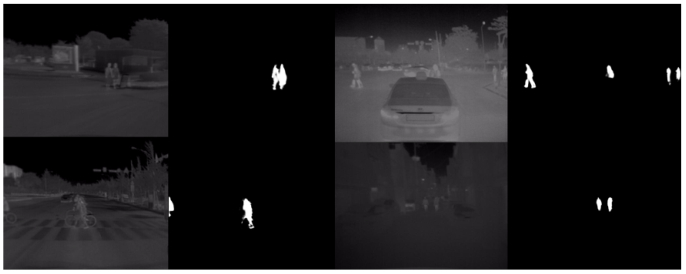

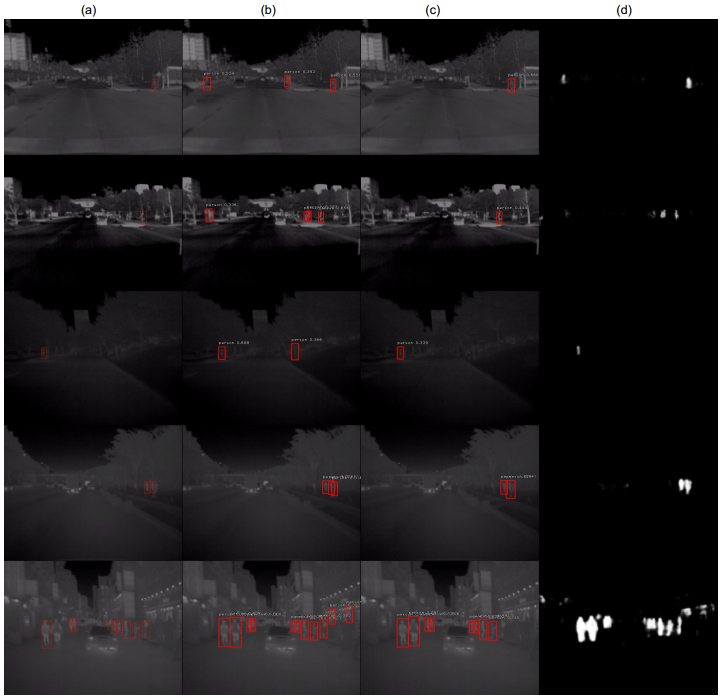

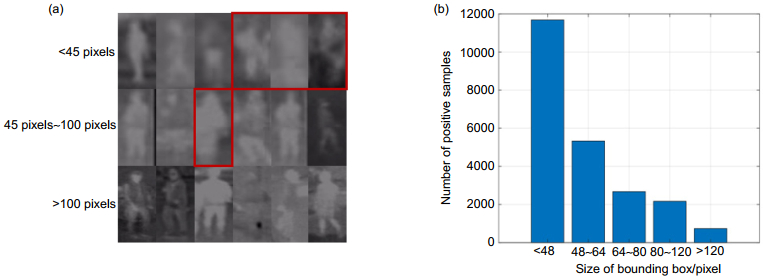

To improve the performance of thermal pedestrian detection, we first introduce saliency maps as supervision and adopt a multi-task learning framework in which the main network performs pedestrian detection and the auxiliary network performs saliency detection. Because the two tasks share the feature extraction modules, the network gains saliency detection capability and is guided to focus on salient regions. To find the most suitable design for the auxiliary network, we test four designs, ranging from independent-learning models to guided-attention models. Second, by visualizing the pedestrian samples, we observe that noisy samples exhibit weaker saliency in thermal images. We therefore introduce the saliency strength of each sample into the classification loss function through a hand-designed mapping function, which reduces the interference of noisy samples with network learning. As the mapping function we adopt a suitably transformed sigmoid function, which maps the saliency area percentage of a sample to a saliency score. Finally, we introduce the saliency score into the Focal Loss to obtain the Smooth Focal Loss, which, with reasonable settings, decreases the loss of low-saliency samples.
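The idea of mapping a saliency area percentage to a score and using it to down-weight low-saliency samples can be sketched as follows. This is only an illustration under stated assumptions: the exact form of the mapping function and of the Smooth Focal Loss is not given in this overview, so the function names, the parameters `s_low`, `steepness`, and `midpoint`, and the choice to rescale only positive samples are hypothetical and are not claimed to match the parameters reported in Table 4. The focal loss itself follows the standard binary form of Ref. [15].

```python
import math


def saliency_score(area_ratio, s_low=0.7, steepness=14.0, midpoint=0.4):
    """Map a saliency-area fraction in [0, 1] to a score in (s_low, 1).

    Assumed form: a shifted and scaled sigmoid. All parameter names and
    default values are hypothetical.
    """
    gate = 1.0 / (1.0 + math.exp(-steepness * (area_ratio - midpoint)))
    return s_low + (1.0 - s_low) * gate


def focal_loss(p, is_positive, alpha=0.25, gamma=2.0):
    """Standard binary focal loss for one predicted probability p."""
    p = min(max(p, 1e-7), 1.0 - 1e-7)  # clamp to avoid log(0)
    p_t = p if is_positive else 1.0 - p
    alpha_t = alpha if is_positive else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def saliency_weighted_focal_loss(p, is_positive, area_ratio):
    """Scale the focal loss of a positive sample by its saliency score.

    Assumption: negatives are left unchanged, so only pedestrian samples
    with weak saliency contribute less to training.
    """
    loss = focal_loss(p, is_positive)
    if is_positive:
        loss *= saliency_score(area_ratio)
    return loss
```

With these assumed defaults, a positive sample whose box is only 5% salient receives roughly 70% of its ordinary focal loss, while a sample that is 95% salient keeps almost all of it.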

Extensive experiments on KAIST thermal images support the following conclusions. First, compared with the other auxiliary frameworks, our cascaded model with its independent-learning design performs strongly. Second, compared with RetinaNet, our method lowers the log-average miss rate by 4.43 percentage points, which is competitive among popular thermal pedestrian detection methods. Finally, because our method is a training strategy, it adds no computational cost at inference. Although the method is effective, its hyperparameters still have to be set manually. Enabling the network to adapt to various detection conditions is the direction of our future work.
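For readers unfamiliar with the metric, the log-average miss rate (MR⁻²) can be sketched as below. The sketch assumes the convention commonly used in pedestrian detection benchmarks: the miss rate is sampled at nine reference points evenly spaced in log space over false positives per image (FPPI) in [10⁻², 10⁰], and the geometric mean of the samples is reported. The function name and the handling of curves that start above a reference point (counted as a miss rate of 1.0) are assumptions of this sketch, not details taken from the paper.

```python
import math


def log_average_miss_rate(fppi, miss_rate, num_points=9):
    """Geometric mean of the miss rate at log-spaced FPPI reference points.

    fppi      : false positives per image, sorted in ascending order
    miss_rate : miss rate at each FPPI value (same length as fppi)
    """
    # Reference points 10^-2, 10^-1.75, ..., 10^0 for num_points = 9.
    refs = [10.0 ** (-2.0 + 2.0 * i / (num_points - 1)) for i in range(num_points)]
    log_sum = 0.0
    for ref in refs:
        sampled = 1.0  # assumed value when the curve has no point at or below ref
        for x, m in zip(fppi, miss_rate):
            if x <= ref:
                sampled = m  # keep the last operating point not exceeding ref
            else:
                break
        log_sum += math.log(max(sampled, 1e-10))
    return math.exp(log_sum / num_points)
```

Because the values in the tables are percentages, the reported improvement is a difference in percentage points: 24.68 − 20.25 = 4.43.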


Table 1. Quantitative analyses of saliency detection results on R3Net

| Method | Fβ-Score | MAE |
| --- | --- | --- |
| R3Net | 0.6875 | 0.0045 |

Table 2. Individual learning framework contrastive test

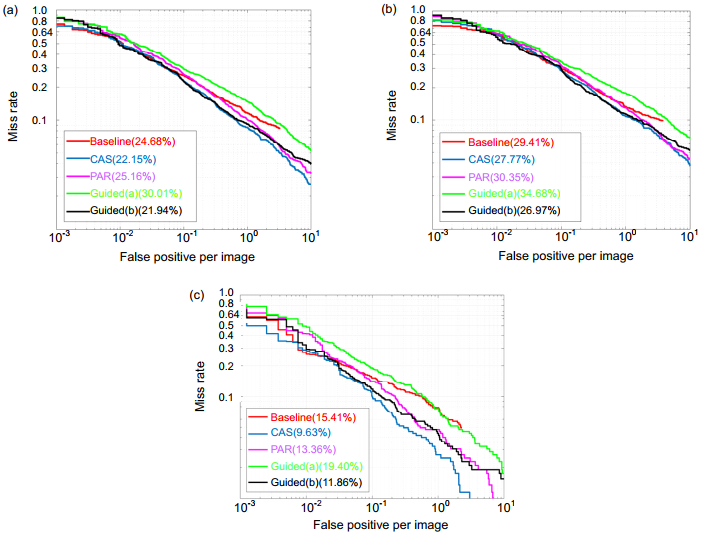

Algorithm Reasonable-all Reasonable-day Reasonable-night QUOTE MR-2/(%) mAP/(%) MR-2/(%) mAP/(%) MR-2/(%) mAP/(%) RetinaNet(baseline) 24.68 83.20 29.41 80.45 15.41 89.11 PAR 25.16 84.00 30.35 80.80 13.36 91.43 CAS 22.15 86.39 27.77 82.75 9.63 94.16 表 3 引导注意力式框架性能测试

Table 3. Performance comparison of guided-attention frameworks

| Algorithm | Reasonable-all MR⁻² (%) | Reasonable-all mAP (%) | Reasonable-day MR⁻² (%) | Reasonable-day mAP (%) | Reasonable-night MR⁻² (%) | Reasonable-night mAP (%) |
| --- | --- | --- | --- | --- | --- | --- |
| RetinaNet (baseline) | 24.68 | 83.20 | 29.41 | 80.45 | 15.41 | 89.11 |
| Guided(a) | 30.01 | 79.96 | 34.68 | 76.86 | 19.40 | 87.23 |
| Guided(b) | 21.94 | 85.74 | 26.97 | 82.72 | 11.86 | 92.38 |

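Table 4. Detection performance under different parameter settings

| S_low | w | b | α | Reasonable-all MR⁻² (%) | Reasonable-all mAP (%) | Reasonable-day MR⁻² (%) | Reasonable-day mAP (%) | Reasonable-night MR⁻² (%) | Reasonable-night mAP (%) |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 0.75 | 0.5 | 0.5 | 16 | 20.63 | 86.50 | 25.15 | 83.55 | 10.63 | 93.19 |
| 0.75 | 0.5 | 0.5 | 14 | 21.88 | 85.57 | 26.66 | 82.80 | 12.67 | 91.53 |
| 0.75 | 0.5 | 0.5 | 12 | 22.37 | 85.30 | 27.64 | 81.97 | 11.25 | 92.58 |
| 0.7 | 0.6 | 0.4 | 16 | 22.72 | 85.36 | 28.51 | 81.86 | 10.84 | 92.78 |
| 0.7 | 0.6 | 0.4 | 14 | 20.25 | 86.13 | 25.18 | 82.81 | 9.57 | 93.57 |
| 0.7 | 0.6 | 0.4 | 12 | 21.76 | 85.82 | 26.36 | 82.90 | 11.46 | 92.39 |
| 0.65 | 0.7 | 0.3 | 16 | 21.13 | 85.89 | 26.06 | 82.83 | 10.37 | 92.80 |
| 0.65 | 0.7 | 0.3 | 14 | 22.35 | 84.58 | 27.04 | 81.62 | 12.52 | 91.30 |
| 0.65 | 0.7 | 0.3 | 12 | 21.05 | 86.15 | 24.99 | 83.63 | 12.18 | 91.97 |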

Table 5. Comparison of thermal pedestrian detection methods on KAIST, where +SM denotes introducing the saliency map in the manner of Ref. [13]

| Detectors | MR⁻²-all | MR⁻²-day | MR⁻²-night |
| --- | --- | --- | --- |
| Faster RCNN-T[13] | 47.59 | 50.13 | 40.93 |
| Faster RCNN+SM[13] | - | 30.4 | 21 |
| Bottom up[25] | 35.2 | 40 | 20.5 |
| TC-thermal[14] | 28.53 | 36.59 | 11.03 |
| TC-Det[14] | 27.11 | 34.81 | 10.31 |
| RetinaNet (baseline) | 24.68 | 29.41 | 15.41 |
| RetinaNet+SM | 23.47 | 30.30 | 9.85 |
| Ours (CAS) | 22.15 | 27.77 | 9.63 |
| Ours (CAS+Smooth FL) | 20.25 | 25.18 | 9.57 |

[1] Zhang L L, Lin L, Liang X D, et al. Is faster R-CNN doing well for pedestrian detection?[C]//Proceedings of the 14th European Conference on Computer Vision, 2016: 443–457.

[2] Li J N, Liang X D, Shen S M, et al. Scale-aware fast R-CNN for pedestrian detection[J]. IEEE Trans Multimed, 2018, 20(4): 985–996. https://ieeexplore.ieee.org/document/8060595

[3] Zhang B H, Zhu S Y, Lv X Q, et al. Soft multilabel learning and deep feature fusion for unsupervised person re-identification[J]. Opto-Electron Eng, 2020, 47(12): 190636. doi: 10.12086/oee.2020.190636

[4] Zhang X Y, Zhang B H, Lv X Q, et al. The joint discriminative and generative learning for person re-identification of deep dual attention[J]. Opto-Electron Eng, 2021, 48(5): 200388. doi: 10.12086/oee.2021.200388

[5] Hwang S, Park J, Kim N, et al. Multispectral pedestrian detection: Benchmark dataset and baseline[C]//Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2015: 1037–1045.

[6] Liu J J, Zhang S T, Wang S, et al. Multispectral deep neural networks for pedestrian detection[Z]. arXiv preprint arXiv: 1611.02644, 2016.

[7] Wang R G, Wang J, Yang J, et al. Feature pyramid random fusion network for visible-infrared modality person re-identification[J]. Opto-Electron Eng, 2020, 47(12): 190669. doi: 10.12086/oee.2020.190669

[8] Zhang R Z, Zhang J L, Qi X P, et al. Infrared target detection and recognition in complex scene[J]. Opto-Electron Eng, 2020, 47(10): 200314. doi: 10.12086/oee.2020.200314

[9] Ren S Q, He K M, Girshick R, et al. Faster R-CNN: towards real-time object detection with region proposal networks[J]. IEEE Trans Pattern Anal Mach Intell, 2017, 39(6): 1137–1149. https://ieeexplore.ieee.org/document/7485869

[10] Redmon J, Divvala S, Girshick R, et al. You only look once: unified, real-time object detection[C]//Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016: 779–788.

[11] John V, Mita S, Liu Z, et al. Pedestrian detection in thermal images using adaptive fuzzy C-means clustering and convolutional neural networks[C]//2015 14th IAPR International Conference on Machine Vision Applications (MVA), 2015: 246–249.

[12] Devaguptapu C, Akolekar N, Sharma M M, et al. Borrow from anywhere: pseudo multi-modal object detection in thermal imagery[C]//Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2019: 1029–1038.

[13] Ghose D, Desai S M, Bhattacharya S, et al. Pedestrian detection in thermal images using saliency maps[C]//Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2019: 988–997.

[14] Kieu M, Bagdanov A D, Bertini M, et al. Task-conditioned domain adaptation for pedestrian detection in thermal imagery[C]//Proceedings of the 16th European Conference on Computer Vision, 2020: 546–562.

[15] Lin T Y, Goyal P, Girshick R, et al. Focal loss for dense object detection[C]//Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), 2017: 2999–3007.

[16] Deng Z J, Hu X W, Zhu L, et al. R3Net: recurrent residual refinement network for saliency detection[C]//Proceedings of the 27th International Joint Conference on Artificial Intelligence, 2018: 684–690.

[17] Koch C, Ullman S. Shifts in selective visual attention: towards the underlying neural circuitry[J]. Hum Neurobiol, 1985, 4(4): 219–227. https://www.cin.ufpe.br/~fsq/Artigos/200.pdf

[18] Hou X D, Zhang L Q. Saliency detection: a spectral residual approach[C]//2007 IEEE Conference on Computer Vision and Pattern Recognition, 2007: 1–8.

[19] Montabone S, Soto A. Human detection using a mobile platform and novel features derived from a visual saliency mechanism[J]. Image Vis Comput, 2010, 28(3): 391–402. doi: 10.1016/j.imavis.2009.06.006

[20] Liu N, Han J W, Yang M H. PiCANet: learning pixel-wise contextual attention for saliency detection[C]//Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018: 3089–3098.

[21] Li C Y, Song D, Tong R F, et al. Illumination-aware faster R-CNN for robust multispectral pedestrian detection[J]. Pattern Recognit, 2019, 85: 161–171. doi: 10.1016/j.patcog.2018.08.005

[22] Li C Y, Song D, Tong R F, et al. Multispectral pedestrian detection via simultaneous detection and segmentation[Z]. arXiv preprint arXiv: 1808.04818, 2018.

[23] Guo T T, Huynh C P, Solh M. Domain-adaptive pedestrian detection in thermal images[C]//2019 IEEE International Conference on Image Processing (ICIP), 2019: 1660–1664.
