
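Abstract:

Light field imaging records both the intensity and the direction of light rays, which makes it possible to estimate scene depth. The accuracy of depth estimation, however, is easily degraded by occlusion in the light field. This paper therefore proposes a depth estimation method based on weighted side window angular coherence to address the problem. First, the method divides the angular patch image into four side window subsets and measures the coherence of the pixels within each subset to build four cost volumes, which handles different types of occlusion. Second, a weighted fusion strategy merges the four cost volumes, further improving robustness while preserving the method's resistance to occlusion. Finally, the fused cost volume is refined with a guided filter to improve the accuracy of depth estimation. Experimental results show that the proposed method outperforms existing methods on quantitative metrics, and that it achieves high-precision measurement in an absolute depth measurement experiment.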

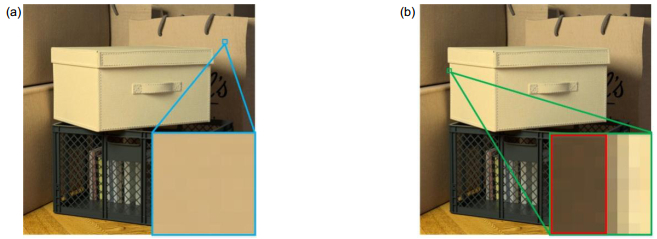

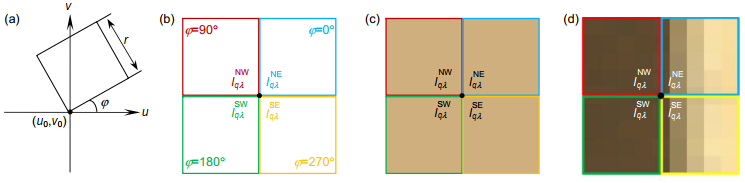

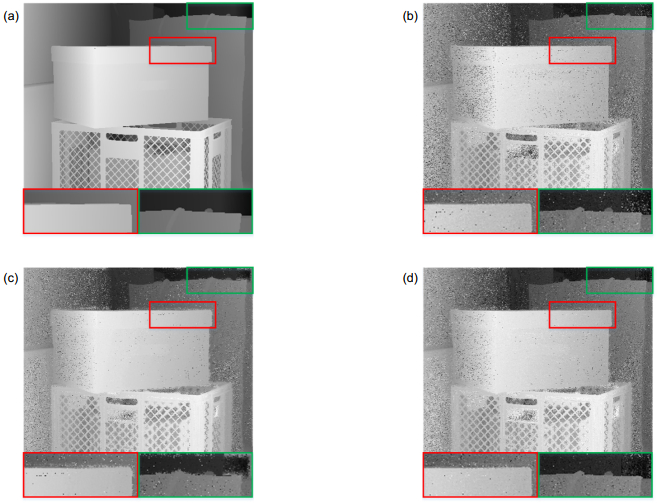

Abstract: Light field imaging records not only the intensity of light rays but also their direction, which gives it the ability to estimate scene depth. However, the accuracy of depth estimation is easily degraded by occlusion in the light field. This paper proposes a weighted side window angular coherence method to deal with different types of occlusion. First, the angular patch is divided into four side window subsets, and the coherence of the pixels in each subset is measured to construct four cost volumes, so that different types of occlusion can be handled. Second, a weighted fusion strategy fuses the four cost volumes, which further enhances the robustness of the algorithm while retaining its resistance to occlusion. Finally, the fused cost volume is optimized with a guided filter to further improve the accuracy of depth estimation. Experimental results show that the proposed method is superior to existing methods on quantitative metrics and achieves high-precision measurement in an absolute depth measurement experiment.
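The first step can be illustrated with a minimal sketch for a single angular patch. The abstract does not specify the subset shapes or the coherence measure, so two assumptions are made here: the four side window subsets are taken to be the left, right, upper, and lower halves of the patch (each including the central row or column), and coherence is measured as the mean squared deviation from the central view. The function names `side_window_masks` and `side_window_costs` are hypothetical.

```python
import numpy as np

def side_window_masks(n):
    """Boolean masks for four half-patch subsets of an n x n angular patch (n odd).

    Assumption: the subsets are the left, right, upper and lower halves,
    each including the central column or row.
    """
    c = n // 2
    masks = np.zeros((4, n, n), dtype=bool)
    masks[0, :, : c + 1] = True  # left half
    masks[1, :, c:] = True       # right half
    masks[2, : c + 1, :] = True  # upper half
    masks[3, c:, :] = True       # lower half
    return masks

def side_window_costs(patch):
    """Cost of each subset: mean squared deviation from the central view.

    Assumption: this stands in for the coherence measure, which the
    abstract does not define. A low cost means high photo consistency.
    """
    n = patch.shape[0]
    center = patch[n // 2, n // 2]
    squared_dev = (patch - center) ** 2
    return np.array([squared_dev[m].mean() for m in side_window_masks(n)])

# Toy angular patch at the true depth: the two rightmost view columns see an
# occluder (intensity 1.0), all other views see the scene point (intensity 0.2).
patch = np.full((5, 5), 0.2)
patch[:, 3:] = 1.0
costs = side_window_costs(patch)
best_subset = int(np.argmin(costs))  # index 0, the left half, is occluder-free
```

In this toy case only the left subset is free of occluder pixels, so it is the only one whose cost drops to zero at the true depth. Repeating the computation for every pixel and every depth label would give one cost volume per subset.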


Key words:

- side window angular coherence
- weighted fusion
- light field imaging
- depth estimation


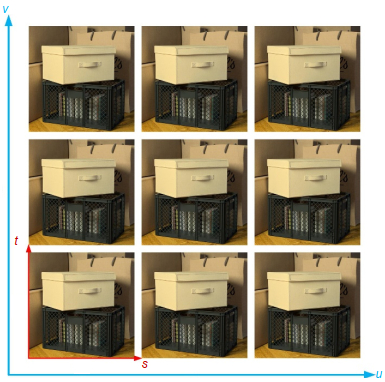

Overview: Compared with traditional imaging techniques, light field imaging can record the intensity and the direction of light rays at the same time, which has attracted interest from both research and industry. The equipment for recording light fields has also developed considerably and now includes programmable-aperture systems, camera arrays, gantry systems, and micro-lens-array-based light field cameras. Owing to these characteristics, light field imaging is applied in many areas, such as refocusing, 3D reconstruction, super-resolution, object detection, light field editing, and depth estimation. Among them, depth estimation is a key step in applying light fields to high-dimensional vision tasks such as 3D reconstruction. However, the accuracy of depth estimation is easily degraded by occlusion in the light field.

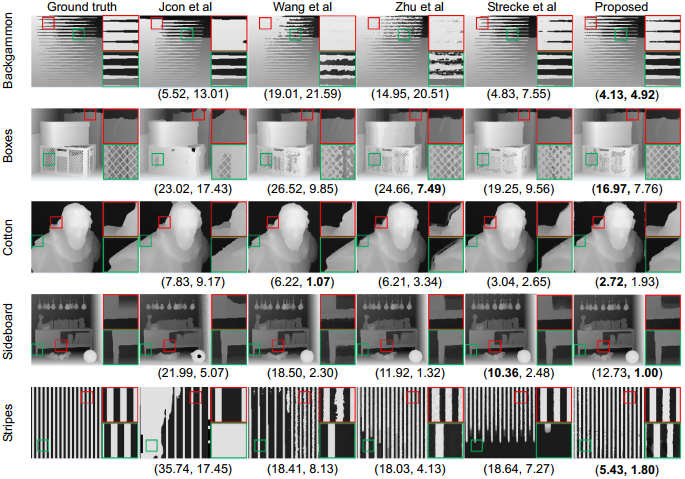

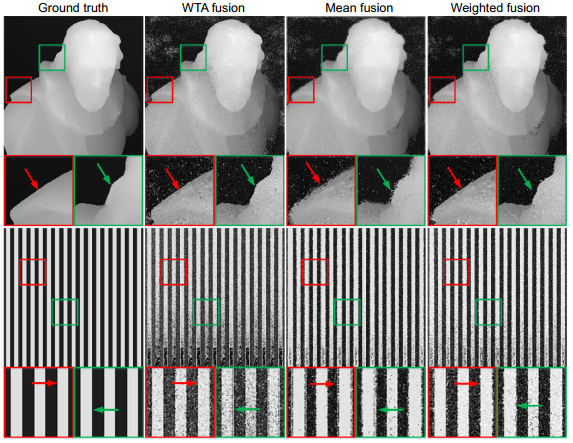

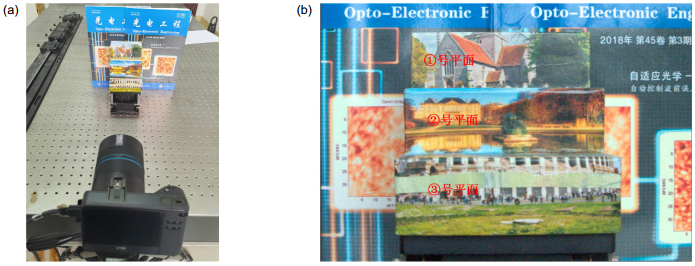

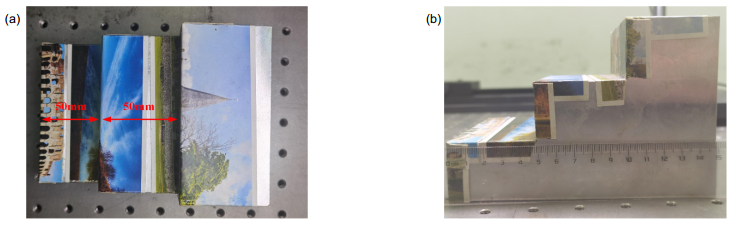

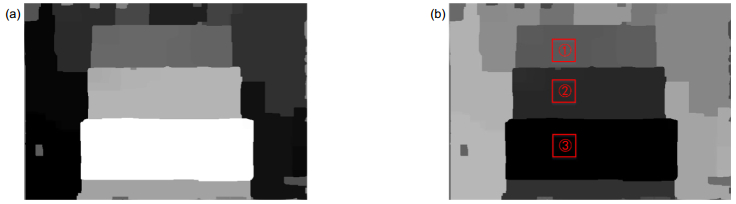

In this paper, we propose a weighted side window angular coherence method to handle different types of occlusion. First, the angular patch image is divided into four side window subsets with different patterns, and the coherence of the pixels in each subset is measured separately to construct four cost volumes. A side window subset that contains only pixels from the occluded point exhibits photo consistency when the depth label equals the true depth. The true depth can therefore be recovered from the cost volume of that subset, which handles the occlusion problem well. Second, we propose a weighted strategy to fuse the four cost volumes into one. According to the characteristics of the four side window subsets, their cost volumes are assigned different weights, which enhances the robustness of the algorithm while retaining its ability to resist different types of occlusion. Finally, the fused cost volume is optimized with a guided filter to further improve the quality of the depth map. Experimental results on both synthetic and real scenes show that the proposed method handles occlusion well and outperforms existing methods, especially near occlusion boundaries. In synthetic scenes, the proposed method is nearly as good as the other methods on the quantitative metrics for some scenes, which further supports its effectiveness. In a real scene captured by our light field camera, the method, combined with the camera's calibration parameters, can accurately measure the surface size of a standard part, which demonstrates its robustness.
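The difference between picking one subset and fusing all four can be sketched as follows. The overview does not give the actual weighting rule, so this example assumes softmax-style weights that decrease with cost, controlled by a hypothetical parameter `sigma`. The function names and the random cost volumes are illustrative only and are not the authors' implementation.

```python
import numpy as np

def fuse_wta(costs):
    """Winner-takes-all: keep the smallest of the four subset costs.

    costs has shape (4, D, H, W): subset, depth label, image row, image column.
    """
    return costs.min(axis=0)

def fuse_weighted(costs, sigma=0.1):
    """Weighted fusion with weights proportional to exp(-cost / sigma).

    Assumption: this weighting rule is a stand-in. Subsets with low cost
    (likely occlusion-free) dominate, but every subset still contributes.
    """
    # Subtracting the per-element minimum leaves the normalized weights
    # unchanged and avoids underflow in the exponential.
    shifted = costs - costs.min(axis=0, keepdims=True)
    weights = np.exp(-shifted / sigma)
    weights /= weights.sum(axis=0, keepdims=True)
    return (weights * costs).sum(axis=0)

def depth_labels(cost_volume):
    """Pick, for each pixel, the depth label with the lowest fused cost."""
    return cost_volume.argmin(axis=0)

# Synthetic cost volumes: 4 subsets, 8 depth labels, 3 x 3 pixels.
rng = np.random.default_rng(0)
costs = rng.random((4, 8, 3, 3))

wta = fuse_wta(costs)
fused = fuse_weighted(costs)
labels = depth_labels(fused)
```

With these weights the fused cost always lies between the winner-takes-all minimum and the plain average of the four costs. As `sigma` shrinks the result approaches winner-takes-all, and as it grows the result approaches the average. In the full pipeline the fused volume would then be smoothed, for example with a guided filter, before the labels are selected.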


Table 1. Comparison of BadPix(0.07) results for cost volumes processed with the WTA strategy and with the weighted fusion strategy

| Method | Backgammon | Boxes | Cotton | Sideboard | Pyramids | Stripes |
| --- | --- | --- | --- | --- | --- | --- |
| WTA | 4.24 | 19.48 | 4.63 | 19.71 | 2.40 | 26.43 |
| Proposed | 4.13 | 16.97 | 2.72 | 12.73 | 1.81 | 5.43 |

Table 2. Comparison of MSE results for cost volumes processed with the WTA strategy and with the weighted fusion strategy

| Method | Backgammon | Boxes | Cotton | Sideboard | Pyramids | Stripes |
| --- | --- | --- | --- | --- | --- | --- |
| WTA | 4.70 | 7.34 | 0.99 | 1.20 | 0.07 | 2.53 |
| Proposed | 4.92 | 7.76 | 1.93 | 1.00 | 0.05 | 1.80 |

Table 3. Results of absolute depth measurement with the proposed method

| Plane | Ground truth/mm | Proposed/mm |
| --- | --- | --- |
| ①—② | 50 | 58 |
| ②—③ | 50 | 47 |
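For readers unfamiliar with the two metrics in Tables 1 and 2, the sketch below shows how they are commonly computed. It assumes the usual conventions of the 4D light field benchmark that the scene names come from: BadPix(0.07) is the percentage of pixels whose absolute disparity error exceeds 0.07, and MSE is the mean squared disparity error multiplied by 100. The page itself does not state these definitions, so they should be checked against the full paper.

```python
import numpy as np

def badpix(disparity, ground_truth, threshold=0.07):
    """Percentage of pixels with absolute disparity error above the threshold."""
    return 100.0 * np.mean(np.abs(disparity - ground_truth) > threshold)

def mse_x100(disparity, ground_truth):
    """Mean squared disparity error, scaled by 100 (assumed benchmark convention)."""
    return 100.0 * np.mean((disparity - ground_truth) ** 2)

# Tiny example: four pixels, two of which miss the ground truth by more than 0.07.
gt = np.zeros((2, 2))
disp = np.array([[0.0, 0.05],
                 [0.1, -0.2]])

print(badpix(disp, gt))    # 50.0
print(mse_x100(disp, gt))
```

Lower is better for both metrics. BadPix counts how many pixels are wrong beyond a tolerance, while MSE is dominated by the size of the largest errors, so the two can rank methods differently.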

