
摘要

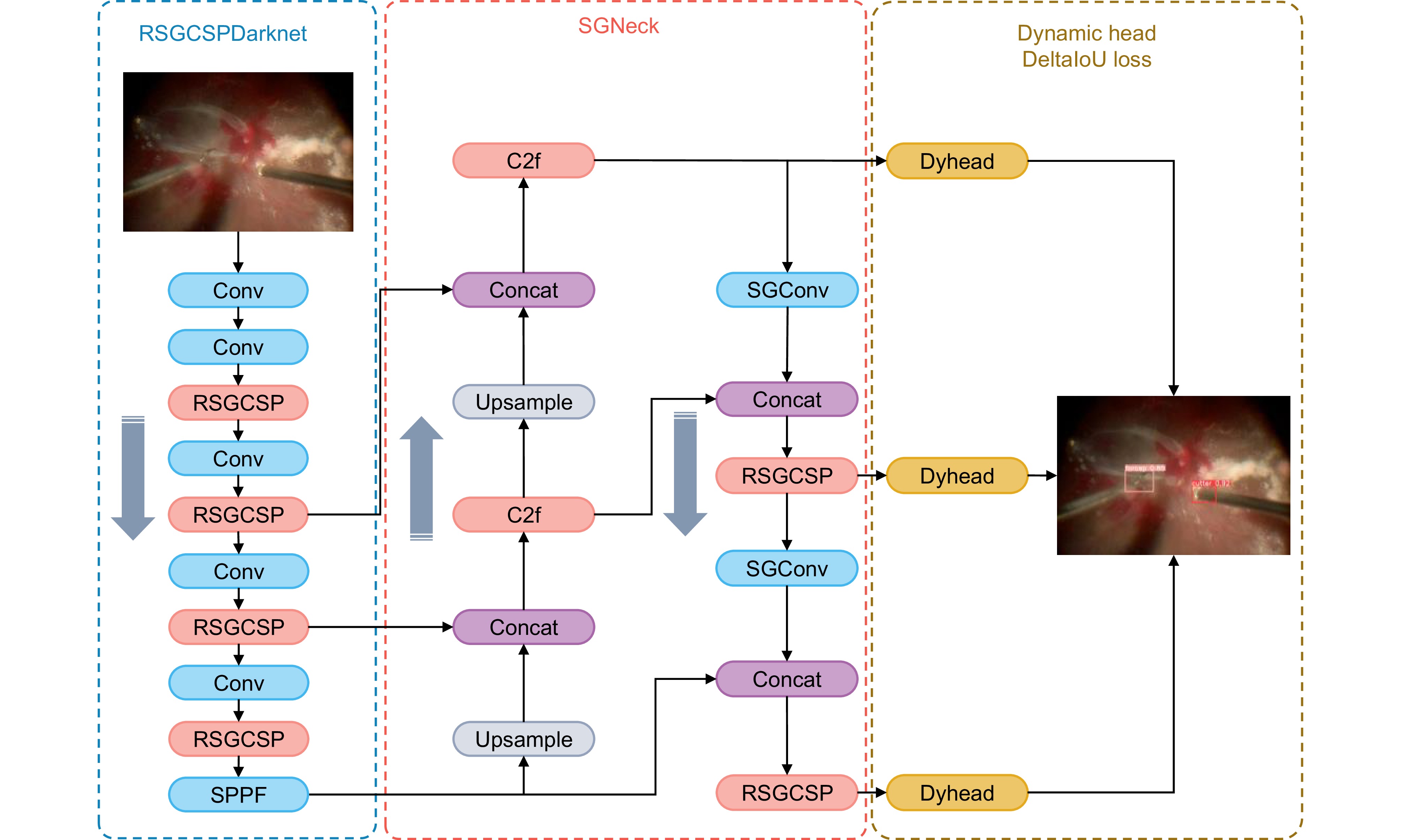

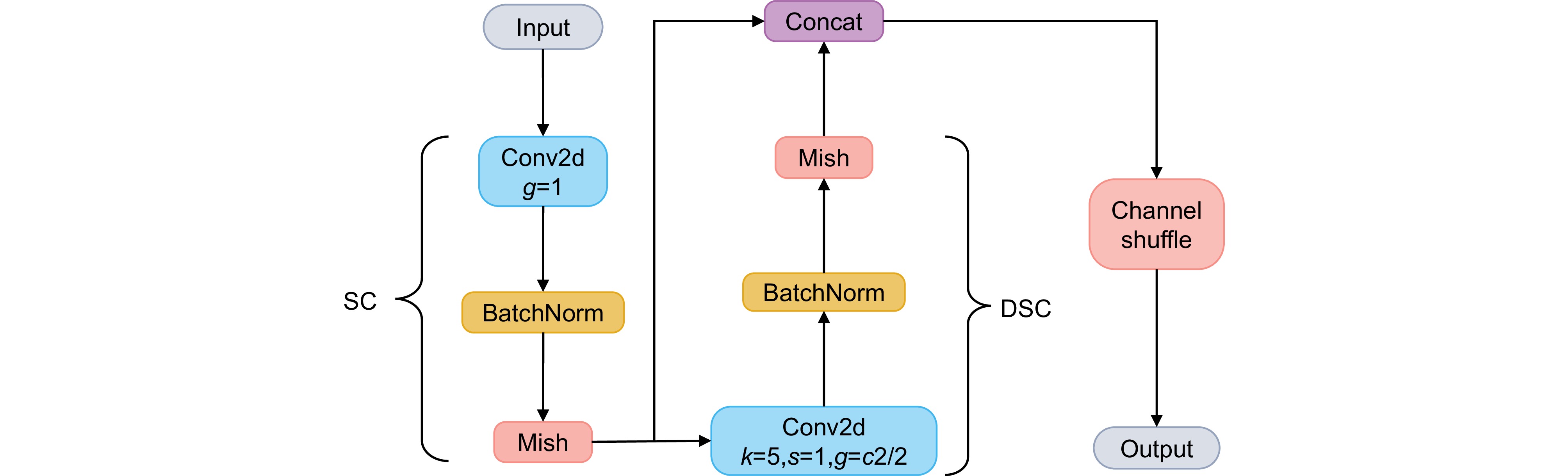

针对视网膜显微手术中的复杂干扰情况,本文利用深度学习的方法提出一种手术器械检测算法。首先,构建并手动标注了RET1数据集,并以YOLO框架为基础,针对部分图像退化,提出利用SGConv和RGSCSP特征提取模块增强模型对图像细节特征的提取能力。针对IoU损失函数收敛速度慢以及边界框回归不准确的问题,提出DeltaIoU边界框损失函数。最后,运用动态头部和解耦头部的集成对特征融合的目标进行检测。实验结果表明,提出的方法在RET1数据集上mAP50-95达到72.4%,相较原有算法提升了3.8%,并能在复杂手术场景中对器械有效检测,为后续手术显微镜自动跟踪以及智能化手术导航提供有效帮助。

Abstract

To address the complex interference encountered in retinal microsurgery, this study presents a deep learning-based algorithm for surgical instrument detection. First, the RET1 dataset was constructed and manually annotated to provide a reliable basis for training and evaluation. Building on the YOLO framework, the study introduces the SGConv and RGSCSP feature extraction modules, which strengthen the model's ability to capture fine image details, especially when image quality is degraded. To address the slow convergence of the IoU loss and inaccurate bounding box regression, the DeltaIoU bounding box loss function is proposed. Finally, an integrated dynamic head and decoupled head performs detection on the fused features. Experimental results show that the proposed method achieves 72.4% mAP50-95 on the RET1 dataset, 3.8 percentage points higher than the baseline algorithm. The method also detects surgical instruments reliably in complex surgical scenes, supporting automatic tracking in surgical microscopes and intelligent surgical navigation.


Key words:

- retinal microsurgery

- object detection

- YOLO

- surgical microscope

- loss function


Overview

The integration of computer vision into ophthalmic surgery, particularly through digital navigation microscopes, has opened new possibilities for real-time instrument tracking. Accurately localizing surgical instruments during retinal surgery is difficult because reflections, motion artifacts, and occlusions degrade the image. To address these challenges, this study introduces RM-YOLO, a deep learning-based detection algorithm tailored to retinal microsurgery and designed to localize instruments accurately in real time.

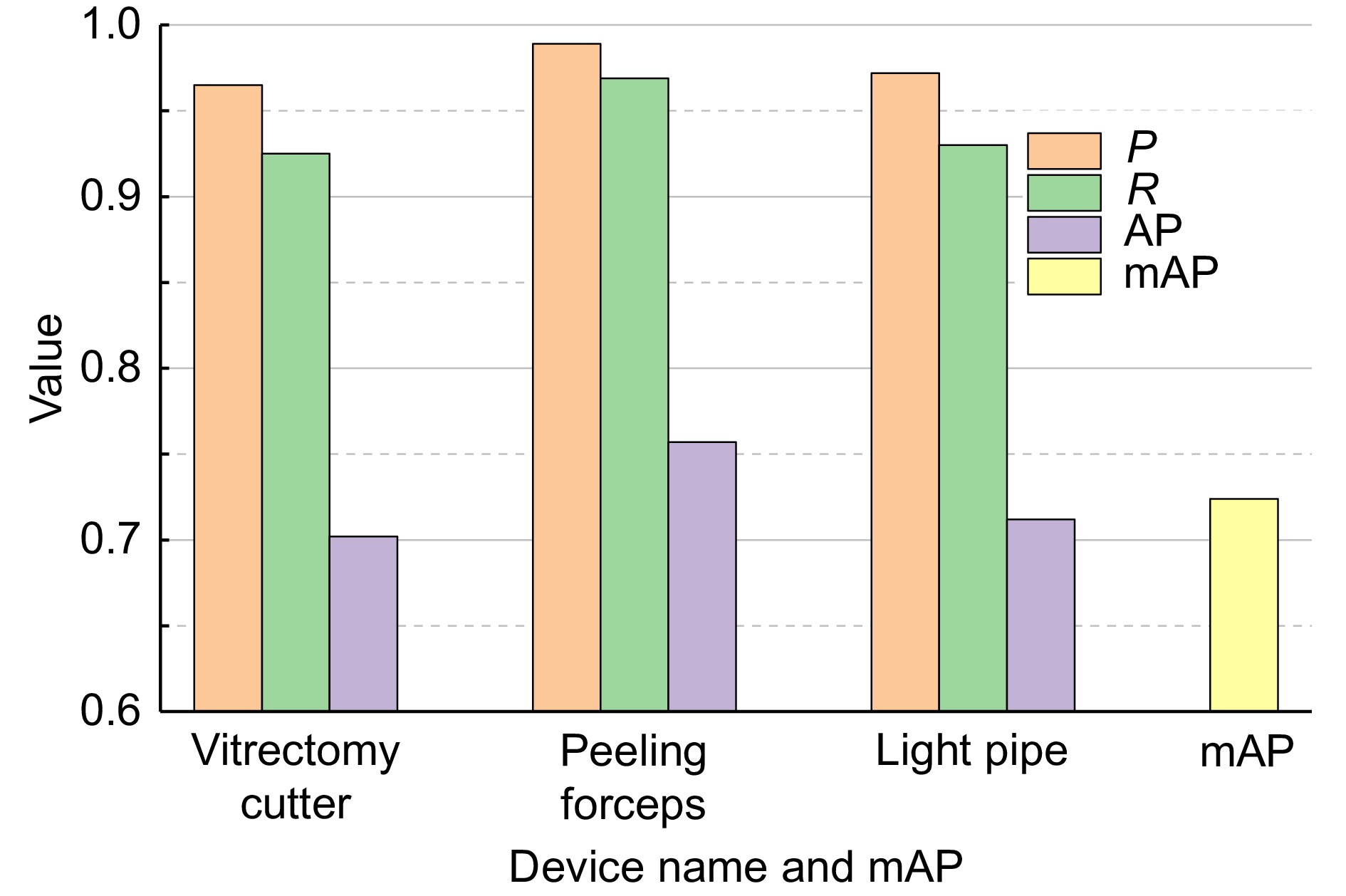

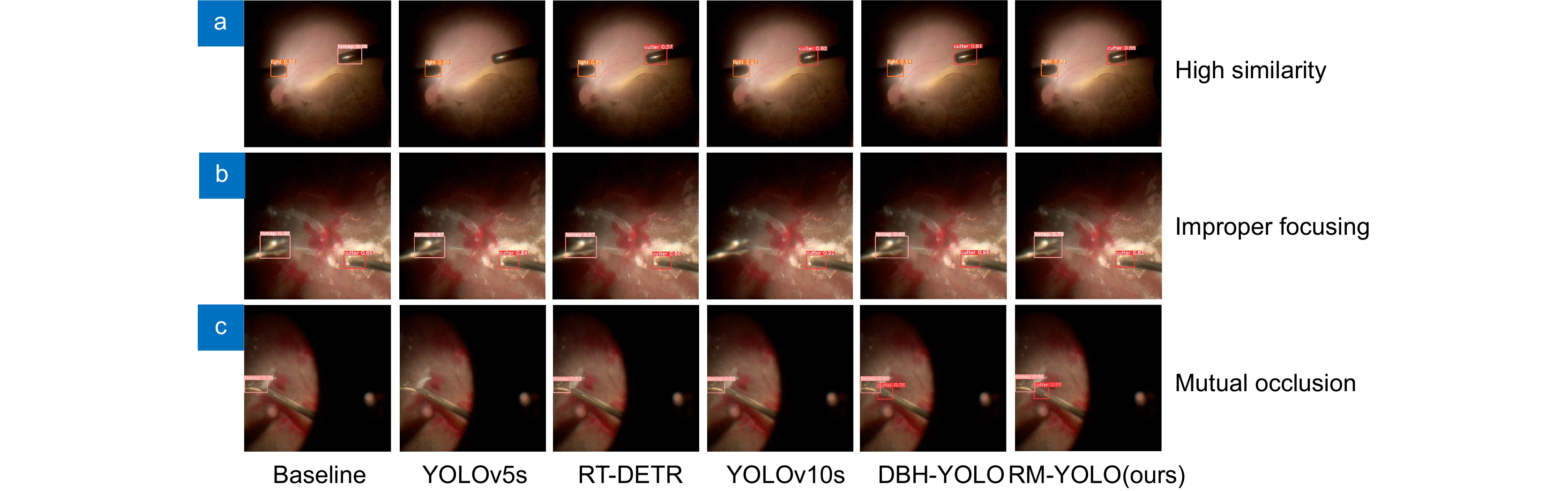

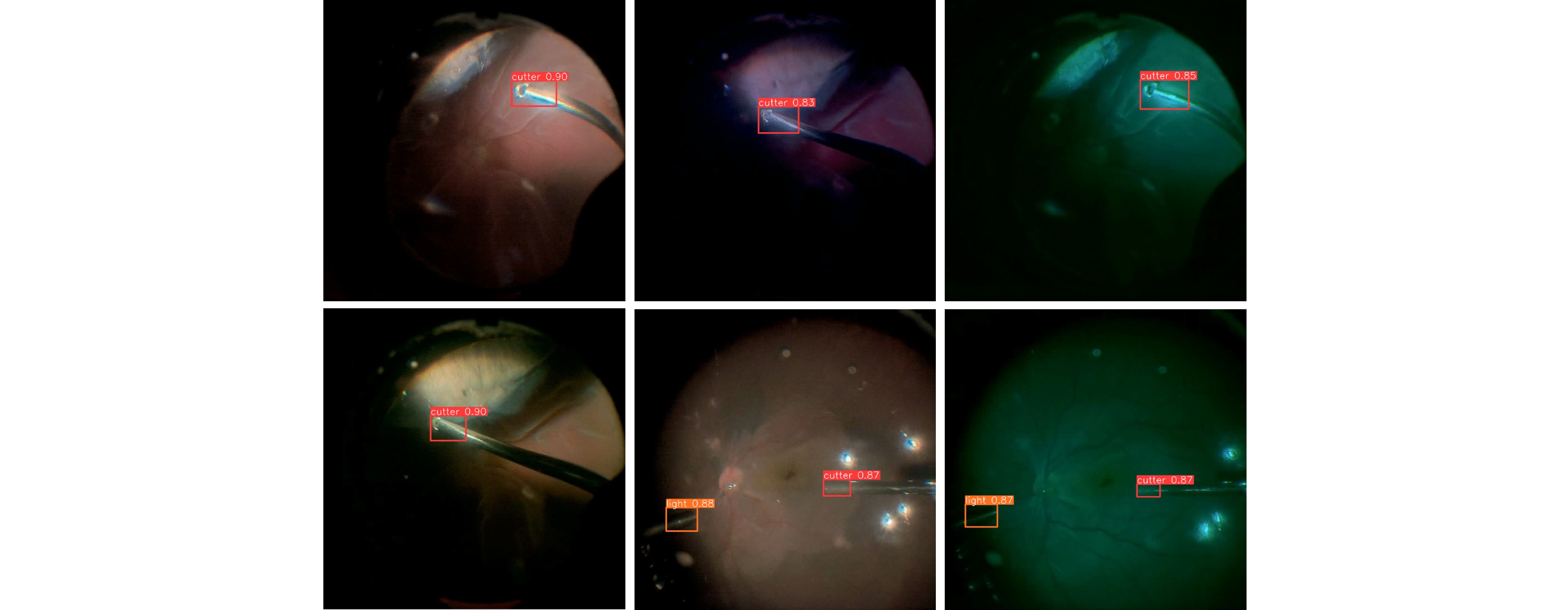

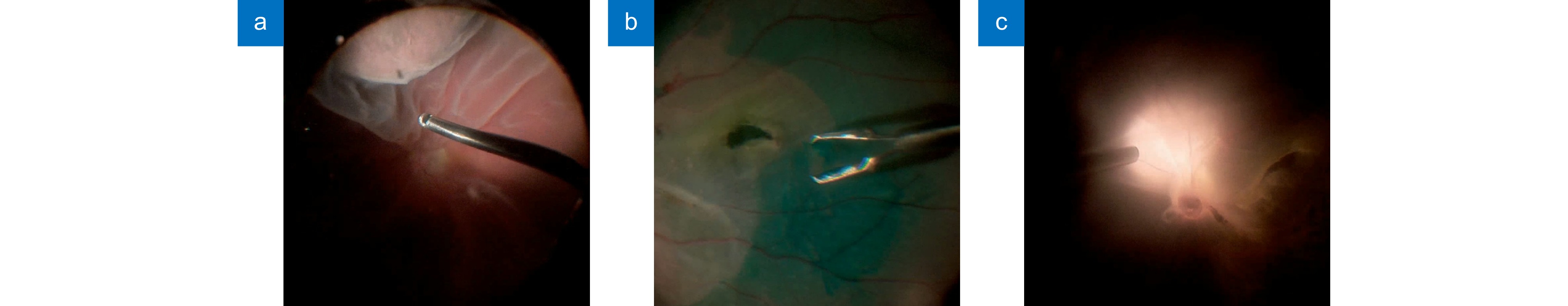

Because annotated data specific to retinal microsurgery are scarce, the RET1 dataset was constructed from high-resolution surgical videos and manually annotated for three primary instruments: the vitrectomy cutter, the light pipe, and the peeling forceps. The dataset covers a range of surgical conditions, including occlusion, low illumination, and reflections, so that the model is trained and evaluated under realistic interference.

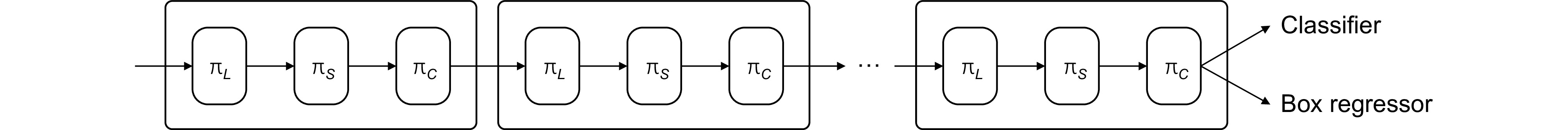

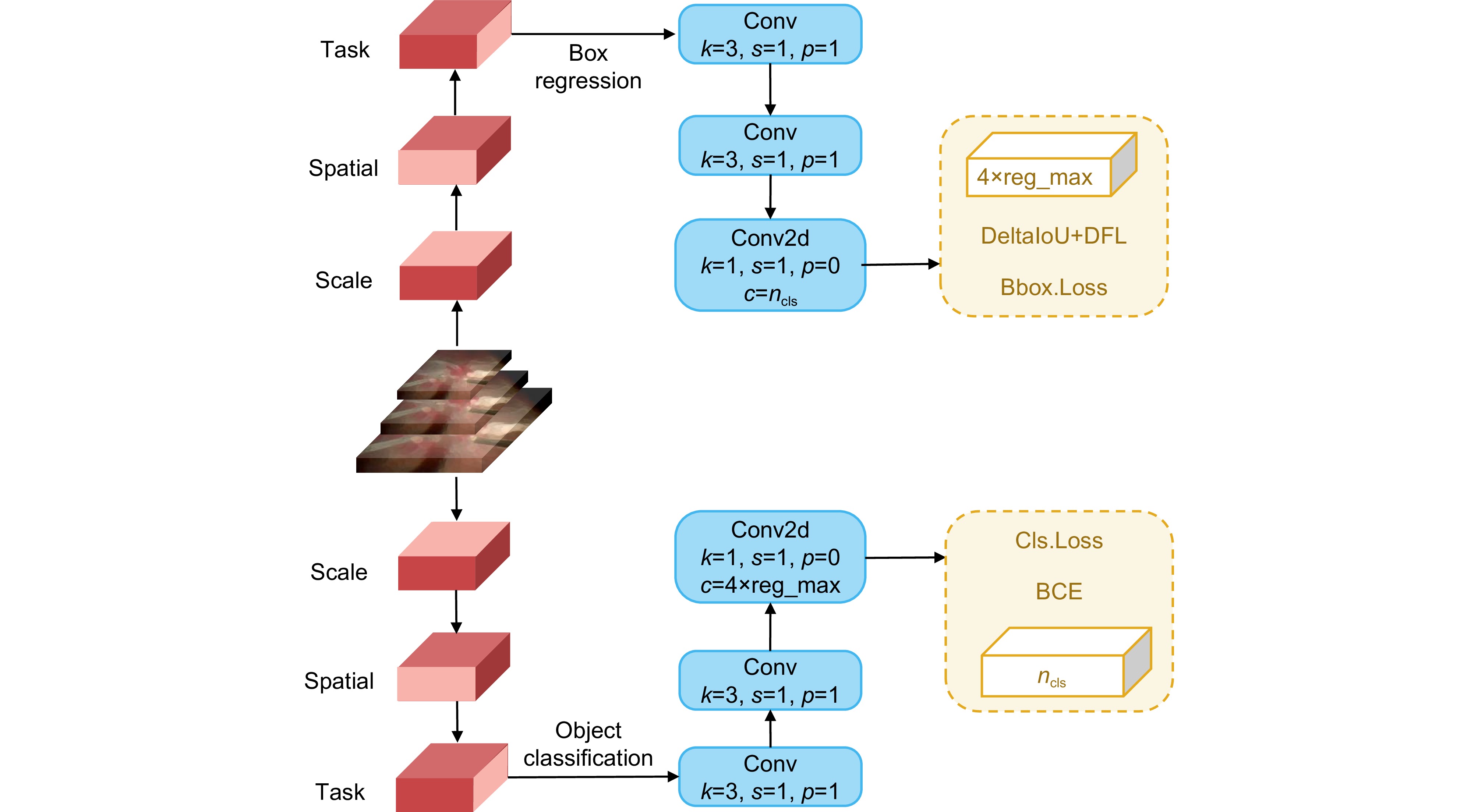

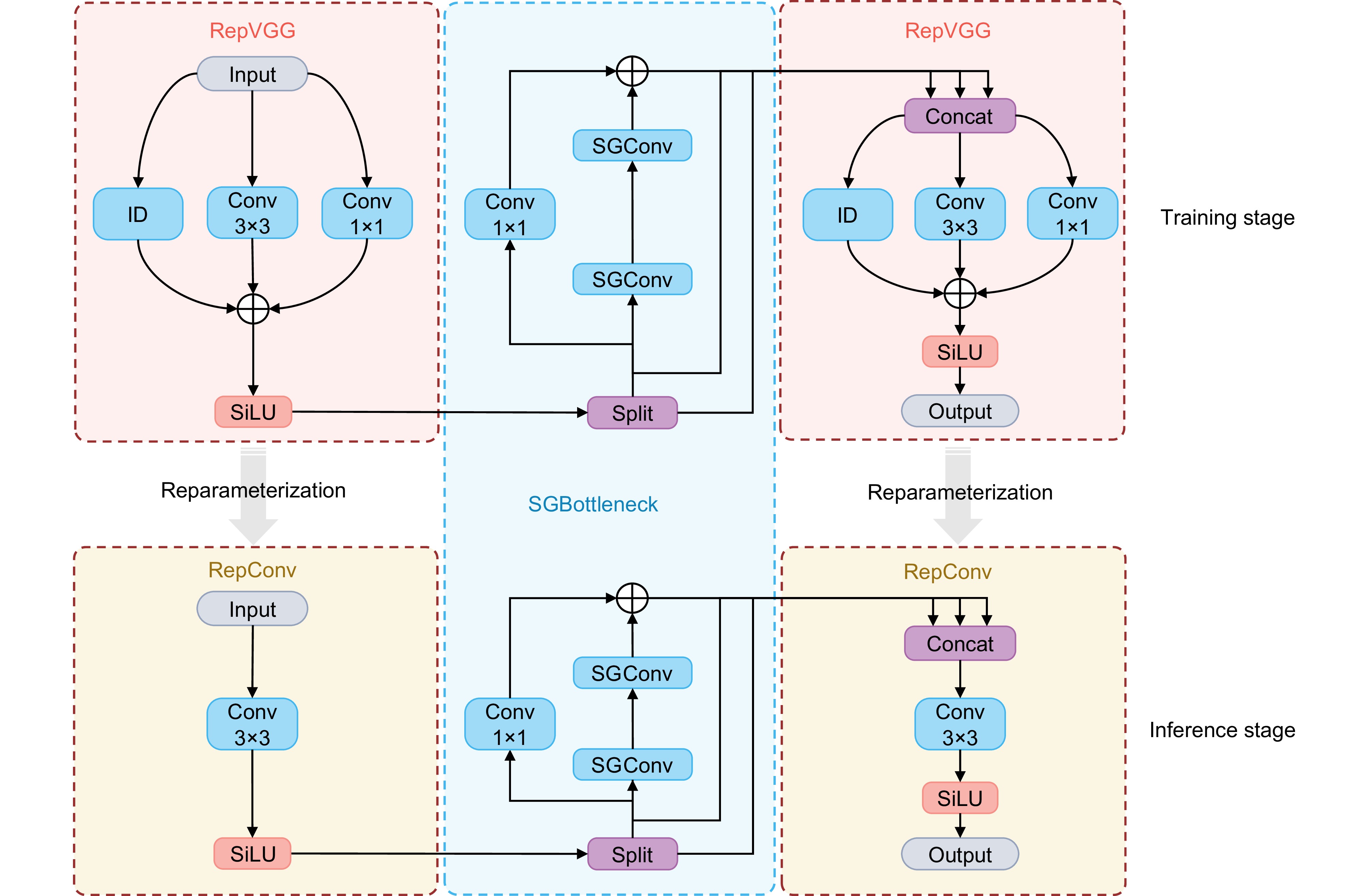

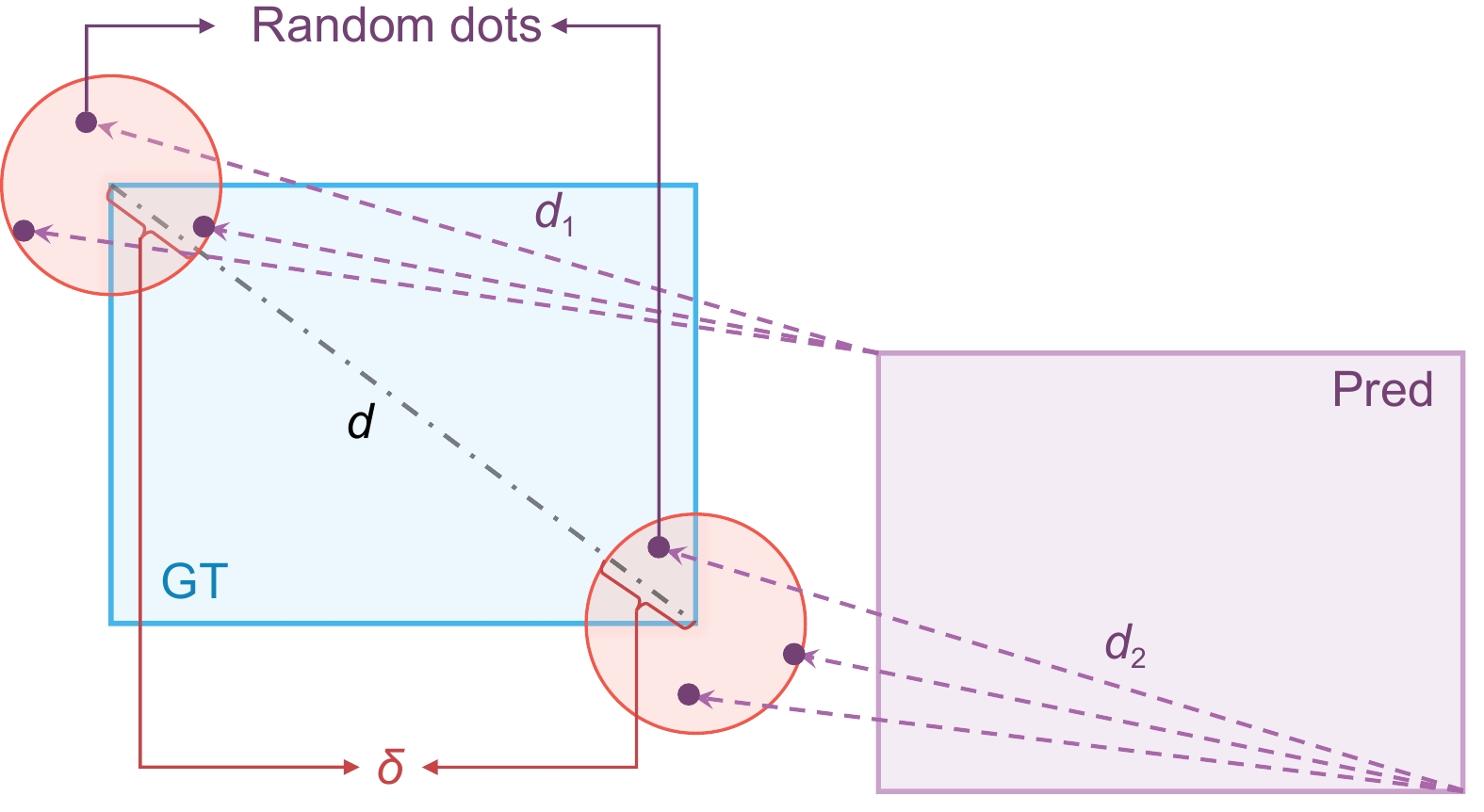

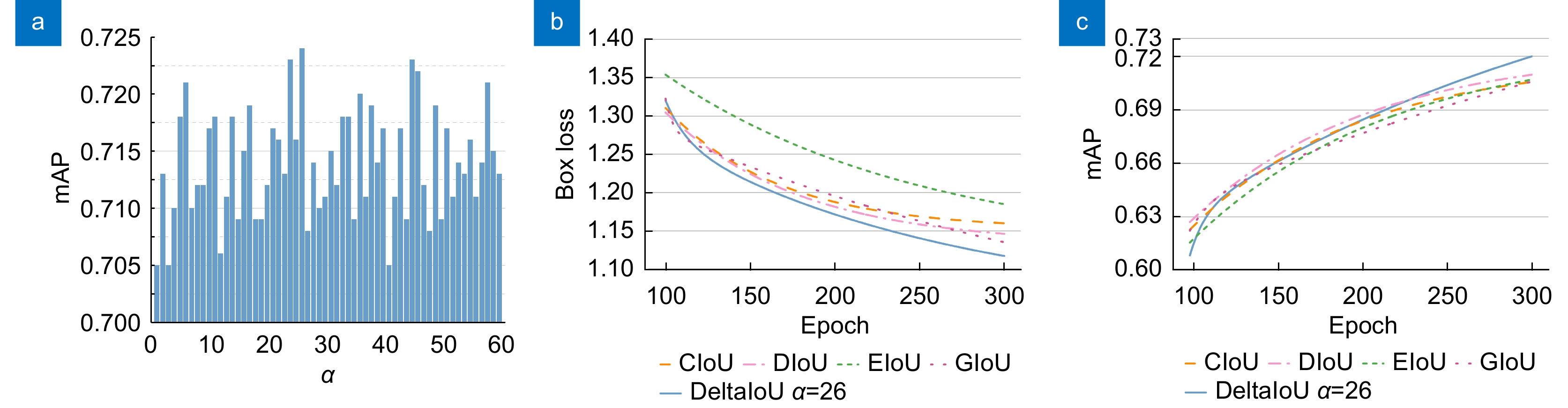

The proposed algorithm builds on a customized YOLO framework and adds several new modules. The SGConv and RGSCSP modules improve feature extraction: to overcome the limitations of conventional convolutional layers, they use channel shuffling and re-parameterization to increase feature diversity while keeping the parameter count low. A dynamic head integrates scale-aware, spatial-aware, and task-aware attention, which improves the model's ability to capture complex features across scales. For bounding box regression, the DeltaIoU loss is introduced to speed up convergence and improve accuracy, particularly when annotations are ambiguous.
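The channel-shuffling idea behind SGConv can be illustrated with a small NumPy sketch. It assumes a GhostNet/GSConv-style layout ([19], [20]): a pointwise convolution produces half of the output channels, a cheap depthwise 3×3 convolution derives the other half from them, and a two-group channel shuffle interleaves the two halves. The names `ghost_shuffle_block`, `pointwise_conv`, and `depthwise_conv3x3` are hypothetical, and the exact SGConv structure used in RM-YOLO may differ.

```python
import numpy as np


def channel_shuffle(x: np.ndarray, groups: int) -> np.ndarray:
    """Interleave channels across groups; x has shape (C, H, W)."""
    c, h, w = x.shape
    assert c % groups == 0, "channel count must be divisible by groups"
    # (C, H, W) -> (groups, C/groups, H, W) -> swap the first two axes -> back to (C, H, W)
    return x.reshape(groups, c // groups, h, w).transpose(1, 0, 2, 3).reshape(c, h, w)


def pointwise_conv(x: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """1x1 convolution without bias; weight has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


def depthwise_conv3x3(x: np.ndarray, kernels: np.ndarray) -> np.ndarray:
    """Per-channel 3x3 cross-correlation with zero padding; kernels has shape (C, 3, 3)."""
    c, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros_like(x, dtype=float)
    for i in range(3):
        for j in range(3):
            out += kernels[:, i, j][:, None, None] * padded[:, i:i + h, j:j + w]
    return out


def ghost_shuffle_block(x, pw_weight, dw_kernels):
    """Hypothetical SGConv-like block: C_out/2 primary maps + C_out/2 cheap maps, then shuffle."""
    primary = pointwise_conv(x, pw_weight)          # (C_out/2, H, W)
    cheap = depthwise_conv3x3(primary, dw_kernels)  # (C_out/2, H, W), one 3x3 filter per channel
    y = np.concatenate([primary, cheap], axis=0)    # (C_out, H, W)
    return channel_shuffle(y, groups=2)             # interleave primary and cheap channels


rng = np.random.default_rng(0)
c_in, c_out = 16, 32
x = rng.standard_normal((c_in, 8, 8))
pw = rng.standard_normal((c_out // 2, c_in))
dw = rng.standard_normal((c_out // 2, 3, 3))
y = ghost_shuffle_block(x, pw, dw)
print(y.shape)                              # (32, 8, 8)
print(9 * c_in * c_out, pw.size + dw.size)  # weights: dense 3x3 conv vs. this block
```

The weight count on the last line is only indicative, since the primary branch here is a 1×1 convolution; GSConv-style blocks may use a larger primary kernel.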

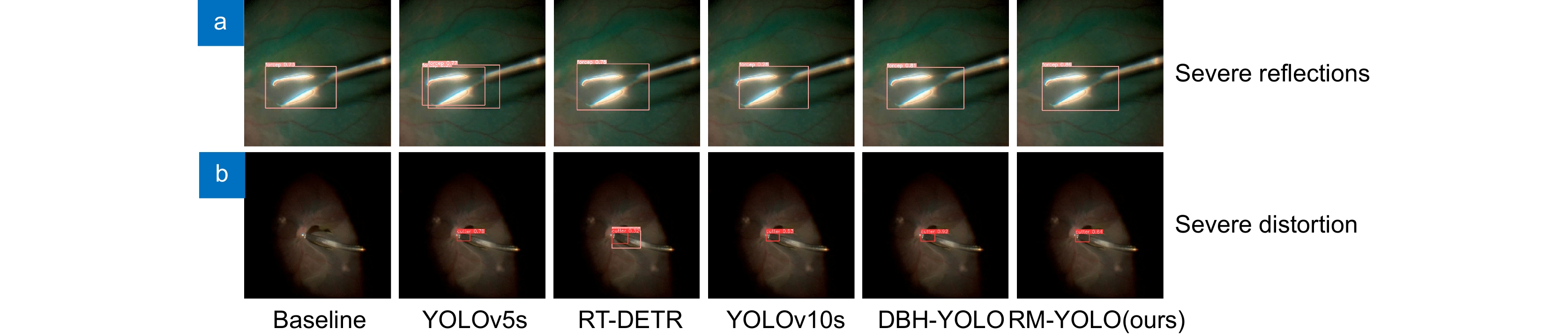
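Structural re-parameterization, as introduced in RepVGG [21], trains parallel branches and merges them into a single convolution for inference. The sketch below shows the merge for a 3×3 branch, a 1×1 branch, and an identity branch, with batch normalization omitted for brevity. The function names are hypothetical, and how RM-YOLO places such branches inside SGConv/RGSCSP is not specified in this section.

```python
import numpy as np


def conv3x3(x, weight, bias):
    """Dense 3x3 cross-correlation, stride 1, zero padding 1.
    x: (C_in, H, W); weight: (C_out, C_in, 3, 3); bias: (C_out,)."""
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((weight.shape[0], h, w))
    for i in range(3):
        for j in range(3):
            out += np.einsum("oc,chw->ohw", weight[:, :, i, j], padded[:, i:i + h, j:j + w])
    return out + bias[:, None, None]


def multi_branch(x, w3, b3, w1, b1):
    """Training-time form: 3x3 branch + 1x1 branch + identity (requires C_in == C_out)."""
    return conv3x3(x, w3, b3) + np.einsum("oc,chw->ohw", w1, x) + b1[:, None, None] + x


def reparameterize(w3, b3, w1, b1):
    """Fold the 1x1 and identity branches into the centre tap of one 3x3 kernel."""
    fused = w3.copy()
    fused[:, :, 1, 1] += w1                   # a 1x1 kernel is a 3x3 kernel that is zero except the centre
    fused[:, :, 1, 1] += np.eye(w3.shape[0])  # identity is a 1x1 kernel equal to the identity matrix
    return fused, b3 + b1


rng = np.random.default_rng(1)
c = 4
x = rng.standard_normal((c, 6, 6))
w3, b3 = rng.standard_normal((c, c, 3, 3)), rng.standard_normal(c)
w1, b1 = rng.standard_normal((c, c)), rng.standard_normal(c)
wf, bf = reparameterize(w3, b3, w1, b1)
print(np.allclose(multi_branch(x, w3, b3, w1, b1), conv3x3(x, wf, bf)))  # True
```

In RepVGG each branch also carries its own batch normalization, which is folded into that branch's kernel and bias before this merge, so inference runs a single plain 3×3 convolution.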

Extensive experiments on the RET1 dataset show that RM-YOLO reaches an mAP50-95 of 72.4% with only 7.4 million parameters and 20.7 GFLOPs. In comparisons with classic and recent detectors, including Faster R-CNN, several YOLO versions, and RT-DETR (Table 3), RM-YOLO achieves the highest recall and mAP50-95 with the fewest parameters and the lowest GFLOPs among the models for which these are reported; only YOLOv3s has slightly higher precision (0.981 vs. 0.975). Its higher recall indicates that it reduces the missed detections that are common in retinal microsurgery applications.
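mAP50-95 averages average precision over ten IoU thresholds from 0.50 to 0.95 in steps of 0.05 (the COCO convention), so it rewards tight boxes much more than mAP50 does. The toy case below uses one ground-truth box and one prediction with hypothetical coordinates: AP is 1 at every threshold the prediction's IoU reaches and 0 otherwise, so a box that counts fully for mAP50 can still score poorly on mAP50-95.

```python
IOU_THRESHOLDS = [round(0.50 + 0.05 * k, 2) for k in range(10)]  # 0.50, 0.55, ..., 0.95


def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def single_box_map(pred, gt):
    """Toy case with one ground truth and one prediction:
    AP is 1.0 at thresholds where IoU >= t (a true positive), else 0.0."""
    iou = box_iou(pred, gt)
    hits = [1.0 if iou >= t else 0.0 for t in IOU_THRESHOLDS]
    return hits[0], sum(hits) / len(hits)  # (mAP50, mAP50-95)


gt = (0, 0, 10, 10)
pred = (1, 1, 11, 11)  # shifted by 1 px in x and y: IoU = 81 / 119, about 0.68
print(single_box_map(pred, gt))  # (1.0, 0.4)
```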

The ablation study (Table 2) shows how much each module contributes. The dynamic head and RSGCSP modules give the largest gains by making the feature representation more robust, while the DeltaIoU loss complements them by keeping bounding box regression precise under difficult visual conditions. With all three modules enabled (model H), mAP50-95 rises from 0.686 to 0.724.
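The slow convergence of the plain IoU loss is easiest to see when a prediction does not overlap the ground truth: 1 - IoU equals 1 no matter how far away the box is, so the loss gives no signal about which direction to move. DeltaIoU's exact formulation is not given in this section, so the sketch below uses MPDIoU [22] as a stand-in example of a distance-aware IoU loss. The box coordinates and the 640×640 image size are assumptions.

```python
def iou(a, b):
    """IoU of boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def iou_loss(pred, gt):
    """Plain IoU loss: constant 1.0 for every non-overlapping prediction."""
    return 1.0 - iou(pred, gt)


def mpdiou_loss(pred, gt, img_w, img_h):
    """MPDIoU loss [22]: IoU penalised by squared distances between matching
    corners, normalised by the squared image diagonal."""
    d1 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2  # top-left corners
    d2 = (pred[2] - gt[2]) ** 2 + (pred[3] - gt[3]) ** 2  # bottom-right corners
    norm = img_w ** 2 + img_h ** 2
    return 1.0 - (iou(pred, gt) - d1 / norm - d2 / norm)


gt = (100, 100, 150, 150)
near = (160, 100, 210, 150)  # no overlap, 60 px to the right
far = (300, 100, 350, 150)   # no overlap, 200 px to the right
print(iou_loss(near, gt), iou_loss(far, gt))  # 1.0 1.0
print(round(mpdiou_loss(near, gt, 640, 640), 4), round(mpdiou_loss(far, gt, 640, 640), 4))  # 1.0088 1.0977
```

Because the distance terms keep growing as the box drifts away, the loss still distinguishes the near and far predictions, which is the kind of signal a plain IoU loss lacks early in training.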

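The dynamic head [18] credited in the ablation applies three attentions in sequence: scale-aware, spatial-aware, and task-aware. The sketch below covers only the scale-aware step in simplified form, assuming the pyramid levels have already been resized to a common size and flattened into an L × S × C tensor. Each level's features are averaged, passed through a linear function and a hard sigmoid, and the resulting score reweights that level. The weight matrix, bias, and function names are hypothetical; the original approximates the linear function with a 1×1 convolution.

```python
import numpy as np


def hard_sigmoid(x):
    """Hard sigmoid used by Dynamic Head: max(0, min(1, (x + 1) / 2))."""
    return np.clip((x + 1.0) / 2.0, 0.0, 1.0)


def scale_aware_attention(features, weight, bias):
    """Simplified scale-aware attention.
    features: (L, S, C) = pyramid levels x spatial positions x channels.
    weight: (L, L) and bias: (L,) form a hypothetical linear map over pooled levels."""
    pooled = features.mean(axis=(1, 2))            # one scalar per level
    scores = hard_sigmoid(weight @ pooled + bias)  # per-level importance in [0, 1]
    return features * scores[:, None, None]        # reweight each level


rng = np.random.default_rng(2)
levels, positions, channels = 3, 400, 64  # e.g. three levels resized to 20x20
feats = rng.standard_normal((levels, positions, channels))
w = rng.standard_normal((levels, levels)) * 0.1
b = np.array([1.0, 0.0, -1.0])
out = scale_aware_attention(feats, w, b)
print(out.shape)  # (3, 400, 64)
```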


Table 1. Experimental environment configuration

| Configuration | Parameter |
|---|---|
| Operating system | Windows 11 |
| GPU | NVIDIA GeForce RTX 4070 Super |
| Programming language | Python 3.11 |
| Framework | PyTorch 2.1 |
| GPU computing framework | CUDA 12.1 |
| GPU acceleration library | cuDNN 8.0 |
| Learning rate | 0.001 |
| Momentum | 0.9 |
| Weight decay | 0.0005 |
| Batch size | 32 |
| Epochs | 300 |
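Table 1 lists a learning rate of 0.001, momentum of 0.9, and weight decay of 0.0005 but does not name the optimizer. Assuming SGD with momentum and L2 weight decay applied as in PyTorch's `torch.optim.SGD` (no dampening, no Nesterov), one update step for a single scalar parameter can be sketched as follows; `sgd_step` is a hypothetical helper, not part of any library.

```python
LR, MOMENTUM, WEIGHT_DECAY = 0.001, 0.9, 0.0005  # values from Table 1


def sgd_step(w: float, grad: float, velocity: float) -> tuple[float, float]:
    """One SGD-with-momentum step with L2 weight decay folded into the gradient."""
    g = grad + WEIGHT_DECAY * w         # weight decay pulls w toward zero
    velocity = MOMENTUM * velocity + g  # undampened momentum buffer
    return w - LR * velocity, velocity


w, v = 1.0, 0.0
for step in range(3):
    w, v = sgd_step(w, grad=0.2, velocity=v)
    print(step, round(w, 6))
```

With a constant gradient the momentum buffer grows from step to step, so later updates are larger than the first one.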

Table 2. Ablation experiment results

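| Model | Modules added (Dynamic head / RSGCSP (SGConv) / DeltaIoU loss) | P | R | mAP50-95 | FPS |
|---|---|---|---|---|---|
| A | none | 0.960 | 0.925 | 0.686 | 206 |
| B | √ | 0.966 | 0.923 | 0.706 | 128 |
| C | √ | 0.977 | 0.929 | 0.691 | 210 |
| D | √ | 0.970 | 0.928 | 0.680 | 216 |
| E | √ √ | 0.960 | 0.931 | 0.702 | 136 |
| F | √ √ | 0.980 | 0.926 | 0.707 | 198 |
| G | √ √ | 0.985 | 0.930 | 0.711 | 115 |
| H | √ √ √ | 0.975 | 0.941 | 0.724 | 143 |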
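The gains in Table 2 can be checked directly. The snippet below recomputes each configuration's mAP50-95 change relative to baseline A in percentage points, which is how the 3.8-point improvement of the full model H is obtained, and also expresses that improvement as a relative gain.

```python
MAP50_95 = {"A": 0.686, "B": 0.706, "C": 0.691, "D": 0.680,
            "E": 0.702, "F": 0.707, "G": 0.711, "H": 0.724}  # values from Table 2


def gains_over_baseline(scores: dict[str, float], baseline: str = "A") -> dict[str, float]:
    """mAP50-95 difference from the baseline, in percentage points."""
    base = scores[baseline]
    return {name: round((value - base) * 100, 1) for name, value in scores.items()}


gains = gains_over_baseline(MAP50_95)
print(gains["H"])  # 3.8
relative = (MAP50_95["H"] - MAP50_95["A"]) / MAP50_95["A"] * 100
print(round(relative, 1))  # 5.5
```

The absolute gain (3.8 points) and the relative gain (about 5.5%) are different quantities, so it helps to state which one is meant when reporting improvements.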

Table 3. Comparison experiment results

| Model | P | R | mAP50-95 | Parameters/M | GFLOPs | FPS |
|---|---|---|---|---|---|---|
| Faster R-CNN | 0.961 | 0.919 | 0.652 | / | / | 85 |
| YOLOv3s[24] | 0.981 | 0.931 | 0.687 | 15.32 | 43.8 | 147 |
| YOLOv5s | 0.965 | 0.927 | 0.683 | 9.11 | 23.8 | 194 |
| YOLOv6s[25] | 0.960 | 0.914 | 0.681 | 16.3 | 44.0 | 192 |
| RT-DETR | 0.964 | 0.895 | 0.623 | 10.56 | 23.9 | 131 |
| YOLOv9m[26] | 0.952 | 0.927 | 0.685 | 20.02 | 76.5 | 89 |
| YOLOv10s[27] | 0.947 | 0.876 | 0.684 | 8.04 | 24.5 | 182 |
| DBH-YOLO | 0.975 | 0.918 | 0.643 | 20.86 | 47.9 | 128 |
| RM-YOLO (ours) | 0.975 | 0.941 | 0.724 | 7.4 | 20.7 | 143 |

References

[1] Ma L, Fei B W. Comprehensive review of surgical microscopes: technology development and medical applications[J]. J Biomed Opt, 2021, 26(1): 010901. doi: 10.1117/1.JBO.26.1.010901

[2] Ehlers J P, Dupps W J, Kaiser P K, et al. The prospective intraoperative and perioperative ophthalmic ImagiNg with optical CoherEncE TomogRaphy (PIONEER) study: 2-year results[J]. Am J Ophthalmol, 2014, 158(5): 999−1007.e1. doi: 10.1016/j.ajo.2014.07.034

[3] Ravasio C S, Pissas T, Bloch E, et al. Learned optical flow for intra-operative tracking of the retinal fundus[J]. Int J Comput Assist Radiol Surg, 2020, 15(5): 827−836. doi: 10.1007/s11548-020-02160-9

[4] Li Y Y, Fan J Y, Jiang T L, et al. Review of the development of optical coherence tomography imaging navigation technology in ophthalmic surgery[J]. Opto-Electron Eng, 2023, 50(1): 220027 (in Chinese). doi: 10.12086/oee.2023.220027

[5] Yang J W, Huang J J, He Y, et al. Image quality optimization of line-focused spectral domain optical coherence tomography with subsection dispersion compensation[J]. Opto-Electron Eng, 2024, 51(6): 240042 (in Chinese). doi: 10.12086/oee.2024.240042

[6] Bouget D, Allan M, Stoyanov D, et al. Vision-based and marker-less surgical tool detection and tracking: a review of the literature[J]. Med Image Anal, 2017, 35: 633−654. doi: 10.1016/j.media.2016.09.003

[7] Allan M, Ourselin S, Thompson S, et al. Toward detection and localization of instruments in minimally invasive surgery[J]. IEEE Trans Biomed Eng, 2013, 60(4): 1050−1058. doi: 10.1109/TBME.2012.2229278

[8] Alsheakhali M, Yigitsoy M, Eslami A, et al. Real time medical instrument detection and tracking in microsurgery[C]//Proceedings of the Algorithmen-Systeme-Anwendungen on Bildverarbeitung für die Medizin, Lübeck, 2015: 185–190. doi: 10.1007/978-3-662-46224-9_33

[9] Sznitman R, Richa R, Taylor R H, et al. Unified detection and tracking of instruments during retinal microsurgery[J]. IEEE Trans Pattern Anal Mach Intell, 2013, 35(5): 1263−1273. doi: 10.1109/TPAMI.2012.209

[10] Sun Y W, Pan B, Fu Y L. Lightweight deep neural network for articulated joint detection of surgical instrument in minimally invasive surgical robot[J]. J Digit Imaging, 2022, 35(4): 923−937. doi: 10.1007/s10278-022-00616-9

[11] Girshick R. Fast R-CNN[C]//Proceedings of 2015 IEEE International Conference on Computer Vision, Santiago, 2015: 1440–1448. doi: 10.1109/ICCV.2015.169

[12] Ren S Q, He K M, Girshick R, et al. Faster R-CNN: towards real-time object detection with region proposal networks[J]. IEEE Trans Pattern Anal Mach Intell, 2017, 39(6): 1137−1149. doi: 10.1109/TPAMI.2016.2577031

[13] Redmon J, Divvala S, Girshick R, et al. You only look once: unified, real-time object detection[C]//Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, 2016. doi: 10.1109/CVPR.2016.91

[14] Sarikaya D, Corso J J, Guru K A. Detection and localization of robotic tools in robot-assisted surgery videos using deep neural networks for region proposal and detection[J]. IEEE Trans Med Imaging, 2017, 36(7): 1542−1549. doi: 10.1109/TMI.2017.2665671

[15] Zhang B B, Wang S S, Dong L Y, et al. Surgical tools detection based on modulated anchoring network in laparoscopic videos[J]. IEEE Access, 2020, 8: 23748−23758. doi: 10.1109/ACCESS.2020.2969885

[16] Pan X Y, Bi M R, Wang H, et al. DBH-YOLO: a surgical instrument detection method based on feature separation in laparoscopic surgery[J]. Int J Comput Assist Radiol Surg, 2024, 19(11): 2215−2225. doi: 10.1007/s11548-024-03115-0

[17] Zhao Z J, Chen Z R, Voros S, et al. Real-time tracking of surgical instruments based on spatio-temporal context and deep learning[J]. Comput Assist Surg, 2019, 24(S1): 20−29. doi: 10.1080/24699322.2018.1560097

[18] Dai X Y, Chen Y P, Xiao B, et al. Dynamic head: unifying object detection heads with attentions[C]//Proceedings of 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, 2021: 7373–7382. doi: 10.1109/CVPR46437.2021.00729

[19] Han K, Wang Y H, Tian Q, et al. GhostNet: more features from cheap operations[C]//Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, 2020: 1580–1589. doi: 10.1109/CVPR42600.2020.00165

[20] Li H L, Li J, Wei H B, et al. Slim-neck by GSConv: a lightweight-design for real-time detector architectures[J]. J Real Time Image Process, 2024, 21(3): 62. doi: 10.1007/s11554-024-01436-6

[21] Ding X H, Zhang X Y, Ma N N, et al. RepVGG: making VGG-style ConvNets great again[C]//Proceedings of 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, 2021: 13733–13742. doi: 10.1109/CVPR46437.2021.01352

[22] Ma S L, Xu Y. MPDIoU: a loss for efficient and accurate bounding box regression[Z]. arXiv: 2307.07662, 2023. doi: 10.48550/arXiv.2307.07662

[23] Zhao Y, Lv W Y, Xu S L, et al. DETRs beat YOLOs on real-time object detection[C]//Proceedings of 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, 2024: 16965–16974. doi: 10.1109/CVPR52733.2024.01605

[24] Redmon J, Farhadi A. YOLOv3: an incremental improvement[Z]. arXiv: 1804.02767, 2018. doi: 10.48550/arXiv.1804.02767

[25] Li C Y, Li L L, Jiang H L, et al. YOLOv6: a single-stage object detection framework for industrial applications[Z]. arXiv: 2209.02976, 2022. doi: 10.48550/arXiv.2209.02976

[26] Wang C Y, Yeh I H, Liao H Y M. YOLOv9: learning what you want to learn using programmable gradient information[C]//Proceedings of the 18th European Conference on Computer Vision, Milan, 2025. doi: 10.1007/978-3-031-72751-1_1

[27] Wang A, Chen H, Liu L H, et al. YOLOv10: real-time end-to-end object detection[Z]. arXiv: 2405.14458, 2024. doi: 10.48550/arXiv.2405.14458
