
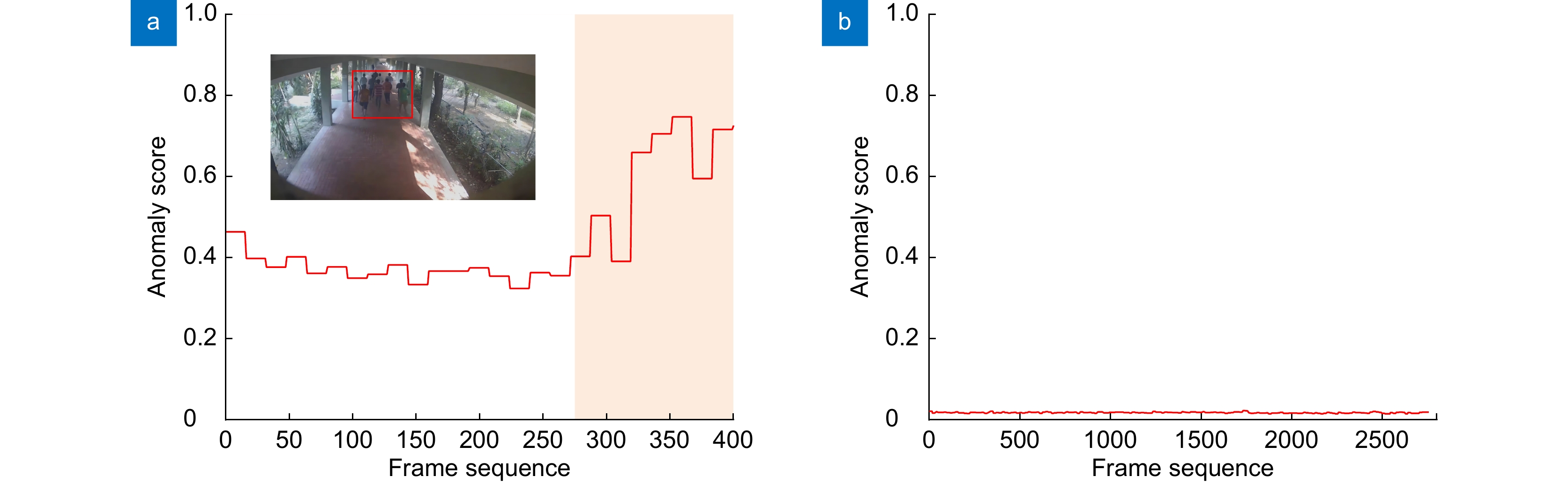

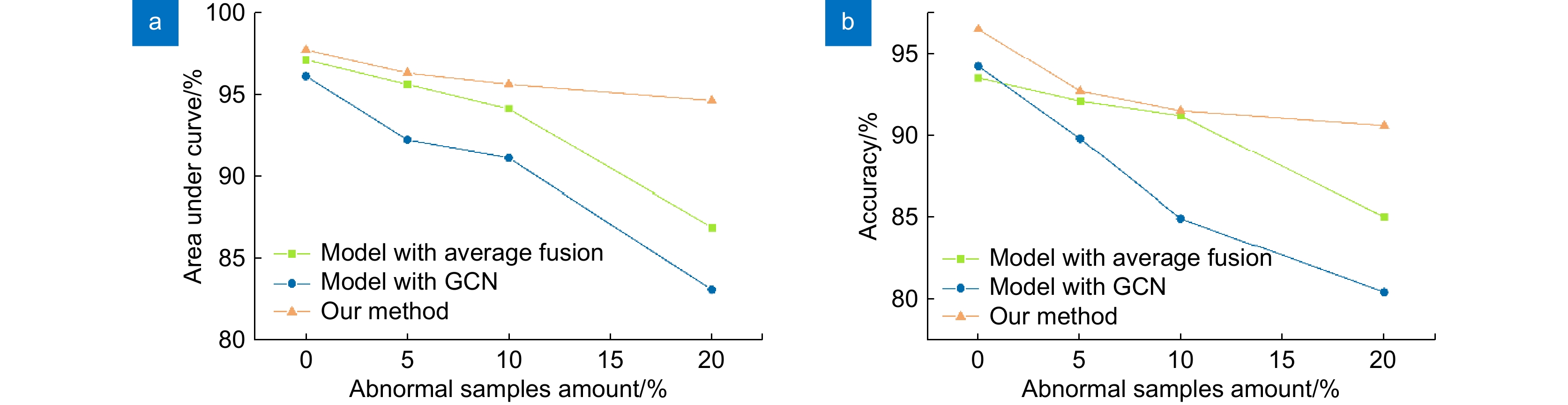

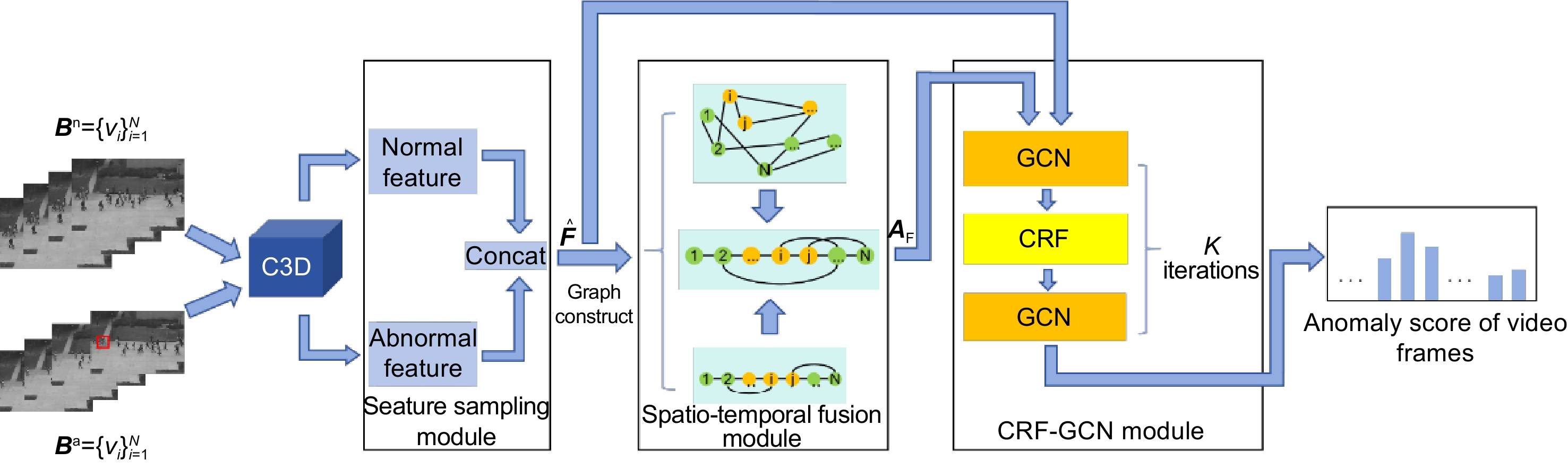

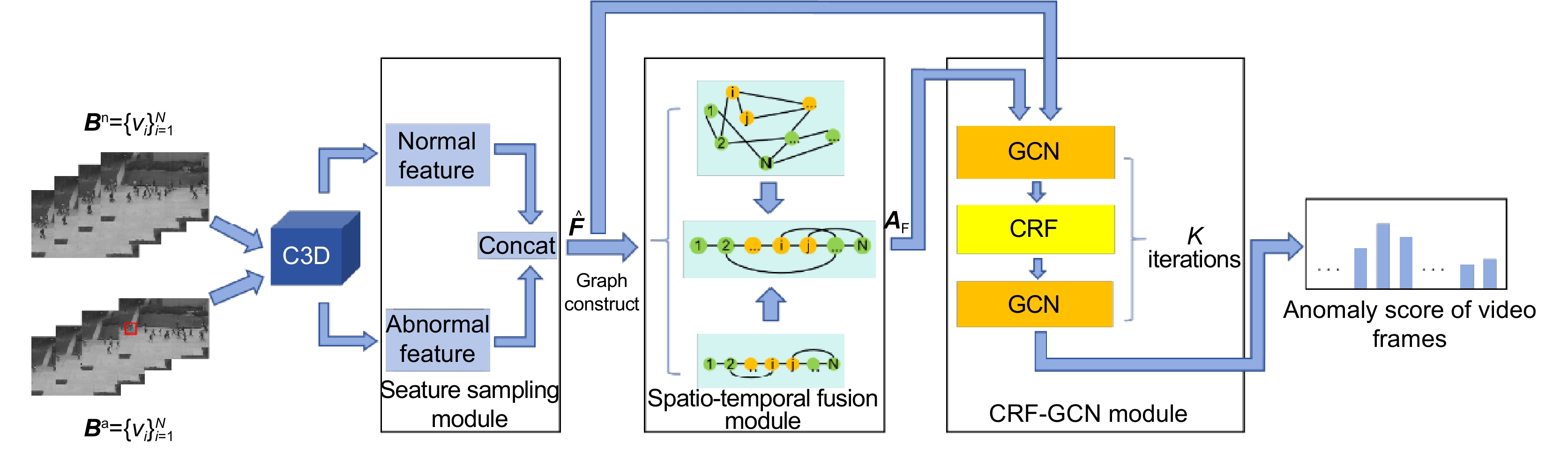


Abstract

A video anomaly detection method based on an improved spatio-temporal graph convolutional network is proposed to accurately capture the spatio-temporal interactions of objects in anomalous events. A conditional random field (CRF) is introduced into the graph convolutional network: by exploiting the correlations between features of different frames, it models the interactions between spatio-temporal features across frames and captures their contextual relationships. On this basis, a spatial similarity graph and a temporal dependency graph are constructed with video segments as nodes, and the two graphs are adaptively fused to learn spatio-temporal video features, which improves detection accuracy. Experiments on three video anomaly event datasets, UCSD Ped2, ShanghaiTech, and IITB-Corridor, yield frame-level AUC values of 97.7%, 90.4%, and 86.0% and accuracies of 96.5%, 88.6%, and 88.0%, respectively.
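The abstract does not spell out how edge weights are defined for the two graphs. As a minimal sketch, assuming cosine similarity between segment features for the spatial similarity graph and an exponential decay over temporal distance for the temporal dependency graph (similar in spirit to the feature-similarity and temporal-consistency graphs of Zhong et al. [27]), the two adjacency matrices could be built as follows. The function names, the toy features, and the `sigma` parameter are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity of two segment feature vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0
    return dot / (norm_u * norm_v)


def spatial_similarity_graph(features: List[List[float]]) -> Matrix:
    """Hypothetical spatial graph: edge weight = cosine similarity, negatives clipped to 0."""
    n = len(features)
    return [[max(0.0, cosine(features[i], features[j])) for j in range(n)] for i in range(n)]


def temporal_dependency_graph(n: int, sigma: float = 1.0) -> Matrix:
    """Hypothetical temporal graph: weight decays with segment distance, exp(-|i - j| / sigma)."""
    return [[math.exp(-abs(i - j) / sigma) for j in range(n)] for i in range(n)]


# Toy example: 3 segments with 2-D features (real features would come from a video backbone).
feats = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]]
A_s = spatial_similarity_graph(feats)
A_t = temporal_dependency_graph(len(feats))
```

The spatial graph links segments that look alike wherever they occur in the video, while the temporal graph links segments that are close in time regardless of appearance; keeping them separate lets the later fusion step weigh the two views.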


Overview

Video surveillance systems are increasingly deployed in public places and play an important role in maintaining public security and social stability. However, the collection and labeling of anomalous videos are affected by subjective factors, so the available data usually carry only video-level labels and lack finer-grained information. This limits intelligent video analysis, particularly anomaly detection, where richer data are needed to improve model performance.

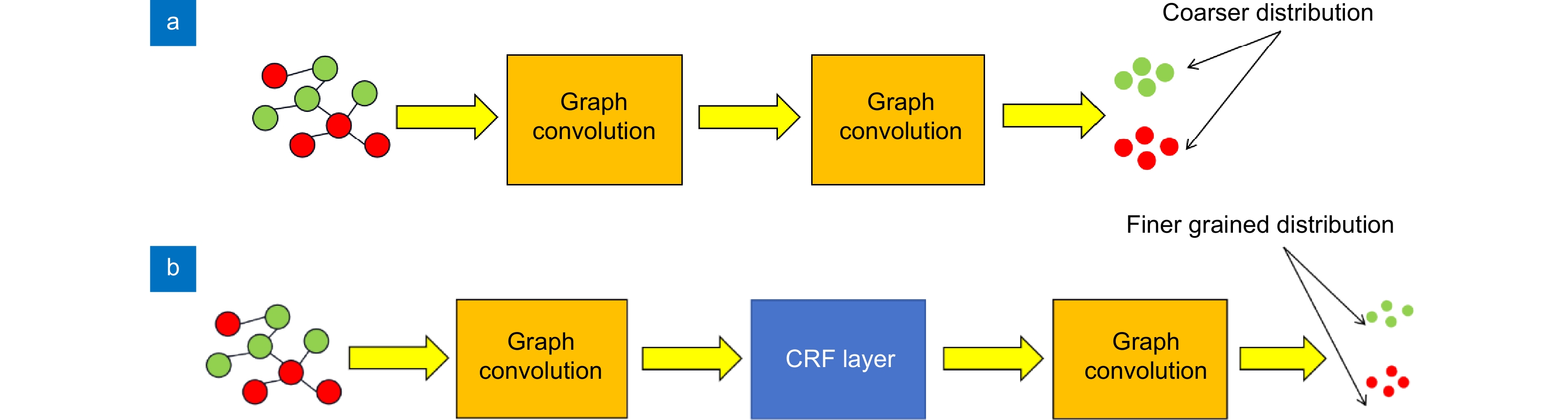

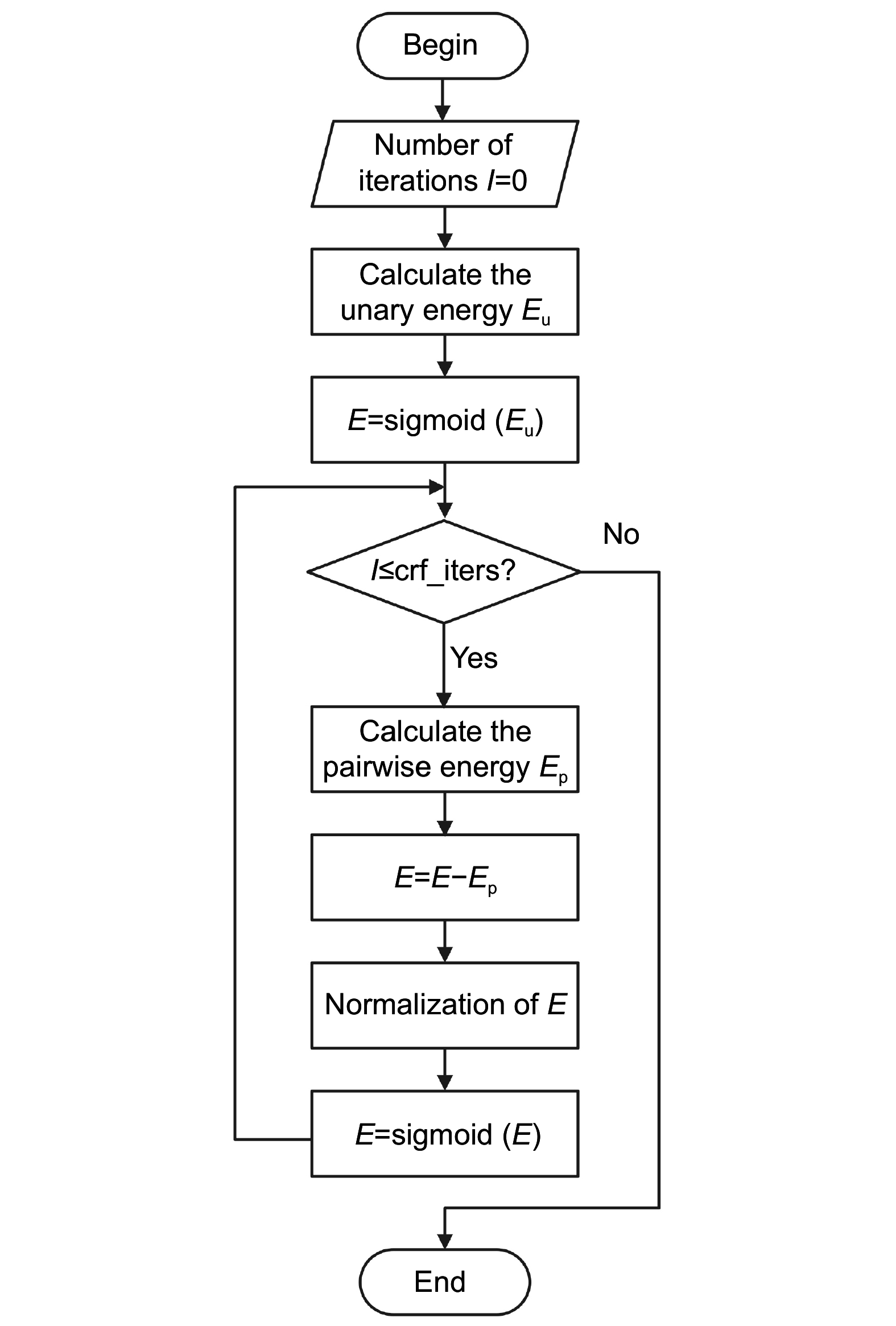

Video data are typical spatio-temporal data, and the spatio-temporal features exhibited by abnormal events in a video are strongly correlated. The relationships between video segments can be captured by introducing graph structures from both a temporal and a spatial perspective, but conventional convolution cannot be applied directly to graphs. Although graph convolutional networks (GCNs) process graph-structured data effectively, they remain limited in capturing the intrinsic relationships between objects in neighboring frames, especially the complex spatio-temporal dependencies between frames of a video sequence.

To model the spatio-temporal correlations of video segments more appropriately under the graph structure, and thus detect and localize video anomalies effectively, this paper proposes a video anomaly detection method based on an improved spatio-temporal graph convolutional network. Each clip in the video is treated as a node, and two key graph models are constructed: a spatial similarity graph and a temporal dependency graph. Video features are then learned by adaptively fusing the two graphs, taking the spatio-temporal connections between clips into account. Because anomalous events can arise from spatio-temporal interactions among multiple objects, and conditional random fields (CRFs) are well suited to graph modeling, a CRF layer is introduced into the GCN to model the interactions between spatio-temporal features across frames and capture their contextual relationships, which improves the detection accuracy of the model.
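A graph convolution in the standard form of Kipf and Welling [11], `H' = ReLU(D^-1/2 (A + I) D^-1/2 H W)`, can be run on each of the two graphs, and the branch outputs then fused. The exact fusion rule is not given in this overview, so the sketch below assumes a hypothetical scheme in which two learnable logits are softmax-normalized into branch weights; all names, weights, and toy matrices are illustrative only.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (n x k) and a (k x m) list-of-lists matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def normalize_adjacency(adj: Matrix) -> Matrix:
    """Symmetric GCN normalization D^-1/2 (A + I) D^-1/2 (self-loops keep every degree >= 1)."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(adj: Matrix, h: Matrix, w: Matrix) -> Matrix:
    """One graph convolution over segment nodes: ReLU(A_norm @ H @ W)."""
    out = matmul(matmul(normalize_adjacency(adj), h), w)
    return [[max(0.0, v) for v in row] for row in out]


def adaptive_fusion(h_s: Matrix, h_t: Matrix, logit_s: float, logit_t: float) -> Matrix:
    """Hypothetical adaptive fusion: softmax over two learnable logits weights the branches."""
    m = max(logit_s, logit_t)  # subtract the max for numerical stability
    e_s, e_t = math.exp(logit_s - m), math.exp(logit_t - m)
    a_s, a_t = e_s / (e_s + e_t), e_t / (e_s + e_t)
    return [[a_s * x + a_t * y for x, y in zip(row_s, row_t)] for row_s, row_t in zip(h_s, h_t)]


# Toy usage: 2 segments, 2-D features, identity weights, hypothetical graphs.
H = [[1.0, 0.0], [0.0, 1.0]]
W = [[1.0, 0.0], [0.0, 1.0]]
A_s = [[1.0, 0.5], [0.5, 1.0]]    # stand-in spatial similarity graph
A_t = [[1.0, 0.37], [0.37, 1.0]]  # stand-in temporal dependency graph
H_fused = adaptive_fusion(gcn_layer(A_s, H, W), gcn_layer(A_t, H, W), 0.3, -0.1)
```

With equal logits the fusion reduces to plain averaging of the two branches; learning the logits lets the model lean toward whichever graph is more informative for a given dataset.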

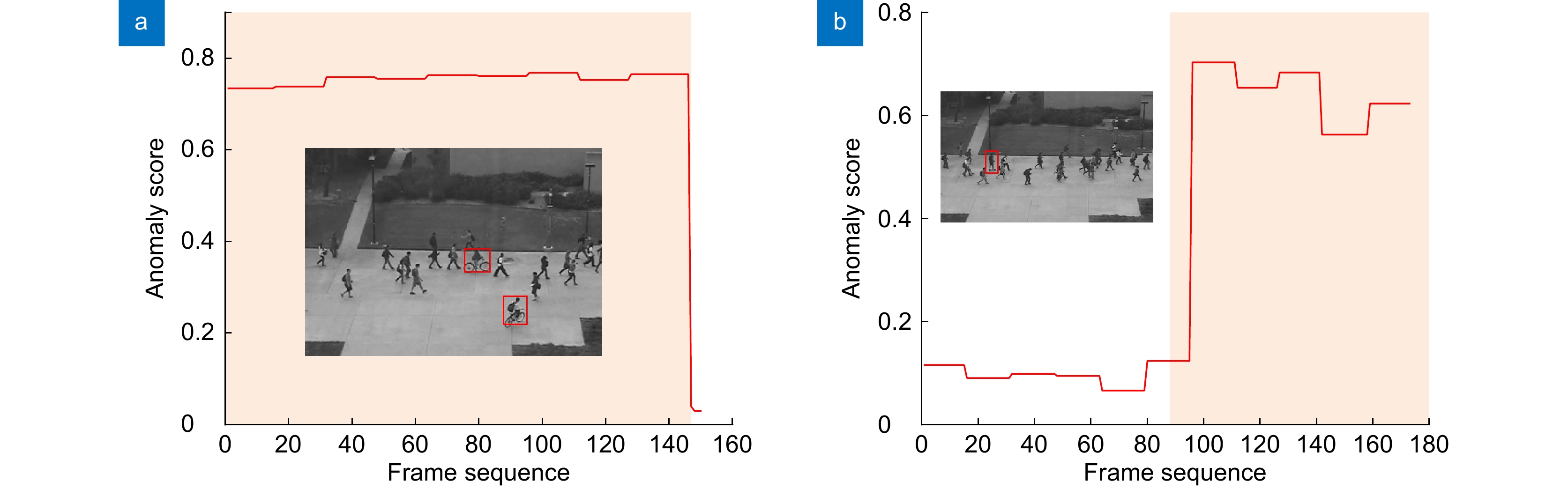

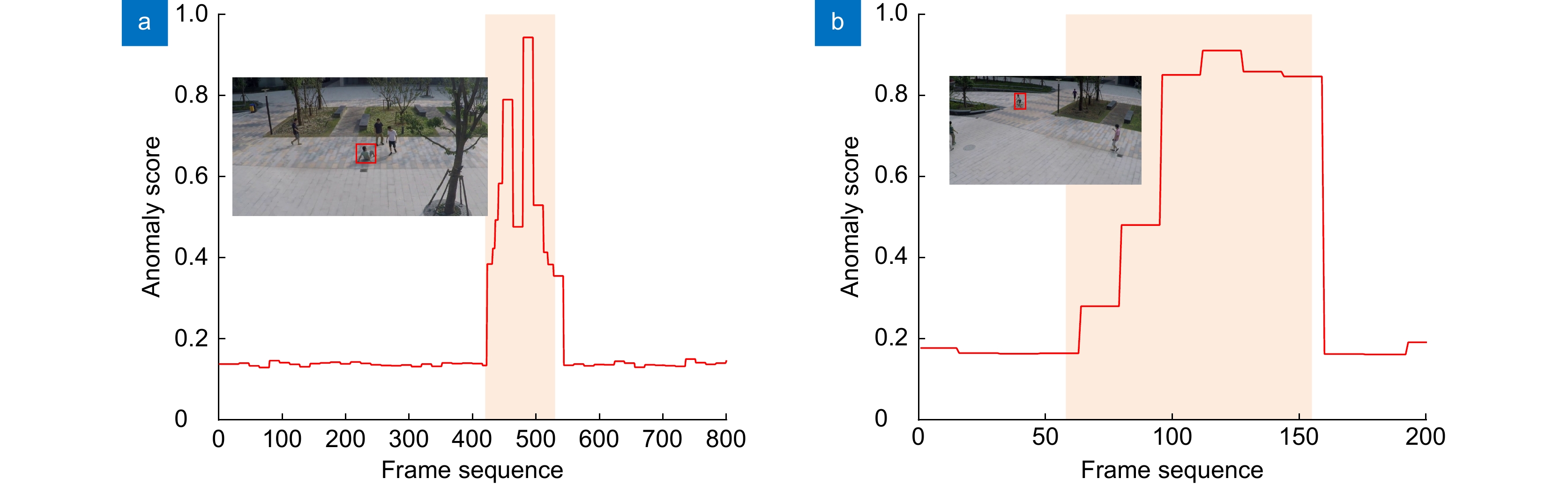
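The overview states that a CRF layer is inserted into the GCN but does not give its form. A minimal sketch, assuming a Gaussian pairwise CRF over segment features similar in spirit to the CRF layer of Gao et al. [21]: each refined feature `h_i` stays close to its GCN output `b_i` while being pulled toward the features of similar segments, weighted by `g_ij`. The update solves the stationarity condition of the energy `sum_i alpha*||h_i - b_i||^2 + (beta/2) * sum_ij g_ij*||h_i - h_j||^2` for symmetric non-negative `g`; with `alpha > 0` the resulting linear system is strictly diagonally dominant, so the parallel (Jacobi-style) iteration converges. `alpha`, `beta`, `g`, and `iters` are hypothetical parameters.

```python
from typing import List

Matrix = List[List[float]]


def crf_refine(b: Matrix, sim: Matrix, alpha: float = 1.0, beta: float = 1.0,
               iters: int = 3) -> Matrix:
    """Hypothetical CRF smoothing of GCN node features.

    b:   GCN output features, one row per video segment (unary term).
    sim: non-negative pairwise weights g_ij between segments (pairwise term).
    Each iteration applies, to all nodes in parallel,
        h_i <- (alpha * b_i + beta * sum_j g_ij h_j) / (alpha + beta * sum_j g_ij)
    """
    n, d = len(b), len(b[0])
    h = [row[:] for row in b]  # start from the GCN output
    for _ in range(iters):
        new_h = []
        for i in range(n):
            # Self-pairs are dropped: g_ii only contributes a zero-distance term.
            weights = [sim[i][j] if j != i else 0.0 for j in range(n)]
            denom = alpha + beta * sum(weights)
            new_h.append([
                (alpha * b[i][k] + beta * sum(weights[j] * h[j][k] for j in range(n))) / denom
                for k in range(d)
            ])
        h = new_h
    return h
```

Larger `beta` smooths features more strongly across similar segments, which is the contextual effect the CRF layer is meant to add; `beta = 0` returns the GCN features unchanged.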

Experiments were conducted on three video anomaly event datasets: UCSD Ped2, ShanghaiTech, and IITB-Corridor. The method achieves frame-level AUC values of 97.7%, 90.4%, and 86.0%, respectively, and the experimental results verify its effectiveness.
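Frame-level AUC is the area under the ROC curve computed from per-frame anomaly scores against frame-level ground truth. A minimal sketch, assuming segment scores are copied uniformly to the frames each segment covers (the `frames_per_segment` value and all function names are hypothetical), computes AUC through its equivalent Mann-Whitney form: the probability that a randomly chosen anomalous frame scores higher than a randomly chosen normal one, with ties counting one half. The 0.5 threshold used for accuracy is also an assumption, since no threshold is stated here.

```python
from typing import List, Sequence


def segment_to_frame_scores(seg_scores: Sequence[float], frames_per_segment: int,
                            n_frames: int) -> List[float]:
    """Hypothetical uniform split: repeat each segment score over the frames it covers."""
    frames = [s for s in seg_scores for _ in range(frames_per_segment)]
    # Pad with the last score if the segments do not cover every frame, then trim.
    if frames and len(frames) < n_frames:
        frames += [frames[-1]] * (n_frames - len(frames))
    return frames[:n_frames]


def frame_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """ROC AUC via the Mann-Whitney statistic; labels: 1 = anomalous frame, 0 = normal."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs both anomalous and normal frames")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))


def frame_accuracy(scores: Sequence[float], labels: Sequence[int],
                   threshold: float = 0.5) -> float:
    """Fraction of frames whose thresholded prediction matches the label (threshold assumed)."""
    preds = [1 if s >= threshold else 0 for s in scores]
    return sum(int(p == y) for p, y in zip(preds, labels)) / len(labels)
```

The pairwise loop is O(P x N); for datasets with hundreds of thousands of frames, a rank-based computation or `sklearn.metrics.roc_auc_score` is the practical choice.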


Table 1. UCSD Ped2, ShanghaiTech and IITB-Corridor datasets

| Dataset | Frames | Year | Annotation | Resolution | Anomaly types |
|---|---|---|---|---|---|
| UCSD Ped2 | 4560 | 2010 | Frame-level | 360×240 | Cycling, small vehicles |
| ShanghaiTech | 317398 | 2016 | Frame-level | 480×856 | Cycling, fare evasion, fighting |
| IITB-Corridor | 483566 | 2020 | Frame-level | 1920×1080 | Protests, fighting, chasing, etc. |

Table 2. Comparison of different methods on the UCSD Ped2 dataset

Table 3. Comparison of different methods on the ShanghaiTech dataset

Table 4. Comparison of different methods on the IITB-Corridor dataset

Table 5. Comparison of algorithm complexity of different methods

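Table 6. Results of ablation experiments

| Temporal dependency graph | Spatial similarity graph | Graph fusion method | CRF | AUC/% | Accuracy/% |
|---|---|---|---|---|---|
| √ |  | - |  | 96.6 | 96.2 |
|  | √ | - |  | 97.1 | 96.1 |
| √ | √ | Average fusion [29] |  | 89.2 | 86.9 |
| √ | √ | Adaptive spatio-temporal fusion |  | 96.1 | 94.2 |
| √ | √ | Adaptive spatio-temporal fusion | √ | 97.7 | 96.5 |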

References

[1] Gong Y L, Zhang X X, Chen S. Survey on deep learning approach for video anomaly detection[J]. Data Commun, 2023(3): 45−49. doi: 10.3969/j.issn.1002-5057.2023.03.012 (in Chinese)

[2] Wang X G, Yan Y L, Tang P, et al. Revisiting multiple instance neural networks[J]. Pattern Recognit, 2018, 74: 15−24. doi: 10.1016/j.patcog.2017.08.026

[3] Zhou Z H, Sun Y Y, Li Y F. Multi-instance learning by treating instances as non-I.I.D. samples[C]//Proceedings of the 26th Annual International Conference on Machine Learning, Montreal, 2009: 1249–1256. https://doi.org/10.1145/1553374.1553534.

[4] Cheng W, Chen Z B, Li Q Q, et al. Multiple object tracking with aligned spatial-temporal feature[J]. Opto-Electron Eng, 2023, 50(6): 230009. doi: 10.12086/oee.2023.230009 (in Chinese)

[5] Li J, Liu Y, Zou L. A dynamic graph convolutional network based on spatial-temporal modeling[J]. Acta Sci Nat Univ Pekins, 2021, 57(4): 605−613. doi: 10.13209/j.0479-8023.2021.052 (in Chinese)

[6] Lv J, Wang Z Y, Liang H C. Boundary attention assisted dynamic graph convolution for retinal vascular segmentation[J]. Opto-Electron Eng, 2023, 50(1): 220116. doi: 10.12086/oee.2023.220116 (in Chinese)

[7] Sultani W, Chen C, Shah M. Real-world anomaly detection in surveillance videos[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, 2018: 6479–6488. https://doi.org/10.1109/CVPR.2018.00678.

[8] Zhang J G, Qing L Y, Miao J. Temporal convolutional network with complementary inner bag loss for weakly supervised anomaly detection[C]//2019 IEEE International Conference on Image Processing (ICIP), Taipei, China, 2019: 4030–4034. https://doi.org/10.1109/ICIP.2019.8803657.

[9] Li S, Liu F, Jiao L C. Self-training multi-sequence learning with transformer for weakly supervised video anomaly detection[C]//Proceedings of the 36th AAAI Conference on Artificial Intelligence, 2022: 1395–1403. https://doi.org/10.1609/aaai.v36i2.20028.

[10] Liang W J, Zhang J M, Zhan Y Z. Weakly supervised video anomaly detection based on spatial–temporal feature fusion enhancement[J]. Signal, Image Video Process, 2024, 18(2): 1111−1118. doi: 10.1007/s11760-023-02828-0

[11] Kipf T N, Welling M. Semi-supervised classification with graph convolutional networks[C]//Proceedings of the 5th International Conference on Learning Representations, Toulon, 2017.

[12] Zhou H, Zhan Y Z, Mao Q R. Video anomaly detection based on space-time fusion graph network learning[J]. J Comput Res Dev, 2021, 58(1): 48−59. doi: 10.7544/issn1000-1239202120200264 (in Chinese)

[13] Purwanto D, Chen Y T, Fang W H. Dance with self-attention: a new look of conditional random fields on anomaly detection in videos[C]//2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, 2021: 173–183. https://doi.org/10.1109/ICCV48922.2021.00024.

[14] Mu H Y, Sun R Z, Wang M, et al. Spatio-temporal graph-based CNNs for anomaly detection in weakly-labeled videos[J]. Inf Process Manage, 2022, 59(4): 102983. doi: 10.1016/j.ipm.2022.102983

[15] Liu M T, Li X R, Liu Y G, et al. Weakly supervised anomaly detection with multi-level contextual modeling[J]. Multimedia Syst, 2023, 29(4): 2153−2164. doi: 10.1007/s00530-023-01093-y

[16] Cheng K, Zeng X H, Liu Y, et al. Spatial-temporal graph convolutional network boosted flow-frame prediction for video anomaly detection[C]//ICASSP 2023–2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, 2023: 1–5. https://doi.org/10.1109/ICASSP49357.2023.10095170.

[17] Li X B, Wang W, Li Q Y, et al. Spatial-temporal graph-guided global attention network for video-based person re-identification[J]. Mach Vision Appl, 2024, 35(1): 8. doi: 10.1007/s00138-023-01489-w

[18] Wan B Y, Fang Y M, Xia X, et al. Weakly supervised video anomaly detection via center-guided discriminative learning[C]//2020 IEEE International Conference on Multimedia and Expo (ICME), London, 2020: 1–6. https://doi.org/10.1109/ICME46284.2020.9102722.

[19] Feng J C, Hong F T, Zheng W S. MIST: multiple instance self-training framework for video anomaly detection[C]//2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, 2021: 14004–14013. https://doi.org/10.1109/CVPR46437.2021.01379.

[20] Lafferty J D, McCallum A, Pereira F C N. Conditional random fields: probabilistic models for segmenting and labeling sequence data[C]//Proceedings of the Eighteenth International Conference on Machine Learning, San Francisco, 2001: 282–289.

[21] Gao H C, Pei J, Huang H. Conditional random field enhanced graph convolutional neural networks[C]//Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, 2019: 276–284. https://doi.org/10.1145/3292500.3330888.

[22] Krähenbühl P, Koltun V. Efficient inference in fully connected CRFs with Gaussian edge potentials[C]//Proceedings of the 24th International Conference on Neural Information Processing Systems, Granada, 2011: 109–117.

[23] Zhang J W, Zhang X L, Zhu Z Q, et al. Efficient combination graph model based on conditional random field for online multi-object tracking[J]. Complex Intell Syst, 2023, 9(3): 3261−3276. doi: 10.1007/s40747-022-00922-3

[24] Chen D Y, Wang P T, Yue L Y, et al. Anomaly detection in surveillance video based on bidirectional prediction[J]. Image Vision Comput, 2020, 98: 103915. doi: 10.1016/j.imavis.2020.103915

[25] Lu C W, Shi J P, Jia J Y. Abnormal event detection at 150 FPS in MATLAB[C]//Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, 2013: 2720–2727. https://doi.org/10.1109/ICCV.2013.338.

[26] Rodrigues R, Bhargava N, Velmurugan R, et al. Multi-timescale trajectory prediction for abnormal human activity detection[C]//Proceedings of the 2020 IEEE Winter Conference on Applications of Computer Vision, Snowmass, 2020: 2615–2623. https://doi.org/10.1109/WACV45572.2020.9093633.

[27] Zhong J X, Li N N, Kong W J, et al. Graph convolutional label noise cleaner: train a plug-and-play action classifier for anomaly detection[C]//2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, 2019: 1237–1246. https://doi.org/10.1109/CVPR.2019.00133.

[28] Hasan M, Choi J, Neumann J, et al. Learning temporal regularity in video sequences[C]//Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, 2016: 733–742. https://doi.org/10.1109/CVPR.2016.86.

[29] Gong D, Liu L Q, Le V, et al. Memorizing normality to detect anomaly: memory-augmented deep autoencoder for unsupervised anomaly detection[C]//Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, 2019: 1705–1714. https://doi.org/10.1109/ICCV.2019.00179.

[30] Yu G, Wang S Q, Cai Z P, et al. Cloze test helps: effective video anomaly detection via learning to complete video events[C]//Proceedings of the 28th ACM International Conference on Multimedia, Seattle, 2020: 583–591. https://doi.org/10.1145/3394171.3413973.

[31] Taghinezhad N, Yazdi M. A new unsupervised video anomaly detection using multi-scale feature memorization and multipath temporal information prediction[J]. IEEE Access, 2023, 11: 9295−9310. doi: 10.1109/ACCESS.2023.3237028

[32] Tian Y, Pang G S, Chen Y H, et al. Weakly-supervised video anomaly detection with robust temporal feature magnitude learning[C]//Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, Montreal, 2021: 4955–4966. https://doi.org/10.1109/ICCV48922.2021.00493.

[33] Chen H Y, Mei X, Ma Z Y, et al. Spatial–temporal graph attention network for video anomaly detection[J]. Image Vision Comput, 2023, 131: 104629. doi: 10.1016/j.imavis.2023.104629

[34] Wang L, Tian J W, Zhou S P, et al. Memory-augmented appearance-motion network for video anomaly detection[J]. Pattern Recognit, 2023, 138: 109335. doi: 10.1016/j.patcog.2023.109335

[35] Tur A O, Dall’Asen N, Beyan C, et al. Exploring diffusion models for unsupervised video anomaly detection[C]//2023 IEEE International Conference on Image Processing (ICIP), Kuala Lumpur, 2023: 2540–2544. https://doi.org/10.1109/ICIP49359.2023.10222594.

[36] Acsintoae A, Florescu A, Georgescu M I, et al. UBnormal: new benchmark for supervised open-set video anomaly detection[C]//Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, 2022: 20111–20121. https://doi.org/10.1109/CVPR52688.2022.01951.

[37] Zeng X L, Jiang Y L, Ding W R, et al. A hierarchical spatio-temporal graph convolutional neural network for anomaly detection in videos[J]. IEEE Trans Circuits Syst Video Technol, 2023, 33(1): 200−212. doi: 10.1109/TCSVT.2021.3134410

[38] Li J, Huang Q W, Du Y J, et al. Variational abnormal behavior detection with motion consistency[J]. IEEE Trans Image Process, 2022, 31: 275−286. doi: 10.1109/TIP.2021.3130545

[39] Cao C Q, Lu Y, Wang P, et al. A new comprehensive benchmark for semi-supervised video anomaly detection and anticipation[C]//Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, 2023: 20392–20401. https://doi.org/10.1109/CVPR52729.2023.01953.

[40] Majhi S, Dai R, Kong Q, et al. Human-Scene Network: a novel baseline with self-rectifying loss for weakly supervised video anomaly detection[J]. Comput Vis Image Underst, 2024, 241: 103955. doi: 10.1016/j.cviu.2024.103955

[41] Markovitz A, Sharir G, Friedman I, et al. Graph embedded pose clustering for anomaly detection[C]//Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, 2020: 10536–10544. https://doi.org/10.1109/CVPR42600.2020.01055.
