
Abstract

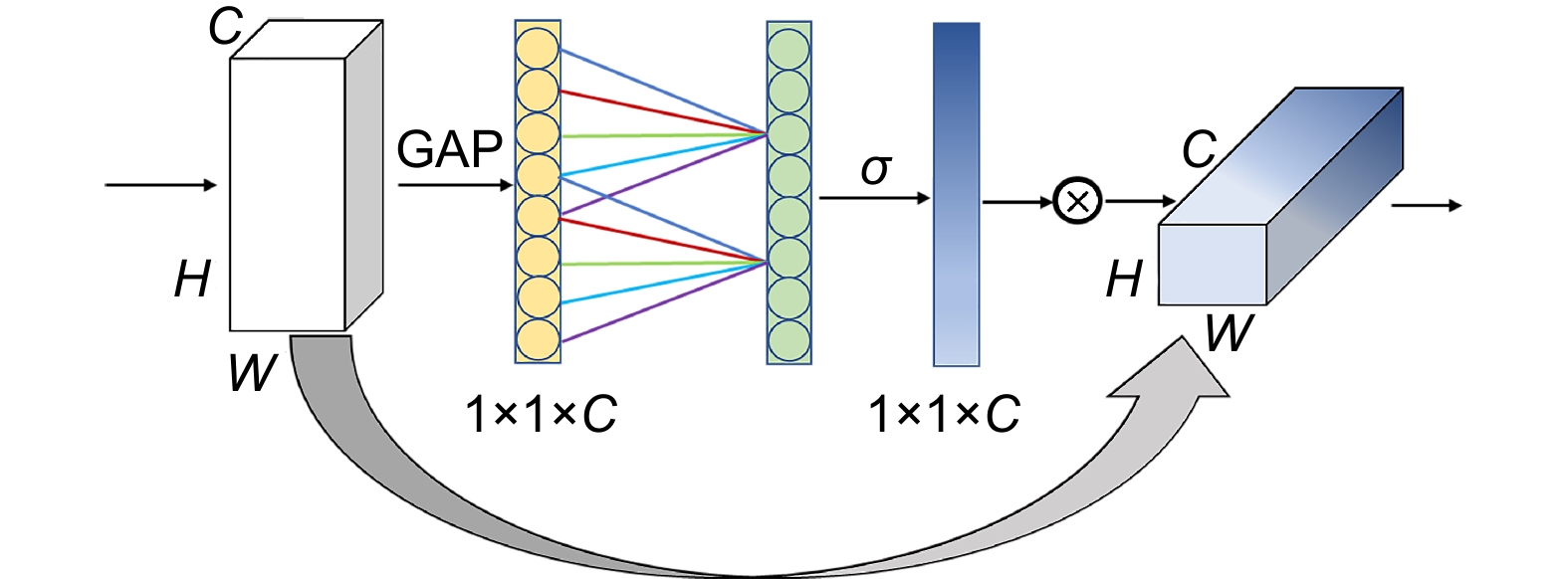

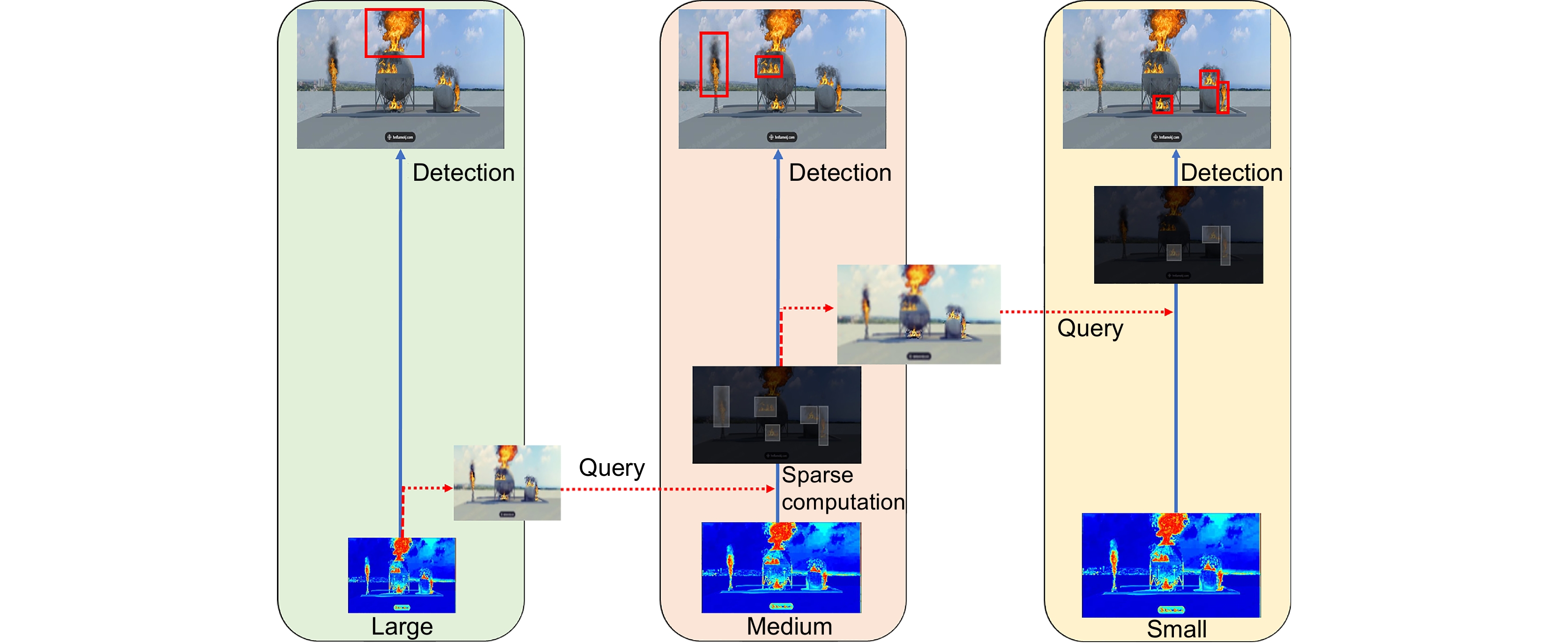

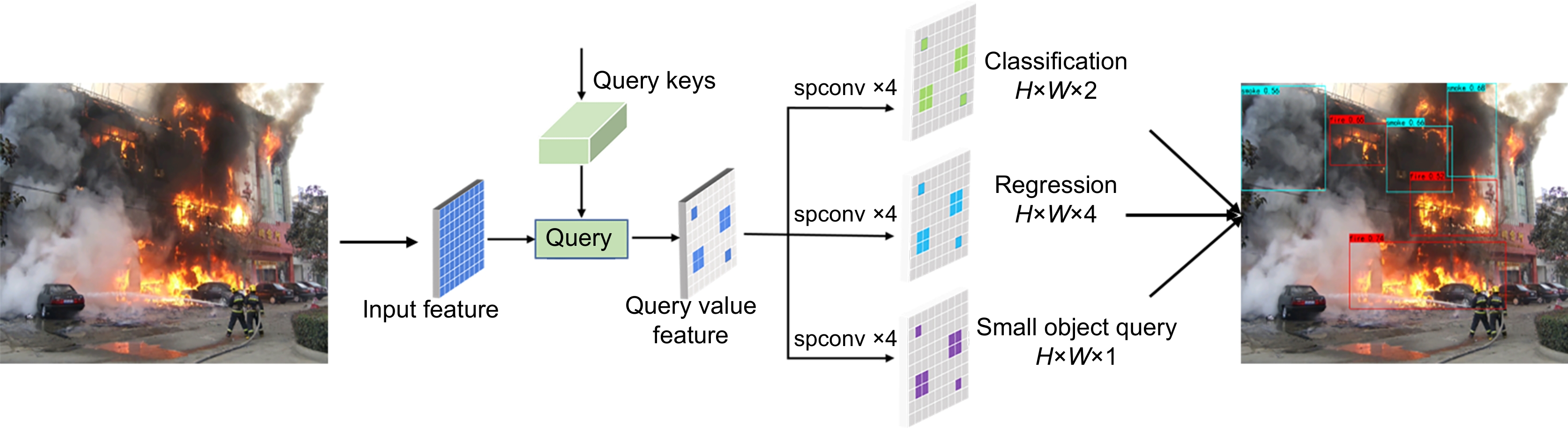

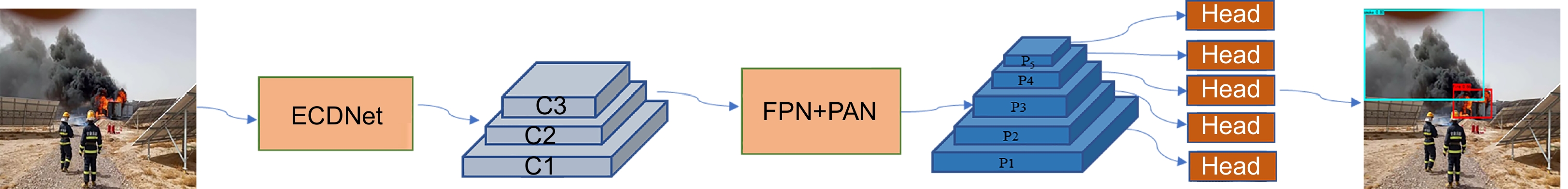

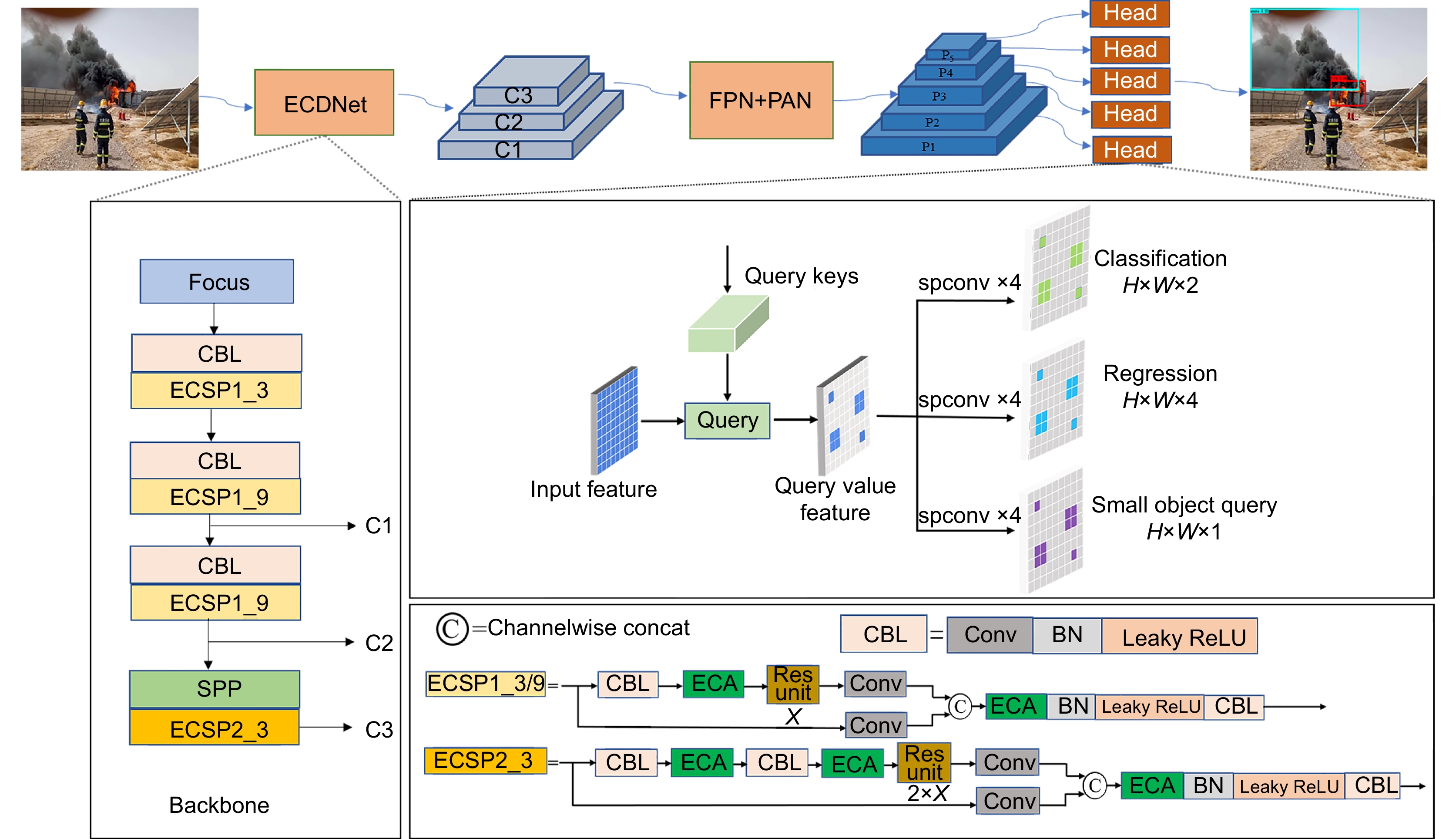

To address the complex models, slow detection speed, and high false-detection rates of existing fire detection algorithms, a lightweight fire detection network based on a cascade sparse query mechanism, called LFNet, is proposed. First, a lightweight feature extraction module, ECDNet, is built by embedding the lightweight attention module ECA (efficient channel attention) into the YOLOv5s backbone network. It extracts finer-grained features at different feature levels and addresses the multi-scale nature of flame and smoke in fire detection. Second, the deep feature extraction module FPN+PAN is used to process feature maps from different levels and fuse them across scales. Finally, an embedded lightweight cascade sparse query (CSQ) module improves the detection accuracy for small flames and thin smoke in early-stage fires. Experimental results on SF-dataset, D-fire, and FIRESENSE show that the proposed method achieves the best overall performance on objective metrics such as mAP and Precision. Furthermore, the model reaches high detection accuracy with a comparatively small number of parameters, so it can meet the requirements of fire detection in practical scenarios.
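The ECA step mentioned in the abstract can be illustrated with a minimal pure-Python sketch. It follows the general recipe of ECA-Net [14] (global average pooling, a 1D convolution across channels, a sigmoid gate, channel rescaling) and is not the authors' ECDNet code. The function names are hypothetical, and the uniform convolution weights stand in for weights that would normally be learned.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1D kernel size as in ECA-Net: nearest odd value of (log2(C) + b) / gamma."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 == 1 else t + 1


def eca_attention(feature_map, kernel_size=None):
    """Apply an ECA-style channel gate.

    feature_map: list of C channels, each an H x W list of lists of floats.
    Returns (rescaled feature map, per-channel gates).
    """
    channels = len(feature_map)
    k = kernel_size or eca_kernel_size(channels)

    # 1) Global average pooling: one scalar per channel.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_map]

    # 2) 1D convolution across neighbouring channels (zero padded).
    #    ASSUMPTION: uniform weights replace the learned kernel.
    weights = [1.0 / k] * k
    pad = k // 2
    padded = [0.0] * pad + pooled + [0.0] * pad
    conv = [sum(w * padded[c + i] for i, w in enumerate(weights)) for c in range(channels)]

    # 3) Sigmoid turns each response into a gate in (0, 1).
    gates = [1.0 / (1.0 + math.exp(-v)) for v in conv]

    # 4) Rescale every channel by its gate.
    scaled = [[[gates[c] * v for v in row] for row in ch] for c, ch in enumerate(feature_map)]
    return scaled, gates
```

Because the only parameters are the `k` kernel weights, a module of this kind adds very little to the model size, which is consistent with the abstract describing ECA as lightweight.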


Key words:

- object detection

- fire detection

- lightweight

- cascade sparse query

- Slimming


Overview

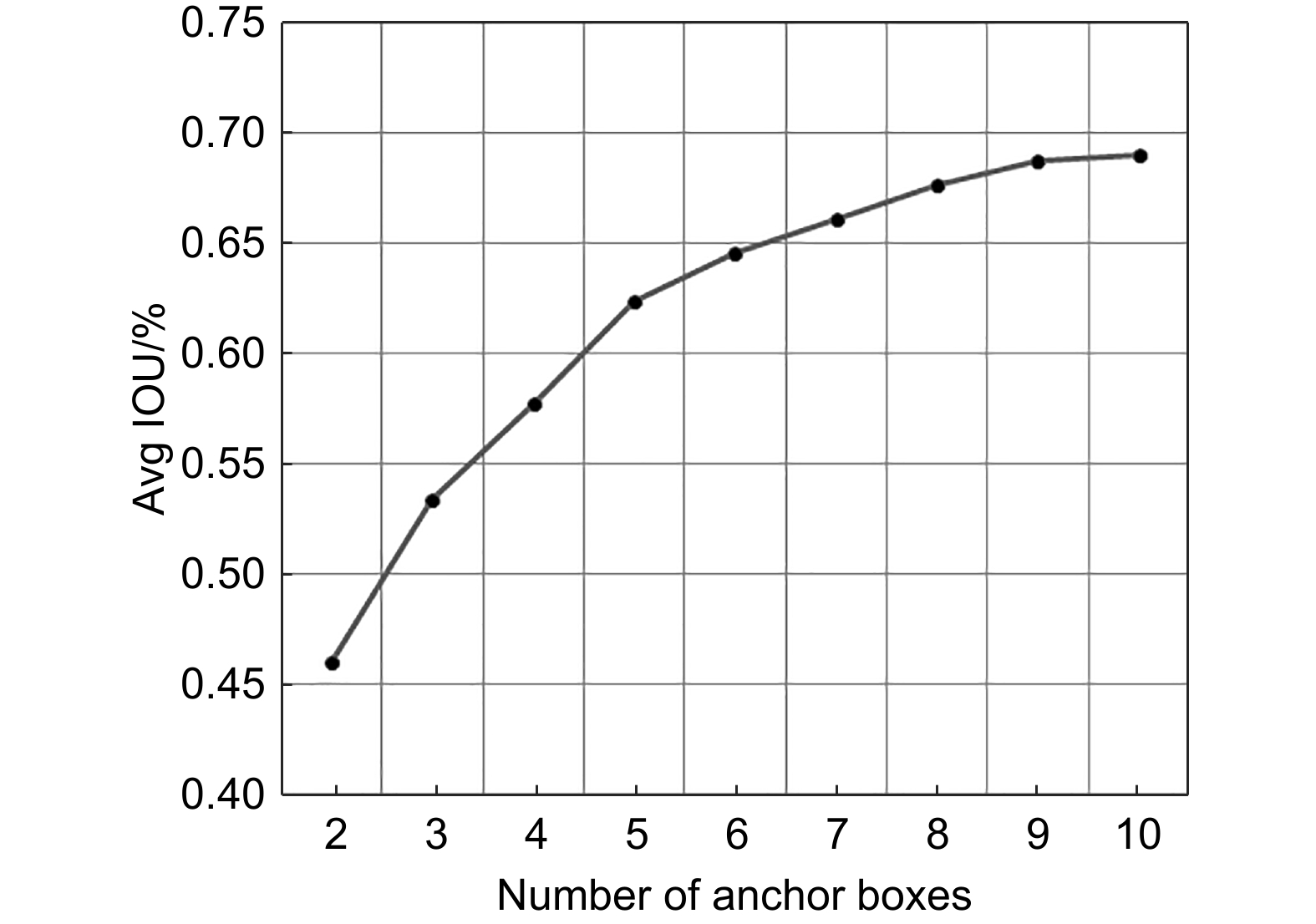

Visual fire detection aims to detect flames and smoke in images and videos with vision algorithms so that fire alarms can be raised. In recent years, fire detection algorithms based on convolutional neural networks have greatly improved the detection accuracy for flames and smoke. However, current methods still have the following problems: 1) the generalization ability of the models needs to be improved; 2) the detection accuracy for small objects is low; 3) a good trade-off between detection accuracy and speed has not been achieved. To overcome these problems, a lightweight fire detection algorithm based on a cascade sparse query mechanism, called LFNet, is proposed. First, a lightweight feature extraction module, ECDNet, is built by embedding the lightweight attention module ECA (Efficient Channel Attention) into the YOLOv5s backbone network. It extracts finer-grained features at different feature levels and addresses the multi-scale nature of flame and smoke in fire detection. Second, the deep feature extraction module FPN+PAN is adopted to improve the multi-scale fusion of feature maps from different levels. Finally, an embedded lightweight cascade sparse query (CSQ) module is applied to enhance the detection accuracy for small flames and thin smoke in early-stage fires. In addition, to further reduce the parameters and computation of the model, the Slimming pruning algorithm is used to shrink the model.

Experimental results on three fire datasets, SF-dataset, D-fire, and FIRESENSE, show that the proposed method achieves the best overall performance on objective metrics such as mAP and Recall. On SF-dataset, LFNet achieves the best mAP and Recall, 71.76% and 52.98%, respectively. On the D-fire dataset, the mAP of our method reaches 71.15%, the highest among the compared fire detection methods. On the FIRESENSE dataset, our method achieves 70.61% mAP. Our method effectively alleviates the main problems of current fire detection algorithms, such as low detection accuracy, a high missed-detection rate for small objects, and the difficulty of balancing speed and accuracy. The fire detection model is trained on a self-built dataset and on public fire datasets. The experimental results show that, with a suitable model size and a relatively fast speed, our method achieves the best detection results on both the self-built fire dataset and the public fire datasets, and it may help promote the industrial application of deep learning-based fire detection methods.
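The cascade sparse query idea referred to above can be sketched in a few lines of pure Python. The sketch follows the general scheme of QueryDet [17]: coarse-level positions whose small-object score exceeds a threshold become queries, and each query maps to a 2 x 2 block of key positions on the next finer level, so the finer level is evaluated only at those keys. This is an illustration under stated assumptions and not the authors' CSQ module. The function name, the score maps, and the threshold value 0.5 are hypothetical.

```python
def cascade_sparse_query(score_maps, threshold=0.5):
    """Run a coarse-to-fine sparse query over per-level score maps.

    score_maps: list of H x W score grids ordered from coarsest to finest,
                where each level has twice the resolution of the previous one.
    Returns (queries_per_level, final_keys): the positions kept as queries on
    every level, and the key positions on the level after the last map.
    """
    active = None  # None means the coarsest level is evaluated densely
    queries_per_level = []
    for scores in score_maps:
        height, width = len(scores), len(scores[0])
        if active is None:
            candidates = [(y, x) for y in range(height) for x in range(width)]
        else:
            candidates = active
        # Only candidate positions are inspected; everything else is skipped.
        queries = [(y, x) for (y, x) in candidates if scores[y][x] > threshold]
        queries_per_level.append(queries)
        # Each query (y, x) maps to four key positions on the finer level.
        active = sorted(
            {(2 * y + dy, 2 * x + dx) for (y, x) in queries for dy in (0, 1) for dx in (0, 1)}
        )
    return queries_per_level, active
```

In this sketch a high score on the finer map is ignored when its position was not selected by the coarser level, which is where the computational saving comes from.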


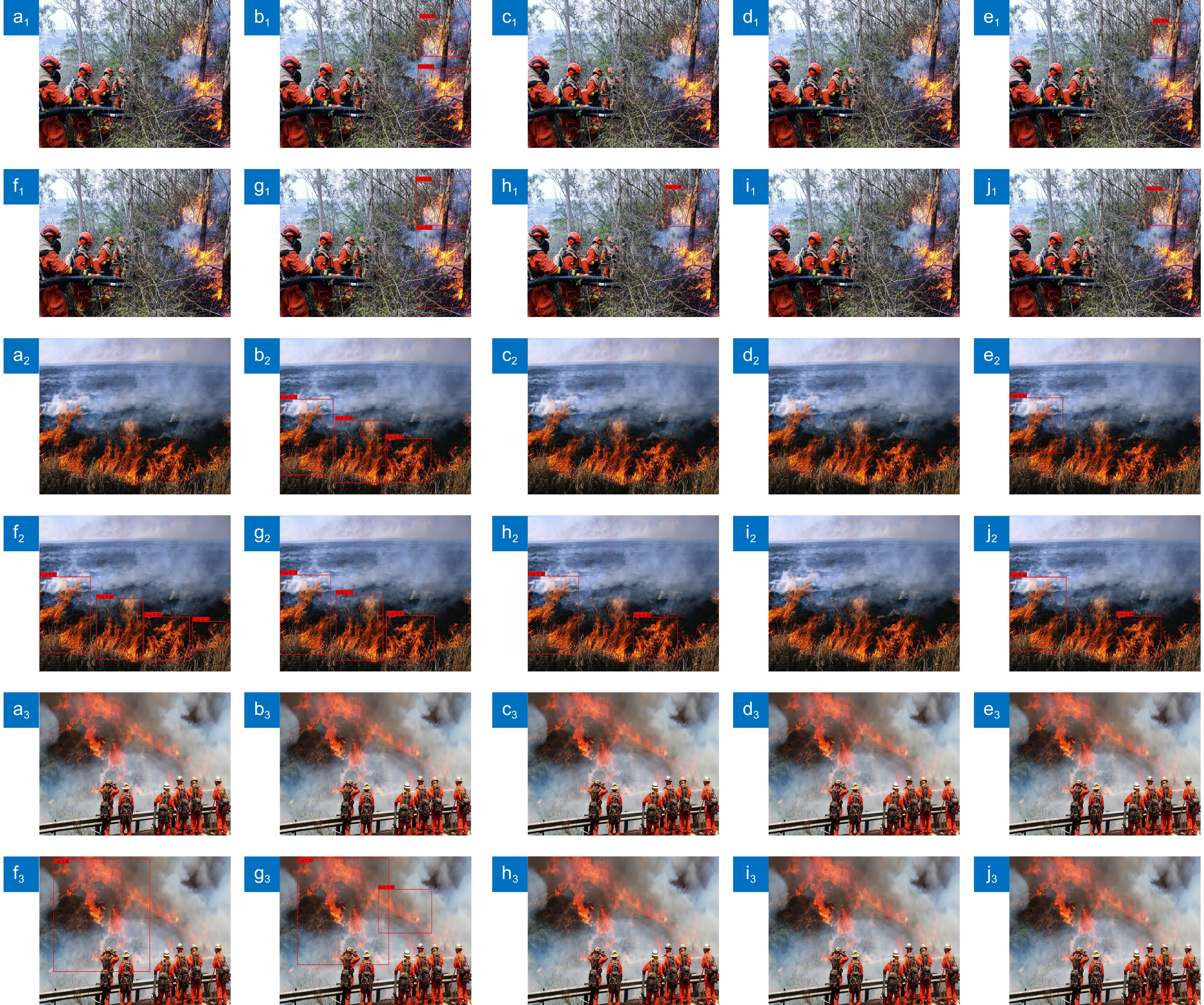

Figure 8. Detection results of the comparison experiments on the SF-dataset. (a) Images; (b) Ours; (c) EFDNet; (d) Y-Edge; (e) M-YOLO; (f) Fire-YOLO; (g) YOLOX-Tiny; (h) PicoDet; (i) PP-YOLOE; (j) YOLOv7

Figure 9. Detection results of the comparison experiments on the D-fire dataset. (a) Images; (b) Ours; (c) EFDNet; (d) Y-Edge; (e) M-YOLO; (f) Fire-YOLO; (g) YOLOX-Tiny; (h) PicoDet; (i) PP-YOLOE; (j) YOLOv7

Figure 10. Detection results of the comparison experiments on the FIRESENSE dataset. (a) Images; (b) Ours; (c) EFDNet; (d) Y-Edge; (e) M-YOLO; (f) Fire-YOLO; (g) YOLOX-Tiny; (h) PicoDet; (i) PP-YOLOE; (j) YOLOv7

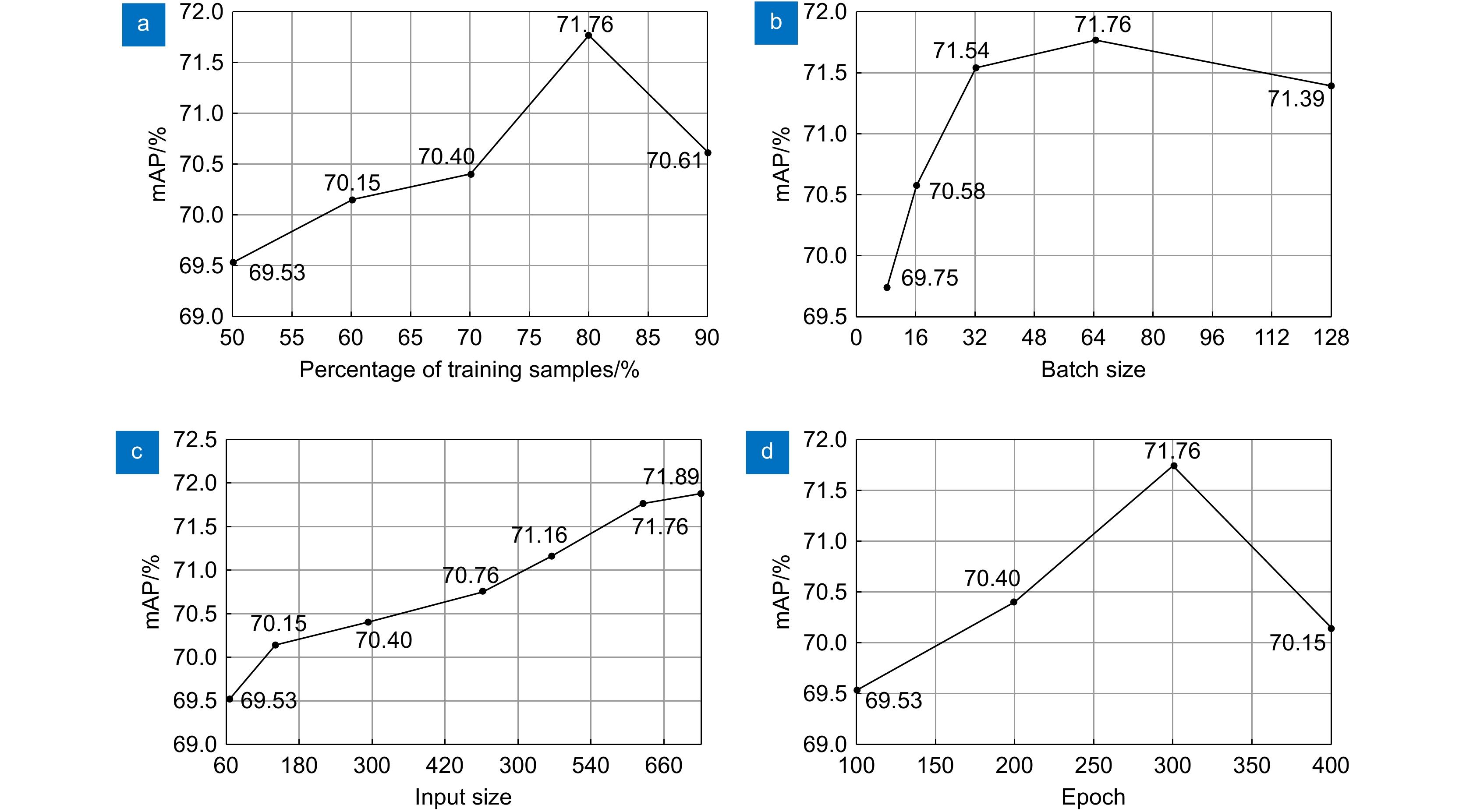

Figure 11. Experimental results on the SF-dataset for the percentage of training samples, batch size, model input size, and number of training epochs. (a) Percentage of training dataset samples; (b) Batch size; (c) Model input size; (d) Epoch

Table 1. Numbers of samples in the training, validation, and test sets of the three datasets

| Dataset | Training: Fire | Training: Smoke | Training: None | Validation: Fire | Validation: Smoke | Validation: None | Test: Fire | Test: Smoke | Test: None | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| SF-dataset | 4859 | 4859 | 4859 | 607 | 607 | 607 | 607 | 607 | 607 | 18219 |
| D-Fire | 4658 | 4693 | 7870 | 582 | 587 | 984 | 582 | 587 | 984 | 21527 |
| FIRESENSE (video) | 9 | 11 | 21 | 1 | 1 | 2 | 1 | 1 | 2 | 49 |

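Table 2. Precision comparison of different methods on different datasets

| Dataset | Method | Recall/% | Precision/% | Accuracy/% | mAP/% |
|---|---|---|---|---|---|
| SF-dataset | Celik et al. [19] | 38.45 | 69.54 | 72.12 | 41.58 |
| SF-dataset | Demirel et al. [2] | 42.28 | 73.65 | 78.54 | 47.15 |
| SF-dataset | Zhang et al. [20] | 40.12 | 72.77 | 73.15 | 45.4 |
| SF-dataset | Fire-YOLO [21] | 49.93 | 87.05 | 90.09 | 69.38 |
| SF-dataset | EFDNet [22] | 44.27 | 80.45 | 82.28 | 59.89 |
| SF-dataset | Pruned+KD [23] | 47.25 | 83.16 | 85.64 | 63.1 |
| SF-dataset | YOLOX-Tiny [24] | 49.95 | 86.89 | 89.24 | 69.08 |
| SF-dataset | PicoDet [25] | 50.1 | 87.1 | 90.13 | 69.42 |
| SF-dataset | YOLOv7 [26] | 54.12 | 88.64 | 94.88 | 71.69 |
| SF-dataset | LFNet | **54.98** | **89.12** | **98.5** | **71.76** |
| D-fire | Celik et al. [19] | 35.90 | 65.78 | 68.42 | 39.65 |
| D-fire | Demirel et al. [2] | 40.68 | 73.12 | 78.27 | 45.85 |
| D-fire | Zhang et al. [20] | 38.67 | 70.45 | 70.86 | 43.94 |
| D-fire | Fire-YOLO [21] | 51.18 | 84.12 | 88.21 | 68.88 |
| D-fire | EFDNet [22] | 43.68 | 76.57 | 77.94 | 58.77 |
| D-fire | Pruned+KD [23] | 46.02 | 79.85 | 82.4 | 62.71 |
| D-fire | YOLOX-Tiny [24] | 51.06 | 83.94 | 86.14 | 68.14 |
| D-fire | PicoDet [25] | 51.27 | 84.32 | 88.26 | 68.95 |
| D-fire | YOLOv7 [26] | 53.12 | 86.44 | 93.85 | 70.65 |
| D-fire | LFNet | **53.35** | **87.68** | **97.92** | **71.15** |
| FIRESENSE | Celik et al. [19] | 33.7 | 56.07 | 60.21 | 36.48 |
| FIRESENSE | Demirel et al. [2] | 38.55 | 64.28 | 69.44 | 42.66 |
| FIRESENSE | Zhang et al. [20] | 36.96 | 61.58 | 62.38 | 40.36 |
| FIRESENSE | Fire-YOLO [21] | 52.47 | 79.88 | 85.19 | 68.12 |
| FIRESENSE | EFDNet [22] | 42.74 | 68.25 | 70.22 | 56.97 |
| FIRESENSE | Pruned+KD [23] | 46.80 | 72.94 | 75.1 | 61.35 |
| FIRESENSE | YOLOX-Tiny [24] | 53.94 | 80.44 | 84.12 | 68.02 |
| FIRESENSE | PicoDet [25] | 52.96 | 80.92 | 85.91 | 68.89 |
| FIRESENSE | YOLOv7 [26] | **54.53** | 89.76 | 96.28 | 70.15 |
| FIRESENSE | LFNet | 53.19 | **92.44** | **98.42** | **70.61** |

Note: bold indicates the best result.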
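Table 2 reports Recall, Precision, and Accuracy. The section does not spell out how these are computed, so the sketch below uses the standard definitions from a confusion matrix as an assumption: precision = TP / (TP + FP), recall = TP / (TP + FN), accuracy = (TP + TN) / (TP + FP + FN + TN). The function name and the example counts are hypothetical and are not taken from the paper's experiments. mAP is not covered here.

```python
def detection_metrics(tp, fp, fn, tn):
    """Return precision, recall and accuracy (as fractions) from confusion-matrix counts.

    ASSUMPTION: standard definitions; the paper's exact evaluation protocol
    is not given in this section.
    """
    predicted_positive = tp + fp
    actual_positive = tp + fn
    total = tp + fp + fn + tn
    precision = tp / predicted_positive if predicted_positive else 0.0
    recall = tp / actual_positive if actual_positive else 0.0
    accuracy = (tp + tn) / total if total else 0.0
    return {"precision": precision, "recall": recall, "accuracy": accuracy}


if __name__ == "__main__":
    # Hypothetical counts for illustration only.
    metrics = detection_metrics(tp=80, fp=10, fn=20, tn=90)
    for name, value in metrics.items():
        print(f"{name}: {100 * value:.2f}%")
```

Precision penalises false alarms while recall penalises missed fires, so the two need to be read together when methods are compared.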

Table 3. Speed comparison of different methods on SF-dataset

| Method | FLOPs/G | Parameters/M | Speed/(f/s) | Inference time/ms | mAP/% |
|---|---|---|---|---|---|
| M-YOLO [27] | 7.54 | 23.8 | 18 | 50.6 | 66.6 |
| Fire-YOLO [21] | 45.12 | 62 | 28 | 32.17 | 69.38 |
| EFDNet [22] | 1.99 | 3.6 | 63 | 7.21 | 59.89 |
| Y-Edge [25] | 30.47 | 53.8 | 36 | 27.97 | 65.95 |
| Pruned+KD [23] | 16.8 | 26.3 | 45 | 17.25 | 63.1 |
| PPYOLO-Tiny [28] | 4.96 | 17.84 | 42 | 23.8 | 68.36 |
| YOLOX-Tiny [24] | 5.42 | 19.19 | 40 | 25.21 | 69.08 |
| PicoDet [25] | 0.73 | 4 | 105 | 6.65 | 69.42 |
| PP-YOLOE [29] | 29.42 | 52.20 | 98 | 25.64 | 70.85 |
| YOLOv7 [26] | 18.42 | 36.9 | 88 | 19.85 | 71.69 |
| LFNet | 3.85 | 12.6 | 98 | 10.24 | 71.76 |

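Table 4. Results of the ablation experiments

| Model | ECDNet | FPN+P | CSQH | QFocal-CIOU | Slimming | mAP/% | Recall/% | Parameters/M | Speed/(f/s) |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv5s | | | | | | 67.89 | 49.38 | 27.6 | 84 |
| | √ | | | | | 68.89 | 50.96 | 27.6 | 84 |
| | √ | √ | | | | 70.15 | 51.53 | 27.7 | 80 |
| | √ | √ | √ | | | 70.61 | 52.12 | 27.7 | 91 |
| | √ | √ | √ | √ | | 71.15 | **53.35** | 27.7 | 91 |
| | √ | √ | √ | √ | √ | **71.76** | 52.98 | **12.6** | **98** |

Note: bold indicates the best result.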
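In Table 4 the parameter count falls from 27.7 M to 12.6 M once Slimming is enabled. The channel-selection step of network slimming [18] can be sketched as follows: the absolute batch-normalization scale factors of all layers are pooled, a global threshold is set at the chosen pruning ratio, and channels at or below the threshold are dropped. This is a minimal pure-Python illustration and not the authors' pruning code. The function name, the layer names, the scale values, and the guard that keeps at least one channel per layer are assumptions.

```python
def slimming_masks(bn_scales, prune_ratio):
    """Select channels to keep from batch-norm scale factors.

    bn_scales: dict mapping a layer name to its list of BN scale factors (gamma).
    prune_ratio: fraction of all channels to remove, in [0, 1).
    Returns (masks, threshold), where masks[name][i] is True if channel i is kept.
    """
    all_scales = sorted(abs(g) for layer in bn_scales.values() for g in layer)
    cut = int(len(all_scales) * prune_ratio)
    # Global threshold: the largest magnitude among the `cut` smallest scales.
    threshold = all_scales[cut - 1] if cut > 0 else float("-inf")

    masks = {}
    for name, layer in bn_scales.items():
        mask = [abs(g) > threshold for g in layer]
        if not any(mask):
            # ASSUMPTION: keep the strongest channel so no layer vanishes entirely.
            strongest = max(range(len(layer)), key=lambda i: abs(layer[i]))
            mask[strongest] = True
        masks[name] = mask
    return masks, threshold


if __name__ == "__main__":
    # Hypothetical scale factors for two layers.
    scales = {"conv1": [0.9, 0.01, 0.5, 0.02], "conv2": [0.03, 0.7, 0.04, 0.8]}
    masks, threshold = slimming_masks(scales, prune_ratio=0.5)
    kept = sum(sum(m) for m in masks.values())
    print(f"threshold={threshold}, kept {kept} of 8 channels")
```

In the full method the scale factors are first pushed toward zero by an L1 penalty during training, and the pruned network is fine-tuned afterwards. Neither step is shown in this sketch.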

References

[1] Xiao X, Kong F Z, Liu J H. Monitoring video fire detection algorithm based on dynamic characteristics and static characteristics[J]. Comput Sci, 2019, 46(S1): 284−286,299.

[2] Çelik T, Demirel H. Fire detection in video sequences using a generic color model[J]. Fire Saf J, 2009, 44(2): 147−158. doi: 10.1016/j.firesaf.2008.05.005

[3] Qiu T, Yan Y, Lu G. An autoadaptive edge-detection algorithm for flame and fire image processing[J]. IEEE Trans Instrum Meas, 2012, 61(5): 1486−1493. doi: 10.1109/TIM.2011.2175833

[4] Shao J, Wang G X, Guo W. Fire detection based on video dynamic texture[J]. J Image Graph, 2013, 18(6): 647−653. doi: 10.11834/jig.20130605

[5] Surit S, Chatwiriya W. Forest fire smoke detection in video based on digital image processing approach with static and dynamic characteristic analysis[C]//Proceedings of the 2011 First ACIS/JNU International Conference on Computers, Networks, Systems and Industrial Engineering, 2011: 35–39. https://doi.org/10.1109/CNSI.2011.47.

[6] Zhang Q J, Xu J L, Xu L, et al. Deep convolutional neural networks for forest fire detection[C]//Proceedings of 2016 International Forum on Management, Education and Information Technology Application, 2016: 568–575. https://doi.org/10.2991/ifmeita-16.2016.105.

[7] Dunnings A J, Breckon T P. Experimentally defined convolutional neural network architecture variants for non-temporal real-time fire detection[C]//Proceedings of 2018 25th IEEE International Conference on Image Processing, 2018: 1558–1562. https://doi.org/10.1109/ICIP.2018.8451657.

[8] Sharma J, Granmo O C, Goodwin M, et al. Deep convolutional neural networks for fire detection in images[C]//Proceedings of the 18th International Conference on Engineering Applications of Neural Networks, 2017: 183–193. https://doi.org/10.1007/978-3-319-65172-9_16.

[9] Akhloufi M A, Tokime R B, Elassady H. Wildland fires detection and segmentation using deep learning[J]. Proc SPIE, 2018, 10649: 106490B. doi: 10.1117/12.2304936

[10] Ren J F, Xiong W H, Wu Z H, et al. Fire detection and identification based on improved YOLOv3[J]. Comput Syst Appl, 2019, 28(12): 171−176. doi: 10.15888/j.cnki.csa.007184

[11] Miao W Z, Lu Z N, Wang J L, et al. Fire detection research based on vision[J]. For Eng, 2022, 38(1): 86−92,100. doi: 10.16270/j.cnki.slgc.2022.01.007

[12] Liu K, Wei Y X, Xu J G, et al. Design of forest fire identification algorithm based on computer vision[J]. For Eng, 2018, 34(4): 89−95. doi: 10.3969/j.issn.1006-8023.2018.04.015

[13] Pi J, Liu Y H, Li J H. Research on lightweight forest fire detection algorithm based on YOLOv5s[J]. J Graph, 2023, 44(1): 26−32. doi: 10.11996/JG.j.2095-302X.2023010026

[14] Wang Q L, Wu B G, Zhu P F, et al. ECA-Net: efficient channel attention for deep convolutional neural networks[C]//Proceedings of 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020: 11534–11542. https://doi.org/10.1109/CVPR42600.2020.01155.

[15] Lin T Y, Dollár P, Girshick R, et al. Feature pyramid networks for object detection[C]//Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition, 2017: 2117–2125. https://doi.org/10.1109/CVPR.2017.106.

[16] Wang W H, Xie E Z, Song X G, et al. Efficient and accurate arbitrary-shaped text detection with pixel aggregation network[C]//Proceedings of 2019 IEEE/CVF International Conference on Computer Vision, 2019: 8440–8449. https://doi.org/10.1109/ICCV.2019.00853.

[17] Yang C H Y, Huang Z H, Wang N Y. QueryDet: cascaded sparse query for accelerating high-resolution small object detection[C]//Proceedings of 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022: 13668–13677. https://doi.org/10.1109/CVPR52688.2022.01330.

[18] Liu Z, Li J G, Shen Z Q, et al. Learning efficient convolutional networks through network slimming[C]//Proceedings of 2017 IEEE International Conference on Computer Vision, 2017: 2736–2744. https://doi.org/10.1109/ICCV.2017.298.

[19] Celik T, Ozkaramanli H, Demirel H. Fire pixel classification using fuzzy logic and statistical color model[C]//Proceedings of 2007 IEEE International Conference on Acoustics, Speech and Signal Processing, 2007: I-1205–I-1208. https://doi.org/10.1109/ICASSP.2007.366130.

[20] Zhang D Y, Han S Z, Zhao J H, et al. Image based forest fire detection using dynamic characteristics with artificial neural networks[C]//Proceedings of 2009 International Joint Conference on Artificial Intelligence, 2009: 290–293. https://doi.org/10.1109/JCAI.2009.79.

[21] Zhao L, Zhi L Q, Zhao C, et al. Fire-YOLO: a small target object detection method for fire inspection[J]. Sustainability, 2022, 14(9): 4930. doi: 10.3390/su14094930

[22] Li S B, Yang Q D, Liu P. An efficient fire detection method based on multiscale feature extraction, implicit deep supervision and channel attention mechanism[J]. IEEE Trans Image Proc, 2020, 29: 8467−8475. doi: 10.1109/TIP.2020.3016431

[23] Wang S Y, Zhao J, Ta N, et al. A real-time deep learning forest fire monitoring algorithm based on an improved pruned + KD model[J]. J Real-Time Image Proc, 2021, 18(6): 2319−2329. doi: 10.1007/s11554-021-01124-9

[24] Ge Z, Liu S T, Wang F, et al. YOLOX: exceeding YOLO series in 2021[Z]. arXiv: 2107.08430, 2021. https://arxiv.org/abs/2107.08430.

[25] Yu G H, Chang Q Y, Lv W Y, et al. PP-PicoDet: a better real-time object detector on mobile devices[Z]. arXiv: 2111.00902, 2021. https://arxiv.org/abs/2111.00902.

[26] Wang C Y, Bochkovskiy A, Liao H Y M. YOLOv7: trainable bag-of-freebies sets new state-of-the-art for real-time object detectors[C]//Proceedings of 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023: 7464–7475. https://doi.org/10.1109/CVPR52729.2023.00721.

[27] Wang S Y, Chen T, Lv X Y, et al. Forest fire detection based on lightweight Yolo[C]//Proceedings of 2021 33rd Chinese Control and Decision Conference, 2021: 1560–1565. https://doi.org/10.1109/CCDC52312.2021.9601362.

[28] Long X, Deng K P, Wang G Z, et al. PP-YOLO: an effective and efficient implementation of object detector[Z]. arXiv: 2007.12099, 2020. https://arxiv.org/abs/2007.12099.

[29] Xu S L, Wang X X, Lv W Y, et al. PP-YOLOE: an evolved version of YOLO[Z]. arXiv: 2203.16250, 2022. https://arxiv.org/abs/2203.16250.
