
摘要:

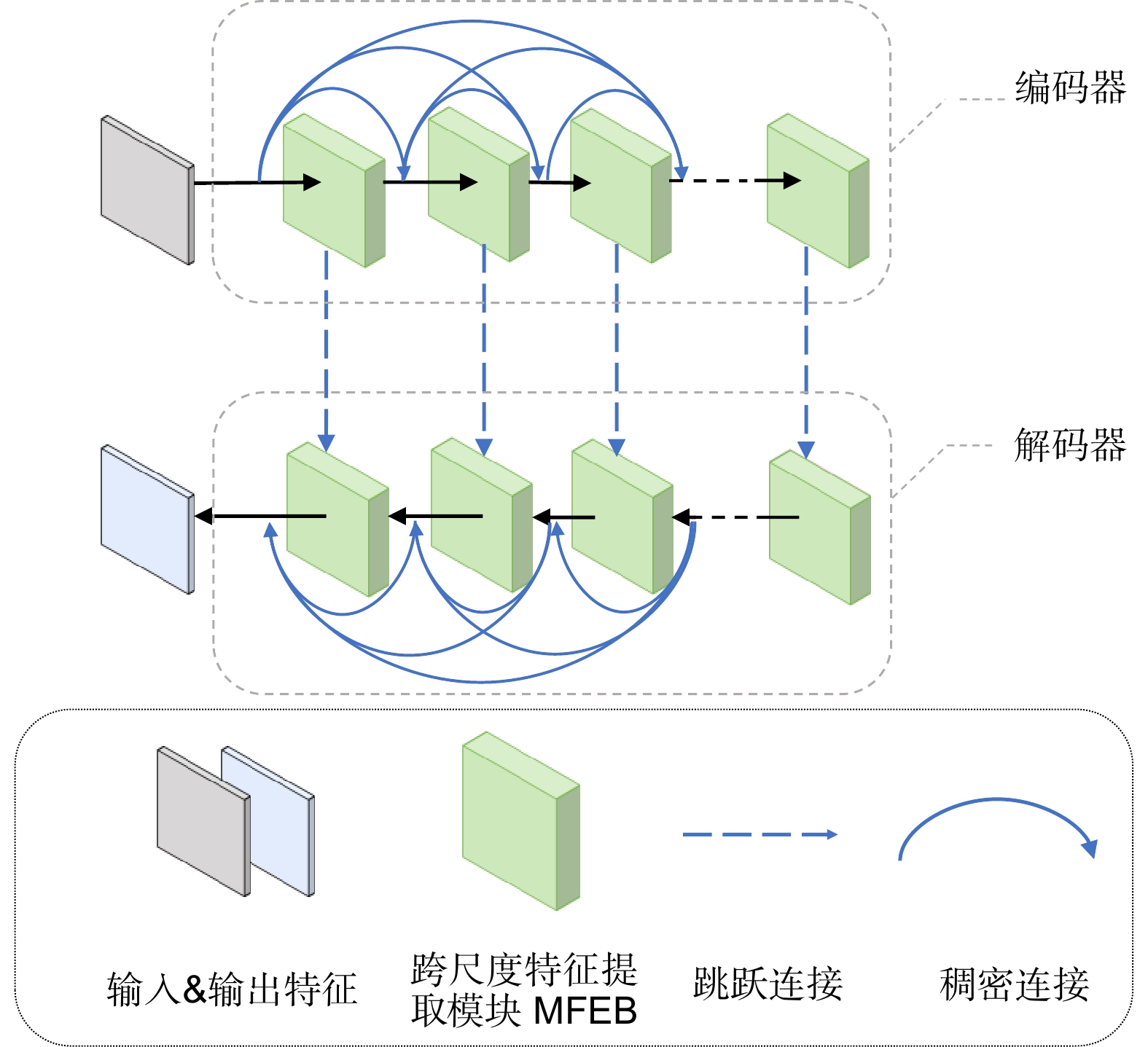

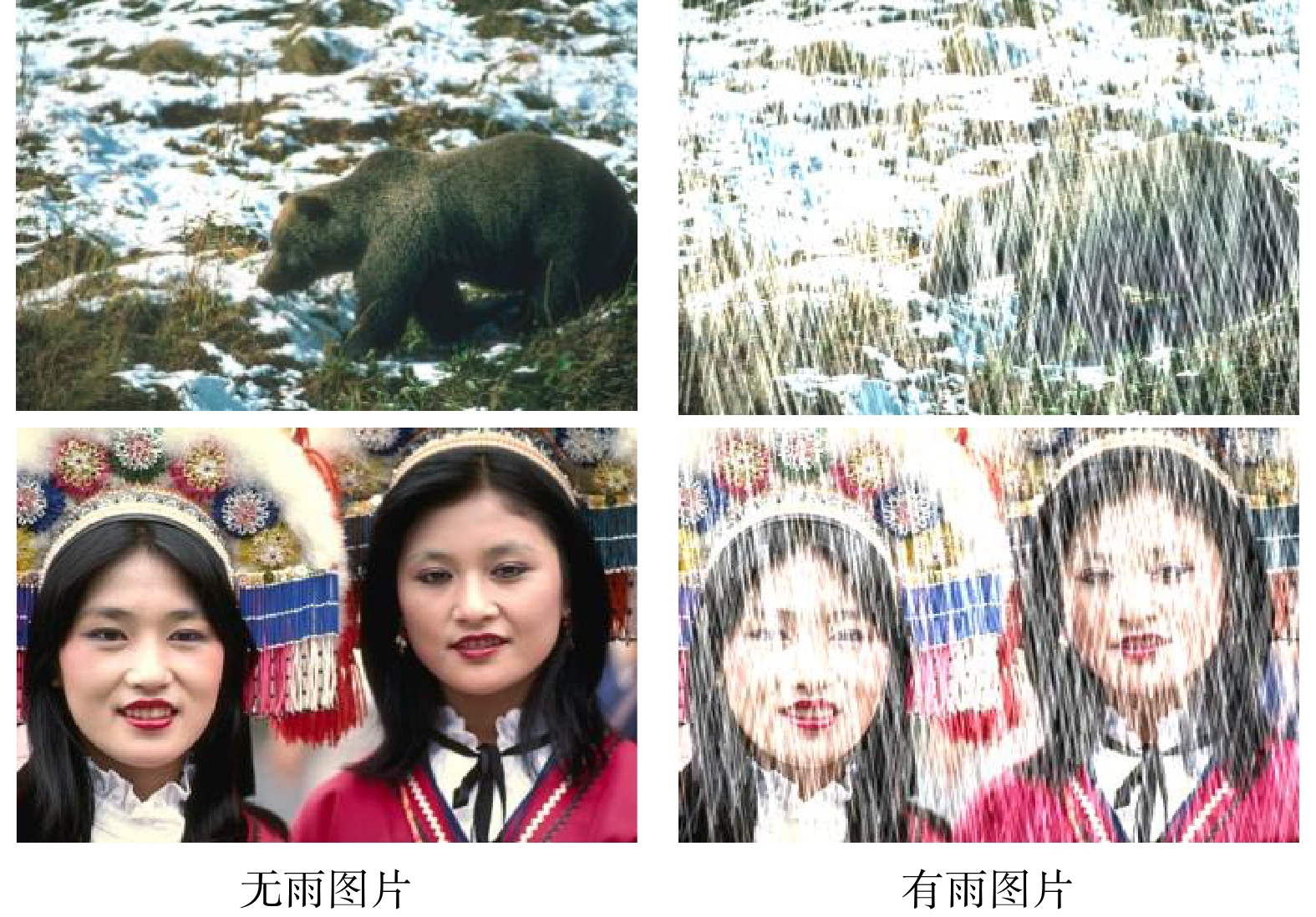

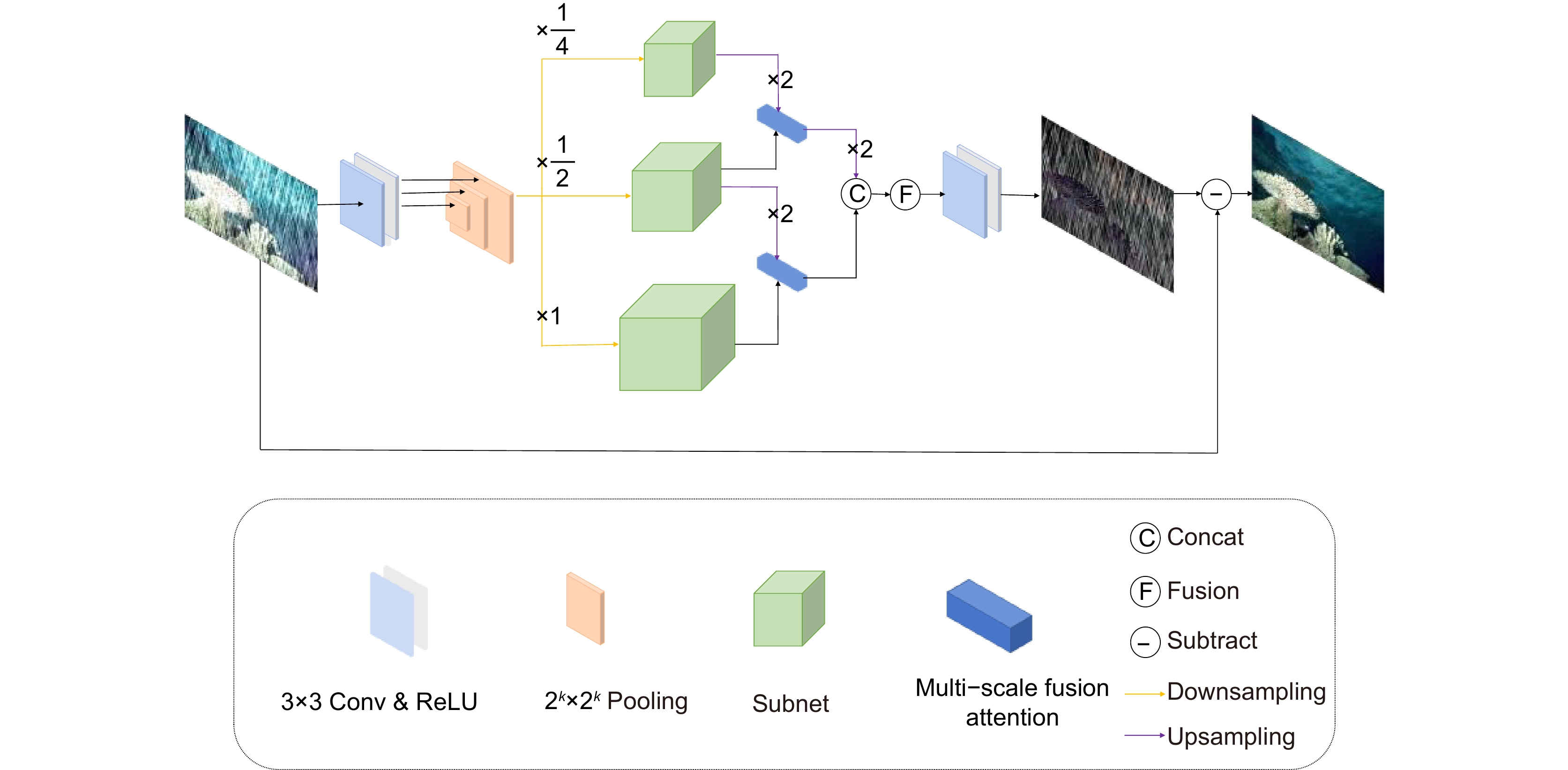

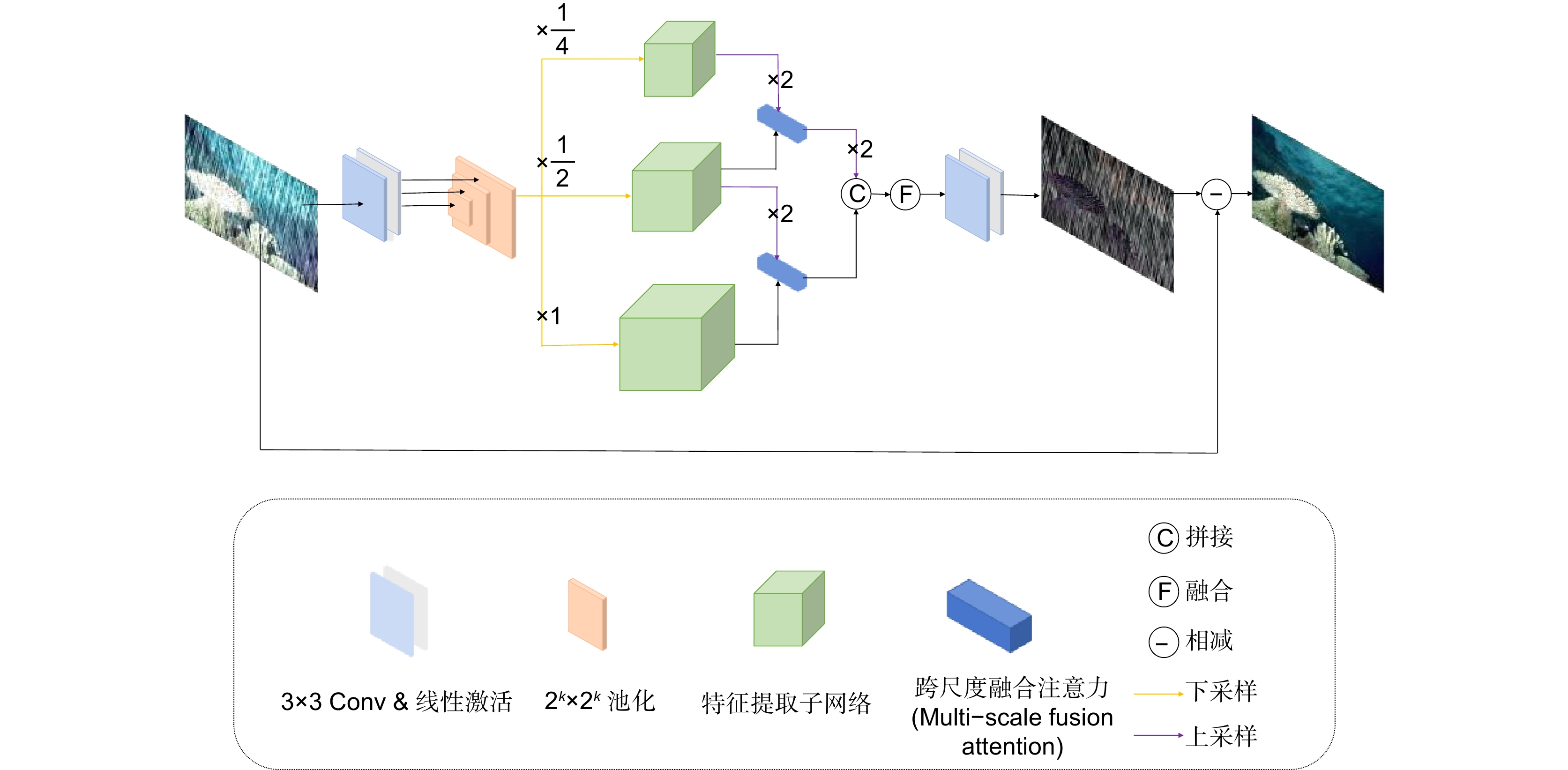

单幅图像去雨算法旨在将有雨图像中的雨纹去除生成高质量无雨图。目前基于深度学习的多尺度去雨算法较难捕获不同层次的细节,忽视尺度之间的信息互补,易导致生成图像失真,雨纹去除不彻底等问题。为此,本文提出了基于跨尺度注意力融合的图像去雨网络,在去除密集雨纹的同时尽量保留原本图片的细节,改善去雨图像的视觉质量。去雨网络由三个子网构成,每个子网用于获取不同尺度上的雨纹信息。各子网由跨尺度特征提取模块通过稠密连接的方式构成,该模块以跨尺度融合注意力为核心,构造不同尺度之间的关联实现信息互补,使图像兼顾细节与整体信息。实验结果表明,本文模型在合成数据集Rain200H和Rain200L上取得显著的去雨效果,去雨处理后的图片峰值信噪比达到了29.91/39.23 dB,结构相似度为0.92/0.99,优于一般的主流方法,并取得了良好的视觉效果,在保证去雨效果自然的同时保持了图像的细节。

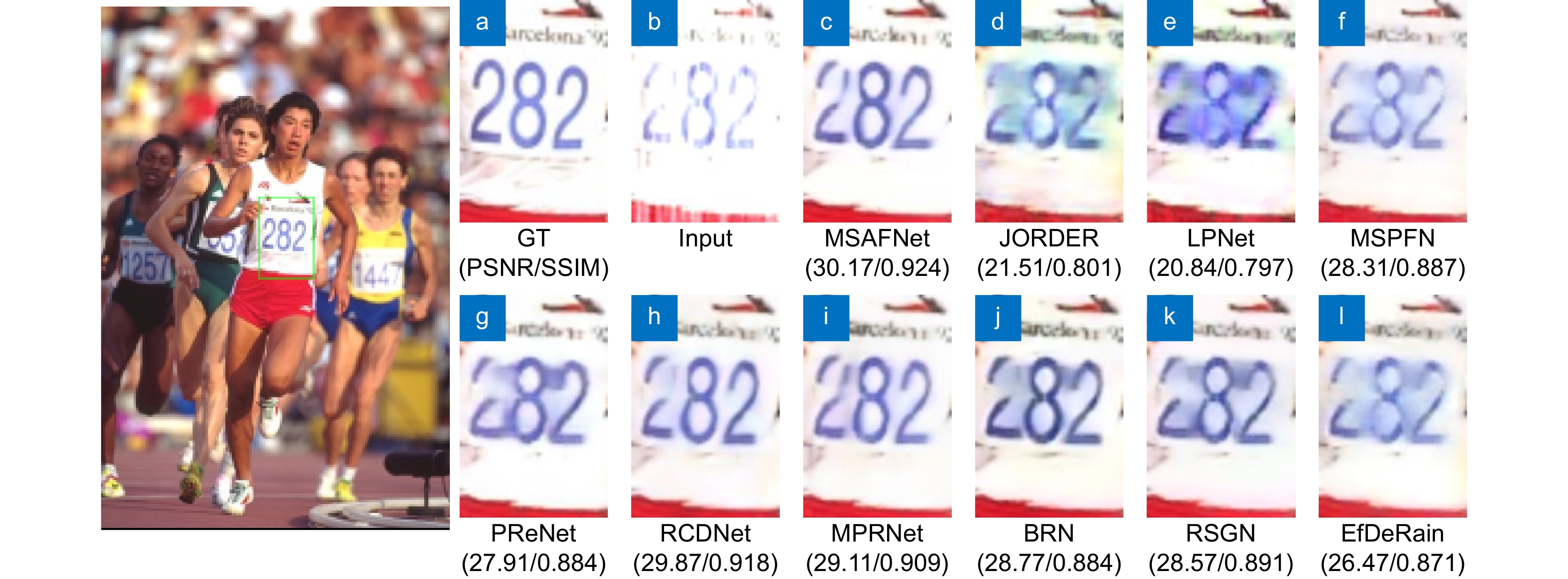

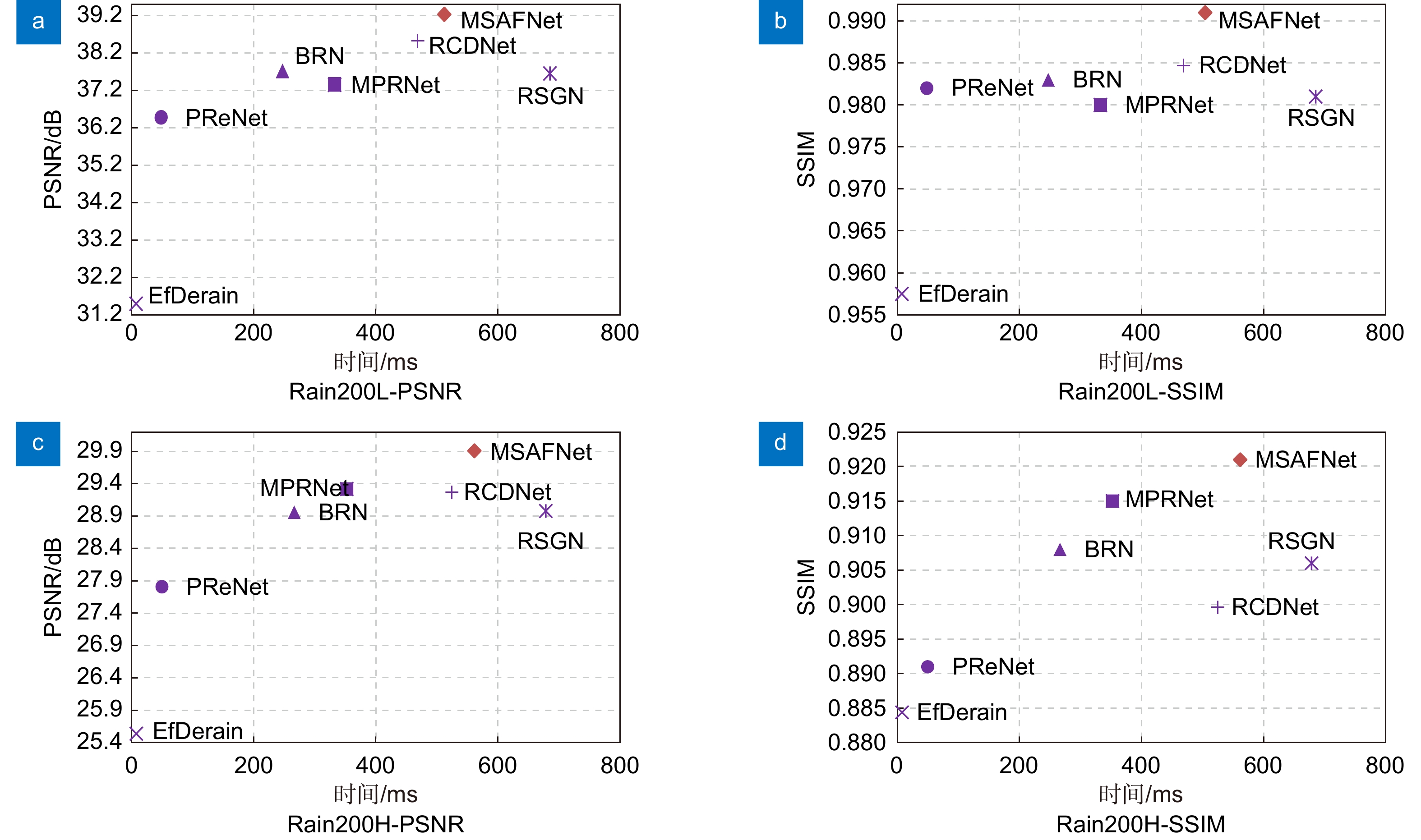

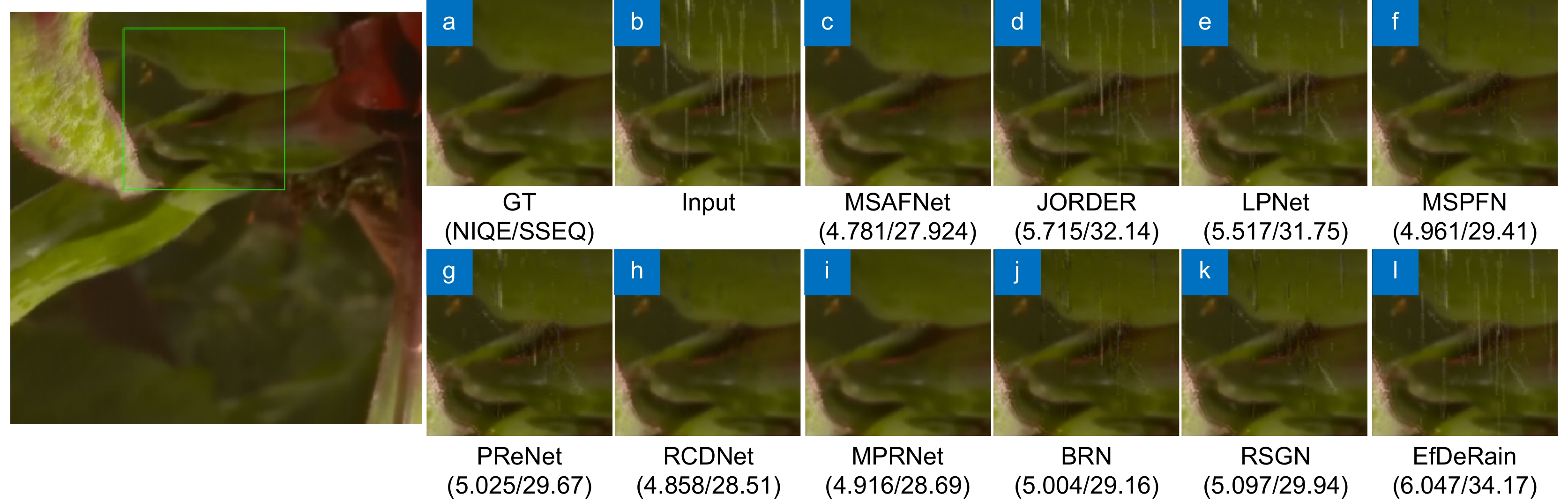

Abstract: Single-image rain removal is a crucial task in computer vision that aims to eliminate rain streaks from rainy images and generate high-quality rain-free images. Current deep-learning-based multi-scale rain removal algorithms struggle to capture details at different scales and neglect the complementarity of information across scales, which can lead to image distortion and incomplete rain streak removal. To address these issues, this paper proposes an image rain removal network based on cross-scale attention fusion, which removes dense rain streaks while preserving original image details to improve the visual quality of the derained image. The network consists of three sub-networks, each dedicated to obtaining rain-streak information at a different scale. Each sub-network is composed of densely connected cross-scale feature fusion modules. The module takes cross-scale attention fusion as its core, establishing inter-scale relationships to achieve information complementarity and enabling the network to account for both details and global information. Experimental results demonstrate the effectiveness of the proposed model on the synthetic datasets Rain200H and Rain200L: the peak signal-to-noise ratio (PSNR) of the derained images reaches 29.91/39.23 dB and the structural similarity index (SSIM) is 0.92/0.99, outperforming mainstream methods and achieving favorable visual results while preserving image details and keeping the rain removal natural.


Key words: machine learning / image deraining / multi-scale / attention mechanism / feature fusion


Overview: Single-image rain removal is an important task in computer vision that aims to remove rain streaks from rainy images and generate high-quality rain-free images, with applications in video surveillance analysis and autonomous driving. However, existing deep-learning-based rain removal algorithms have difficulty obtaining global information from rainy images, which leads to loss of image detail and incomplete rain streak removal. To address these problems, many rain removal algorithms construct multi-scale networks to enrich the detail information available for deraining. Although these multi-scale algorithms have achieved good results, directly fusing information from different scales without considering inter-scale relationships may lose background details and distort the image during upsampling. It is therefore important to establish relationships across scales so that their features complement each other and the algorithm can balance details against global information. In response, this paper proposes an image rain removal network based on cross-scale attention fusion, which aims to remove dense rain streaks while preserving the details of the original image as much as possible, improving the visual quality of the derained image. The network is built on a cross-scale feature fusion module that extracts feature information at three scales. To counter the image degradation caused by neglecting scale correlation, the convolutions that the module uses to extract information at different resolutions are connected in a cross-scale manner, which strengthens its ability to capture information at each resolution. An attention module added to the cross-scale connections enhances feature propagation between neighboring scales, achieving information complementarity across resolution levels.

The rain removal network consists of three sub-networks, each composed of densely connected cross-scale feature fusion modules and each used to obtain rain-streak information at a different scale. Experimental results demonstrate the effectiveness of the proposed model on the synthetic datasets Rain200H and Rain200L. The peak signal-to-noise ratio (PSNR) of the derained images reaches 29.91/39.23 dB, and the structural similarity index (SSIM) is 0.92/0.99. These results surpass mainstream methods, with better visual quality in terms of preserved image detail. In terms of time efficiency, the proposed model also shows advantages over some baseline models, keeping the deraining natural while maintaining processing speed.
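The results above are reported as PSNR in dB. As a reminder of what that number measures, here is a minimal sketch of the standard PSNR definition, $10\log_{10}(\text{peak}^2/\text{MSE})$. The function name `psnr`, the default peak of 255 (8-bit images), and the toy arrays are assumptions for illustration. The source does not state the exact evaluation protocol (for example, which color channel is scored), so this is not necessarily how the reported figures were computed.

```python
import numpy as np

def psnr(reference, restored, peak=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images."""
    reference = np.asarray(reference, dtype=np.float64)
    restored = np.asarray(restored, dtype=np.float64)
    if reference.shape != restored.shape:
        raise ValueError("images must have the same shape")
    # Mean squared error over all pixels (and channels, if present).
    mse = np.mean((reference - restored) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)

# Toy example: a flat gray image and a copy offset by 5 gray levels.
clean = np.full((4, 4), 100.0)
derained = clean + 5.0
score = psnr(clean, derained)
print(f"PSNR = {score:.2f} dB")
```

Because the scale is logarithmic, a gain of a few tenths of a dB corresponds to a modest reduction in mean squared error.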


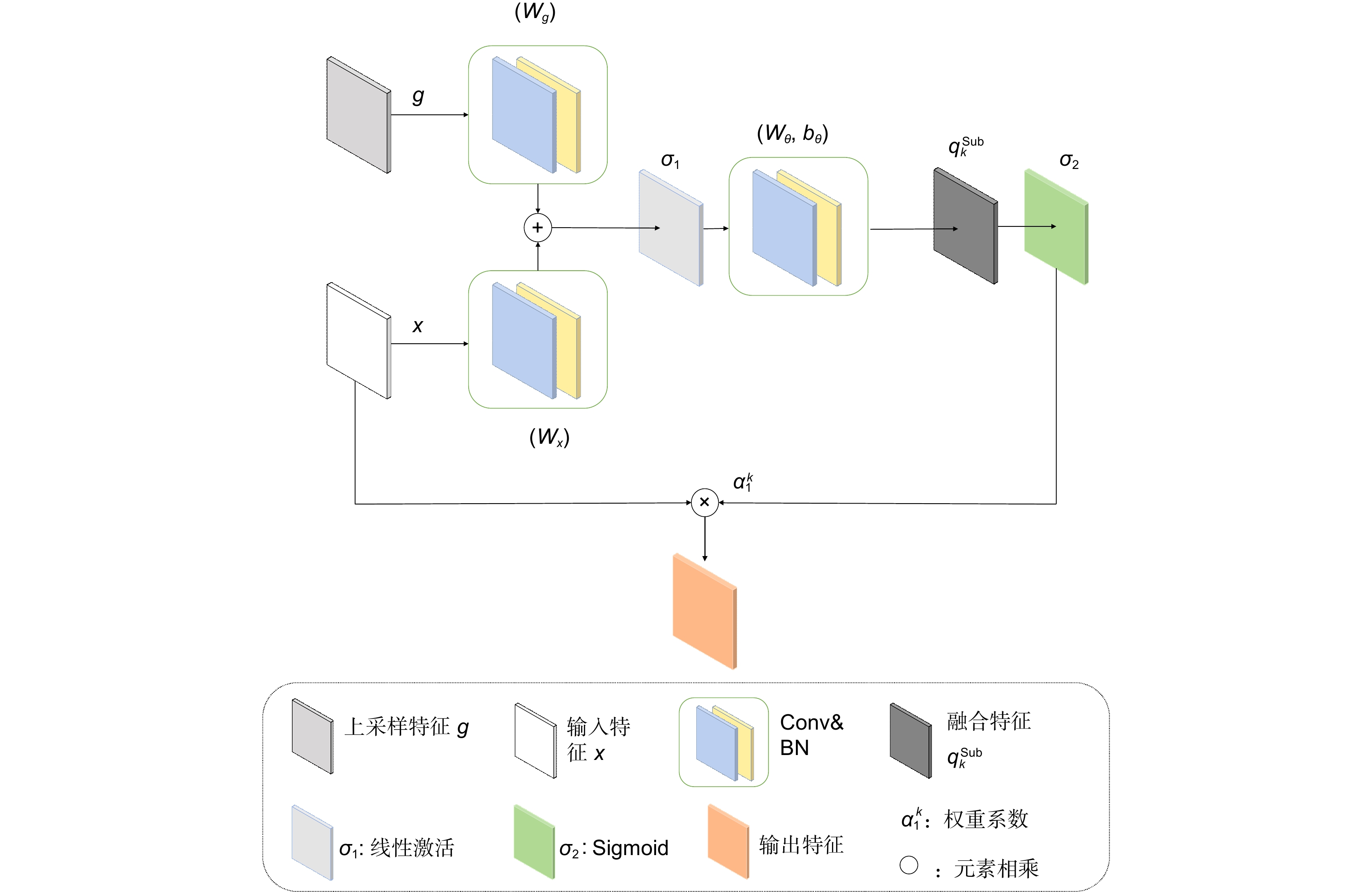

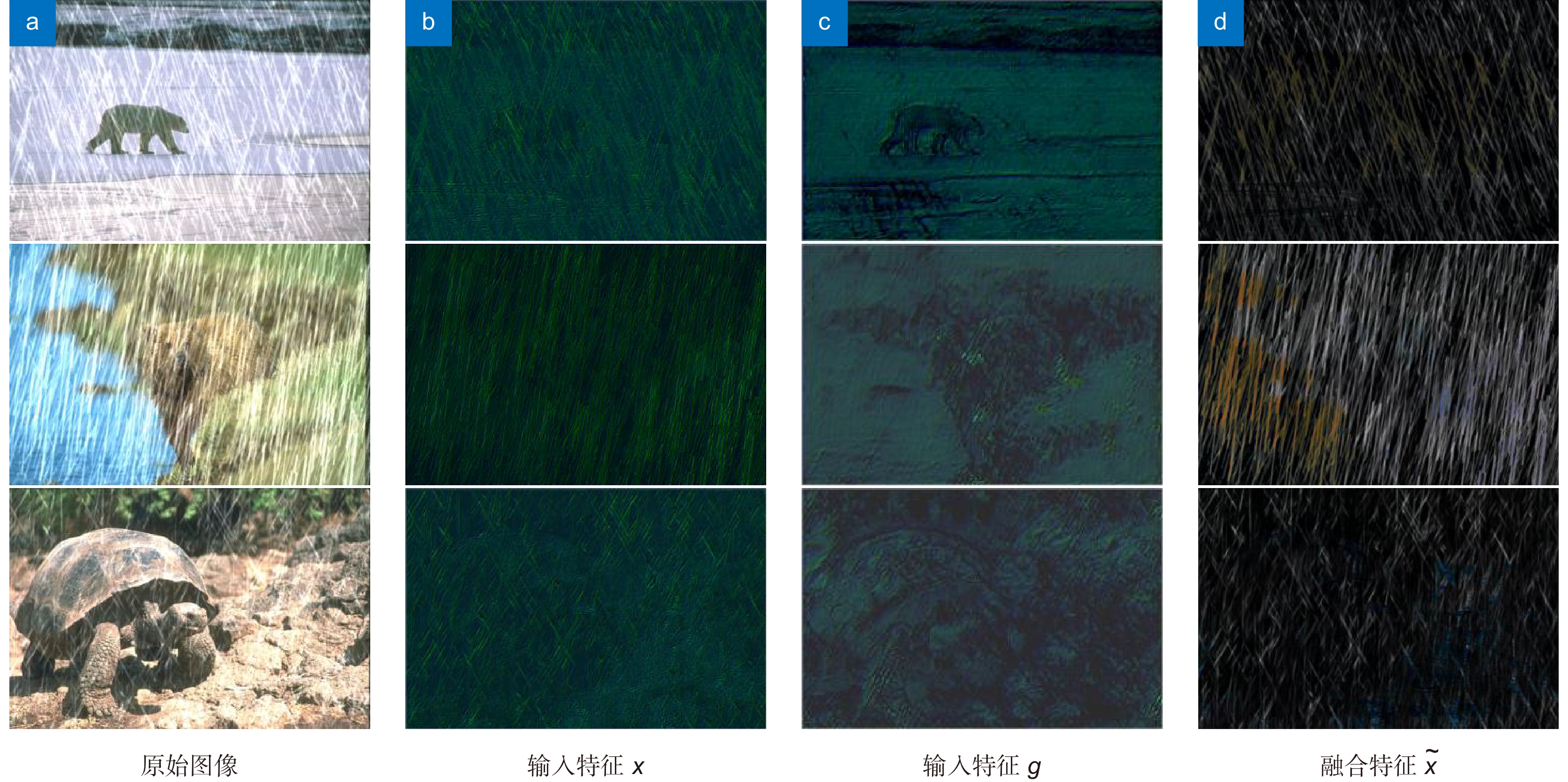

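Algorithm 1: Pseudocode of cross-scale fusion attention

Input: upsampled feature $g$; current-scale feature $x$; convolution and normalization layers $W_g$, $W_x$, $W_\theta$; activation functions ReLU and Sigmoid

Output: cross-scale fused feature $\text{Fusion}$

1. $\text{Feature}_g = W_g(g)$
2. $\text{Feature}_x = W_x(x)$
3. $\psi = \text{ReLU}(\text{Feature}_g + \text{Feature}_x)$
4. $\psi = W_\theta(\psi)$
5. $\alpha = \text{Sigmoid}(\psi)$
6. $\text{Fusion} = \alpha * x$
7. return $\text{Fusion}$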
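The gating in Algorithm 1 can be sketched in NumPy as follows. This is an illustrative sketch under several assumptions, not the authors' implementation: each of $W_g$, $W_x$, $W_\theta$ is modeled as a 1×1 convolution (a per-pixel channel mix) with the normalization step omitted, $W_\theta$ is assumed to produce a single-channel attention map that is broadcast over the channels of $x$, and $g$ is assumed to be already upsampled to the spatial size of $x$. The helper names, tensor shapes, and random weights are hypothetical.

```python
import numpy as np

def conv1x1(weight, feat):
    """1x1 convolution: mix channels independently at every pixel.

    weight: (C_out, C_in), feat: (C_in, H, W) -> (C_out, H, W)
    """
    return np.einsum("oc,chw->ohw", weight, feat)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def cross_scale_fusion_attention(g, x, w_g, w_x, w_theta):
    """Gate the current-scale feature x using the upsampled feature g."""
    feature_g = conv1x1(w_g, g)                    # Feature_g = W_g(g)
    feature_x = conv1x1(w_x, x)                    # Feature_x = W_x(x)
    psi = np.maximum(feature_g + feature_x, 0.0)   # ReLU of the sum
    psi = conv1x1(w_theta, psi)                    # psi = W_theta(psi)
    alpha = sigmoid(psi)                           # attention weights in (0, 1)
    return alpha * x                               # Fusion = alpha * x (broadcast)

# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(0)
channels, inter, height, width = 8, 4, 16, 16
g = rng.standard_normal((channels, height, width))
x = rng.standard_normal((channels, height, width))
w_g = rng.standard_normal((inter, channels)) * 0.1
w_x = rng.standard_normal((inter, channels)) * 0.1
w_theta = rng.standard_normal((1, inter)) * 0.1

fusion = cross_scale_fusion_attention(g, x, w_g, w_x, w_theta)
print(fusion.shape)
```

Since the sigmoid output lies strictly between 0 and 1, the gate can only attenuate entries of $x$; the coarser-scale feature $g$ decides where the current-scale feature is passed through and where it is suppressed.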

Table 1. Comparison with other methods on synthetic datasets

| Method | Rain200H PSNR/dB | Rain200H SSIM | Rain200L PSNR/dB | Rain200L SSIM | Rain800 PSNR/dB | Rain800 SSIM | Params/M |
|---|---|---|---|---|---|---|---|
| JORDER[11] | 23.54 | 0.805 | 36.11 | 0.974 | 23.47 | 0.869 | 3.89 |
| LPNet[12] | 21.96 | 0.785 | 32.12 | 0.955 | 22.81 | 0.820 | 0.008 |
| MSPFN[13] | 28.64 | 0.899 | 37.67 | 0.975 | 25.49 | 0.861 | 13.35 |
| PReNet[7] | 27.81 | 0.891 | 36.47 | 0.982 | 25.18 | 0.853 | 0.16 |
| RCDNet[32] | 29.27 | 0.899 | 38.52 | 0.985 | 26.38 | 0.872 | 2.99 |
| MPRNet[33] | 29.32 | 0.915 | 37.35 | 0.980 | 26.10 | 0.895 | 20.6 |
| BRN[30] | 28.96 | 0.908 | 37.71 | 0.983 | 25.88 | 0.857 | 0.39 |
| RSGN[31] | 28.31 | 0.905 | 37.65 | 0.981 | 26.37 | 0.893 | 4.16 |
| EfDeRain[26] | 25.54 | 0.884 | 31.51 | 0.957 | 24.16 | 0.839 | 27.61 |
| MSAFNet | 29.91 | 0.921 | 39.23 | 0.991 | 26.63 | 0.896 | 12.8 |
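To make the size of the improvement in Table 1 concrete, the short sketch below computes the PSNR margin of MSAFNet over the strongest competing method on each dataset. The PSNR values are transcribed from Table 1; the variable and function names are illustrative only.

```python
# PSNR (dB) per method, transcribed from Table 1:
# (Rain200H, Rain200L, Rain800)
baselines = {
    "JORDER": (23.54, 36.11, 23.47),
    "LPNet": (21.96, 32.12, 22.81),
    "MSPFN": (28.64, 37.67, 25.49),
    "PReNet": (27.81, 36.47, 25.18),
    "RCDNet": (29.27, 38.52, 26.38),
    "MPRNet": (29.32, 37.35, 26.10),
    "BRN": (28.96, 37.71, 25.88),
    "RSGN": (28.31, 37.65, 26.37),
    "EfDeRain": (25.54, 31.51, 24.16),
}
msafnet = (29.91, 39.23, 26.63)
datasets = ("Rain200H", "Rain200L", "Rain800")

def psnr_margins(proposed, others, names):
    """For each dataset, return (best competing method, PSNR margin in dB)."""
    margins = {}
    for i, name in enumerate(names):
        best_method = max(others, key=lambda m: others[m][i])
        margins[name] = (best_method, round(proposed[i] - others[best_method][i], 2))
    return margins

margins = psnr_margins(msafnet, baselines, datasets)
for name, (method, gain) in margins.items():
    print(f"{name}: +{gain:.2f} dB over {method}")
```

On these figures the margin over the best competing method is 0.59 dB on Rain200H, 0.71 dB on Rain200L, and 0.25 dB on Rain800.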

Table 2. Comparison with other methods on the real dataset


Table 3. Results of ablation experiments on the Rain200H dataset

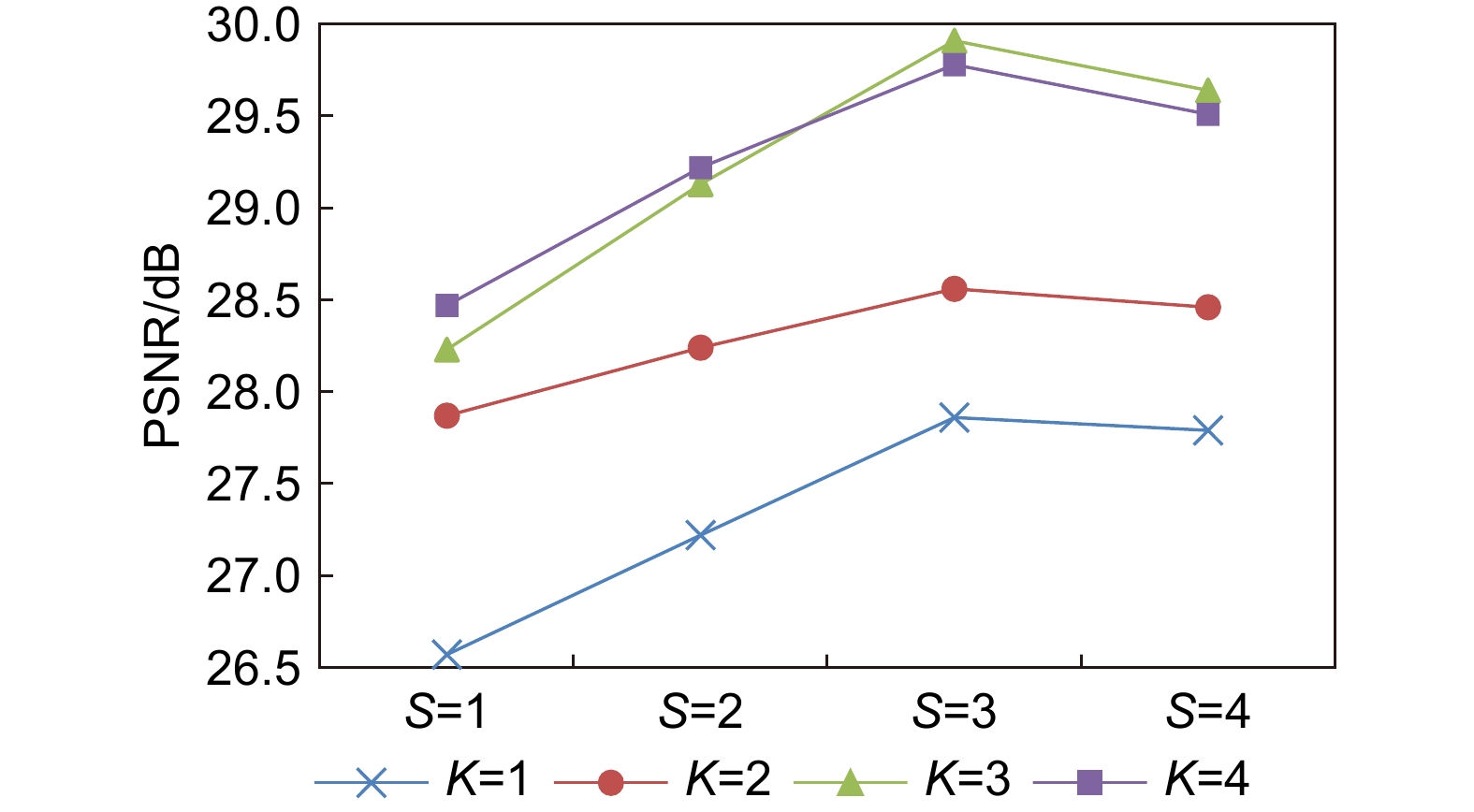

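| No. | Multi-scale subnets | MFA (Subnet) | MFA (MFEB) | PSNR/dB | SSIM |
|---|---|---|---|---|---|
| 1 | × | × | × | 26.76 | 0.871 |
| 2 | √ | × | × | 27.83 | 0.881 |
| 3 | × | × | √ | 27.92 | 0.884 |
| 4 | √ | √ | × | 28.51 | 0.898 |
| 5 | √ | × | √ | 29.47 | 0.916 |
| 6 | √ | √ | √ | 29.91 | 0.921 |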

Table 4. Effect of the number of scales in the subnets and MFEB on PSNR

| | S=1 | S=2 | S=3 | S=4 |
|---|---|---|---|---|
| K=1 | 26.57 | 27.22 | 27.86 | 27.79 |
| K=2 | 27.87 | 28.24 | 28.56 | 28.46 |
| K=3 | 28.23 | 29.13 | 29.91 | 29.64 |
| K=4 | 28.47 | 29.22 | 29.78 | 29.51 |
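The grid in Table 4 can be scanned programmatically to confirm which setting gives the highest PSNR and whether adding a fourth scale helps. The values below are transcribed from Table 4, with K and S used exactly as the table labels them; the helper name `best_setting` is illustrative.

```python
# PSNR (dB) from Table 4: rows are K=1..4, list positions are S=1..4.
psnr_grid = {
    1: [26.57, 27.22, 27.86, 27.79],
    2: [27.87, 28.24, 28.56, 28.46],
    3: [28.23, 29.13, 29.91, 29.64],
    4: [28.47, 29.22, 29.78, 29.51],
}

def best_setting(grid):
    """Return (K, S, PSNR) for the highest PSNR in the grid."""
    cells = (
        (k, s, value)
        for k, row in grid.items()
        for s, value in enumerate(row, start=1)
    )
    return max(cells, key=lambda cell: cell[2])

best = best_setting(psnr_grid)
# Does going from S=3 to S=4 lower PSNR for every K?
s4_always_lower = all(row[3] < row[2] for row in psnr_grid.values())
print(best, s4_always_lower)
```

The maximum is at K=3, S=3 (29.91 dB), and in every row S=4 scores lower than S=3, so within this grid a fourth scale does not pay off.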

[1] Kang L W, Lin C W, Fu Y H. Automatic single-image-based rain streaks removal via image decomposition[J]. IEEE Trans Image Process, 2012, 21(4): 1742–1755. https://doi.org/10.1109/TIP.2011.2179057.

[2] Kim J H, Lee C, Sim J Y, et al. Single-image deraining using an adaptive nonlocal means filter[C]//2013 IEEE International Conference on Image Processing, Melbourne, VIC, Australia, 2013: 914–917. https://doi.org/10.1109/ICIP.2013.6738189.

[3] Li Y, Tan R T, Guo X J, et al. Rain streak removal using layer priors[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 2016: 2736–2744. https://doi.org/10.1109/CVPR.2016.299.

[4] Eigen D, Krishnan D, Fergus R. Restoring an image taken through a window covered with dirt or rain[C]//Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 2013: 633–640. https://doi.org/10.1109/ICCV.2013.84.

[5] Fu X Y, Huang J B, Zeng D L, et al. Removing rain from single images via a deep detail network[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 2017: 1715–1723. https://doi.org/10.1109/CVPR.2017.186.

[6] Zhang H, Patel V M. Density-aware single image de-raining using a multi-stream dense network[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 2018: 695–704. https://doi.org/10.1109/CVPR.2018.00079.

[7] Ren D W, Zuo W M, Hu Q H, et al. Progressive image deraining networks: a better and simpler baseline[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, USA, 2019: 3932–3941. https://doi.org/10.1109/CVPR.2019.00406.

[8] Qian R, Tan R T, Yang W H, et al. Attentive generative adversarial network for raindrop removal from a single image[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 2018: 2482–2491. https://doi.org/10.1109/CVPR.2018.00263.

[9] Wei Y Y, Zhang Z, Wang Y, et al. DerainCycleGAN: rain attentive CycleGAN for single image deraining and rainmaking[J]. IEEE Trans Image Process, 2021, 30: 4788–4801. https://doi.org/10.1109/TIP.2021.3074804.

[10] Huang H B, Yu A J, He R. Memory oriented transfer learning for semi-supervised image deraining[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 2021: 7728–7737. https://doi.org/10.1109/CVPR46437.2021.00764.

[11] Yang W H, Tan R T, Feng J S, et al. Deep joint rain detection and removal from a single image[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 2017: 1685–1694. https://doi.org/10.1109/CVPR.2017.183.

[12] Fu X Y, Liang B R, Huang Y, et al. Lightweight pyramid networks for image deraining[J]. IEEE Trans Neural Netw Learn Syst, 2020, 31(6): 1794–1807. https://doi.org/10.1109/TNNLS.2019.2926481.

[13] Jiang K, Wang Z Y, Yi P, et al. Multi-scale progressive fusion network for single image deraining[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 2020: 8343–8352. https://doi.org/10.1109/CVPR42600.2020.00837.

[14] Wang Z D, Cun X D, Bao J M, et al. Uformer: a general U-shaped transformer for image restoration[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 2022: 17662–17672. https://doi.org/10.1109/CVPR52688.2022.01716.

[15] Chen X, Li H, Li M Q, et al. Learning a sparse transformer network for effective image deraining[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 2023: 5896–5905. https://doi.org/10.1109/CVPR52729.2023.00571.

[16] Liu J J, Hou Q B, Cheng M M, et al. A simple pooling-based design for real-time salient object detection[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 2019: 3912–3921. https://doi.org/10.1109/CVPR.2019.00404.

[17] Gao Z T, Wang L M, Wu G S. LIP: local importance-based pooling[C]//Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea (South), 2019: 3354–3363. https://doi.org/10.1109/ICCV.2019.00345.

[18] Chen L, Zhang J L, Peng H, et al. Few-shot image classification via multi-scale attention and domain adaptation[J]. Opto-Electron Eng, 2023, 50(4): 220232 (in Chinese). https://doi.org/10.12086/oee.2023.220232.

[19] Zhao D D, Ye Y F, Chen P, et al. Sonar image denoising method based on residual and attention network[J]. Opto-Electron Eng, 2023, 50(6): 230017 (in Chinese). https://doi.org/10.12086/oee.2023.230017.

[20] Huang G, Liu Z, Van Der Maaten L, et al. Densely connected convolutional networks[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 2017: 2261–2269. https://doi.org/10.1109/CVPR.2017.243.

[21] Zhao Y Y, Shi S X. Light-field image super-resolution based on multi-scale feature fusion[J]. Opto-Electron Eng, 2020, 47(12): 200007 (in Chinese). https://doi.org/10.12086/oee.2020.200007.

[22] Fan Z W, Wu H F, Fu X Y, et al. Residual-guide feature fusion network for single image deraining[Z]. arXiv: 1804.07493, 2018. https://doi.org/10.48550/arXiv.1804.07493.

[23] Wu X, Huang T Z, Deng L J, et al. A decoder-free transformer-like architecture for high-efficiency single image deraining[C]//Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, Vienna, Austria, 2022: 1474–1480. https://doi.org/10.24963/ijcai.2022/205.

[24] Chen Z, Bi X J, Zhang Y, et al. LightweightDeRain: learning a lightweight multi-scale high-order feedback network for single image de-raining[J]. Neural Comput Appl, 2022, 34(7): 5431–5448. https://doi.org/10.1007/s00521-021-06700-5.

[25] Wang T Y, Yang X, Xu K, et al. Spatial attentive single-image deraining with a high quality real rain dataset[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 2019: 12262–12271. https://doi.org/10.1109/CVPR.2019.01255.

[26] Guo Q, Sun J Y, Xu J F, et al. EfficientDeRain: learning pixel-wise dilation filtering for high-efficiency single-image deraining[C]//Proceedings of the 35th AAAI Conference on Artificial Intelligence, 2021: 1487–1495. https://doi.org/10.1609/aaai.v35i2.16239.

[27] Guo X, Fu X Y, Zhou M, et al. Exploring Fourier prior for single image rain removal[C]//Proceedings of the Thirty-First International Joint Conference on Artificial Intelligence, Vienna, Austria, 2022: 935–941. https://doi.org/10.24963/ijcai.2022/131.

[28] Wang Z, Bovik A C, Sheikh H R, et al. Image quality assessment: from error visibility to structural similarity[J]. IEEE Trans Image Process, 2004, 13(4): 600–612. https://doi.org/10.1109/TIP.2003.819861.

[29] Zhang H, Sindagi V, Patel V M. Image de-raining using a conditional generative adversarial network[J]. IEEE Trans Circuits Syst Video Technol, 2020, 30(11): 3943–3956. https://doi.org/10.1109/TCSVT.2019.2920407.

[30] Ren D W, Shang W, Zhu P F, et al. Single image deraining using bilateral recurrent network[J]. IEEE Trans Image Process, 2020, 29: 6852–6863. https://doi.org/10.1109/TIP.2020.2994443.

[31] Wang C, Zhu H H, Fan W S, et al. Single image rain removal using recurrent scale-guide networks[J]. Neurocomputing, 2022, 467: 242–255. https://doi.org/10.1016/j.neucom.2021.10.029.

[32] Wang H, Xie Q, Zhao Q, et al. A model-driven deep neural network for single image rain removal[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 2020: 3100–3109. https://doi.org/10.1109/CVPR42600.2020.00317.

[33] Zamir S W, Arora A, Khan S, et al. Multi-stage progressive image restoration[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 2021: 14816–14826. https://doi.org/10.1109/CVPR46437.2021.01458.

[34] Mittal A, Soundararajan R, Bovik A C. Making a “completely blind” image quality analyzer[J]. IEEE Signal Process Lett, 2013, 20(3): 209–212. https://doi.org/10.1109/LSP.2012.2227726.

[35] Liu L X, Liu B, Huang H, et al. No-reference image quality assessment based on spatial and spectral entropies[J]. Signal Process Image Commun, 2014, 29(8): 856–863. https://doi.org/10.1016/j.image.2014.06.006.
