Abstract

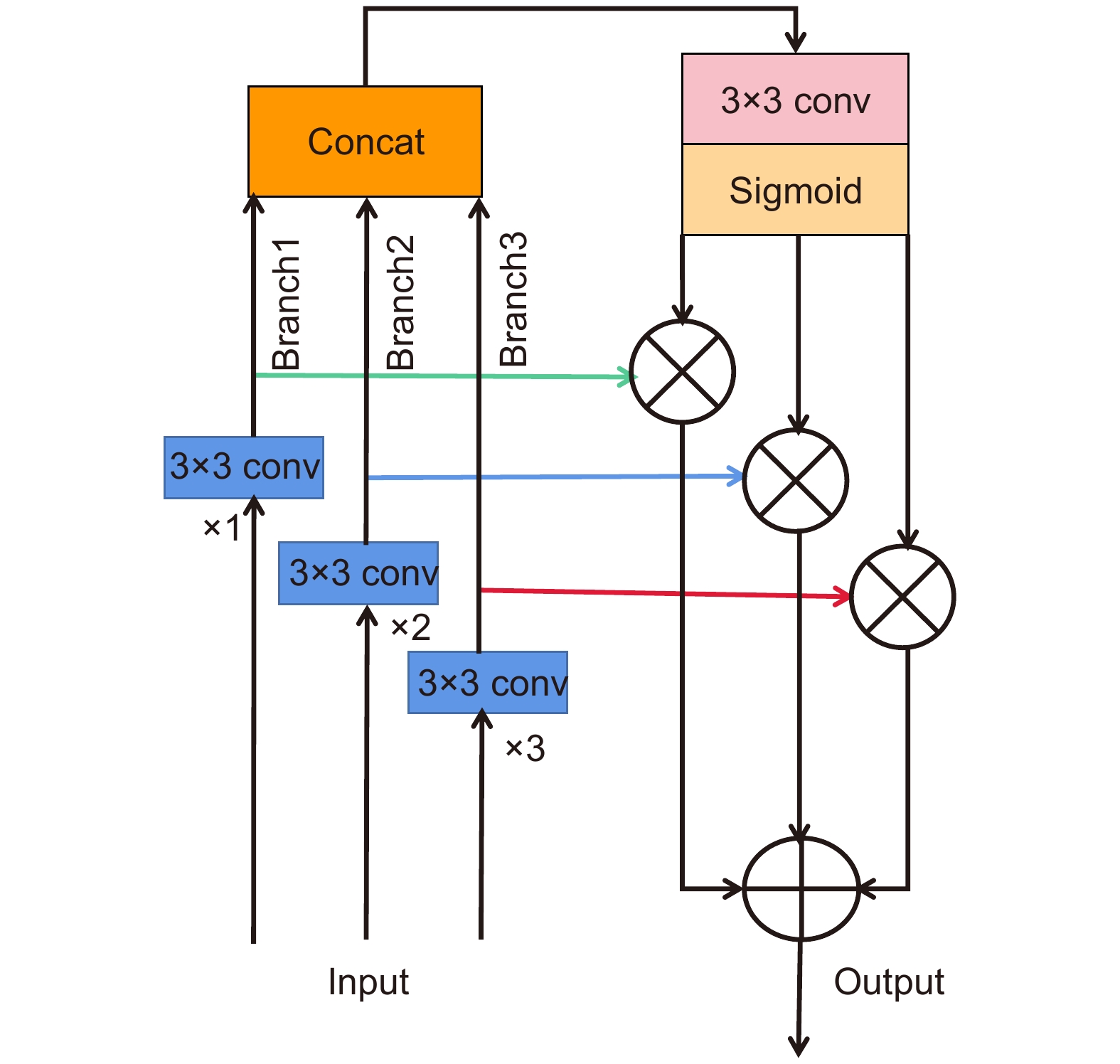

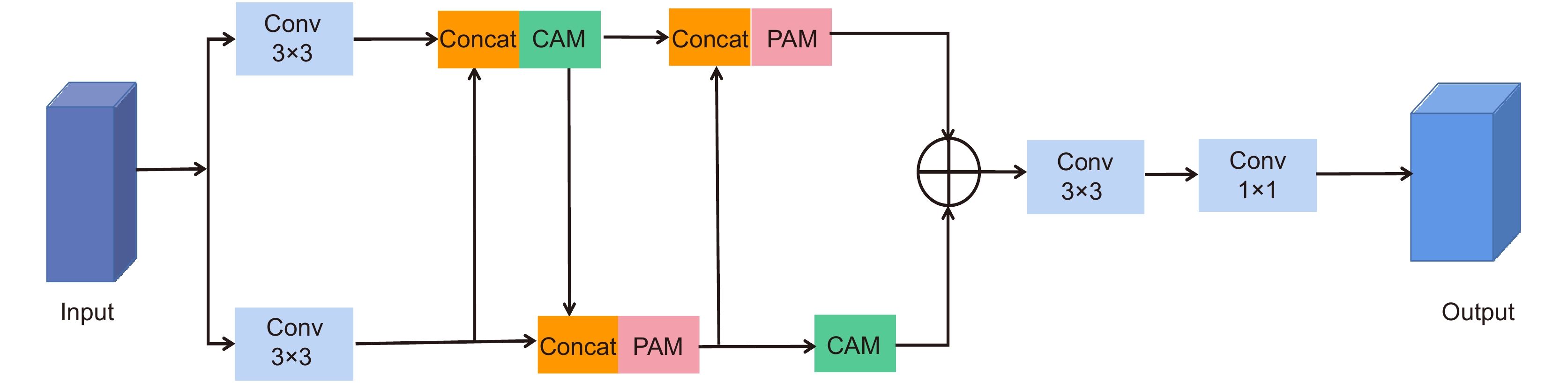

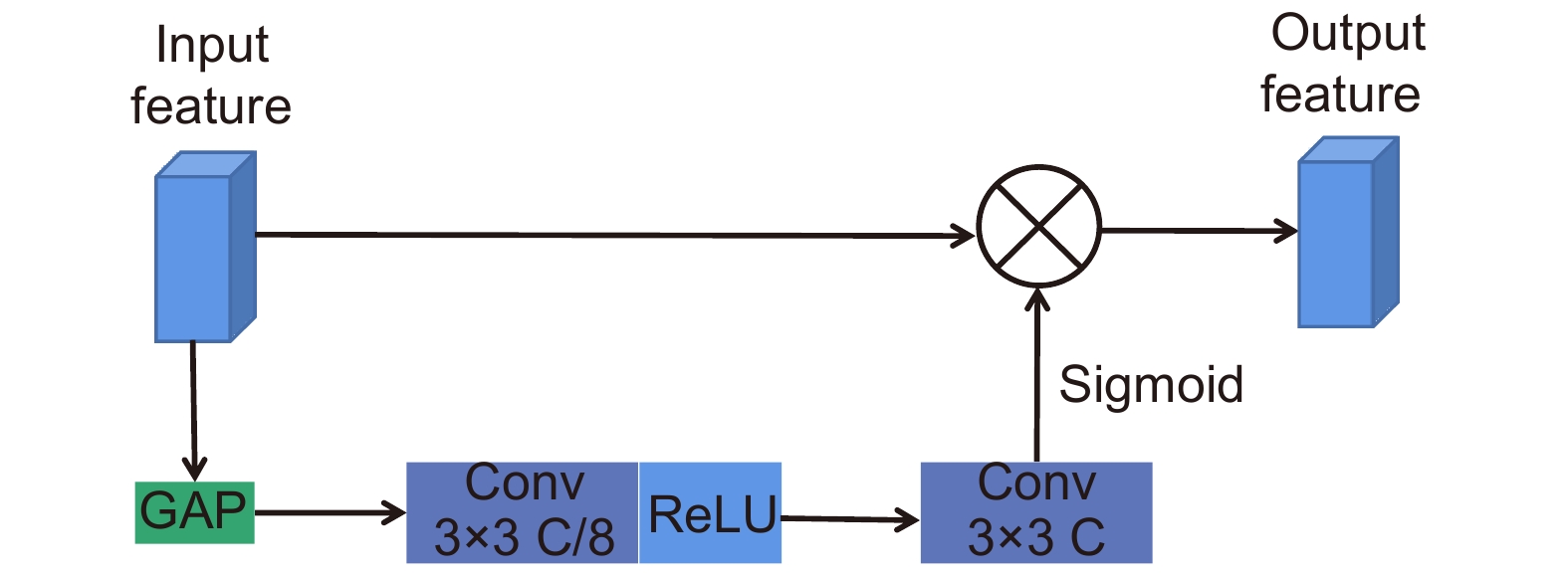

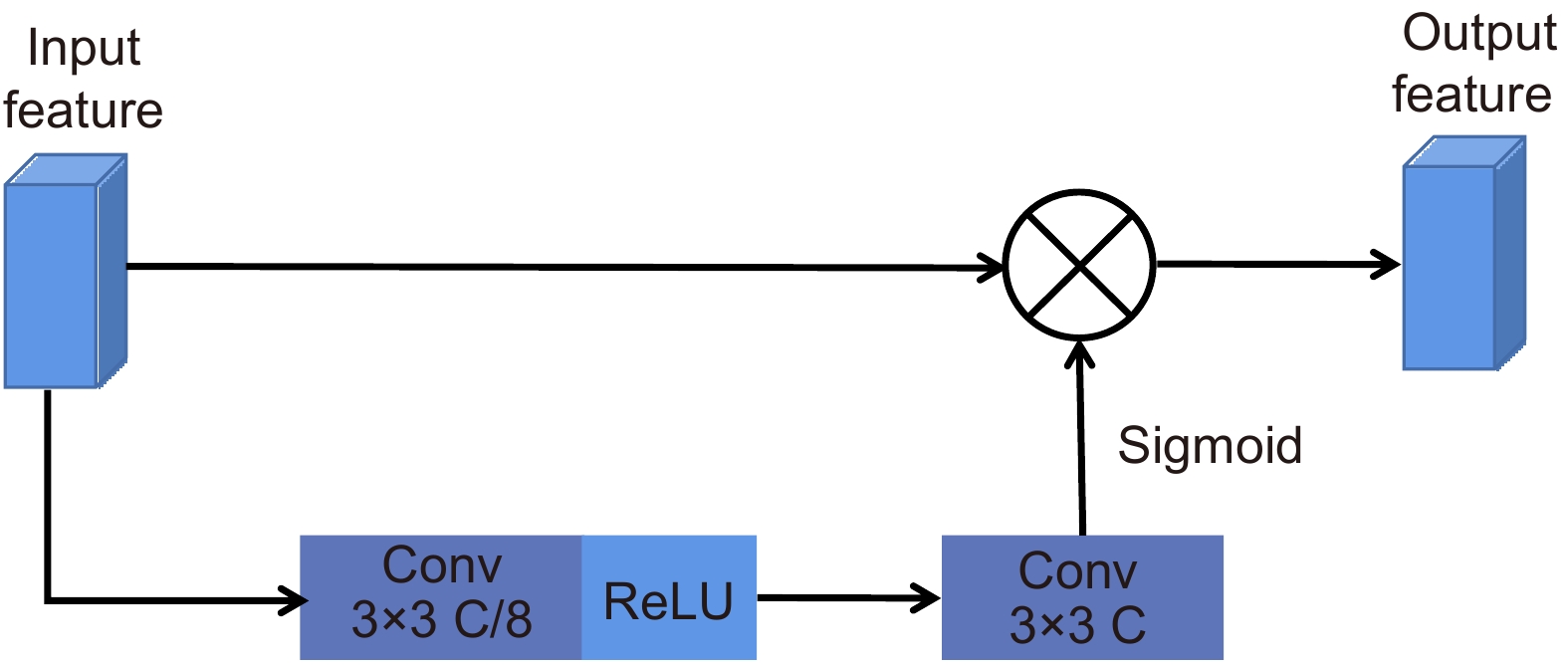

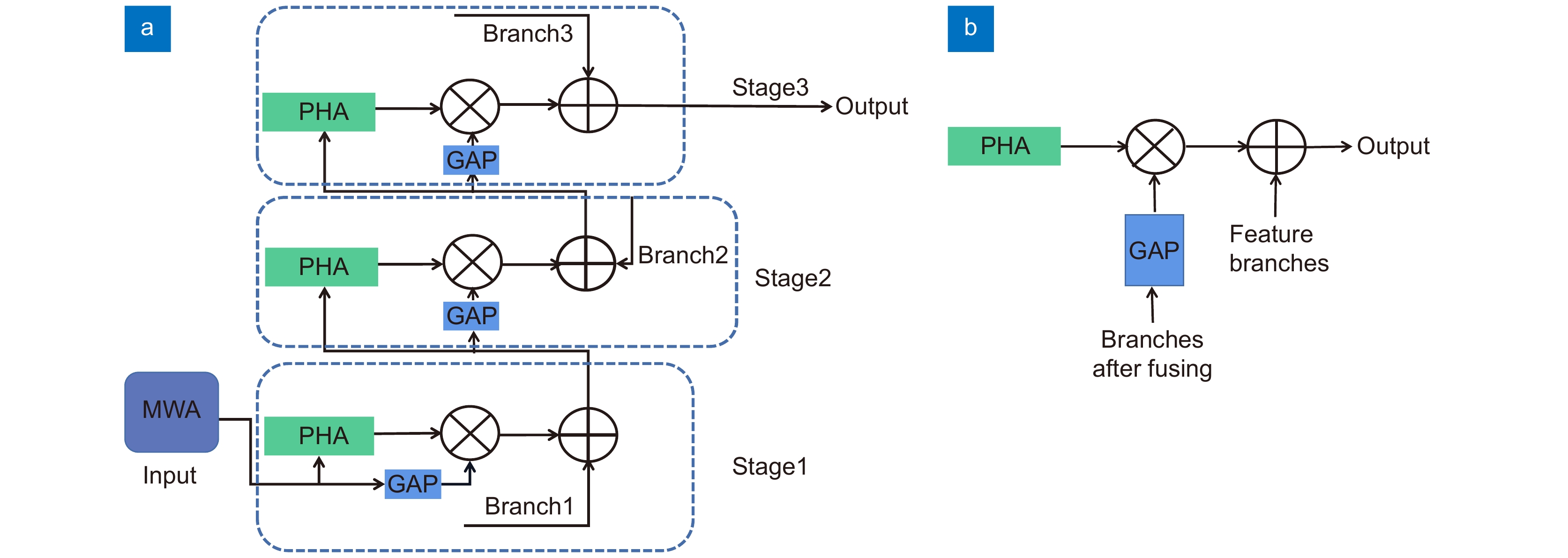

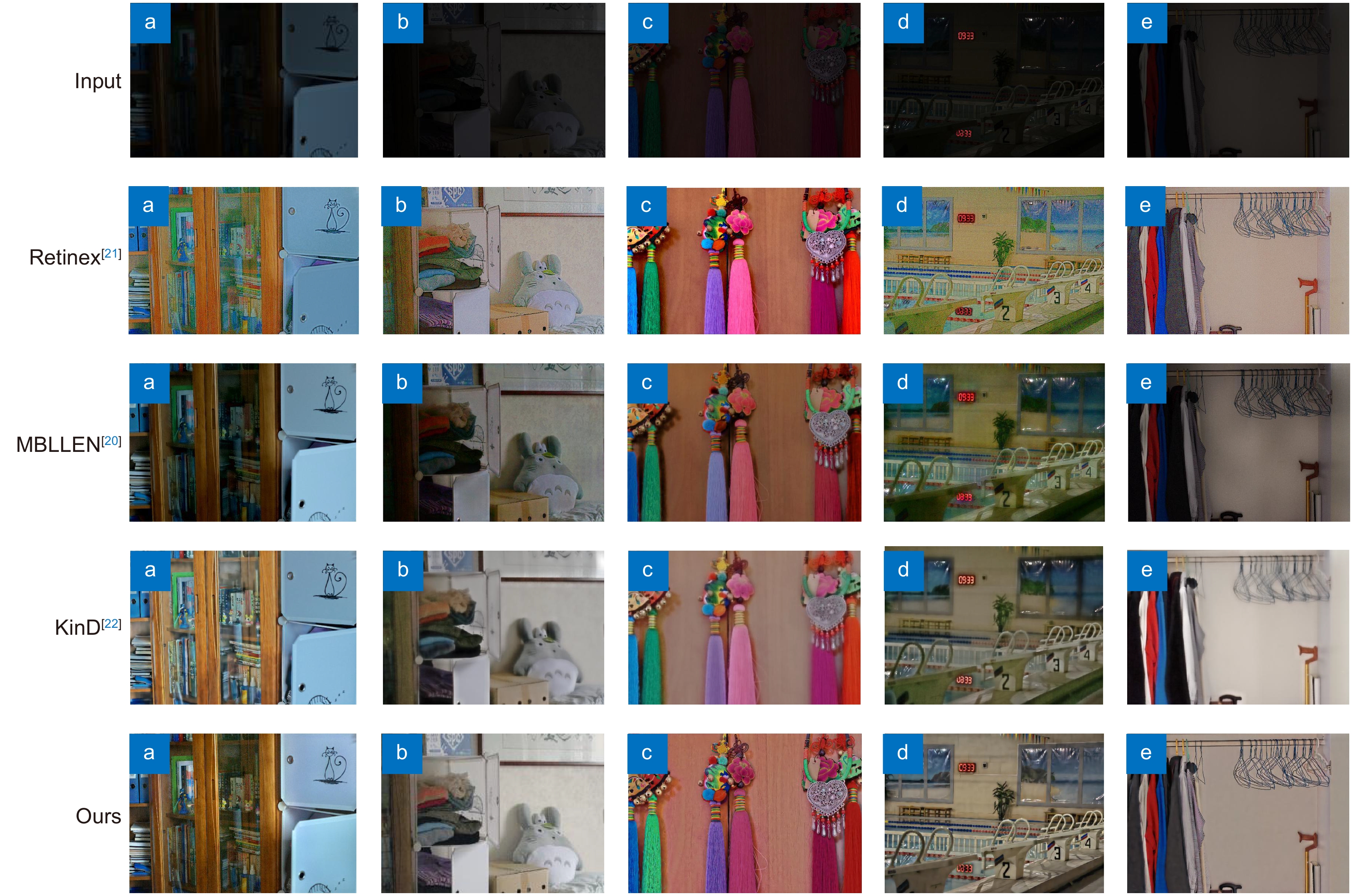

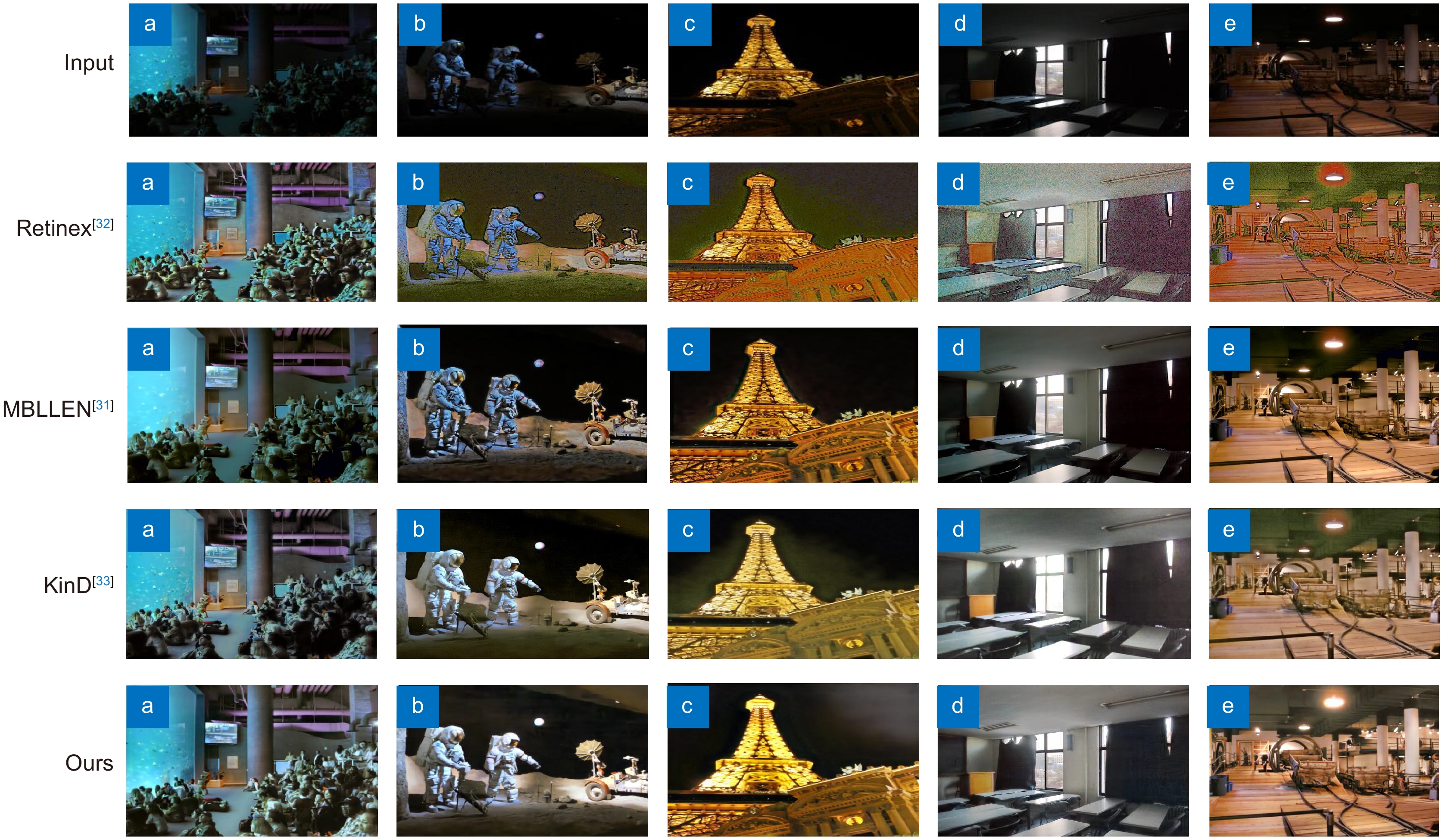

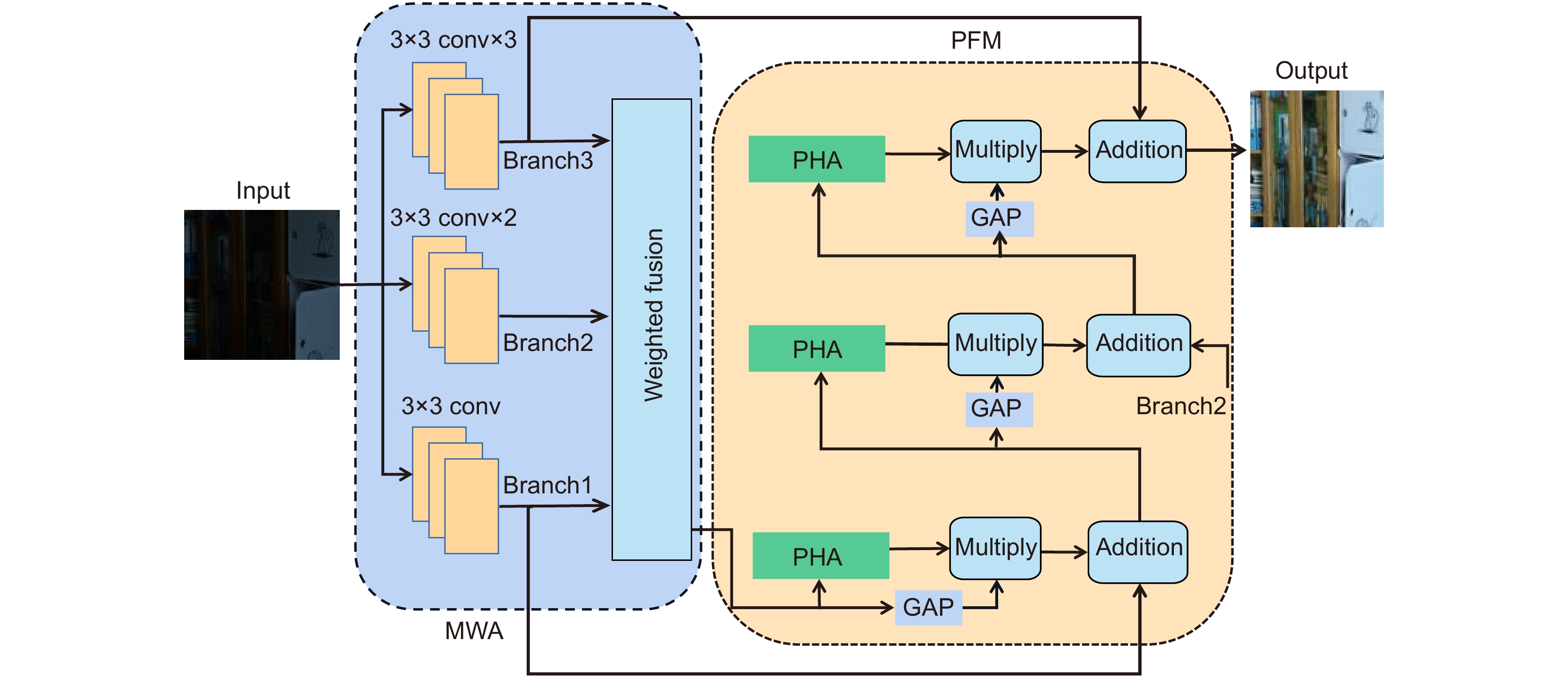

To address color distortion, noise amplification, and loss of detail in low-illumination image enhancement, this paper proposes a progressive fusion method with parallel hybrid attention (PFA). First, a multi-scale weighted aggregation (MWA) network aggregates multi-scale features learned under different receptive fields, which promotes a global representation of local features and strengthens the retention of original image details. Second, a parallel hybrid attention (PHA) module arranges pixel attention and channel attention in parallel, which alleviates the color differences caused by lagging attention distributions across branches; the complementary information exchanged between adjacent attention branches improves color representation and suppresses noise. Finally, a progressive feature fusion module (PFM) reprocesses the input features of the preceding stage from coarse to fine in three stages, which compensates for the loss of shallow features as network depth increases and avoids the information redundancy caused by single-stage feature stacking. Experimental results on the LOL, DICM, MEF, and LIME datasets show that the proposed method outperforms the comparison methods on multiple evaluation metrics.
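The parallel arrangement of pixel attention and channel attention described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names, the weights `w_c` and `w_p`, the sigmoid gates, and the residual sum used to merge the two branches are all assumptions made for illustration. The source states only that the two attentions are combined in parallel.

```python
import numpy as np


def sigmoid(x):
    """Element-wise logistic function used as the attention gate."""
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(feat, w_c):
    """Re-weight each channel of feat (C, H, W) with one scalar gate.

    w_c is a hypothetical (C, C) weight matrix applied to the
    globally average-pooled channel descriptor.
    """
    pooled = feat.mean(axis=(1, 2))            # (C,) global average pooling
    gate = sigmoid(w_c @ pooled)               # (C,) one gate per channel
    return feat * gate[:, None, None]


def pixel_attention(feat, w_p):
    """Re-weight each spatial position of feat (C, H, W) with one gate.

    w_p is a hypothetical (C,) vector acting like a 1x1 convolution
    that maps the C channels to a single attention map.
    """
    gate = sigmoid(np.einsum("c,chw->hw", w_p, feat))  # (H, W)
    return feat * gate[None, :, :]


def parallel_hybrid_attention(feat, w_c, w_p):
    """Run both branches on the same input and merge them.

    Assumption: the branches are merged by summation plus a residual
    connection; the source does not specify the merge operation.
    """
    return feat + channel_attention(feat, w_c) + pixel_attention(feat, w_p)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    features = rng.normal(size=(4, 8, 8))
    out = parallel_hybrid_attention(
        features, rng.normal(size=(4, 4)), rng.normal(size=4)
    )
    print(out.shape)
```

Because both branches read the same input rather than one feeding the other, neither attention map is computed from features already filtered by the other, which is one way to read the "distribution lag" that the parallel design is meant to avoid.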

Overview

In many real-world scenarios, acquiring high-quality images is a key factor in achieving high accuracy in object detection, image segmentation, autonomous driving, medical surgery, and other tasks. However, images and videos captured by electronic devices are highly susceptible to environmental factors such as poor lighting, which leads to low brightness, color distortion, heavy noise, and loss of useful detail and texture information, and this creates many difficulties for subsequent tasks. Low-illumination image enhancement generally restores image clarity by increasing brightness, removing noise, and restoring color. In recent years, deep neural networks, with their strong nonlinear fitting ability, have achieved good results in low-illumination enhancement, image deblurring, and related fields. However, existing low-illumination enhancement algorithms tend to cause color imbalance when raising brightness and contrast, and they easily overlook the influence of noise. To address these problems, this paper proposes an image enhancement method based on progressive fusion with parallel hybrid attention. By weighting the multi-scale branches and exploiting the limited correlation among the local features they extract, the method lets local image details under multiple receptive fields complement one another. Parallel hybrid attention attends to color information and illumination features at the same time, which improves the network's detail representation and reduces noise. Finally, shallow feature information is fused over multiple stages to alleviate the weakening of color expression caused by increasing network depth and the model confusion caused by single-stage feature stacking.

Ablation experiments, multi-stage module experiments, and comparisons with existing advanced methods on four commonly used datasets under multiple evaluation metrics show that the proposed method outperforms the comparison methods on multiple metrics and can effectively improve overall image brightness, correct color imbalance, and remove noise. In light of the network's remaining shortcomings, simplifying the model and improving its running speed will be the key direction of follow-up research.
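The weighting of multi-scale branches mentioned above can be sketched as follows. This is a hedged illustration, not the paper's MWA network: mean (box) filters of size 1, 3, and 5 stand in for learned convolutions with different receptive fields, and the softmax-normalized `logits` are hypothetical branch weights that a real network would learn.

```python
import numpy as np


def box_filter(img, k):
    """Mean filter with a k x k window (k odd) and edge padding.

    Stand-in for a learned convolution with a k x k receptive field.
    """
    pad = k // 2
    padded = np.pad(img, pad, mode="edge")
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + h, dx:dx + w]
    return out / (k * k)


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - np.max(x))
    return e / e.sum()


def weighted_aggregation(img, kernel_sizes=(1, 3, 5), logits=None):
    """Blend the branch outputs with softmax-normalized weights.

    logits are hypothetical per-branch scores; equal weights are used
    when none are given.
    """
    if logits is None:
        logits = np.zeros(len(kernel_sizes))
    weights = softmax(np.asarray(logits, dtype=float))
    branches = [box_filter(img, k) for k in kernel_sizes]
    return sum(w * b for w, b in zip(weights, branches))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((16, 16))
    fused = weighted_aggregation(image, logits=[0.5, 0.3, 0.2])
    print(fused.shape)
```

Since the weights sum to one, the aggregated output stays in the same intensity range as the branch outputs, with the small-kernel branch preserving fine detail and the large-kernel branches contributing wider context.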

Table 1. Quantitative comparison with advanced image enhancement methods on the LOL dataset

| Methods | SSIM$\uparrow$ | PSNR$\uparrow$/dB | LPIPS$\downarrow$ | GMSD$\downarrow$ | FSIM$\uparrow$ | UQI$\uparrow$ |
| --- | --- | --- | --- | --- | --- | --- |
| LIME[19] | 0.5649 | 16.7586 | 0.4183 | 0.1541 | 0.8549 | 0.8805 |
| MBLLEN[20] | 0.7247 | 17.8583 | 0.3672 | 0.1160 | 0.9262 | 0.8261 |
| Retinex[21] | 0.5997 | 16.7740 | 0.4249 | 0.1549 | 0.8642 | 0.9110 |
| KinD[22] | 0.8025 | 20.8741 | 0.5137 | **0.0888** | **0.9397** | 0.9250 |
| EnGAN[23] | 0.6515 | 17.4828 | 0.3903 | 0.1046 | 0.9226 | 0.8499 |
| Zero-DCE[24] | 0.5623 | 14.8671 | 0.3852 | 0.1646 | 0.9276 | 0.7205 |
| GLAD[25] | 0.7247 | 19.7182 | 0.3994 | 0.2035 | 0.9329 | 0.9204 |
| Ours | **0.9053** | **21.8939** | **0.3557** | 0.1035 | 0.9381 | **0.9266** |

($\uparrow$ indicates that a larger value is better and $\downarrow$ that a smaller value is better; the best result in each column is shown in bold.)

Table 2. Quantitative comparison with advanced image enhancement methods on the LIME, DICM, and MEF datasets

Table 3. Quantitative comparison after adding different network modules on the LOL dataset

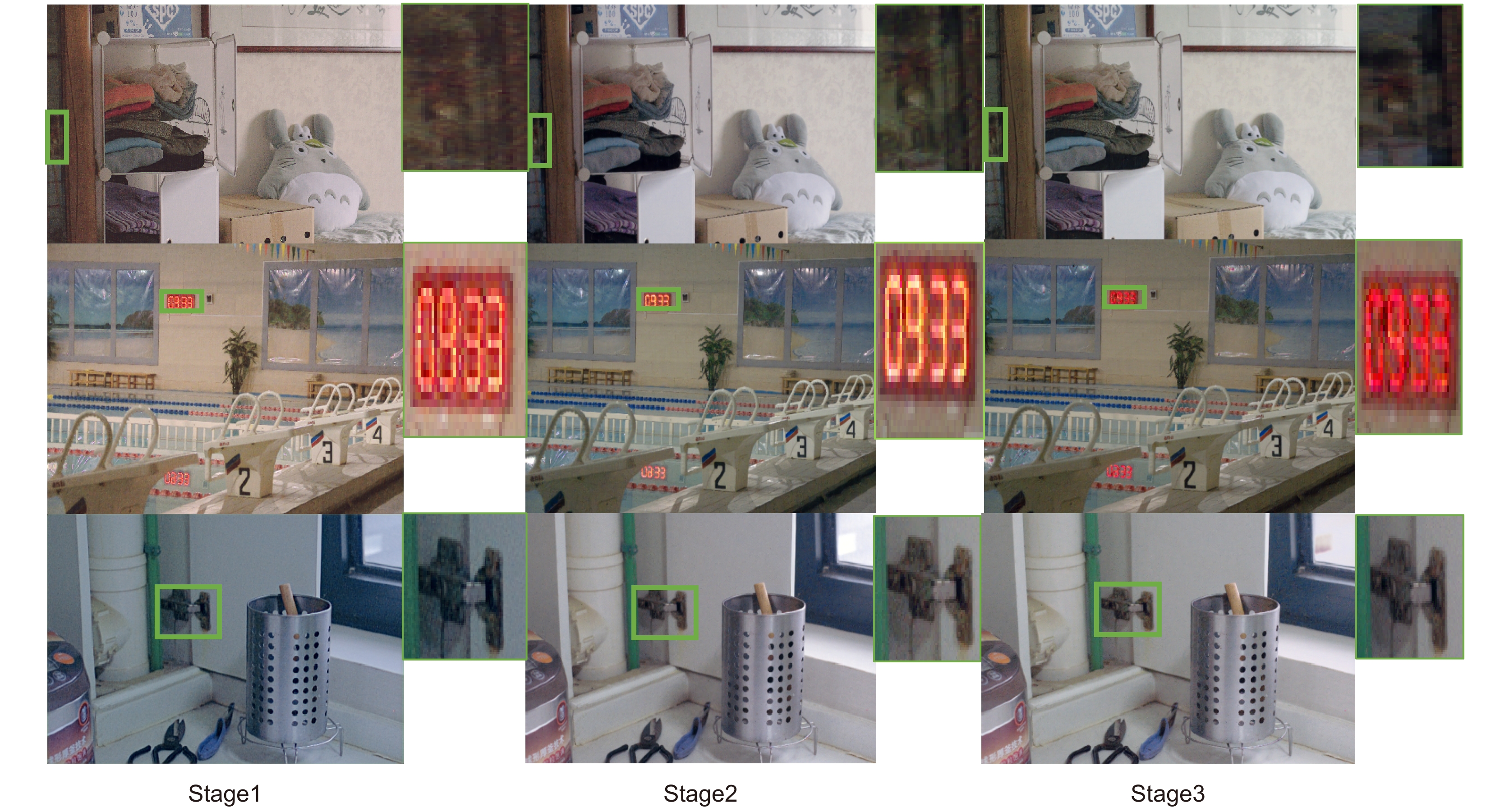

| Methods | PSNR/dB | SSIM |
| --- | --- | --- |
| Baseline | 18.44 | 0.73 |
| w/o PHA and PFM, with MWA | 19.07 | 0.78 |
| With PHA, w/o MWA and PFM | 20.53 | 0.84 |
| Ours | 21.87 | 0.89 |

Table 4. Quantitative results of progressive feature fusion after enhancement at different stages

| Stage | PSNR/dB | SSIM |
| --- | --- | --- |
| With 1, w/o 2 and 3 | 20.08 | 0.76 |
| With 1 and 2, w/o 3 | 20.91 | 0.83 |
| With 1, 2, and 3 | 21.53 | 0.87 |
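The tables report PSNR in dB. As a reminder of how that metric is conventionally computed, the sketch below uses the standard definition, $10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$. The function name `psnr` and the default peak value of 255 (8-bit images) are assumptions; the source does not state which implementation or peak value the authors used.

```python
import numpy as np


def psnr(reference, enhanced, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two same-shaped images.

    max_value is the assumed peak intensity (255 for 8-bit images,
    1.0 for images scaled to [0, 1]).
    """
    reference = np.asarray(reference, dtype=np.float64)
    enhanced = np.asarray(enhanced, dtype=np.float64)
    mse = np.mean((reference - enhanced) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ground_truth = rng.integers(0, 256, size=(32, 32, 3))
    noisy = np.clip(ground_truth + rng.normal(0, 10, ground_truth.shape), 0, 255)
    print(f"PSNR: {psnr(ground_truth, noisy):.2f} dB")
```

A higher PSNR means the enhanced image is closer to the reference in a mean-squared-error sense, which is why the metric is marked with $\uparrow$ in Table 1.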

References

[1] Jiang Y F, Gong X Y, Liu D, et al. EnlightenGAN: deep light enhancement without paired supervision[J]. IEEE Trans Image Process, 2021, 30: 2340−2349. doi: 10.1109/TIP.2021.3051462

[2] Xiao J S, Shan S S, Duan P F, et al. A fast image enhancement algorithm based on fusion of different color spaces[J]. Acta Autom Sin, 2014, 40(4): 697−705. doi: 10.3724/SP.J.1004.2014.00697

[3] Pei S C, Shen C T. Color enhancement with adaptive illumination estimation for low-backlighted displays[J]. IEEE Trans Multimedia, 2017, 19(8): 1956−1961. doi: 10.1109/TMM.2017.2688924

[4] Sahoo S, Nanda P K. Adaptive feature fusion and spatio-temporal background modeling in KDE framework for object detection and shadow removal[J]. IEEE Trans Circuits Syst Video Technol, 2022, 32(3): 1103−1118. doi: 10.1109/TCSVT.2021.3074143

[5] Zheng C J, Shi D M, Shi W T. Adaptive unfolding total variation network for low-light image enhancement[C]//Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision, 2021: 4419–4428. https://doi.org/10.1109/ICCV48922.2021.00440.

[6] Tian Q C, Cohen D. Global and local contrast adaptive enhancement for non-uniform illumination color images[C]//2017 IEEE International Conference on Computer Vision Workshops (ICCVW), 2018: 3023–3030. https://doi.org/10.1109/ICCVW.2017.357.

[7] Yang J Q, Wu C, Du B, et al. Enhanced multiscale feature fusion network for HSI classification[J]. IEEE Trans Geosci Remote Sens, 2021, 59(12): 10328−10347. doi: 10.1109/TGRS.2020.3046757

[8] Fan G D, Fan B, Gan M, et al. Multiscale low-light image enhancement network with illumination constraint[J]. IEEE Trans Circuits Syst Video Technol, 2022, 32(11): 7403−7417. doi: 10.1109/TCSVT.2022.3186880

[9] Zou D P, Yang B. Infrared and low-light visible image fusion based on hybrid multiscale decomposition and adaptive light adjustment[J]. Opt Lasers Eng, 2023, 160: 107268. doi: 10.1016/j.optlaseng.2022.107268

[10] Zhao S Y, Liu J Z, Wu S. Multiple disease detection method for greenhouse-cultivated strawberry based on multiscale feature fusion Faster R_CNN[J]. Comput Electron Agric, 2022, 199: 107176. doi: 10.1016/j.compag.2022.107176

[11] Huang Z X, Li J T, Hua Z. Attention-based for multiscale fusion underwater image enhancement[J]. KSII Trans Internet Inf Syst, 2022, 16(2): 544−564. doi: 10.3837/tiis.2022.02.010

[12] Zhou Y, Chen Z H, Sheng B, et al. AFF-Dehazing: Attention-based feature fusion network for low-light image Dehazing[J]. Comput Anim Virtual Worlds, 2021, 32(3–4): e2011. doi: 10.1002/cav.2011

[13] Yan Q S, Wang B, Zhang W, et al. Attention-guided deep neural network with multi-scale feature fusion for liver vessel segmentation[J]. IEEE J Biomed Health Inf, 2021, 25(7): 2629−2642. doi: 10.1109/JBHI.2020.3042069

[14] Xu X T, Li J J, Hua Z, et al. Attention-based multi-channel feature fusion enhancement network to process low-light images[J]. IET Image Process, 2022, 16(12): 3374−3393. doi: 10.1049/ipr2.12571

[15] Lv F F, Li Y, Lu F. Attention guided low-light image enhancement with a large scale low-light simulation dataset[J]. Int J Comput Vision, 2021, 129(7): 2175−2193. doi: 10.1007/s11263-021-01466-8

[16] Li J J, Feng X M, Hua Z. Low-light image enhancement via progressive-recursive network[J]. IEEE Trans Circuits Syst Video Technol, 2021, 31(11): 4227−4240. doi: 10.1109/TCSVT.2021.3049940

[17] Wang J, Song K C, Bao Y Q, et al. CGFNet: Cross-guided fusion network for RGB-T salient object detection[J]. IEEE Trans Circuits Syst Video Technol, 2022, 32(5): 2949−2961. doi: 10.1109/TCSVT.2021.3099120

[18] Sun Y P, Chang Z Y, Zhao Y, et al. Progressive two-stage network for low-light image enhancement[J]. Micromachines, 2021, 12(12): 1458. doi: 10.3390/mi12121458

[19] Tomosada H, Kudo T, Fujisawa T, et al. GAN-based image deblurring using DCT loss with customized datasets[J]. IEEE Access, 2021, 9: 135224−135233. doi: 10.1109/ACCESS.2021.3116194

[20] Tu Z Z, Talebi H, Zhang H, et al. MAXIM: multi-axis MLP for image processing[C]//2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022: 5759-5770. https://doi.org/10.1109/CVPR52688.2022.00568.

[21] Liu R S, Ma L, Zhang J A, et al. Retinex-inspired unrolling with cooperative prior architecture search for low-light image enhancement[C]//Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021: 10556–10565. https://doi.org/10.1109/CVPR46437.2021.01042.

[22] Wu W H, Weng J, Zhang P P, et al. URetinex-Net: Retinex-based deep unfolding network for low-light image enhancement[C]//Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2022: 5891–5900. https://doi.org/10.1109/CVPR52688.2022.00581.

[23] Zamir S W, Arora A, Khan S, et al. Multi-stage progressive image restoration[C]//Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021: 14816–14826. https://doi.org/10.1109/CVPR46437.2021.01458.

[24] Kupyn O, Martyniuk T, Wu J R, et al. DeblurGAN-v2: Deblurring (orders-of-magnitude) faster and better[C]//Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, 2019: 8877–8886. https://doi.org/10.1109/ICCV.2019.00897.

[25] Zhang K H, Ren W Q, Luo W H, et al. Deep image deblurring: a survey[J]. Int J Comput Vision, 2022, 130(9): 2103−2130. doi: 10.1007/s11263-022-01633-5

[26] Singh N, Bhandari A K. Principal component analysis-based low-light image enhancement using reflection model[J]. IEEE Trans Instrum Meas, 2021, 70: 5012710. doi: 10.1109/TIM.2021.3096266

[27] Szalai S, Szürke S K, Harangozó D, et al. Investigation of deformations of a lithium polymer cell using the Digital Image Correlation Method (DICM)[J]. Rep Mech Eng, 2022, 3(1): 116−134. doi: 10.31181/rme20008022022s

[28] Liang J, Zhang A R, Xu J, et al. Fusion-correction network for single-exposure correction and multi-exposure fusion[Z]. arXiv: 2203.03624, 2022. https://doi.org/10.48550/arXiv.2203.03624.

[29] Wu L Y, Zhang X G, Chen H, et al. VP-NIQE: an opinion-unaware visual perception natural image quality evaluator[J]. Neurocomputing, 2021, 463: 17−28. doi: 10.1016/j.neucom.2021.08.048

[30] Singh K, Parihar A S. A comparative analysis of illumination estimation based Image Enhancement techniques[C]//2020 International Conference on Emerging Trends in Information Technology and Engineering (ic-ETITE), 2020: 1–5. https://doi.org/10.1109/ic-ETITE47903.2020.195.

[31] Agrawal A, Jadhav N, Gaur A, et al. Improving the accuracy of object detection in low light conditions using multiple Retinex theory-based image enhancement algorithms[C]//2022 Second International Conference on Advances in Electrical, Computing, Communication and Sustainable Technologies (ICAECT), Bhilai, 2022: 1–5. https://doi.org/10.1109/ICAECT54875.2022.9808011.

[32] Wang P, Wang Z W, Lv D, et al. Low illumination color image enhancement based on Gabor filtering and Retinex theory[J]. Multimed Tools Appl, 2021, 80(12): 17705−17719. doi: 10.1007/s11042-021-10607-7

[33] Feng X M, Li J J, Hua Z, et al. Low-light image enhancement based on multi-illumination estimation[J]. Appl Intell, 2021, 51(7): 5111−5131. doi: 10.1007/s10489-020-02119-y

[34] Shi Y M, Wang B Q, Wu X P, et al. Unsupervised low-light image enhancement by extracting structural similarity and color consistency[J]. IEEE Signal Process Lett, 2022, 29: 997−1001. doi: 10.1109/LSP.2022.3163686

[35] Krishnan N, Shone S J, Sashank C S, et al. A hybrid Low-light image enhancement method using Retinex decomposition and deep light curve estimation[J]. Optik, 2022, 260: 169023. doi: 10.1016/j.ijleo.2022.169023

[36] Zhou D X, Qian Y R, Ma Y Y, et al. Low illumination image enhancement based on multi-scale CycleGAN with deep residual shrinkage[J]. J Intell Fuzzy Syst, 2022, 42(3): 2383−2395. doi: 10.3233/JIFS-211664

[37] Liu F J, Hua Z, Li J J, et al. Low-light image enhancement network based on recursive network[J]. Front Neurorobot, 2022, 16: 836551. doi: 10.3389/fnbot.2022.836551
