Small object detection based on multi-scale feature fusion using remote sensing images


Abstract

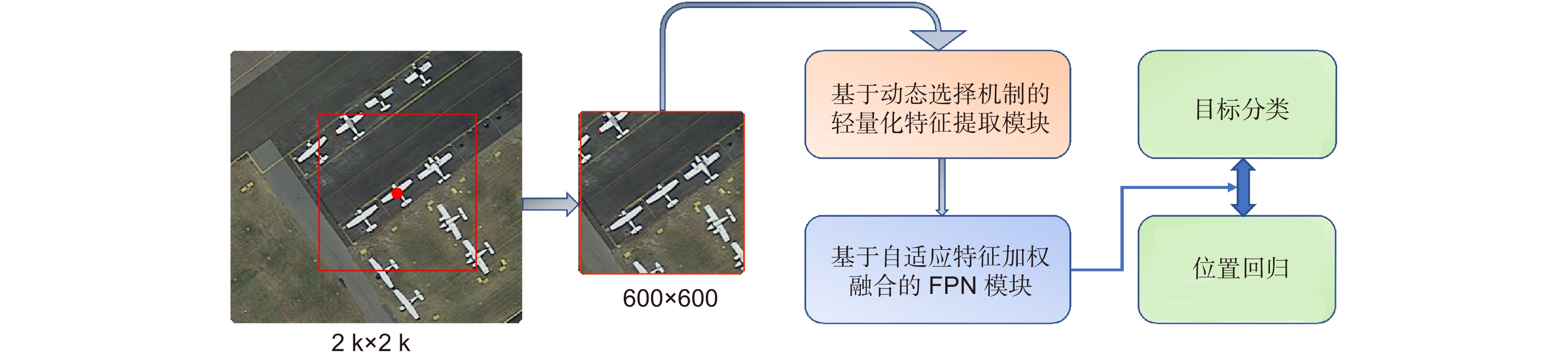

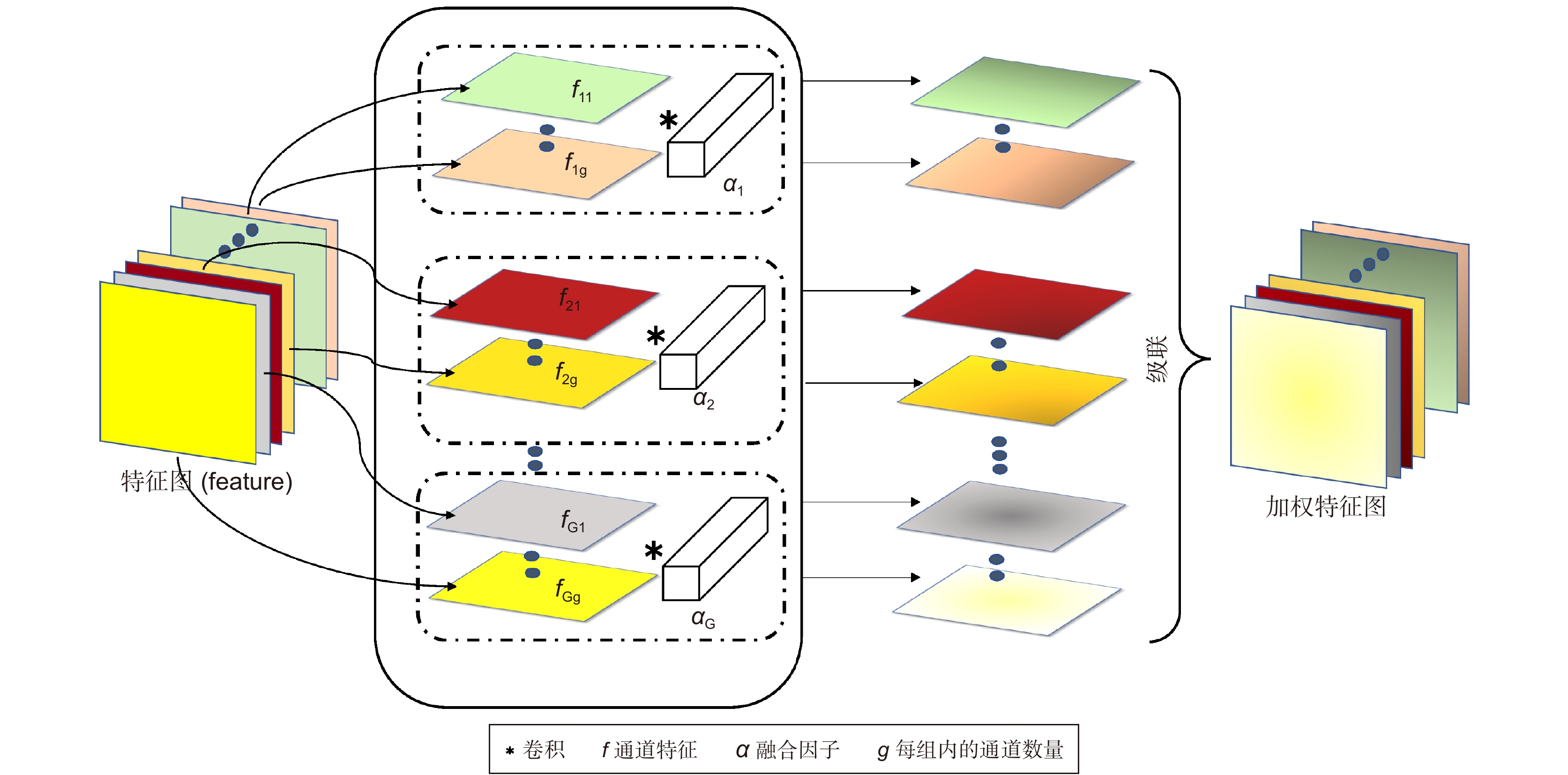

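This paper proposes a robust small object detection method for remote sensing images based on multi-scale feature fusion. Commonly used feature extraction networks have a very large number of parameters. Their excessive downsampling may make small objects disappear. In addition, a model pre-trained on natural images may suffer from a feature gap when it is applied directly to remote sensing images. Therefore, based on the size distribution of all objects in the dataset (i.e., prior knowledge), we first propose a lightweight feature extraction module based on a dynamic selection mechanism. This module allows each neuron to adaptively allocate the receptive field size used for detection according to object scale, and it allows the model to be trained quickly from scratch. Second, features at different scales carry different amounts of information and emphasize different aspects. We therefore propose an FPN (feature pyramid networks) module based on adaptive weighted feature fusion. It uses grouped convolution to divide all feature channels into groups that do not affect each other, which increases the accuracy of the image feature representation. In addition, deep learning is data-driven, but remote sensing small object datasets are scarce. We therefore built a remote sensing small aircraft dataset and processed the aircraft and small-vehicle objects in the DOTA dataset so that their size distribution suits the small object detection task. Experimental results show that, compared with most mainstream detection methods, the proposed method achieves better results on DOTA and on the self-built dataset.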

Abstract

This paper proposes a robust small object detection method for remote sensing images based on multi-scale feature fusion. Widely used feature extraction networks have a large number of parameters, and their excessive downsampling may cause small objects to disappear. A model pre-trained on natural images may also suffer from a feature gap when it is applied directly to remote sensing images. Therefore, based on the size distribution of all objects in the dataset (i.e., prior knowledge), we first design a lightweight feature extraction module with a dynamic selection mechanism. This mechanism allows each neuron to adaptively allocate its receptive field size for detection. Meanwhile, features at different scales carry different amounts of information and emphasize different aspects. To improve the accuracy of the feature representation, we apply an FPN (feature pyramid networks) module based on adaptive weighted feature fusion, which uses grouped convolution to divide all feature channels into groups that do not affect each other. In addition, deep learning requires large amounts of training data, but remote sensing small object datasets are scarce. We therefore built a remote sensing small aircraft (plane) dataset and processed the plane and small-vehicle objects in the DOTA dataset so that their size distribution meets the requirements of small object detection. Experimental results show that, compared with most mainstream detection methods, the proposed method achieves better results on DOTA and on the self-built dataset.
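The abstract does not spell out how the dynamic selection mechanism works. The sketch below assumes a selective-kernel style unit, as in SKNet, which may differ from the paper's module. Two or more branches with different receptive fields are summed and globally pooled into one descriptor per channel. A softmax across branches, computed separately for each channel, then decides how much of each branch that channel keeps.

Feature maps are plain nested lists. The per-branch weight matrices (`branch_weights`) are hypothetical stand-ins for learned layers. For brevity, SKNet's reduction bottleneck is folded into one matrix per branch.

```python
import math
from typing import List

Channel = List[float]        # one channel, spatial positions flattened
FeatureMap = List[Channel]   # C channels


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)                               # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_select(branches: List[FeatureMap],
                   branch_weights: List[List[List[float]]]) -> FeatureMap:
    """Fuse K same-shape branches with per-channel attention over the branches.

    Assumption: SKNet-style selection, not necessarily the paper's exact unit.
    branches[k][c]    -- channel c of branch k (e.g. a 3x3 conv and a dilated 3x3 conv)
    branch_weights[k] -- hypothetical C x C matrix that produces the logits of branch k
    """
    num_branches, num_channels = len(branches), len(branches[0])
    # 1) Fuse: element-wise sum of all branches.
    fused = [[sum(b[c][i] for b in branches) for i in range(len(branches[0][c]))]
             for c in range(num_channels)]
    # 2) Squeeze: global average pooling gives one descriptor per channel.
    s = [sum(ch) / len(ch) for ch in fused]
    # 3) Per-branch logits: logits[k][c] = sum_j W_k[c][j] * s[j].
    logits = [[sum(w[c][j] * s[j] for j in range(num_channels)) for c in range(num_channels)]
              for w in branch_weights]
    # 4) Select: softmax across branches, independently for every channel.
    out: FeatureMap = []
    for c in range(num_channels):
        att = softmax([logits[k][c] for k in range(num_branches)])
        out.append([sum(att[k] * branches[k][c][i] for k in range(num_branches))
                    for i in range(len(branches[0][c]))])
    return out
```

With all-zero weights the attention is uniform, so each output channel is the mean of the branches. A large weight on one branch makes that channel keep mostly that branch, and therefore that branch's receptive field.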


Keywords:

- multi-scale features
- small object detection
- feature fusion
- scene complexity


Overview

In recent years, optical remote sensing technology has developed continuously, and large numbers of high-resolution remote sensing images have become available. These images have supported environmental monitoring, animal protection, national defense and military applications. Among the many visual tasks on remote sensing images, aircraft detection is of great significance for both civil and defense purposes, which makes research on small object detection in remote sensing images important. Object detection methods based on deep learning have achieved excellent results on large and medium objects, but their performance on small objects in remote sensing images remains poor, which limits their application. The main reasons are as follows: 1) the models are large and their real-time performance is poor; 2) remote sensing images are complex and object scales are widely distributed; 3) datasets for remote sensing small object detection are extremely scarce.

To solve these problems, this paper proposes a robust small object detection method for remote sensing images based on multi-scale feature fusion. The main contributions are as follows.

First, an image fed into a common neural network (such as ResNet or VGG-16) is downsampled and convolved many times. As a result, the features of small objects are severely lost and the final detection accuracy suffers. To address this, based on the size distribution of all objects in the dataset (i.e., prior knowledge), we propose a lightweight feature extraction module with a dynamic selection mechanism. It allows each neuron to adaptively allocate its receptive field size for detection, and it sets the number of downsampling operations according to the object scales.

Second, FPN is widely used to reduce missed detections of small objects, but features at different scales usually carry different amounts of information and emphasize different aspects. We therefore propose an FPN module based on adaptive weighted feature fusion. It uses grouped convolution to divide all feature channels into groups that do not affect each other, which further improves the accuracy of the feature representation.

Third, to address the lack of remote sensing small object datasets, we built a remote sensing small aircraft (plane) dataset. We also processed the plane and small-vehicle objects in the DOTA-1.5 dataset so that their size distribution meets the requirements of small object detection.

Finally, experiments on DOTA and the self-built dataset show that our method achieves the best results among the compared mainstream detection methods.
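The text describes the adaptive weighted fusion only at a high level. The sketch below is one possible reading, and it is an assumption. In the FPN top-down path, the upsampled coarser level is scaled by a learnable weight before it is added to the lateral feature. The channels are split into equal, contiguous groups, and each group gets its own weight. One group reduces to a single scalar factor per level, while one group per channel gives a per-channel weight, which mirrors the "3 groups" and "per channel" columns of Tables 4 to 6.

The paper states that the grouping is done with grouped convolution. Here each group simply gets one scalar weight, which is a simplification. The default weight of 1.0 follows the better initial value reported in Table 8. All function names are hypothetical.

```python
from typing import List, Optional

Map2D = List[List[float]]   # H x W
Feature = List[Map2D]       # C x H x W


def upsample2x(ch: Map2D) -> Map2D:
    """Nearest-neighbour 2x upsampling of one channel."""
    return [[v for v in row for _ in range(2)] for row in ch for _ in range(2)]


def expand_group_weights(alpha: List[float], channels: int) -> List[float]:
    """Broadcast one weight per group to every channel (equal, contiguous groups)."""
    groups = len(alpha)
    if channels % groups:
        raise ValueError("channel count must be divisible by the number of groups")
    size = channels // groups
    return [alpha[c // size] for c in range(channels)]


def weighted_top_down(lateral: Feature, top: Feature,
                      alpha: Optional[List[float]] = None, groups: int = 1) -> Feature:
    """P_i = L_i + alpha_g * up(P_{i+1}), with a separate (learnable) alpha_g per group.

    Assumption: scalar weights per channel group; alpha defaults to 1.0 for every group.
    """
    if alpha is None:
        alpha = [1.0] * groups
    w = expand_group_weights(alpha, len(lateral))
    out: Feature = []
    for c, (lat, t) in enumerate(zip(lateral, top)):
        up = upsample2x(t)
        out.append([[l + w[c] * u for l, u in zip(lrow, urow)]
                    for lrow, urow in zip(lat, up)])
    return out
```

With the default weights this is the ordinary FPN sum. During training, the group weights would be optimized together with the rest of the network.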



Table 1. Parameters of different networks

| Model | Parameters (M) |
|---|---|
| VGG16 | 138 |
| ResNet50 | 25.6 |
| ResNet101 | 44.6 |
| Ours | 0.49 |
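Table 1 reports whole-network totals. For a single layer, a k×k convolution from C_in to C_out channels with g groups holds k·k·(C_in/g)·C_out weights plus C_out biases, so grouping divides the weight count by g. The helper below is a generic sketch of that count. The 96-channel layer is a hypothetical example, not a layer of the paper's network, and the snippet does not attempt to reproduce the 0.49 M total.

```python
def conv2d_params(kernel: int, c_in: int, c_out: int, groups: int = 1, bias: bool = True) -> int:
    """Learnable parameters of a k x k 2-D convolution with optional channel groups."""
    if c_in % groups or c_out % groups:
        raise ValueError("c_in and c_out must be divisible by groups")
    # Each output channel only sees c_in / groups input channels.
    weights = kernel * kernel * (c_in // groups) * c_out
    return weights + (c_out if bias else 0)


if __name__ == "__main__":
    # Hypothetical 3x3 layer with 96 input and 96 output channels.
    for g in (1, 3, 96):
        print(f"3x3 conv, 96 -> 96 channels, groups={g}: {conv2d_params(3, 96, 96, g)} parameters")
```

This prints 83040, 27744 and 960 parameters for 1, 3 and 96 groups respectively.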

Table 2. Receptive fields of feature maps and the corresponding object sizes

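| Pyramid | Level | Object size (px) | Downsampling factor | Receptive field (px) | Receptive field stride | Receptive field / object size |
|---|---|---|---|---|---|---|
| Two-level | 1 | 6~25 | 4 | 55 | 4 | 3.5 |
|  | 2 | 25~50 | 8 | 95 | 8 | 2.5 |
| Three-level | 1 | 6~10 | 2 | 23 | 2 | 2.9 |
|  | 2 | 10~20 | 4 | 47 | 4 | 3.1 |
|  | 3 | 20~50 | 8 | 79 | 8 | 2.3 |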
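The last column of Table 2 appears to be the receptive field divided by the midpoint of the object size range. This definition is our inference rather than something stated with the table, but it reproduces every reported value. In every row, the receptive-field stride also equals the downsampling factor. The check below uses only the numbers from Table 2.

```python
# (pyramid, level, min size, max size, downsampling, receptive field, RF stride, reported ratio)
TABLE2 = [
    ("two-level", 1, 6, 25, 4, 55, 4, 3.5),
    ("two-level", 2, 25, 50, 8, 95, 8, 2.5),
    ("three-level", 1, 6, 10, 2, 23, 2, 2.9),
    ("three-level", 2, 10, 20, 4, 47, 4, 3.1),
    ("three-level", 3, 20, 50, 8, 79, 8, 2.3),
]


def rf_to_size_ratio(receptive_field: float, min_size: float, max_size: float) -> float:
    """Receptive field / midpoint of the object size range (assumed definition)."""
    return receptive_field / ((min_size + max_size) / 2)


if __name__ == "__main__":
    for pyramid, level, lo, hi, down, rf, stride, reported in TABLE2:
        ratio = rf_to_size_ratio(rf, lo, hi)
        print(f"{pyramid} level {level}: {ratio:.2f} (reported {reported}), "
              f"stride == downsampling: {stride == down}")
```

For example, the first row gives 55 / 15.5 ≈ 3.55, which rounds to the reported 3.5.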

Table 3. Detection results of different feature fusion schemes

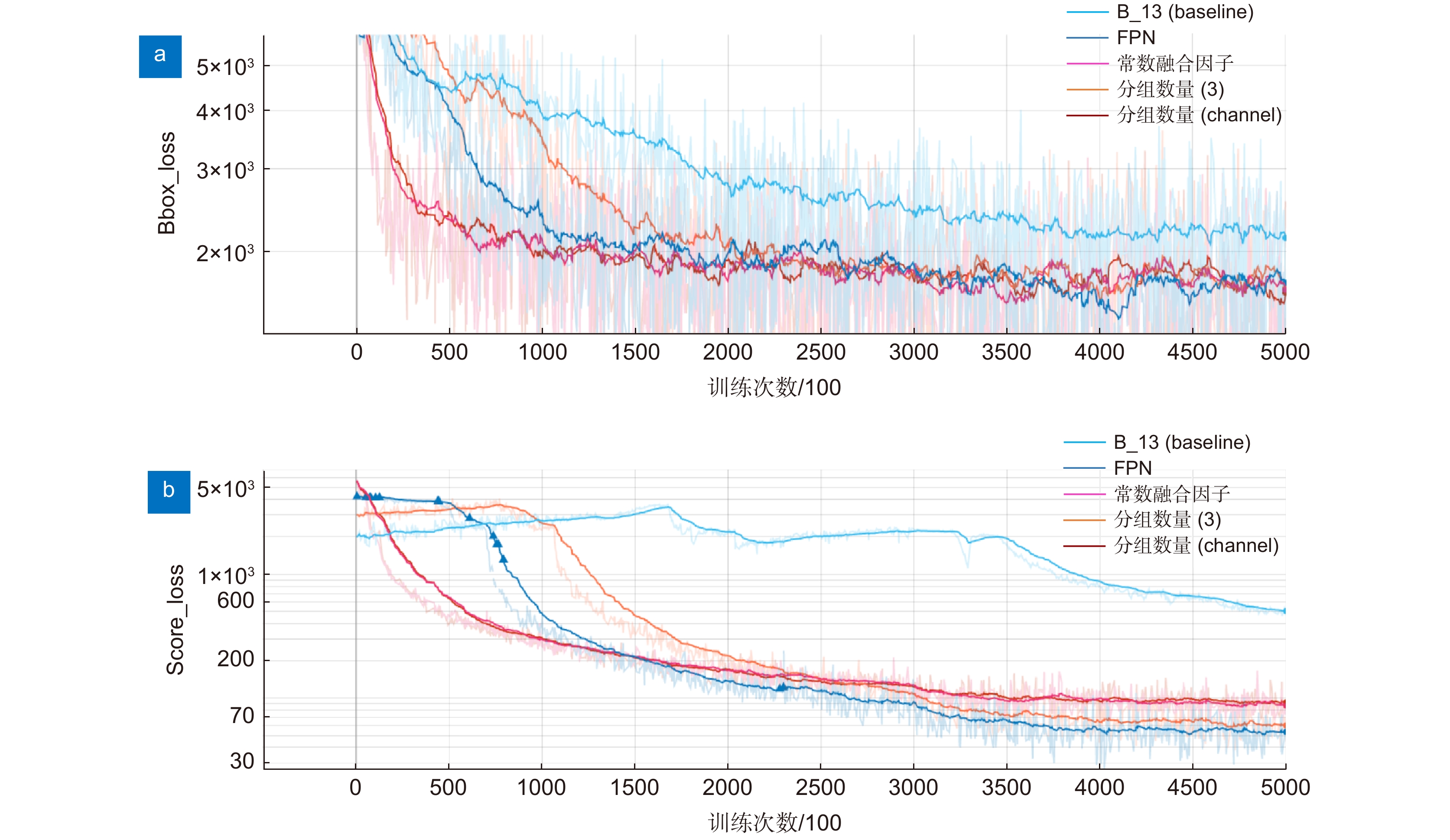

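| Pyramid | Basic unit | FPN | mAP (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|
| Two-level | B_11 | - | 86.8 | 76.8 | 88.9 |
|  | B_11 | √ | 87.2 | 82.6 | 88.8 |
| Three-level | B_10 | - | 87.4 | 47.4 | 91.8 |
|  | B_10 | √ | 88.5 | 83.8 | 90.4 |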
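The tables report mAP together with precision and recall, but this section does not state the evaluation protocol. As an assumption, the sketch below uses PASCAL VOC style all-point interpolated AP. Detections are ranked by confidence, and each one has already been marked as a true or false positive by IoU matching, which is not shown. Precision is then made non-increasing before it is integrated over recall. For a single-class dataset, mAP reduces to this AP. The precision and recall returned here are the values at the last ranked detection, which need not be the operating point used in the tables.

```python
from typing import List, Tuple


def precision_recall_ap(detections: List[Tuple[float, bool]],
                        num_gt: int) -> Tuple[float, float, float]:
    """Return (final precision, final recall, all-point interpolated AP).

    detections -- (confidence, is_true_positive) pairs after IoU matching (assumed given)
    num_gt     -- number of ground-truth objects of this class
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    if not ranked:
        return 0.0, 0.0, 0.0
    tp = fp = 0
    precisions: List[float] = []
    recalls: List[float] = []
    for _, is_tp in ranked:
        tp += int(is_tp)
        fp += int(not is_tp)
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    final_p, final_r = precisions[-1], recalls[-1]
    # Interpolate: precision at rank i becomes the max precision at any rank >= i.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Integrate the interpolated precision over the recall increments.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return final_p, final_r, ap
```

With the detections `[(0.9, True), (0.8, False), (0.7, True)]` and four ground-truth objects, the final precision is 2/3, the final recall is 0.5, and AP = 0.25 × 1 + 0.25 × 2/3 = 5/12.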

Table 4. DOTA plane dataset test results under different network configurations

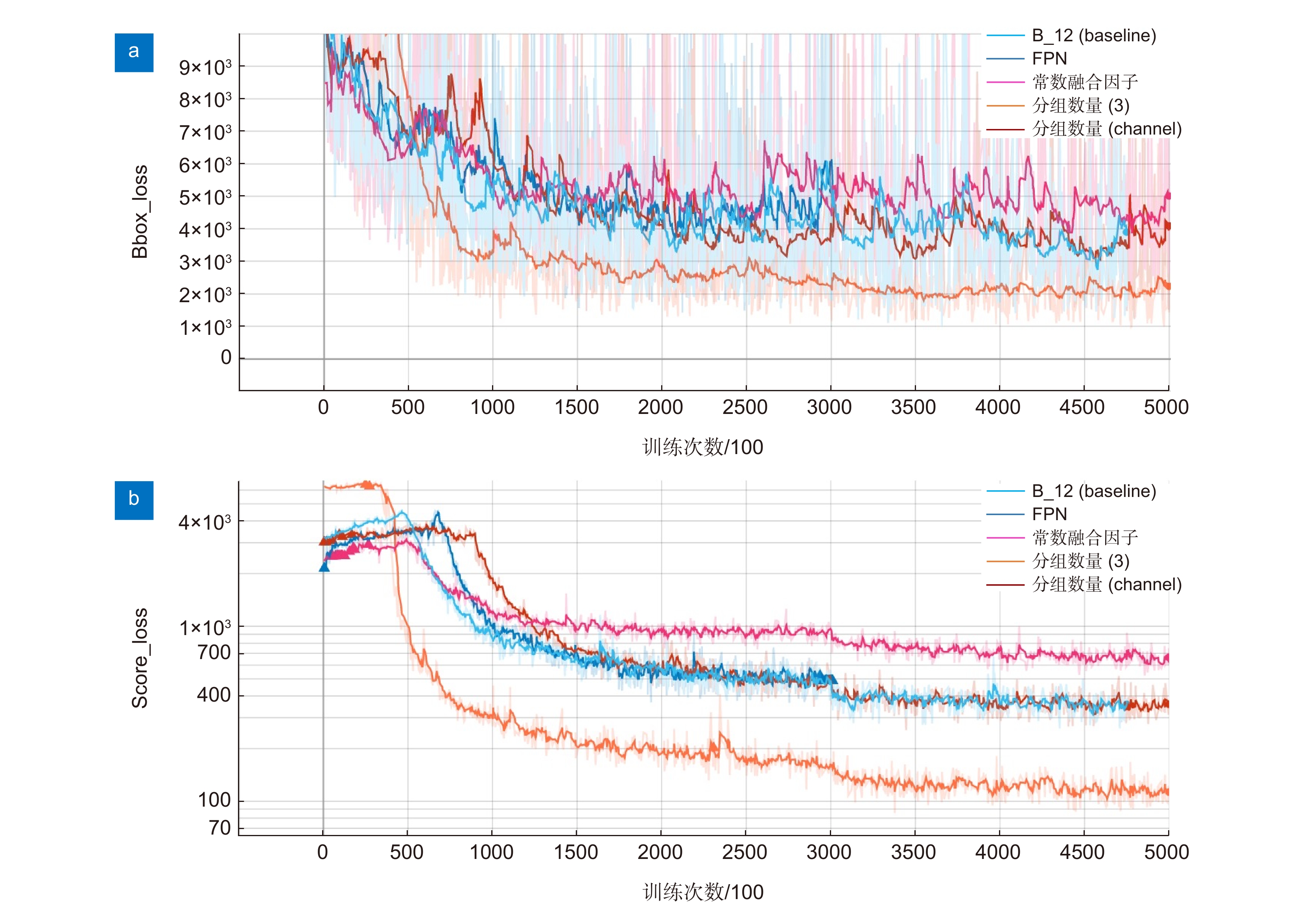

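| B_13 | FPN | Groups: 3 | Groups: per channel | Constant fusion factors [0.71, 0.87] | mAP (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|---|
| √ | - | - | - | - | 80.5 | 63.4 | 82.8 |
| √ | √ | - | - | - | 82.0 | 81.4 | 85.1 |
| √ | √ | √ | - | - | 82.3 | 85.1 | 84.5 |
| √ | √ | - | √ | - | 83.6 | 85.5 | 87.0 |
| √ | √ | - | - | √ | 82.5 | 82.3 | 85.6 |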

Table 5. DOTA small-vehicle dataset test results under different network configurations

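| B_12 | FPN | Groups: 3 | Groups: per channel | Constant fusion factors [0.63, 1.28] | mAP (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|---|
| √ | - | - | - | - | 63.7 | 56.8 | 73.9 |
| √ | √ | - | - | - | 65.9 | 86.1 | 68.5 |
| √ | √ | √ | - | - | 66.3 | 83.3 | 68.9 |
| √ | √ | - | √ | - | 68.7 | 86.4 | 71.7 |
| √ | √ | - | - | √ | 64.4 | 84.0 | 67.3 |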

Table 6. Test results on the self-built dataset under different network configurations

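| B_10 | FPN | Groups: 3 | Groups: per channel | Constant fusion factors [1.08, 1.05] | mAP (%) | Precision (%) | Recall (%) |
|---|---|---|---|---|---|---|---|
| √ | - | - | - | - | 89.9 | 44.2 | 93.7 |
| √ | √ | - | - | - | 90.2 | 83.6 | 91.4 |
| √ | √ | √ | - | - | 90.6 | 84.8 | 92.0 |
| √ | √ | - | √ | - | 91.0 | 87.7 | 92.4 |
| √ | √ | - | - | √ | Did not converge | Did not converge | Did not converge |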

Table 7. Number of objects at each scale in the training sets

| Training set | Scale | Size range (px) | Number of objects | Constant fusion factor ($S_{i+1}/S_i$) |
|---|---|---|---|---|
| DOTA plane | $S_1$ | 6-12 | 5503 | 0.87 ($S_2/S_1$) |
|  | $S_2$ | 12-30 | 4807 |  |
|  | $S_3$ | 30-70 | 3428 | 0.71 ($S_3/S_2$) |
| DOTA small-vehicle | $S_1$ | 6-15 | 59875 | 1.28 ($S_2/S_1$) |
|  | $S_2$ | 15-25 | 76615 |  |
|  | $S_3$ | 25-60 | 48203 | 0.63 ($S_3/S_2$) |
| Self-built | $S_1$ | 6-10 | 4963 | 1.05 ($S_2/S_1$) |
|  | $S_2$ | 10-20 | 5196 |  |
|  | $S_3$ | 20-50 | 5625 | 1.08 ($S_3/S_2$) |
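The constant fusion factor in Table 7 is the ratio of object counts at adjacent scales, $S_{i+1}/S_i$, in the spirit of the fusion factor of Gong et al. [20]. The snippet recomputes these factors from the counts in Table 7. Tables 4 to 6 list the same two values in the order [$S_3/S_2$, $S_2/S_1$].

```python
from typing import Dict, List, Tuple

# Object counts per scale (S1, S2, S3) in the training sets, from Table 7.
COUNTS: Dict[str, Tuple[int, int, int]] = {
    "DOTA plane": (5503, 4807, 3428),
    "DOTA small-vehicle": (59875, 76615, 48203),
    "self-built": (4963, 5196, 5625),
}


def fusion_factors(counts: Tuple[int, ...]) -> List[float]:
    """Constant fusion factors S_{i+1} / S_i for each pair of adjacent scales."""
    return [counts[i + 1] / counts[i] for i in range(len(counts) - 1)]


if __name__ == "__main__":
    for name, counts in COUNTS.items():
        s2_s1, s3_s2 = fusion_factors(counts)
        # Tables 4-6 list the factors as [S3/S2, S2/S1].
        print(f"{name}: [{s3_s2:.2f}, {s2_s1:.2f}]")
```

This prints [0.71, 0.87], [0.63, 1.28] and [1.08, 1.05], matching the bracketed values in the headers of Tables 4, 5 and 6.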

Table 8. Influence of initial value of fusion factor on detection performance

| Initial value of fusion factor | mAP (%) |
|---|---|
| 1 | 83.6 |
| Random initialization | 80.7 |

Table 9. Influence of CBAM and adaptive fusion module on detection performance

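| Model + dataset | mAP (%) | Precision (%) | Recall (%) | Inference time (s/image) |
|---|---|---|---|---|
| B_10+FPN+CBAM (self-built dataset) | 90.5 | 83.8 | 90.6 | 0.036 |
| B_10+FPN+adaptive fusion module (self-built dataset) | 91.0 | 87.7 | 92.4 | 0.027 |
| B_13+FPN+CBAM (DOTA plane dataset) | 83.0 | 82.6 | 85.8 | 0.048 |
| B_13+FPN+adaptive fusion module (DOTA plane dataset) | 83.6 | 85.5 | 87.0 | 0.037 |
| B_12+FPN+CBAM (DOTA small-vehicle dataset) | 67.6 | 83.0 | 71.1 | 0.043 |
| B_12+FPN+adaptive fusion module (DOTA small-vehicle dataset) | 68.7 | 83.3 | 71.7 | 0.034 |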
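Table 9 gives inference time in seconds per image. Converting these times to frames per second, and to the relative time saved, makes the speed comparison easier to read. The hardware is not stated in this section, so the figures below only compare the two modules with each other.

```python
# Inference time in seconds per image from Table 9: (B+FPN+CBAM, B+FPN+adaptive fusion)
TIMES = {
    "self-built": (0.036, 0.027),
    "DOTA plane": (0.048, 0.037),
    "DOTA small-vehicle": (0.043, 0.034),
}


def fps(seconds_per_image: float) -> float:
    """Frames (images) per second for a given per-image latency."""
    return 1.0 / seconds_per_image


def time_saved_percent(reference: float, new: float) -> float:
    """Relative reduction of per-image time, in percent."""
    return 100.0 * (reference - new) / reference


if __name__ == "__main__":
    for name, (cbam, adaptive) in TIMES.items():
        print(f"{name}: CBAM {fps(cbam):.1f} FPS, adaptive fusion {fps(adaptive):.1f} FPS, "
              f"{time_saved_percent(cbam, adaptive):.0f}% less time per image")
```

On the self-built dataset, for example, the adaptive fusion module runs at about 37.0 FPS versus 27.8 FPS with CBAM, which is 25% less time per image.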

Table 10. Comparison of detection performance of different methods

| Method | DOTA plane dataset (mAP, %) | DOTA small-vehicle dataset (mAP, %) | Self-built dataset (mAP, %) |
|---|---|---|---|
| SSD | 63.4 | 43.3 | 64.4 |
| RetinaNet | 55.2 | 45.1 | 62.7 |
| YOLOv3-tiny | 70.8 | 58.3 | 74.3 |
| Faster R-CNN | 73.0 | 59.0 | 88.6 |
| Ours | 83.6 | 68.7 | 91.0 |

Table 11. Performance of FPN module based on adaptive feature weighted fusion on Faster R-CNN

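| Backbone + dataset | mAP (%) |
|---|---|
| ResNet50+FPN (self-built dataset) | 88.6 |
| ResNet50+adaptive fusion module (self-built dataset) | 89.7 |
| ResNet50+FPN (DOTA plane dataset) | 73.0 |
| ResNet50+adaptive fusion module (DOTA plane dataset) | 73.8 |
| ResNet50+FPN (DOTA small-vehicle dataset) | 59.0 |
| ResNet50+adaptive fusion module (DOTA small-vehicle dataset) | 63.2 |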
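A quick way to read Tables 10 and 11 is as absolute mAP gains in percentage points. The Faster R-CNN row of Table 10 matches the ResNet50+FPN rows of Table 11 on all three datasets. This suggests that they are the same baseline, although that is our reading and is not stated explicitly. The snippet uses only the numbers in the two tables.

```python
# mAP (%) pairs taken from Tables 10 and 11.
FASTER_RCNN_VS_OURS = {  # Table 10: Faster R-CNN vs. the proposed method
    "DOTA plane": (73.0, 83.6),
    "DOTA small-vehicle": (59.0, 68.7),
    "self-built": (88.6, 91.0),
}
FPN_VS_ADAPTIVE = {  # Table 11: ResNet50 with plain FPN vs. adaptive fusion module
    "self-built": (88.6, 89.7),
    "DOTA plane": (73.0, 73.8),
    "DOTA small-vehicle": (59.0, 63.2),
}


def gain(baseline: float, improved: float) -> float:
    """Absolute mAP gain in percentage points, rounded to one decimal like the tables."""
    return round(improved - baseline, 1)


if __name__ == "__main__":
    for title, table in (("Ours vs. Faster R-CNN", FASTER_RCNN_VS_OURS),
                         ("Adaptive fusion vs. FPN", FPN_VS_ADAPTIVE)):
        for name, (base, new) in table.items():
            print(f"{title}, {name}: +{gain(base, new)}")
```

The gains over Faster R-CNN are 10.6, 9.7 and 2.4 points. Swapping FPN for the adaptive fusion module adds 1.1, 0.8 and 4.2 points on the self-built, DOTA plane and DOTA small-vehicle datasets, respectively.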

References

[1] Girshick R, Donahue J, Darrell T, et al. Rich feature hierarchies for accurate object detection and semantic segmentation[C]//Proceedings of 2014 IEEE Conference on Computer Vision and Pattern Recognition, 2014: 580–587.

[2] Girshick R. Fast R-CNN[C]//Proceedings of 2015 IEEE International Conference on Computer Vision, 2015: 1440–1448.

[3] Ren S Q, He K M, Girshick R, et al. Faster R-CNN: towards real-time object detection with region proposal networks[C]//Proceedings of Advances in Neural Information Processing Systems 28: Annual Conference on Neural Information Processing Systems 2015, 2015, 28: 91–99.

[4] Liu W, Anguelov D, Erhan D, et al. SSD: single shot MultiBox detector[C]//Proceedings of the 14th European Conference on Computer Vision, 2016: 21–37.

[5] Redmon J, Farhadi A. YOLOv3: an incremental improvement[Z]. arXiv: 1804.02767, 2018. https://doi.org/10.48550/arXiv.1804.02767

[6] Lin T Y, Goyal P, Girshick R, et al. Focal loss for dense object detection[C]//Proceedings of 2017 IEEE International Conference on Computer Vision, 2017: 2999–3007.

[7] Lin T Y, Dollár P, Girshick R, et al. Feature pyramid networks for object detection[C]//Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition, 2017: 936–944.

[8] Fu C Y, Liu W, Ranga A, et al. DSSD: deconvolutional single shot detector[Z]. arXiv: 1701.06659, 2017. https://arxiv.org/abs/1701.06659

[9] Li Z X, Zhou F Q. FSSD: feature fusion single shot multibox detector[Z]. arXiv: 1712.00960, 2017. https://doi.org/10.48550/arXiv.1712.00960

[10] Cui L S, Ma R, Lv P, et al. MDSSD: multi-scale deconvolutional single shot detector for small objects[Z]. arXiv: 1805.07009, 2018. https://doi.org/10.48550/arXiv.1805.07009

[11] Liang Z W, Shao J, Zhang D Y, et al. Small object detection using deep feature pyramid networks[C]//Proceedings of the 19th Pacific Rim Conference on Multimedia, 2018: 554–564.

[12] Cao G M, Xie X M, Yang W Z, et al. Feature-fused SSD: fast detection for small objects[J]. Proc SPIE, 2018, 10615: 106151E.

[13] Zhang S F, Wen L Y, Bian X, et al. Single-shot refinement neural network for object detection[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018: 4203–4212.

[14] Zhao Q J, Sheng T, Wang Y T, et al. M2Det: a single-shot object detector based on multi-level feature pyramid network[J]. Proc AAAI Conf Artif Intell, 2019, 33(1): 9259–9266.

[15] Xu A L, Du D, Wang H H, et al. Optical ship target detection method combining hierarchical search and visual residual network[J]. Opto-Electron Eng, 2021, 48(4): 200249.

[16] Zhao C M, Chen Z B, Zhang J L. Research on target tracking based on convolutional networks[J]. Opto-Electron Eng, 2020, 47(1): 180668.

[17] Jin Y, Zhang R, Yin D. Object detection for small pixel in urban roads videos[J]. Opto-Electron Eng, 2019, 46(9): 190053.

[18] Pang J M, Li C, Shi J P, et al. R²-CNN: fast tiny object detection in large-scale remote sensing images[J]. IEEE Trans Geosci Remote Sens, 2019, 57(8): 5512–5524. doi: 10.1109/TGRS.2019.2899955

[19] Zhang G J, Lu S J, Zhang W. CAD-Net: a context-aware detection network for objects in remote sensing imagery[J]. IEEE Trans Geosci Remote Sens, 2019, 57(12): 10015–10024. doi: 10.1109/TGRS.2019.2930982

[20] Gong Y Q, Yu X H, Ding Y, et al. Effective fusion factor in FPN for tiny object detection[C]//Proceedings of 2021 IEEE Winter Conference on Applications of Computer Vision, 2021: 1159–1167.

[21] Xia G S, Bai X, Ding J, et al. DOTA: a large-scale dataset for object detection in aerial images[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018: 3974–3983.

[22] Ding J, Xue N, Xia G S, et al. Object detection in aerial images: a large-scale benchmark and challenges[Z]. arXiv: 2102.12219, 2021. https://doi.org/10.48550/arXiv.2102.12219

[23] Han J W, Zhang D W, Cheng G, et al. Object detection in optical remote sensing images based on weakly supervised learning and high-level feature learning[J]. IEEE Trans Geosci Remote Sens, 2015, 53(6): 3325–3337. doi: 10.1109/TGRS.2014.2374218

[24] Long Y, Gong Y P, Xiao Z F, et al. Accurate object localization in remote sensing images based on convolutional neural networks[J]. IEEE Trans Geosci Remote Sens, 2017, 55(5): 2486–2498. doi: 10.1109/TGRS.2016.2645610

[25] Hu F, Xia G S, Hu J W, et al. Transferring deep convolutional neural networks for the scene classification of high-resolution remote sensing imagery[J]. Remote Sens, 2015, 7(11): 14680–14707. doi: 10.3390/rs71114680

[26] Ševo I, Avramović A. Convolutional neural network based automatic object detection on aerial images[J]. IEEE Geosci Remote Sens Lett, 2016, 13(5): 740–744. doi: 10.1109/LGRS.2016.2542358

[27] Cheng G, Zhou P C, Han J W. Learning rotation-invariant convolutional neural networks for object detection in VHR optical remote sensing images[J]. IEEE Trans Geosci Remote Sens, 2016, 54(12): 7405–7415. doi: 10.1109/TGRS.2016.2601622

[28] Zhao C M, Chen Z B, Zhang J L. Application of aircraft target tracking based on deep learning[J]. Opto-Electron Eng, 2019, 46(9): 180261.

[29] Deng J, Dong W, Socher R, et al. ImageNet: a large-scale hierarchical image database[C]//Proceedings of 2009 IEEE Conference on Computer Vision and Pattern Recognition, 2009: 248–255.

[30] Xu Y C, Fu M T, Wang Q M, et al. Gliding vertex on the horizontal bounding box for multi-oriented object detection[J]. IEEE Trans Pattern Anal Mach Intell, 2021, 43(4): 1452–1459. doi: 10.1109/TPAMI.2020.2974745

[31] Yang X, Yang J R, Yan J C, et al. SCRDet: towards more robust detection for small, cluttered and rotated objects[C]//Proceedings of 2019 IEEE/CVF International Conference on Computer Vision, 2019: 8231–8240.

[32] Azimi S M, Vig E, Bahmanyar R, et al. Towards multi-class object detection in unconstrained remote sensing imagery[C]//Proceedings of the 14th Asian Conference on Computer Vision, 2018: 150–165.

[33] He Y H, Xu D Z, Wu L F, et al. LFFD: a light and fast face detector for edge devices[Z]. arXiv: 1904.10633, 2019. https://doi.org/10.48550/arXiv.1904.10633

[34] Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition[Z]. arXiv: 1409.1556, 2014. https://doi.org/10.48550/arXiv.1409.1556

[35] He K M, Zhang X Y, Ren S Q, et al. Deep residual learning for image recognition[C]//Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, 2016: 770–778.

[36] Zhu C C, He Y H, Savvides M. Feature selective anchor-free module for single-shot object detection[C]//Proceedings of 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019: 840–849.

[37] Woo S, Park J, Lee J Y, et al. CBAM: convolutional block attention module[C]//Proceedings of the 15th European Conference on Computer Vision, 2018: 3–19.
