
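Abstract (translated from the Chinese)

Instance segmentation algorithms commonly suffer from target occlusion during contour convergence, which increases contour processing time and lowers the accuracy of the detection box. This paper proposes a real-time instance segmentation algorithm that adds fragment matching, a target aggregation loss function, and a boundary coefficient module to contour processing. First, the initial contour is divided into fragments, and local ground-truth points are assigned within each fragment, giving a more natural, faster, and smoother deformation path. Second, the target aggregation loss function and the boundary coefficient module are used to predict occluded objects and produce accurate detection boxes. Finally, circular convolution and the Snake model converge the matched contour, and the vertices are computed iteratively to obtain the segmentation result. The algorithm is evaluated on several datasets, including COCO, Cityscapes, and Kins; on COCO it achieves 32.6% mAP at 36.3 f/s, giving the best balance between accuracy and speed.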

Abstract

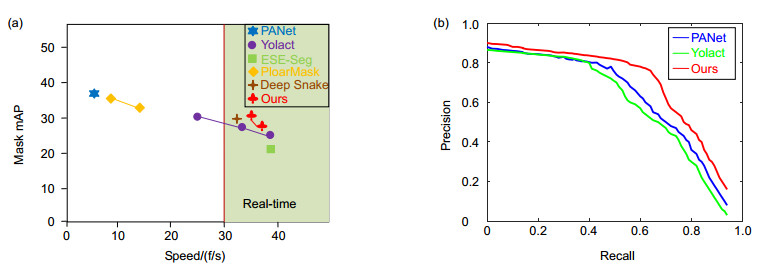

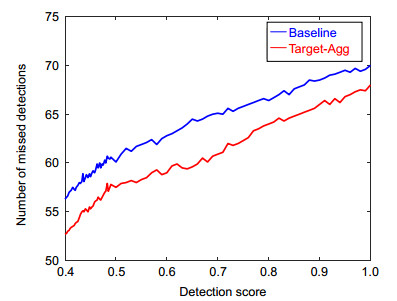

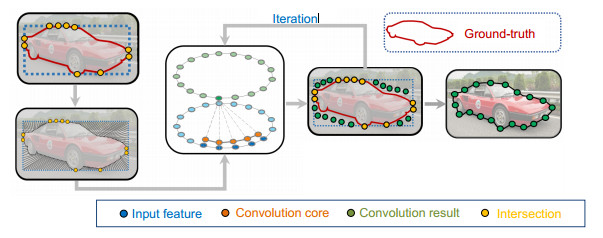

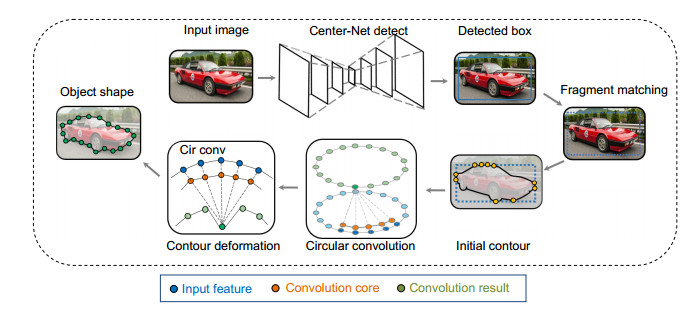

During contour convergence in instance segmentation, target occlusion commonly increases contour processing time and reduces the accuracy of the detection box. This paper proposes a real-time instance segmentation algorithm that adds fragment matching, a target aggregation loss function, and a boundary coefficient module to contour processing. First, fragment matching is performed on the initial contour, which is formed by evenly spaced points, and local ground-truth points are assigned within each fragment to achieve a more natural, faster, and smoother deformation path. Second, the target aggregation loss function and the boundary coefficient module are used to predict occluded objects and give an accurate detection box. Finally, circular convolution and the Snake model are used to converge the matched contours, and the vertices are computed iteratively to obtain the segmentation result. The proposed method is evaluated on multiple datasets, including Cityscapes, Kins, and COCO; on COCO it obtains 30.7% mAP at 33.1 f/s, a trade-off between accuracy and speed.


Key words: instance segmentation / object detection / snake model / object occlusion / initial contour


Overview

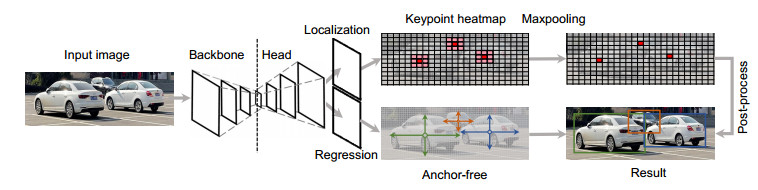

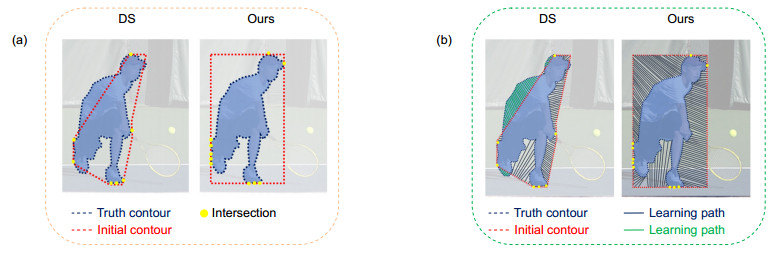

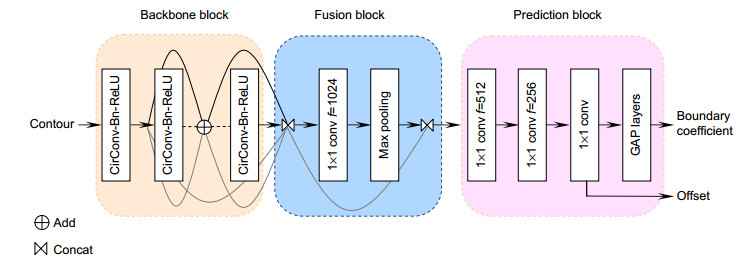

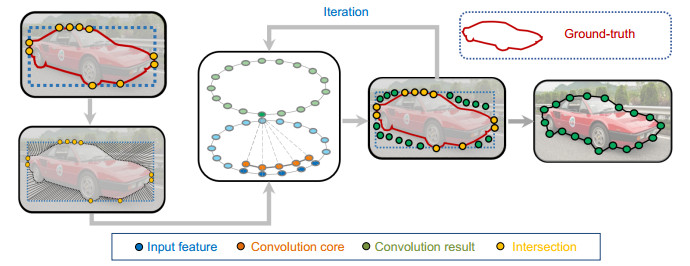

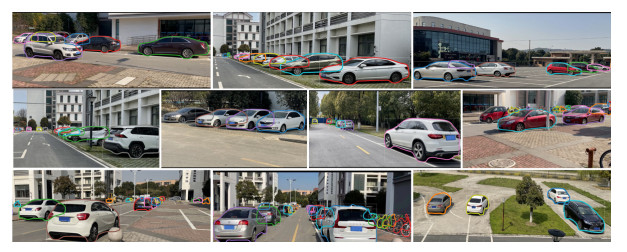

Instance segmentation helps a system understand scene information and can effectively improve the perception system of an autonomous vehicle. However, problems such as object occlusion and object blur during detection greatly reduce segmentation accuracy. Deep neural networks are a common way to handle occlusion and blur. Where computing resources and real-time operation matter, contour-based algorithms are an alternative. The Active Contour Model (ACM), also called the Snake model, is a classic contour algorithm. It uses fewer parameters than dense pixel-based methods, which speeds up segmentation. This paper proposes a segmentation algorithm based on ACM combined with circular convolution. The algorithm uses CenterNet as the target detector, updates the vertices iteratively using circular convolution and vertex offset calculation, and finally fits the real shape of the object.

The algorithm makes three main contributions. First, to address object occlusion and blur, a loss function (the target aggregation loss) is introduced, which improves the localization accuracy of the detection box through attraction and repulsion terms between the target and surrounding objects. Second, initial contour processing is an important step in contour-based algorithms because it affects the accuracy and speed of the subsequent instance segmentation. This paper proposes fragment matching as a method of processing the initial contour. The initial contour is formed by evenly spaced points. The detection box is adaptively divided into multiple segments that correspond to the initial contour, and each segment is matched point by point and assigned vertices. These vertices are the key to the subsequent deformation. Third, in dense scenes the information of adjacent objects in the same detection box is easily lost. This paper proposes a boundary coefficient module that corrects misjudged boundary information by dividing the area and aligning the features, ensuring accurate boundary segmentation.

The algorithm is compared with several advanced algorithms on multiple datasets. On the Cityscapes dataset, it obtains an APval of 37.7% and an AP of 31.8%, an improvement of 1.2% APval over PANet. On the SBD dataset, it obtains 62.1% AP50 and 48.5% AP70, indicating that the AP changes little as the IoU threshold changes, which points to its stability. Compared with other real-time algorithms on the COCO dataset, it achieves a trade-off between accuracy and speed, reaching 33.1 f/s with 30.7% mAP on COCO test-dev. These results indicate that the algorithm reaches a good level of both accuracy and speed.
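The contour pipeline described above (an initial contour of evenly spaced points, refined by circular convolution and per-vertex offsets) can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `box_contour`, `circular_conv`, and `update_vertices` are hypothetical, the kernel is a fixed averaging filter rather than learned weights, and the offsets are supplied by hand where a trained network would predict them.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def box_contour(x0: float, y0: float, x1: float, y1: float, n: int) -> List[Point]:
    """Sample n points at equal arc-length spacing along a box perimeter.

    Starts at (x0, y0) and walks along the x direction first.
    """
    w, h = x1 - x0, y1 - y0
    perimeter = 2.0 * (w + h)
    points = []
    for i in range(n):
        d = perimeter * i / n  # distance travelled from the starting corner
        if d < w:
            points.append((x0 + d, y0))
        elif d < w + h:
            points.append((x1, y0 + (d - w)))
        elif d < 2 * w + h:
            points.append((x1 - (d - w - h), y1))
        else:
            points.append((x0, y1 - (d - 2 * w - h)))
    return points


def circular_conv(features: List[float], kernel: List[float]) -> List[float]:
    """1-D circular convolution over a closed contour.

    Indices wrap around with modulo, so the first and last vertices are
    neighbours. As in most deep-learning code, the kernel is not flipped
    (this is cross-correlation); for symmetric kernels the result is the same.
    """
    n, k = len(features), len(kernel)
    half = k // 2
    out = []
    for i in range(n):
        acc = 0.0
        for j in range(k):
            acc += kernel[j] * features[(i + j - half) % n]
        out.append(acc)
    return out


def update_vertices(vertices: List[Point], offsets: List[Point]) -> List[Point]:
    """One deformation step: move every vertex by its offset."""
    return [(x + dx, y + dy) for (x, y), (dx, dy) in zip(vertices, offsets)]


# Example: 12 evenly spaced points on a 4 x 2 box, then a wrap-around average
contour = box_contour(0, 0, 4, 2, 12)
xs = [p[0] for p in contour]
smoothed_xs = circular_conv(xs, [1 / 3, 1 / 3, 1 / 3])
```

The modulo indexing is the design point: a contour is a closed loop, so every vertex, including the first and last, sees the same number of neighbours, which an ordinary zero-padded convolution would not provide.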


Table 1. Comparison with state-of-the-art methods on the Cityscapes validation and test sets

| Method | Speed/(f/s) | APval/% | AP/% | AP50/% | Person | Car | Rider | Truck | Bicycle |
|---|---|---|---|---|---|---|---|---|---|
| Mask RCNN[6] | 2.2 | 31.5 | 26.2 | 49.9 | 30.5 | 46.9 | 23.7 | 22.8 | 16.0 |
| SECB[11] | 11.0 | - | 27.6 | 50.9 | 34.5 | 52.4 | 26.1 | 21.7 | 18.9 |
| PANet[12] | < 1.0 | 36.5 | 31.8 | 57.1 | 36.8 | 54.8 | 30.4 | 27.0 | 20.8 |
| Deep Snake[8] | 4.6 | 37.4 | 31.7 | 58.4 | 37.2 | 56.0 | 27.0 | 29.5 | 16.4 |
| Ours | 5.9 | 39.1 | 33.8 | 60.6 | 38.5 | 56.8 | 27.7 | 30.7 | 17.3 |

Table 2. AP comparison with other instance segmentation methods on Kins

Table 3. Comparison of processing time on COCO with other real-time methods

Table 4. Real-time comparison of instance segmentation with state-of-the-art methods on SBD

Table 5. Evaluation results of ablation experiments in SBD

| Initial contour | Matching | Loss | AP/% | AP50/% | AP75/% | APS/% | APM/% | APL/% |
|---|---|---|---|---|---|---|---|---|
| BBox | Fragment | SmoothL1 | 30.3 | 50.5 | 32.4 | 16.4 | 34.0 | 46.2 |
| BBox | Uniformity | Target-Agg | 30.7 | 50.8 | 32.0 | 16.9 | 34.5 | 46.3 |
| BBox | Fragment | Target-Agg | 32.6 | 53.5 | 34.7 | 18.9 | 36.1 | 48.7 |

Table 6. Ablation experiments performed in the COCO

| Models | APvol/% | AP50/% | AP70/% |
|---|---|---|---|
| Baseline | 53.9 | 61.5 | 48.1 |
| +Initial contour | 55.8 | 62.4 | 49.0 |
| +Boundary coefficient | 56.5 | 63.4 | 50.3 |

References

[1] Fazeli N, Oller M, Wu J, et al. See, feel, act: Hierarchical learning for complex manipulation skills with multisensory fusion[J]. Sci Robot, 2019, 4(26): eaav3123. doi: 10.1126/scirobotics.aav3123

[2] Zhang Y, Yang J, Yang Y F. The research on fog's positioning performance of vehicles based on visible light[J]. Opto-Electron Eng, 2020, 47(4): 85–90. doi: 10.12086/oee.2020.190661 (in Chinese)

[3] Meng F J, Yin D. Vehicle identification number recognition based on neural network[J]. Opto-Electron Eng, 2021, 48(1): 51–60. doi: 10.12086/oee.2021.200094 (in Chinese)

[4] Ma W C, Wang S L, Hu R, et al. Deep rigid instance scene flow[C]//Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 2019: 3609–3617.

[5] Cao J L, Cholakkal H, Anwer R M, et al. D2det: Towards high quality object detection and instance segmentation[C]//Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 2020: 11482–11491.

[6] He K M, Gkioxari G, Dollár P, et al. Mask R-CNN[C]//Proceedings of the 2017 IEEE International Conference on Computer Vision, Venice, Italy, 2017: 2980–2988.

[7] Kass M, Witkin A, Terzopoulos D. Snakes: Active contour models[J]. Int J Computer Vis, 1988, 1(4): 321–331. doi: 10.1007/BF00133570

[8] Peng S D, Jiang W, Pi H J, et al. Deep Snake for real-time instance segmentation[C]//Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 2020: 8530–8539.

[9] Zhou X Y, Wang D Q, Krähenbühl P. Objects as points[Z]. arXiv preprint arXiv:1904.07850, 2019.

[10] Wang X L, Xiao T T, Jiang Y N, et al. Repulsion loss: detecting pedestrians in a crowd[C]//Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 2018: 7774–7783.

[11] Neven D, Brabandere B D, Proesmans M, et al. Instance segmentation by jointly optimizing spatial embeddings and clustering bandwidth[C]//Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 2019: 8829–8837.

[12] Liu S, Qi L, Qin H F, et al. Path aggregation network for instance segmentation[C]//Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 2018: 8759–8768.

[13] Follmann P, König R, Härtinger P, et al. Learning to see the invisible: End-to-end trainable amodal instance segmentation[C]//2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2019: 1328–1336.

[14] Bolya D, Zhou C, Xiao F Y, et al. YOLACT: Real-time instance segmentation[C]//Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea (South), 2019: 9156–9165.

[15] Xu W Q, Wang H Y, Qi F B, et al. Explicit shape encoding for real-time instance segmentation[C]//Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision, Seoul, Korea (South), 2019: 5167–5176.

[16] Jetley S, Sapienza M, Golodetz S, et al. Straight to shapes: real-time detection of encoded shapes[C]//Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 2017: 4207–4216.
