A comparative study of time of flight extraction methods in non-line-of-sight location



Abstract

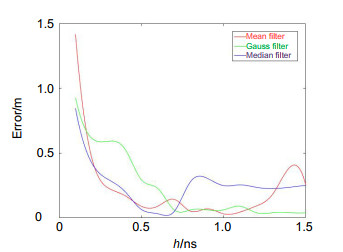

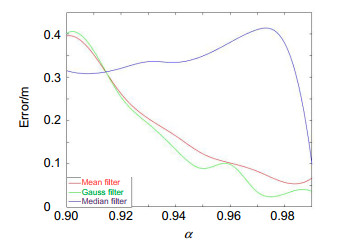

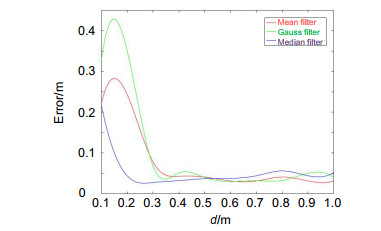

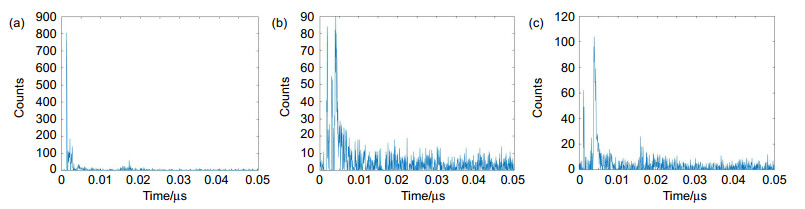

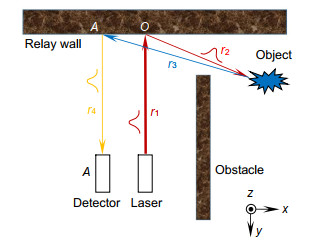

Non-line-of-sight (NLOS) location is an active detection technique that determines the position of objects outside the line of sight by extracting the photon time of flight, and it has become a research hotspot in recent years. To study how mean, median, and Gaussian filtering differ in extracting the time of flight, we first optimize the energy-change model within the photon flight model using photometry, and then optimize and analyze the parameters of the three filtering methods. Next, we analyze how well the three extraction methods suit the maximum-value judgment method and the probability-threshold weighted judgment method. Finally, we compare the positioning accuracy and stability obtained with the three time-extraction algorithms, taking the positions of the devices and of the hidden object as variables. The simulation results show that the median filter suits narrow environments and gives high positioning accuracy, whereas the Gaussian filter gives better positioning stability and tolerates a wider range of filter parameters.
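The filtering step can be illustrated on a photon-count histogram. The sketch below is not the authors' implementation: it applies textbook mean, median, and Gaussian filters (pure Python, with edge windows truncated or renormalized) to a synthetic histogram, then applies maximum-value judgment, read here as taking the time of the highest filtered bin. The window widths, sigma, the 100 ps bin width, the histogram, and the function names are all assumptions for illustration, not the parameters optimized in the paper.

```python
import math
import statistics


def mean_filter(counts, width):
    """Moving-average (mean) filter with an odd window; edge windows are truncated."""
    half = width // 2
    out = []
    for i in range(len(counts)):
        window = counts[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out


def median_filter(counts, width):
    """Moving-median filter with an odd window; edge windows are truncated."""
    half = width // 2
    return [statistics.median(counts[max(0, i - half): i + half + 1])
            for i in range(len(counts))]


def gaussian_filter(counts, sigma, truncate=3.0):
    """Convolve with a sampled Gaussian kernel, renormalizing the weights at the edges."""
    half = max(1, int(truncate * sigma + 0.5))
    offsets = range(-half, half + 1)
    kernel = [math.exp(-0.5 * (k / sigma) ** 2) for k in offsets]
    out = []
    for i in range(len(counts)):
        acc = wsum = 0.0
        for k, w in zip(offsets, kernel):
            j = i + k
            if 0 <= j < len(counts):
                acc += w * counts[j]
                wsum += w
        out.append(acc / wsum)
    return out


def tof_max(values, bin_width_ps):
    """Maximum-value judgment (as assumed here): time of the first bin holding the largest value."""
    peak = max(range(len(values)), key=values.__getitem__)
    return peak * bin_width_ps


if __name__ == "__main__":
    # Hypothetical histogram (100 ps bins): a signal return peaking at bin 25
    # plus a single-bin noise spike at bin 8 that is taller than the return.
    hist = [0] * 40
    for b, c in zip(range(22, 29), [1, 3, 7, 12, 10, 5, 2]):
        hist[b] = c
    hist[8] = 15
    print("raw     ", tof_max(hist, 100))                         # 800
    print("mean    ", tof_max(mean_filter(hist, 5), 100))         # 2500
    print("median  ", tof_max(median_filter(hist, 3), 100))       # 2500
    print("gaussian", tof_max(gaussian_filter(hist, 1.5), 100))   # 2500
```

Under these assumed settings the unfiltered maximum is captured by the noise spike, while all three filtered histograms peak at the signal return. The median filter removes an isolated spike entirely; the mean and Gaussian filters only spread it out, so their robustness depends on how tall the spike is relative to the smoothed return.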


Key words:

- non-line-of-sight location

- time of flight

- filter algorithm

- adaptability analysis


Overview

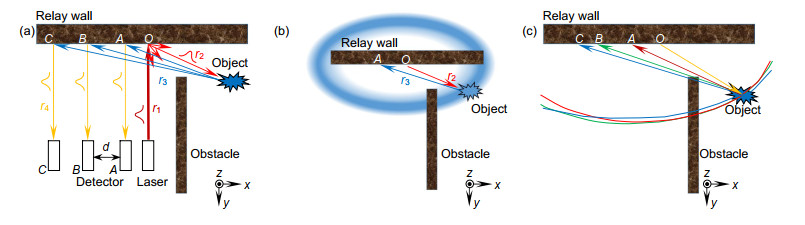

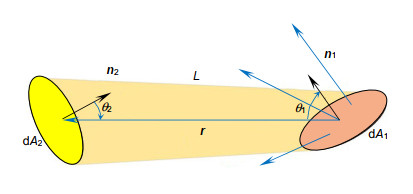

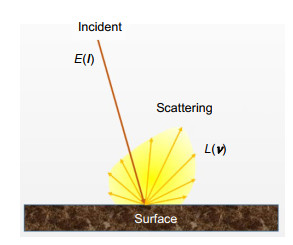

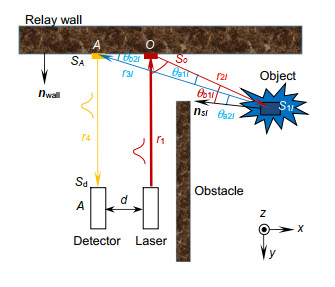

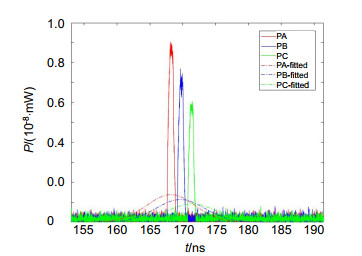

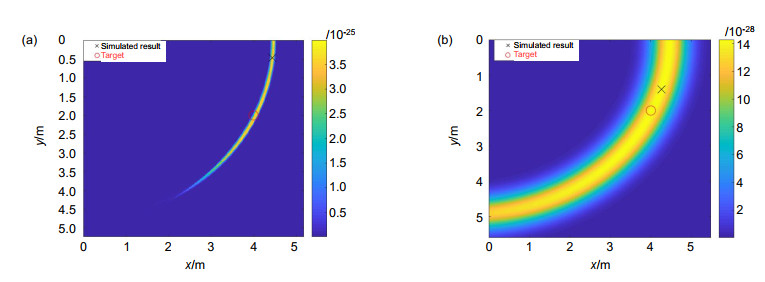

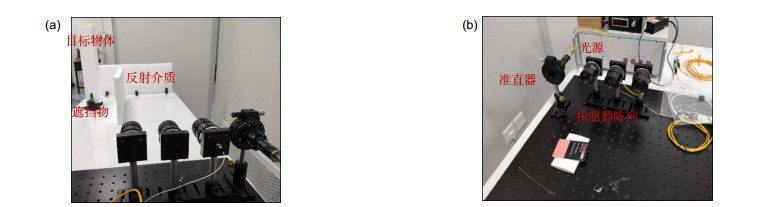

Overview: Detecting information outside the line of sight has long been a difficult problem, yet it is valuable in complex scenarios such as autonomous driving and rescue. Obtaining information about the hidden area in advance gains decision time and could reduce casualties. Advances in photoelectric technology have produced ultrafast lasers and detectors with high sensitivity and fine time resolution, such as streak cameras, single-photon avalanche diodes, and superconducting nanowire single-photon detectors. Single-photon detectors make it possible to measure the arrival times of laser pulses at the single-photon level. Laser pulses can illuminate a non-line-of-sight scene by reflecting off a relay surface, and light scattered by the hidden scene then returns to the relay surface. A single-photon detector can measure both the time of flight that the pulses spend in the hidden area and the light intensity distribution on the relay surface, from which the hidden scene can be reconstructed. Back-projection, the light-cone transform, Fermat flow, and phasor-field virtual wave optics have been proposed for this reconstruction. However, these methods must scan the relay wall to obtain the light intensity distribution, which is time-consuming. In most applications, non-line-of-sight information must be acquired quickly, and the motion state of a moving object is more useful than its detailed shape. In previous studies, the optical signal scattered by a hidden target was fitted with a Gaussian distribution to extract the time of flight, from which the target position was calculated. In this paper, we replace Gaussian fitting with filtering to overcome the instability of fitting and improve the automation of the locating algorithm. Mean, median, and Gaussian filters are investigated to improve locating performance.
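The overview states that the target position is calculated from the time of flight but does not spell out the geometry. A common NLOS tracking formulation, assumed here: once the known legs (laser to relay wall, relay wall to detector) are subtracted, a time of flight t fixes the hidden path length c·t = |L − P| + |P − D| from the laser spot L to the target P and back to the detection point D, so P lies on an ellipse with foci L and D. Two detection points give two ellipses whose intersection locates the target. The sketch below is a 2D top-view grid search under that assumption; the wall geometry, the detection points 0.5 m from the laser spot, the target position, and all function names are hypothetical.

```python
import math

C_M_PER_NS = 0.299792458  # speed of light in metres per nanosecond


def path_length(p, laser_spot, detect_point):
    """Hidden path length: laser spot on the wall -> target p -> detection point on the wall."""
    return math.dist(laser_spot, p) + math.dist(p, detect_point)


def locate(laser_spot, detect_points, tofs_ns, x_min, x_max, y_min, y_max, step):
    """Grid search for the point whose hidden path lengths best match the measured TOFs.

    Each TOF places the target on an ellipse with foci at the laser spot and one
    detection point; the least-squares grid point approximates the ellipses' intersection.
    """
    measured = [C_M_PER_NS * t for t in tofs_ns]
    nx = int(round((x_max - x_min) / step)) + 1
    ny = int(round((y_max - y_min) / step)) + 1
    best, best_cost = None, math.inf
    for i in range(nx):
        x = x_min + i * step
        for j in range(ny):
            y = y_min + j * step
            cost = sum((path_length((x, y), laser_spot, d) - m) ** 2
                       for d, m in zip(detect_points, measured))
            if cost < best_cost:
                best, best_cost = (x, y), cost
    return best


if __name__ == "__main__":
    # Hypothetical 2D top view: relay wall along y = 0, hidden region at y > 0.
    laser = (0.0, 0.0)
    detectors = [(-0.5, 0.0), (0.5, 0.0)]  # 0.5 m from the laser spot
    truth = (1.2, 2.0)
    tofs = [path_length(truth, laser, d) / C_M_PER_NS for d in detectors]
    # Searching only y > 0 removes the mirror solution behind the wall.
    print(locate(laser, detectors, tofs, -2.5, 2.5, 0.02, 5.0, 0.02))  # approx. (1.2, 2.0)
```

With noise-free times the grid point at the true position has essentially zero cost. With measured, noisy times of flight the cost no longer reaches zero, and the accuracy of the extracted time of flight directly limits the locating accuracy, which is why the extraction method matters.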
To compare the characteristics of the three filters, non-line-of-sight location is simulated in numerical simulation software based on a photon flight model optimized with photometry. In narrow environments, the median filter outperforms the other two methods and gives more accurate locating results. For the mean and Gaussian filters, a distance of 0.5 m between the laser source and the detectors is suitable for locating the target reliably. For the Gaussian filter, the target position can be judged more accurately by probability weighting with an optimized threshold. The applicability of the fitting method and the filtering methods is analyzed by comparing the locating errors at 25 positions within a 5 m × 5 m area. The locations obtained with the Gaussian filter are more stable than those of the other two filters, while the median filter gives the smallest average positioning error. The locating results of the fitting method are neither as accurate nor as stable as those of the filtering methods.
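The probability-threshold weighted judgment method is named but not defined in this section. One plausible reading, assumed here and not taken from the paper: normalize the filtered histogram to a probability distribution, keep only bins whose probability reaches a chosen fraction (the threshold) of the peak, and take their probability-weighted mean time as the time of flight. The function name, the threshold values, and the histogram below are hypothetical.

```python
def tof_threshold_weighted(values, bin_width_ps, threshold=0.5):
    """Hypothetical probability-threshold weighted judgment.

    The filtered histogram is normalized to a probability distribution, bins whose
    probability is at least `threshold` times the peak probability are kept, and the
    time of flight is their probability-weighted mean time.
    """
    if not 0.0 < threshold <= 1.0:
        raise ValueError("threshold must be in (0, 1]")
    total = sum(values)
    if total <= 0:
        raise ValueError("histogram contains no counts")
    prob = [v / total for v in values]
    cutoff = threshold * max(prob)
    kept = [i for i, p in enumerate(prob) if p >= cutoff]
    weight = sum(prob[i] for i in kept)
    return sum(i * bin_width_ps * prob[i] for i in kept) / weight


if __name__ == "__main__":
    # Hypothetical already-filtered histogram with an asymmetric peak (100 ps bins).
    filtered = [0, 0, 1, 2, 6, 10, 8, 3, 1, 0]
    for t in (1.0, 0.7, 0.5, 0.25):
        # estimates: 500.0, 544.4, 508.3, 529.6 ps
        print(t, round(tof_threshold_weighted(filtered, 100, t), 1))
```

A threshold of 1.0 reduces to maximum-value judgment (500 ps). Lower thresholds pull more of the peak's shoulders into the average, so the estimate shifts with the threshold; this sensitivity is why the threshold has to be tuned rather than fixed arbitrarily.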



Table 1. Comparison of location results obtained by several time-of-flight extraction methods

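| Method | Position 1 (m) | Position 2 (m) | Position 3 (m) |
|---|---|---|---|
| True position | (0.47, 0.31) | (0.52, 0.21) | (0.46, 0.41) |
| Gaussian fitting | (0.44, 0.40) | (0.10, 0.03) | (0.12, 0.03) |
| Mean filter | (0.47, 0.29) | (0.48, 0.23) | (0.40, 0.43) |
| Median filter | (0.43, 0.33) | (0.55, 0.05) | (0.42, 0.41) |
| Gaussian filter | (0.48, 0.29) | (0.52, 0.19) | (0.44, 0.41) |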
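The errors implied by Table 1 can be checked directly. The sketch below computes the Euclidean distance between each method's estimate and the true position for the three positions listed in the table; the coordinates are transcribed from Table 1, and the variable and function names are illustrative.

```python
import math
import statistics

# Coordinates (m) transcribed from Table 1.
TRUE = [(0.47, 0.31), (0.52, 0.21), (0.46, 0.41)]
ESTIMATES = {
    "Gaussian fitting": [(0.44, 0.40), (0.10, 0.03), (0.12, 0.03)],
    "Mean filter": [(0.47, 0.29), (0.48, 0.23), (0.40, 0.43)],
    "Median filter": [(0.43, 0.33), (0.55, 0.05), (0.42, 0.41)],
    "Gaussian filter": [(0.48, 0.29), (0.52, 0.19), (0.44, 0.41)],
}


def position_errors(estimates, truth=TRUE):
    """Euclidean distance (m) between each estimate and its true position."""
    return [math.dist(e, t) for e, t in zip(estimates, truth)]


if __name__ == "__main__":
    for method, est in ESTIMATES.items():
        errs = position_errors(est)
        print(f"{method:16s} errors = {[round(e, 3) for e in errs]}  "
              f"mean = {statistics.mean(errs):.3f} m")
```

On these three positions the mean errors are about 0.354 m for Gaussian fitting, 0.043 m for the mean filter, 0.083 m for the median filter, and 0.021 m for the Gaussian filter; the fitting result is dominated by its large misses at positions 2 and 3. Three points are too few to settle the ranking of the filters, and the average-error comparison over 25 positions described in the overview is not reproduced here.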
