
摘要

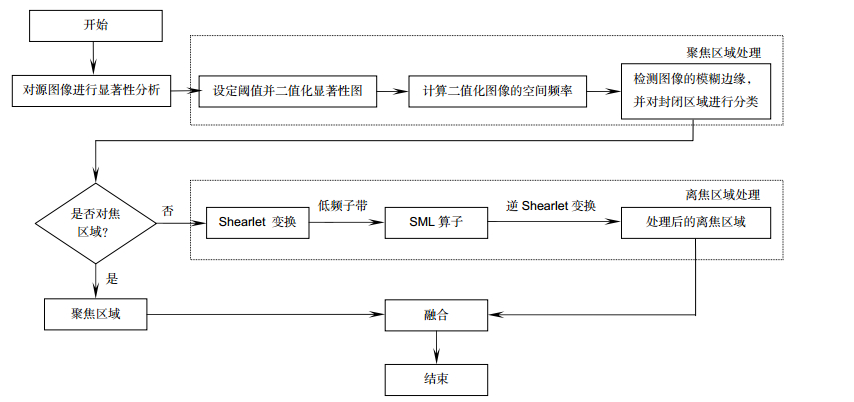

针对自动对焦技术中存在的全局对焦困难问题,本文提出一种新的基于区域显著性分析的图像融合方法。首先用基于图论的显著性分析(GBVS)算法定位源图像中的聚焦区域,然后使用分水岭和形态学方法进一步处理显著图的封闭区域以去除伪聚焦区域,得到精确提取的聚焦区域;离焦区域用剪切波变换处理后,以SML算子选取有用的细节信息作为融合依据。最后将处理后的聚焦区域和离焦区域融合为全聚焦图像。实验证明,所提出的方法融合图像边缘清晰,细节丰富,视觉效果最好,并且在清晰度和融合度的评价指标上较传统方法提高5%以上。

Abstract

In the study of autofocus technology, we propose an image fusion method based on saliency analysis, which can solve the problem of all in focus. First, the focal area in the source image is positioned by the graph-based visual saliency (GBVS) algorithm, and then the watershed and morphological methods are used to obtain the closed area of the saliency graph and the pseudo-focus region is removed. The defocused region is processed by the Shearlet transform, and the SML operator is used to choose the fusion parts. Finally, the precise focused region and the processed defocused region are fused into an all in focus image. Experiments show that the fused image of our method is clear and rich in detail, which has the best visual effect, and improves more than 5% in definition and fusion compared with traditional methods.
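The focused-region extraction step can be illustrated with a small sketch. The paper refines the GBVS saliency map with watershed segmentation and morphology; the sketch below swaps in a simpler stand-in, which is an assumption and not the authors' procedure: threshold the saliency map, drop connected components smaller than `min_area` as pseudo-focus regions, fill small holes, and then copy the focused pixels directly from the source image. The names `clean_focus_mask`, `compose`, `threshold` and `min_area` are hypothetical.

```python
from collections import deque

import numpy as np


def label_components(mask):
    """4-connected component labelling of a boolean mask by breadth-first search."""
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=int)
    sizes = [0]  # index 0 is reserved for "not in any component"
    for y in range(h):
        for x in range(w):
            if not mask[y, x] or labels[y, x]:
                continue
            current = len(sizes)
            labels[y, x] = current
            queue, size = deque([(y, x)]), 0
            while queue:
                cy, cx = queue.popleft()
                size += 1
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                        labels[ny, nx] = current
                        queue.append((ny, nx))
            sizes.append(size)
    return labels, np.array(sizes)


def remove_small_regions(mask, min_area):
    """Keep only the connected components of `mask` with at least `min_area` pixels."""
    labels, sizes = label_components(mask)
    keep = sizes >= min_area
    keep[0] = False
    return keep[labels]


def clean_focus_mask(saliency, threshold, min_area):
    """Hypothetical stand-in for the watershed/morphology step (not the authors' code)."""
    mask = saliency >= threshold
    mask = remove_small_regions(mask, min_area)         # drop small pseudo-focus blobs
    background = remove_small_regions(~mask, min_area)  # small background pieces count as holes
    return ~background                                  # so they are filled in


def compose(focused_src, defocused_fused, mask):
    """Copy focused pixels directly from the source; take the rest from the fused defocused part."""
    return np.where(mask, focused_src, defocused_fused)
```

For a saliency map containing one 5×5 salient block with a one-pixel hole, plus one isolated salient pixel, `clean_focus_mask(sal, 0.5, 4)` returns exactly the filled 5×5 block. Note that this simple rule also fills small background pieces touching the image border, which true hole filling would not.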

Key words:

- saliency analysis
- image fusion
- focus
- GBVS
- Shearlet transform


Overview

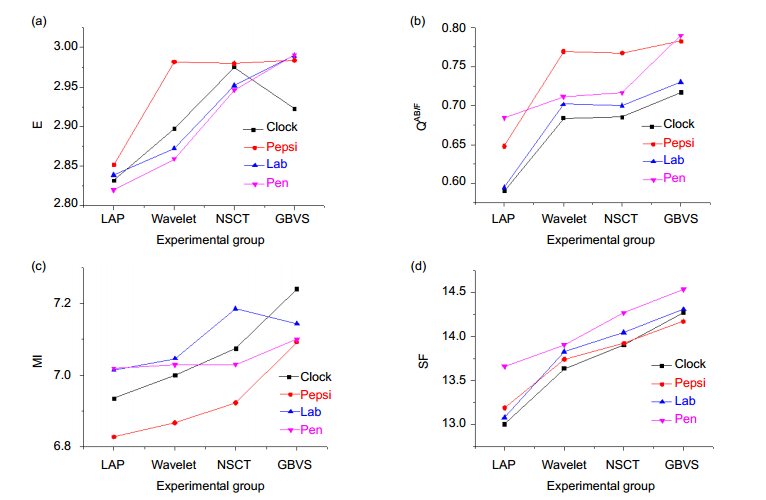

In autofocus technology it is difficult to obtain all-in-focus images because optical systems have a limited depth of field, yet high-definition images are important for scientific research. Detecting the focused region is the key issue in multi-focus image fusion, and the blurred boundary of the focused region makes it harder to identify accurately. It follows that the focused regions of the source images should be carried into the fused image directly wherever possible. We therefore propose an image fusion method based on saliency analysis to obtain all-in-focus images. Saliency analysis simulates the human visual attention mechanism: it computes color, orientation, brightness and other features to estimate the visual saliency of each part of an image, and human gaze usually falls on regions of higher saliency. Saliency maps, obtained by comparing the differences among these feature components, are used to identify the focused regions of the multi-focus images.

The method proceeds as follows. First, the graph-based visual saliency (GBVS) algorithm locates the focused area in each source image; then watershed segmentation and morphological operations extract the closed area of the salient region and remove pseudo-focus regions. Because the defocused region contains much texture and directional information, it is processed with the Shearlet transform, and the SML operator selects the parts to be fused. Finally, the precisely extracted focused region and the processed defocused region are fused into an all-in-focus image. Visual inspection shows that images fused by the proposed method have clear, rich detail.
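The SML (sum-modified-Laplacian) selection rule can be sketched in NumPy. This assumes the common definition ML(x, y) = |2I(x, y) - I(x-s, y) - I(x+s, y)| + |2I(x, y) - I(x, y-s) - I(x, y+s)|, summed over a (2r+1)×(2r+1) window and keeping only terms at or above a threshold T. The paper's step, window size and threshold are not given here, so `step`, `radius` and `threshold` are assumptions. In the method the rule is applied to Shearlet coefficients of the defocused region; the Shearlet decomposition itself is not shown, so the hypothetical `select_by_sml` simply takes any two equally shaped arrays.

```python
import numpy as np


def modified_laplacian(img, step=1):
    """ML = |2I(x,y) - I(x-step,y) - I(x+step,y)| + |2I(x,y) - I(x,y-step) - I(x,y+step)|."""
    p = np.pad(np.asarray(img, dtype=float), step, mode="edge")
    c = p[step:-step, step:-step]
    up, down = p[:-2 * step, step:-step], p[2 * step:, step:-step]
    left, right = p[step:-step, :-2 * step], p[step:-step, 2 * step:]
    return np.abs(2 * c - up - down) + np.abs(2 * c - left - right)


def box_sum(a, radius):
    """Sum of `a` over a (2*radius+1)^2 window centred on each pixel (zero padding)."""
    k = 2 * radius + 1
    p = np.pad(a, radius, mode="constant")
    # Integral image with a leading row/column of zeros.
    s = np.pad(p.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    return s[k:, k:] - s[:-k, k:] - s[k:, :-k] + s[:-k, :-k]


def sml(img, step=1, radius=1, threshold=0.0):
    """Sum-modified-Laplacian focus measure at every pixel."""
    ml = modified_laplacian(img, step)
    ml = np.where(ml >= threshold, ml, 0.0)  # ignore weak responses below T
    return box_sum(ml, radius)


def select_by_sml(coef_a, coef_b, **kwargs):
    """At each position keep the coefficient whose neighbourhood has the larger SML."""
    return np.where(sml(coef_a, **kwargs) >= sml(coef_b, **kwargs), coef_a, coef_b)
```

A sharp, high-contrast pattern yields a large SML while a flat (blurred) patch yields zero, so `select_by_sml` keeps the sharper input at every position.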
The proposed method was compared with three traditional methods (LAP, wavelet and NSCT) on four objective evaluation criteria: entropy (E), QAB/F, mutual information (MI) and spatial frequency (SF). NSCT performs best among the three traditional methods; relative to NSCT, the entropy of the proposed method is about 1% higher, QAB/F about 4% higher, and MI and SF about 2% higher each, indicating that the proposed method yields the clearest images and the best fusion performance. The advantage of the proposed method is even more apparent in the line charts. Both the subjective visual results and the objective evaluation demonstrate that the proposed method is an effective image fusion method; future work will extend it to color image fusion.
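Three of the four criteria have widely used closed forms, sketched below: the entropy of the grey-level histogram, the spatial frequency SF = sqrt(RF² + CF²) built from row and column first differences, and the fusion mutual information MI = I(A;F) + I(B;F). These are standard definitions assumed here; this section does not state the paper's normalization or logarithm base, so values from the sketch need not match Table 1. QAB/F is omitted because its edge-strength and orientation model is considerably longer.

```python
import numpy as np


def entropy(img, levels=256):
    """Shannon entropy (bits) of the grey-level histogram of an integer image."""
    counts = np.bincount(np.asarray(img, dtype=np.int64).ravel(), minlength=levels)
    p = counts[counts > 0] / counts.sum()
    return float(-np.sum(p * np.log2(p)))


def spatial_frequency(img):
    """SF = sqrt(RF^2 + CF^2); here each term is the mean squared first difference."""
    f = np.asarray(img, dtype=float)
    rf2 = np.mean(np.diff(f, axis=1) ** 2)  # row frequency: differences along each row
    cf2 = np.mean(np.diff(f, axis=0) ** 2)  # column frequency: differences down each column
    return float(np.sqrt(rf2 + cf2))


def mutual_information(a, b, levels=256):
    """I(A;B) in bits, estimated from the joint grey-level histogram."""
    joint, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=levels,
                                 range=[[0, levels], [0, levels]])
    pab = joint / joint.sum()
    pa = pab.sum(axis=1, keepdims=True)  # marginal of A, shape (levels, 1)
    pb = pab.sum(axis=0, keepdims=True)  # marginal of B, shape (1, levels)
    nz = pab > 0
    return float(np.sum(pab[nz] * np.log2(pab[nz] / (pa * pb)[nz])))


def fusion_mi(src_a, src_b, fused, levels=256):
    """MI fusion metric: information the fused image F shares with sources A and B."""
    return mutual_information(src_a, fused, levels) + mutual_information(src_b, fused, levels)
```

Some authors divide the SF sums by M·N rather than by the number of differences; the choice only rescales the value slightly but matters when comparing against published tables.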


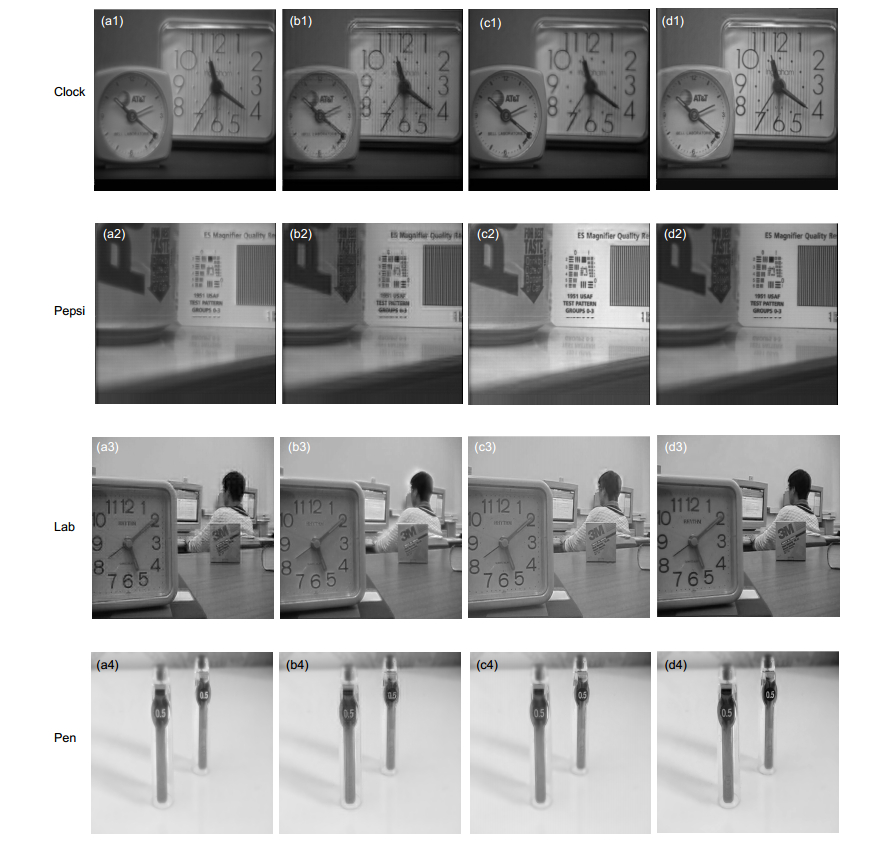

Figure 2. Source images used in the experiments. (a1) Clock, focused on the right. (a2) Clock, focused on the left. (b1) Pepsi, focused on the right. (b2) Pepsi, focused on the left. (c1) Lab, focused on the right. (c2) Lab, focused on the left. (d1) Pen, focused on the right. (d2) Pen, focused on the left.


Table 1. Image fusion quality evaluation.

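| Image | Method | E | QAB/F | MI | SF |
|---|---|---|---|---|---|
| Clock | LAP | 2.8318 | 0.5899 | 6.9354 | 13.0092 |
| Clock | wavelet | 2.8972 | 0.6837 | 6.9995 | 13.6347 |
| Clock | NSCT | 2.9748 | 0.6849 | 7.0747 | 13.9053 |
| Clock | GBVS | 2.9223 | 0.7168 | 7.2397 | 14.2661 |
| Pepsi | LAP | 2.8516 | 0.6477 | 6.8282 | 13.1893 |
| Pepsi | wavelet | 2.9811 | 0.7695 | 6.8663 | 13.7388 |
| Pepsi | NSCT | 2.9792 | 0.7673 | 6.9228 | 13.9192 |
| Pepsi | GBVS | 2.9835 | 0.7826 | 7.0925 | 14.1657 |
| Lab | LAP | 2.8388 | 0.5947 | 7.0148 | 13.0805 |
| Lab | wavelet | 2.8726 | 0.7014 | 7.0473 | 13.8231 |
| Lab | NSCT | 2.9517 | 0.7002 | 7.1846 | 14.0397 |
| Lab | GBVS | 2.9892 | 0.7301 | 7.1435 | 14.3055 |
| Pen | LAP | 2.8201 | 0.6845 | 7.0198 | 13.6592 |
| Pen | wavelet | 2.8593 | 0.7113 | 7.0285 | 13.9003 |
| Pen | NSCT | 2.9461 | 0.7164 | 7.0302 | 14.2635 |
| Pen | GBVS | 2.9904 | 0.7901 | 7.0993 | 14.5338 |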
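Relative gains over NSCT can be computed directly from Table 1. The sketch below averages each metric over the four image pairs and reports the percentage gain of GBVS over NSCT. Averaging over the pairs is an assumption: the aggregation behind the percentages quoted in the overview is not stated, so the results need not coincide with them.

```python
# Table 1 values in image order Clock, Pepsi, Lab, Pen.
TABLE1 = {
    "NSCT": {
        "E": [2.9748, 2.9792, 2.9517, 2.9461],
        "QAB/F": [0.6849, 0.7673, 0.7002, 0.7164],
        "MI": [7.0747, 6.9228, 7.1846, 7.0302],
        "SF": [13.9053, 13.9192, 14.0397, 14.2635],
    },
    "GBVS": {
        "E": [2.9223, 2.9835, 2.9892, 2.9904],
        "QAB/F": [0.7168, 0.7826, 0.7301, 0.7901],
        "MI": [7.2397, 7.0925, 7.1435, 7.0993],
        "SF": [14.2661, 14.1657, 14.3055, 14.5338],
    },
}


def mean(values):
    return sum(values) / len(values)


def relative_gain(table, method, baseline):
    """Percentage gain of `method` over `baseline`, using per-metric means over all images."""
    return {
        metric: 100.0 * (mean(table[method][metric]) / mean(table[baseline][metric]) - 1.0)
        for metric in table[baseline]
    }


if __name__ == "__main__":
    for metric, gain in relative_gain(TABLE1, "GBVS", "NSCT").items():
        print(f"{metric}: {gain:.2f}%")
    # E: 0.28%
    # QAB/F: 5.26%
    # MI: 1.29%
    # SF: 2.04%
```

Per-image gains vary around these averages; for the Clock pair, for instance, the E value of GBVS is below that of NSCT.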

