Multi-granularity feature and shape-position similarity metric method for ship detection in SAR images


Abstract

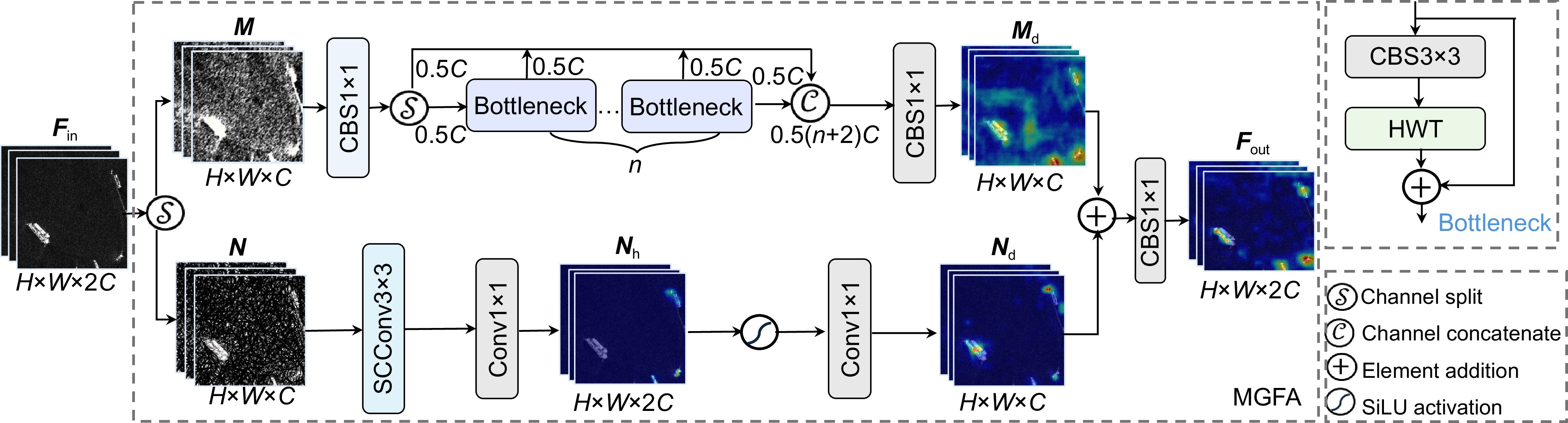

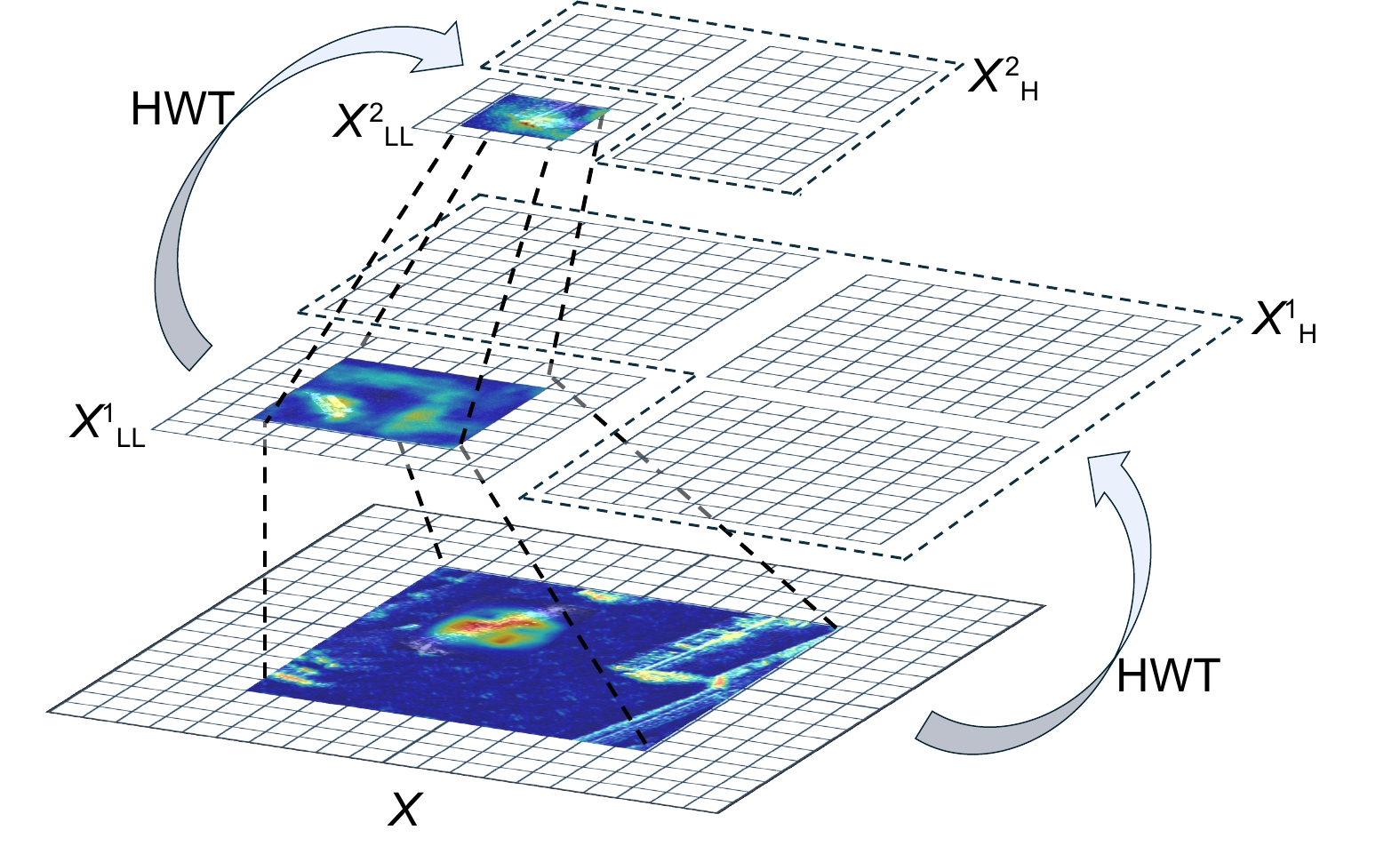

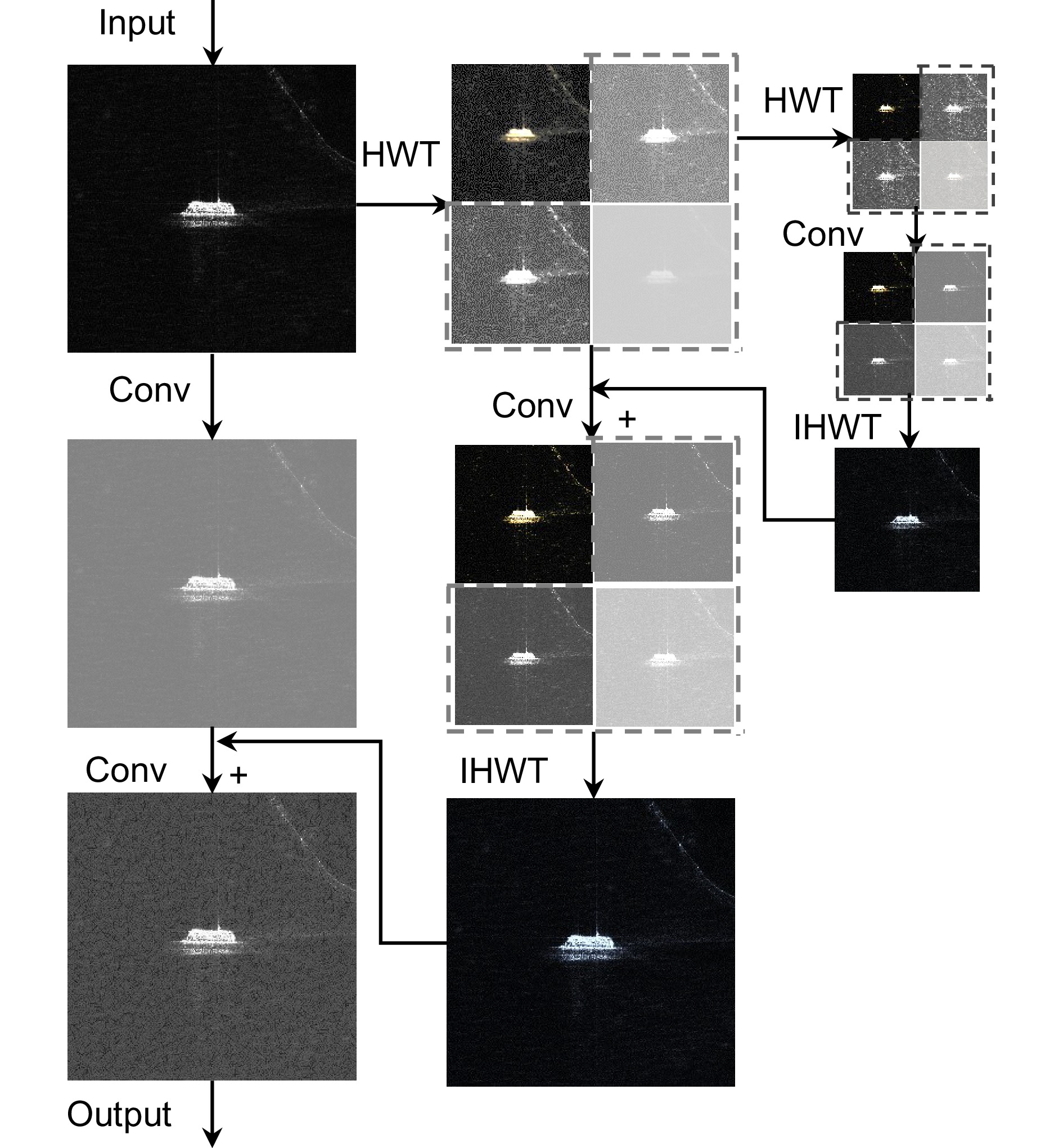

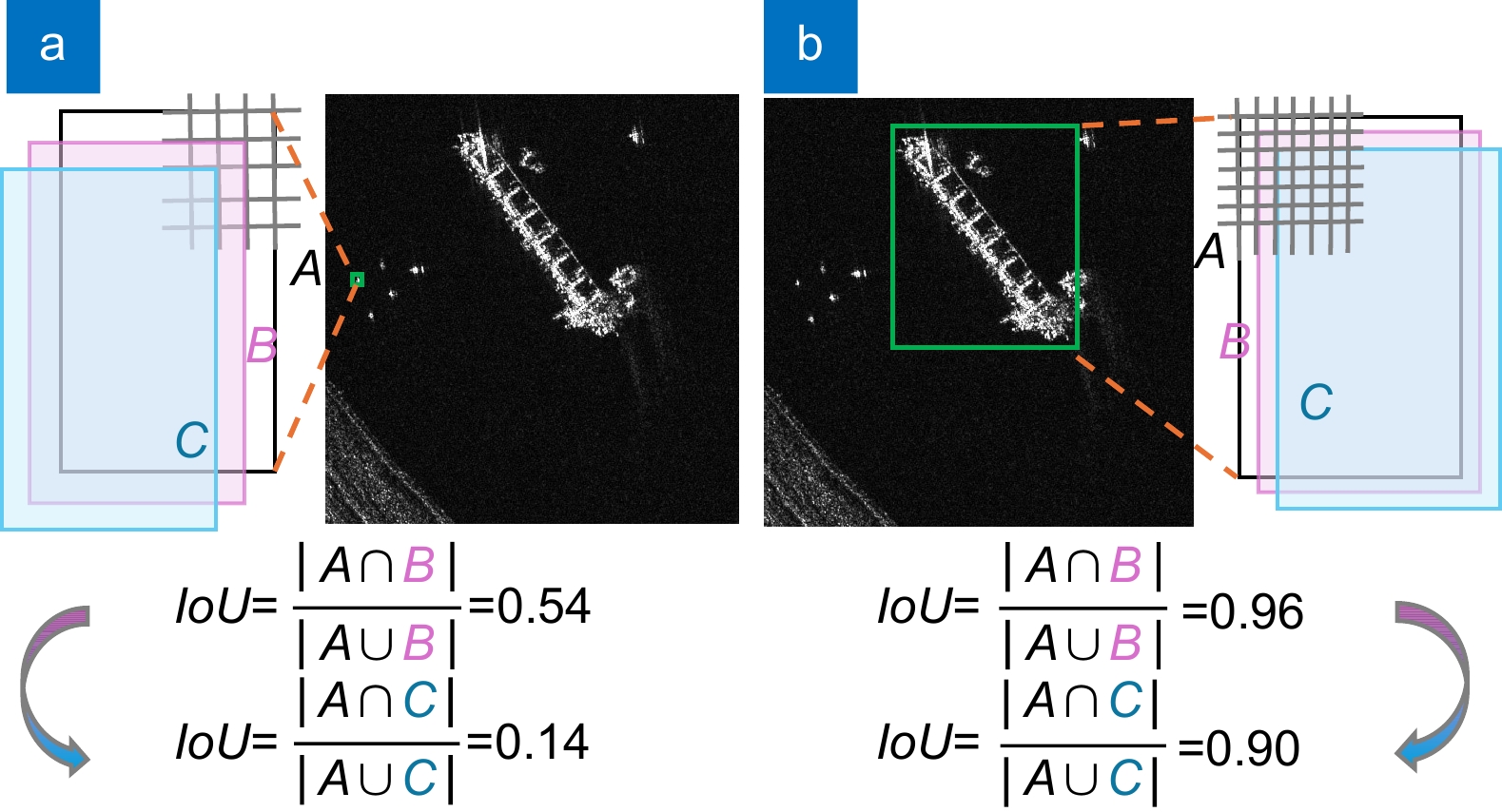

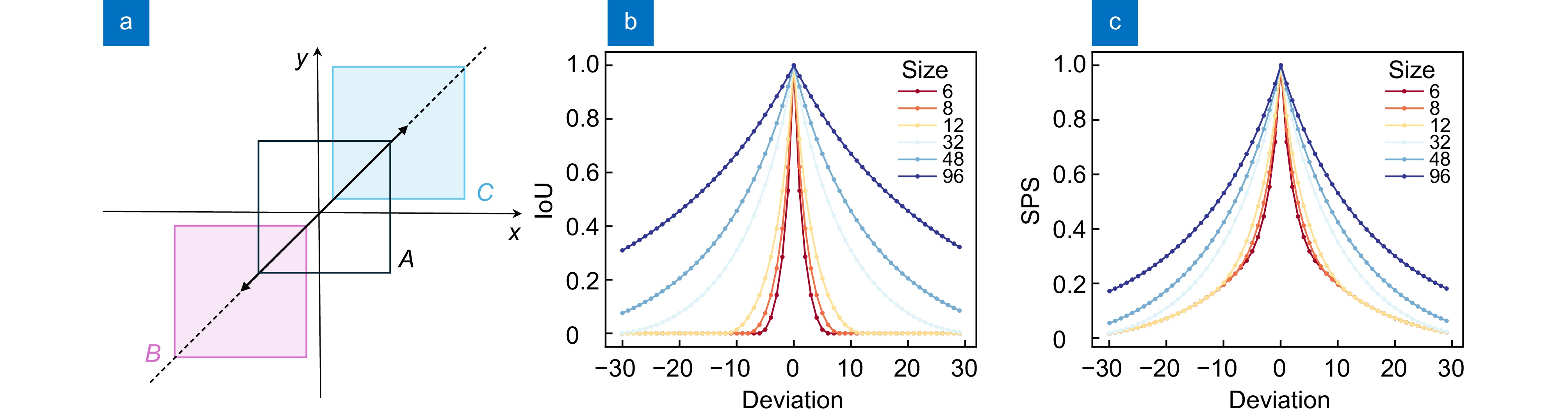

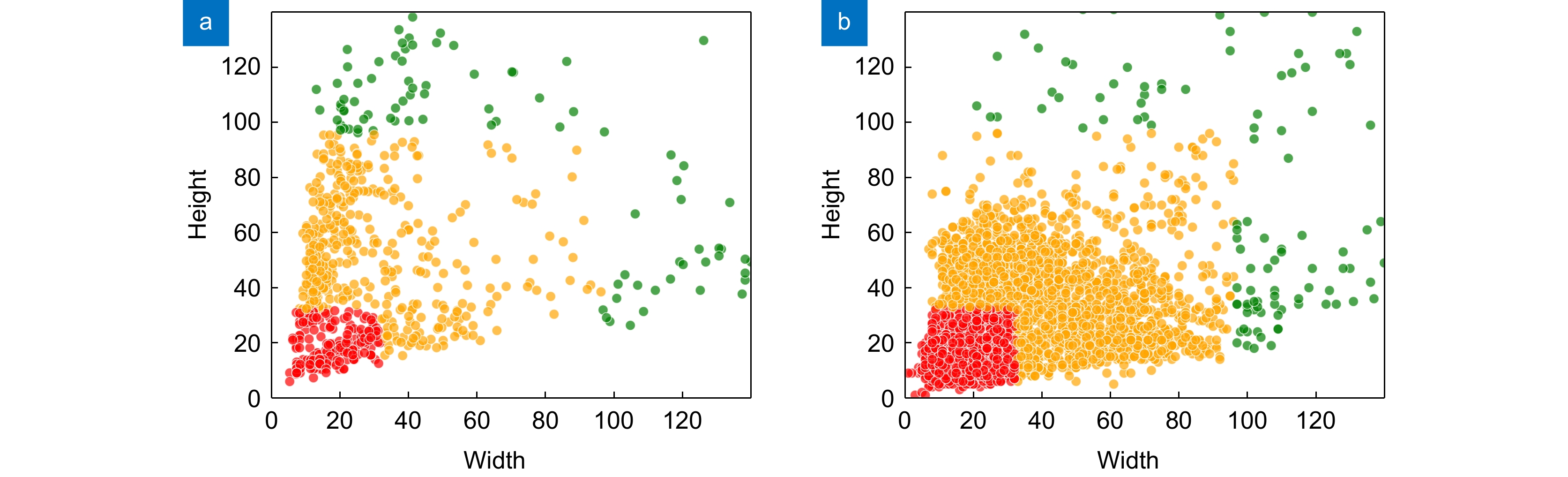

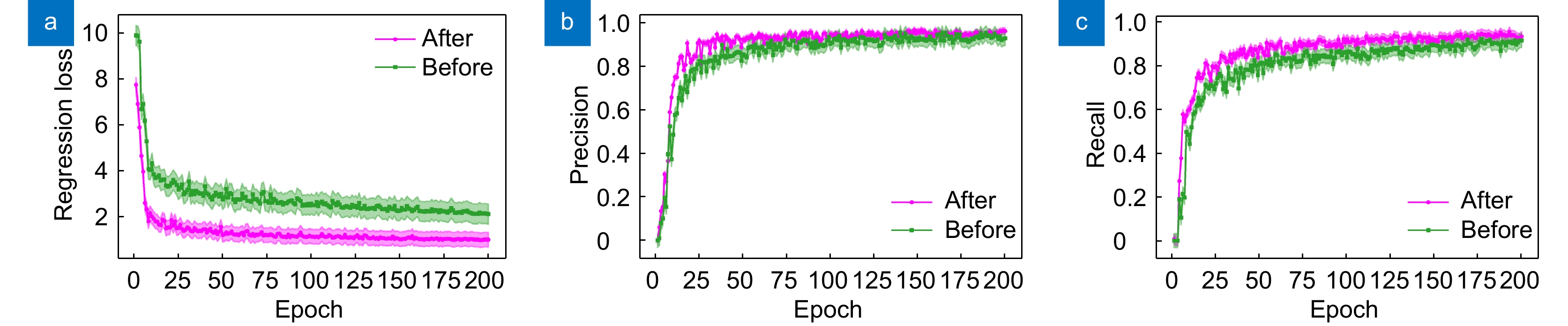

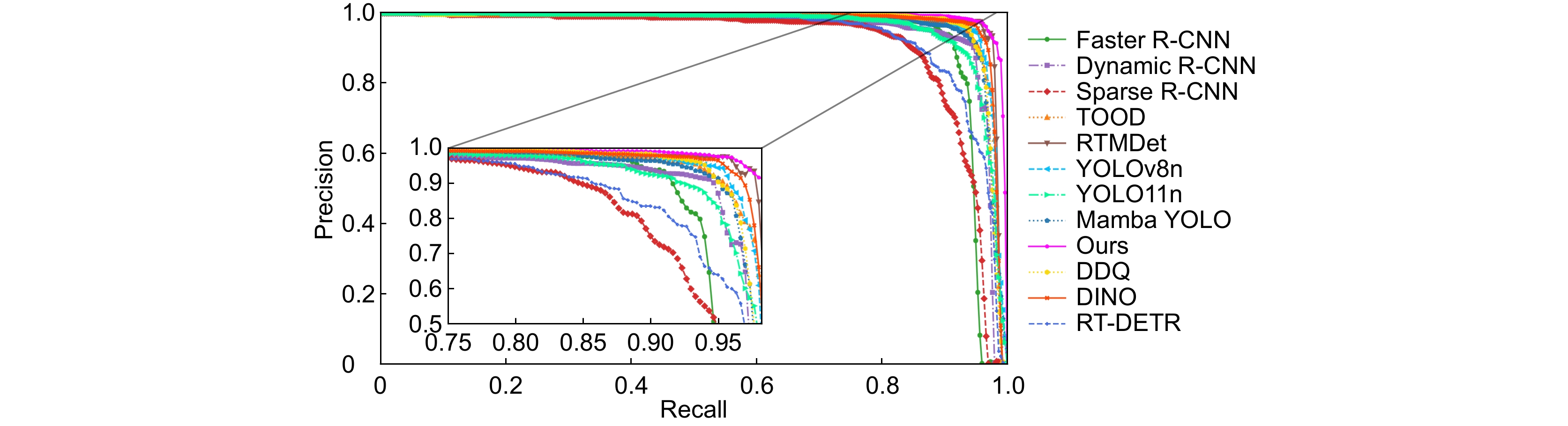

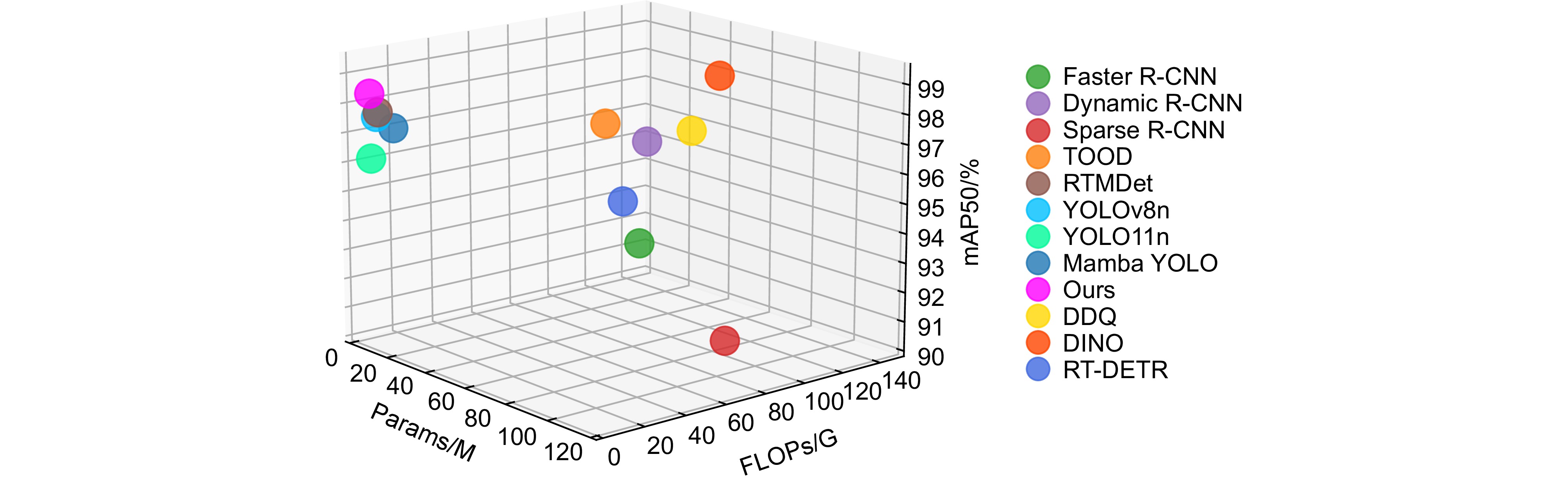

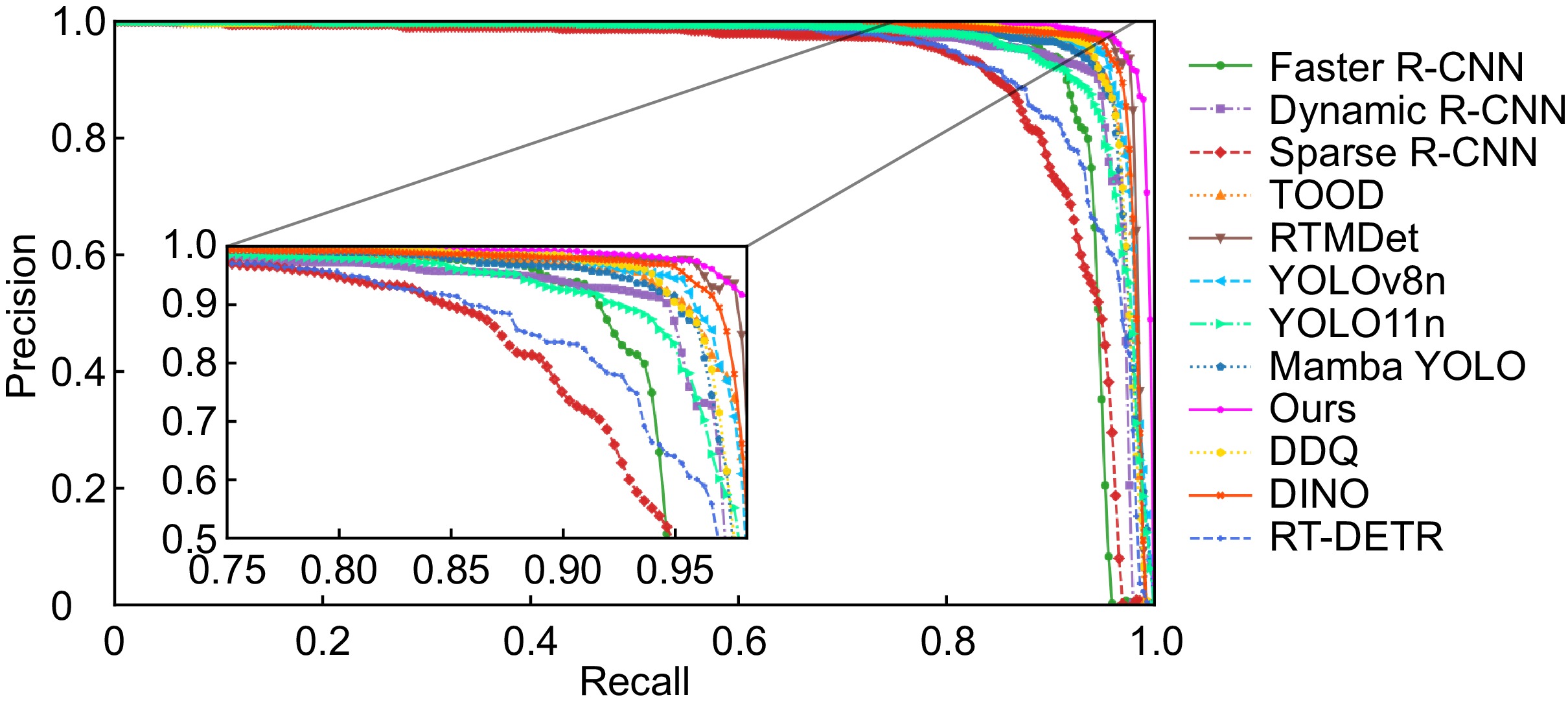

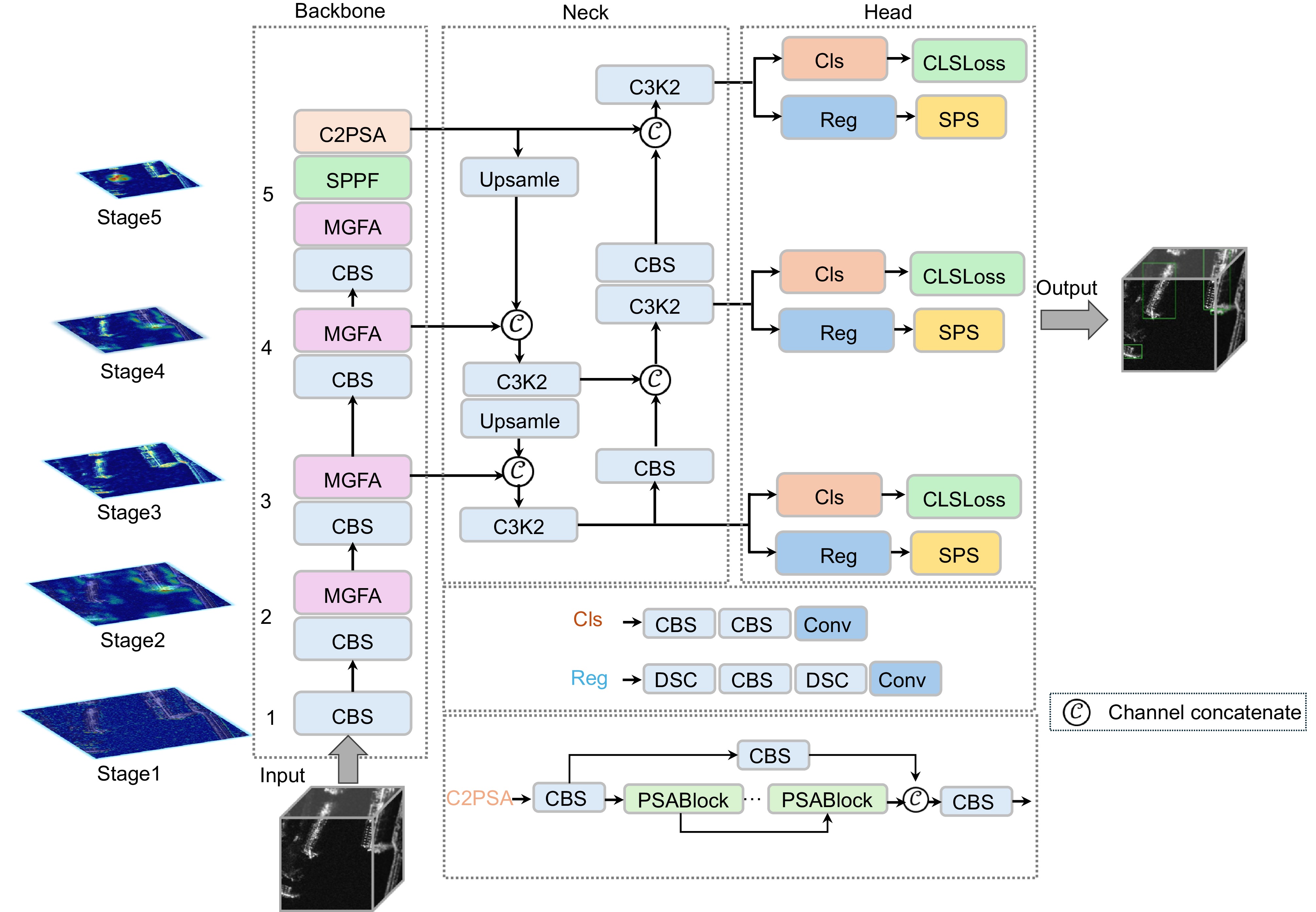

To address the complex backgrounds and large variations in target scale found in synthetic aperture radar (SAR) images, which cause false and missed detections especially in scenes with densely distributed small targets, we propose a multi-granularity feature and shape-position similarity metric method for ship detection in SAR images. In the feature extraction stage, a dual-branch multi-granularity feature aggregation structure is designed. One branch applies a cascaded Haar wavelet decomposition to the feature map to enlarge the global receptive field and extract coarse-grained features. The other branch introduces spatial and channel reconstruction convolution to capture detailed texture information, reducing the loss of contextual information in the feature map. By jointly exploiting the interaction between local and non-local features, the two branches suppress complex background and clutter interference and extract multi-scale features accurately. In the detection regression stage, a shape-position similarity metric is proposed that uses the Euclidean distance and combines position and shape information. It addresses the sensitivity to position deviation in dense small-target scenes and thereby balances the assignment of positive and negative samples. In a comprehensive comparison with 11 two-stage, one-stage, and DETR-series detectors, the proposed method achieves an mAP of 68.8% and an mAP50 of 98.3% on the SSDD dataset, and an mAP of 70.8% and an mAP50 of 93.8% on the HRSID dataset. The model has only 2.4 M parameters and requires 6.4 GFLOPs, both lower than the compared methods. The proposed method detects ships well against complex backgrounds and across target scales, reducing false and missed detections while keeping the parameter count and computational cost low.


Key words: SAR images / ship detection / feature extraction / wavelet transform / Euclidean distance


Overview

Synthetic aperture radar (SAR) imaging works in all weather and at all times of day, penetrates clouds, rain, and fog, delivers high-resolution images, and covers large areas with fast scanning. These advantages have led to wide use of SAR images in ship detection for maritime patrol, search and rescue, fishery supervision, traffic management, and military surveillance. In practice, however, complex background interference, large changes in target scale, and dense distributions of small targets make missed and false detections likely. This paper proposes a multi-granularity feature and shape-position similarity method for ship detection in SAR images to address these challenges.

In the feature extraction stage, a dual-branch multi-granularity feature aggregation (MGFA) structure is designed to cope with the complex backgrounds and multi-scale targets in SAR images. MGFA extracts features along two branches, one coarse-grained and one fine-grained. The coarse-grained branch is built on the Haar wavelet transform, which decomposes the input features at multiple levels, thereby expanding the receptive field of the model and strengthening its ability to capture global context. The fine-grained branch uses spatial and channel reconstruction convolution (SCConv), which concentrates on details and local information. SCConv captures the detailed texture of small targets efficiently and reduces the influence of clutter and background noise, improving the accuracy of small-target detection.

In the detection regression stage, a shape-position similarity (SPS) metric based on the Euclidean distance is proposed to replace the traditional IoU. For small ships, SPS handles the sensitivity to position deviation through the center distance, and still reflects the position deviation accurately when the bounding boxes do not overlap. For large ships, SPS adds shape constraints that increase the attention paid to the shape of the predicted box, compensating for the fact that IoU considers only positional overlap. By accounting for both position and shape similarity, SPS addresses ship detection in SAR images with dense small targets and significant scale changes. In a comprehensive comparison with 11 two-stage, one-stage, and DETR-series detectors, the proposed method achieves an mAP of 68.8% and an mAP50 of 98.3% on the SSDD dataset, and an mAP of 70.8% and an mAP50 of 93.8% on the HRSID dataset. The model has only 2.4 M parameters and requires 6.4 GFLOPs, both lower than the compared methods.
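The contrast drawn above between IoU and a distance-based similarity can be illustrated with a small sketch. The exact SPS formula is not given in this section, so the function `shape_position_similarity`, its `weight` parameter, and the normalisation by the mean box diagonal are all assumptions made for illustration, not the authors' definition. The sketch only shows the qualitative behaviour described in the text: plain IoU is zero for any pair of non-overlapping boxes, while a score built from the Euclidean distances between centers and between sizes still ranks a near miss above a far miss.

```python
import math


def iou(a, b):
    """Plain IoU of two boxes given as (cx, cy, w, h)."""
    ax1, ay1 = a[0] - a[2] / 2, a[1] - a[3] / 2
    ax2, ay2 = a[0] + a[2] / 2, a[1] + a[3] / 2
    bx1, by1 = b[0] - b[2] / 2, b[1] - b[3] / 2
    bx2, by2 = b[0] + b[2] / 2, b[1] + b[3] / 2
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union


def shape_position_similarity(a, b, weight=0.5):
    """Hypothetical stand-in for an SPS-style score (NOT the paper's formula).

    Combines the Euclidean distance between box centers (position term)
    with the Euclidean distance between box sizes (shape term), both
    normalised by the mean box diagonal, and maps the result to (0, 1].
    """
    scale = (math.hypot(a[2], a[3]) + math.hypot(b[2], b[3])) / 2.0
    position = math.hypot(a[0] - b[0], a[1] - b[1]) / scale
    shape = math.hypot(a[2] - b[2], a[3] - b[3]) / scale
    return math.exp(-(weight * position + (1.0 - weight) * shape))


# A small 4 x 4 ground-truth box and two predictions that both miss it.
gt = (10.0, 10.0, 4.0, 4.0)
near_miss = (15.0, 10.0, 4.0, 4.0)
far_miss = (30.0, 10.0, 4.0, 4.0)

for name, box in (("near miss", near_miss), ("far miss", far_miss)):
    print(name, "IoU:", iou(gt, box),
          "similarity:", round(shape_position_similarity(gt, box), 4))
```

Both predictions receive the same IoU, so an IoU-based assignment cannot tell them apart, whereas the distance-based score still decreases smoothly with the center offset.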


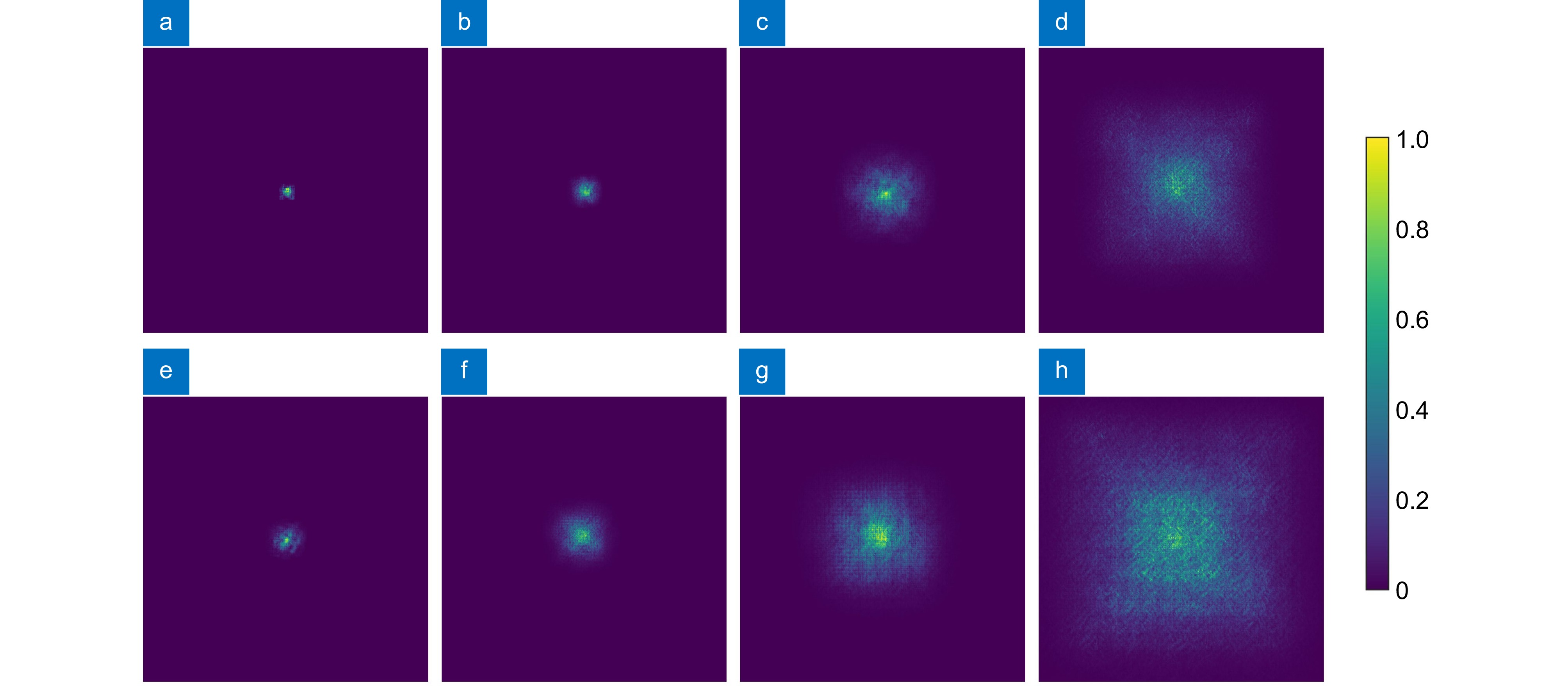

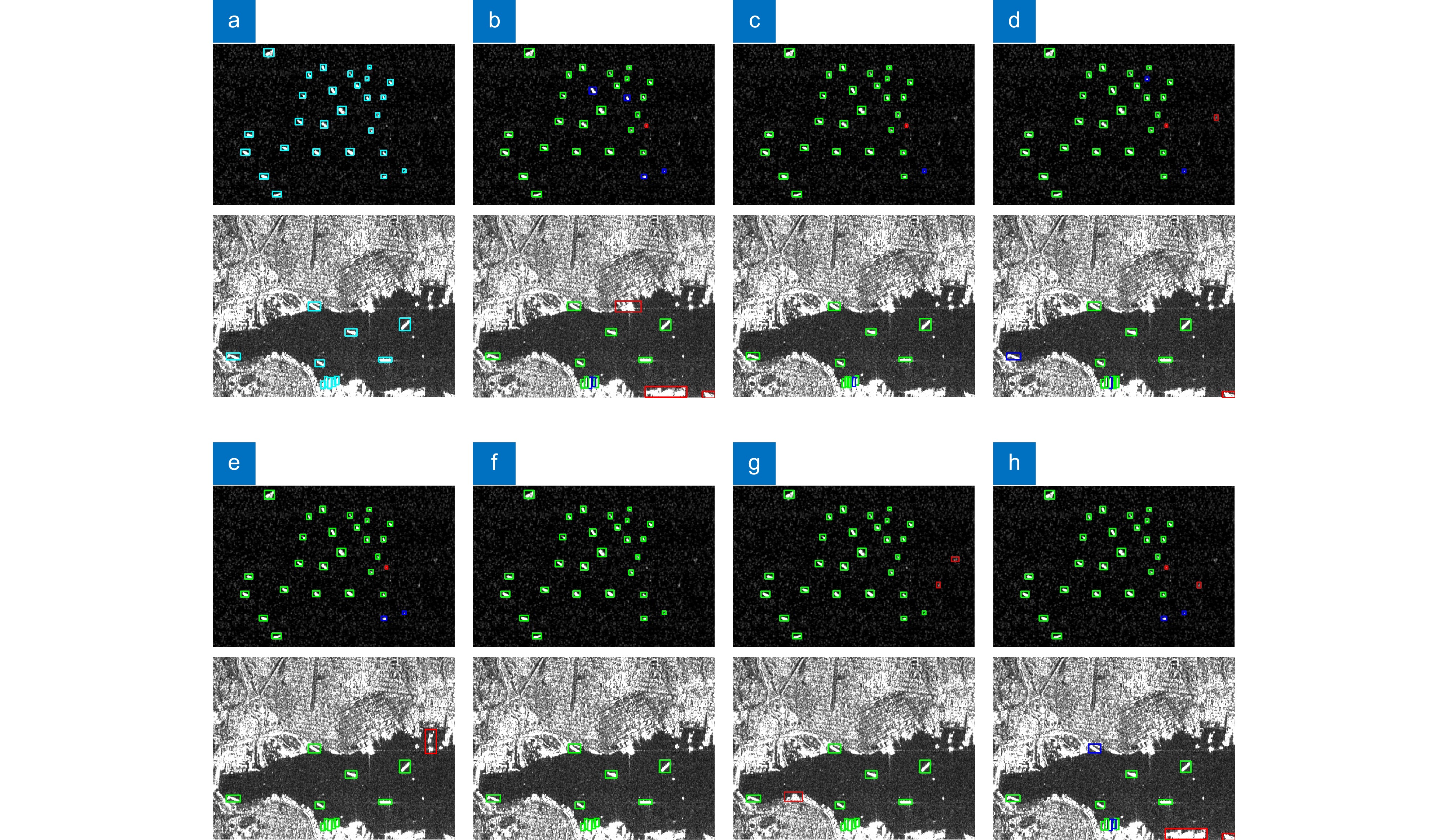

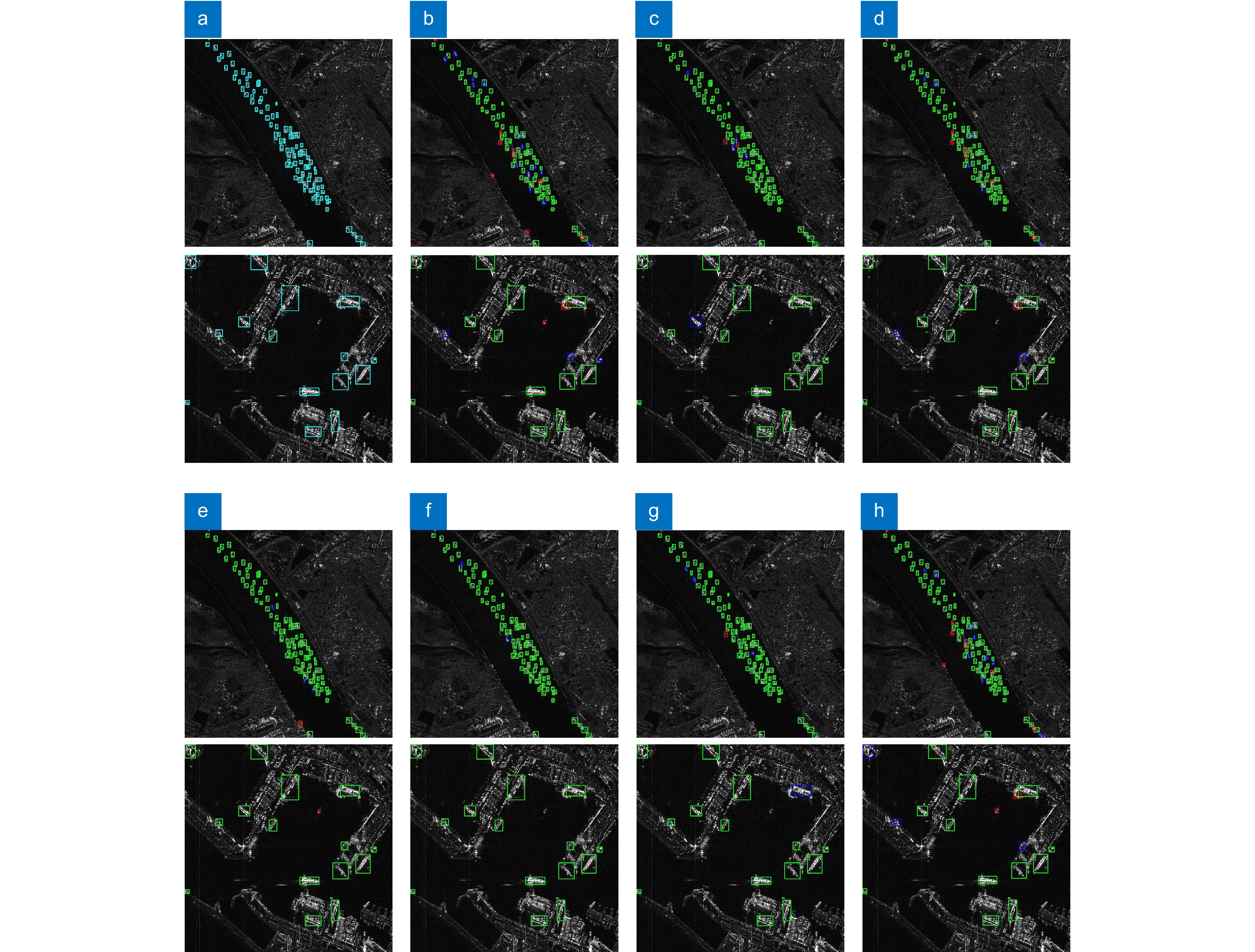

Figure 5. Receptive field of the model before and after applying the Haar wavelet transform (HWT). Receptive fields of (a) Stage2, (b) Stage3, (c) Stage4, and (d) Stage5 before HWT; receptive fields of (e) Stage2, (f) Stage3, (g) Stage4, and (h) Stage5 after HWT
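The cascaded Haar decomposition behind the receptive-field change in Figure 5 can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `haar_dwt2` and `haar_cascade` are hypothetical, the input is a single-channel array with side lengths divisible by 2 at every level, and any convolution applied to the sub-bands in the actual model is omitted. The point is only that each low-frequency coefficient at level n summarises a 2^n × 2^n patch of the input, which is why operating on it widens the effective receptive field.

```python
import numpy as np


def haar_dwt2(x):
    """One level of an orthonormal 2D Haar transform.

    x must have even height and width. Returns the low-frequency band
    and three detail bands, each half the size of x in both dimensions.
    """
    a = x[0::2, 0::2]  # top-left sample of each 2 x 2 block
    b = x[0::2, 1::2]  # top-right
    c = x[1::2, 0::2]  # bottom-left
    d = x[1::2, 1::2]  # bottom-right
    low = (a + b + c + d) / 2.0
    detail_1 = (a - b + c - d) / 2.0  # differences across columns
    detail_2 = (a + b - c - d) / 2.0  # differences across rows
    detail_3 = (a - b - c + d) / 2.0  # diagonal differences
    return low, (detail_1, detail_2, detail_3)


def haar_cascade(x, levels):
    """Apply haar_dwt2 repeatedly to the low-frequency band."""
    low = x
    details = []
    for _ in range(levels):
        low, bands = haar_dwt2(low)
        details.append(bands)
    return low, details


feature = np.arange(64, dtype=np.float64).reshape(8, 8)
low, details = haar_cascade(feature, levels=2)
print(low.shape)         # low-frequency band after two levels
print(len(details))      # one tuple of detail bands per level
```

After two levels, `low[0, 0]` depends on the whole top-left 4 × 4 patch of `feature`, so a small kernel applied to `low` sees a correspondingly larger region of the original map.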

Table 1. Influence of different configurations in HWT on model performance

| Level | Kernel size | RFS | mAP/% | mAP50/% | Params/k | FLOPs/M |
|---|---|---|---|---|---|---|
| 1 | 3 × 3 | 6 | 67.0 | 95.8 | 2.9 | 7.4 |
| 1 | 5 × 5 | 10 | 67.4 | 96.3 | 8.1 | 20.5 |
| 1 | 7 × 7 | 14 | 67.8 | 96.9 | 15.7 | 40.1 |
| 2 | 3 × 3 | 12 | 67.6 | 96.8 | 5.3 | 8.3 |
| 2 | 5 × 5 | 20 | 68.1 | 97.8 | 14.5 | 23.0 |
| 2 | 7 × 7 | 28 | 68.1 | 97.9 | 28.3 | 45.2 |
| 3 | 3 × 3 | 24 | 67.9 | 97.4 | 7.6 | 8.5 |
| 3 | 5 × 5 | 40 | 67.6 | 97.1 | 20.9 | 23.7 |
| 3 | 7 × 7 | 56 | 67.3 | 97.0 | 40.8 | 46.4 |
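The RFS values listed in Table 1 are all consistent with a simple rule: the kernel size multiplied by 2 raised to the decomposition level. This rule is inferred from the tabulated numbers and is not stated in the source, so the helper `receptive_field_size` below is an assumption used only to check the pattern.

```python
def receptive_field_size(level, kernel_size):
    """Assumed rule: each Haar level halves the resolution, so a k x k
    kernel at a given level spans k * 2**level input positions per side."""
    return kernel_size * 2 ** level


# (level, kernel size) -> RFS, copied from Table 1.
table1_rfs = {
    (1, 3): 6, (1, 5): 10, (1, 7): 14,
    (2, 3): 12, (2, 5): 20, (2, 7): 28,
    (3, 3): 24, (3, 5): 40, (3, 7): 56,
}

mismatches = [
    key for key, rfs in table1_rfs.items()
    if receptive_field_size(*key) != rfs
]
print("rows that deviate from the assumed rule:", mismatches)
```

Under this reading, the level-2 configuration with a 5 × 5 kernel reaches a receptive field of 20 at roughly half the FLOPs of the 7 × 7 kernel at the same level, for nearly the same mAP.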

Table 2. Results of MGFA ablation experiments

| Coarse-grained feature | Fine-grained feature | mAP/% | mAP50/% |
|---|---|---|---|
| √ | - | 67.6 | 97.5 |
| - | √ | 66.9 | 95.8 |
| √ | √ | 68.1 | 97.8 |

Table 3. Effect of weighting coefficients on model performance

| α | 0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| mAP/% | 67.6 | 67.5 | 67.7 | 67.8 | 67.8 | 67.7 | 67.3 | 67.2 | 66.9 | 66.9 | 66.8 |
| mAP50/% | 96.7 | 96.7 | 97.1 | 97.3 | 97.1 | 96.9 | 96.4 | 96.4 | 96.0 | 95.8 | 95.6 |

Table 4. Results of ablation experiments

| MGFA | SPS | mAP/% | mAP50/% |
|---|---|---|---|
| - | - | 67.2 | 96.1 |
| √ | - | 68.1 | 97.8 |
| - | √ | 67.8 | 97.3 |
| √ | √ | 68.8 | 98.3 |

Table 5. Comparison of different metrics on SSDD dataset

| Metric | CIoU | GIoU | DIoU | EIoU | SIoU | MPDIoU | Powerful-IoU | SPS |
|---|---|---|---|---|---|---|---|---|
| mAP/% | 67.2 | 67.2 | 67.4 | 66.8 | 67.1 | 67.6 | 67.5 | 67.8 |
| mAP50/% | 96.1 | 96.0 | 96.6 | 96.4 | 96.1 | 96.6 | 96.8 | 97.3 |

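Table 6. Comparison of different methods on SSDD dataset

| Type | Method | mAP/% | mAP50/% | mAPs/% | mAPm/% | mAPl/% | Params/M | FLOPs/G |
|---|---|---|---|---|---|---|---|---|
| Two-stage | Faster R-CNN | 60.7 | 92.6 | 55.4 | 70.1 | 69.0 | 41.4 | 103.2 |
| Two-stage | Dynamic R-CNN | 66.9 | 96.1 | 65.1 | 74.3 | 66.2 | 41.3 | 107.3 |
| Two-stage | Sparse R-CNN | 54.4 | 91.2 | 50.2 | 62.1 | 60.3 | 106.0 | 77.5 |
| One-stage | TOOD | 67.1 | 96.7 | 62.8 | 75.0 | 83.7 | 32.0 | 95.3 |
| One-stage | RTMDet | 68.0 | 97.7 | 66.5 | 78.7 | 74.5 | 4.9 | 8.0 |
| One-stage | YOLOv8n | 68.3 | 97.5 | 65.8 | 75.5 | 62.9 | 3.2 | 8.7 |
| One-stage | YOLO11n | 67.2 | 96.1 | 64.5 | 72.0 | 60.7 | 2.6 | 6.5 |
| One-stage | Mamba YOLO | 68.6 | 97.1 | 65.1 | 74.4 | 68.4 | 6.1 | 14.3 |
| One-stage | Ours | 68.8 | 98.3 | 66.8 | 72.5 | 63.1 | 2.4 | 6.4 |
| DETR-like | DDQ | 67.1 | 96.4 | 58.1 | 72.0 | 80.1 | 48.3 | 123.3 |
| DETR-like | DINO | 69.5 | 98.1 | 63.2 | 75.6 | 85.0 | 47.5 | 139.0 |
| DETR-like | RT-DETR | 64.2 | 94.0 | 59.7 | 69.7 | 57.0 | 36.0 | 100.2 |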

Table 7. Comparison of different metrics on HRSID dataset

| Metric | CIoU | GIoU | DIoU | EIoU | SIoU | MPDIoU | Powerful-IoU | SPS |
|---|---|---|---|---|---|---|---|---|
| mAP/% | 68.5 | 68.7 | 68.9 | 66.6 | 68.1 | 68.6 | 69.3 | 69.6 |
| mAP50/% | 91.3 | 92.0 | 91.8 | 91.0 | 91.1 | 91.1 | 92.2 | 92.8 |

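Table 8. Comparison of different methods on HRSID dataset

| Type | Method | mAP/% | mAP50/% | mAPs/% | mAPm/% | mAPl/% | Params/M | FLOPs/G |
|---|---|---|---|---|---|---|---|---|
| Two-stage | Faster R-CNN | 63.5 | 85.4 | 48.8 | 78.7 | 49.9 | 41.4 | 103.2 |
| Two-stage | Dynamic R-CNN | 63.8 | 85.1 | 48.7 | 78.2 | 55.7 | 41.3 | 107.3 |
| Two-stage | Sparse R-CNN | 61.0 | 83.4 | 46.4 | 75.2 | 46.6 | 106.0 | 77.5 |
| One-stage | TOOD | 70.3 | 92.0 | 58.1 | 82.2 | 67.6 | 32.0 | 95.3 |
| One-stage | RTMDet | 69.0 | 90.5 | 55.9 | 81.3 | 63.7 | 4.9 | 8.0 |
| One-stage | YOLOv8n | 69.4 | 93.6 | 57.8 | 79.7 | 57.6 | 3.2 | 8.7 |
| One-stage | YOLO11n | 68.5 | 91.3 | 57.4 | 78.5 | 53.2 | 2.6 | 6.5 |
| One-stage | Mamba YOLO | 70.4 | 93.7 | 58.6 | 80.8 | 67.7 | 6.1 | 14.3 |
| One-stage | Ours | 70.8 | 93.8 | 60.2 | 79.3 | 57.9 | 2.4 | 6.4 |
| DETR-like | DDQ | 70.5 | 93.4 | 59.4 | 81.1 | 71.3 | 48.3 | 123.3 |
| DETR-like | DINO | 69.7 | 92.5 | 59.3 | 79.9 | 62.7 | 47.5 | 139.0 |
| DETR-like | RT-DETR | 61.9 | 89.5 | 48.1 | 75.0 | 42.6 | 36.0 | 100.2 |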
