摘要:

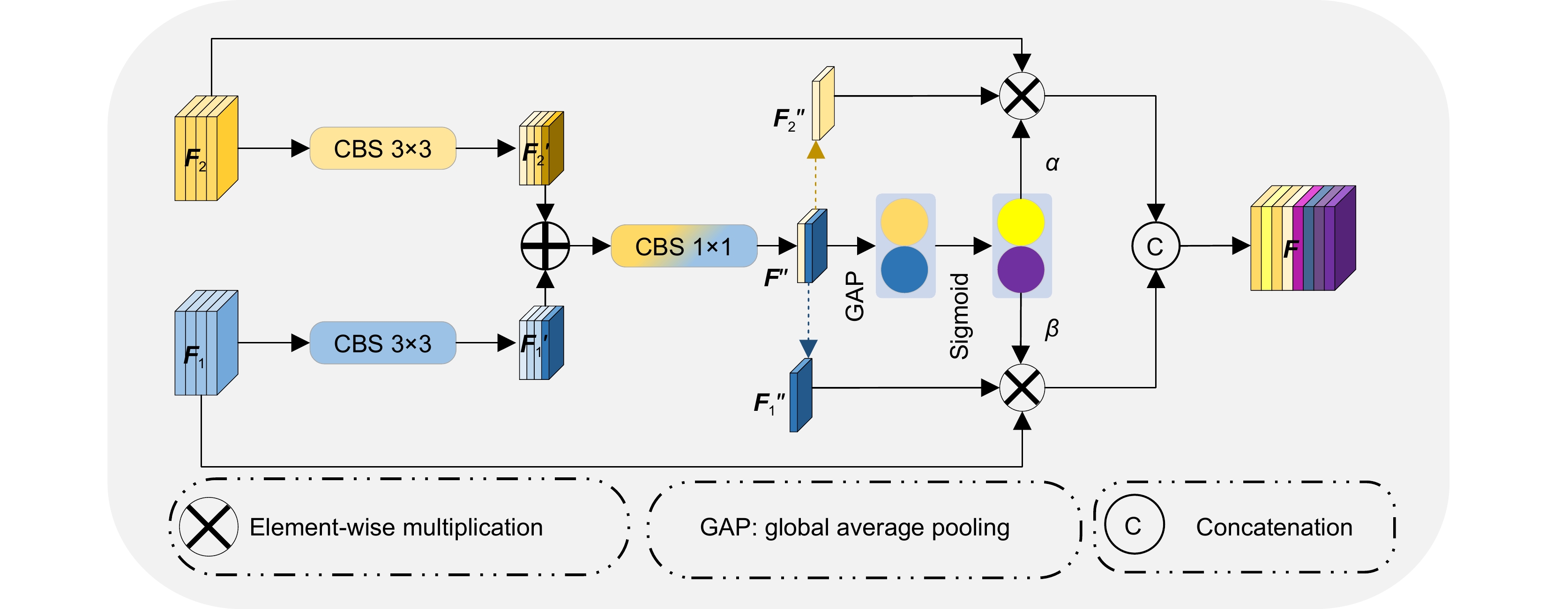

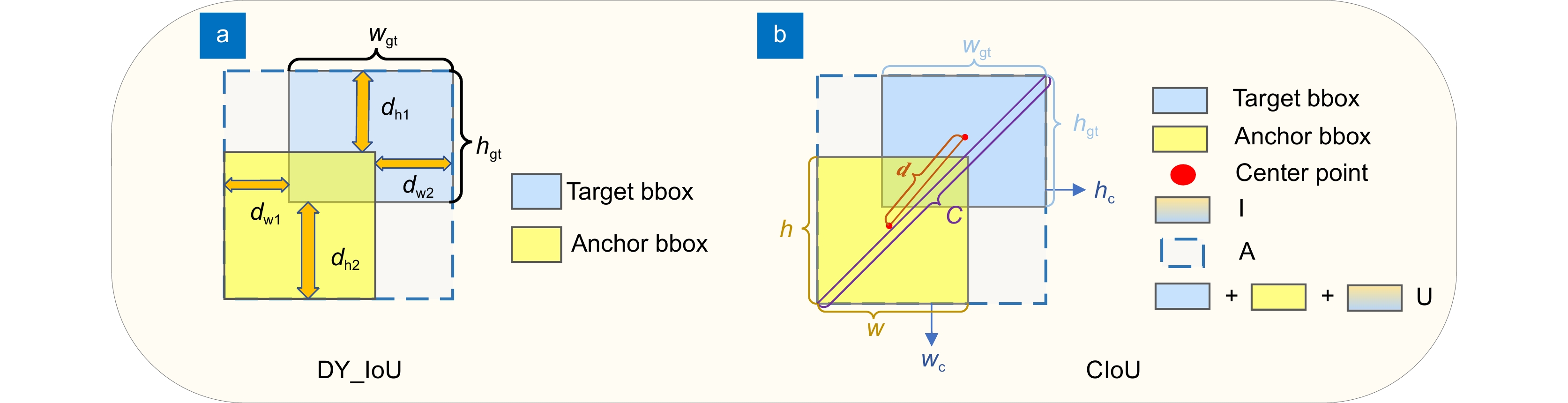

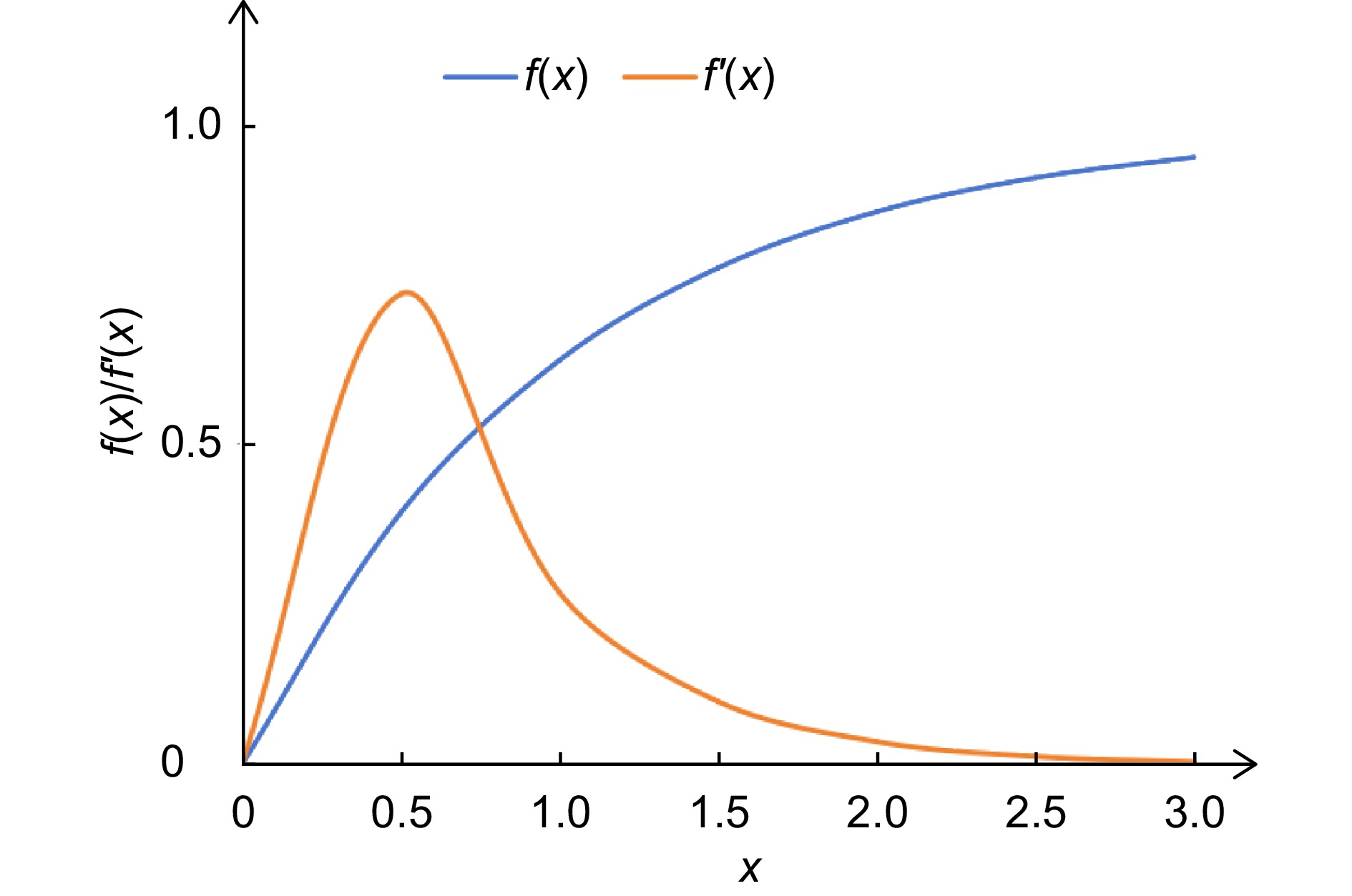

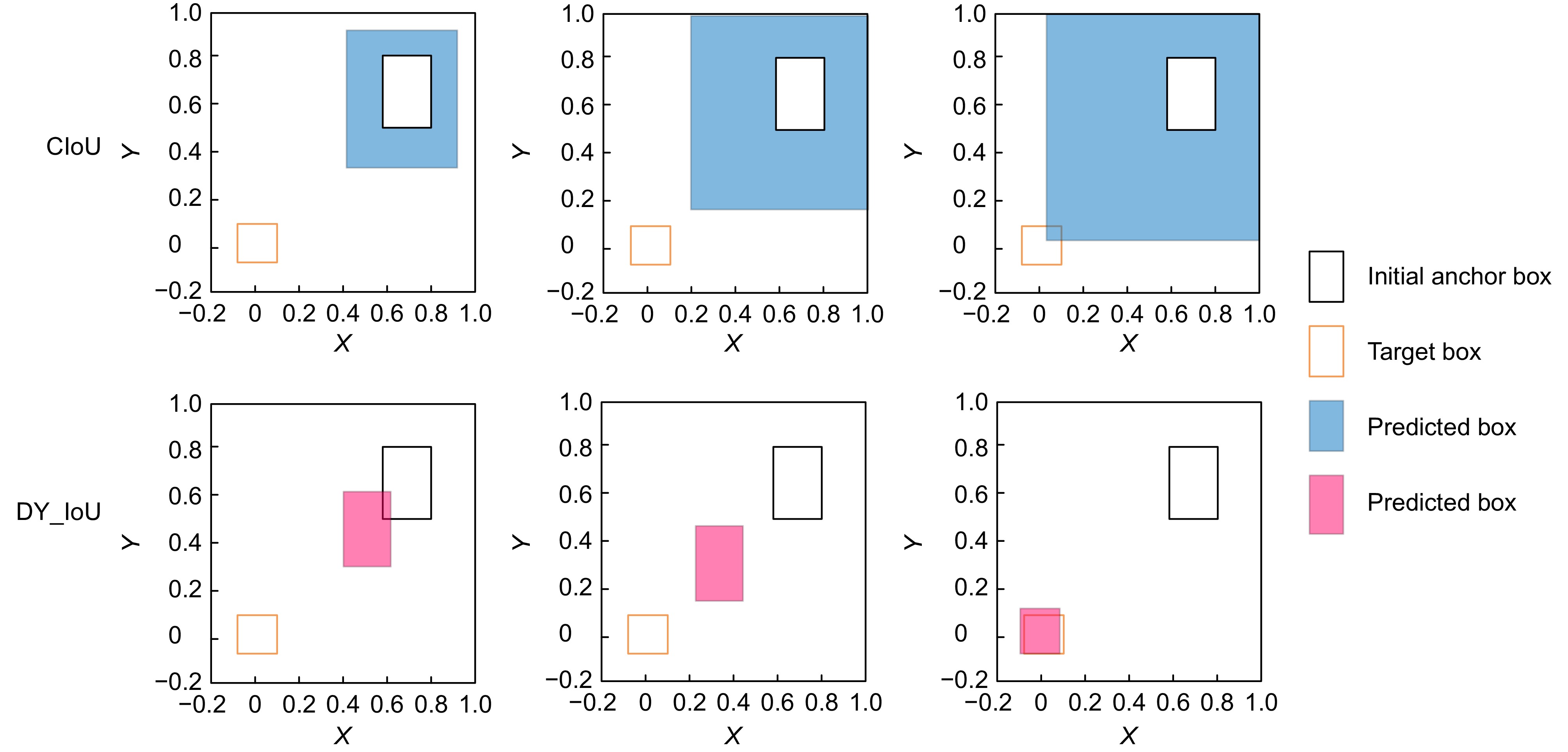

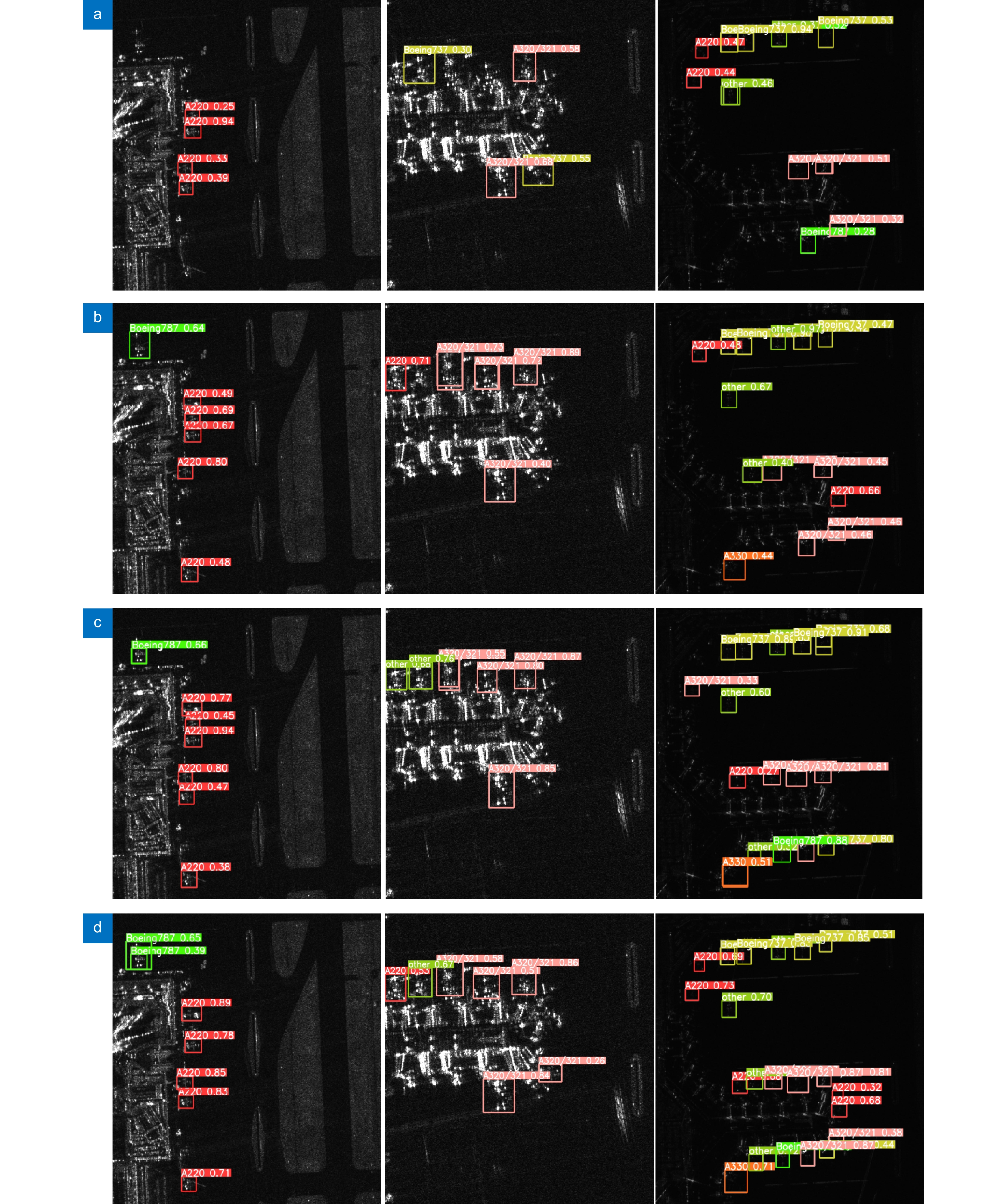

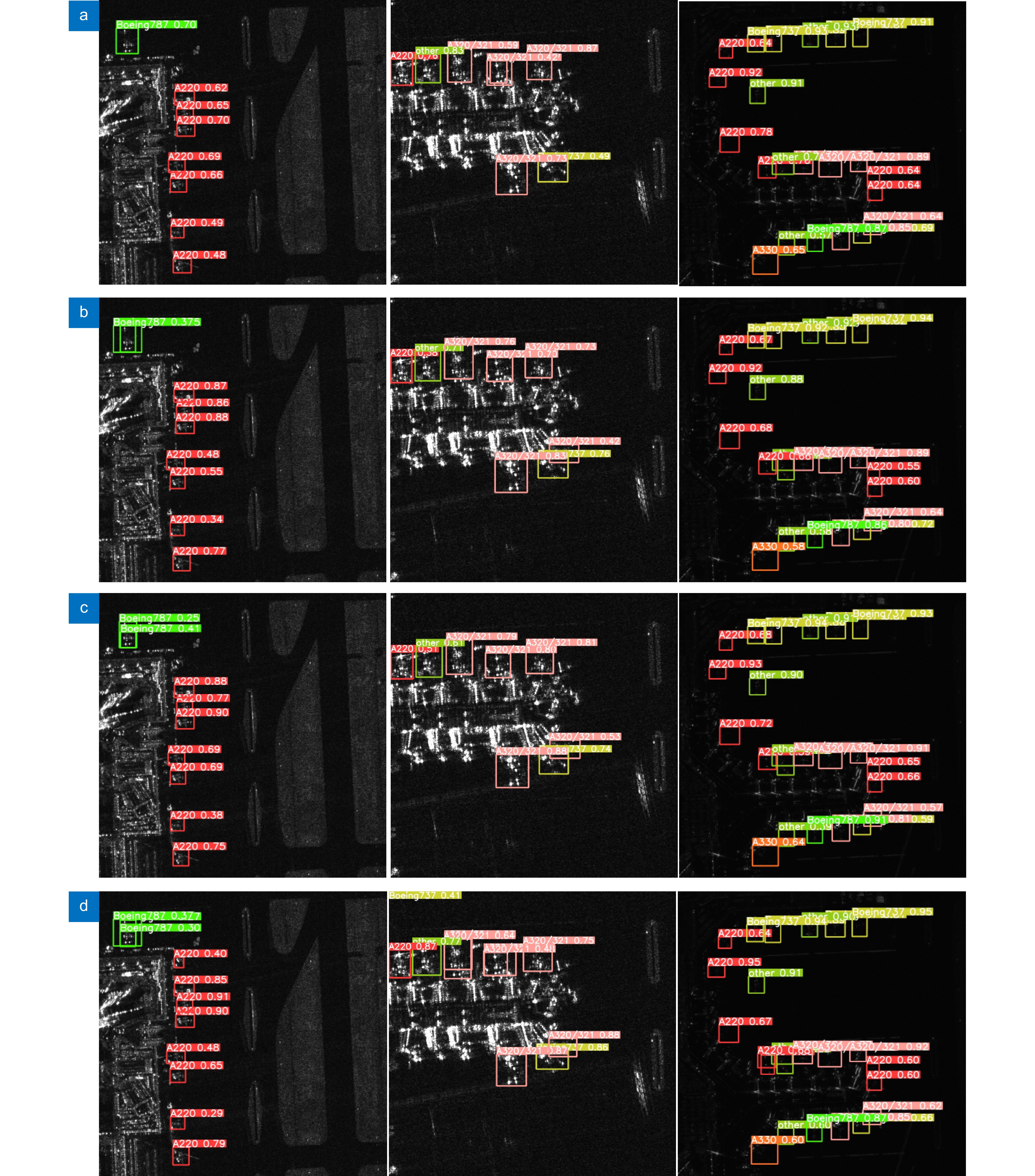

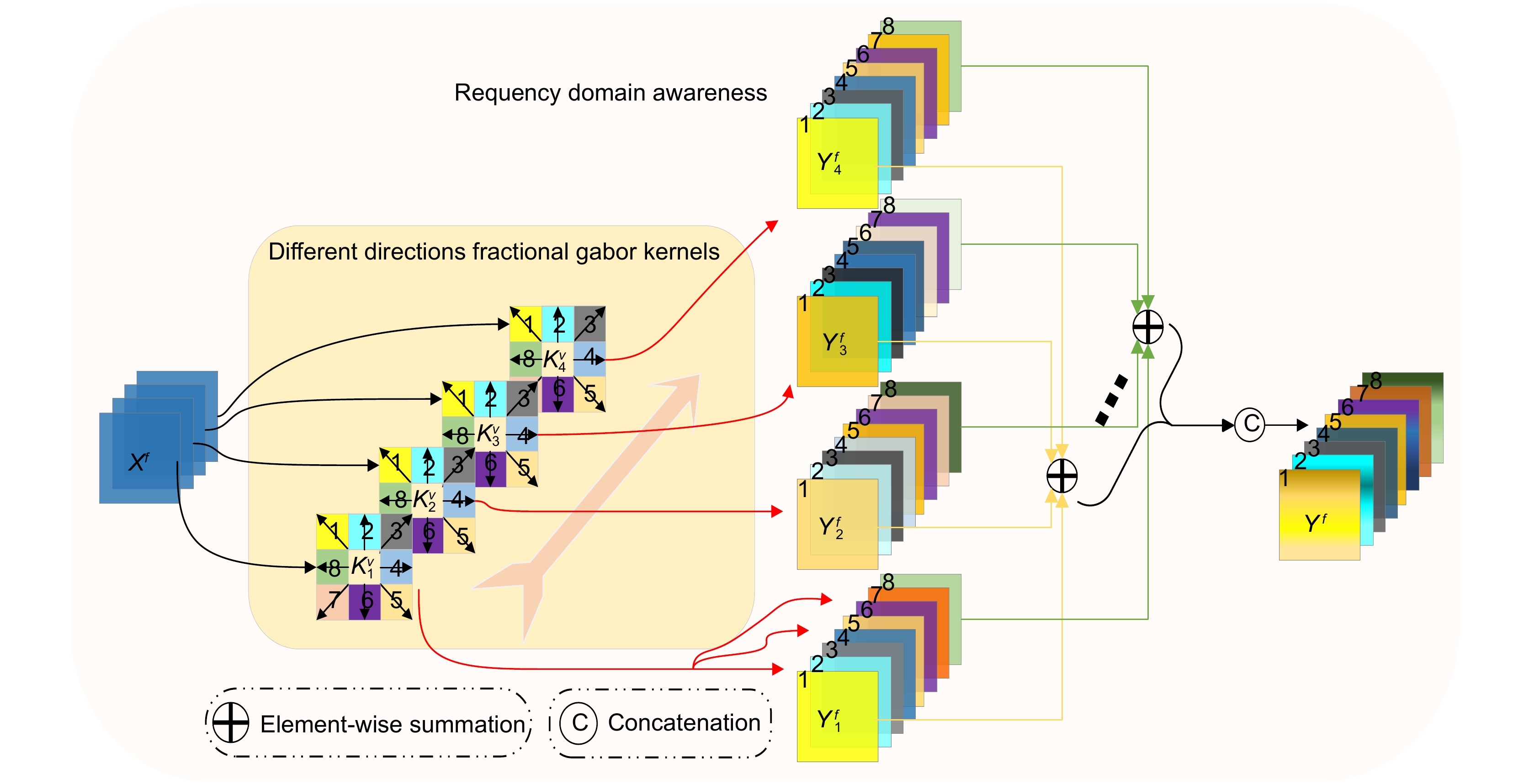

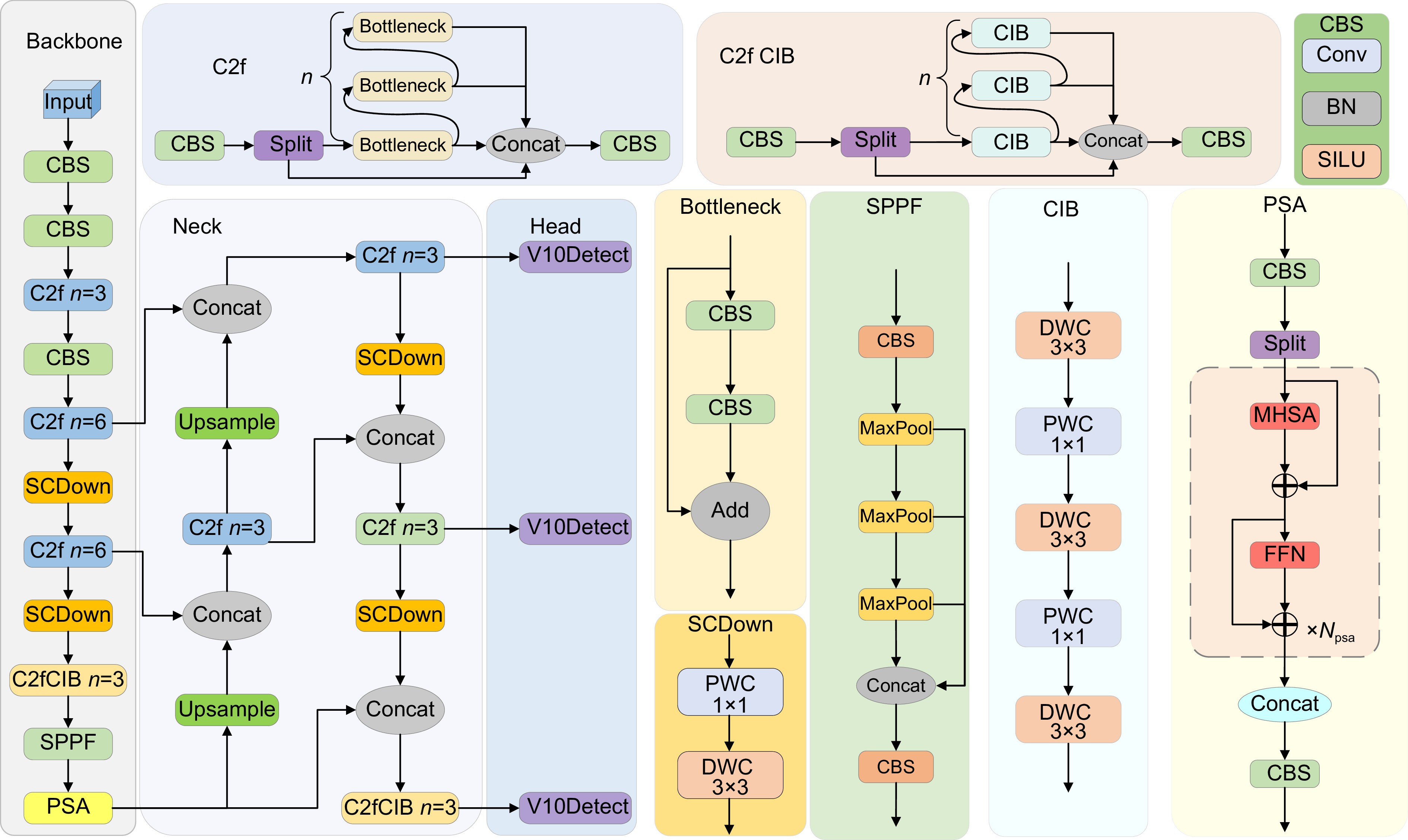

针对合成孔径雷达 (synthetic aperture radar, SAR)图像样本特征差异大、目标尺度不均衡、背景散斑噪声高所导致的检测精度低、推理速度慢问题,提出一种融合空-频域的动态SAR图像目标检测算法。首先,采用分流感知策略构造空-频域感知单元,结合动态感受野及分数阶Gabor变换法,增强算法对空间多样性特征和频率散射特征的捕获能力与感知力,优化模型对全局上下文信息的保留能力,加快推理速度,降低特征映射模式相似性与背景噪声干扰,有效改善漏检、误检情况。其次,采用重参数学习法设计自适应特征融合模块,优化多尺度特征间的交互与整合,丰富特征的多样性,缓解特征采样引起的差异映射与信息丢失问题,加强小目标信息与关键频率信息在融合过程中的显著性,提高多尺度样本检测精度。最后,引入DY_IoU动态回归损失函数,利用自适应尺度惩罚因子与动态非单调注意力机制解决锚框膨胀和位置偏差问题,进一步增强模型对多尺度目标的定位与检测能力,加快模型收敛速度,减少模型计算量。在公开数据集SAR-Acraft-1.0和HRSID上进行相关实验,实验结果表明:该方法mAP@0.5数值达到了95.9%和98.8%,较基线模型分别提升5.2%和1.2%,且优于其他对比算法。表明该算法显著提升了检测精度,具备良好的鲁棒性与泛化性。

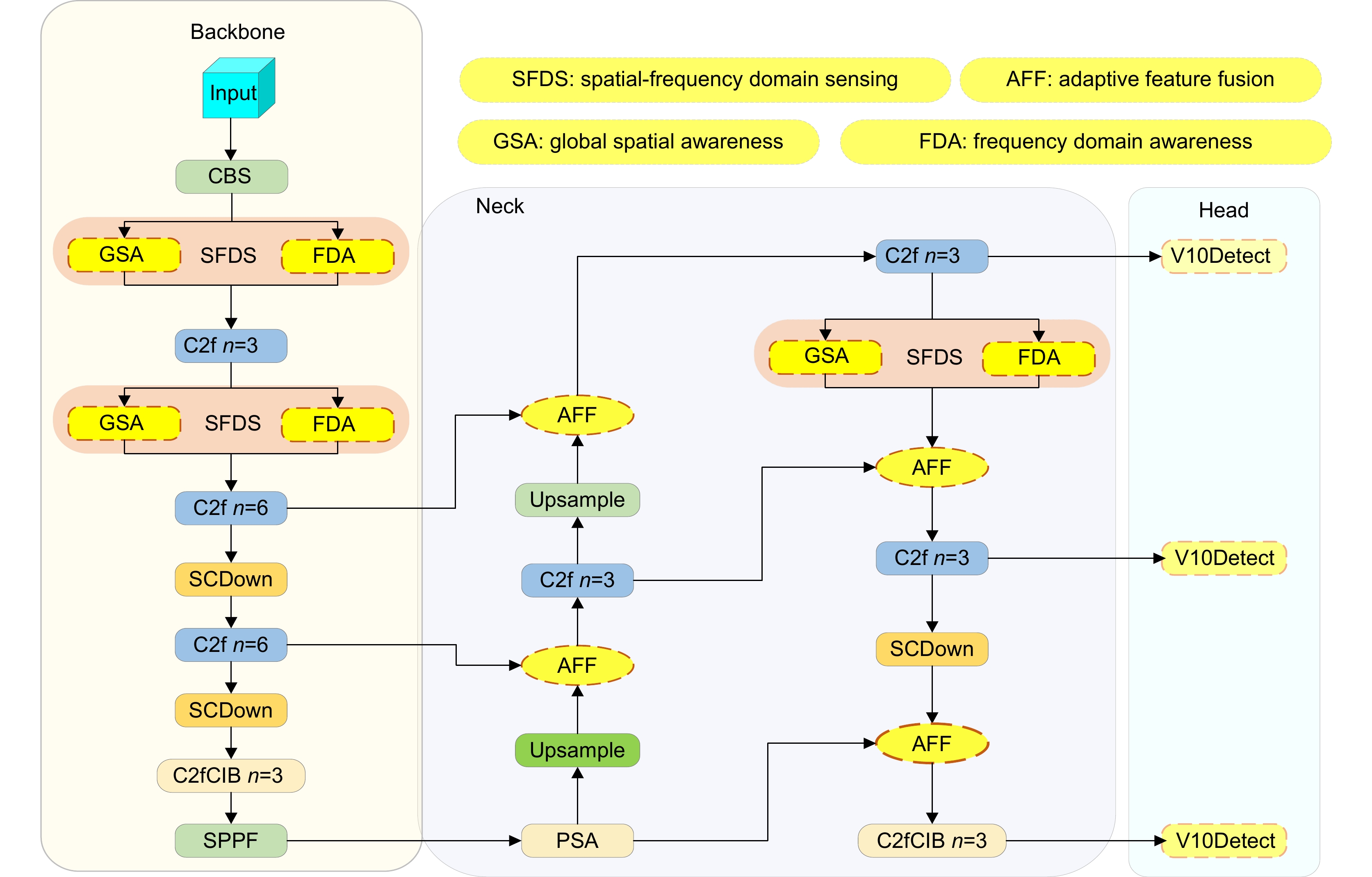

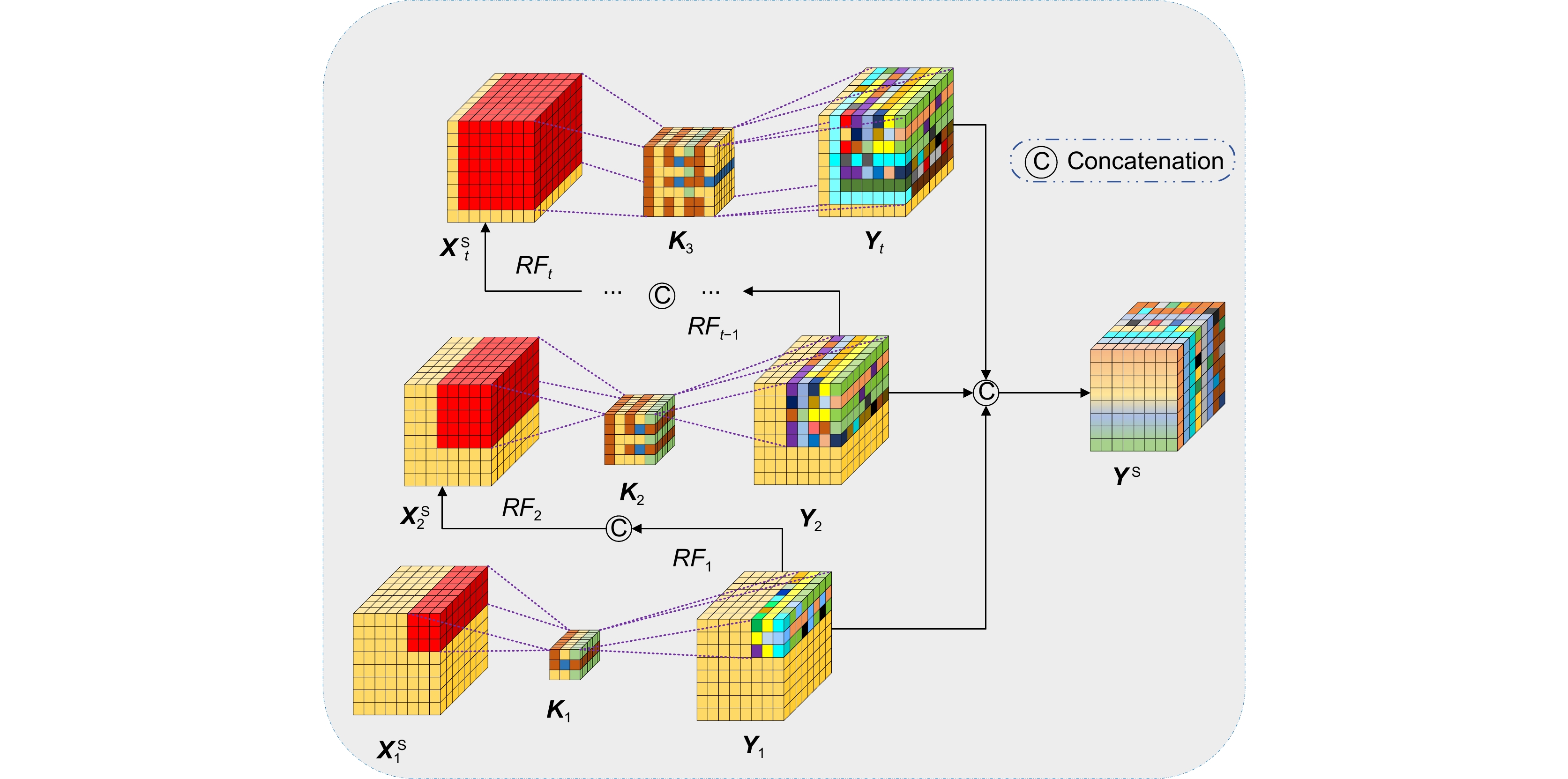

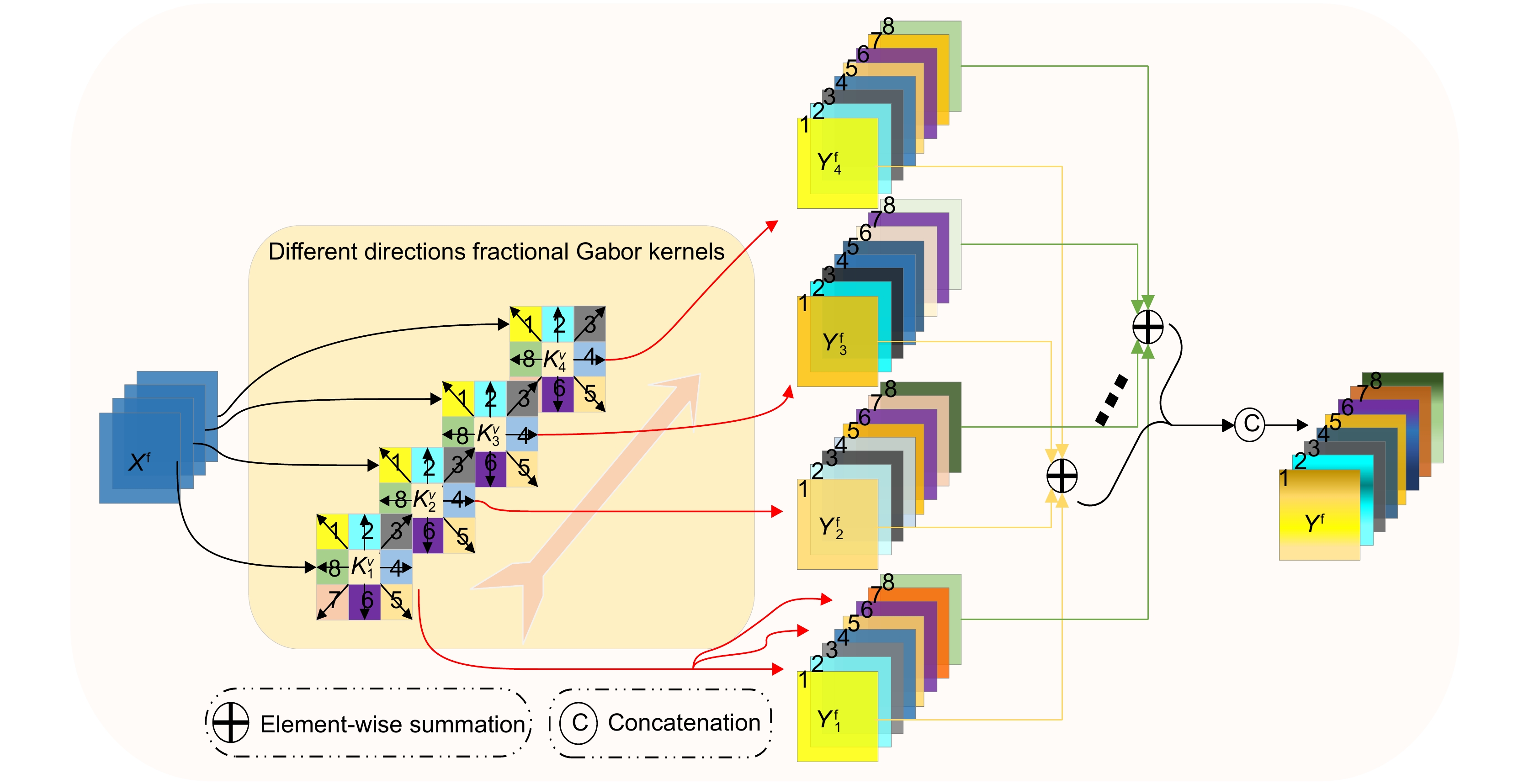

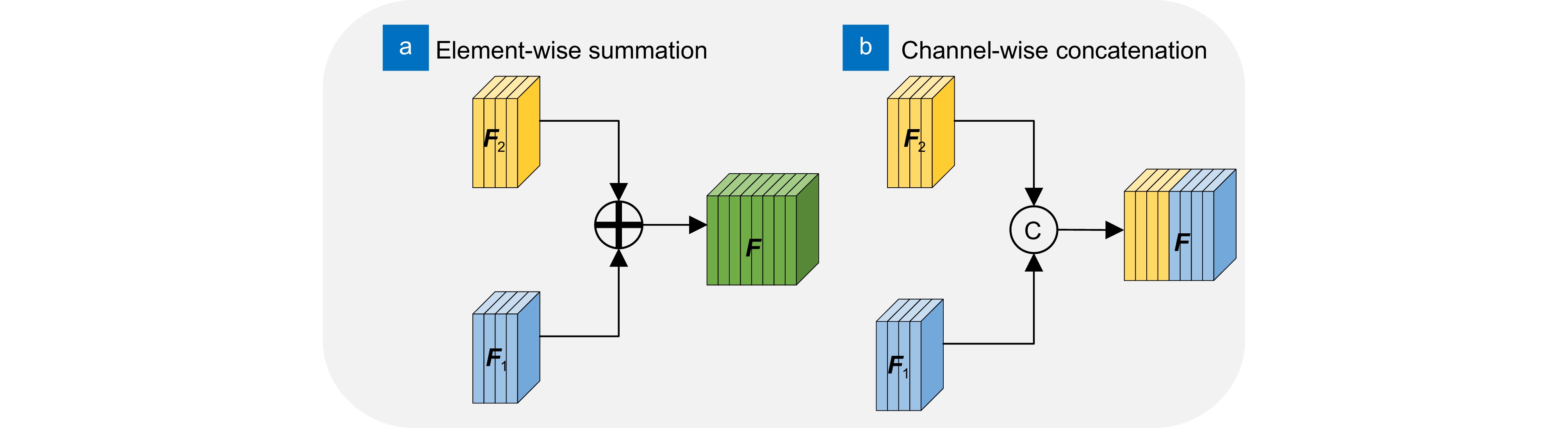

Abstract: A dynamic SAR image target detection algorithm that integrates the spatial and frequency domains is proposed to address significant feature differences among SAR image samples, imbalanced target scales, and high background speckle noise, which together cause low detection accuracy and slow inference. First, a dual-stream perception strategy is used to construct spatial-frequency perception units, leveraging dynamic receptive fields and fractional-order Gabor transforms to enhance the model's ability to capture spatial diversity and frequency scattering features. This design improves the retention of global contextual information, accelerates inference, reduces the similarity of feature mapping patterns, and mitigates background noise interference, thereby reducing missed and false detections. Second, a re-parameterization-based adaptive feature fusion module is designed to optimize interactions across multi-scale features. It enriches feature diversity, alleviates the mapping discrepancies and information loss caused by feature sampling, and enhances the salience of small-target and key frequency information during fusion, thereby improving detection precision for multi-scale samples. Finally, the DY_IoU dynamic regression loss function is introduced. It uses adaptive scale penalty factors and a dynamic non-monotonic attention mechanism to address anchor box expansion and positional deviation, further enhancing localization and detection of multi-scale targets. This loss also accelerates model convergence and reduces computational overhead. Experiments on the public datasets SAR-AIRcraft-1.0 and HRSID show that the proposed method achieves mAP@0.5 values of 95.9% and 98.8%, respectively, which are 5.2 and 1.2 percentage points above the baseline model, and that it outperforms the other compared algorithms. These results indicate that the proposed algorithm improves detection accuracy and exhibits strong robustness and generalization.
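The frequency-domain branch mentioned in the abstract relies on Gabor-type filtering. As a rough illustration only, the sketch below builds a small bank of classical 2-D Gabor kernels with NumPy. It is not the fractional-order Gabor transform used in the paper, whose formulation is not given in this abstract; the function name `gabor_kernel`, all parameter values, and the four-orientation bank are assumptions chosen for illustration.

```python
import numpy as np


def gabor_kernel(size=9, sigma=2.0, theta=0.0, wavelength=4.0, gamma=0.5, psi=0.0):
    """Real part of a classical 2-D Gabor kernel.

    Hypothetical helper: this is the standard (integer-order) Gabor filter,
    not the fractional-order variant referred to in the abstract.
    """
    half = size // 2
    # Pixel coordinates centred on the kernel midpoint.
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    # Rotate the coordinate frame by the orientation angle theta.
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    # Gaussian envelope (gamma sets the aspect ratio) times a cosine carrier.
    envelope = np.exp(-(x_r ** 2 + (gamma * y_r) ** 2) / (2.0 * sigma ** 2))
    carrier = np.cos(2.0 * np.pi * x_r / wavelength + psi)
    return envelope * carrier


# Assumed bank of four orientations: 0, 45, 90 and 135 degrees.
orientations = np.linspace(0.0, np.pi, 4, endpoint=False)
bank = np.stack([gabor_kernel(theta=t) for t in orientations])
print(bank.shape)
```

Each kernel responds most strongly to texture at one orientation and spatial frequency, which is the general reason Gabor filters are used to pick out scattering structure against speckle.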

Overview: A dynamic SAR image target detection algorithm integrating the spatial and frequency domains is proposed to address several challenges inherent to SAR imagery, including significant feature variability, imbalanced target scales, and high speckle noise in background regions. These challenges reduce detection accuracy and slow inference, which makes real-time use difficult. The proposed method addresses them with three components that target both detection performance and computational efficiency.

The algorithm first employs a dual-stream perception strategy to construct spatial-frequency perception units. This design integrates dynamic receptive fields and fractional-order Gabor transforms, improving the model's ability to capture spatial diversity and frequency scattering features. By expanding the receptive fields adaptively, the algorithm captures both local and global context, leading to more effective extraction of complex patterns in the input data. The fractional-order Gabor transforms further increase the model's sensitivity to fine-grained texture and frequency features, which helps retain important global contextual information. Together, these changes speed up inference by minimizing redundant feature representations, reduce interference from background noise, and decrease the similarity of feature mapping patterns. Consequently, the algorithm reduces missed and false detections, which are typical in cluttered SAR images.

In the next stage, a re-parameterization-based adaptive feature fusion module is introduced to optimize the interaction between multi-scale features. This module integrates features across different scales, enriching feature diversity and mitigating the discrepancies introduced during sampling. The fusion process also highlights the salience of small targets and key frequency information, which are often hard to detect in traditional SAR detection frameworks. This multi-scale feature integration improves detection accuracy, particularly for small and subtle objects, which are crucial in applications such as maritime surveillance and remote sensing.

To further improve the algorithm, a dynamic regression loss function, DY_IoU, is incorporated. This loss function employs adaptive scale penalty factors and a dynamic non-monotonic attention mechanism to address anchor box expansion and positional deviations. By dynamically adjusting the focus during training, the model localizes multi-scale targets more precisely. The loss function also speeds up convergence and reduces the computational burden, keeping the algorithm lightweight enough for practical deployment.

The proposed method was evaluated on two publicly available datasets, SAR-AIRcraft-1.0 and HRSID. Experimental results show that the algorithm achieves mAP@0.5 values of 95.9% and 98.8%, respectively, which are 5.2 and 1.2 percentage points above the baseline model. The proposed approach also outperforms the other compared algorithms. These results indicate that the algorithm not only improves detection accuracy but also exhibits strong robustness and generalization, making it suitable for a range of real-world applications.
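The regression loss described above is IoU-based. The exact form of DY_IoU, including its adaptive scale penalty and non-monotonic attention terms, is not given in this overview, so the following is only a minimal sketch of the plain IoU loss that such losses extend. The helper names `iou` and `iou_loss` and the example boxes are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap rectangle, clamped at zero when disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def iou_loss(pred, target):
    """Plain IoU loss, 1 - IoU. This is NOT DY_IoU, only the common starting point."""
    return 1.0 - iou(pred, target)


# The same 2-pixel horizontal offset applied to a small and a large target.
small_loss = iou_loss((2, 0, 12, 10), (0, 0, 10, 10))
large_loss = iou_loss((2, 0, 102, 100), (0, 0, 100, 100))
print(f"small target loss: {small_loss:.3f}")
print(f"large target loss: {large_loss:.3f}")
```

With plain IoU, an identical offset costs a small box far more than a large one, which is one common motivation for adding scale-aware penalty terms to the loss.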

Table 1. Ablation experiments of the proposed algorithm on the SAR-AIRcraft-1.0 dataset

| YOLOv10 | SFDS | AFF | DY_IoU | Precision/% | Recall/% | mAP@0.5/% | Params/10^6 | GFLOPs |
|---|---|---|---|---|---|---|---|---|
| √ | - | - | - | 86.9 | 89.4 | 90.7 | 2.60 | 8.4 |
| √ | √ | - | - | 87.6 | 92.1 | 92.9 | 1.98 | 6.6 |
| √ | - | √ | - | 82.3 | 90.0 | 90.8 | 3.18 | 9.3 |
| √ | - | - | √ | 84.9 | 90.9 | 91.7 | 2.47 | 7.8 |
| √ | √ | √ | - | 88.8 | 94.0 | 94.5 | 2.58 | 8.1 |
| √ | √ | - | √ | 90.9 | 95.7 | 95.1 | 2.43 | 7.7 |
| √ | - | √ | √ | 86.0 | 92.8 | 93.1 | 2.61 | 8.4 |
| √ | √ | √ | √ | 97.1 | 91.1 | 95.9 | 2.55 | 8.0 |
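To make the ablation easier to read, the mAP@0.5 gain of each configuration over the YOLOv10 baseline row can be computed directly from the values in Table 1. The snippet below is a small arithmetic sketch; the dictionary keys are shorthand labels introduced here, not names from the paper.

```python
# mAP@0.5 values (%) copied from Table 1; the first row is the baseline.
baseline_map = 90.7
ablation_map = {
    "SFDS": 92.9,
    "AFF": 90.8,
    "DY_IoU": 91.7,
    "SFDS+AFF": 94.5,
    "SFDS+DY_IoU": 95.1,
    "AFF+DY_IoU": 93.1,
    "SFDS+AFF+DY_IoU": 95.9,
}

# Gains are absolute differences, i.e. percentage points, rounded to one decimal.
gains = {name: round(value - baseline_map, 1) for name, value in ablation_map.items()}

for name, gain in gains.items():
    print(f"{name:>16}: +{gain:.1f} points")
```

The full configuration gives a gain of 5.2 points, which corresponds to the improvement quoted for SAR-AIRcraft-1.0 and shows that the figure is an absolute difference in mAP@0.5 rather than a relative change.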

Table 2. Results of comparison experiments on the SAR-AIRcraft-1.0 dataset

| Model | Precision/% | Recall/% | mAP50/% | F1/% | GFLOPs |
|---|---|---|---|---|---|
| Faster R-CNN | 79.0 | 68.5 | 75.9 | 73.4 | 137.5 |
| Center-Net | 62.8 | 71.9 | 70.8 | 67.6 | 51.6 |
| YOLOv5s | 90.5 | 81.1 | 86.9 | 85.5 | 16.5 |
| SKG-Net | 85.6 | 75.8 | 70.6 | 59.7 | 120 |
| YOLOv8s | 92.3 | 81.8 | 90.0 | 86.7 | 28.6 |
| YOLOv10s | 96.9 | 89.4 | 90.7 | 88.1 | 8.4 |
| SFS-CNet | 94.7 | 84.5 | 89.9 | 89.3 | 6.9 |
| Ours | 97.1 | 91.1 | 95.9 | 94.0 | 6.2 |

Table 3. Results of comparison experiments on the HRSID dataset

| Model | Precision/% | Recall/% | mAP50/% | F1/% | GFLOPs |
|---|---|---|---|---|---|
| Faster R-CNN | 86.3 | 81.6 | 86.3 | 83.9 | 137.5 |
| Center-Net | 90.5 | 74.1 | 83.3 | 81.5 | 51.6 |
| YOLOv5s | 94.3 | 89.4 | 88.1 | 91.7 | 16.5 |
| SKG-Net | 77.8 | 81.5 | 81.7 | 79.6 | 120 |
| YOLOv8s | 90.1 | 94.4 | 95.9 | 92.2 | 28.6 |
| YOLOv10s | 97.8 | 96.2 | 97.6 | 95.9 | 8.4 |
| SFS-CNet | 88.8 | 95.3 | 92.9 | 91.9 | 6.9 |
| Ours | 96.2 | 97.8 | 98.8 | 97.0 | 6.2 |
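The F1 column in the comparison tables is conventionally the harmonic mean of precision and recall. As a quick sanity check under that assumption, the sketch below recomputes F1 for the "Ours" rows on both datasets. The helper `f1_score` is defined here for illustration. Reported values in other rows need not match this formula exactly if, for example, F1 was averaged per class, a detail that is not stated in this section.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both in the same units, here %)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


# (precision %, recall %) of the proposed method, copied from the comparison tables.
ours = {
    "SAR-AIRcraft-1.0": (97.1, 91.1),
    "HRSID": (96.2, 97.8),
}

for dataset, (precision, recall) in ours.items():
    print(f"{dataset}: F1 = {f1_score(precision, recall):.1f}%")
```

For these two rows the recomputed values round to the reported 94.0% and 97.0%.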
