A 3D reconstruction method based on multi-view fusion and hand-eye coordination for objects beyond the visual field



Abstract

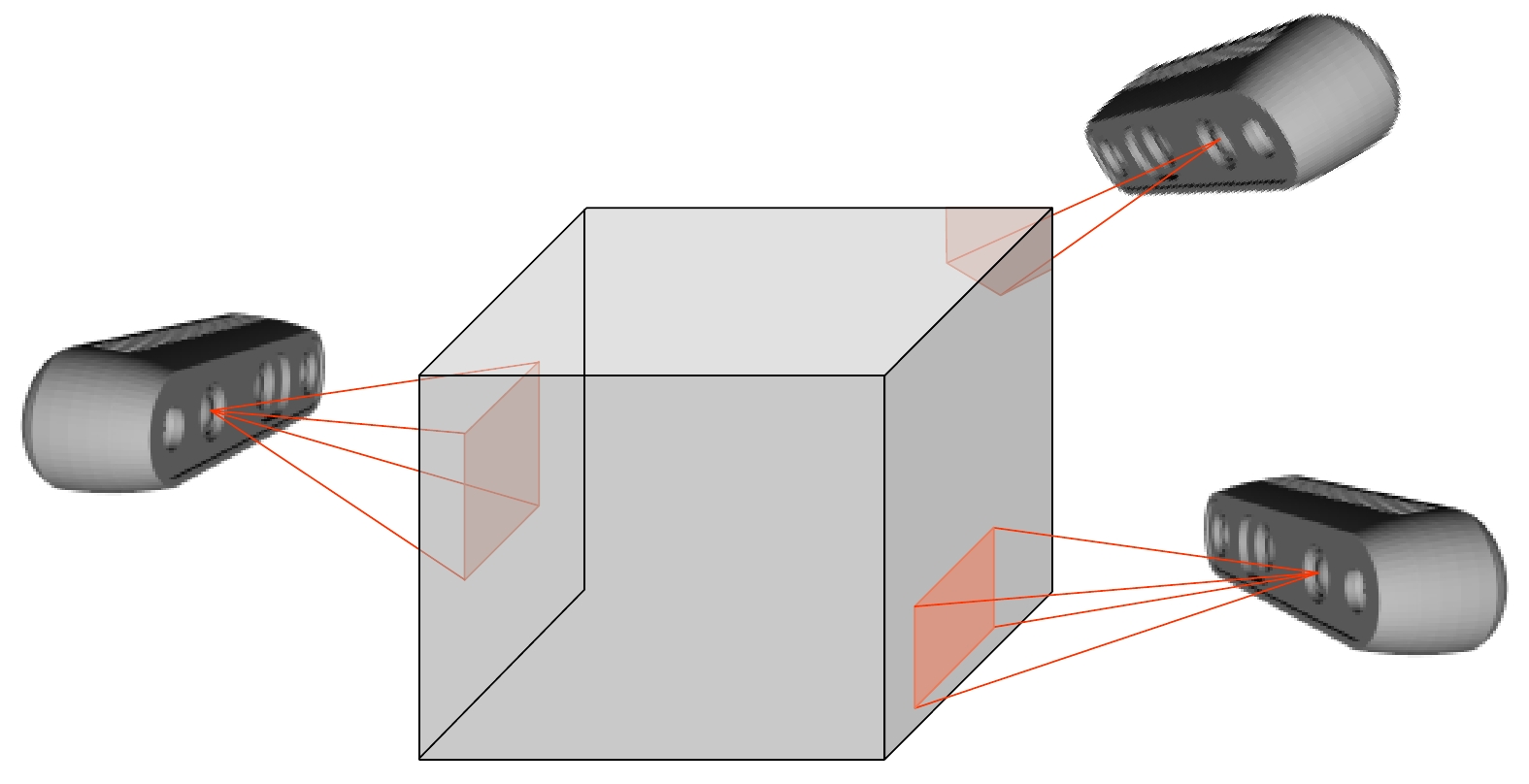

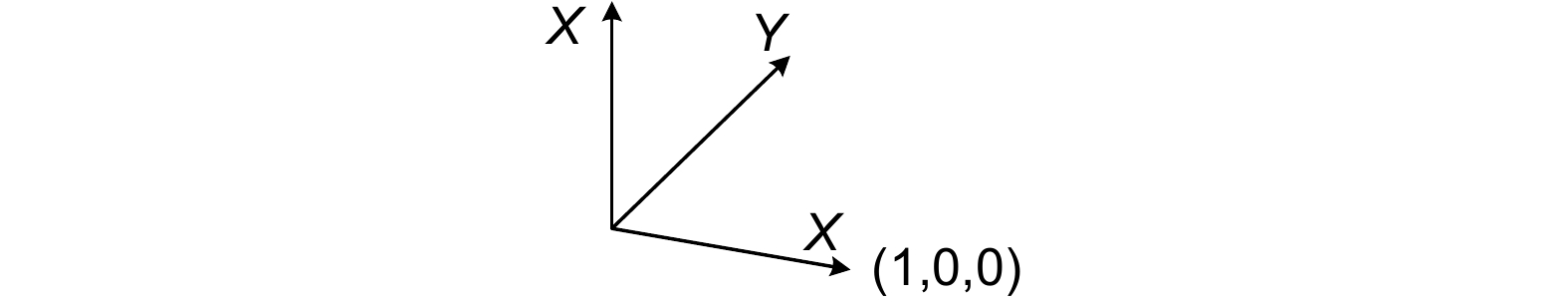

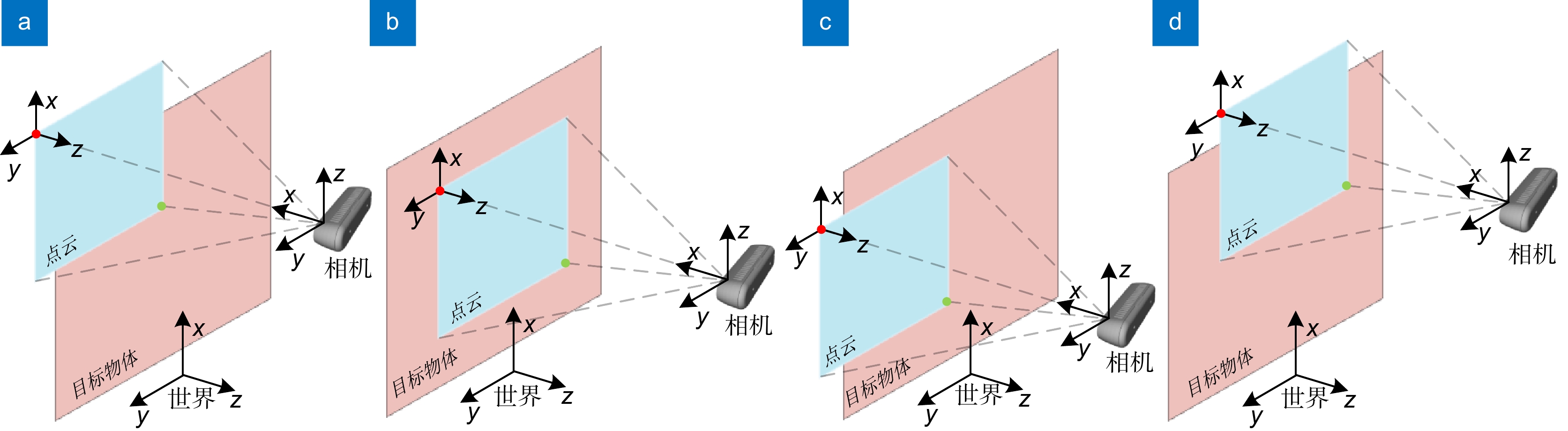

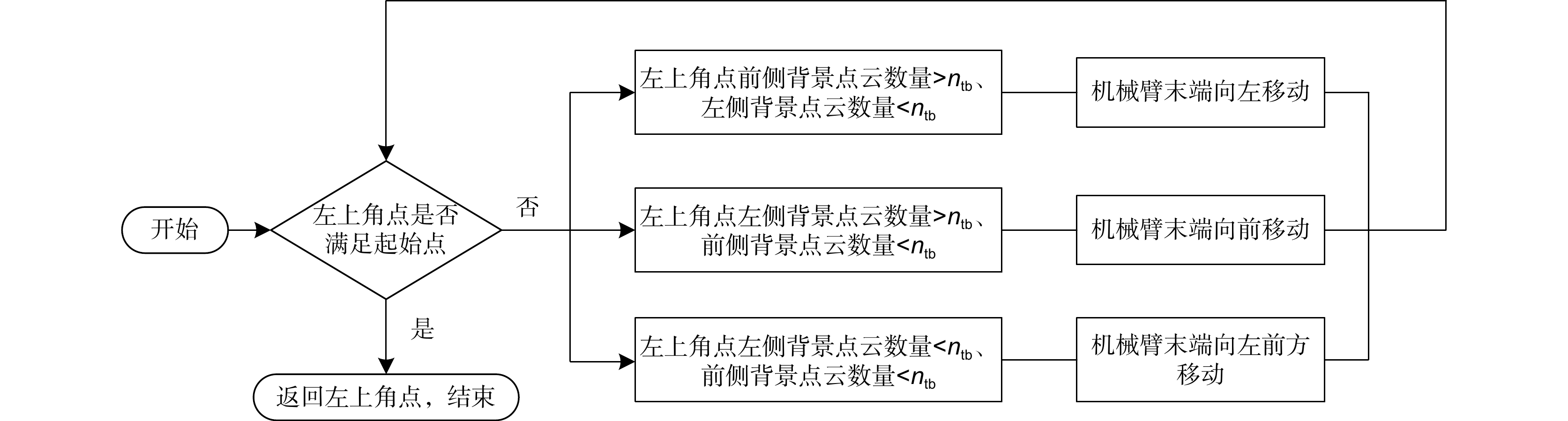

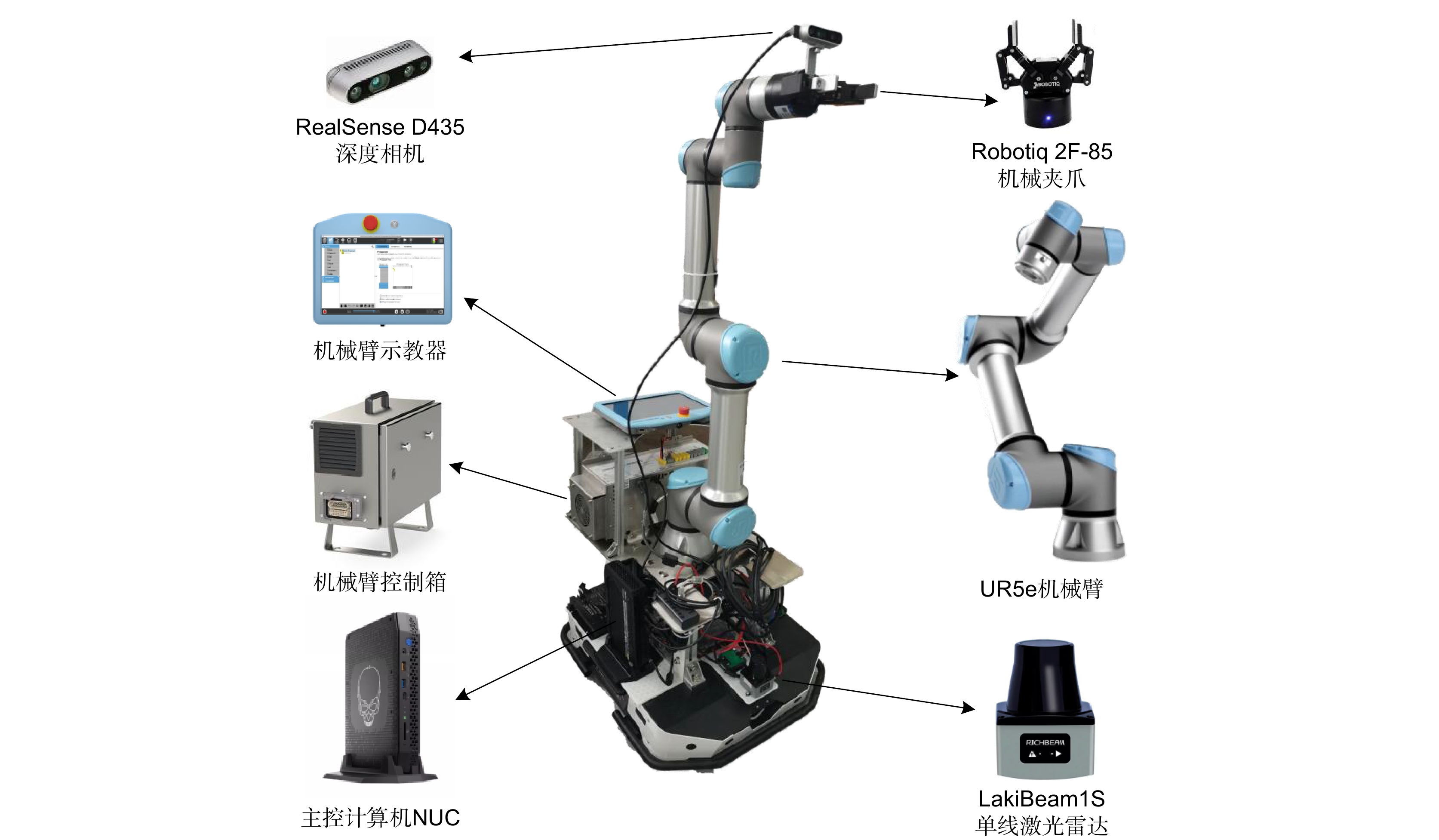

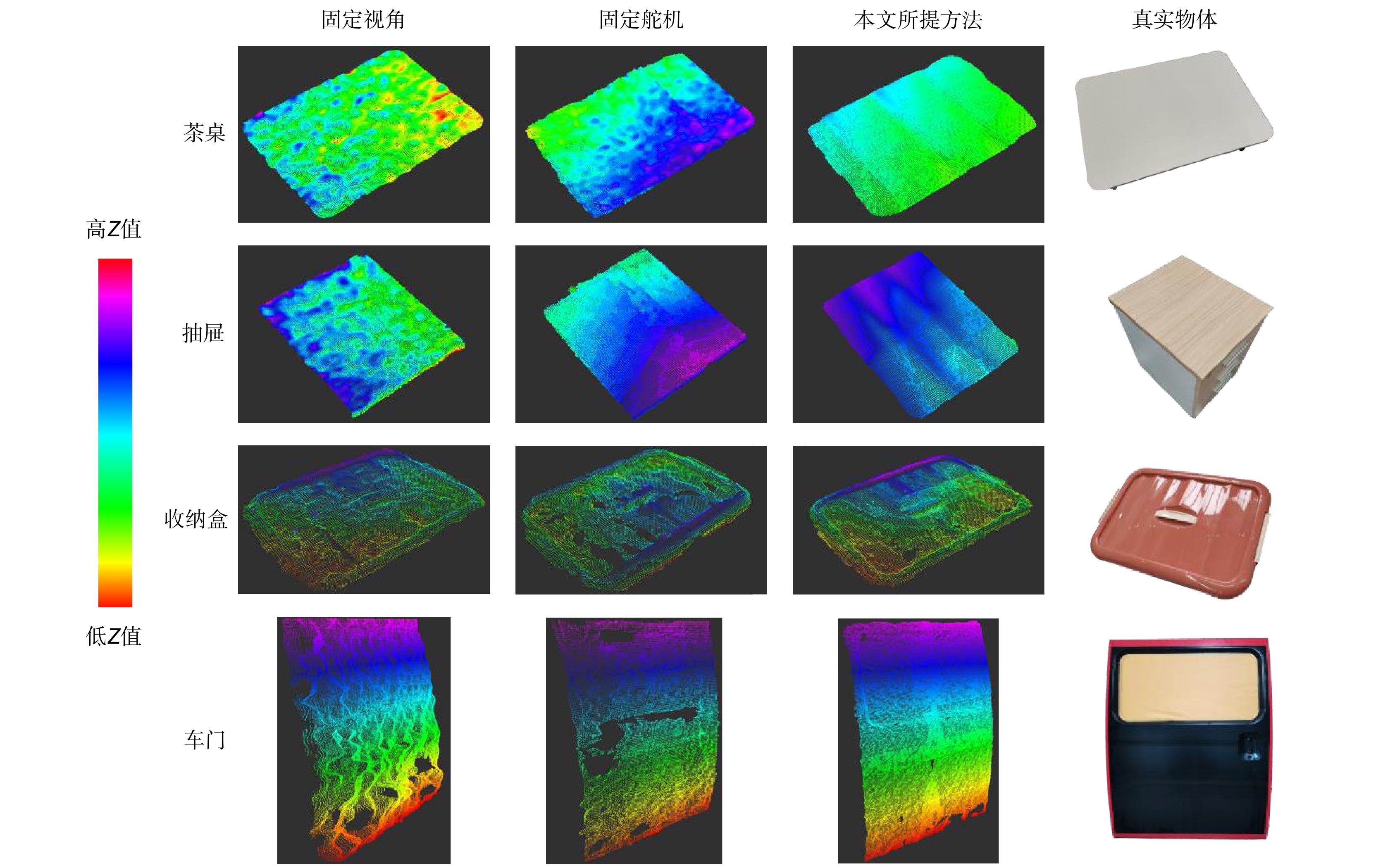

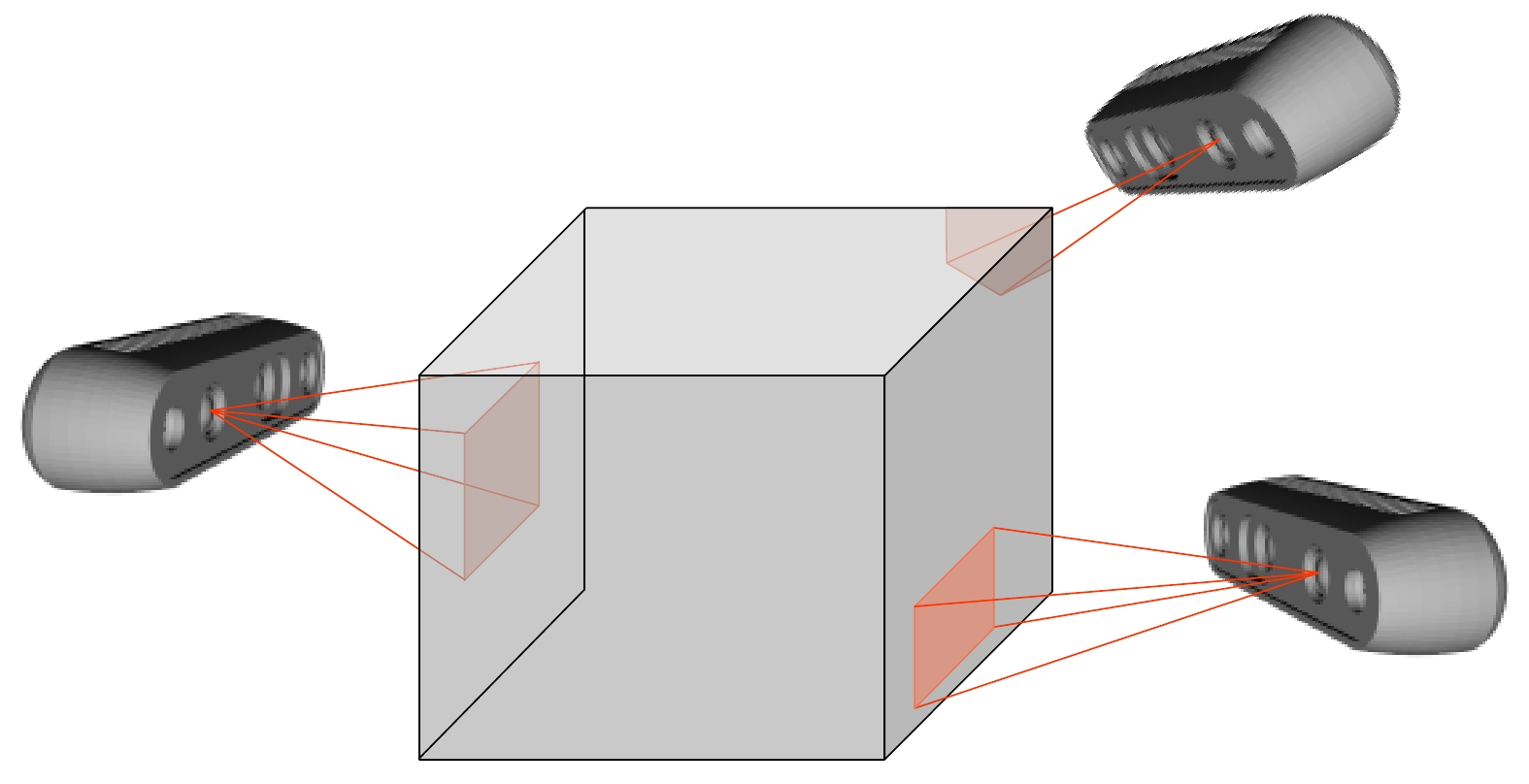

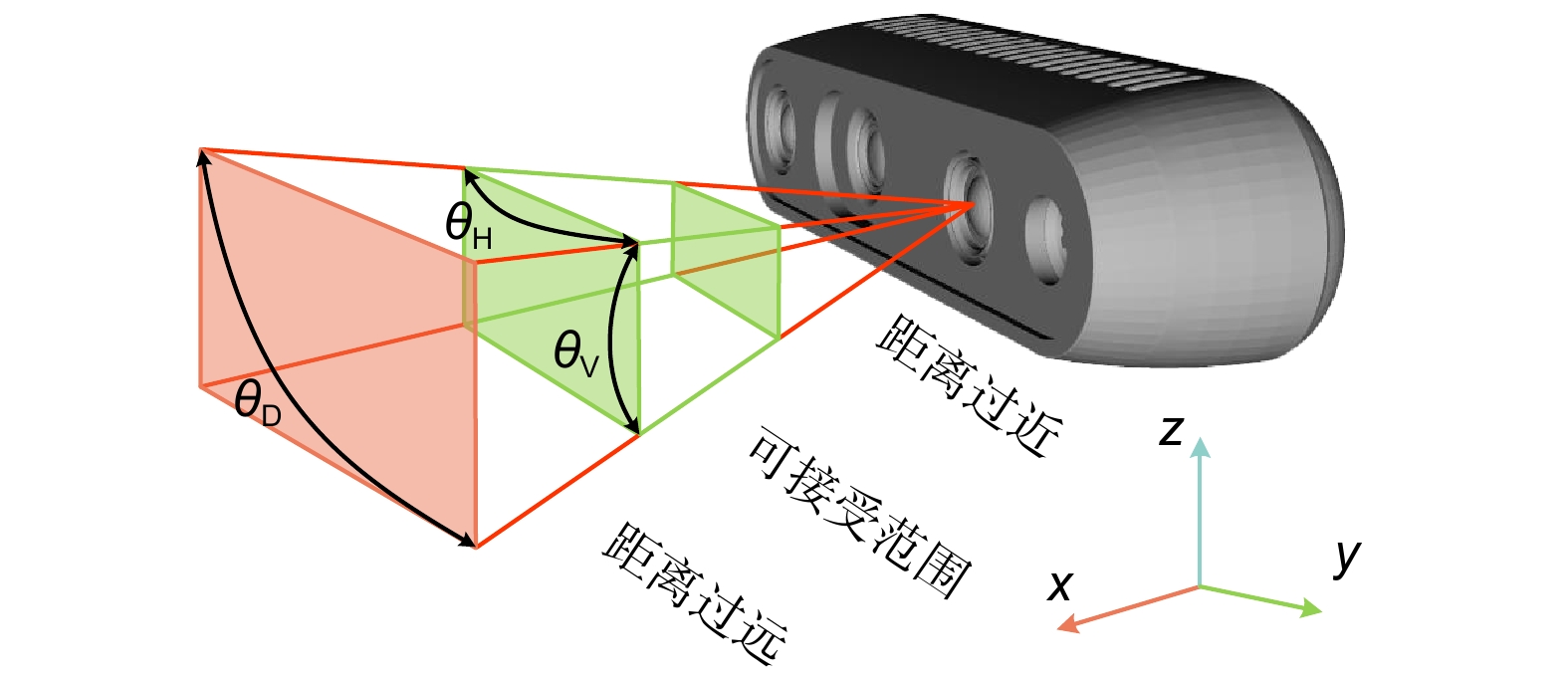

A dynamic depth camera has a limited single-frame field of view, and stitching multiple frames introduces noise. To address these problems, this paper presents a pose measurement and reconstruction method for large 3D targets based on multi-view fusion. The method builds a hierarchical model of the depth camera's performance gradient, predicts multi-view scanning poses from point cloud normal vectors, and fits the target's 3D model with height-constrained RANSAC (HC-RANSAC). A depth camera mounted on the end of a robotic manipulator scans and measures the target from multiple angles, and the sampled multi-view data are used to reconstruct the target model in a local reference coordinate system. Experimental results show that, compared with fixed depth cameras and classical reconstruction approaches based on pan-tilt vision, the proposed approach offers a larger reconstruction field of view with good reconstruction accuracy. It can reconstruct large targets at close range, resolving the difficulty of balancing field of view against accuracy.


Keywords:

- machine vision

- hand-eye collaboration

- multi-view fusion

- pose measurement


Overview

To address the limited single-frame field of view of a dynamic depth camera and the systematic error of multi-frame stitching in complex environments with strong interference, this paper presents a multi-view fusion-based method for pose measurement and reconstruction of large 3D targets. Classical 3D reconstruction relies heavily on manual teaching: an operator teaches the sensor's scanning points, scanning poses, and scanning path, and the robot arm then follows the taught path through hand-eye coordination. This workflow is complex, generalizes poorly, makes efficient global optimization difficult, and depends strongly on the operator's subjective experience. Because traditional 3D reconstruction methods adapt poorly to weak-feature objects in complex environments, this study has the robot manipulator plan its scanning path autonomously from the object model.

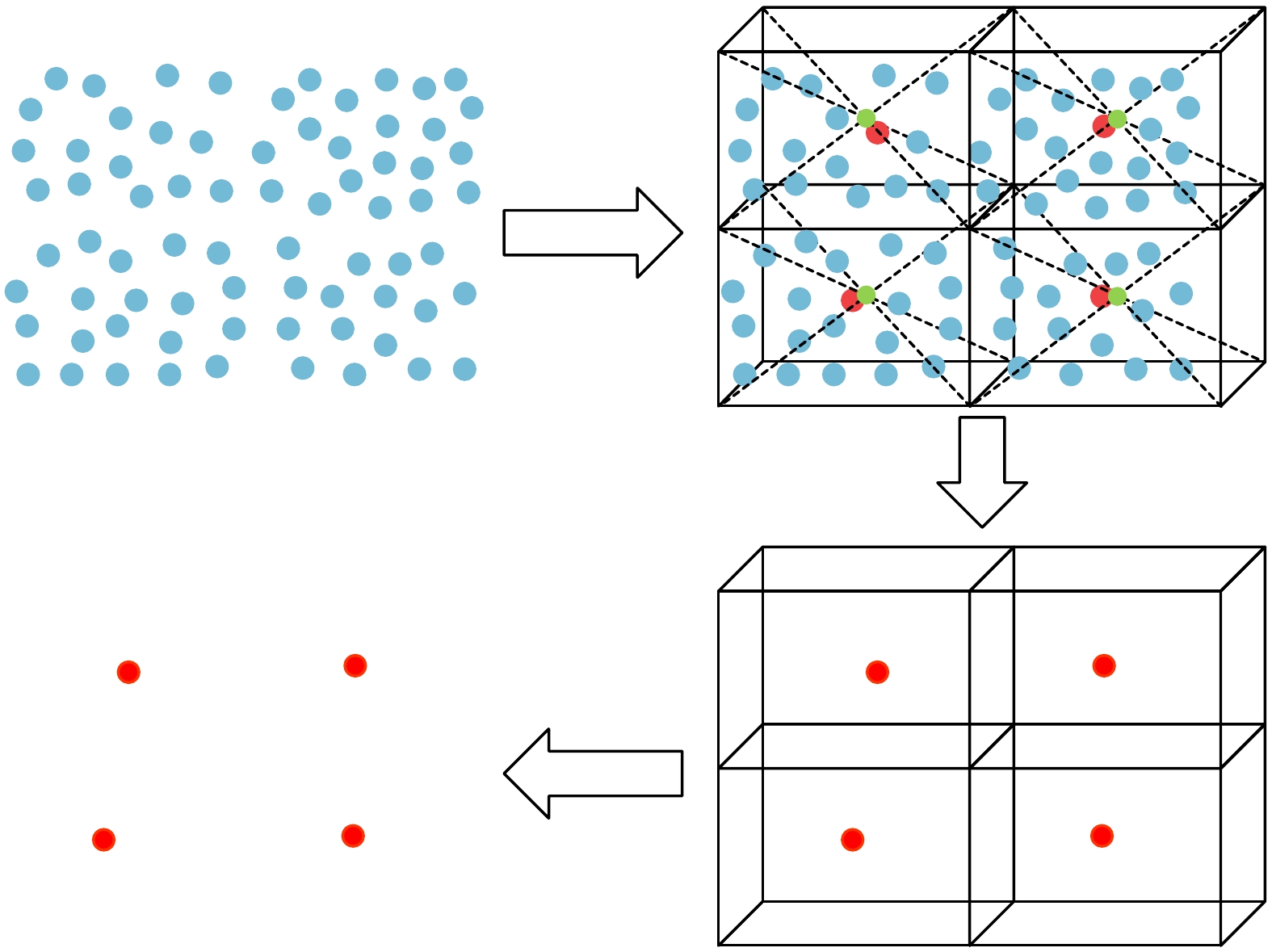

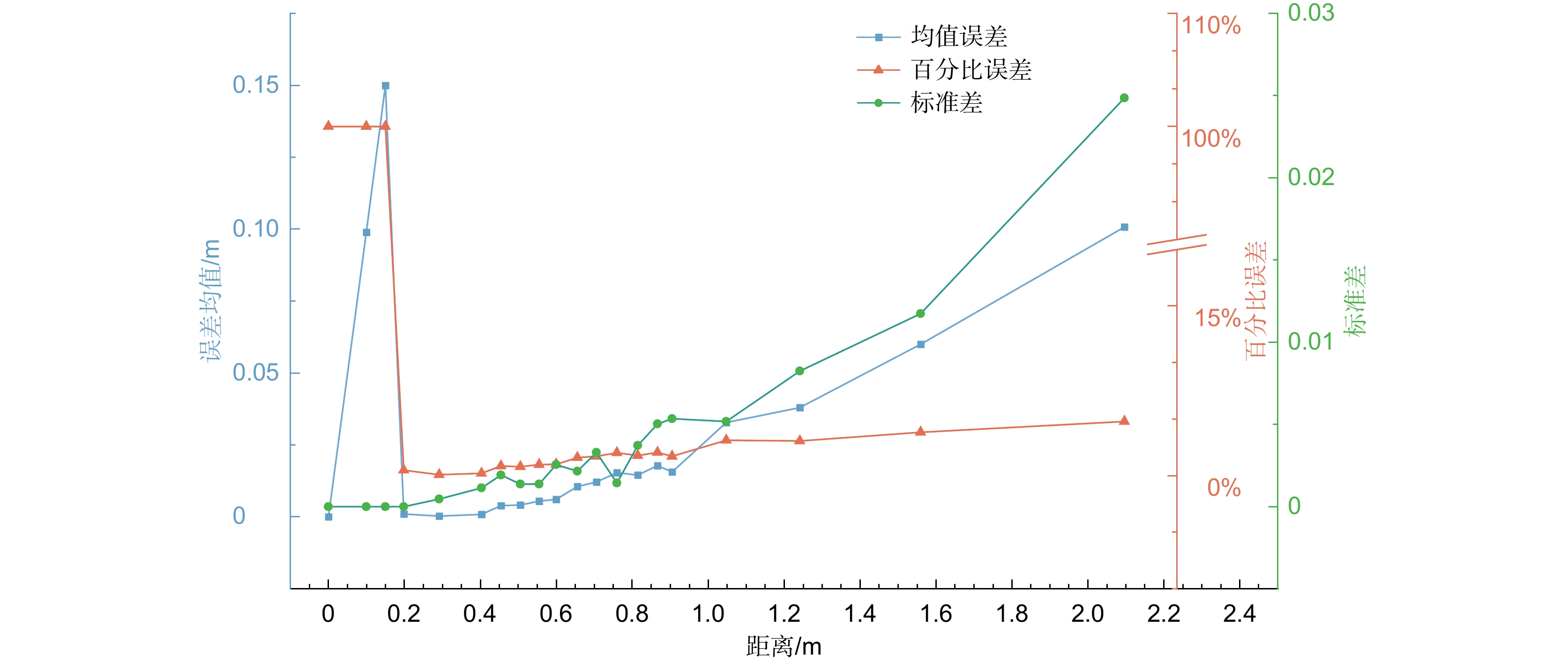

The proposed method uses the manipulator's high-precision repeat positioning to build a performance gradient layered model of the depth camera, and controls the image capture distance during 3D reconstruction so that both field of view and measurement accuracy are taken into account. Multi-view scanning pose prediction based on point cloud normal vectors preserves an overlapping area between adjacent point cloud frames, which provides the basis for the subsequent ICP fine registration. Height-constrained RANSAC (HC-RANSAC) is used to fit the target 3D model, and several point cloud filtering methods are combined to further optimize the model: improved barycentric voxel filtering, statistical filtering, and moving least squares upsampling.
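This section does not spell out the normal-based pose prediction rule, so the following is only a minimal sketch under stated assumptions: the local surface normal is estimated by PCA over a patch of points (the covariance eigenvector with the smallest eigenvalue), and the next camera pose is placed a fixed standoff along that normal, looking back at the patch centroid. The function names `estimate_normal` and `view_pose_from_patch` and the 0.4 m default standoff (picked inside the 0.15 to 0.6 m acceptable range of Table 2) are hypothetical, and the overlap constraint between adjacent views is not modeled.

```python
import numpy as np


def estimate_normal(patch: np.ndarray) -> np.ndarray:
    """Unit normal of a local patch (N x 3): eigenvector of the covariance
    matrix belonging to the smallest eigenvalue (PCA)."""
    centered = patch - patch.mean(axis=0)
    cov = centered.T @ centered / len(patch)
    _, eigvecs = np.linalg.eigh(cov)  # eigh returns eigenvalues in ascending order
    return eigvecs[:, 0]


def view_pose_from_patch(patch, standoff_m=0.4, sensor_origin=None):
    """Hypothetical next-view pose: camera centre placed standoff_m along the
    patch normal, optical (z) axis pointing back at the patch centroid.
    Returns (position, 3x3 rotation whose columns are the camera x, y, z axes)."""
    centroid = patch.mean(axis=0)
    n = estimate_normal(patch)
    # Flip the normal toward the current sensor so the new view stays on the visible side.
    if sensor_origin is not None and np.dot(np.asarray(sensor_origin) - centroid, n) < 0:
        n = -n
    position = centroid + standoff_m * n
    z_axis = -n
    # Any helper vector not parallel to z_axis completes a right-handed frame.
    helper = np.array([0.0, 0.0, 1.0]) if abs(z_axis[2]) < 0.9 else np.array([1.0, 0.0, 0.0])
    x_axis = np.cross(helper, z_axis)
    x_axis /= np.linalg.norm(x_axis)
    y_axis = np.cross(z_axis, x_axis)
    return position, np.column_stack([x_axis, y_axis, z_axis])


if __name__ == "__main__":
    # A flat 3 x 3 patch in the plane z = 0, with the sensor currently above it.
    patch = np.array([[x, y, 0.0] for x in (0.0, 0.1, 0.2) for y in (0.0, 0.1, 0.2)])
    pos, rot = view_pose_from_patch(patch, sensor_origin=[0.1, 0.1, 1.0])
    print(pos)        # camera 0.4 m above the patch centre
    print(rot[:, 2])  # optical axis pointing down at the patch
```

On real data the patch would come from a neighbourhood query on the current frame, and the standoff would be chosen from the camera's acceptable ranging tier rather than fixed.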

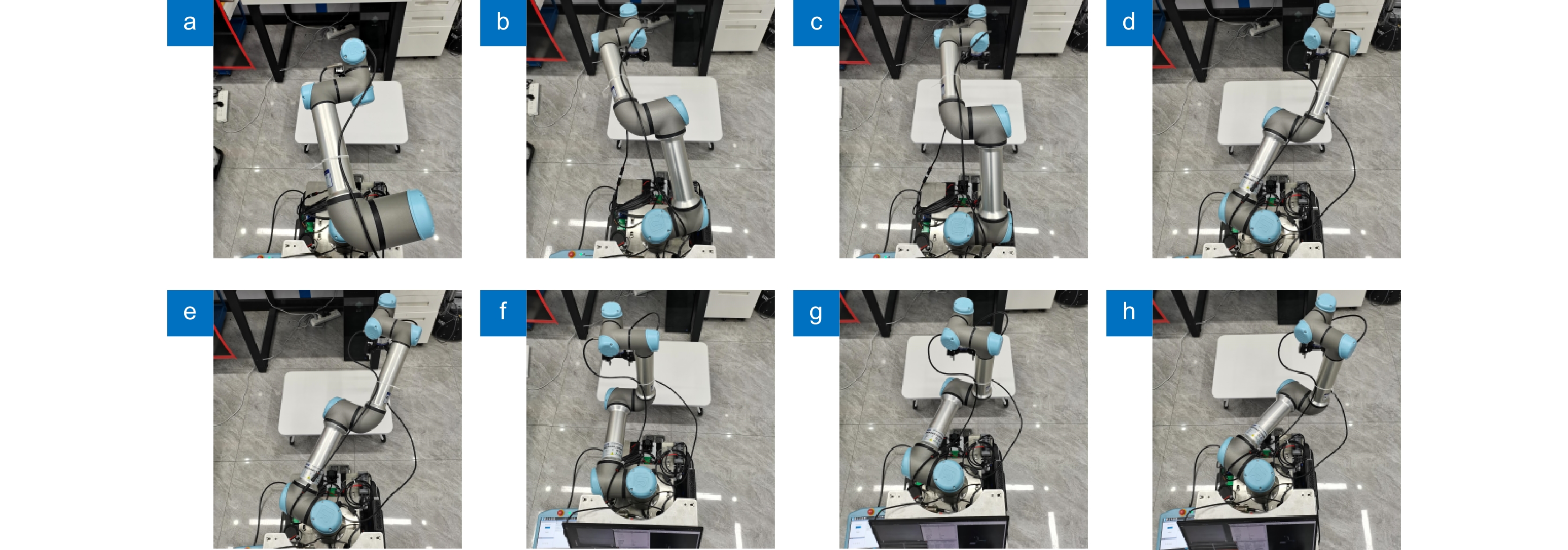

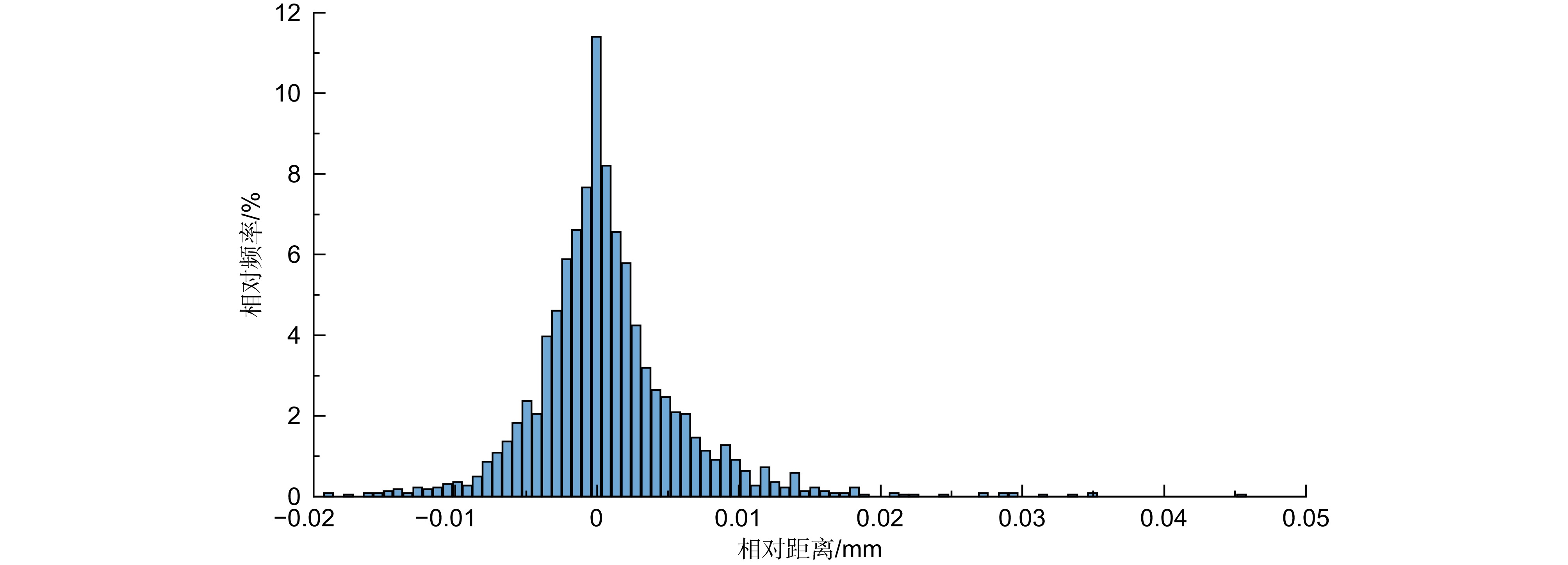

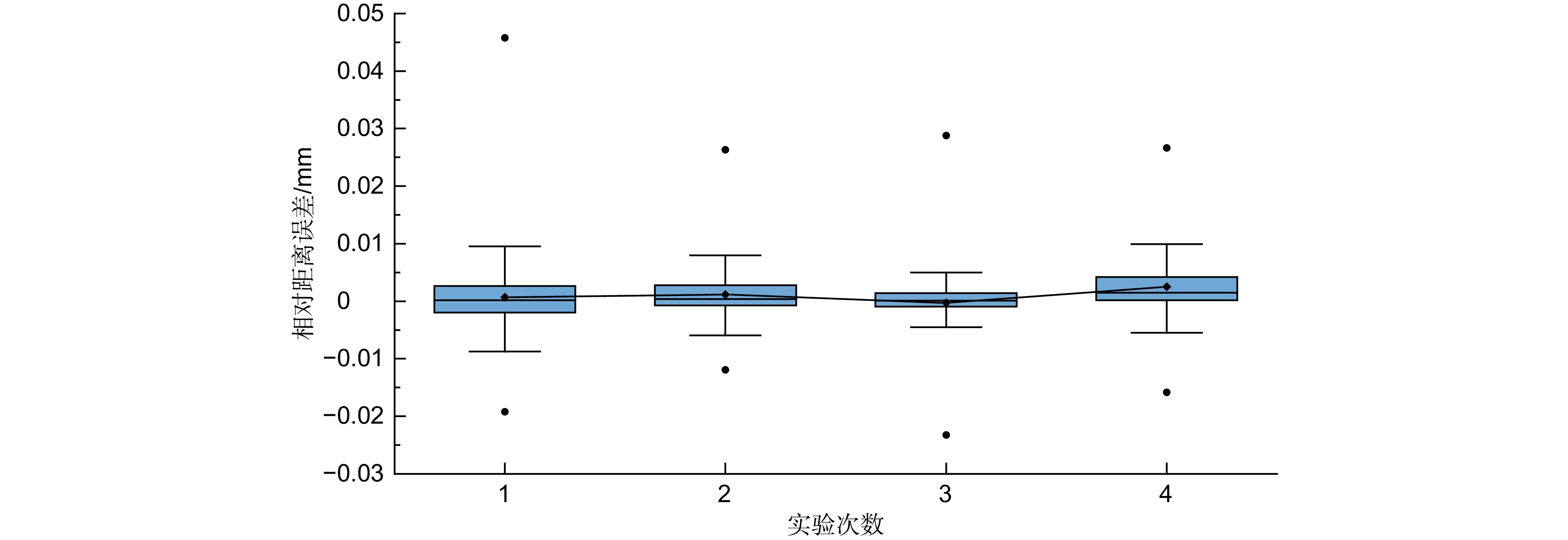
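The overview names ICP fine registration but not the variant used. As an illustration only, here is textbook point-to-point ICP: nearest-neighbour correspondences, then the SVD-based (Kabsch) rigid transform that minimizes the summed squared pairing distance, repeated until the RMS error stops changing. The names `best_rigid_transform` and `icp` and the default `iterations`/`tol` values are hypothetical, and the brute-force neighbour search only suits small clouds; practical pipelines use a k-d tree.

```python
import numpy as np


def best_rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Kabsch / SVD solution for R, t minimising sum ||R @ src_i + t - dst_i||^2
    over already-paired points (both N x 3)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)  # 3 x 3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Correct a possible reflection so that det(R) = +1.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(source: np.ndarray, target: np.ndarray, iterations: int = 30, tol: float = 1e-12):
    """Point-to-point ICP aligning source onto target.
    Returns (R, t, rms) such that target is approximately source @ R.T + t."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = source.copy()
    prev_rms = rms = np.inf
    for _ in range(iterations):
        # Brute-force nearest neighbours: O(N*M) memory, small clouds only.
        d2 = ((current[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        matched = target[d2.argmin(axis=1)]
        R, t = best_rigid_transform(current, matched)
        current = current @ R.T + t
        # Accumulate the pose: new point = R @ (R_total @ p + t_total) + t.
        R_total, t_total = R @ R_total, R @ t_total + t
        # RMS distance to the matched points after applying this step.
        rms = np.sqrt(((current - matched) ** 2).sum(axis=1).mean())
        if abs(prev_rms - rms) < tol:
            break
        prev_rms = rms
    return R_total, t_total, rms
```

ICP only converges from a reasonable initial guess, which in this setting would come from the manipulator's known end-effector pose and the hand-eye calibration; the overlap kept between adjacent views is what gives the nearest-neighbour step correct pairs.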

Experiments were carried out under laboratory conditions, with 3D reconstruction tests performed on a tea table, a drawer, a door, and a storage box. The alignment error in the overlapping region of adjacent point clouds, which is directly related to model accuracy, is taken as the accuracy evaluation criterion; the results show that all errors are within 0.01 mm. Compared with a depth camera at a fixed viewing angle or 3D reconstruction with a depth camera coupled to a fixed 2-DOF servo (pan-tilt) mount, the proposed method offers a larger reconstruction field of view with good reconstruction accuracy and can reconstruct large objects at close range, addressing the difficulty of balancing field of view and accuracy. The method is suited to large-object perception with a mobile manipulator and to large-target reconstruction in narrow spaces.
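The overlap alignment error is used above as the accuracy criterion, but no formula is given in this section. One plausible reading, assumed for this sketch, is the mean nearest-neighbour distance between two registered adjacent clouds, counting only points whose nearest neighbour is close enough to lie in the overlap. The function name `overlap_alignment_error` and the `max_pair_dist` cutoff are hypothetical.

```python
import numpy as np


def overlap_alignment_error(cloud_a: np.ndarray, cloud_b: np.ndarray, max_pair_dist: float = 0.005):
    """Hypothetical metric: mean nearest-neighbour distance from cloud_a to cloud_b,
    restricted to points of cloud_a whose nearest neighbour is within max_pair_dist
    (treated as the overlapping region). Returns (mean_error, overlap_fraction)."""
    d2 = ((cloud_a[:, None, :] - cloud_b[None, :, :]) ** 2).sum(axis=2)
    nn_dist = np.sqrt(d2.min(axis=1))
    in_overlap = nn_dist <= max_pair_dist
    if not in_overlap.any():
        return float("nan"), 0.0
    return float(nn_dist[in_overlap].mean()), float(in_overlap.mean())


if __name__ == "__main__":
    # Cloud A: a 10 x 10 planar grid. Cloud B: the right half of A, offset by 1 mm in z.
    a = np.array([[i * 0.1, j * 0.1, 0.0] for i in range(10) for j in range(10)])
    b = a[a[:, 0] > 0.45] + [0.0, 0.0, 0.001]
    err, frac = overlap_alignment_error(a, b)
    print(f"mean error {err:.6f} m over {frac:.0%} of cloud A")
```

The cutoff matters: too large and non-overlapping points inflate the error, too small and genuine misalignment is silently excluded, so the overlap fraction is returned alongside the error.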


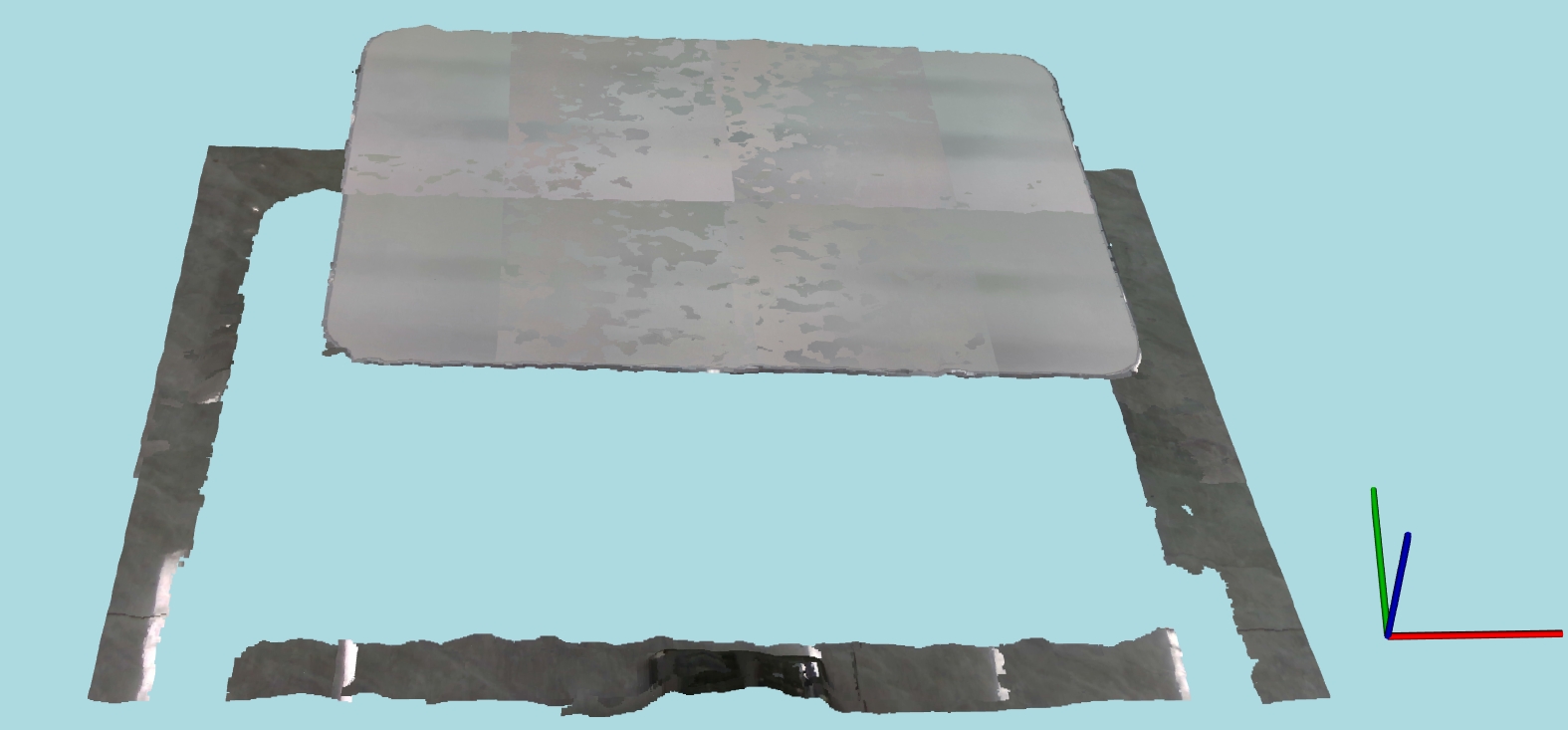

Figure 12. Filtering results of the proposed method for point clouds. (a) Downsampling result; (b) HC-RANSAC result; (c) Statistical filtering result; (d) Moving least squares result
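Panels (a) and (c) of Figure 12 correspond to downsampling and statistical filtering. The paper's improved barycentric voxel filter and its parameter values are not described in this section, so the sketch below shows only the plain versions: every occupied voxel is replaced by the centroid of its points, and statistical outlier removal discards points whose mean distance to their `k` nearest neighbours exceeds the cloud-wide mean of that statistic by more than `std_ratio` standard deviations. The function names and the `voxel_size`, `k`, and `std_ratio` values are assumptions.

```python
import numpy as np


def voxel_centroid_downsample(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Replace all points falling in the same voxel by their centroid (barycentre)."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    # inverse[i] is the index of the voxel containing point i (voxels sorted by key).
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # guard against shape differences across NumPy versions
    n_vox = inverse.max() + 1
    sums = np.zeros((n_vox, 3))
    np.add.at(sums, inverse, points)
    counts = np.bincount(inverse, minlength=n_vox).astype(float)
    return sums / counts[:, None]


def statistical_outlier_removal(points: np.ndarray, k: int = 8, std_ratio: float = 1.0) -> np.ndarray:
    """Keep points whose mean k-NN distance is at most mean + std_ratio * std
    of that statistic over the whole cloud (brute force, small clouds only)."""
    d = np.sqrt(((points[:, None, :] - points[None, :, :]) ** 2).sum(axis=2))
    np.fill_diagonal(d, np.inf)  # a point is not its own neighbour
    mean_knn = np.sort(d, axis=1)[:, :k].mean(axis=1)
    threshold = mean_knn.mean() + std_ratio * mean_knn.std()
    return points[mean_knn <= threshold]


if __name__ == "__main__":
    # A 5 x 5 planar grid plus one stray point far above it.
    grid = np.array([[i * 0.1, j * 0.1, 0.0] for i in range(5) for j in range(5)])
    cloud = np.vstack([grid, [[0.2, 0.2, 5.0]]])
    print(len(statistical_outlier_removal(cloud, k=4)), "points kept of", len(cloud))
    print(voxel_centroid_downsample(grid, voxel_size=0.25).shape)
```

Downsampling first keeps the quadratic neighbour search in the outlier step affordable; with production-sized clouds both steps would use a spatial index instead.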

Table 1. Experimental results of measurement error analysis

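| Metric | Exp. I | Exp. II | Exp. III | Exp. IV | Exp. V | Exp. VI | Exp. VII |
|---|---|---|---|---|---|---|---|
| $d_1$/m | 0 | 0.199 | 0.291 | 0.404 | 0.501 | 0.692 | 1.012 |
| $d_2$/m | 0 | 0.199 | 0.290 | 0.400 | 0.502 | 0.697 | 1.006 |
| $d_3$/m | 0 | 0.199 | 0.291 | 0.403 | 0.499 | 0.695 | 1.016 |
| $d_4$/m | 0 | 0.199 | 0.291 | 0.403 | 0.502 | 0.695 | 1.017 |
| $d_5$/m | 0 | 0.199 | 0.290 | 0.401 | 0.501 | 0.694 | 1.014 |
| $d_6$/m | 0 | 0.199 | 0.291 | 0.402 | 0.499 | 0.696 | 1.014 |
| $d_7$/m | 0 | 0.199 | 0.291 | 0.402 | 0.501 | 0.688 | 1.026 |
| $d_8$/m | 0 | 0.199 | 0.291 | 0.402 | 0.502 | 0.695 | 1.017 |
| $d_9$/m | 0 | 0.199 | 0.291 | 0.401 | 0.503 | 0.690 | 1.009 |
| $d_{10}$/m | 0 | 0.199 | 0.290 | 0.403 | 0.499 | 0.687 | 1.011 |
| $d_{\mathrm{r}}$/m | 0.15 | 0.198 | 0.291 | 0.403 | 0.505 | 0.705 | 1.047 |
| $\bar d$/m | 0 | 0.199 | 0.2907 | 0.4021 | 0.5009 | 0.6929 | 1.0142 |
| $\Delta d$/m | 0.15 | 0.001 | 0.0003 | 0.0009 | 0.0041 | 0.0121 | 0.0328 |
| $e$/% | 100.00 | 0.51 | 0.10 | 0.22 | 0.81 | 1.72 | 3.13 |
| $\sigma$ | 0 | 0 | 0.00046 | 0.00114 | 0.00138 | 0.0033 | 0.00517 |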
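The derived rows of Table 1 can be reproduced from the ten readings per experiment if one assumes that $\bar d$ is the arithmetic mean, $\Delta d = |\bar d - d_{\mathrm{r}}|$, $e = \Delta d / d_{\mathrm{r}} \times 100\%$, and $\sigma$ is the population standard deviation of the readings. These formulas are inferred from the numbers rather than stated in this section; the sketch below recomputes every column and matches the tabulated values to within rounding (the largest gap is about $5\times10^{-6}$ in $\sigma$ for Experiment V). The names `READINGS`, `REFERENCE`, and `summarize` are hypothetical.

```python
from statistics import fmean, pstdev

# Ten repeated distance readings d_i (m) per experiment, transcribed from Table 1.
READINGS = {
    "I": [0.0] * 10,
    "II": [0.199] * 10,
    "III": [0.291, 0.290, 0.291, 0.291, 0.290, 0.291, 0.291, 0.291, 0.291, 0.290],
    "IV": [0.404, 0.400, 0.403, 0.403, 0.401, 0.402, 0.402, 0.402, 0.401, 0.403],
    "V": [0.501, 0.502, 0.499, 0.502, 0.501, 0.499, 0.501, 0.502, 0.503, 0.499],
    "VI": [0.692, 0.697, 0.695, 0.695, 0.694, 0.696, 0.688, 0.695, 0.690, 0.687],
    "VII": [1.012, 1.006, 1.016, 1.017, 1.014, 1.014, 1.026, 1.017, 1.009, 1.011],
}
# Reference distances d_r (m) from Table 1.
REFERENCE = {"I": 0.15, "II": 0.198, "III": 0.291, "IV": 0.403,
             "V": 0.505, "VI": 0.705, "VII": 1.047}


def summarize(readings, d_ref):
    """Return (mean d_bar, absolute error delta_d, relative error e in %, population sigma)."""
    d_bar = fmean(readings)
    delta = abs(d_bar - d_ref)
    return d_bar, delta, delta / d_ref * 100.0, pstdev(readings)


if __name__ == "__main__":
    for exp, values in READINGS.items():
        d_bar, delta, e_pct, sigma = summarize(values, REFERENCE[exp])
        print(f"{exp:>3}: d_bar={d_bar:.4f} m  dd={delta:.4f} m  e={e_pct:.2f} %  sigma={sigma:.5f}")
```

Experiment I shows why the near tier exists: at $d_{\mathrm{r}} = 0.15$ m every reading is 0, so $\Delta d$ equals the full distance and $e$ is 100%.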

Table 2. Hierarchical error of ranging performance

| Ranging performance tier | Too close | Acceptable range | Too far |
|---|---|---|---|
| Distance threshold/m | (0, 0.15] | (0.15, 0.6) | [0.6, ∞) |
| $\Delta d$/m | $\approx d_i$ | ≤0.005 | ≥0.01 |
| $e$/% | $\approx$100 | ≤1 | >1 |
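Table 2 condenses the errors of Table 1 into three distance tiers. A minimal lookup for those tiers is sketched below; the function and constant names are hypothetical. Applied to the reference distances of Table 1, it places Experiment I in the too-close tier, Experiments II to V in the acceptable range, and Experiments VI and VII in the too-far tier, which agrees with their measured errors ($e \le 1\%$ and $\Delta d \le 0.005$ m for II to V; $e > 1\%$ and $\Delta d \ge 0.01$ m for VI and VII).

```python
# Distance tiers from Table 2, in metres. Names are hypothetical.
NEAR_LIMIT_M = 0.15  # (0, 0.15]  -> too close: readings collapse, e is about 100 %
FAR_LIMIT_M = 0.6    # [0.6, inf) -> too far: e > 1 %


def ranging_tier(distance_m: float) -> str:
    """Classify a camera-to-target distance into the three tiers of Table 2."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    if distance_m <= NEAR_LIMIT_M:
        return "too close"
    if distance_m < FAR_LIMIT_M:
        return "acceptable"
    return "too far"


if __name__ == "__main__":
    # Reference distances d_r of Experiments I to VII from Table 1.
    for d_r in (0.15, 0.198, 0.291, 0.403, 0.505, 0.705, 1.047):
        print(f"{d_r:.3f} m -> {ranging_tier(d_r)}")
```

A scan planner could use such a check to reject candidate viewpoints whose standoff falls outside the acceptable tier before computing the arm trajectory.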

Table 3. Statistical values of distance in each direction

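| Quantity | Mean/m | Mode/m | Standard deviation |
|---|---|---|---|
| Relative distance | $3.69\times10^{-6}$ | $5.29\times10^{-6}$ | $4.20\times10^{-6}$ |
| Relative distance, X direction | $5.39\times10^{-5}$ | $-1.48\times10^{-5}$ | $7.57\times10^{-4}$ |
| Relative distance, Y direction | $6.21\times10^{-5}$ | $1.45\times10^{-4}$ | $8.13\times10^{-4}$ |
| Relative distance, Z direction | $3.29\times10^{-4}$ | $-1.54\times10^{-3}$ | $1.53\times10^{-3}$ |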
