Feature coordination and fine-grained perception of small targets in remote sensing images



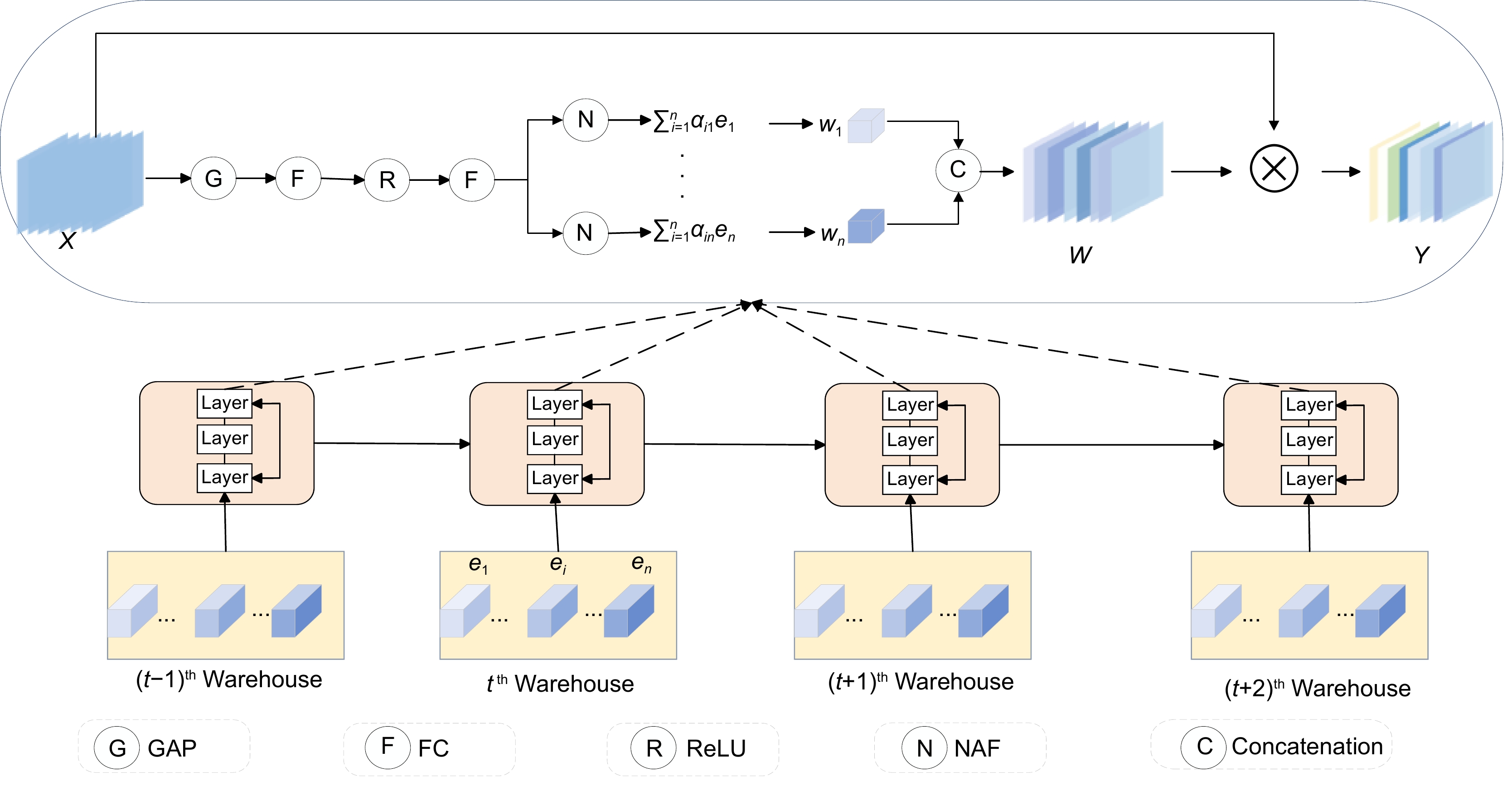

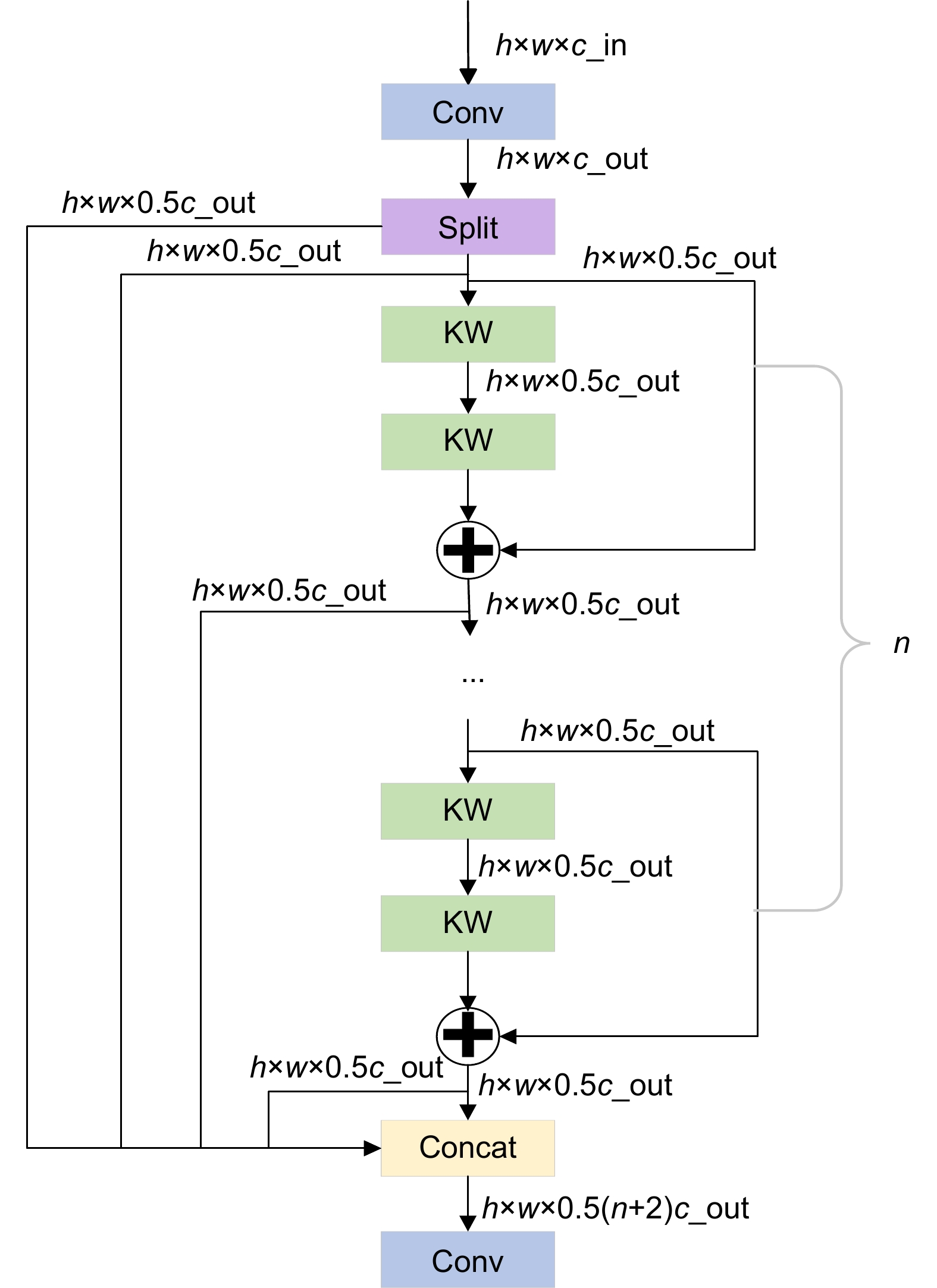

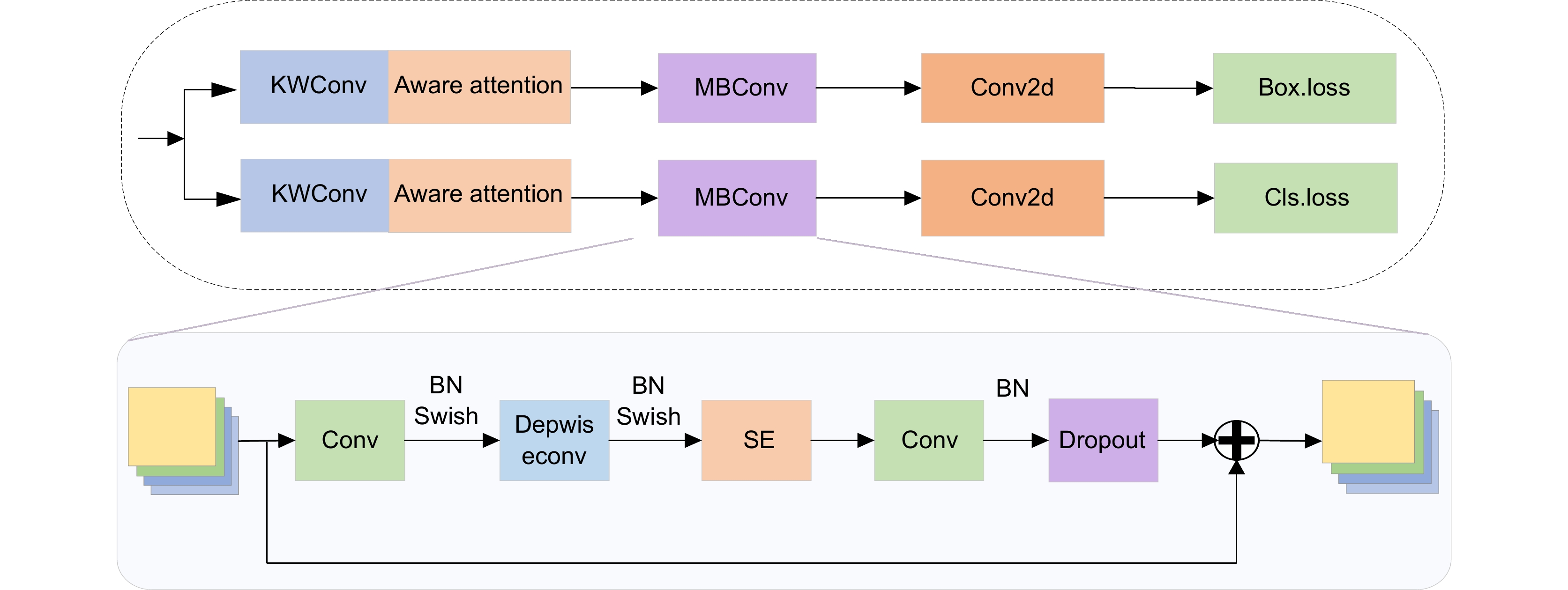

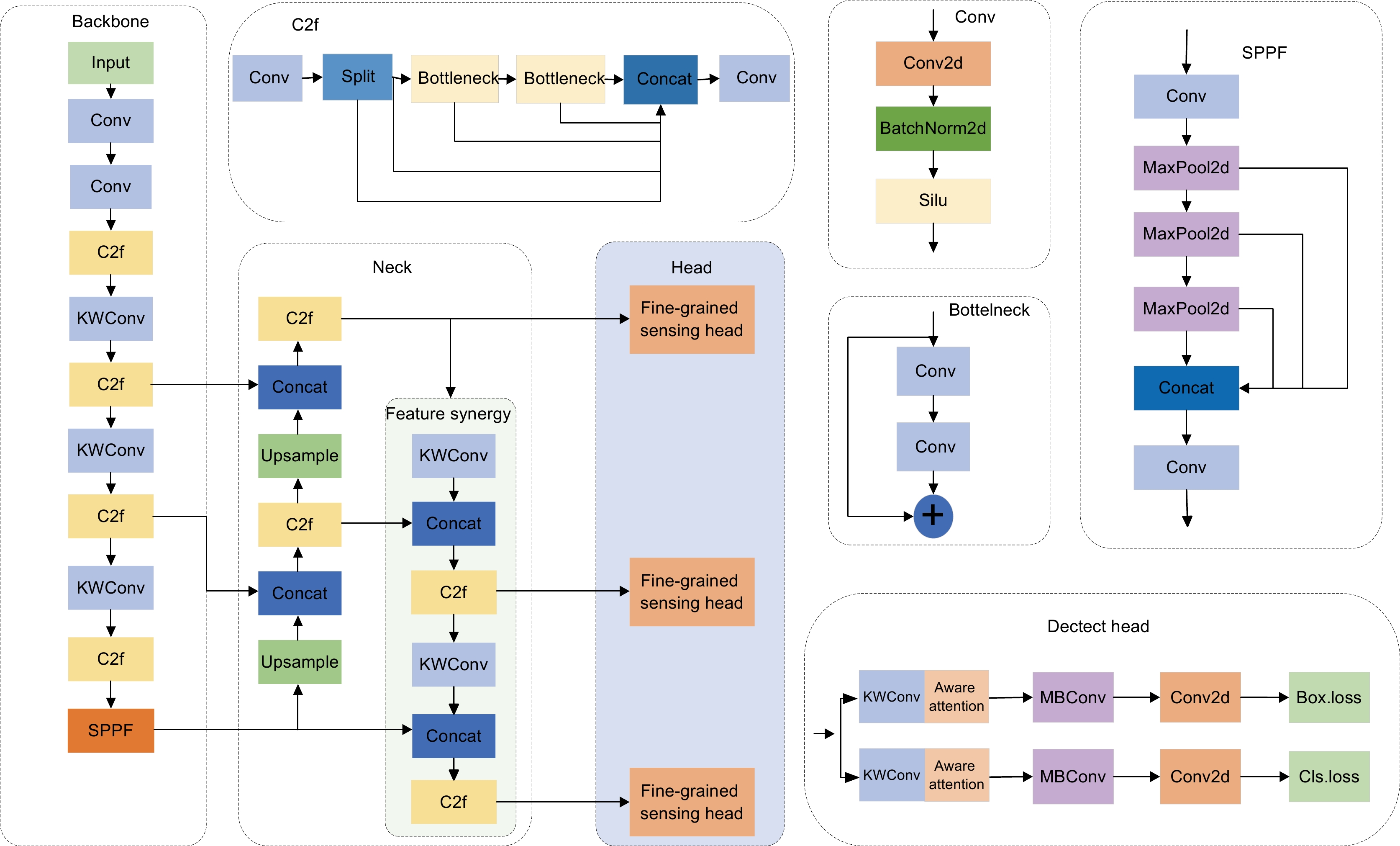

Abstract: To address the missed detections caused by numerous, densely arranged small targets in remote sensing images, this paper proposes a small target detection algorithm based on feature coordination and fine-grained perception. First, a refined feature coordination strategy is constructed: the parameters of the convolution kernels are adjusted adaptively to improve the interaction and integration of features across different scales, and the information flow is controlled precisely so that features are refined progressively from coarse to fine. On this basis, a fine-grained perception module is designed that combines perceptual attention with mobile inverted convolution to form an enhanced detection head, which strengthens the network's ability to perceive extremely small objects. Finally, to improve training efficiency, MPDIoU and NWD are used as regression loss functions to reduce localization deviation and accelerate convergence. Experiments on the DOTA1.0 and DOTA1.5 datasets show that, compared with the baseline method, the improved algorithm raises mean average precision (mAP) by 7.4% and 6.1%, respectively, outperforms the other algorithms compared, and markedly reduces missed detections of small targets in remote sensing images.
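The two regression losses named in the abstract can be illustrated with a minimal sketch. It follows the published definitions of MPDIoU (IoU penalized by the squared distances between matching corners, normalized by the squared image diagonal) and NWD (an exponential of the Wasserstein distance between boxes modeled as 2D Gaussians). How the paper combines the two terms is not stated here, so the `box_loss` function, its `alpha` weight, and the default constant `c=12.8` are illustrative assumptions, not the authors' settings.

```python
import math

def iou_xyxy(a, b):
    """Plain IoU for axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def mpdiou(pred, gt, img_w, img_h):
    """MPDIoU = IoU - d1^2/(w^2+h^2) - d2^2/(w^2+h^2).

    d1 is the distance between the top-left corners, d2 the distance
    between the bottom-right corners; w and h are the image size.
    """
    d1_sq = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2
    d2_sq = (pred[2] - gt[2]) ** 2 + (pred[3] - gt[3]) ** 2
    norm = img_w ** 2 + img_h ** 2
    return iou_xyxy(pred, gt) - d1_sq / norm - d2_sq / norm

def nwd(pred, gt, c=12.8):
    """Normalized Gaussian Wasserstein distance, exp(-W2 / c).

    Each box is treated as a Gaussian with mean (cx, cy) and standard
    deviations (w/2, h/2). `c` is a dataset-dependent constant; the
    default here is a placeholder assumption and should be tuned.
    """
    def to_vec(b):
        return ((b[0] + b[2]) / 2, (b[1] + b[3]) / 2,
                (b[2] - b[0]) / 2, (b[3] - b[1]) / 2)
    p, g = to_vec(pred), to_vec(gt)
    w2 = math.sqrt(sum((pi - gi) ** 2 for pi, gi in zip(p, g)))
    return math.exp(-w2 / c)

def box_loss(pred, gt, img_w, img_h, alpha=0.5, c=12.8):
    """Hypothetical weighted sum of the two losses (alpha is an assumption)."""
    l_mpdiou = 1.0 - mpdiou(pred, gt, img_w, img_h)
    l_nwd = 1.0 - nwd(pred, gt, c)
    return alpha * l_mpdiou + (1.0 - alpha) * l_nwd
```

For two 4×4-pixel boxes that do not overlap, such as `(10, 10, 14, 14)` and `(16, 10, 20, 14)`, the IoU is zero, yet `nwd` still returns a positive similarity that decreases smoothly with distance. This is the property that makes an NWD term useful for very small targets.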

Key words:

- remote sensing images

- small target detection

- feature synergy

- fine-grained perception

Overview: With the rapid development of remote sensing imaging technology, target detection in remote sensing images is widely used in many important fields, including military target location and identification, natural environment protection, disaster detection, and urban planning and construction. The task is to identify and locate specific targets in an image and to determine their category and position. Unlike targets in natural scenes, targets in remote sensing images are characterized by large scenes, small sizes, multiple scales, complex backgrounds, and overlapping occlusion, which makes accurate detection challenging. Although remote sensing detection algorithms have made great progress, the detection of small targets is still far from ideal. Small target detection faces two major difficulties: (1) the target carries little feature information, positive samples are scarce, and the classes are unbalanced; (2) localization is difficult because the background is complex and contains a large amount of redundant information that seriously interferes with detection. As a result, it is hard to extract edge features from aerial images and to separate objects from the background. Research on object detection in remote sensing images therefore has important theoretical and practical significance.

To address the missed detections caused by numerous, densely arranged small targets in remote sensing images, this study introduces a small target detection algorithm that combines feature coordination with fine-grained perception. First, we propose a refined feature coordination strategy that improves the interaction and integration of features across different scales by adaptively adjusting the parameters of the convolution kernels, so that features are refined progressively from coarse to fine. Building on this, a fine-grained perception module is developed that combines perceptual attention with mobile inverted convolution to form an enhanced detection head, which improves the network's ability to detect very small objects. Furthermore, to improve training efficiency, we employ MPDIoU and NWD as regression loss functions, which reduces localization deviation and speeds up convergence. Experiments on the DOTA1.0 and DOTA1.5 datasets show that the algorithm improves mean average precision (mAP) by 7.4% and 6.1%, respectively, over the baseline method and outperforms the other algorithms compared. The results show that the algorithm markedly reduces missed detections of small targets in remote sensing imagery.


Table 1. Ablation experiments of the proposed algorithm on the DOTA1.0 dataset

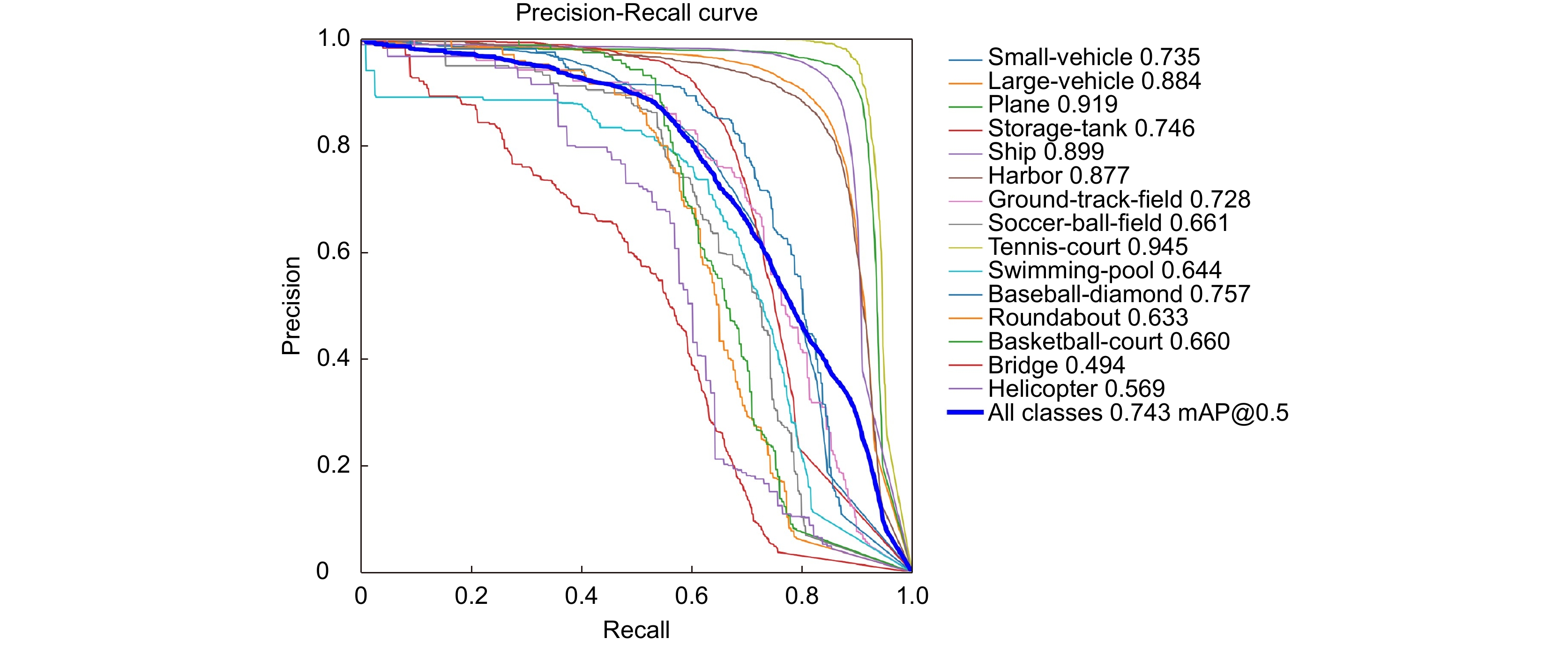

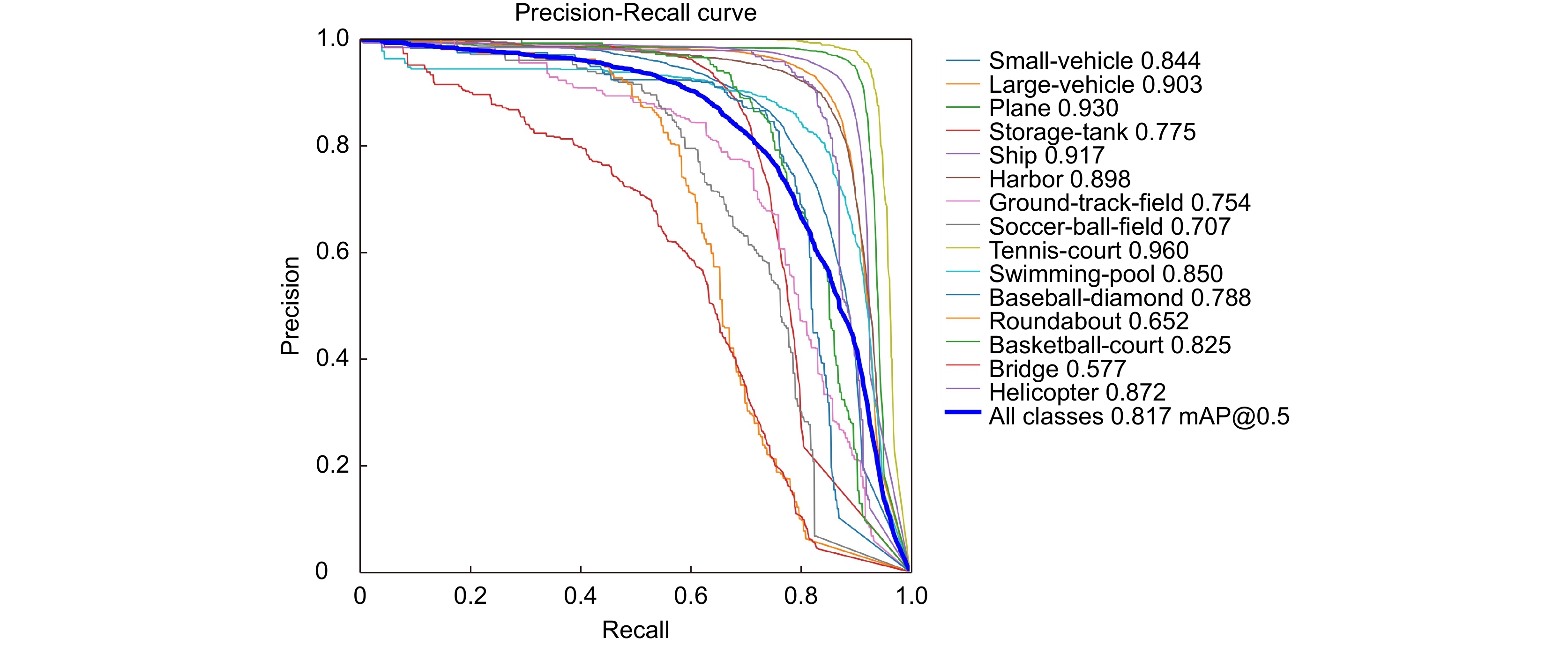

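| Number | A | B | C | D | Precision/% | Recall/% | FPS | mAP@0.5/% | mAP@0.5:0.95/% |
|---|---|---|---|---|---|---|---|---|---|
| 1 | × | × | × | × | 79.0 | 68.7 | 434 | 74.3 | 50.9 |
| 2 | √ | × | × | × | 82.1 | 70.2 | 384 | 76.5 | 52.5 |
| 3 | × | √ | × | × | 84.4 | 69.0 | 370 | 75.2 | 51.6 |
| 4 | × | × | √ | × | 80.1 | 69.7 | 476 | 75.5 | 51.4 |
| 5 | × | × | × | √ | 80.5 | 70.4 | 454 | 75.8 | 51.8 |
| 6 | × | × | √ | √ | 83.3 | 72.3 | 566 | 78.0 | 53.9 |
| 7 | √ | √ | × | × | 84.9 | 69.9 | 285 | 76.4 | 52.8 |
| 8 | √ | × | √ | √ | 84.8 | 73.8 | 402 | 79.8 | 55.6 |
| 9 | × | √ | √ | √ | 82.3 | 71.7 | 416 | 77.0 | 53.0 |
| 10 | √ | √ | √ | √ | 84.3 | 75.6 | 454 | 81.7 | 58.0 |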
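The 7.4% DOTA1.0 gain quoted in the abstract can be traced back to the ablation rows of Table 1. The short sketch below copies the mAP@0.5 values from the table and computes each configuration's gain over the baseline (row 1). The dictionary layout and function names are illustrative choices. Modules A to D are referred to only by the letters used in the table.

```python
# mAP@0.5 (%) per ablation row, copied from Table 1.
MAP50 = {
    1: 74.3,   # baseline, no module enabled
    2: 76.5,   # A only
    3: 75.2,   # B only
    4: 75.5,   # C only
    5: 75.8,   # D only
    6: 78.0,   # C + D
    7: 76.4,   # A + B
    8: 79.8,   # A + C + D
    9: 77.0,   # B + C + D
    10: 81.7,  # A + B + C + D (full model)
}

def gain_over_baseline(row, table=MAP50, baseline_row=1):
    """Gain in percentage points of mAP@0.5 relative to the baseline row."""
    return round(table[row] - table[baseline_row], 1)

def single_module_gains(table=MAP50):
    """Gains of rows 2 to 5, where exactly one module is enabled."""
    return {name: gain_over_baseline(row, table)
            for name, row in zip("ABCD", (2, 3, 4, 5))}

if __name__ == "__main__":
    singles = single_module_gains()
    print("single-module gains:", singles)
    print("sum of single gains:", round(sum(singles.values()), 1))
    print("full-model gain:", gain_over_baseline(10))
```

The full model gains 7.4 points, more than the 5.8 points obtained by adding up the four single-module gains, which suggests that the modules complement one another rather than overlap.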

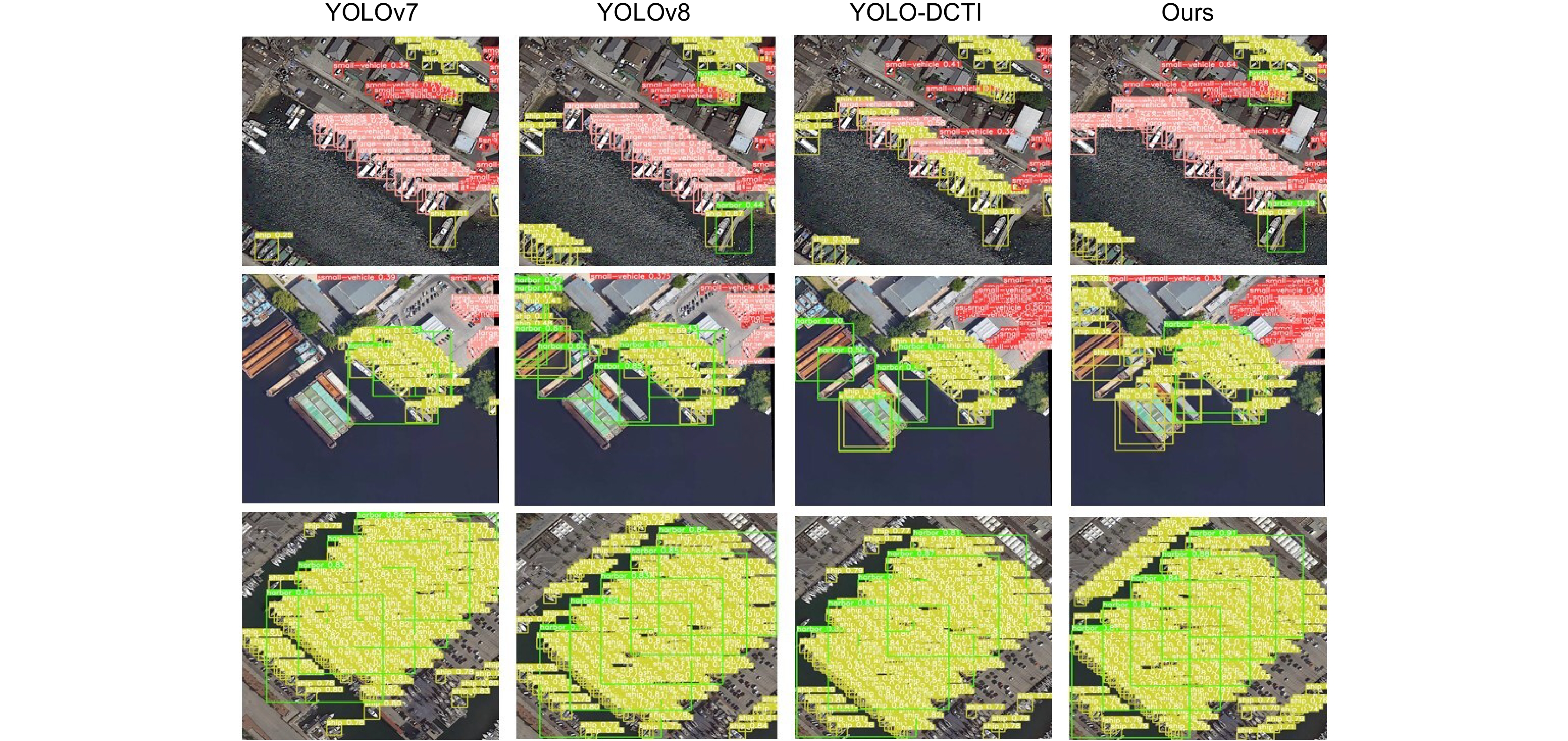

Table 2. Experimental results of different algorithms on the DOTA1.0 dataset (unit: %)

| Category | YOLOv5 | YOLOv7 | CornerNet | R-FCN | YOLO-BiFPN | YOLO-PWCA | YOLO-DCTI | Ours |
|---|---|---|---|---|---|---|---|---|
| SV | 75.1 | 76.5 | 10.1 | 49.8 | 81.7 | 77.6 | 86.8 | 88.4 |
| LV | 86.7 | 86.7 | 50.2 | 45.1 | 85.4 | 85.7 | 90.9 | 90.3 |
| PL | 93.6 | 92.3 | 64.7 | 81.1 | 91.8 | 92.6 | 91.9 | 93.0 |
| ST | 74.3 | 70.9 | 57.9 | 67.4 | 77.9 | 72.7 | 85.7 | 77.5 |
| SH | 89.6 | 89.1 | 31.3 | 49.3 | 89.1 | 87.5 | 81.1 | 91.7 |
| HA | 87.7 | 83.5 | 80.5 | 45.2 | 88.2 | 84.1 | 88.5 | 89.8 |
| GTF | 71.1 | 55.5 | 24.9 | 58.9 | 69.3 | 64.2 | 73.6 | 75.4 |
| SBF | 62.1 | 58.4 | 22.7 | 41.8 | 66.8 | 64.5 | 70.6 | 70.7 |
| TC | 94.0 | 94.9 | 85.5 | 68.9 | 93.4 | 94.0 | 93.8 | 96.0 |
| SP | 82.8 | 79.5 | 18.5 | 53.3 | 64.1 | 64.2 | 83.0 | 85.0 |
| BD | 76.1 | 71.1 | 38.2 | 58.9 | 74.0 | 78.7 | 77.3 | 78.8 |
| RA | 64.3 | 47.1 | 44.5 | 51.4 | 59.4 | 62.0 | 63.9 | 65.2 |
| BC | 78.3 | 72.2 | 62.5 | 52.1 | 76.2 | 75.5 | 82.1 | 82.5 |
| BR | 59.2 | 45.3 | 26.2 | 31.6 | 56.4 | 51.8 | 57.1 | 57.7 |
| HC | 84.4 | 81.7 | 12.1 | 33.9 | 83.0 | 76.3 | 85.2 | 87.2 |
| mAP@0.5 | 78.6 | 73.6 | 42.0 | 52.6 | 80.2 | 78.8 | 80.6 | 81.7 |

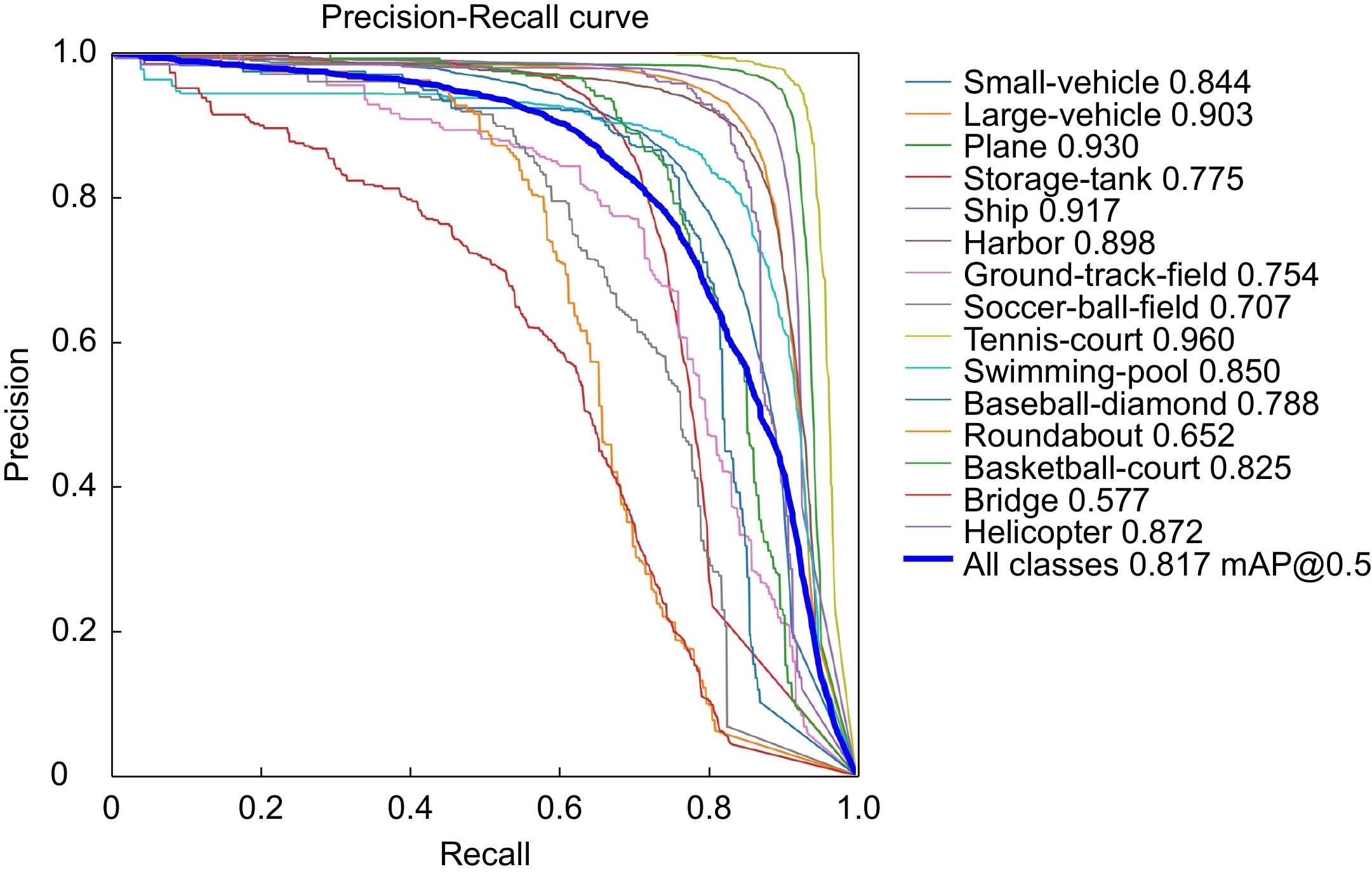

Table 3. Experimental results of different algorithms on the DOTA1.5 dataset (unit: %)

| Category | YOLOv5 | YOLOv7 | CornerNet | R-FCN | YOLO-BiFPN | YOLO-PWCA | YOLO-DCTI | Ours |
|---|---|---|---|---|---|---|---|---|
| SV | 57.8 | 66.5 | 50.3 | 59.7 | 70.5 | 71.2 | 75.2 | 77.3 |
| LV | 71.4 | 82.1 | 59.6 | 58.9 | 77.8 | 86.3 | 88.5 | 89.6 |
| PL | 80.5 | 88.4 | 76.5 | 77.3 | 84.1 | 90.7 | 80.1 | 90.1 |
| ST | 77.8 | 80.9 | 68.1 | 70.5 | 76.2 | 73.1 | 75.7 | 77.6 |
| SH | 76.7 | 85.3 | 60.7 | 64.8 | 77.1 | 86.4 | 87.3 | 89.4 |
| HA | 82.6 | 81.7 | 77.8 | 75.1 | 86.9 | 80.6 | 89.1 | 90.5 |
| GTF | 73.7 | 80.6 | 64.1 | 60.3 | 77.5 | 75.3 | 74.9 | 76.1 |
| SBF | 63.2 | 68.4 | 58.3 | 61.6 | 73.4 | 66.8 | 75.1 | 75.2 |
| TC | 85.5 | 83.2 | 80.7 | 79.8 | 87.6 | 89.5 | 83.7 | 91.0 |
| SP | 76.1 | 78.6 | 72.9 | 73.4 | 60.5 | 70.1 | 80.4 | 81.3 |
| BD | 79.3 | 78.2 | 68.6 | 70.6 | 80.1 | 83.7 | 84.8 | 84.9 |
| RA | 73.4 | 75.4 | 70.2 | 66.5 | 74.6 | 68.7 | 70.1 | 76.9 |
| BC | 78.3 | 81.1 | 73.4 | 74.8 | 80.4 | 82.6 | 80.7 | 83.1 |
| BR | 60.3 | 62.5 | 63.2 | 66.3 | 67.1 | 69.8 | 62.6 | 70.2 |
| HC | 68.8 | 65.6 | 62.7 | 60.1 | 78.9 | 77.4 | 82.4 | 80.3 |
| CC | 62.7 | 67.8 | 64.5 | 66.9 | 73.1 | 70.3 | 74.2 | 75.6 |
| mAP@0.5 | 76.3 | 75.6 | 72.0 | 70.8 | 78.7 | 77.9 | 78.1 | 80.4 |
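To put the comparison with other algorithms in numbers, the sketch below takes the mAP@0.5 rows of Tables 2 and 3 and reports, for each dataset, the strongest competing method and the margin by which the proposed algorithm exceeds it. The data structure and function name are illustrative; only the values come from the tables.

```python
# mAP@0.5 (%) rows copied from Table 2 (DOTA1.0) and Table 3 (DOTA1.5).
MAP50 = {
    "DOTA1.0": {"YOLOv5": 78.6, "YOLOv7": 73.6, "CornerNet": 42.0,
                "R-FCN": 52.6, "YOLO-BiFPN": 80.2, "YOLO-PWCA": 78.8,
                "YOLO-DCTI": 80.6, "Ours": 81.7},
    "DOTA1.5": {"YOLOv5": 76.3, "YOLOv7": 75.6, "CornerNet": 72.0,
                "R-FCN": 70.8, "YOLO-BiFPN": 78.7, "YOLO-PWCA": 77.9,
                "YOLO-DCTI": 78.1, "Ours": 80.4},
}

def margin_over_best_competitor(scores, ours="Ours"):
    """Return (best competing method, margin in percentage points)."""
    competitors = {k: v for k, v in scores.items() if k != ours}
    best = max(competitors, key=competitors.get)
    return best, round(scores[ours] - competitors[best], 1)

if __name__ == "__main__":
    for dataset, scores in MAP50.items():
        best, margin = margin_over_best_competitor(scores)
        print(f"{dataset}: +{margin} points over {best}")
```

On these figures the margin over the best competing method is 1.1 points on DOTA1.0 (YOLO-DCTI) and 1.7 points on DOTA1.5 (YOLO-BiFPN).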

[1] 马梁, 苟于涛, 雷涛, 等. 基于多尺度特征融合的遥感图像小目标检测[J]. 光电工程, 2022, 49(4): 210363. doi: 10.12086/oee.2022.210363

Ma L, Gou Y T, Lei T, et al. Small object detection based on multi-scale feature fusion using remote sensing images[J]. Opto-Electron Eng, 2022, 49(4): 210363. doi: 10.12086/oee.2022.210363

[2] 陈旭, 彭冬亮, 谷雨. 基于改进YOLOv5s的无人机图像实时目标检测[J]. 光电工程, 2022, 49(3): 210372. doi: 10.12086/oee.2022.210372

Chen X, Peng D L, Gu Y. Real-time object detection for UAV images based on improved YOLOv5s[J]. Opto-Electron Eng, 2022, 49(3): 210372. doi: 10.12086/oee.2022.210372

[3] 王友伟, 郭颖, 邵香迎. 基于改进级联算法的遥感图像目标检测[J]. 光学学报, 2022, 42(24): 2428004. doi: 10.3788/AOS202242.2428004

Wang Y W, Guo Y, Shao X Y. Target detection in remote sensing images based on improved cascade algorithm[J]. Acta Opt Sin, 2022, 42(24): 2428004. doi: 10.3788/AOS202242.2428004

[4] 王家宝, 程塨, 谢星星, 等. 多元信息监督的遥感图像有向目标检测[J]. 遥感学报, 2023, 27(12): 2726−2735. doi: 10.11834/jrs.20211564

Wang J B, Cheng G, Xie X X, et al. Multi-information supervision in optical remote sensing images[J]. Natl Remote Sens Bull, 2023, 27(12): 2726−2735. doi: 10.11834/jrs.20211564

[5] 张德银, 赵志恒, 谢逸戈, 等. 基于改进YOLOv8的遥感图像飞机目标检测研究[J]. 自动化应用, 2024, 65(2): 193−195,198. doi: 10.19769/j.zdhy.2024.02.060

Zhang D Y, Zhao Z H, Xie Y G, et al. Research on aircraft target detection in remote sensing images based on improved YOLOv8[J]. Autom Appl, 2024, 65(2): 193−195,198. doi: 10.19769/j.zdhy.2024.02.060

[6] Zhang Y L, Jin H Y. Detector consistency research on remote sensing object detection[J]. Remote Sens, 2023, 15(17): 4130. doi: 10.3390/rs15174130

[7] Lyu Z, Jin H F, Zhen T, et al. Small object recognition algorithm of grain pests based on SSD feature fusion[J]. IEEE Access, 2021, 9: 43202−43213. doi: 10.1109/ACCESS.2021.3066510

[8] Lin T Y, Goyal P, Girshick R, et al. Focal loss for dense object detection[J]. IEEE Trans Pattern Anal Mach Intell, 2020, 42(2): 318−327. doi: 10.1109/TPAMI.2018.2858826

[9] Redmon J, Divvala S, Girshick R, et al. You only look once: unified, real-time object detection[C]//Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, 2016: 779–788. https://doi.org/10.1109/CVPR.2016.91.

[10] Redmon J, Farhadi A. YOLO9000: better, faster, stronger[C]//Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition, 2017: 6517–6525. https://doi.org/10.1109/CVPR.2017.690.

[11] Redmon J, Farhadi A. YOLOv3: an incremental improvement[Z]. arXiv: 1804.02767, 2018. https://doi.org/10.48550/arXiv.1804.02767.

[12] Bochkovskiy A, Wang C Y, Liao H Y M. YOLOv4: optimal speed and accuracy of object detection[Z]. arXiv: 2004.10934, 2020. https://doi.org/10.48550/arXiv.2004.10934.

[13] Ge Z, Liu S T, Wang F, et al. YOLOX: exceeding YOLO series in 2021[Z]. arXiv: 2107.08430, 2021. https://doi.org/10.48550/arXiv.2107.08430.

[14] Girshick R. Fast R-CNN[C]//Proceedings of 2015 IEEE International Conference on Computer Vision, 2015: 1440–1448. https://doi.org/10.1109/ICCV.2015.169.

[15] Dai J F, Li Y, He K M, et al. R-FCN: object detection via region-based fully convolutional networks[C]//Proceedings of the 30th International Conference on Neural Information Processing Systems, 2016: 29.

[16] Li Y T, Fan Q S, Huang H S, et al. A modified YOLOv8 detection network for UAV aerial image recognition[J]. Drones, 2023, 7(5): 304. doi: 10.3390/drones7050304

[17] Zhu F Z, Wang Y Y, Cui J Y, et al. Target detection for remote sensing based on the enhanced YOLOv4 with improved BiFPN[J]. Egypt J Remote Sens Space Sci, 2023, 26(2): 351−360. doi: 10.1016/j.ejrs.2023.04.003

[18] Zhai X X, Huang Z H, Li T, et al. YOLO-Drone: an optimized YOLOv8 network for tiny UAV object detection[J]. Electronics, 2023, 12(17): 3664. doi: 10.3390/electronics12173664

[19] Zhou F Y, Deng H G, Xu Q G, et al. CNTR-YOLO: improved YOLOv5 based on ConvNext and transformer for aircraft detection in remote sensing images[J]. Electronics, 2023, 12(12): 2671. doi: 10.3390/electronics12122671

[20] Zhu B Y, Lv Q B, Yang Y B, et al. Gradient structure information-guided attention generative adversarial networks for remote sensing image generation[J]. Remote Sens, 2023, 15(11): 2827. doi: 10.3390/rs15112827

[21] Xiao J S, Guo H W, Yao Y T, et al. Multi-scale object detection with the pixel attention mechanism in a complex background[J]. Remote Sens, 2022, 14(16): 3969. doi: 10.3390/rs14163969

[22] Wu J J, Su L M, Lin Z W, et al. Object detection of flexible objects with arbitrary orientation based on rotation-adaptive YOLOv5[J]. Sensors, 2023, 23(10): 4925. doi: 10.3390/s23104925

[23] Yang X, Yan J C, Liao W L, et al. SCRDet++: detecting small, cluttered and rotated objects via instance-level feature denoising and rotation loss smoothing[J]. IEEE Trans Pattern Anal Mach Intell, 2023, 45(2): 2384−2399. doi: 10.1109/TPAMI.2022.3166956

[24] Zhang H Y, Liu J. Direction estimation of aerial image object based on neural network[J]. Remote Sens, 2022, 14(15): 3523. doi: 10.3390/rs14153523

[25] Yu H T, Tian Y J, Ye Q X, et al. Spatial transform decoupling for oriented object detection[C]//Proceedings of the 38th AAAI Conference on Artificial Intelligence, 2024: 6782–6790. https://doi.org/10.1609/aaai.v38i7.28502.

[26] Li C, Yao A B. KernelWarehouse: towards parameter-efficient dynamic convolution[Z]. arXiv: 2308.08361, 2023. https://doi.org/10.48550/arXiv.2308.08361.

[27] Ma S L, Xu Y. MPDIoU: a loss for efficient and accurate bounding box regression[Z]. arXiv: 2307.07662, 2023. https://doi.org/10.48550/arXiv.2307.07662.

[28] Wang J W, Xu C, Yang W, et al. A normalized Gaussian Wasserstein distance for tiny object detection[Z]. arXiv: 2110.13389, 2021. https://doi.org/10.48550/arXiv.2110.13389.

[29] Sandler M, Howard A, Zhu M L, et al. MobileNetV2: inverted residuals and linear bottlenecks[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018: 4510–4520.

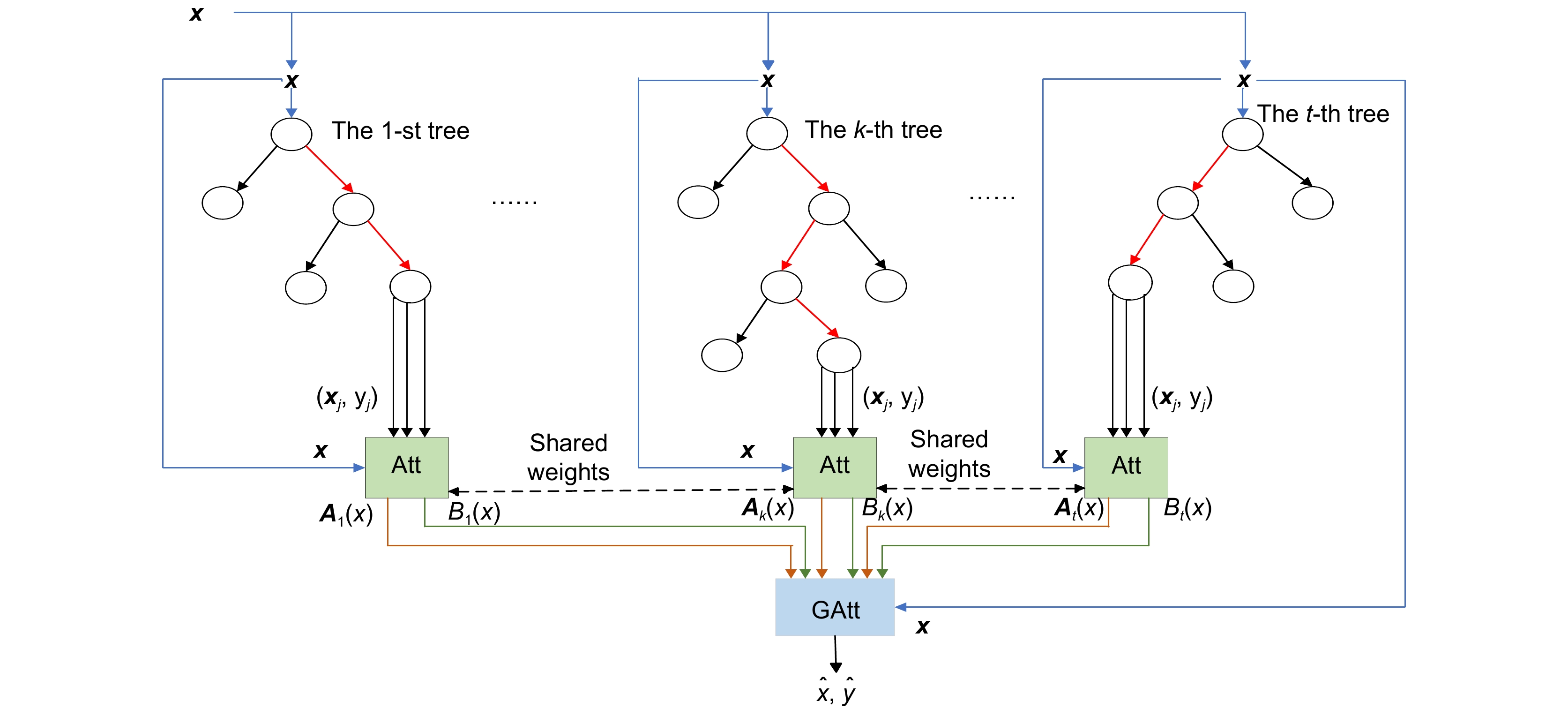

[30] Konstantinov A V, Utkin L V, Lukashin A A, et al. Neural attention forests: transformer-based forest improvement[C]//Proceedings of the Seventh International Scientific Conference “Intelligent Information Technologies for Industry”, 2023. https://doi.org/10.1007/978-3-031-43789-2_14.

[31] Tan M X, Le Q V. EfficientNet: rethinking model scaling for convolutional neural networks[C]//Proceedings of the 36th International Conference on Machine Learning, 2019: 6105–6114.

[32] Law H, Deng J. CornerNet: detecting objects as paired keypoints[C]//Proceedings of the 15th European Conference on Computer Vision (ECCV), 2018: 734–750. https://doi.org/10.1007/978-3-030-01264-9_45.

[33] 于傲泽, 魏维伟, 王平, 等. 基于分块复合注意力的无人机小目标检测算法[J]. 航空学报, 2023: 1–12. https://doi.org/10.7527/S1000-6893.2023.29148.

Yu A Z, Wei W W, Wang P, et al. Small target detection algorithm for UAV based on patch-wise co-attention[J]. Acta Aeronaut Astronaut Sin, 2023: 1–12. https://doi.org/10.7527/S1000-6893.2023.29148.

[34] Min L T, Fan Z M, Lv Q Y, et al. YOLO-DCTI: small object detection in remote sensing base on contextual transformer enhancement[J]. Remote Sens, 2023, 15(16): 3970. doi: 10.3390/rs15163970
