-

摘要

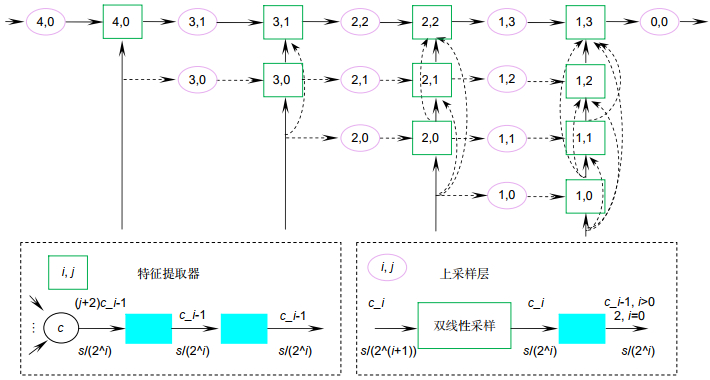

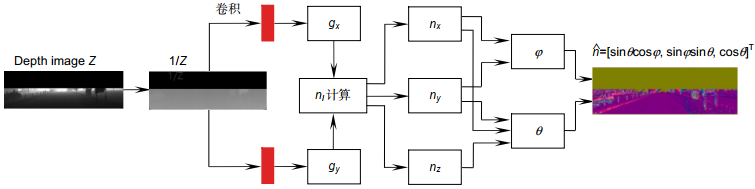

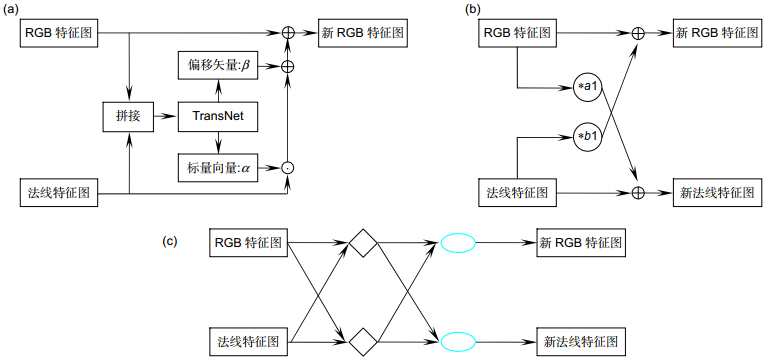

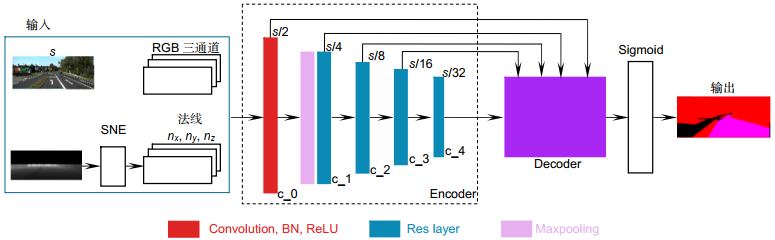

道路检测是车辆实现自动驾驶的前提。近年来,基于深度学习的多源数据融合成为当前自动驾驶研究的一个热点。本文采用卷积神经网络对激光雷达点云和图像数据加以融合,实现对交通场景中道路的分割。本文提出了像素级、特征级和决策级多种融合方案,尤其是在特征级融合中设计了四种交叉融合方案,对各种方案进行对比研究,给出最佳融合方案。在网络构架上,采用编码解码结构的语义分割卷积神经网络作为基础网络,将点云法线特征与RGB图像特征在不同的层级进行交叉融合。融合后的数据进入解码器还原,最后使用激活函数得到检测结果。实验使用KITTI数据集进行评估,验证了各种融合方案的性能,实验结果表明,本文提出的融合方案E具有最好的分割性能。与其他道路检测方法的比较实验表明,本文方法可以获得较好的整体性能。

Abstract

Road detection is the premise of vehicle automatic driving. In recent years, multi-modal data fusion based on deep learning has become a hot spot in the research of automatic driving. In this paper, convolutional neural network is used to fuse LiDAR point cloud and image data to realize road segmentation in traffic scenes. In this paper, a variety of fusion schemes at pixel level, feature level and decision level are proposed. Especially, four cross-fusion schemes are designed in feature level fusion. Various schemes are compared, and the best fusion scheme is given. In the network architecture, the semantic segmentation convolutional neural network with encoding and decoding structure is used as the basic network to cross-fuse the point cloud normal features and RGB image features at different levels. The fused data is restored by the decoder, and finally the detection results are obtained by using the activation function. The substantial experiments have been conducted on public KITTI data set to evaluate the performance of various fusion schemes. The results show that the fusion scheme E proposed in this paper has the best segmentation performance. Compared with other road-detection methods, our method gives better overall performance.

-

Key words:

- autonomous driving /

- road detection /

- semantic segmentation /

- data fusion

-

Overview

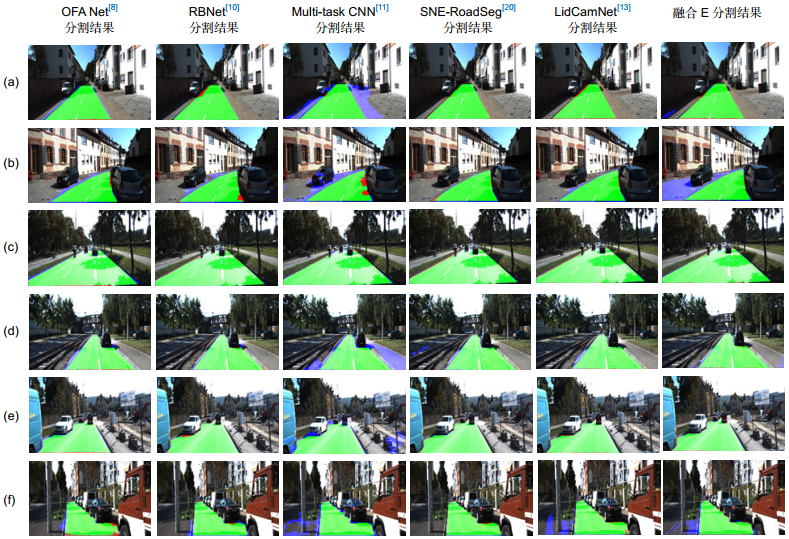

Overview: Road detection is an important content of environmental identification in the field of automatic driving, and it is an important prerequisite for vehicles to realize automatic driving. Multi-source data fusion based on deep learning has become a hot topic in the field of automatic driving. RGB data can provide dense texture and color information, LiDAR data can provide accurate spatial information, and multi-sensor data fusion can improve the robustness and accuracy of detection. The latest fusion method uses convolutional neural network (CNN) as a fusion tool to fuse the LiDAR data and RGB image data, and semantic segmentation to realize road detection and segmentation. In this paper, different fusion methods of LiDAR point cloud and image data are adopted by encoder-decoder structure to realize road segmentation in traffic scenes. Aiming at the fusion methods of point cloud and image data, this paper proposes a variety of fusion schemes at pixel level, feature level, and decision level. In particular, four kinds of cross-fusion schemes are designed in feature level fusion. Various schemes are compared and studied to give the best fusion scheme. As for the network architecture, we use the encoder with residual network and the decoder with dense connection and jump connection as the basic network. The input image is RGB-D, and the LiDAR depth map is processed into a normal map by a surface normal estimator. The normal map features and RGB image features are fused at different levels of the network. The features are extracted through two input signals generated by two encoders, restored by a decoder, and finally road detection results are obtained by using sigmoid activation function. KITTI data set is used to verify the performances of various fusion schemes. The contrast experiments show that the proposed fusion scheme E can better learn the LiDAR point cloud information, the camera image information, the correlation of cross added point cloud, and image information. Also, it can reduce the loss of characteristic information, and thus has the best road segmentation effect. Through quantitative analysis of the average accuracy (AP) of different road detection methods, the optimal fusion method proposed in this paper shows the advantages of average detection accuracy, and has good overall performance. Through qualitative analysis of the performance of different detection methods in different scenarios, the results show that the fusion scheme E proposed in this paper has good detection results for the boundary area between vehicles and roads, and could effectively reduce the false detection rate of road detection.

-

-

表 1 不同融合方式之间的性能比较

Table 1. Performance comparison between different fusion methods

Loss Accuracy Precision Recall F1-score IoU 像素级融合 融合A 0.049 0.984 0.959 0.948 0.954 0.911 融合B 0.065 0.982 0.941 0.951 0.946 0.897 特征级融合 融合C 0.050 0.985 0.943 0.969 0.956 0.915 融合D 0.047 0.983 0.951 0.946 0.948 0.902 融合E(ours) 0.022 0.994 0.979 0.988 0.984 0.968 决策级融合 融合F 0.058 0.982 0.934 0.962 0.948 0.901 表 2 KITTI道路基准测试结果

Table 2. The KITTI road benchmark results

方法类型 MaxF/% AP/% Precision/% Recall/% UM 基于图像的语义分割方法 OFA Net[8] 92.08 83.73 87.87 96.72 MultiNet[9] 93.99 93.24 94.51 93.48 RBNet[10] 94.77 91.42 95.16 94.37 Multi-task CNN[11] 85.95 81.28 77.40 96.64 基于点云—图像融合的语义分割方法 SNE-RoadSeg[20] 96.42 93.67 96.59 96.26 LidCamNet[13] 95.62 93.54 95.77 95.48 融合E(ours) 95.72 95.12 95.87 95.59 UMM 基于图像的语义分割方法 OFA Net[8] 95.43 89.10 92.78 98.24 MultiNet[9] 96.15 95.36 95.79 96.51 RBNet[10] 96.06 93.49 95.80 96.31 Multi-task CNN[11] 91.15 87.45 85.08 98.15 基于点云—图像融合的语义分割方法 SNE-RoadSeg[20] 97.47 95.63 97.32 97.61 LidCamNet[13] 97.08 95.51 97.28 96.88 融合E(ours) 96.71 95.79 96.33 97.10 UU 基于图像的语义分割方法 OFA Net[8] 92.62 83.12 88.97 96.58 MultiNet[9] 93.69 92.55 94.24 93.14 RBNet[10] 93.21 89.18 92.81 93.60 Multi-task CNN[11] 80.45 75.87 68.63 97.19 基于点云—图像融合的语义分割方法 SNE-RoadSeg[20] 96.03 93.03 96.22 95.83 LidCamNet[13] 94.54 92.74 94.64 94.45 融合E(ours) 95.38 93.23 94.95 95.83 -

参考文献

[1] Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation[C]//Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention, 2015.

[2] Zhou Z W, Siddiquee M R, Tajbakhsh N, et al. UNet++: a nested U-Net architecture for medical image segmentation[C]//Proceedings of the 4th International Workshop on Deep Learning in Medical Image Analysis, 2018.

[3] Huang G, Liu Z, van der Maaten L, et al. Densely connected convolutional networks[C]//Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition, 2017.

[4] Xiao X, Lian S, Luo Z M, et al. Weighted Res-UNet for high-quality retina vessel segmentation[C]//Proceedings of the 2018 9th International Conference on Information Technology in Medicine and Education, 2018.

[5] He K M, Zhang X Y, Ren S Q, et al. Deep residual learning for image recognition[C]//Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, 2016.

[6] Chen L C, Zhu Y K, Papandreou G, et al. Encoder-decoder with atrous separable convolution for semantic image segmentation[C]//Proceedings of the 15th European Conference on Computer Vision, 2018.

[7] Badrinarayanan V, Kendall A, Cipolla R. SegNet: a deep convolutional encoder-decoder architecture for image segmentation[J]. IEEE Trans Pattern Anal Mach Intell, 2017, 39(12): 2481–2495. doi: 10.1109/TPAMI.2016.2644615 https://ieeexplore.ieee.org/document/7803544

[8] Zhang S C, Zhang Z, Sun L B, et al. One for all: a mutual enhancement method for object detection and semantic segmentation[J]. Appl Sci, 2020, 10(1): 13. https://www.mdpi.com/2076-3417/10/1/13

[9] Teichmann M, Weber M, Zöllner M, et al. MultiNet: real-time joint semantic reasoning for autonomous driving[C]//Proceedings of 2018 IEEE Intelligent Vehicles Symposium, 2018.

[10] Chen Z, Chen Z J. RBNet: a deep neural network for unified road and road boundary detection[C]//Proceedings of the 24th International Conference on Neural Information Processing, 2017.

[11] Oeljeklaus M, Hoffmann F, Bertram T. A fast multi-task CNN for spatial understanding of traffic scenes[C]//Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems, 2018.

[12] Schlosser J, Chow C K, Kira Z. Fusing LIDAR and images for pedestrian detection using convolutional neural networks[C]//Proceedings of 2016 IEEE International Conference on Robotics and Automation, 2016.

[13] Caltagirone L, Bellone M, Svensson L, et al. LIDAR–camera fusion for road detection using fully convolutional neural networks[J]. Rob Auton Syst, 2019, 111: 125–131. doi: 10.1016/j.robot.2018.11.002 https://www.sciencedirect.com/science/article/abs/pii/S0921889018300496

[14] Chen Z, Zhang J, Tao D C. Progressive LiDAR adaptation for road detection[J]. IEEE/CAA J Automat Sin, 2019, 6(3): 693–702. doi: 10.1109/JAS.2019.1911459 http://www.cnki.com.cn/Article/CJFDTotal-ZDHB201903009.htm

[15] van Gansbeke W, Neven D, de Brabandere B, et al. Sparse and noisy LiDAR completion with RGB guidance and uncertainty[C]//Proceedings of the 2019 16th International Conference on Machine Vision Applications, 2019.

[16] Wang T H, Hu H N, Lin C H, et al. 3D LiDAR and stereo fusion using stereo matching network with conditional cost volume normalization[C]//Proceedings of 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2019.

[17] Zhang Y D, Funkhouser T. Deep depth completion of a single RGB-D image[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018.

[18] 邓广晖. 基于卷积神经网络的RGB-D图像物体检测和语义分割[D]. 北京: 北京工业大学, 2017.

Deng G H. Object detection and semantic segmentation for RGB-D images with convolutional neural networks[D]. Beijing: Beijing University of Technology, 2017.

[19] 曹培. 面向自动驾驶的双传感器信息融合目标检测及姿态估计[D]. 哈尔滨: 哈尔滨工业大学, 2019.

Cao P. Dual sensor information fusion for target detection and attitude estimation in autonomous driving[D]. Harbin: Harbin Institute of Technology, 2019.

[20] Fan R, Wang H L, Cai P D, et al. SNE-RoadSeg: incorporating surface normal information into semantic segmentation for accurate freespace detection[C]//Proceedings of the 16th European Conference on Computer Vision, 2020.

[21] Fan R, Wang H L, Xue B H, et al. Three-filters-to-normal: an accurate and ultrafast surface normal estimator[J]. IEEE Rob Automat Lett, 2021, 6(3): 5405–5412. doi: 10.1109/LRA.2021.3067308

-

访问统计

E-mail Alert

E-mail Alert RSS

RSS

下载:

下载: