
摘要:

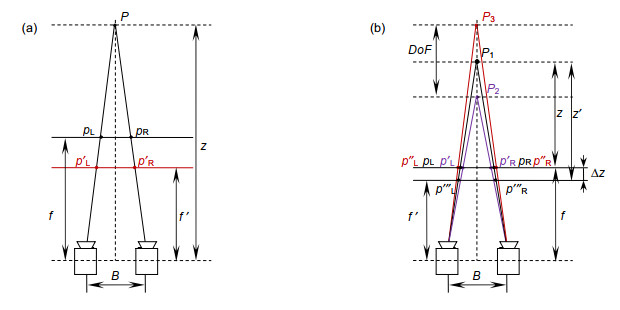

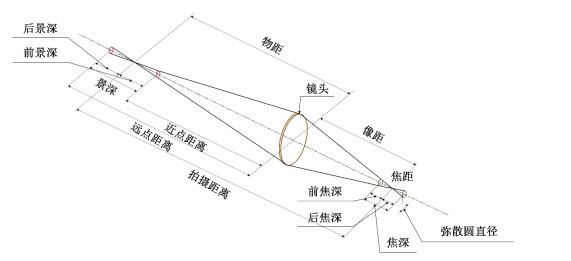

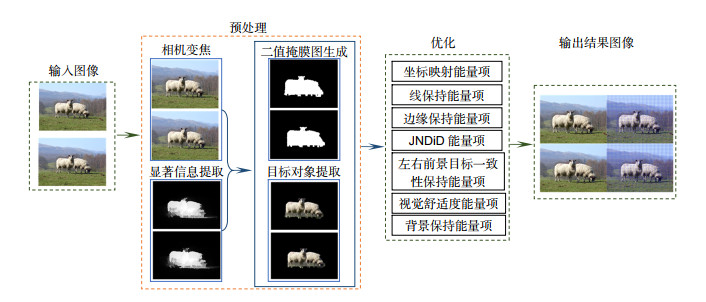

立体图像的视觉变焦优化是近年来图像处理和计算机视觉领域热门的基础研究问题。3D图像的变焦视觉增强技术越来越受到关注。为此,本文从相机变焦拍摄的模型出发,提出一种基于网格形变的立体变焦视觉优化方法,力求提高3D立体视觉的体验。首先利用数字变焦方法模拟相机模型对目标区域进行适当的视觉放大,然后根据相机变焦距离建立起参考图像与目标图像之间的映射关系,并提取前景目标对象,接着使用改进型深度恰可察觉深度模型来引导目标对象的自适应深度调整。最后结合本文所设计的七个网格优化能量项,对图像网格进行优化,以提高该目标对象的视觉感,并确保整幅立体图像的良好视觉体验效果。与现有的数字变焦方法相比,所提出的方法在图像目标对象的尺寸控制方面和目标对象的深度调整方面都具有更好的效果。

-

关键词:

- 立体图像 /

- 相机变焦 /

- 改进型深度恰可察觉深度模型 /

- 网格优化能量项

Abstract:Stereoscopic image zoom optimization is a popular basic research problem in the field of image processing and computer vision in recent years. The zoom visual enhancement technology of 3D images has attracted more and more attention. To this end, this paper proposes a method of stereoscopic zoom vision optimization based on grid deformation from the model of camera zoom shooting, and strives to improve the experience of 3D stereoscopic vision. Firstly, use the digital zoom method to simulate the camera model to properly zoom in on the target area, and then establish the mapping relationship between the reference image and the target image according to the camera zoom distance. Secondly, extract the foreground target object and use the modified just noticeable depth difference (JNDiD) model to guide the adaptive depth adjustment of the target object. Finally, combined with the seven grid-optimized energy terms designed in this paper, the image grid is optimized to improve the visual perception of the target object and ensure a good visual experience for the entire stereoscopic image. Compared with the existing digital zoom method, the proposed method has better effects on the size control of the image target object and the depth adjustment of the target object.


Overview: With the development of digital imaging technology, visual enhancement of objects in 3D stereoscopic images has attracted growing attention and has contributed to fields such as AI image modeling, astronomical observation, and digital photography. Starting from the camera zoom shooting model, a grid-deformation-based method is proposed for visual optimization of stereoscopic zoom. To improve the 3D viewing experience, a digital zoom method simulates the camera model to enlarge the target region appropriately; the mapping between the reference image and the target image is then established from the camera zoom distance, and the foreground target object is extracted. Next, a modified just noticeable depth difference model guides the adaptive depth adjustment of the target object. Finally, the image grid is optimized with the designed energy terms to improve the visual perception of the target object and to ensure a good viewing experience for the whole stereoscopic image. The camera-based zoom operation is used to extract the foreground target object, after which the target object and the transition region between it and the background are optimized. In terms of object size, with the zoomed image and the camera focal length as references, the foreground target object is enlarged while the background remains unchanged. In terms of optimization quality, the transition between the foreground target object and the background is smooth, which avoids holes in the transition region and the visual discomfort caused by changes in target depth.
The experimental results show that the target object is enlarged according to the focal length and that the adjusted depth improves the visual comfort of the 3D image. Visual optimization of stereoscopic zoom is achieved through the camera zoom operation and the associated grid deformation, which introduces seven energy terms: the coordinate mapping, line retention, boundary retention, modified just noticeable depth difference, left and right foreground consistency retention, visual comfort, and background preservation energy terms. Compared with existing digital zoom methods that enlarge the image object, the proposed method gives better control of the target object's size and better adjustment of its depth.
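As a reading aid, the seven energy terms named in the overview can be thought of as contributions to a single objective over the grid vertices. A weighted sum is the usual form for mesh-warping objectives, and Table 1 lists weights λ1 to λ7, but the exact combination and the assignment of each weight to a particular term are assumptions here, since this section does not state them. The symbols $V$ (grid vertex positions), $E_i$ and $E_{\mathrm{total}}$ are introduced only for illustration.

```latex
E_{\mathrm{total}}(V) = \sum_{i=1}^{7} \lambda_i \, E_i(V),
\qquad
\hat{V} = \arg\min_{V} \; E_{\mathrm{total}}(V)
```

Under this reading, a larger weight makes the optimizer less willing to violate the corresponding constraint when terms conflict.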


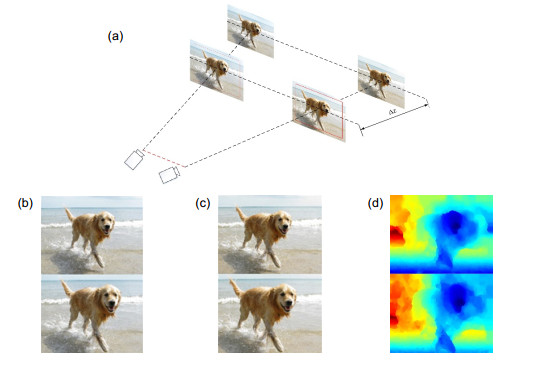

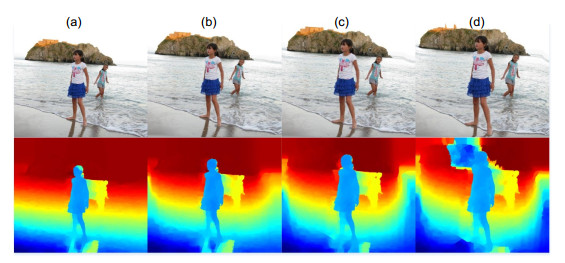

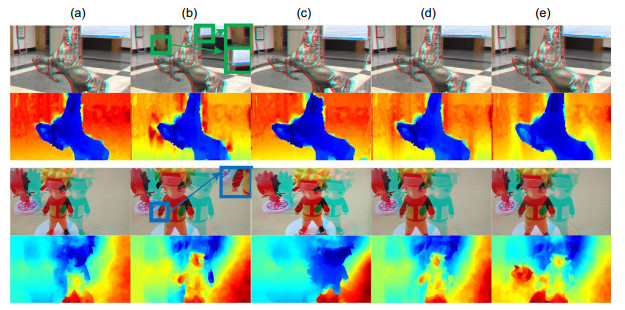

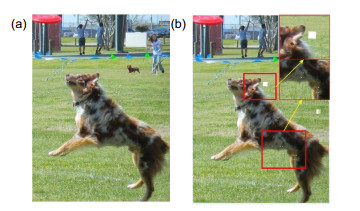

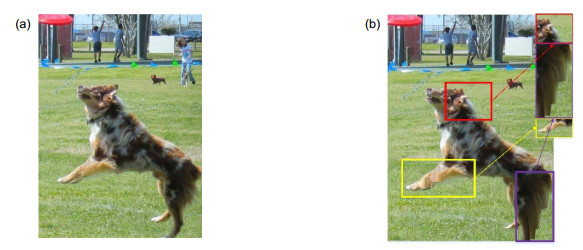

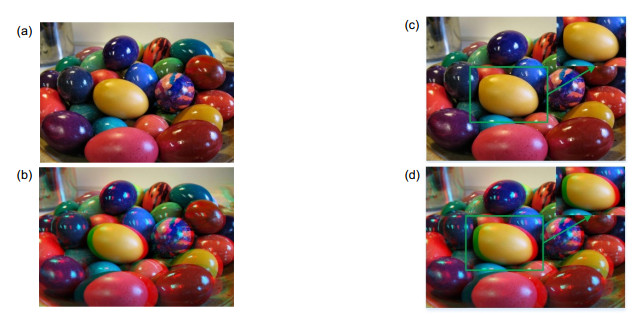

Figure 7. The target object at different focal lengths. (a) Original image and its disparity map; (b) image at a focal length of 260 mm and its disparity map; (c) image at a focal length of 270 mm and its disparity map; (d) image at a focal length of 280 mm and its disparity map
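The effect of the focal length on object size and disparity in Figure 7 can be illustrated with the ideal pinhole model. This is a minimal sketch under stated assumptions, not the paper's mapping: it assumes a fronto-parallel object, a fixed stereo baseline, and no subsequent depth adjustment, and the reference focal length `F_REF_MM` is a hypothetical value that does not appear in the source.

```python
# Pinhole-camera sketch: projected size and disparity both scale linearly
# with focal length when baseline and object depth are held fixed.

F_REF_MM = 250.0  # hypothetical reference focal length (assumption, not from the paper)


def zoom_scale(f_ref_mm: float, f_zoom_mm: float) -> float:
    """Magnification of the projected object when the focal length changes."""
    if f_ref_mm <= 0 or f_zoom_mm <= 0:
        raise ValueError("focal lengths must be positive")
    return f_zoom_mm / f_ref_mm


def scaled_disparity(disparity_px: float, f_ref_mm: float, f_zoom_mm: float) -> float:
    """Disparity after zooming, using d = f * B / Z with B and Z unchanged."""
    return disparity_px * zoom_scale(f_ref_mm, f_zoom_mm)


if __name__ == "__main__":
    for f in (260.0, 270.0, 280.0):  # focal lengths shown in Figure 7
        print(f, zoom_scale(F_REF_MM, f), scaled_disparity(10.0, F_REF_MM, f))
```

Because disparity grows with magnification in this model, a pure zoom can push the object's depth outside the comfortable range, which is one way to read the need for the depth adjustment step described in the abstract.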

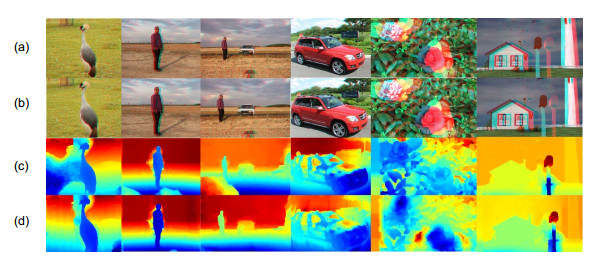

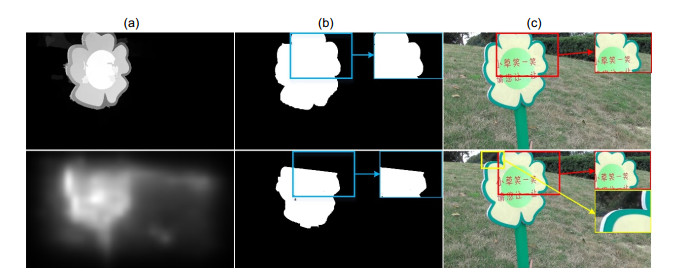

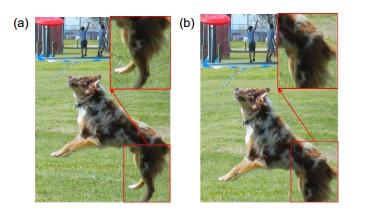

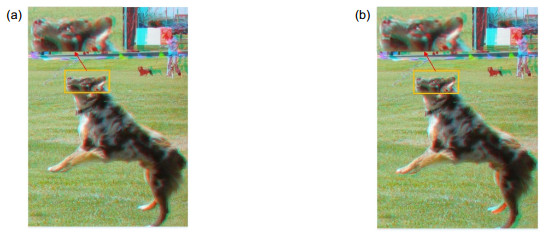

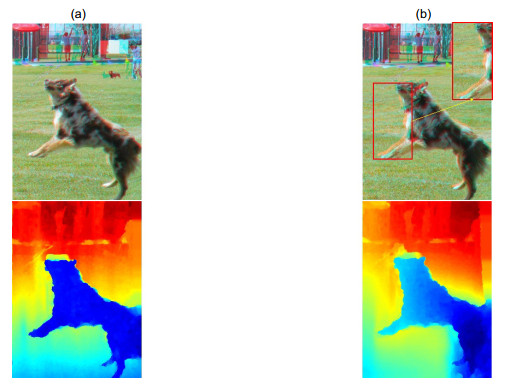

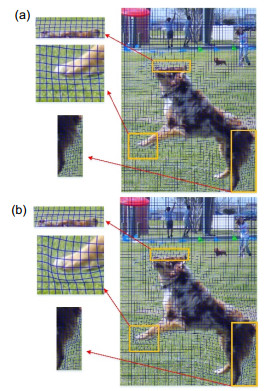

Figure 15. Comparison of results with and without the visual comfort energy term. (a) Red-cyan anaglyph and disparity map with the visual comfort energy term included; (b) red-cyan anaglyph and disparity map without this energy term

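Table 1. Weights of different energy terms

| Weight parameter | λ1 | λ2 | λ3 | λ4 | λ5 | λ6 | λ7 |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Weight value | 2 | 1 | 4 | 2 | 30 | 3 | 2 |

Table 2. Comparison of four experimental methods through objective indicators PSNR and SSIM

| Test image database | 3D rendering PSNR | 3D rendering SSIM | Scaling transformation PSNR | Scaling transformation SSIM | Depth control PSNR | Depth control SSIM | Proposed method PSNR | Proposed method SSIM |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| NBU_VCA database | 12.62 | 0.3987 | 12.97 | 0.4786 | 12.61 | 0.4103 | 14.94 | 0.5062 |
| CVPR2012 database | 19.77 | 0.4453 | 19.68 | 0.5237 | 19.73 | 0.4523 | 20.32 | 0.5519 |
| S3DImage database | 19.09 | 0.4227 | 18.75 | 0.4913 | 21.34 | 0.4937 | 22.66 | 0.5732 |
| Average | 17.16 | 0.4222 | 17.13 | 0.4979 | 17.89 | 0.4521 | 19.31 | 0.5438 |

Table 3. Combination of different energy terms

| Scheme | Combination of energy terms |
| --- | --- |
| Scheme 1 | Coordinate mapping + line retention + modified just noticeable depth difference + boundary retention + left and right foreground consistency retention + visual comfort + background preservation energy terms |
| Scheme 2 | Scheme 1 without the coordinate mapping energy term |
| Scheme 3 | Scheme 1 without the line retention energy term |
| Scheme 4 | Scheme 1 without the modified just noticeable depth difference energy term |
| Scheme 5 | Scheme 1 without the boundary retention energy term |
| Scheme 6 | Scheme 1 without the left and right foreground consistency retention energy term |
| Scheme 7 | Scheme 1 without the visual comfort energy term |
| Scheme 8 | Scheme 1 without the background preservation energy term |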
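For readers unfamiliar with the PSNR metric reported in Table 2, the sketch below shows its standard definition, 10·log10(peak² / MSE). It is only an illustration: the function name `psnr` and the choice of flattened pixel sequences are assumptions made to keep the example dependency-free, and this section does not state which image serves as the reference in the paper's evaluation.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], test: Sequence[float], peak: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(test) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


if __name__ == "__main__":
    ref = [10.0, 20.0, 30.0, 40.0]
    out = [11.0, 21.0, 31.0, 41.0]  # every pixel off by one gray level
    print(psnr(ref, out))
```

Higher values mean the test image is closer to the reference; SSIM, the second metric in Table 2, compares local structure instead of raw pixel error and is not covered by this sketch.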

