
摘要:

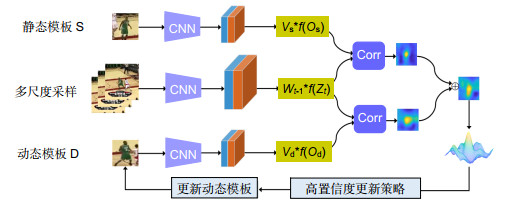

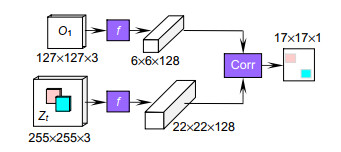

基于Siamese网络的视觉跟踪算法是近年来视觉跟踪领域的一类重要方法,其在跟踪速度和精度上都具有良好的性能。但是大多数基于Siamese网络的跟踪算法依赖离线训练模型,缺乏对跟踪器的在线更新。针对这一问题,本文提出了一种基于在线学习的Siamese网络视觉跟踪算法。该算法采用双模板思想,将第一帧中的目标当作静态模板,在后续帧中使用高置信度更新策略获取动态模板;在线跟踪时,利用快速变换学习模型从双模板中学习目标的表观变化,同时根据当前帧的颜色直方图特征计算出搜索区域的目标似然概率图,与深度特征融合,进行背景抑制学习;最后,将双模板获取的响应图进行加权融合,获得最终跟踪结果。在OTB2015、TempleColor128和VOT数据集上的实验结果表明,本文算法的测试结果与近几年的多种主流算法相比均有所提高,在目标形变、相似背景干扰、快速运动等复杂场景下具有较好的跟踪性能。

Abstract: Visual tracking algorithms based on Siamese networks have become an important class of methods in visual tracking in recent years, performing well in both tracking speed and accuracy. However, most Siamese-network-based trackers rely on a model trained offline and lack online updating. To address this problem, we propose an online-learning-based Siamese network visual tracking algorithm. The algorithm adopts a dual-template scheme: the target in the first frame serves as a static template, and a high-confidence update strategy is used to obtain a dynamic template in subsequent frames. During online tracking, a fast transformation learning model learns the appearance changes of the target from the two templates. Meanwhile, a target likelihood probability map of the search region is computed from the color histogram features of the current frame and fused with the deep features for background suppression learning. Finally, the response maps obtained from the two templates are fused by weighting to produce the final tracking result. Experimental results on the OTB2015, TempleColor128, and VOT datasets show that the proposed algorithm improves on several mainstream algorithms of recent years and performs well in complex scenarios such as target deformation, similar-background interference, and fast motion.
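The color-histogram target likelihood map mentioned above can be illustrated with a minimal NumPy sketch. It assumes the object/surrounding histogram model of Possegger et al. [18], where the probability that a pixel belongs to the target is H_O(b) / (H_O(b) + H_S(b)) for its color bin b. The bin count, the choice of "everything outside the box" as the surrounding region, and the function names are illustrative assumptions; how the paper fuses this map with the deep features is not specified here.

```python
import numpy as np

def quantize(img, bins_per_channel=16):
    """Map each uint8 RGB pixel to one bin of a joint 3D color histogram."""
    q = (img.astype(np.int64) * bins_per_channel) // 256  # per-channel bin in [0, bins)
    return (q[..., 0] * bins_per_channel + q[..., 1]) * bins_per_channel + q[..., 2]

def target_likelihood(img, box, bins_per_channel=16):
    """Per-pixel probability that a search-region pixel belongs to the target.

    img: H x W x 3 uint8 search region; box: (x, y, w, h) target box in img.
    Object histogram = pixels inside the box; surrounding histogram = all other
    pixels of the search region (assumed; [18] uses a band around the object).
    """
    idx = quantize(img, bins_per_channel)
    n_bins = bins_per_channel ** 3
    x, y, w, h = box
    inside = np.zeros(idx.shape, dtype=bool)
    inside[y:y + h, x:x + w] = True
    h_obj = np.bincount(idx[inside], minlength=n_bins).astype(np.float64)
    h_sur = np.bincount(idx[~inside], minlength=n_bins).astype(np.float64)
    total = h_obj + h_sur
    prob_per_bin = np.full(n_bins, 0.5)  # colors never observed: uninformative 0.5
    np.divide(h_obj, total, out=prob_per_bin, where=total > 0)
    return prob_per_bin[idx]  # look up the likelihood of every pixel's color bin

# Usage: a red 8x8 target on a blue background.
img = np.zeros((32, 32, 3), dtype=np.uint8)
img[..., 2] = 200
img[8:16, 8:16] = (220, 30, 30)
lik = target_likelihood(img, (8, 8, 8, 8))
print(lik[10, 10], lik[0, 0])  # → 1.0 0.0
```

Pixels whose colors occur mostly inside the box score near 1 and background colors near 0, so such a map down-weights background regions by appearance rather than only by distance from the previous position, as a fixed Gaussian window does.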

Key words:

- target tracking
- Siamese network
- dual templates
- fast transformation learning model

Overview: Visual tracking is a fundamental and challenging task in computer vision: given the target information in the initial frame, a tracker predicts the target position in all subsequent frames. It has been widely used in intelligent surveillance, autonomous driving, military detection, and other fields. During tracking, the target usually undergoes scale change, motion blur, deformation, and occlusion. Most current discriminative trackers fall into two groups: correlation filter trackers, which use hand-crafted or CNN features, and Siamese network trackers. Siamese-network-based trackers have become an important class of methods in recent years and perform well in both speed and accuracy. However, most of them rely on a model trained offline and lack online updating. Guo et al. [11] proposed DSiam, which builds a dynamic Siamese network containing fast transformation learning models that learn the target's appearance changes and perform background suppression online during tracking. DSiam still has two shortcomings. First, it does not use the rich information in historical frames during tracking. Second, for background suppression it applies only a Gaussian weight map to the search region, which cannot effectively highlight the target and suppress the background. To solve these problems, we propose an online-learning-based visual tracking algorithm for Siamese networks. The main work is as follows:
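DSiam's fast transformation learning solves a regularized linear regression in closed form in the Fourier domain. The sketch below is a minimal single-channel NumPy version, assuming 2D circular convolution and a scalar regularizer named `reg` (both illustrative assumptions; the actual models operate on multi-channel CNN features). The same solver can, in principle, learn either the appearance-variation transform (first-frame template features to previous-frame target features) or the background-suppression transform (search features to weighted search features, where DSiam uses a Gaussian weight map).

```python
import numpy as np

def learn_transform(src, dst, reg=1e-2):
    """Closed-form ridge regression solved independently per frequency.

    Returns the spectrum of the filter V minimizing
    ||V (*) src - dst||^2 + reg * ||V||^2, where (*) is 2D circular convolution.
    """
    S = np.fft.fft2(src)
    D = np.fft.fft2(dst)
    return np.conj(S) * D / (np.abs(S) ** 2 + reg)

def apply_transform(V_hat, feat):
    """Circularly convolve a feature map with a learned transform (given as its spectrum)."""
    return np.real(np.fft.ifft2(V_hat * np.fft.fft2(feat)))

# Appearance-variation transform: map first-frame template features to the
# (here synthetically shifted) template features of the previous frame.
rng = np.random.default_rng(0)
first = rng.standard_normal((16, 16))
prev = np.roll(first, shift=(2, 3), axis=(0, 1))
V_hat = learn_transform(first, prev, reg=1e-6)
adapted = apply_transform(V_hat, first)
print(np.abs(adapted - prev).max())  # small: the filter reproduces the change
```

Because circular convolution becomes element-wise multiplication after the FFT, each frequency has its own scalar ridge solution, which is what makes the online update cheap enough to run every frame.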

- The algorithm adopts a dual-template scheme: the target in the first frame serves as a static template, and a high-confidence update strategy is used to obtain a dynamic template in subsequent frames.
- During online tracking, a fast transformation learning model learns the target's appearance changes from the two templates; meanwhile, a target likelihood probability map of the search region is computed from the color histogram features of the current frame and fused with the deep features for background suppression learning.
- Finally, the response maps obtained from the two templates are fused by weighting to obtain the final prediction.
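One frame of the dual-template scheme listed above can be sketched as follows. This is a hypothetical NumPy illustration, not the authors' implementation: features are single-channel 2D arrays, responses come from SiamFC-style cross-correlation, and the high-confidence test compares the peak value and the APCE (average peak-to-correlation energy, from ref. [16]) with their running means. The names and values of `weight_static`, `peak_ratio`, and `apce_ratio` are assumptions; whether the paper's α, β, and λ (Tables 1 and 2) play exactly these roles is not stated in this section.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def xcorr(template, search):
    """SiamFC-style dense cross-correlation ('valid' mode) of 2D feature maps."""
    windows = sliding_window_view(search, template.shape)  # (H-h+1, W-w+1, h, w)
    return np.einsum("ijkl,kl->ij", windows, template)

def apce(response):
    """Average peak-to-correlation energy, the confidence measure of LMCF [16]."""
    r_max, r_min = response.max(), response.min()
    return (r_max - r_min) ** 2 / (np.mean((response - r_min) ** 2) + 1e-12)

class DualTemplateTracker:
    """Hypothetical per-frame step: static first-frame template + dynamic template."""

    def __init__(self, first_template, weight_static=0.75, peak_ratio=0.8, apce_ratio=0.9):
        self.static = first_template
        self.dynamic = first_template.copy()
        self.weight_static = weight_static  # fusion weight of the static branch (assumed)
        self.peak_ratio = peak_ratio        # update thresholds relative to the running
        self.apce_ratio = apce_ratio        # means of past peaks / APCE values (assumed)
        self.peaks, self.apces = [], []

    def step(self, search):
        # Weighted fusion of the static- and dynamic-template response maps.
        fused = (self.weight_static * xcorr(self.static, search)
                 + (1.0 - self.weight_static) * xcorr(self.dynamic, search))
        pos = tuple(int(i) for i in np.unravel_index(np.argmax(fused), fused.shape))
        peak, conf = float(fused.max()), float(apce(fused))
        # High-confidence update: refresh the dynamic template only when both the
        # peak and the APCE are large relative to their historical means.
        confident = not self.peaks or (
            peak >= self.peak_ratio * np.mean(self.peaks)
            and conf >= self.apce_ratio * np.mean(self.apces))
        if confident:
            h, w = self.static.shape
            self.dynamic = search[pos[0]:pos[0] + h, pos[1]:pos[1] + w].copy()
        self.peaks.append(peak)
        self.apces.append(conf)
        return pos, bool(confident)

# Usage with a uniform 6x6 patch standing in for template features.
rng = np.random.default_rng(0)
tracker = DualTemplateTracker(np.ones((6, 6)))
frame1 = 0.1 * rng.standard_normal((20, 20))
frame1[5:11, 9:15] = 1.0                      # target's top-left corner at (5, 9)
print(tracker.step(frame1))                   # → ((5, 9), True)
frame2 = 0.1 * rng.standard_normal((20, 20))  # target absent, e.g. full occlusion
print(tracker.step(frame2)[1])                # → False
```

Gating the update on confidence keeps an occluded or drifting frame from overwriting the dynamic template, while the fixed static template anchors the fused response to the first-frame appearance.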

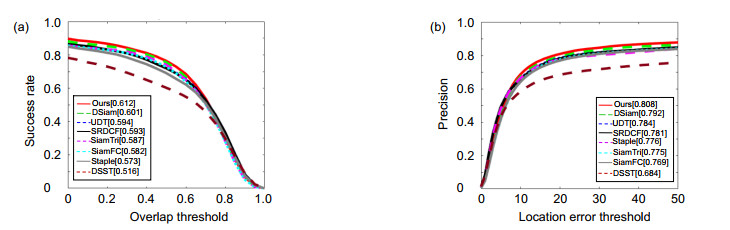

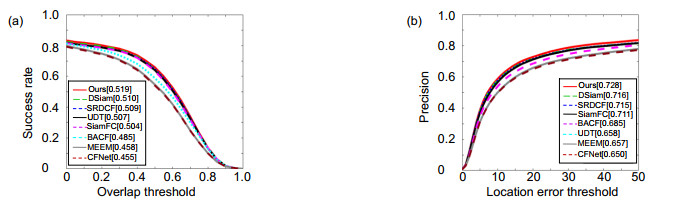

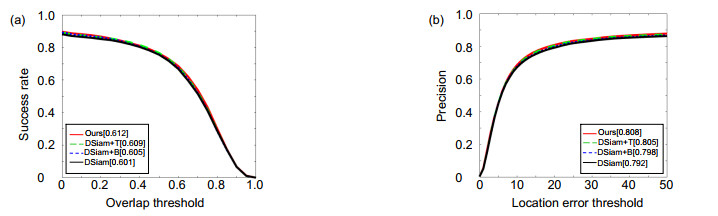

Experimental results on the OTB2015, TempleColor128, and VOT datasets show that the proposed algorithm improves on several mainstream algorithms of recent years and achieves better tracking performance in scenarios such as target deformation, similar-background interference, and fast motion.


Table 1. Influence of the values of parameters α and β on success rate (OTB2015)

| α \ β | 0.75 | 0.80 | 0.85 | 0.90 | 0.95 |
|---|---|---|---|---|---|
| 0.75 | 0.592 | 0.596 | 0.599 | 0.607 | 0.594 |
| 0.80 | 0.602 | 0.604 | 0.607 | 0.612 | 0.603 |
| 0.85 | 0.594 | 0.596 | 0.603 | 0.609 | 0.598 |
| 0.90 | 0.591 | 0.598 | 0.601 | 0.608 | 0.602 |
| 0.95 | 0.589 | 0.593 | 0.599 | 0.602 | 0.597 |

Table 2. Influence of the value of parameter λ on success rate (OTB2015)

| λ | 0.70 | 0.75 | 0.80 | 0.85 | 0.90 |
|---|---|---|---|---|---|
| Success rate | 0.609 | 0.612 | 0.610 | 0.606 | 0.604 |

Table 4. Comparison of tracking success rates of the algorithms under different attributes

| Algorithm | SV | OPR | IPR | OCC | DEF | FM | IV | BC | MB | OV | LR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ours | 0.598 | 0.615 | 0.589 | 0.583 | 0.572 | 0.601 | 0.582 | 0.571 | 0.603 | 0.578 | 0.618 |
| SiamFC | 0.551 | 0.556 | 0.579 | 0.564 | 0.507 | 0.570 | 0.552 | 0.515 | 0.545 | 0.470 | 0.582 |
| DSiam | 0.589 | 0.592 | 0.592 | 0.567 | 0.553 | 0.583 | 0.576 | 0.566 | 0.591 | 0.566 | 0.612 |
| SiamTri | 0.568 | 0.557 | 0.573 | 0.542 | 0.492 | 0.585 | 0.560 | 0.533 | 0.573 | 0.543 | 0.627 |
| UDT | 0.565 | 0.544 | 0.536 | 0.552 | 0.535 | 0.589 | 0.562 | 0.528 | 0.592 | 0.460 | 0.480 |
| Staple | 0.525 | 0.535 | 0.548 | 0.560 | 0.549 | 0.535 | 0.589 | 0.568 | 0.537 | 0.476 | 0.448 |
| DSST | 0.482 | 0.475 | 0.501 | 0.457 | 0.423 | 0.467 | 0.540 | 0.511 | 0.480 | 0.386 | 0.390 |
| SRDCF | 0.556 | 0.547 | 0.541 | 0.564 | 0.539 | 0.592 | 0.596 | 0.578 | 0.594 | 0.460 | 0.512 |

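Table 5. Comparison of tracking precision of the algorithms under different attributes

| Algorithm | SV | OPR | IPR | OCC | DEF | FM | IV | BC | MB | OV | LR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ours | 0.796 | 0.816 | 0.781 | 0.772 | 0.737 | 0.777 | 0.752 | 0.749 | 0.743 | 0.719 | 0.862 |
| SiamFC | 0.732 | 0.747 | 0.742 | 0.720 | 0.690 | 0.732 | 0.713 | 0.690 | 0.701 | 0.669 | 0.875 |
| DSiam | 0.784 | 0.796 | 0.770 | 0.751 | 0.726 | 0.754 | 0.740 | 0.741 | 0.731 | 0.708 | 0.854 |
| SiamTri | 0.752 | 0.752 | 0.739 | 0.714 | 0.718 | 0.761 | 0.713 | 0.695 | 0.714 | 0.723 | 0.859 |
| UDT | 0.743 | 0.756 | 0.753 | 0.732 | 0.703 | 0.740 | 0.724 | 0.701 | 0.715 | 0.677 | 0.852 |
| Staple | 0.731 | 0.725 | 0.759 | 0.726 | 0.732 | 0.708 | 0.737 | 0.722 | 0.701 | 0.668 | 0.682 |
| DSST | 0.654 | 0.650 | 0.501 | 0.457 | 0.543 | 0.584 | 0.690 | 0.681 | 0.480 | 0.478 | 0.581 |
| SRDCF | 0.739 | 0.571 | 0.742 | 0.735 | 0.726 | 0.758 | 0.781 | 0.761 | 0.757 | 0.597 | 0.744 |

Attribute abbreviations (OTB2015): SV, scale variation; OPR, out-of-plane rotation; IPR, in-plane rotation; OCC, occlusion; DEF, deformation; FM, fast motion; IV, illumination variation; BC, background clutter; MB, motion blur; OV, out of view; LR, low resolution.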

Table 6. Evaluation of different algorithms on VOT2015 in terms of accuracy and robustness

| Metric | Ours | DSiam | HCF | SRDCF | Struck | Staple | SiamFC | LDP |
|---|---|---|---|---|---|---|---|---|
| Accuracy | 0.65 | 0.59 | 0.45 | 0.56 | 0.47 | 0.53 | 0.52 | 0.51 |
| Robustness | 1.03 | 0.94 | 0.39 | 1.24 | 1.26 | 1.35 | 0.88 | 1.84 |
| EAO | 0.296 | 0.284 | 0.220 | 0.288 | 0.246 | 0.300 | 0.274 | 0.278 |

Table 7. Tracking speed of our method compared with other trackers

| Tracker | Ours | DSiam | SiamFC | SRDCF | MEEM | Struck | TADT | CFNet |
|---|---|---|---|---|---|---|---|---|
| Speed (FPS) | 29 | 45 | 58 | 5 | 10 | 20 | 33 | 41 |

[1] Hou Z Q, Han C Z. A survey of visual tracking[J]. Acta Automat Sin, 2006, 32(4): 603-617. https://www.cnki.com.cn/Article/CJFDTOTAL-MOTO200604016.htm

[2] Tang X M, Chen Z G, Fu Y. Anti-occlusion and re-tracking of real-time moving target based on kernelized correlation filter[J]. Opto-Electron Eng, 2020, 47(1): 190279. doi: 10.12086/oee.2020.190279

[3] Lu H C, Li P X, Wang D. Visual object tracking: a survey[J]. Patt Recog Artif Intell, 2018, 31(1): 61-76. https://www.cnki.com.cn/Article/CJFDTOTAL-MSSB201801008.htm

[4] Zhao C M, Chen Z B, Zhang J L. Research on target tracking based on convolutional networks[J]. Opto-Electron Eng, 2020, 47(1): 180668. doi: 10.12086/oee.2020.180668

[5] Bertinetto L, Valmadre J, Henriques J F, et al. Fully-convolutional Siamese networks for object tracking[C]//European Conference on Computer Vision, Cham, 2016: 850-865.

[6] Dong X P, Shen J B. Triplet loss in Siamese network for object tracking[C]//Proceedings of the European Conference on Computer Vision (ECCV), Cham, 2018.

[7] Wang Q, Gao J, Xing J L, et al. DCFNet: Discriminant correlation filters network for visual tracking[Z]. arXiv: 1704.04057v1, 2017.

[8] Li B, Yan J J, Wu W, et al. High performance visual tracking with Siamese region proposal network[C]//Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 2018: 8971-8980.

[9] Russakovsky O, Deng J, Su H, et al. ImageNet large scale visual recognition challenge[J]. Int J Comput Vis, 2015, 115(3): 211-252. doi: 10.1007/s11263-015-0816-y

[10] Real E, Shlens J, Mazzocchi S, et al. YouTube-BoundingBoxes: A large high-precision human-annotated data set for object detection in video[C]//Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 2017: 5296-5305.

[11] Guo Q, Feng W, Zhou C, et al. Learning dynamic Siamese network for visual object tracking[C]//Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 2017: 1763-1771.

[12] Kuai Y L, Wen G J, Li D D. Masked and dynamic Siamese network for robust visual tracking[J]. Inf Sci, 2019, 503: 169-182. doi: 10.1016/j.ins.2019.07.004

[13] Wu Y, Lim J, Yang M H. Object tracking benchmark[J]. IEEE Trans Patt Anal Mach Intellig, 2015, 37(9): 1834-1848.

[14] Liang P P, Blasch E, Ling H B. Encoding color information for visual tracking: Algorithms and benchmark[J]. IEEE Trans Image Process, 2015, 24(12): 5630-5644. doi: 10.1109/TIP.2015.2482905

[15] Kristan M, Matas J, Leonardis A, et al. The visual object tracking VOT2015 challenge results[C]//Proceedings of the 2015 IEEE International Conference on Computer Vision Workshops, Santiago, Chile, 2015: 1-23.

[16] Wang M M, Liu Y, Huang Z Y. Large margin object tracking with circulant feature maps[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 2017: 4021-4029.

[17] Hou Z Q, Chen L L, Yu W S, et al. Robust visual tracking algorithm based on Siamese network with dual templates[J]. J Electr Inf Technol, 2019, 41(9): 2247-2255. https://www.cnki.com.cn/Article/CJFDTOTAL-DZYX201909030.htm

[18] Possegger H, Mauthner T, Bischof H. In defense of color-based model-free tracking[C]//Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 2015: 2113-2120.

[19] Xie Y, Chen Y. Adaptive object tracking based on spatial attention mechanism[J]. Syst Eng Electr, 2019, 41(9): 1945-1954. https://www.cnki.com.cn/Article/CJFDTOTAL-XTYD201909005.htm

[20] Krizhevsky A, Sutskever I, Hinton G E. ImageNet classification with deep convolutional neural networks[C]//NIPS'12: Proceedings of the 25th International Conference on Neural Information Processing Systems, New York, NY, USA, 2012.

[21] Song Y B, Ma C, Gong L J, et al. CREST: Convolutional residual learning for visual tracking[C]//Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 2017: 2555-2564.

[22] Bertinetto L, Valmadre J, Golodetz S, et al. Staple: Complementary learners for real-time tracking[C]//Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 2016: 1401-1409.

[23] Wang N, Song Y B, Ma C, et al. Unsupervised deep tracking[C]//Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 2019: 1308-1317.

[24] Danelljan M, Häger G, Khan F S, et al. Learning spatially regularized correlation filters for visual tracking[C]// Proceedings of the 2015 IEEE International Conference on Computer Vision, Santiago, Chile, 2015: 4310-4318.

[25] Danelljan M, Häger G, Khan F S, et al. Accurate scale estimation for robust visual tracking[C]//British Machine Vision Conference, Nottingham, 2014.

[26] Zhang J M, Ma S G, Sclaroff S. MEEM: robust tracking via multiple experts using entropy minimization[C]//European Conference on Computer Vision, Cham, 2014: 188-203.

[27] Valmadre J, Bertinetto L, Henriques J, et al. End-to-end representation learning for correlation filter based tracking[C]//Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 2017: 2805-2813.

[28] Galoogahi H K, Fagg A, Lucey S. Learning background-aware correlation filters for visual tracking[C]//Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 2017: 1135-1143.

[29] Zhang Z P, Peng H W. Deeper and wider Siamese networks for real-time visual tracking[C]//Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 2019: 4591-4600.

[30] Li B, Wu W, Wang Q, et al. SiamRPN++: Evolution of Siamese visual tracking with very deep networks[C]//Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 2019: 4282-4291.

[31] Li X, Ma C, Wu B Y, et al. Target-aware deep tracking[C]//Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 2019: 1369-1378.
