RGB-D object recognition algorithm based on improved double stream convolution recursive neural network


摘要

为了提高基于图像的物体识别准确率,提出一种改进双流卷积递归神经网络的RGB-D物体识别算法(Re-CRNN)。将RGB图像与深度光学信息结合,基于残差学习对双流卷积神经网络(CNN)进行改进:增加顶层特征融合单元,在RGB图像和深度图像中学习联合特征,将提取的RGB和深度图像的高层次特征进行跨通道信息融合,继而使用Softmax生成概率分布。最后,使用标准数据集进行实验,结果表明,Re-CRNN算法的RGB-D物体识别准确率为94.1%,较现有基于图像的物体识别方法有显著的提升。

Abstract

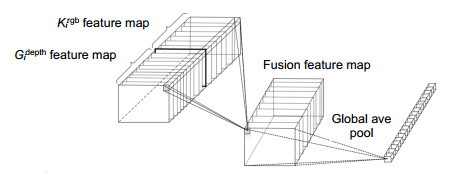

An algorithm (Re-CRNN) of image processing is proposed using RGB-D object recognition, which is improved based on a double stream convolutional recursive neural network, in order to improve the accuracy of object recognition. Re-CRNN combines RGB image with depth optical information, the double stream convolutional neural network (CNN) is improved based on the idea of residual learning as follows: top-level feature fusion unit is added into the network, the representation of federation feature is learning in RGB images and depth images and the high-level features are integrated in across channels of the extracted RGB images and depth images information, after that, the probability distribution was generated by Softmax. Finally, the experiment was carried out on the standard RGB-D data set. The experimental results show that the accuracy was 94.1% using Re-CRNN algorithm for the RGB-D object recognition, which was significantly improved compared with the existing image-based object recognition methods.


Key words: RGB-D image / structured light / object recognition / deep learning / depth image


Overview

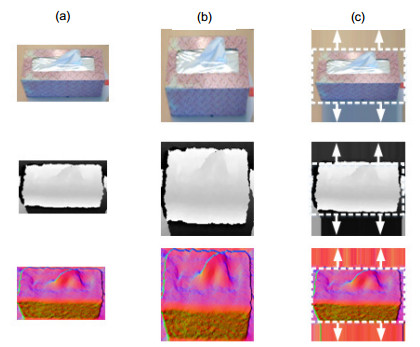

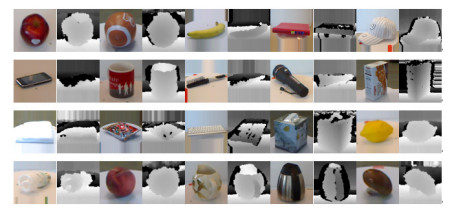

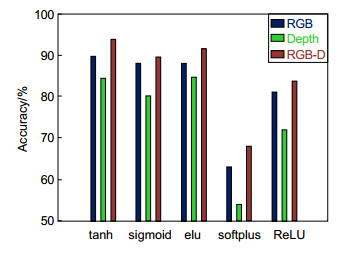

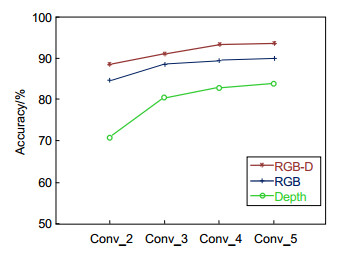

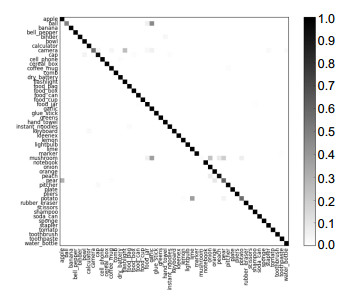

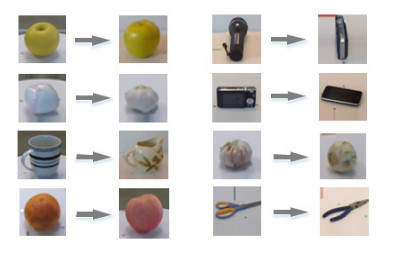

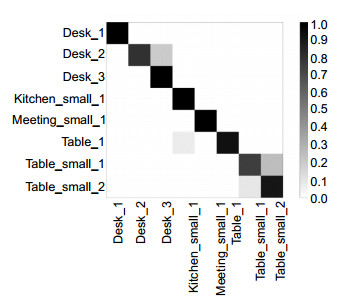

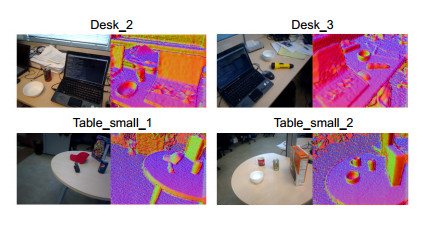

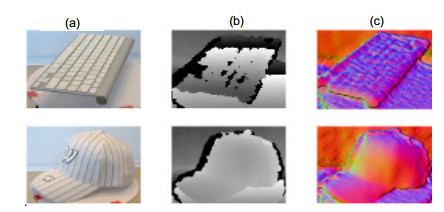

Overview: Object recognition from RGB images is easily affected by the external environment, and its accuracy has reached a bottleneck that makes it difficult to meet the requirements of practical applications. In recent years, combining RGB images with depth images has become a new way to improve recognition accuracy. An RGB image carries the color and texture features of an object, whereas a depth image carries its geometric features and is invariant to illumination, so fusing RGB and depth features can effectively improve recognition accuracy. Existing work tends to focus on single-modality recognition results and ignores the complementary strengths of RGB and depth images. To make full use of the latent feature information in RGB-D images, an RGB-D object recognition algorithm (Re-CRNN) based on an improved double-stream convolutional recursive neural network is proposed. The single-channel depth image is encoded into three channels by computing surface normals, and transfer learning is then used to train on the encoded image so that its features are at the same level as those of the RGB image. The backbone is a double-stream convolutional neural network improved with residual learning, which is introduced to optimize the network structure and reduce model complexity. The two streams use the same parameters and are trained separately on RGB and depth images to extract the high-level features of each modality. A feature fusion unit is added at the top of the network, where the extracted high-level RGB and depth features are fused across channels and mapped to a common space.
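The depth-encoding step can be illustrated with a minimal sketch. It assumes that normals are estimated from finite differences of the depth map and that each normal component is rescaled from [-1, 1] to [0, 255]; the function name `depth_to_normal_image` and these numerical choices are illustrative assumptions, not details given in the text.

```python
import math


def depth_to_normal_image(depth):
    """Encode a single-channel depth map (list of rows) as a 3-channel image.

    Assumption (not specified in the source): the surface normal at each
    pixel is n = (-dz/dx, -dz/dy, 1) / ||n||, with the derivatives taken by
    finite differences, and each component is mapped from [-1, 1] to [0, 255].
    """
    h, w = len(depth), len(depth[0])
    encoded = []
    for y in range(h):
        row = []
        for x in range(w):
            # Central differences in the interior, one-sided at the borders.
            x0, x1 = max(x - 1, 0), min(x + 1, w - 1)
            y0, y1 = max(y - 1, 0), min(y + 1, h - 1)
            dzdx = (depth[y][x1] - depth[y][x0]) / max(x1 - x0, 1)
            dzdy = (depth[y1][x] - depth[y0][x]) / max(y1 - y0, 1)
            nx, ny, nz = -dzdx, -dzdy, 1.0
            norm = math.sqrt(nx * nx + ny * ny + nz * nz)
            # Rescale every unit-normal component to an 8-bit channel value.
            row.append(tuple(round((c / norm + 1.0) * 127.5) for c in (nx, ny, nz)))
        encoded.append(row)
    return encoded
```

The resulting three-channel image has the same layout as an RGB image, which is what allows a network pre-trained on RGB data to be reused for the depth stream through transfer learning.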
Next, a recursive neural network extracts further features from the fused representation to generate a new feature sequence, which is classified by a Softmax classifier. Finally, experiments on the standard RGB-D data set compare the effects of different squashing (activation) functions, as well as the results of fusing at different convolutional layers. The results show that recognition accuracy on RGB-D images is higher than on RGB images alone, and that fusing RGB and depth features further improves object recognition accuracy. The proposed algorithm achieves the best recognition results among the compared methods: its accuracy on the RGB-D data set reaches 94.1%, a clear improvement over existing methods.
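The top of the pipeline (cross-channel fusion, recursive feature extraction, Softmax classification) can be sketched as follows. This is a toy illustration under stated assumptions rather than the Re-CRNN implementation: fusion is modeled as concatenation followed by a linear projection into a shared space, the recursive network merges adjacent feature vectors pairwise with shared weights and a tanh squashing function, and all names (`fuse`, `recursive_merge`, `classify`, `W_fuse`, `W_rec`, `W_cls`) are hypothetical.

```python
import math
import random


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def fuse(rgb_vec, depth_vec, W_fuse):
    """Assumed fusion: concatenate both modalities, project to a shared space."""
    return matvec(W_fuse, rgb_vec + depth_vec)


def recursive_merge(vectors, W_rec):
    """Merge adjacent pairs with shared weights and tanh until one vector is left."""
    while len(vectors) > 1:
        merged = [
            [math.tanh(a) for a in matvec(W_rec, vectors[i] + vectors[i + 1])]
            for i in range(0, len(vectors) - 1, 2)
        ]
        if len(vectors) % 2:  # carry an unpaired vector to the next level
            merged.append(vectors[-1])
        vectors = merged
    return vectors[0]


def softmax(logits):
    """Numerically stable Softmax producing a probability distribution."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(rgb_feats, depth_feats, W_fuse, W_rec, W_cls):
    """Fuse per-location features, summarize them recursively, then classify."""
    fused = [fuse(r, d, W_fuse) for r, d in zip(rgb_feats, depth_feats)]
    summary = recursive_merge(fused, W_rec)
    return softmax(matvec(W_cls, summary))


if __name__ == "__main__":
    rng = random.Random(0)  # random weights stand in for trained parameters
    d, n_classes, n_locations = 3, 4, 4

    def rand(rows, cols):
        return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

    probs = classify(rand(n_locations, d), rand(n_locations, d),
                     rand(d, 2 * d), rand(d, 2 * d), rand(n_classes, d))
    print(probs)
```

Sharing `W_rec` across every merge is what makes the network recursive: the same small set of weights is applied at each level of the tree, so the number of parameters does not grow with the number of fused feature vectors.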


Table 1. Comparison of feature fusion methods

| Method | Category accuracy/% | Instance accuracy/% |
| --- | --- | --- |
| Fc-RGB-D+Softmax | 93.2 | 96.8 |
| Fu-RGB-D+Softmax | 93.3 | 97.1 |
| Re-CRNN | 94.1 | 98.5 |

Table 2. Comparison with other methods

| Method | Category accuracy/% (RGB) | Category accuracy/% (Depth) | Category accuracy/% (RGB-D) | Instance accuracy/% (RGB) | Instance accuracy/% (Depth) | Instance accuracy/% (RGB-D) |
| --- | --- | --- | --- | --- | --- | --- |
| Bo et al[3] | 82.4±3.1 | 81.2±2.3 | 87.5±2.9 | 92.1 | 51.7 | 92.8 |
| CNN-RNN[7] | 82.9±4.6 | 60.4±5.6 | 86.8±3.3 | - | - | - |
| HCAE-ELM[8] | 84.3±3.2 | 82.9±2.1 | 90.2±1.5 | - | - | - |
| CNN-features[19] | 83.1±2.0 | - | 89.4±1.3 | 92.0 | 45.5 | 94.1 |
| Fus-CNN[9] | 84.1±2.7 | 83.8±2.7 | 91.3±1.4 | - | - | - |
| MM-LRF-ELM[11] | 84.3±3.2 | 82.9±2.5 | 89.6±2.5 | 91.0 | 50.9 | 92.5 |
| Andreas et al[10] | 89.5±1.9 | 84.5±2.9 | 93.5±1.1 | - | - | - |
| STEM-CaRFs[12] | 88.8±2.0 | 80.8±2.1 | 92.2±1.3 | 97.0 | 56.3 | 97.6 |
| Re-CRNN | 90.3±1.8 | 84.3±2.2 | 94.1±0.9 | 97.5 | 58.7 | 98.5 |

References

[1] Lai K, Bo L F, Ren X F, et al. A large-scale hierarchical multi-view RGB-D object dataset[C]//Proceedings of 2011 IEEE International Conference on Robotics and Automation, 2011: 1817-1824.

[2] Paulk D, Metsis V, McMurrough C, et al. A supervised learning approach for fast object recognition from RGB-D data[C]//Proceedings of the 7th International Conference on PErvasive Technologies Related to Assistive Environments, 2014: 5.

[3] Bo L F, Ren X F, Fox D. Depth kernel descriptors for object recognition[C]//Proceedings of 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, 2011: 821-826.

[4] Blum M, Springenberg J T, Wülfing J, et al. A learned feature descriptor for object recognition in RGB-D data[C]//Proceedings of 2012 IEEE International Conference on Robotics and Automation, 2012: 1298-1303.

[5] Xiang C Y. Research on feature extraction and classification method of RGB-D images[D]. Xiangtan: Xiangtan University, 2017: 28-31.

[6] Li X, Li L P, Nan K K, et al. Face recognition method of smart home mobile robot[J]. J Xi'an Poly Univ, 2020, 34(1): 61-66. https://www.cnki.com.cn/Article/CJFDTOTAL-XBFZ202001010.htm

[7] Socher R, Huval B, Bhat B, et al. Convolutional-recursive deep learning for 3D object classification[C]//Proceedings of the 25th International Conference on Neural Information Processing Systems, 2012: 665-673.

[8] Yin Y H, Li H F. RGB-D object recognition based on hybrid convolutional auto-encoder extreme learning machine[J]. Infrared Laser Eng, 2018, 47(2): 0203008. https://www.cnki.com.cn/Article/CJFDTOTAL-HWYJ201802009.htm

[9] Eitel A, Springenberg J T, Spinello L, et al. Multimodal deep learning for robust RGB-D object recognition[C]//Proceedings of 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2015: 681-687.

[10] Aakerberg A, Nasrollahi K, Rasmussen C B, et al. Depth value pre-processing for accurate transfer learning based RGB-D object recognition[C]//Proceedings of the International Joint Conference on Computational Intelligence, 2017: 121-128.

[11] Liu H P, Li F X, Xu X Y, et al. Multi-modal local receptive field extreme learning machine for object recognition[J]. Neurocomputing, 2018, 277: 4-11. doi: 10.1016/j.neucom.2017.04.077

[12] Asif U, Bennamoun M, Sohel F A. RGB-D object recognition and grasp detection using hierarchical cascaded forests[J]. IEEE Trans Robot, 2017, 33(3): 547-564. doi: 10.1109/TRO.2016.2638453

[13] Li L H, Wang Y X. Efficient 3D dense residual network and its application in human action recognition[J]. Opto-Electron Eng, 2020, 47(2): 190139. doi: 10.12086/oee.2020.190139

[14] Cheng Y H, Cai R, Zhao X, et al. Convolutional fisher kernels for RGB-D object recognition[C]//Proceedings of 2015 International Conference on 3D Vision, 2015: 135-143.

[15] Szegedy C, Liu W, Jia Y Q, et al. Going deeper with convolutions[C]//Proceedings of 2015 IEEE Conference on Computer Vision and Pattern Recognition, 2015: 1-9.

[16] Li X, Zhao Z F, Liu L, et al. An optimization model of multi-intersection signal control for trunk road under collaborative information[J]. J Control Sci Eng, 2017, 2017: 2846987. http://www.researchgate.net/publication/317158890_An_Optimization_Model_of_Multi-Intersection_Signal_Control_for_Trunk_Road_under_Collaborative_Information

[17] Shen M Y, Yu P F, Wang R G, et al. Image superresolution via multipath recursive convolutional network[J]. Opto-Electron Eng, 2019, 46(11): 180489. doi: 10.12086/oee.2019.180489

[18] Ren X F, Bo L F, Fox D. RGB-(D) scene labeling: features and algorithms[C]//Proceedings of 2012 IEEE Conference on Computer Vision and Pattern Recognition, 2012: 2759-2766.

[19] Schwarz M, Schulz H, Behnke S. RGB-D object recognition and pose estimation based on pre-trained convolutional neural network features[C]//Proceedings of 2015 IEEE International Conference on Robotics and Automation (ICRA), 2015: 1329-1335.

[20] Xiang C Y, Wang D L, Zhou Y, et al. Image classification based on RGB-D fusion feature[J]. Comput Eng Appl, 2018, 54(8): 178-182, 254. https://www.cnki.com.cn/Article/CJFDTOTAL-JSGG201808029.htm
