EEG emotion recognition based on linear kernel PCA and XGBoost

Abstract:

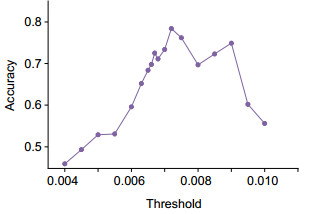

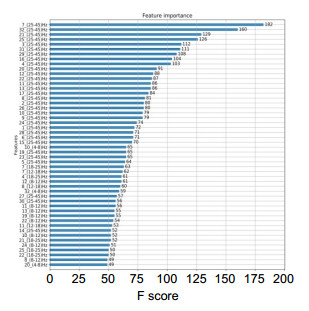

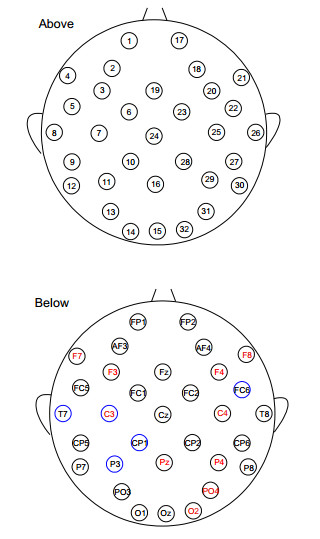

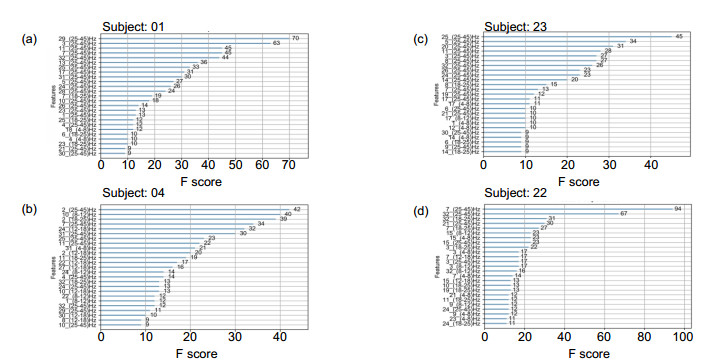

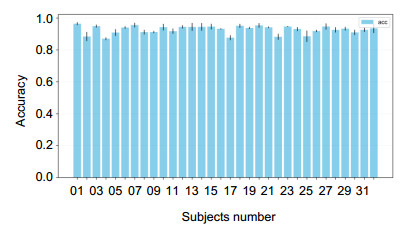

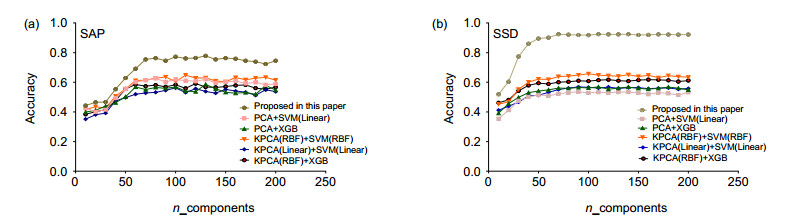

Abstract: Linear-kernel principal component analysis and the extreme gradient boosting (XGBoost) model are introduced to design an electroencephalogram (EEG) algorithm that classifies four emotional states under continuous audio-visual stimulation. To keep the method broadly applicable, the traditional power spectral density (PSD) is used as the EEG feature, and XGBoost training yields a feature-importance measure under the weight metric. Linear-kernel principal component analysis is then applied to the important features selected by a threshold, and the result is fed into the XGBoost model for recognition. The experimental analysis shows that the gamma band contributes more to XGBoost recognition than the other bands; in terms of channel distribution, the central, parietal, and right occipital regions play a more important role than other brain regions. The algorithm reaches recognition accuracies of 78.4% under the subjects all participation (SAP) scheme and 92.6% under the subject single dependent (SSD) scheme, outperforming the algorithms from the compared literature. The proposed scheme can help improve the recognition performance of brain-computer emotion systems under audio-visual stimulation.
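
The PSD feature step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: it assumes the 128 Hz sampling rate of the preprocessed DEAP data [33], a Welch estimate with 2 s Hann windows, and theta/alpha/beta/gamma band edges chosen here for illustration. The function `psd_band_features` and the `BANDS` table are hypothetical names. Each channel contributes one mean PSD value per band, so 32 EEG channels and 4 bands would give a 128-dimensional feature vector per trial or segment.

```python
import numpy as np
from scipy.signal import welch

# Illustrative band edges in Hz (an assumption, not taken from the paper).
BANDS = {"theta": (4, 8), "alpha": (8, 14), "beta": (14, 31), "gamma": (31, 45)}


def psd_band_features(eeg, fs=128.0, bands=BANDS):
    """Mean Welch PSD per (channel, band), flattened channel-major.

    eeg: array of shape (n_channels, n_samples) for one trial or segment.
    Returns a 1-D vector of length n_channels * len(bands).
    """
    # Welch estimate with 2 s segments; SciPy uses a Hann window by default.
    freqs, pxx = welch(eeg, fs=fs, nperseg=int(2 * fs), axis=-1)
    per_band = []
    for lo, hi in bands.values():
        mask = (freqs >= lo) & (freqs < hi)
        per_band.append(pxx[:, mask].mean(axis=-1))  # one value per channel
    # (n_channels, n_bands) -> feature index = channel * n_bands + band
    return np.stack(per_band, axis=1).reshape(-1)
```

Keeping the channel-major layout makes it easy to map a feature index back to its channel and band, which is what a per-band or per-region importance analysis needs. Log-transforming the band powers is a common variant; the choice here is illustrative.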

Overview: Affective computing aims to build a harmonious human-computer environment by giving computers the ability to recognize and understand emotions. Research in affective computing now spans facial expression recognition, speech recognition, text analysis, gesture, and physiological signals, and applications in these fields provide more humane and emotionally aware interfaces across many areas of daily life. As the most direct physiological expression of the central nervous system, EEG carries rich emotional information. Compared with these other modalities, the emotional information in EEG is more genuine and more reliable as a reference, and it is difficult to mask through conscious control.

To distinguish emotional states accurately from EEG signals, and to use the corresponding models to explore the emotion-related frequency bands and brain regions, this paper introduces linear-kernel principal component analysis and the XGBoost model to design an EEG classification algorithm for four emotional states under continuous audio-visual stimulation. XGBoost offers high speed, low computational complexity, easy parameter tuning, strong controllability, and high recognition performance, and it has performed well as a recognition and prediction model in industry, machine learning, and many data science competitions. In addition, during training XGBoost can measure the importance of each feature according to a chosen feature-importance metric, which makes the role of individual features in recognition more transparent. First, to keep the method broadly applicable, the traditional power spectral density (PSD) is used as the EEG feature, and the feature importance under the weight metric is obtained from XGBoost training. Next, linear-kernel principal component analysis is applied to the important features retained by thresholding, projecting them into a new space intended to make them more separable. Finally, the processed features are fed into the XGBoost model for recognition. The experimental analysis shows that the gamma band contributes more to XGBoost recognition than the other bands, and that, in terms of channel distribution, the central, parietal, and right occipital regions play a more important role than other brain regions. The algorithm achieves recognition accuracies of 78.4% under the subjects all participation (SAP) scheme and 92.6% under the subject single dependent (SSD) scheme, outperforming the algorithms from the compared studies listed in Table 1. The proposed scheme can therefore help improve the recognition performance of brain-computer emotion systems under audio-visual stimulation.
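
The importance-threshold and linear-kernel PCA steps can be sketched in NumPy. This is a hypothetical illustration, not the paper's implementation. `select_by_weight` assumes an importance dictionary shaped like the output of XGBoost's `Booster.get_score(importance_type="weight")`, where "weight" counts how many times a feature is used to split across all trees, keys default to `f0`, `f1`, ... and features never used in a split are absent; the threshold value is an assumption. `linear_kernel_pca` centers the Gram matrix of the linear kernel and eigendecomposes it. One property worth keeping in mind: with a linear kernel, centered kernel PCA gives the same training projections as ordinary PCA on the centered features, up to the sign of each component.

```python
import numpy as np


def select_by_weight(importance, n_features, threshold):
    """Indices of features whose "weight" importance is >= threshold.

    importance: dict such as {"f0": 12, "f5": 3}; features that were never
    used in a split are missing and are treated as 0.
    """
    scores = np.array([importance.get(f"f{i}", 0) for i in range(n_features)])
    return np.flatnonzero(scores >= threshold)


def linear_kernel_pca(X, n_components):
    """Kernel PCA with k(x, y) = x . y; returns projections of the rows of X."""
    n = X.shape[0]
    K = X @ X.T                                # Gram matrix of the linear kernel
    H = np.eye(n) - np.full((n, n), 1.0 / n)   # centering matrix
    Kc = H @ K @ H                             # kernel centered in feature space
    eigvals, eigvecs = np.linalg.eigh(Kc)      # eigenvalues in ascending order
    top = np.argsort(eigvals)[::-1][:n_components]
    lam = np.clip(eigvals[top], 0.0, None)     # guard against tiny negatives
    # Projection of training point i on component k = v_ik * sqrt(lambda_k).
    return eigvecs[:, top] * np.sqrt(lam)


# Hypothetical usage: keep features used in at least 5 splits, then project.
# X_sel = X[:, select_by_weight(scores, X.shape[1], threshold=5)]
# Z = linear_kernel_pca(X_sel, n_components=10)
```

This sketch only projects the training rows. For held-out trials an out-of-sample projection is also needed; scikit-learn's `KernelPCA(kernel="linear")` provides `fit` and `transform` for that.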

Table 1. Comparison of recognition performance for various algorithms

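| Algorithm | SAP Acc/% | SAP f1_weighted/% | SSD Acc/% | SSD f1_weighted/% |
|---|---|---|---|---|
| SVM[33] | n/a | n/a | 57.6/62.0 (V/A) | n/a |
| XGB | 56.832 | 56.499 | 57.423 | 52.822 |
| SVM | 52.360 | 51.152 | 50.970 | 43.315 |
| MLP | 52.742 | 51.800 | 48.642 | 39.806 |
| RF | 58.799 | 58.243 | 55.394 | 51.996 |
| LR | 40.911 | 38.508 | 55.767 | 52.307 |
| PCA+SVM[17] | n/a | n/a | 68.3 | n/a |
| SAE+LSTM[21] | 76.82 | n/a | n/a | n/a |
| Lasso+SVM[23] | n/a | n/a | 87.15/86.60 (V/A) | n/a |
| DE+GELM[19] | 69.67 | n/a | n/a | n/a |
| Ours | 78.376 | 77.848 | 92.583 | 92.539 |

V/A: valence and arousal results reported separately. n/a: not reported.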
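
The Acc and f1_weighted columns can be computed as below. The column name appears to follow scikit-learn's `f1_weighted` scoring convention: per-class F1 scores averaged with weights equal to each class's number of true samples, which matters when the four emotion classes are imbalanced. This NumPy sketch assumes the classes are labeled 0 to 3; the function name `acc_and_weighted_f1` is hypothetical.

```python
import numpy as np


def acc_and_weighted_f1(y_true, y_pred, n_classes=4):
    """Accuracy and support-weighted F1, both in percent.

    Labels are assumed to be integers 0 .. n_classes - 1.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    acc = np.mean(y_true == y_pred)
    weighted = 0.0
    for c in range(n_classes):
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom > 0 else 0.0  # F1 = 2PR / (P + R)
        weighted += np.sum(y_true == c) * f1       # weight by class support
    return 100.0 * acc, 100.0 * weighted / len(y_true)


acc, f1w = acc_and_weighted_f1([0, 0, 1, 1, 2, 2, 3, 3], [0, 1, 1, 1, 2, 0, 3, 3])
# acc = 75.0, f1w ≈ 74.17 (per-class F1: 0.5, 0.8, 0.667, 1.0)
```

Because each class is weighted by its support, f1_weighted can fall below accuracy when errors concentrate in some classes, as in several SSD rows of the table.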

[1] Vilar P. Designing the user interface: strategies for effective human-computer interaction (5th edition)[J]. J Assoc Inf Sci Technol, 2014, 65(5): 1073–1074.

[2] Andreasson R, Alenljung B, Billing E, et al. Affective touch in human–robot interaction: conveying emotion to the Nao robot[J]. Int J Soc Robot, 2018, 10(3): 473–491. doi: 10.1007/s12369-017-0446-3

[3] Ren F J, Sun X. Present situation and development of intelligent robots[J]. Sci Technol Rev, 2015, 33(21): 32–38. https://www.cnki.com.cn/Article/CJFDTOTAL-KJDB201521011.htm

[4] Fragopanagos N, Taylor J G. Emotion recognition in human-computer interaction[J]. Neural Netw, 2005, 18(4): 389–405. doi: 10.1016/j.neunet.2005.03.006

[5] Wang X H, Li R J, Hu M, et al. Occluded facial expression recognition based on the fusion of local features[J]. J Image Graph, 2016, 21(11): 1473–1482. doi: 10.11834/jig.20161107

[6] Ren F J, Huang Z. Automatic facial expression learning method based on humanoid robot XIN-REN[J]. IEEE Trans Hum Mach Syst, 2016, 46(6): 810–821. doi: 10.1109/THMS.2016.2599495

[7] Zhang S Q, Li L M, Zhao Z J. Speech emotion recognition based on an improved supervised manifold learning algorithm[J]. J Electron Inf Technol, 2010, 32(11): 2724–2729. https://www.cnki.com.cn/Article/CJFDTOTAL-DZYX201011034.htm

[8] Piana S, Staglianò A, Odone F, et al. Adaptive body gesture representation for automatic emotion recognition[J]. ACM Trans Interact Intell Syst, 2016, 6(1): 6. http://dl.acm.org/doi/10.1145/2818740

[9] Sun X, Peng X Q, Hu M, et al. Extended multi-modality features and deep learning based microblog short text sentiment analysis[J]. J Electron Inf Technol, 2017, 39(9): 2048–2055. https://www.cnki.com.cn/Article/CJFDTOTAL-DZYX201709003.htm

[10] Ren F J, Wang L. Sentiment analysis of text based on three-way decisions[J]. J Intell Fuzzy Syst, 2017, 33(1): 245–254. doi: 10.3233/JIFS-161522

[11] Zhao G Z, Song J J, Ge Y, et al. Advances in emotion recognition based on physiological big data[J]. J Comput Res Dev, 2016, 53(1): 80–92. https://www.cnki.com.cn/Article/CJFDTOTAL-JFYZ201601009.htm

[12] Petrantonakis P C, Hadjileontiadis L J. Adaptive emotional information retrieval from EEG signals in the time-frequency domain[J]. IEEE Trans Signal Process, 2012, 60(5): 2604–2616. doi: 10.1109/TSP.2012.2187647

[13] Lin Y P, Wang C H, Jung T P, et al. EEG-based emotion recognition in music listening[J]. IEEE Trans Biomed Eng, 2010, 57(7): 1798–1806. doi: 10.1109/TBME.2010.2048568

[14] Yin Z, Wang Y X, Liu L, et al. Cross-subject EEG feature selection for emotion recognition using transfer recursive feature elimination[J]. Front Neurorobot, 2017, 11: 19. http://europepmc.org/abstract/MED/28443015

[15] Jenke R, Peer A, Buss M. Feature extraction and selection for emotion recognition from EEG[J]. IEEE Trans Affect Comput, 2014, 5(3): 327–339. doi: 10.1109/TAFFC.2014.2339834

[16] Li X, Song D W, Zhang P, et al. Exploring EEG features in cross-subject emotion recognition[J]. Front Neurosci, 2018, 12: 162. doi: 10.3389/fnins.2018.00162

[17] Li Y J, Zhong N, Huang J J, et al. Human emotion multi-classification recognition based on the EEG time and frequency features by using a Gaussian kernel function SVM[J]. J Beijing Univ Technol, 2018, 44(2): 234–243. https://www.cnki.com.cn/Article/CJFDTOTAL-BJGD201802012.htm

[18] Li X, Tian Y X, Hou Y J, et al. Applications of wavelet transform combining empirical mode decomposition in EEG analysis with music intervention[J]. J Biomed Eng, 2016, 33(4): 762–769. https://www.cnki.com.cn/Article/CJFDTOTAL-SWGC201604024.htm

[19] Zheng W L, Zhu J Y, Lu B L. Identifying stable patterns over time for emotion recognition from EEG[J]. IEEE Trans Affect Comput, 2019, 10(3): 417–429. doi: 10.1109/TAFFC.2017.2712143

[20] Li X, Cai E J, Tian Y X, et al. An improved electroencephalogram feature extraction algorithm and its application in emotion recognition[J]. J Biomed Eng, 2017, 34(4): 510–517, 528. https://www.cnki.com.cn/Article/CJFDTOTAL-SWGC201704004.htm

[21] Li Y J, Huang J J, Wang H Y, et al. Study of emotion recognition based on fusion multi-modal bio-signal with SAE and LSTM recurrent neural network[J]. J Commun, 2017, 38(12): 109–120. https://www.cnki.com.cn/Article/CJFDTOTAL-TXXB201712011.htm

[22] Chen J X, Zhang P W, Mao Z J, et al. Accurate EEG-based emotion recognition on combined features using deep convolutional neural networks[J]. IEEE Access, 2019, 7: 44317–44328. http://ieeexplore.ieee.org/document/8676231

[23] Guo J L, Fang F, Wang W, et al. EEG emotion recognition based on sparse group lasso-granger causality feature[J]. Pattern Recognit Artif Intell, 2018, 31(10): 941–949. https://www.cnki.com.cn/Article/CJFDTOTAL-MSSB201810011.htm

[24] Atkinson J, Campos D. Improving BCI-based emotion recognition by combining EEG feature selection and kernel classifiers[J]. Expert Syst Appl, 2016, 47: 35–41. http://www.sciencedirect.com/science/article/pii/S0957417415007538

[25] Gupta R, Laghari K U R, Falk T H. Relevance vector classifier decision fusion and EEG graph-theoretic features for automatic affective state characterization[J]. Neurocomputing, 2016, 174: 875–884. http://www.sciencedirect.com/science/article/pii/S0925231215014186

[26] Chen T Q, Guestrin C. XGBoost: a scalable tree boosting system[C]//Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 2016: 785–794.

[27] Zhang A W, Dong Z, Kang X Y. Feature selection algorithms of airborne LIDAR combined with hyperspectral images based on XGBoost[J]. Chin J Lasers, 2019, 46(4): 0404003. https://www.cnki.com.cn/Article/CJFDTOTAL-JJZZ201904020.htm

[28] Zheng H T, Yuan J B, Chen L. Short-term load forecasting using EMD-LSTM neural networks with a XGBoost algorithm for feature importance evaluation[J]. Energies, 2017, 10(8): 1168. http://www.researchgate.net/publication/318996236_Short-Term_Load_Forecasting_Using_EMD-LSTM_Neural_Networks_with_a_Xgboost_Algorithm_for_Feature_Importance_Evaluation

[29] Chakraborty D, Elzarka H. Advanced machine learning techniques for building performance simulation: a comparative analysis[J]. J Build Perform Simul, 2019, 12(2): 193–207. doi: 10.1080/19401493.2018.1498538

[30] Luo Y N, Zou J, Yao C F, et al. HSI-CNN: a novel convolution neural network for hyperspectral image[C]//2018 International Conference on Audio, Language and Image Processing (ICALIP), 2018.

[31] Ayumi V. Pose-based human action recognition with Extreme Gradient Boosting[C]//2016 IEEE Student Conference on Research and Development (SCOReD), 2016.

[32] Zhong J C, Sun Y S, Peng W, et al. XGBFEMF: an XGBoost-based framework for essential protein prediction[J]. IEEE Trans NanoBioscience, 2018, 17(3): 243–250. http://ieeexplore.ieee.org/abstract/document/8370098/

[33] Koelstra S, Muhl C, Soleymani M, et al. DEAP: a database for emotion analysis; using physiological signals[J]. IEEE Trans Affect Comput, 2012, 3(1): 18–31. http://doi.ieeecomputersociety.org/10.1109/T-AFFC.2011.15
