Multi-occluded pedestrian real-time detection algorithm based on preprocessing R-FCN


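Abstract (translated from the Chinese)

A major challenge for current driver assistance systems is detecting multi-occluded pedestrians in real time in complex scenes, so as to reduce traffic accidents. To improve the detection accuracy and speed of the system, a real-time multi-occluded pedestrian detection algorithm based on an improved region-based fully convolutional network (R-FCN) is proposed. On top of R-FCN, a region of interest (RoI) Align layer is introduced to resolve the misalignment between RoIs on the feature map and on the original image; the separable convolution layer is improved to reduce the dimensionality of the R-FCN position-sensitive score maps and raise detection speed. To address pedestrian occlusion, a multi-scale context algorithm is proposed that uses a local competition mechanism for adaptive context scale selection; to address the low visibility of occluded body parts, a deformable RoI pooling layer is introduced to enlarge the pooled area over body parts. Finally, to reduce redundant pedestrian information in video sequences, the sequence non-maximum suppression (Seq-NMS) algorithm replaces the traditional non-maximum suppression (NMS) algorithm. The algorithm yields low detection error on the benchmark datasets Caltech and ETH, exceeds the accuracy of current detection algorithms on those datasets, and is suited to detecting occluded pedestrians.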

Abstract

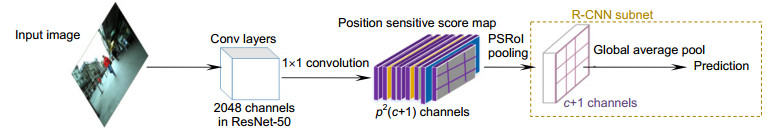

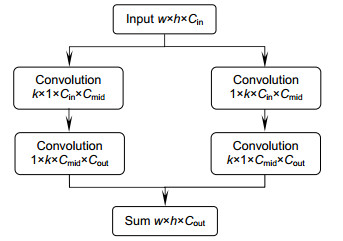

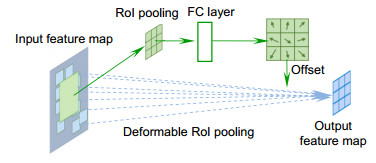

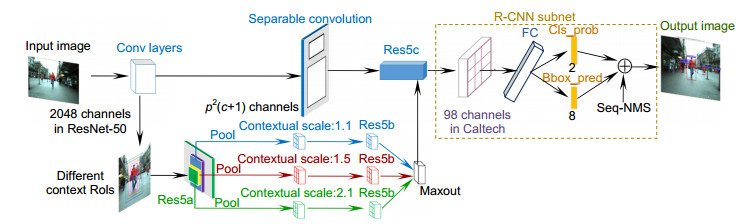

One of the main challenges for driver assistance systems is detecting multi-occluded pedestrians in real time in complicated scenes, so as to reduce the number of traffic accidents. To improve the accuracy and speed of the detection system, we propose a real-time multi-occluded pedestrian detection algorithm based on an improved R-FCN. An RoI Align layer is introduced to resolve the misalignment between RoIs on the feature map and on the original image. A separable convolution is improved to reduce the dimensions of the position-sensitive score maps and thereby raise detection speed. For occluded pedestrians, a multi-scale context algorithm is proposed, which adopts a local competition mechanism for adaptive context scale selection. To address the low visibility of occluded body parts, deformable RoI pooling layers are introduced to expand the pooled area over body parts. Finally, to reduce redundant information in video sequences, the Seq-NMS algorithm replaces the traditional NMS algorithm. Experiments show low detection error on the Caltech and ETH datasets; the accuracy of our algorithm is better than that of the other detection algorithms evaluated on these datasets, and it works particularly well for occluded pedestrians.


Overview

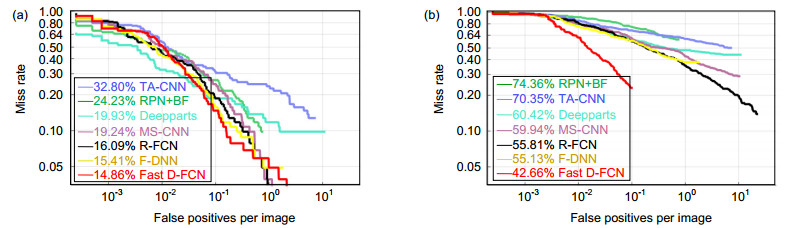

Pedestrian detection is a research hotspot in pattern recognition and machine learning. It is widely used in areas such as video surveillance, intelligent driving, and robot navigation. Automating pedestrian detection by computer can reduce the human workload to some extent. With the development of deep learning theory, convolutional neural networks have made remarkable progress in pedestrian detection by improving the generation strategy for candidate regions and by optimizing network structures and training methods. Unlike general object detection, a pedestrian is a moving, non-rigid target whose appearance changes with occlusion and height. Methods based on feature extraction cannot meet industrial requirements, so we choose a method based on a convolutional neural network to achieve higher accuracy and real-time detection for multi-occluded pedestrians. The main task of pedestrian detection is to accurately output the position coordinates of pedestrians in different scenarios and to report the detection accuracy of the system. However, the complexity of the surrounding environment (multiple occlusions, weak illumination, etc.) greatly challenges the accuracy of a pedestrian detection system. Compared with non-occluded pedestrians, multi-occluded pedestrians lose detection information more easily, which pushes the detection score below the threshold and leads to missed detections. To improve the detection accuracy and speed for multi-occluded pedestrians in complex scenes, we propose a fast deformable fully convolutional pedestrian detection network (called Fast D-FCN). Based on R-FCN, we introduce an RoI Align layer to resolve the misalignment between RoIs on the feature map and on the original image.
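The misalignment that RoI Align targets can be illustrated with a minimal sketch. It assumes a feature-map stride of 16 and a toy 4×4 feature map, neither of which comes from the paper; the function names are hypothetical. RoI pooling would round a fractional coordinate to an integer cell, whereas RoI Align keeps the fractional coordinate and reads the feature map by bilinear interpolation.

```python
import math

def bilinear_sample(feature, y, x):
    """Sample a 2-D feature map (list of rows) at a fractional (y, x) location."""
    h, w = len(feature), len(feature[0])
    # Clamp so that the four neighbouring cells always exist.
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = feature[y0][x0] * (1 - dx) + feature[y0][x1] * dx
    bottom = feature[y1][x0] * (1 - dx) + feature[y1][x1] * dx
    return top * (1 - dy) + bottom * dy

def roi_align_bin(feature, y_start, x_start, y_end, x_end, samples=2):
    """Average bilinear samples taken on a regular grid inside one RoI bin."""
    total = 0.0
    for i in range(samples):
        for j in range(samples):
            y = y_start + (i + 0.5) * (y_end - y_start) / samples
            x = x_start + (j + 0.5) * (x_end - x_start) / samples
            total += bilinear_sample(feature, y, x)
    return total / (samples * samples)

# Toy feature map (assumption): value = 4 * row + column.
feature = [[float(r * 4 + c) for c in range(4)] for r in range(4)]

stride = 16            # assumed feature stride, for illustration only
coord = 20 / stride    # an RoI edge at pixel 20 maps to 1.25, not to 1
quantized = feature[int(coord)][int(coord)]      # what rounding down would read
aligned = bilinear_sample(feature, coord, coord)  # what RoI Align reads
bin_value = roi_align_bin(feature, 0.5, 0.5, 2.5, 2.5)
```

In this toy case the quantized read and the interpolated read differ, which is the kind of offset between feature-map RoIs and image RoIs that the alignment layer is meant to remove.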
To improve detection speed, we improve a separable convolution to reduce the dimensions of the position-sensitive score maps and place it on the feature extraction layers of ResNet-50. For multi-occluded pedestrians, we propose a multi-scale context algorithm in res5a of ResNet-50, which adopts a local competition mechanism for adaptive context scale selection. To address the low visibility of occluded body parts, we introduce deformable RoI pooling layers in res5b of ResNet-50 to expand the pooled area over body parts. After the res5c layer, the network outputs a fixed-dimension channel feature vector, the classification probability from the classification layer, and the bounding-box information from the regression layer. Finally, to reduce redundant information in video sequences, we use the Seq-NMS algorithm in place of the traditional NMS algorithm. Experiments show that on the Caltech dataset, the detection error for partial occlusion and heavy occlusion decreases by 0.55% and 12.77%, respectively, compared with F-DNN. On the ETH dataset, our algorithm is more accurate than the other detection algorithms compared, and it works particularly well for multi-occluded pedestrians.
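For context, the traditional per-frame NMS that Seq-NMS replaces can be sketched as below. This is a generic greedy NMS, not the authors' implementation; the box format `(x1, y1, x2, y2)`, the 0.5 IoU threshold, and the sample boxes are assumptions made for illustration. Seq-NMS additionally links overlapping boxes across adjacent frames and rescores them as a sequence, which this sketch does not attempt.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best box, drop boxes that overlap it, repeat."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Retain only boxes that do not overlap the kept box too strongly.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep

# Two near-duplicate detections of one pedestrian plus one separate detection.
boxes = [(10, 10, 50, 100), (12, 12, 52, 102), (200, 20, 240, 110)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

Because this procedure looks at one frame at a time, a pedestrian whose score dips in a single frame is simply dropped there, which is one motivation for using sequence-level suppression on video.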


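Table 1. ResNet-50 network parameters

| Layer | Output size | K | Output channels |
| --- | --- | --- | --- |
| Image | 224×224 | | |
| Conv1 | 112×112 | 3×3 | 256 |
| maxPool | 56×56 | 3×3 | 256 |
| Stage2 | 28×28 / 28×28 | | 512 |
| Stage3 | 14×14 / 14×14 | | 1024 |
| Stage4 | 7×7 / 7×7 | | 2048 |
| FC | 1×1 | | 1000 |
| Comp* | 98 M | | |

(Comp* denotes the model complexity; K denotes the convolution kernel size.)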
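The output sizes listed for ResNet-50 in Table 1 follow from repeated stride-2 downsampling. The sketch below applies the standard convolution output-size formula; the 3×3 kernel matches the K column, while the stride of 2 and padding of 1 are assumptions chosen for illustration, and the function name is hypothetical.

```python
def conv_output_size(size, kernel=3, stride=2, padding=1):
    """Spatial output size of a convolution or pooling layer (floor convention)."""
    return (size + 2 * padding - kernel) // stride + 1

# Image -> Conv1 -> maxPool -> Stage2 -> Stage3 -> Stage4
sizes = [224]
for _ in range(5):
    sizes.append(conv_output_size(sizes[-1]))
```

Under these assumptions the list reproduces the 224, 112, 56, 28, 14, 7 progression of the table.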

Table 2. Comparison of miss rate and detection speed

| Algorithm | Fast D-FCN | SSD | R-FCN |
| --- | --- | --- | --- |
| Test size | 640×480 | 512×512 | 640×480 |
| Base model | ResNet-50 | ResNet-50 | ResNet-50 |
| Part-occlusion (MR)/% | 14.86 | 20.49 | 16.09 |
| Heavy-occlusion (MR)/% | 42.36 | 57.64 | 55.81 |
| Speed/(f/s) | 48.71 | 35.42 | 11.24 |
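To make the comparison in Table 2 easier to read, the short sketch below derives the miss-rate gaps (in percentage points) and the speed ratios of Fast D-FCN relative to SSD and R-FCN. The numbers are copied from the table; the dictionary layout and key names are illustrative choices.

```python
# Values taken from Table 2 (miss rate in %, speed in frames per second).
table2 = {
    "Fast D-FCN": {"part_mr": 14.86, "heavy_mr": 42.36, "fps": 48.71},
    "SSD": {"part_mr": 20.49, "heavy_mr": 57.64, "fps": 35.42},
    "R-FCN": {"part_mr": 16.09, "heavy_mr": 55.81, "fps": 11.24},
}

ours = table2["Fast D-FCN"]
comparison = {}
for name, row in table2.items():
    if name == "Fast D-FCN":
        continue
    comparison[name] = {
        # Positive values mean Fast D-FCN misses fewer pedestrians.
        "part_mr_gap": row["part_mr"] - ours["part_mr"],
        "heavy_mr_gap": row["heavy_mr"] - ours["heavy_mr"],
        # Values above 1 mean Fast D-FCN runs faster.
        "speed_ratio": ours["fps"] / row["fps"],
    }

for name, stats in comparison.items():
    print(f"{name}: part {stats['part_mr_gap']:.2f} pts, "
          f"heavy {stats['heavy_mr_gap']:.2f} pts, "
          f"speed x{stats['speed_ratio']:.2f}")
```

The largest gaps appear in the heavy-occlusion row, in line with the claim that the method is particularly suited to occluded pedestrians.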

