Visual odometry with three-stage local binocular BA


Abstract:

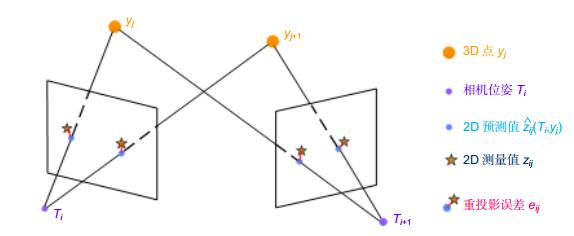

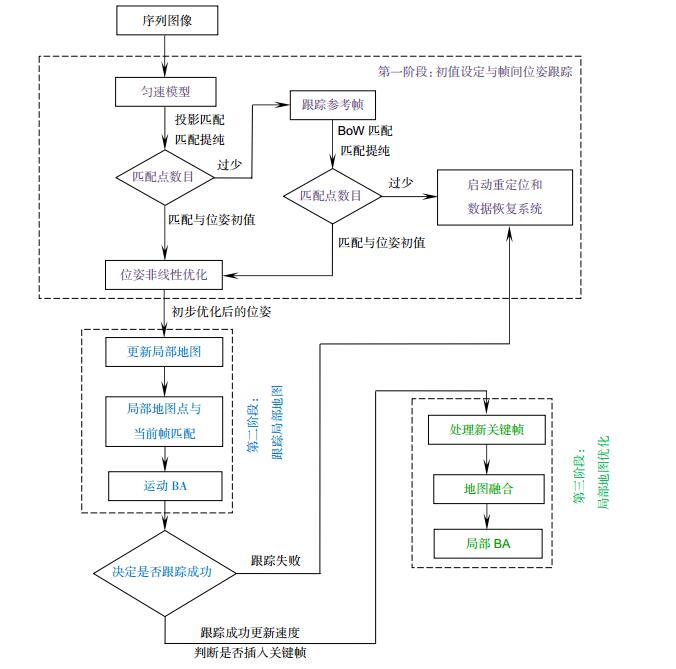

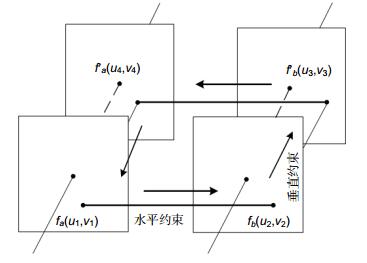

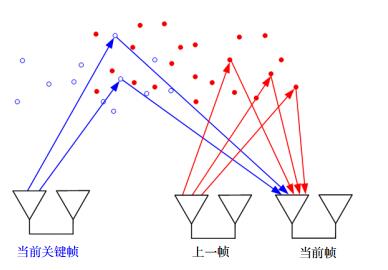

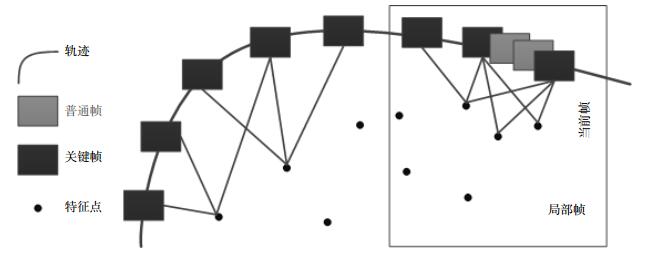

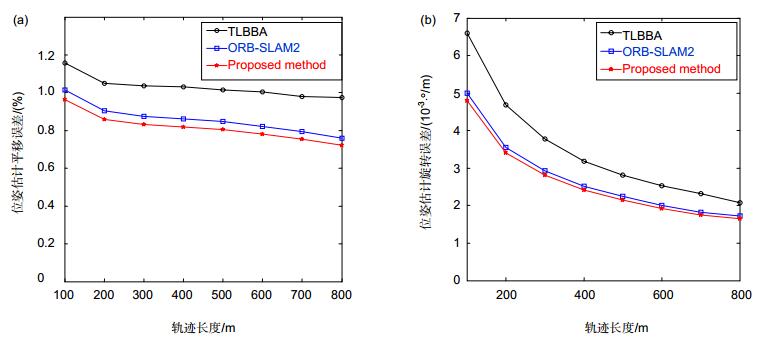

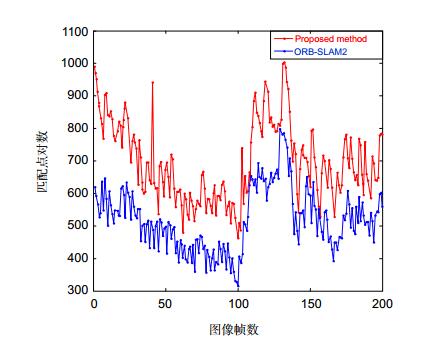

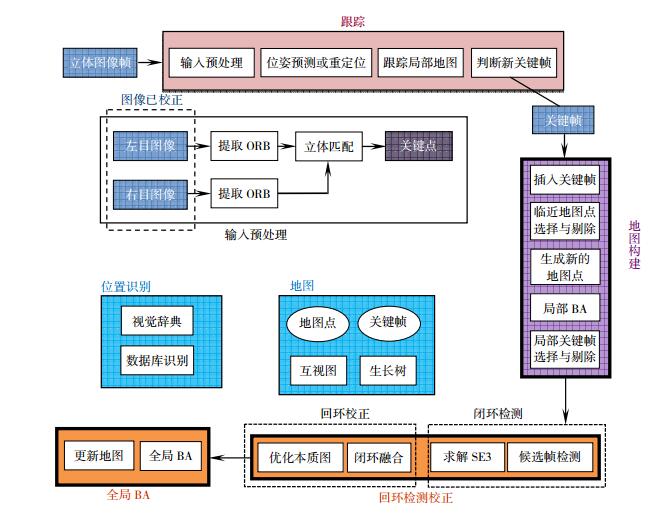

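Considering the strong dependence of bundle adjustment on initial values and the characteristics of the binocular camera model, a three-stage local binocular bundle adjustment (BA) algorithm is proposed on the basis of ORB-SLAM2. In the inter-frame pose tracking stage, a ring matching mechanism is introduced to further reject mismatches and so reduce the influence of accumulated error on 3D-2D matching under the constant-velocity motion model, and the keyframe map points are matched with the current frame by 3D-2D projection. In the local map tracking stage, a step that optimizes the stereo baseline parameter is added whenever a keyframe is inserted. In the local map optimization stage, the ordinary frames between the two most recent keyframes are also optimized as local frames. Experiments on the KITTI dataset show that, compared with ORB-SLAM2, the three-stage local binocular BA constructs more accurate 3D-2D matches, adds optimization constraints, and improves the accuracy of motion estimation and optimization.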


Keywords:

- ORB-SLAM2 algorithm

- three-stage local binocular bundle adjustment

- inter-frame pose tracking

- local map tracking

- local map optimization

Abstract: To address the strong dependence of bundle adjustment on initial values and to exploit the binocular camera model, a three-stage local binocular BA (bundle adjustment) is proposed based on the ORB-SLAM2 algorithm. To reduce the influence of cumulative error on 3D-2D matching under the constant-velocity model, a ring matching mechanism is introduced to eliminate remaining mismatches, and the keyframe map points are matched with the current frame by 3D-2D projection. When a keyframe is inserted during local map tracking, the stereo baseline is optimized; in the local map optimization phase, the ordinary frames between the two most recent keyframes are also optimized as local frames. Experiments on the KITTI dataset show that, compared with ORB-SLAM2, the three-stage local binocular BA builds more accurate 3D-2D matches, adds optimization constraints, and improves motion estimation and optimization accuracy.


Overview: Visual odometry (VO) generally obtains the global navigation information of the camera by cascading single-frame motion estimates, so errors accumulate over time. To obtain globally consistent navigation results in large-scale complex environments, graph-optimization-based VSLAM has become a research hotspot. ORB-SLAM2 is an open-source algorithm proposed by Mur-Artal in 2016. Its high computational efficiency and its ability to run in real time on a CPU allow it to perform map reconstruction, loop closure detection and relocalization for visual navigation in many scenarios, such as handheld devices in indoor environments, aircraft in industrial environments and vehicles in urban environments. ORB-SLAM2 provides monocular, stereo and RGB-D camera interfaces, and a large body of research has been built on it. In this paper, considering the strong dependence of bundle adjustment on initial values and the characteristics of the binocular camera model, a three-stage local binocular BA is proposed on the basis of ORB-SLAM2. Building on the ORB-LATCH feature proposed in [16], a ring matching mechanism is introduced to reduce the influence of cumulative error on 3D-2D matching under the constant-velocity model: feature matches are re-filtered according to whether they satisfy the ring matching constraint, which further eliminates mismatches, and the keyframe map points are then matched with the current frame by 3D-2D projection. In the local map tracking stage, the binocular camera needs a sufficiently long baseline to cope with the vehicle driving environment, and both stereo matching and 3D reconstruction depend on an accurate baseline parameter. Because the calibration parameters may change while the camera is moving, the baseline of the binocular camera is optimized each time a keyframe is inserted.
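
The two mechanisms above can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the authors' implementation. The ring is assumed to follow the common four-image circular-matching order used in stereo VO (current left, previous left, previous right, current right, back to current left), with matches given as dictionaries from a feature index to its best match in another image. The baseline step is simplified to a closed-form least-squares fit of the rectified stereo model $u_R = u_L - fb/Z$ with poses and depths held fixed, whereas the paper optimizes the baseline inside BA. The names `ring_match` and `refine_baseline` are hypothetical.

```python
"""Hypothetical sketch: ring (circular) matching and stereo baseline refinement."""
from typing import Dict, List, Sequence, Tuple

# Feature index in one image -> index of its best match in another image.
Match = Dict[int, int]


def ring_match(lc_to_lp: Match, lp_to_rp: Match,
               rp_to_rc: Match, rc_to_lc: Match) -> List[Tuple[int, int, int, int]]:
    """Keep only features whose match chain closes the ring
    left_curr -> left_prev -> right_prev -> right_curr -> left_curr."""
    kept = []
    for i, j in lc_to_lp.items():
        k = lp_to_rp.get(j)
        if k is None:
            continue
        m = rp_to_rc.get(k)
        if m is None:
            continue
        if rc_to_lc.get(m) == i:  # the ring returns to the starting feature
            kept.append((i, j, k, m))
    return kept


def refine_baseline(u_left: Sequence[float], u_right: Sequence[float],
                    depths: Sequence[float], focal: float) -> float:
    """Least-squares baseline b for u_right = u_left - focal * b / Z,
    holding the depths Z fixed (a simplification of optimizing b inside BA)."""
    num = 0.0
    den = 0.0
    for ul, ur, z in zip(u_left, u_right, depths):
        a = focal / z  # derivative of the predicted disparity with respect to b
        d = ul - ur    # observed disparity
        num += a * d
        den += a * a
    if den == 0.0:
        raise ValueError("need at least one observation with finite depth")
    return num / den


if __name__ == "__main__":
    # Feature 0 closes the ring; feature 1 returns to feature 4 and is rejected.
    print(ring_match({0: 5, 1: 6}, {5: 2, 6: 3}, {2: 8, 3: 9}, {8: 0, 9: 4}))  # [(0, 5, 2, 8)]
```

For the baseline fit to be informative, the depths should come from the map (for example multi-frame triangulation); if they were triangulated from the same stereo pair with the old baseline, the fit would simply return that old value.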
In the local map optimization stage, the ordinary frames between the last two keyframes are also included as local frames for optimization, which provides more accurate local map points for the next camera pose tracking and thus improves its accuracy. The algorithm was evaluated on the KITTI dataset. The results show that, compared with ORB-SLAM2, the three-stage local binocular BA produces more accurate 3D-2D matches, adds optimization constraints, and improves motion estimation and optimization accuracy. In terms of real-time performance, the VO based on the proposed algorithm meets the 10 Hz frame rate requirement of the KITTI dataset.
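
The window selection in the local map optimization stage can be illustrated with a small sketch. It assumes, hypothetically, that frames are stored in time order with an `is_keyframe` flag and that each keyframe records the ids of its covisible keyframes, as in ORB-SLAM2's covisibility graph. The local set is the newest keyframe plus its covisible keyframes (roughly ORB-SLAM2's local BA window) plus, following the text, the ordinary frames between the two most recent keyframes. Fixed frames, map points and the optimization itself are omitted; `Frame` and `select_local_frames` are illustrative names only.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class Frame:
    frame_id: int
    is_keyframe: bool
    # Ids of keyframes sharing map points with this keyframe (hypothetical field).
    covisible: Set[int] = field(default_factory=set)


def select_local_frames(frames: List[Frame]) -> List[int]:
    """Return ids of frames to optimize as local frames.

    `frames` is ordered by time; the last keyframe in it is the one just inserted.
    """
    kf_idx = [i for i, f in enumerate(frames) if f.is_keyframe]
    if not kf_idx:
        return []
    newest = frames[kf_idx[-1]]
    # ORB-SLAM2-style local keyframes: the new keyframe and its covisible keyframes.
    local = {newest.frame_id} | newest.covisible
    # Extension described in the text: ordinary frames between the last two keyframes.
    if len(kf_idx) >= 2:
        prev, last = kf_idx[-2], kf_idx[-1]
        local.update(f.frame_id for f in frames[prev + 1:last] if not f.is_keyframe)
    return sorted(local)


if __name__ == "__main__":
    seq = [Frame(0, True), Frame(1, False), Frame(2, False),
           Frame(3, True, {0}), Frame(4, False), Frame(5, False),
           Frame(6, True, {3})]
    print(select_local_frames(seq))  # [3, 4, 5, 6]
```

Frames 1 and 2 lie between older keyframes and are therefore left out; only frames 4 and 5, between keyframes 3 and 6, join the window.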


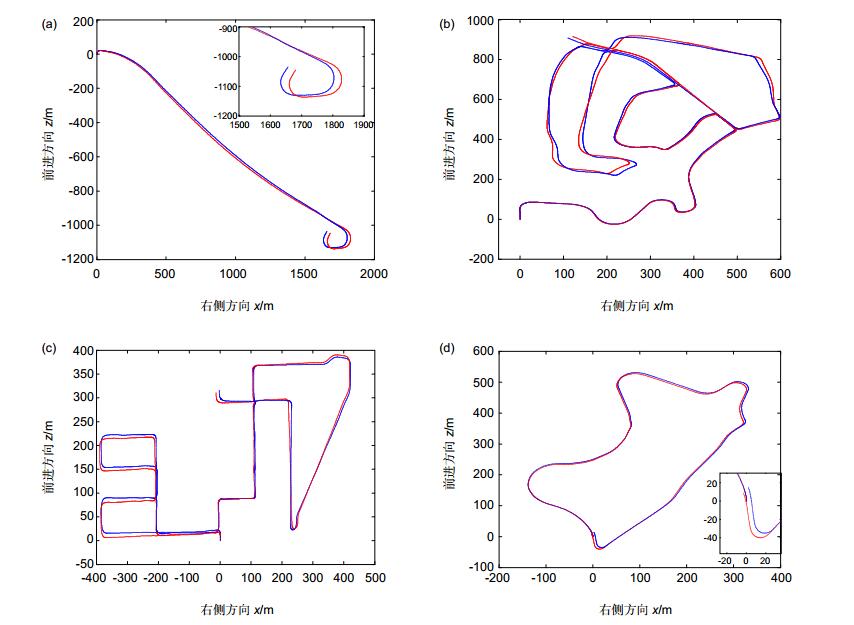

Figure 7. Comparison between the VO navigation trajectory and the actual trajectory of the vehicle. (a) Vehicle trajectory of sequence 01; (b) vehicle trajectory of sequence 02; (c) vehicle trajectory of sequence 08; (d) vehicle trajectory of sequence 09

Table 1. Experimental results of the proposed algorithm, ORB-SLAM2 and TLBBA

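| Sequence | Proposed t_rel/% | Proposed r_rel/(rad/100 m) | Proposed avg. time per frame/s | ORB-SLAM2 t_rel/% | ORB-SLAM2 r_rel/(rad/100 m) | ORB-SLAM2 avg. time per frame/s | TLBBA t_rel/% | TLBBA r_rel/(rad/100 m) | TLBBA avg. time per frame/s |
|---|---|---|---|---|---|---|---|---|---|
| 00 | 0.805 | 0.47 | 0.070 | 0.847 | 0.49 | 0.068 | 0.984 | 0.72 | |
| 01 | 1.295 | 0.38 | 0.076 | 1.362 | 0.39 | 0.075 | 2.383 | 0.70 | |
| 02 | 0.811 | 0.50 | 0.069 | 0.852 | 0.52 | 0.067 | 0.976 | 0.60 | |
| 03 | 0.663 | 0.22 | 0.071 | 0.705 | 0.24 | 0.071 | 1.055 | 0.69 | |
| 04 | 0.457 | 0.30 | 0.071 | 0.476 | 0.31 | 0.070 | 1.215 | 0.35 | |
| 05 | 0.525 | 0.36 | 0.073 | 0.553 | 0.38 | 0.072 | 0.747 | 0.59 | |
| 06 | 0.781 | 0.41 | 0.070 | 0.831 | 0.43 | 0.069 | 1.146 | 0.64 | |
| 07 | 0.793 | 0.65 | 0.080 | 0.826 | 0.69 | 0.079 | 0.843 | 1.01 | |
| 08 | 0.980 | 0.54 | 0.075 | 1.021 | 0.52 | 0.074 | 1.135 | 0.61 | |
| 09 | 0.829 | 0.47 | 0.073 | 0.882 | 0.46 | 0.071 | 1.045 | 0.49 | |
| 10 | 0.567 | 0.40 | 0.078 | 0.604 | 0.42 | 0.077 | 0.543 | 0.75 | 0.049 |

t_rel: relative translation error; r_rel: relative rotation error.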
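
As a quick consistency check of the claims in the text, the per-sequence values of Table 1 can be re-aggregated. The snippet only transcribes the table values for the proposed algorithm and ORB-SLAM2 (sequences 00-10) and computes simple unweighted means; it is not the KITTI evaluation procedure that produced t_rel and r_rel, and `summarize` is an illustrative name.

```python
"""Summary statistics recomputed from the values transcribed in Table 1."""

# Sequences 00-10, in table order.
T_REL_OURS = [0.805, 1.295, 0.811, 0.663, 0.457, 0.525, 0.781, 0.793, 0.980, 0.829, 0.567]
T_REL_ORB = [0.847, 1.362, 0.852, 0.705, 0.476, 0.553, 0.831, 0.826, 1.021, 0.882, 0.604]
R_REL_OURS = [0.47, 0.38, 0.50, 0.22, 0.30, 0.36, 0.41, 0.65, 0.54, 0.47, 0.40]
R_REL_ORB = [0.49, 0.39, 0.52, 0.24, 0.31, 0.38, 0.43, 0.69, 0.52, 0.46, 0.42]
TIME_OURS = [0.070, 0.076, 0.069, 0.071, 0.071, 0.073, 0.070, 0.080, 0.075, 0.073, 0.078]


def mean(xs):
    return sum(xs) / len(xs)


def summarize():
    m_ours, m_orb = mean(T_REL_OURS), mean(T_REL_ORB)
    return {
        "mean_t_rel_ours": m_ours,
        "mean_t_rel_orb": m_orb,
        # Relative reduction of the unweighted mean translation error.
        "t_rel_reduction": (m_orb - m_ours) / m_orb,
        "t_rel_better_on_all": all(a < b for a, b in zip(T_REL_OURS, T_REL_ORB)),
        # Sequence numbers where the proposed method has a higher rotation error.
        "r_rel_worse_seqs": [i for i, (a, b) in enumerate(zip(R_REL_OURS, R_REL_ORB)) if a > b],
        "max_time_s": max(TIME_OURS),
        # 10 Hz leaves a budget of 0.1 s per frame.
        "meets_10hz": max(TIME_OURS) < 0.1,
    }


if __name__ == "__main__":
    for key, value in summarize().items():
        print(key, value)
```

On these values the proposed method has a lower t_rel than ORB-SLAM2 on every sequence (unweighted mean about 0.773% versus 0.814%, roughly a 5% relative reduction), a slightly higher r_rel on sequences 08 and 09, and a worst-case per-frame time of 0.080 s, within the 0.1 s budget implied by 10 Hz.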

[1] Sibley G. Relative bundle adjustment[R]. Department of Engineering Science, Oxford University Technical Report. Oxford: Department of Engineering Science, Oxford University, 2009.

[2] Klein G, Murray D. Parallel tracking and mapping for small AR workspaces[C]//Proceedings of the 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, 2007: 225-234.

[3] Strasdat H, Davison A J, Montiel J M M, et al. Double window optimisation for constant time visual SLAM[C]//2011 International Conference on Computer Vision, 2011: 2352-2359.

[4] Kümmerle R, Grisetti G, Strasdat H, et al. g2o: A general framework for graph optimization[C]//2011 IEEE International Conference on Robotics and Automation, 2011: 3607-3613.

[5] Gálvez-López D, Tardós J D. Bags of binary words for fast place recognition in image sequences[J]. IEEE Transactions on Robotics, 2012, 28(5): 1188-1197. doi: 10.1109/TRO.2012.2197158

[6] Bellavia F, Fanfani M, Pazzaglia F, et al. Robust selective stereo SLAM without loop closure and bundle adjustment[C]//Proceedings of the 17th International Conference on Image Analysis and Processing, 2013, 8156: 462-471.

[7] Badino H, Yamamoto A, Kanade T. Visual odometry by multi-frame feature integration[C]//Proceedings of 2013 IEEE International Conference on Computer Vision Workshops, 2013: 222-229.

[8] Cvišić I, Petrović I. Stereo odometry based on careful feature selection and tracking[C]//European Conference on Mobile Robots. IEEE, 2015: 1-6.

[9] Xu Y X, Chen F. Real-time stereo visual localization based on multi-frame sequence motion estimation[J]. Opto-Electronic Engineering, 2016, 43(2): 89-94. doi: 10.3969/j.issn.1003-501X.2016.02.015

[10] Luo Y Y, Liu H L. Research on binocular vision odometer based on bundle adjustment method[J]. Control and Decision, 2016, 31(11): 1936-1944. http://d.old.wanfangdata.com.cn/Periodical/kzyjc201611002

[11] Lu W, Xiang Z Y, Liu J L. High-performance visual odometry with two-stage local binocular BA and GPU[C]//2013 IEEE Intelligent Vehicles Symposium (IV), 2013: 23-26.

[12] Mur-Artal R, Tardós J D. ORB-SLAM2: an open-source SLAM system for monocular, stereo, and RGB-D cameras[J]. IEEE Transactions on Robotics, 2017, 33(5): 1255-1262.

[13] Hou R B, Wei W, Huang T, et al. Indoor robot localization and 3D dense mapping based on ORB-SLAM[J]. Journal of Computer Applications, 2017, 37(5): 1439-1444. http://d.old.wanfangdata.com.cn/Periodical/jsjyy201705041

[14] Zhou S L, Wu X Z, Liu G, et al. Integrated navigation method of monocular ORB-SLAM/INS[J]. Journal of Chinese Inertial Technology, 2016, 24(5): 633-637. http://www.wanfangdata.com.cn/details/detail.do?_type=perio&id=zggxjsxb201605013

[15] Lourakis M I A, Argyros A A. SBA: a software package for generic sparse bundle adjustment[J]. ACM Transactions on Mathematical Software (TOMS), 2009, 36(1): Article 2.

[16] Li Z, Liu J Y, Li H, et al. Feature detection and description algorithm based on ORB-LATCH[J]. Journal of Computer Applications, 2017, 37(6): 1759-1762, 1781. http://d.old.wanfangdata.com.cn/Periodical/jsjyy201706041

[17] Fan J J, Liang H W, Zhu H, et al. Closed quadrilateral feature tracking algorithm based on binocular vision[J]. Robot, 2015, 37(6): 674-682. http://d.old.wanfangdata.com.cn/Periodical/jqr201506005

[18] Lu W. Research on key techniques of high-precision and real-time visual localization[D]. Hangzhou: Zhejiang University, 2015. http://cdmd.cnki.com.cn/Article/CDMD-10335-1015558776.htm

[19] Geiger A, Lenz P, Stiller C, et al. Vision meets robotics: the KITTI dataset[J]. The International Journal of Robotics Research, 2013, 32(11): 1231-1237. doi: 10.1177/0278364913491297
