摘要

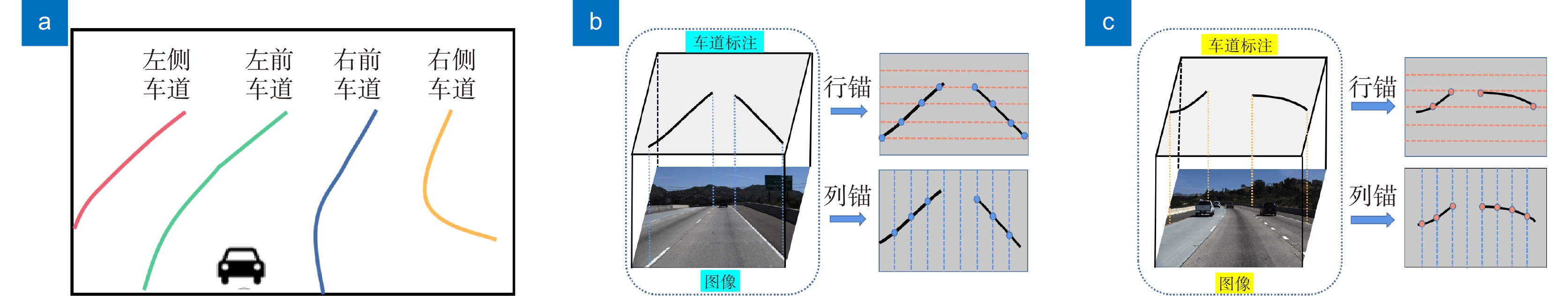

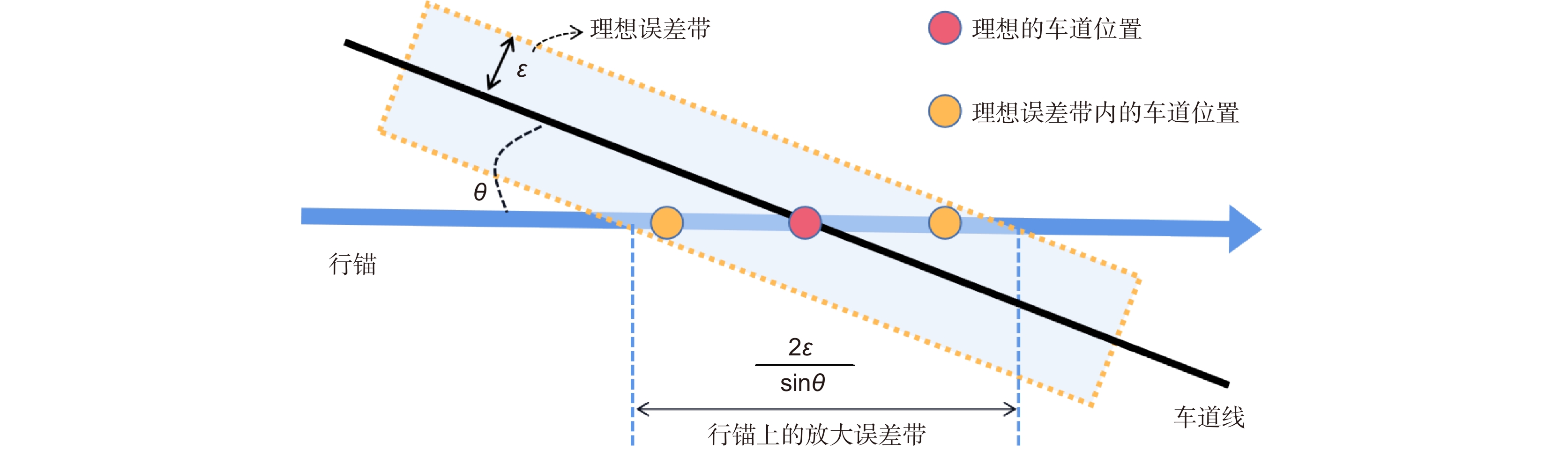

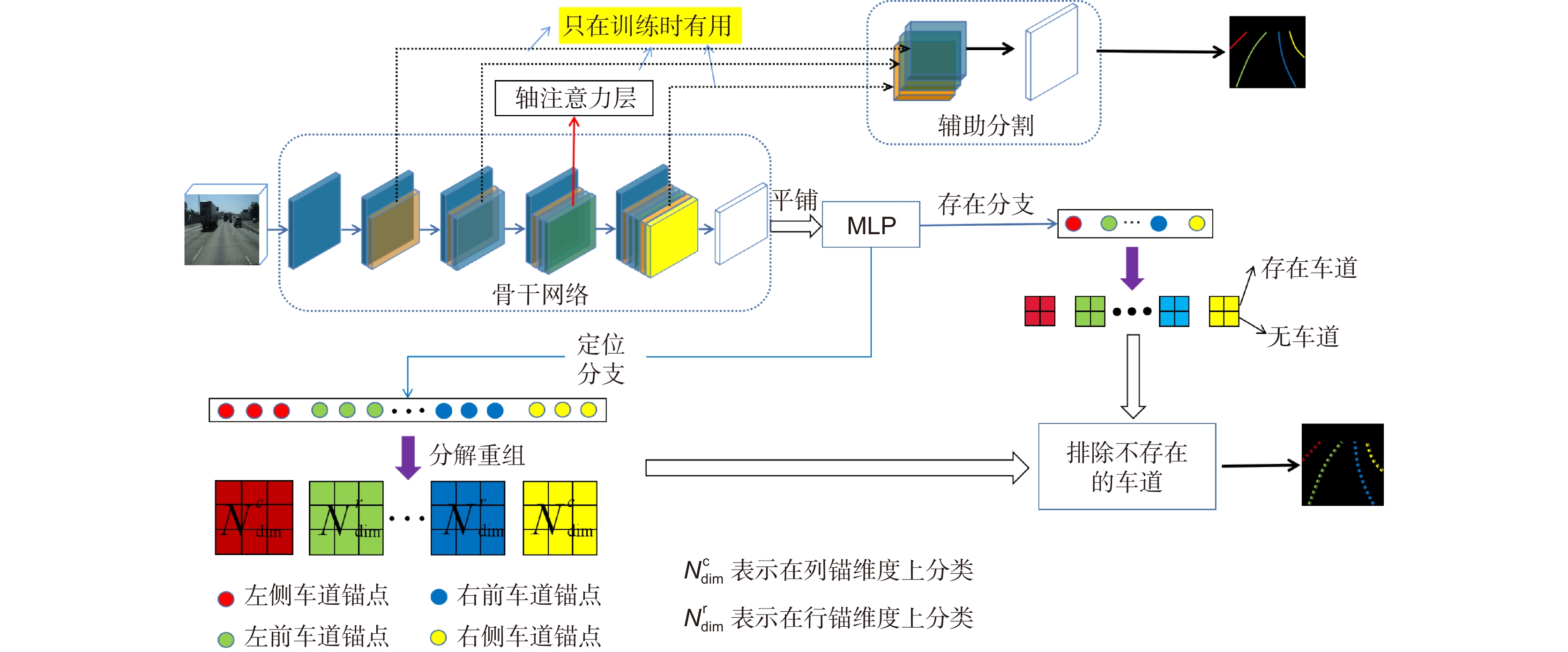

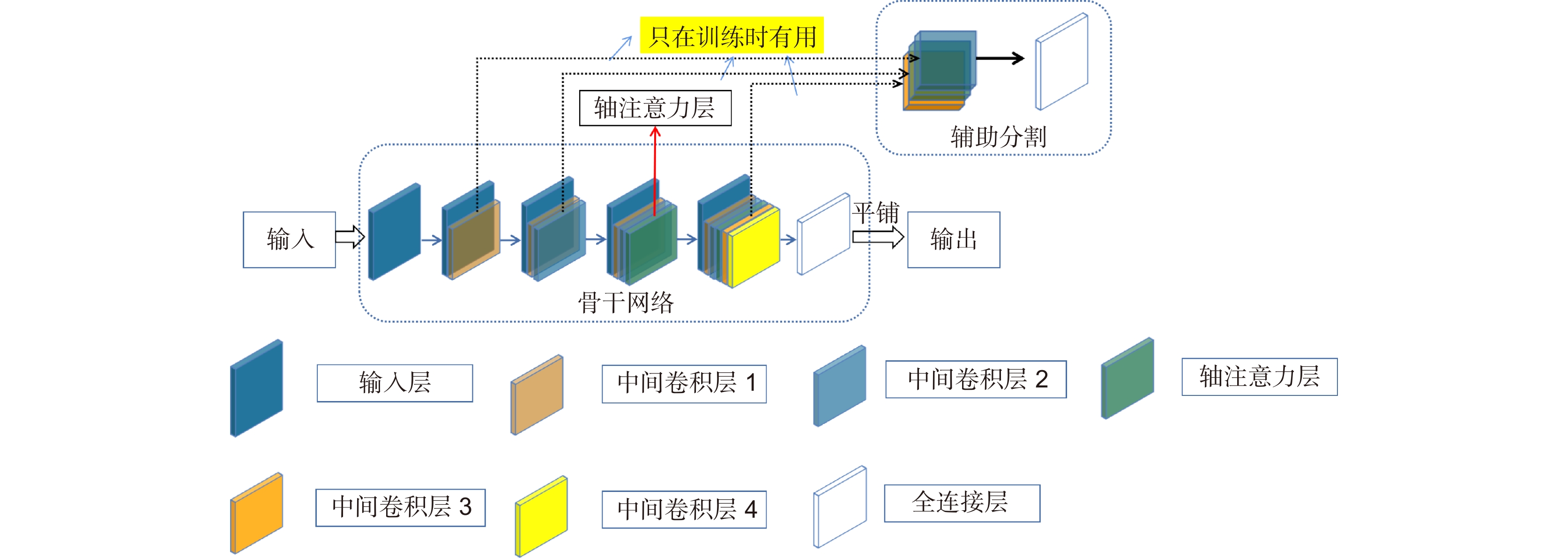

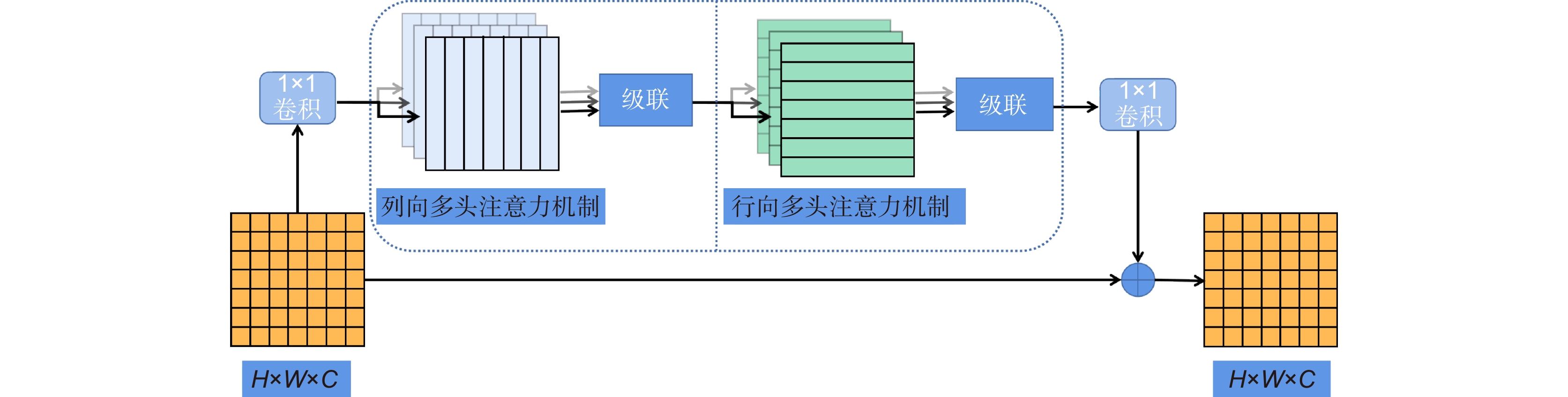

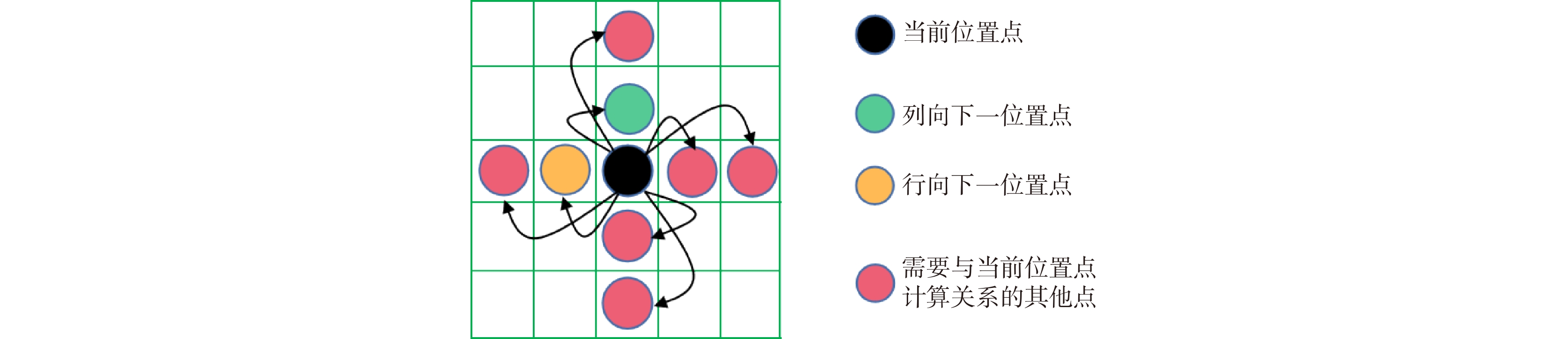

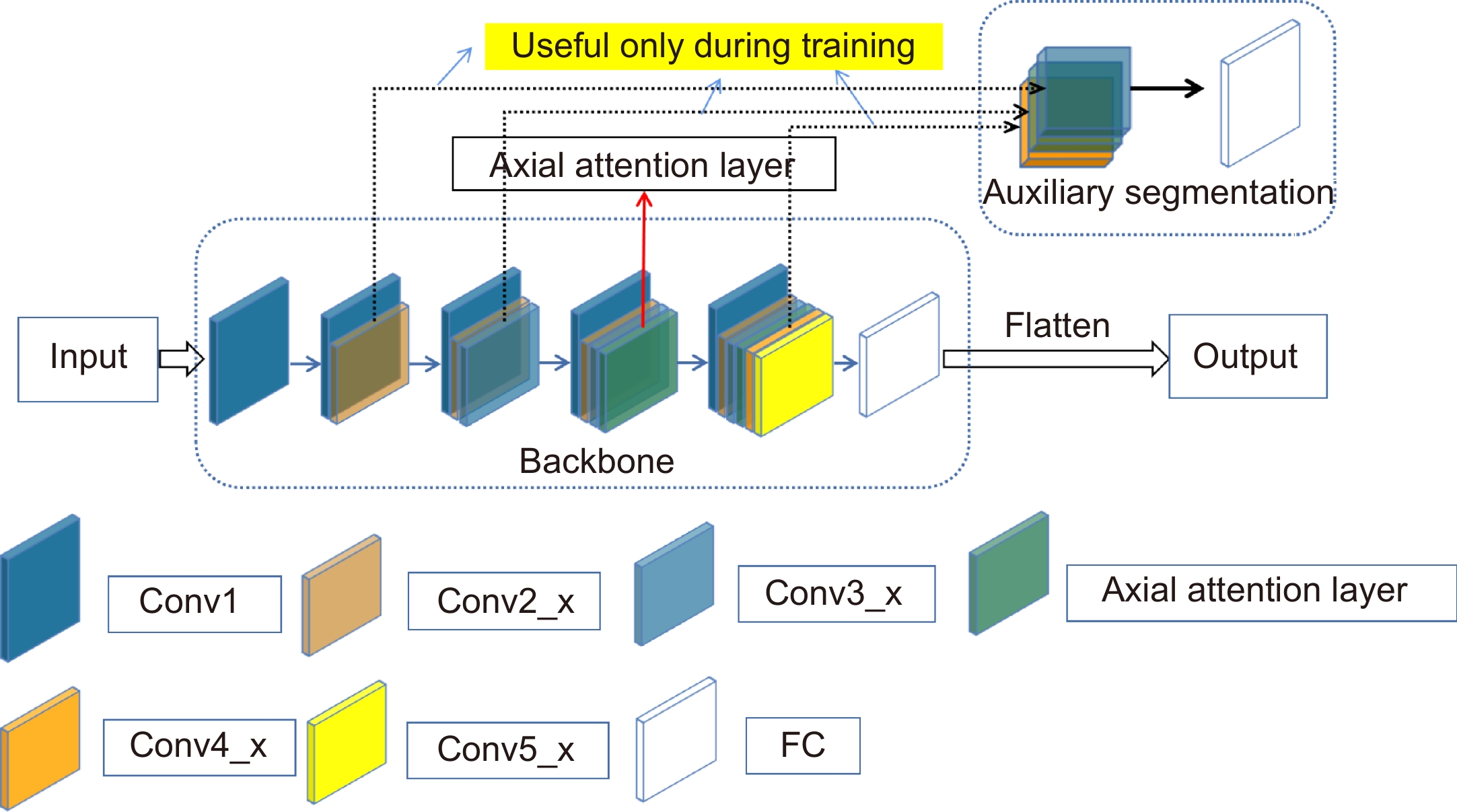

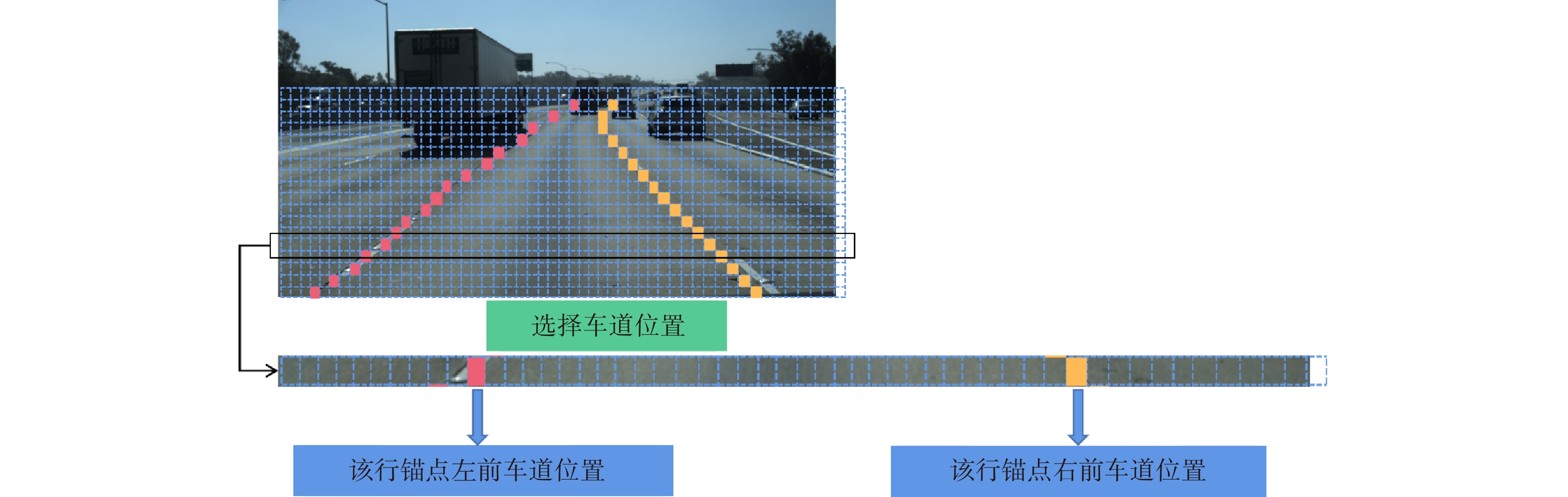

由于车道线的多样性以及交通场景的复杂性等问题,车道线检测是一项具有挑战性的任务。其主要表现在当车辆行驶在拥堵、夜晚、弯道等车道线不清晰或被遮挡的道路上时,现有检测方法的检测结果并不理想。本文基于检测方法的框架提出了一种轴注意力引导的锚点分类车道线检测方法来解决两个问题。首先是车道线不清晰或缺失时存在的视觉线索缺失问题。其次是锚点分类时用混合锚点上的稀疏坐标表示车道线带来的特征信息缺失问题,从而导致检测精度下降,所以通过在骨干网络中添加轴注意力层来聚焦行向和列向的显著特征来提高精度。在TuSimple和CULane两个数据集上进行了大量实验。实验结果表明,本文方法在各种条件下都具有鲁棒性,同时与现有的先进方法相比,在检测精度和速度方面都表现出综合优势。

Abstract

Lane detection is a challenging task due to the diversity of lane lines and the complexity of traffic scenes. The detection results of the existing detection methods are not ideal when the vehicle is driving in congestion, at night, and the lane lines are not clear or blocked on the road such as curves. Based on the framework of detection methods, a method that axial attention-guided anchor classification lane detection is proposed to solve two problems. The first is the problem of missing visual cues when lane lines are unclear or missing. The second problem is the lack of feature information caused by using sparse coordinates on mixed anchors, which leads to a decline of detection accuracy. Therefore, an axial attention layer is added to the backbone network to focus on prominent features of the row and column directions to improve the accuracy. Extensive experiments are conducted on the TuSimple and CULane datasets. Experimental results show that the proposed method is robust under various conditions while showing comprehensive advantages in terms of detection accuracy and speed compared with existing advanced methods.

Key words: lane detection / anchor classification / axial attention / autonomous driving

Overview

Lane detection is an important environment-perception function for autonomous vehicles. Although lane detection algorithms have been studied for a long time, existing algorithms still face many challenges in practice: their results are unsatisfactory when vehicles travel on roads where lane lines are unclear or occluded, such as in congestion, at night, or on curves. In recent years, deep learning-based methods have attracted growing attention in lane detection because of their robustness to image noise. These methods fall roughly into three categories: segmentation-based, detection-based, and parametric curve-based. Segmentation-based methods achieve high accuracy by classifying lane features pixel by pixel, but their high computational cost makes them slow. Detection-based methods usually convert lane segments into learnable structural representations such as blocks or points and then detect these structures as lane lines. They are fast and handle straight lanes well, but their accuracy is clearly lower than that of segmentation-based methods. Parametric curve-based methods lag behind well-designed segmentation-based and detection-based methods because the abstract polynomial coefficients are difficult for a network to learn.

Following the detection-based framework, this paper proposes an axial attention-guided anchor classification method for lane detection. The basic idea is to divide each lane into discrete point blocks and recast lane detection as the detection of lane anchor points. Replacing pixel-by-pixel segmentation with row anchors and column anchors both speeds up detection and alleviates the problem of missing visual cues. In the network structure, adding an axial attention mechanism to the feature extraction network extracts anchor features more effectively and filters out redundant features, which improves the accuracy of the detection-based approach.

Extensive experiments on the TuSimple and CULane datasets show that the proposed method is robust under various road conditions, especially under occlusion, and that it offers combined advantages in detection accuracy and speed over existing models. However, as a detection method that relies on a single sensor, it still struggles to achieve high accuracy in highly complex real-world scenes, such as rainy or polluted roads. Future work might handle more demanding environments by fusing multiple sensors, such as LiDAR and cameras, and by incorporating prior constraints on vehicle motion.
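The axial attention idea mentioned in the overview can be illustrated with a minimal sketch: instead of attending over all H×W positions at once, attention is applied along one image axis at a time, first within each row and then within each column. The code below is not the paper's layer. It assumes a single head, identity query/key/value projections, and no positional encoding, and the names `attend` and `axial_attention` are hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(seq):
    """Self-attention over one sequence of C-dimensional feature vectors.

    Simplification (assumption): queries, keys and values are the features
    themselves; a real layer would learn separate projections.
    """
    dim = len(seq[0])
    out = []
    for query in seq:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in seq]
        weights = softmax(scores)
        out.append([sum(w * value[c] for w, value in zip(weights, seq))
                    for c in range(dim)])
    return out

def axial_attention(fmap, axis):
    """Apply attention along one axis of an H x W x C nested-list feature map.

    axis="row": positions in the same row attend to each other.
    axis="col": positions in the same column attend to each other.
    """
    if axis == "row":
        return [attend(row) for row in fmap]
    if axis == "col":
        cols = [attend(list(col)) for col in zip(*fmap)]  # W sequences of length H
        return [list(row) for row in zip(*cols)]          # back to H x W x C
    raise ValueError("axis must be 'row' or 'col'")

# Toy 3 x 4 feature map with 2 channels.
fmap = [[[float(h + w), float(h * w)] for w in range(4)] for h in range(3)]
out = axial_attention(axial_attention(fmap, "row"), "col")
print(len(out), len(out[0]), len(out[0][0]))  # 3 4 2
```

Each pass mixes information only along its own axis, so a row pass costs on the order of H·W² score computations and a column pass W·H², rather than (H·W)² for full 2-D self-attention.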

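Table 1. Dataset description

| Dataset | Total | Training set | Validation set | Test set | Resolution | Lanes | Environments | Scenario |
|---|---|---|---|---|---|---|---|---|
| TuSimple | 6408 | 3268 | 358 | 2782 | 1280×720 | ≤5 | 1 | Highway |
| CULane | 133235 | 88880 | 9675 | 34680 | 1640×590 | ≤4 | 9 | Urban and highway |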

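Table 2. Hyperparameter settings on different datasets

| Hyperparameter | TuSimple | CULane |
|---|---|---|
| Number of rows | 56 | 18 |
| Number of columns | 40 | 40 |
| Number of cells per row (row anchor points) | 100 | 200 |
| Number of cells per column (column anchor points) | 100 | 100 |
| Number of lanes classified with row anchors | 2 | 2 |
| Number of lanes classified with column anchors | 2 | 2 |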
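To make the Table 2 settings concrete, the sketch below uses the TuSimple values (together with the 1280×720 resolution from Table 1) to count the classification logits and to decode one row-anchor lane into pixel points. It is only an illustration under stated assumptions: anchors are taken to be evenly spaced over the full image, the cell is chosen by argmax, and no lane-existence output is modelled. The function names are hypothetical and do not come from the paper.

```python
# Values taken from Tables 1 and 2 (TuSimple); everything else is illustrative.
IMG_W, IMG_H = 1280, 720
NUM_ROWS, CELLS_PER_ROW, LANES_ON_ROWS = 56, 100, 2
NUM_COLS, CELLS_PER_COL, LANES_ON_COLS = 40, 100, 2

def num_classification_outputs():
    """Logit count if every (lane, anchor) pair is classified over its cells."""
    return (LANES_ON_ROWS * NUM_ROWS * CELLS_PER_ROW
            + LANES_ON_COLS * NUM_COLS * CELLS_PER_COL)

def decode_row_anchor_lane(logits):
    """Turn per-row-anchor cell scores into (x, y) pixel points for one lane.

    logits: one list of cell scores per row anchor.
    Assumes evenly spaced anchors and argmax selection (hypothetical choices).
    """
    points = []
    for r, row_scores in enumerate(logits):
        cell = max(range(len(row_scores)), key=row_scores.__getitem__)
        x = (cell + 0.5) * IMG_W / len(row_scores)  # centre of the chosen cell
        y = (r + 0.5) * IMG_H / len(logits)         # centre of the row-anchor band
        points.append((x, y))
    return points

# Toy input: the lane drifts one cell to the right per row anchor.
toy_logits = [[1.0 if c == 20 + r else 0.0 for c in range(CELLS_PER_ROW)]
              for r in range(NUM_ROWS)]
lane = decode_row_anchor_lane(toy_logits)
print(num_classification_outputs())  # 19200
print(len(lane), lane[0][0])         # 56 262.4
```

Under these assumptions a lane is described by 56 points rather than by a per-pixel mask, which is where the speed advantage of anchor classification over segmentation comes from.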

Table 3. Ablation results

| Components enabled (row anchor, column anchor, axial attention) | Accuracy / TuSimple | Accuracy / CULane |
|---|---|---|
| √ | 95.55 | 64.72 |
| √ √ | 95.89 | 71.34 |
| √ √ | 95.91 | 65.61 |
| √ √ √ | 95.92 | 73.05 |

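Table 4. Comparison with other methods on the TuSimple dataset

| Category | Method | F1/% | Acc/% | FP/% | FN/% |
|---|---|---|---|---|---|
| Segmentation-based | SCNN[2] | 95.97 | 96.53 | 6.17 | 1.80 |
| Segmentation-based | SAD[6] | 95.92 | 96.64 | 6.02 | 2.05 |
| Segmentation-based | LaneNet[7] | N/A | 96.40 | 7.80 | 2.44 |
| Segmentation-based | DALaneNet[8] | N/A | 95.86 | 8.20 | 3.16 |
| Parametric curve-based | BezierLaneNet (ResNet34)[15] | N/A | 95.65 | 5.10 | 3.90 |
| Detection-based | E2E (ResNet34)[10] | N/A | 96.22 | 3.21 | 4.28 |
| Detection-based | LaneATT (ResNet18)[14] | 96.71 | 95.57 | 3.56 | 3.01 |
| Detection-based | UFLD (ResNet34)[11] | N/A | 95.55 | 19.35 | 4.30 |
| Detection-based | UFLDv2 (ResNet18)[12] | 96.11 | 95.92 | 3.16 | 4.59 |
| Detection-based | Ours (ResNet34) | 96.64 | 95.92 | 2.41 | 4.29 |

Note: N/A indicates that the corresponding paper does not report the value.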
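The F1, FP, and FN columns of Table 4 appear to be linked by the usual harmonic-mean relation, assuming that precision is 1 − FP and recall is 1 − FN on this benchmark. The short check below is a sketch under that assumption, and the helper name `f1_from_rates` is hypothetical. It recomputes F1 for the rows that report all three values and agrees with the table to within about 0.01 percentage points.

```python
def f1_from_rates(fp_percent, fn_percent):
    """F1 (in %) assuming precision = 1 - FP and recall = 1 - FN."""
    precision = 1.0 - fp_percent / 100.0
    recall = 1.0 - fn_percent / 100.0
    return 100.0 * 2.0 * precision * recall / (precision + recall)

# (FP %, FN %, reported F1 %) for the rows of Table 4 that list all three values.
rows = {
    "SCNN": (6.17, 1.80, 95.97),
    "SAD": (6.02, 2.05, 95.92),
    "LaneATT (ResNet18)": (3.56, 3.01, 96.71),
    "UFLDv2 (ResNet18)": (3.16, 4.59, 96.11),
    "Ours (ResNet34)": (2.41, 4.29, 96.64),
}
for name, (fp, fn, reported) in rows.items():
    computed = f1_from_rates(fp, fn)
    print(f"{name}: computed {computed:.2f}, reported {reported:.2f}")
```

Small residual differences are expected because the FP and FN inputs are themselves rounded to two decimals.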

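Table 5. Comparison of F1 on the CULane test set

| Category | Method | Normal | Crowd | Dazzle | Shadow | No-line | Arrow | Curve | Cross | Night | Average | FPS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Segmentation-based | SCNN[2] | 90.6 | 69.7 | 58.5 | 66.9 | 43.4 | 84.1 | 64.4 | 1990 | 66.1 | 71.6 | 8 |
| Segmentation-based | SAD[6] | 90.1 | 68.8 | 60.2 | 65.9 | 41.6 | 84.0 | 65.7 | 1998 | 66.0 | 70.8 | 75 |
| Parametric curve-based | BezierLaneNet (ResNet18)[15] | 90.2 | 71.6 | 62.5 | 70.9 | 45.3 | 84.1 | 59.0 | 996 | 68.7 | 73.7 | N/A |
| Detection-based | E2E (ResNet34)[10] | 90.4 | 69.9 | 61.5 | 68.1 | 45.0 | 83.7 | 69.8 | 2077 | 63.2 | 71.5 | N/A |
| Detection-based | CLRNet (ResNet34)[13] | 93.3 | 78.3 | 73.7 | 79.7 | 53.1 | 90.3 | 71.6 | 1321 | 75.1 | 76.9 | 103 |
| Detection-based | LaneATT (ResNet18)[14] | 91.1 | 73.0 | 65.7 | 70.9 | 48.4 | 85.5 | 68.4 | 1170 | 69.0 | 75.1 | 250 |
| Detection-based | LaneATT (ResNet34)[14] | 92.1 | 75.0 | 66.5 | 78.2 | 49.4 | 88.4 | 67.7 | 1330 | 70.7 | 76.7 | 171 |
| Detection-based | UFLD (ResNet18)[11] | 89.3 | 68.0 | 62.2 | 63.0 | 40.7 | 83.5 | 58.2 | 1743 | 62.9 | 69.7 | 323 |
| Detection-based | UFLD (ResNet34)[11] | 89.5 | 68.7 | 57.2 | 69.2 | 41.7 | 84.7 | 59.3 | 2037 | 65.4 | 70.9 | 175 |
| Detection-based | UFLDv2 (ResNet18)[12] | 92.0 | 74.0 | 63.2 | 72.4 | 45.0 | 87.7 | 69.0 | 1998 | 69.8 | 75.0 | 330 |
| Detection-based | Ours (ResNet34) | 92.6 | 74.9 | 65.6 | 75.5 | 49.0 | 88.2 | 69.8 | 1864 | 70.9 | 76.0 | 171 |

Note: N/A indicates that the corresponding paper does not report the value. For the Cross scenario, the value is the number of false positives rather than F1.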

References

[1] The TuSimple lane challenge[EB/OL]. (2018-10-20). https://github.com/TuSimple/tusimple-benchmark/issues/3.

[2] Pan X G, Shi J P, Luo P, et al. Spatial as deep: spatial CNN for traffic scene understanding[C]//Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, 2018: 891.

[3] Huang Y P, Li Y W, Hu X, et al. Lane detection based on inverse perspective transformation and Kalman filter[J]. KSII Trans Internet Inf Syst, 2018, 12(2): 643−661. doi: 10.3837/tiis.2018.02.006

[4] Laskar Z, Kannala J. Context aware query image representation for particular object retrieval[C]//Proceedings of the 20th Scandinavian Conference on Image Analysis, 2017: 88–99. https://doi.org/10.1007/978-3-319-59129-2_8.

[5] Zhao H S, Zhang Y, Liu S, et al. PSANet: point-wise spatial attention network for scene parsing[C]//Proceedings of the 15th European Conference on Computer Vision, 2018: 270–286. https://doi.org/10.1007/978-3-030-01240-3_17.

[6] Hou Y N, Ma Z, Liu C X, et al. Learning lightweight lane detection CNNs by self attention distillation[C]//2019 IEEE/CVF International Conference on Computer Vision (ICCV), 2019: 1013–1021. https://doi.org/10.1109/ICCV.2019.00110.

[7] Neven D, De Brabandere B, Georgoulis S, et al. Towards end-to-end lane detection: an instance segmentation approach[C]//2018 IEEE Intelligent Vehicles Symposium, 2018: 286–291. https://doi.org/10.1109/IVS.2018.8500547.

[8] Guo Z Y, Huang Y P, Wei H J, et al. DALaneNet: a dual attention instance segmentation network for real-time lane detection[J]. IEEE Sensors J, 2021, 21(19): 21730−21739. doi: 10.1109/JSEN.2021.3100489

[9] Zhang C, Huang Y P, Guo Z Y, et al. Real-time lane detection method based on semantic segmentation[J]. Opto-Electron Eng, 2022, 49(5): 210378. doi: 10.12086/oee.2022.210378

[10] Yoo S, Lee H S, Myeong H, et al. End-to-end lane marker detection via row-wise classification[C]//2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2020: 4335–4343.

[11] Qin Z Q, Wang H Y, Li X. Ultra fast structure-aware deep lane detection[C]//Proceedings of the 16th European Conference on Computer Vision, 2020: 276–291. https://doi.org/10.1007/978-3-030-58586-0_17.

[12] Qin Z Q, Zhang P Y, Li X. Ultra fast deep lane detection with hybrid anchor driven ordinal classification[J]. IEEE Trans Pattern Anal Mach Intell, 2022. https://doi.org/10.1109/TPAMI.2022.3182097.

[13] Zheng T, Huang Y F, Liu Y, et al. CLRNet: cross layer refinement network for lane detection[C]//2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022: 888–897. https://doi.org/10.1109/CVPR52688.2022.00097.

[14] Tabelini L, Berriel R, Paixão T M, et al. Keep your eyes on the lane: real-time attention-guided lane detection[C]//2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2021: 294–302. https://doi.org/10.1109/CVPR46437.2021.00036.

[15] Feng Z Y, Guo S H, Tan X, et al. Rethinking efficient lane detection via curve modeling[C]//2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2022: 17041–17049. https://doi.org/10.1109/CVPR52688.2022.01655.

[16] Felguera-Martin D, Gonzalez-Partida J T, Almorox-Gonzalez P, et al. Vehicular traffic surveillance and road lane detection using radar interferometry[J]. IEEE Trans Veh Technol, 2012, 61(3): 959−970. doi: 10.1109/TVT.2012.2186323

[17] Wang F, Jiang M Q, Qian C, et al. Residual attention network for image classification[C]//2017 IEEE Conference on Computer Vision and Pattern Recognition, 2017: 6450–6458. https://doi.org/10.1109/CVPR.2017.683.

[18] Hu J, Shen L, Sun G. Squeeze-and-excitation networks[C]//2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018: 7132–7141. https://doi.org/10.1109/CVPR.2018.00745.

[19] Woo S, Park J, Lee J Y, et al. CBAM: convolutional block attention module[C]//Proceedings of the 15th European Conference on Computer Vision, 2018: 3–19. https://doi.org/10.1007/978-3-030-01234-2_1.

[20] Fu J, Liu J, Tian H J, et al. Dual attention network for scene segmentation[C]//2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2019: 3141–3149. https://doi.org/10.1109/CVPR.2019.00326.

[21] He K M, Zhang X Y, Ren S Q, et al. Deep residual learning for image recognition[C]//2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016: 770–778. https://doi.org/10.1109/CVPR.2016.90.

[22] Wang H Y, Zhu Y K, Green B, et al. Axial-deeplab: stand-alone axial-attention for panoptic segmentation[C]//Proceedings of the 16th European Conference on Computer Vision, 2020: 108–126. https://doi.org/10.1007/978-3-030-58548-8_7.

[23] Ramachandran P, Parmar N, Vaswani A, et al. Stand-alone self-attention in vision models[C]//Proceedings of the 33rd International Conference on Neural Information Processing Systems, 2019: 7.
