Nighttime sea fog recognition based on remote sensing satellite and deep neural decision tree

Abstract

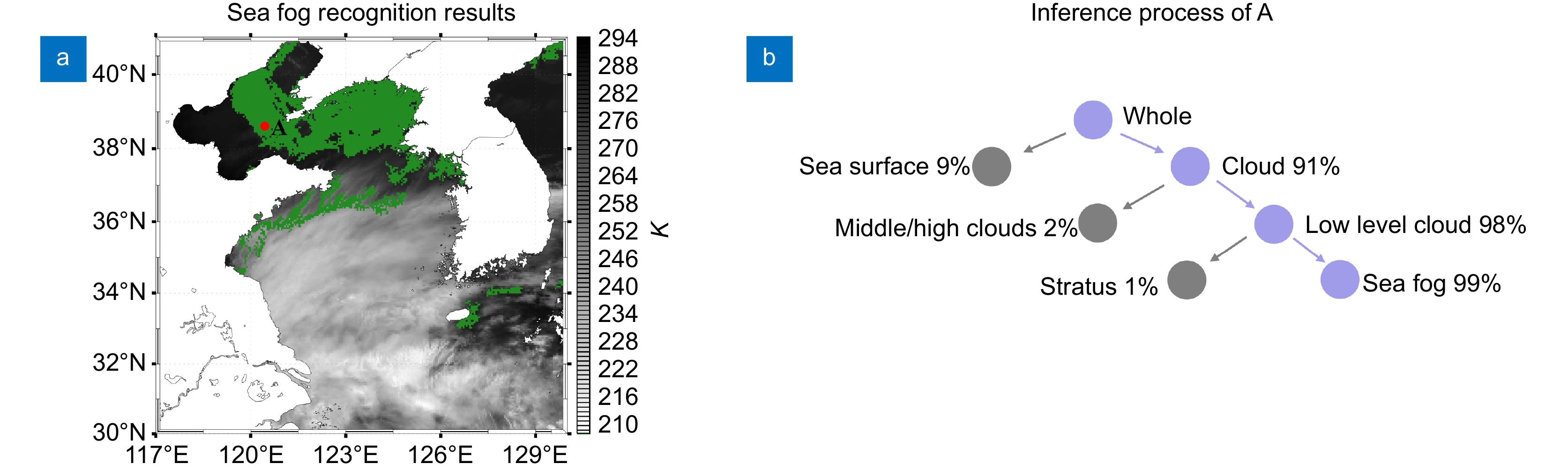

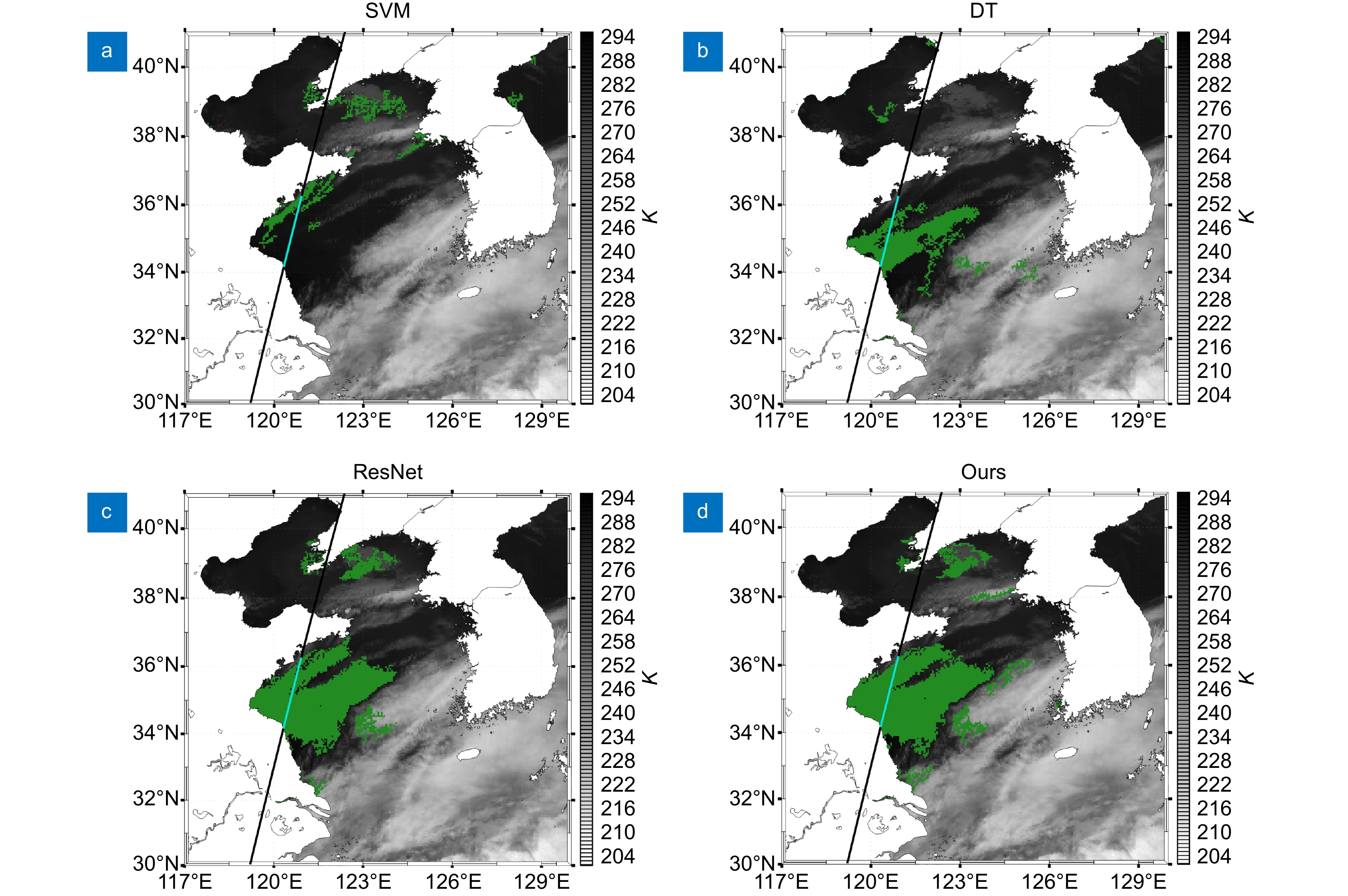

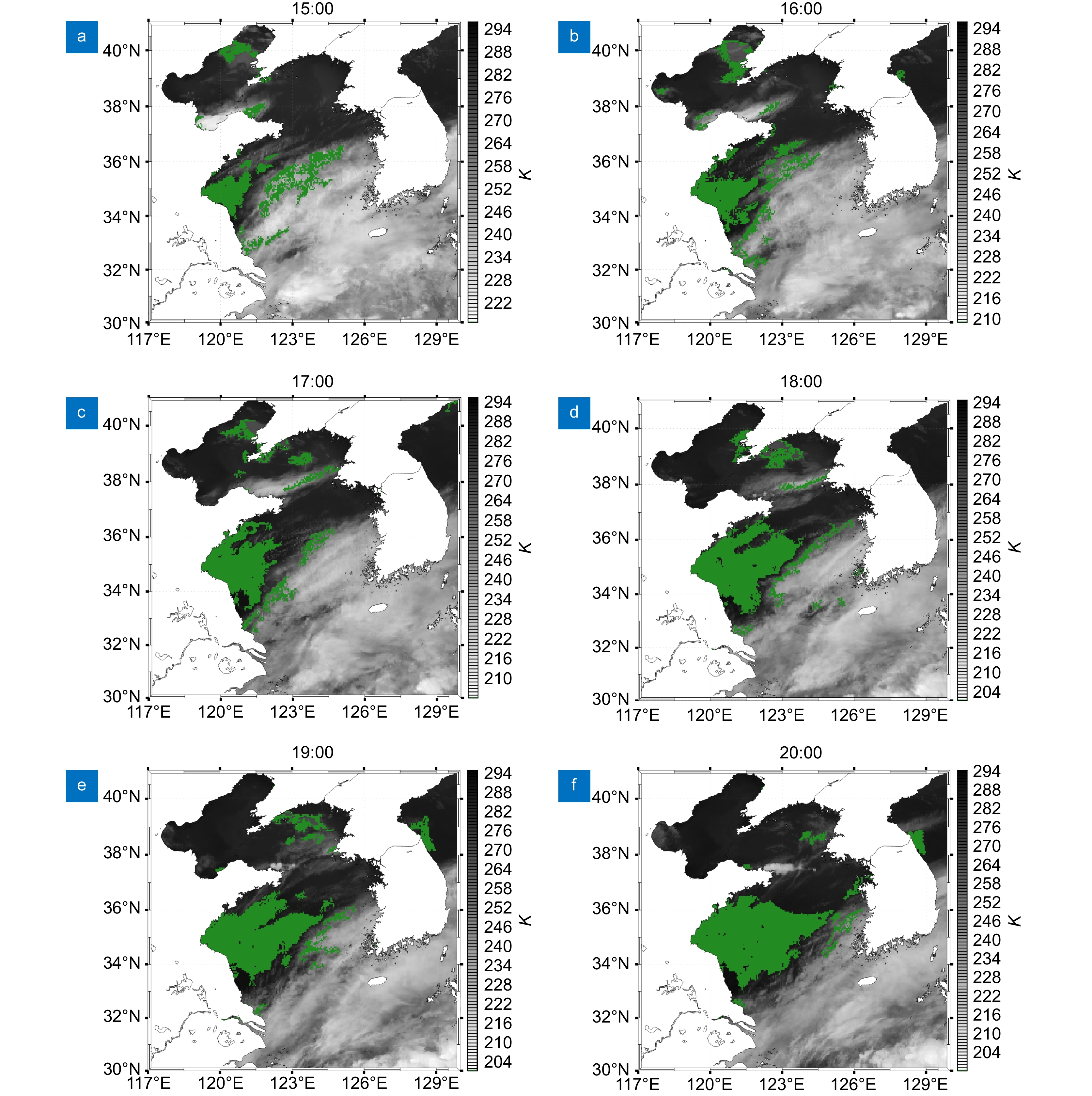

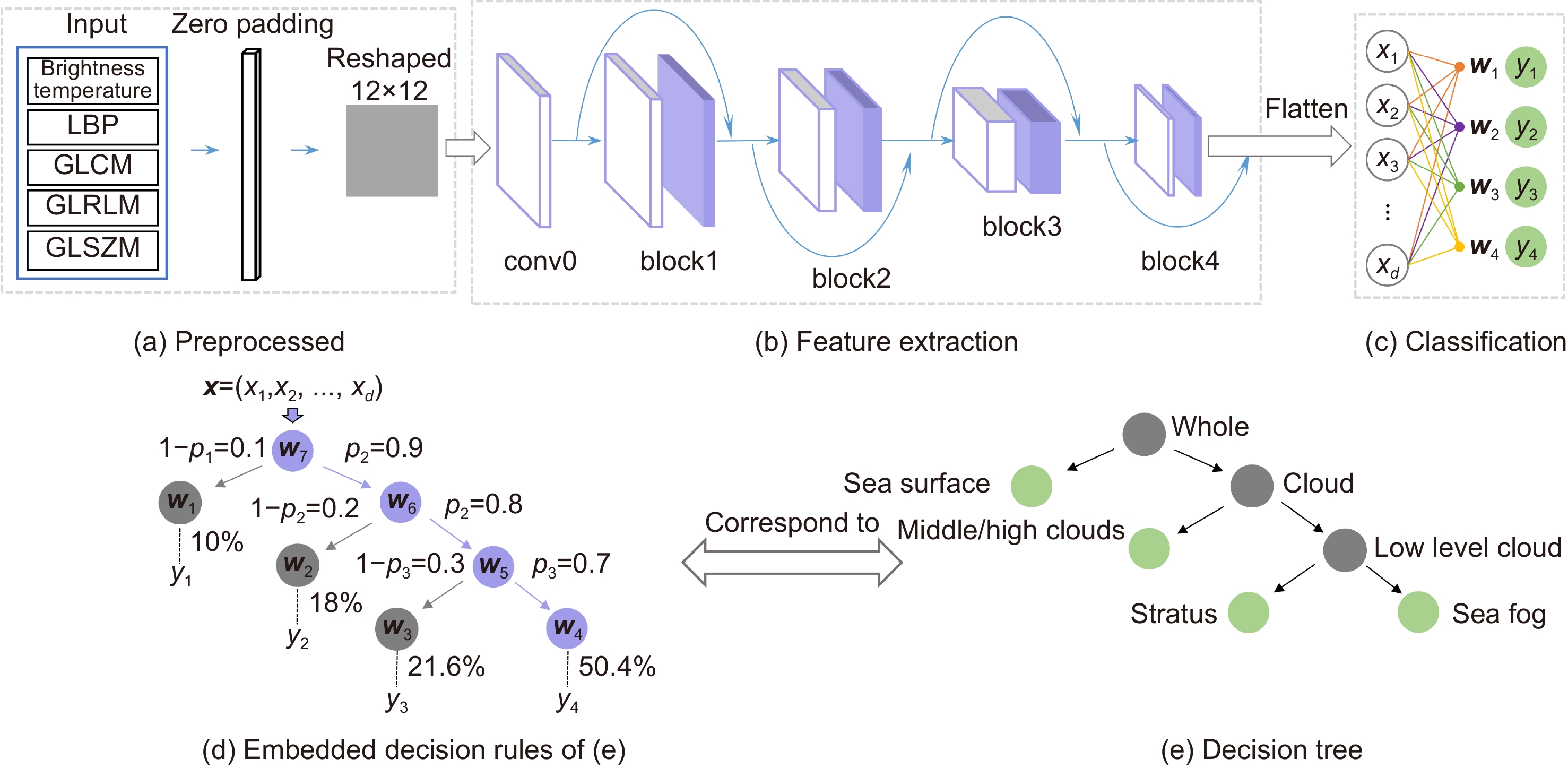

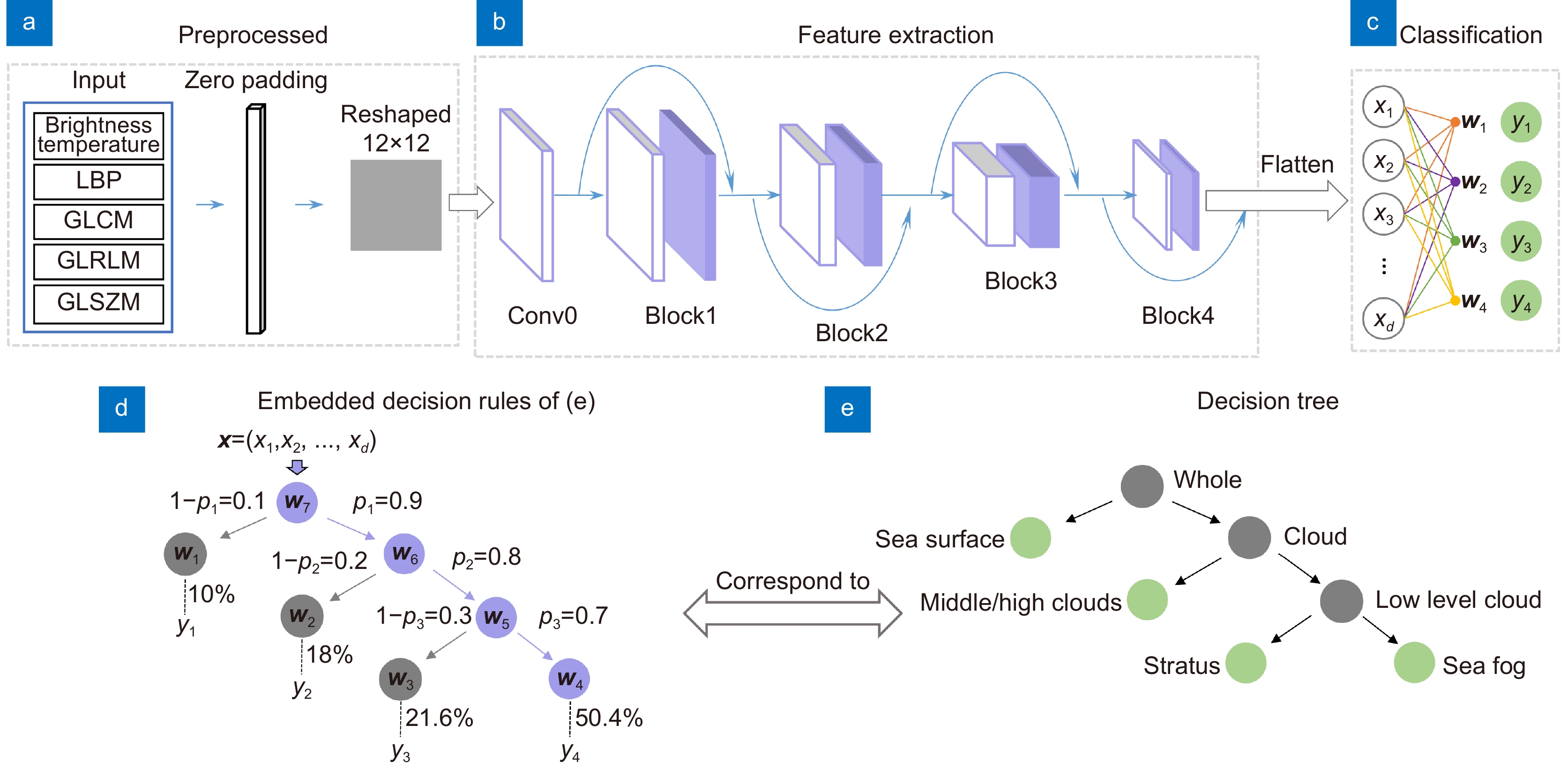

Remote sensing satellites offer wide coverage and continuous observation and are widely used in sea fog identification research. First, the Cloud-Aerosol LiDAR with Orthogonal Polarization (CALIOP), a spaceborne lidar that can penetrate clouds and obtain atmospheric profile information, was used to label samples of middle/high clouds, low clouds, sea fog, and clear-sky sea surface. Then, brightness temperature features and texture features were extracted for each sample type from multi-channel Himawari-8 data. Finally, based on the requirements of sea fog monitoring, an inference decision tree for sea fog monitoring was abstracted and a deep neural decision tree model was built from it, which achieves high-accuracy nighttime sea fog monitoring while retaining strong interpretability. The model was tested on the continuous Himawari-8 observations from the night of June 5, 2020, and the monitoring results clearly show the dynamic development of this sea fog event. The proposed method achieves an average probability of detection (POD) of 87.32%, an average false alarm ratio (FAR) of 13.19%, and an average critical success index (CSI) of 77.36%, providing a new approach to disaster prevention and mitigation for heavy fog at sea.

Key words:

- Himawari-8
- CALIOP
- deep neural decision tree
- nighttime sea fog recognition

Overview

Sea fog is a dangerous weather phenomenon that seriously affects maritime traffic and other operations at sea. Remote sensing satellites offer wide coverage and continuous observation and are widely used in sea fog identification research. Traditional sea fog monitoring algorithms usually build a single-channel or multi-channel model that exploits differences in the reflectivity or brightness temperature distribution of clouds across satellite channels to separate, step by step, the clear-sky sea surface, middle/high clouds, and low clouds, and thereby identify sea fog. Although this approach is simple, efficient, and highly interpretable, its identification accuracy is usually low and it is susceptible to seasonal and regional influences. Convolutional neural networks, a mainstay of deep learning, offer strong feature learning ability and high prediction accuracy and are widely used in cloud-image-related fields. Many studies have transferred convolutional neural networks to the sea fog monitoring task, but they are limited to daytime monitoring. At night, the lack of visible-band data makes sea fog harder to label than in daytime scenarios. In addition, a convolutional neural network is a "black box" by nature, that is, its inference process is difficult to explain in a reasonable way.

To recognize sea fog with both high accuracy and reasonable interpretability, the Cloud-Aerosol LiDAR with Orthogonal Polarization (CALIOP), which can penetrate clouds and obtain atmospheric profiles, was first used to label samples of middle/high clouds, low clouds, sea fog, and clear-sky sea surface. Then, brightness temperature features and texture features were extracted for each sample type from multi-channel Himawari-8 data. Finally, based on the requirements of sea fog monitoring, an inference decision tree for sea fog monitoring was abstracted and a deep neural decision tree model was built from it, which achieves high accuracy for nighttime sea fog monitoring while retaining strong interpretability. The model was tested on the continuous Himawari-8 observations from the night of June 5, 2020, and the monitoring results clearly show the dynamic development of this sea fog event. The proposed method achieves an average probability of detection (POD) of 87.32%, an average false alarm ratio (FAR) of 13.19%, and an average critical success index (CSI) of 77.36%, providing a new approach to disaster prevention and mitigation for heavy fog at sea.
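The idea of pairing a network with an inference decision tree can be illustrated with a minimal sketch of soft tree inference. Everything here is an assumption made for illustration: the three-node hierarchy (sea surface vs. cloud or fog, then middle/high clouds vs. low layer, then stratus vs. sea fog), the function names, and the use of one logit per inner node are hypothetical and are not taken from the paper. The probability of each leaf class is the product of the branch probabilities along its path, which is what makes each decision step inspectable.

```python
import math

# Hypothetical hierarchy (an assumption, not the paper's actual tree):
#   root:         sea surface  vs. cloud-or-fog
#   cloud-or-fog: middle/high clouds vs. low layer
#   low layer:    stratus vs. sea fog
CLASSES = ("sea surface", "middle/high clouds", "stratus", "sea fog")


def sigmoid(x):
    """Map a real-valued logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def leaf_probabilities(node_logits):
    """Convert three inner-node logits into four leaf-class probabilities.

    node_logits = (cloudy, low, fog), where each logit scores the
    right-hand branch of its node. In a real model these logits would
    come from a trained network; here they are plain numbers.
    """
    p_cloudy, p_low, p_fog = (sigmoid(z) for z in node_logits)
    return {
        "sea surface": 1.0 - p_cloudy,
        "middle/high clouds": p_cloudy * (1.0 - p_low),
        "stratus": p_cloudy * p_low * (1.0 - p_fog),
        "sea fog": p_cloudy * p_low * p_fog,
    }


def classify(node_logits):
    """Return the most probable leaf class and the full distribution."""
    probs = leaf_probabilities(node_logits)
    label = max(probs, key=probs.get)
    return label, probs


if __name__ == "__main__":
    label, probs = classify((3.0, 2.0, 1.5))
    print(label)
    for name in CLASSES:
        print(f"{name}: {probs[name]:.3f}")
```

Because the four leaf probabilities always sum to one, the intermediate branch probabilities can be read as an explanation of the final label, for example "cloudy, low-level, and more fog-like than stratus-like".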


Table 1. Experimental results of different network layers

| Method | Metric | Middle/high clouds | Stratus | Sea fog | Sea surface | Average |
|---|---|---|---|---|---|---|
| Three-groups | POD (%) | 89.30 | 76.71 | 82.12 | 92.15 | 85.07 |
| Three-groups | FAR (%) | 11.07 | 20.37 | 21.13 | 7.42 | 15.00 |
| Three-groups | CSI (%) | 80.36 | 64.13 | 67.31 | 85.81 | 74.40 |
| Four-groups | POD (%) | 89.09 | 80.87 | 86.47 | 92.84 | 87.32 |
| Four-groups | FAR (%) | 7.06 | 18.64 | 19.14 | 7.90 | 13.19 |
| Four-groups | CSI (%) | 83.44 | 68.23 | 71.78 | 85.99 | 77.36 |
| Five-groups | POD (%) | 90.40 | 78.59 | 82.00 | 90.65 | 85.41 |
| Five-groups | FAR (%) | 11.07 | 19.82 | 19.24 | 7.54 | 14.42 |
| Five-groups | CSI (%) | 81.25 | 65.81 | 68.60 | 84.41 | 75.02 |
| Six-groups | POD (%) | 91.99 | 77.60 | 80.24 | 91.34 | 85.29 |
| Six-groups | FAR (%) | 11.67 | 20.43 | 17.83 | 7.05 | 14.24 |
| Six-groups | CSI (%) | 82.02 | 64.71 | 68.34 | 85.42 | 75.12 |

Table 2. Comparison of results of different convolution networks

| Method | Metric | Middle/high clouds | Stratus | Sea fog | Sea surface | Average |
|---|---|---|---|---|---|---|
| CNN_1D | POD (%) | 87.36 | 76.11 | 81.76 | 88.45 | 83.42 |
| CNN_1D | FAR (%) | 11.60 | 25.07 | 23.79 | 4.84 | 16.33 |
| CNN_1D | CSI (%) | 78.38 | 60.66 | 65.14 | 84.64 | 72.20 |
| CNN_2D | POD (%) | 89.09 | 80.87 | 86.47 | 92.84 | 87.32 |
| CNN_2D | FAR (%) | 7.06 | 18.64 | 19.14 | 7.90 | 13.19 |
| CNN_2D | CSI (%) | 83.44 | 68.23 | 71.78 | 85.99 | 77.36 |

Table 3. Comparison of ablation results

| Method | Metric | Middle/high clouds | Stratus | Sea fog | Sea surface | Average |
|---|---|---|---|---|---|---|
| ATF | POD (%) | 90.40 | 67.59 | 73.65 | 93.30 | 81.24 |
| ATF | FAR (%) | 11.55 | 24.72 | 21.55 | 18.30 | 19.03 |
| ATF | CSI (%) | 80.85 | 55.31 | 61.25 | 77.17 | 68.65 |
| ATL | POD (%) | 89.44 | 81.96 | 80.47 | 89.49 | 85.34 |
| ATL | FAR (%) | 8.80 | 20.40 | 21.92 | 7.52 | 14.66 |
| ATL | CSI (%) | 82.34 | 67.73 | 65.64 | 83.42 | 74.78 |
| WOA | POD (%) | 89.09 | 80.87 | 86.47 | 92.84 | 87.32 |
| WOA | FAR (%) | 7.06 | 18.64 | 19.14 | 7.90 | 13.19 |
| WOA | CSI (%) | 83.44 | 68.23 | 71.78 | 85.99 | 77.36 |

Table 4. Confusion matrix of model

| True label | Middle/high clouds | Stratus | Sea fog | Sea surface |
|---|---|---|---|---|
| Middle/high clouds | 1290 | 85 | 58 | 15 |
| Stratus | 56 | 816 | 98 | 39 |
| Sea fog | 36 | 64 | 735 | 15 |
| Sea surface | 6 | 38 | 18 | 804 |
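The per-class scores reported for the proposed model can be cross-checked against Table 4. The sketch below assumes that the rows of the confusion matrix are true labels and the columns are predicted labels (the table only names the row axis), and it uses the usual contingency-table definitions: POD = hits / (hits + misses), FAR = false alarms / (hits + false alarms), CSI = hits / (hits + misses + false alarms). The function name `verification_scores` is illustrative and not from the paper.

```python
CLASSES = ["Middle/high clouds", "Stratus", "Sea fog", "Sea surface"]

# Counts copied from Table 4; rows are assumed to be true labels and
# columns predicted labels.
CONFUSION = [
    [1290, 85, 58, 15],
    [56, 816, 98, 39],
    [36, 64, 735, 15],
    [6, 38, 18, 804],
]


def verification_scores(confusion):
    """Return a list of (POD, FAR, CSI) fractions, one tuple per class."""
    n = len(confusion)
    scores = []
    for k in range(n):
        hits = confusion[k][k]
        # Samples of class k that were assigned to another class.
        misses = sum(confusion[k]) - hits
        # Samples of other classes that were assigned to class k.
        false_alarms = sum(confusion[i][k] for i in range(n)) - hits
        pod = hits / (hits + misses)
        far = false_alarms / (hits + false_alarms)
        csi = hits / (hits + misses + false_alarms)
        scores.append((pod, far, csi))
    return scores


def averages(scores):
    """Unweighted mean of each score across classes."""
    n = len(scores)
    return tuple(sum(s[j] for s in scores) / n for j in range(3))


if __name__ == "__main__":
    per_class = verification_scores(CONFUSION)
    for name, (pod, far, csi) in zip(CLASSES, per_class):
        print(f"{name}: POD={pod:.2%} FAR={far:.2%} CSI={csi:.2%}")
    mean_pod, mean_far, mean_csi = averages(per_class)
    print(f"Average: POD={mean_pod:.2%} FAR={mean_far:.2%} CSI={mean_csi:.2%}")
```

Under that reading of the table, the unweighted class averages come out to 87.32% POD, 13.19% FAR, and 77.36% CSI, which matches the values reported for the proposed method.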

Table 5. Classification accuracy of different sea fog recognition methods

| Method | Metric | Middle/high clouds | Stratus | Sea fog | Sea surface | Average |
|---|---|---|---|---|---|---|
| SVM | POD (%) | 85.28 | 81.71 | 58.78 | 91.78 | 79.39 |
| SVM | FAR (%) | 19.12 | 21.56 | 31.02 | 9.05 | 20.19 |
| SVM | CSI (%) | 70.97 | 66.72 | 46.49 | 84.10 | 67.07 |
| DT | POD (%) | 81.42 | 64.22 | 62.71 | 82.10 | 72.61 |
| DT | FAR (%) | 19.41 | 35.01 | 36.85 | 18.18 | 27.36 |
| DT | CSI (%) | 68.07 | 47.72 | 45.91 | 69.43 | 57.78 |
| ResNet | POD (%) | 89.99 | 81.17 | 85.41 | 93.76 | 87.58 |
| ResNet | FAR (%) | 8.37 | 17.94 | 18.24 | 6.13 | 12.67 |
| ResNet | CSI (%) | 83.15 | 68.94 | 71.74 | 88.36 | 78.05 |
| Ours | POD (%) | 89.09 | 80.87 | 86.47 | 92.84 | 87.32 |
| Ours | FAR (%) | 7.06 | 18.64 | 19.14 | 7.90 | 13.19 |
| Ours | CSI (%) | 83.44 | 68.23 | 71.78 | 85.99 | 77.36 |

