Super-resolution reconstruction of infrared image based on channel attention and transfer learning



Abstract

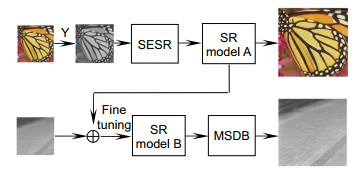

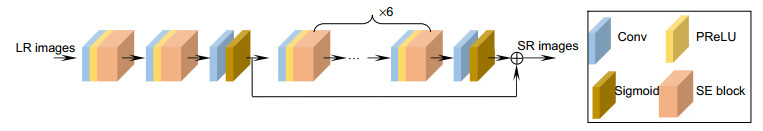

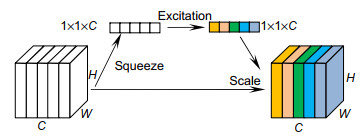

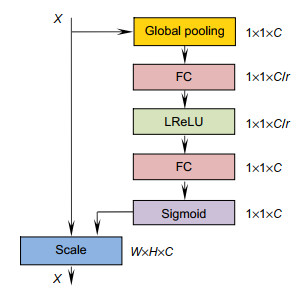

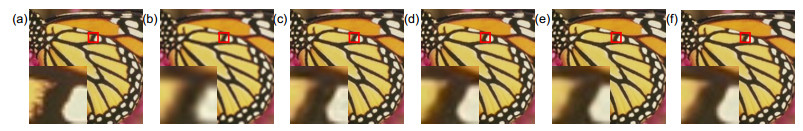

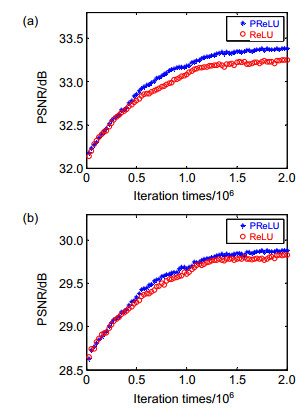

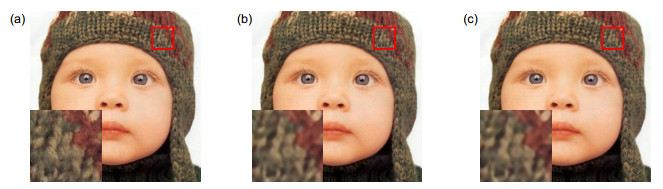

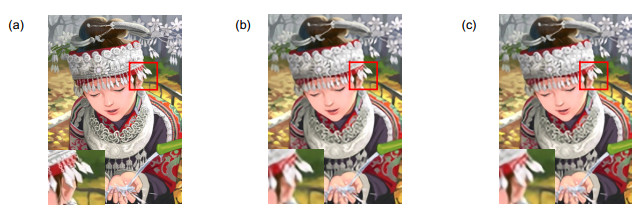

A super-resolution reconstruction method for infrared images based on channel attention and transfer learning is proposed to address the low resolution and poor quality of infrared images. The method designs a deep convolutional neural network that incorporates a channel attention mechanism to enhance its learning ability, and it uses residual learning to mitigate exploding or vanishing gradients and to accelerate convergence. Because high-quality infrared images are difficult to collect and scarce, training is divided into two steps: first, the network is pre-trained on natural images; second, transfer learning is applied by fine-tuning the pre-trained parameters with a small number of high-quality infrared images, so that the model reconstructs infrared images better. Finally, a multi-scale detail boosting filter is added to improve the visual quality of the reconstructed infrared images. Experiments on the Set5 and Set14 datasets and on infrared images show that increasing the network depth and introducing the channel attention mechanism both improve super-resolution reconstruction, that transfer learning effectively compensates for the shortage of infrared training samples, and that the multi-scale detail boosting filter enhances the details and increases the information content of the reconstructed images.
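The two-step training scheme (pre-train on plentiful natural images, then fine-tune on a few infrared images) can be illustrated with a deliberately tiny Python sketch. It is not the authors' network or data: a two-parameter linear model stands in for the CNN, 101 synthetic points on one line stand in for the natural-image set, and four points on a slightly different line stand in for the scarce infrared images. All names, data, and hyperparameters are hypothetical.

```python
# Toy illustration of pre-training followed by fine-tuning.
# The model, data, and hyperparameters are hypothetical stand-ins, not the paper's setup.


def mse(params, data):
    """Mean squared error of the linear model y = w * x + b on (x, y) pairs."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def gradient_descent(params, data, lr, steps):
    """Full-batch gradient descent on the MSE, starting from `params`."""
    w, b = params
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


# Step 1: plentiful "source" data (stand-in for natural images).
source = [(i / 50, 2.0 * (i / 50) + 1.0) for i in range(-50, 51)]
pretrained = gradient_descent((0.0, 0.0), source, lr=0.1, steps=500)

# Step 2: only four samples from a related "target" task (stand-in for infrared images).
target = [(x, 2.2 * x + 1.1) for x in (-1.0, -0.5, 0.5, 1.0)]
finetuned = gradient_descent(pretrained, target, lr=0.1, steps=10)  # start from pre-trained weights
scratch = gradient_descent((0.0, 0.0), target, lr=0.1, steps=10)    # same budget, no pre-training

print(f"pretrained w, b = {pretrained[0]:.3f}, {pretrained[1]:.3f}")
print(f"target MSE after 10 steps: fine-tuned {mse(finetuned, target):.5f}, "
      f"from scratch {mse(scratch, target):.5f}")
```

Because the pre-trained parameters already lie close to the target solution, ten gradient steps on four samples leave a much smaller error than the same ten steps started from zero, which is the effect the fine-tuning step relies on. In practice fine-tuning often uses a smaller learning rate than pre-training; the sketch keeps one rate so that the two runs are directly comparable.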


Key words: super-resolution; infrared image; convolutional neural network; attention; transfer learning


Overview

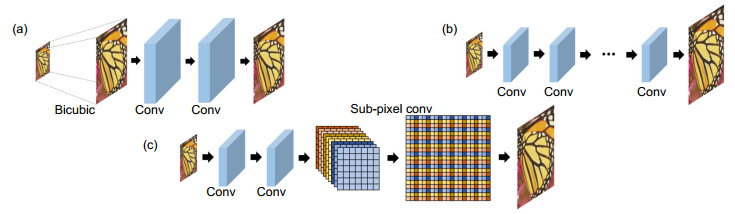

Overview: In recent years, infrared imaging technology has developed rapidly and is increasingly used in military reconnaissance, security surveillance, and medical imaging. However, infrared images are affected by many factors, such as the environment and the equipment, during imaging and transmission. As a result, they often have low resolution, which greatly reduces the information they contain and limits their practical value. How to obtain infrared images with high resolution and rich information has therefore become an urgent problem. Meanwhile, deep learning has advanced rapidly, and deep-learning-based super-resolution methods have emerged. Applying these convolutional neural networks directly to infrared images, however, raises several problems. SRCNN, FSRCNN, and ESPCN have few convolutional layers and limited depth, so the features they learn are relatively homogeneous and the differences and interrelationships between image features are ignored, which makes it difficult to extract deep-level information from infrared images. SRGAN may generate super-resolved images whose fine details deviate from the original image, which is undesirable for military, medical, and surveillance applications. Another obstacle is that it is hard to collect enough high-quality infrared images in practice, whereas common deep learning methods require a large number of images of different scenes and targets as training samples; training on the available infrared images alone therefore often fails to achieve the desired effect. To solve these problems, this paper proposes a super-resolution reconstruction method for infrared images based on channel attention and transfer learning.
The method first designs a deep convolutional neural network that integrates a channel attention mechanism to learn the correlations between channels in the feature space, thereby enhancing the learning ability of the network, and that uses residual learning to alleviate exploding or vanishing gradients and to speed up convergence. Then, because high-quality infrared images are difficult to collect and scarce, network training is divided into two steps: the first step pre-trains a super-resolution model on natural images; the second step applies transfer learning, quickly fine-tuning the pre-trained parameters with a small number of high-quality infrared images to improve reconstruction of infrared images, which yields a super-resolution model for infrared images. Finally, a multi-scale detail boosting (MSDB) module is added to enhance the details and visual quality of the reconstructed infrared images and to increase their information content.
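Channel attention of the squeeze-and-excitation kind [18] can be sketched in plain Python: global average pooling squeezes each channel to one number, two small fully connected layers (ReLU, then sigmoid) turn those numbers into per-channel weights in (0, 1), and each channel is rescaled by its weight. A residual skip then adds the input back. This is a generic sketch, not the SESR implementation: the reduction ratio, the weights, the helper names, and where the skip sits are all assumptions.

```python
import math

# A feature map is a list of channels; each channel is a 2-D list (rows of floats).
# Generic squeeze-and-excitation sketch; weights and layout are hypothetical.


def squeeze(features):
    """Global average pooling: one descriptor per channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in features]


def dense(vec, weights, bias):
    """Fully connected layer; `weights` holds one row per output unit."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]


def channel_attention(features, w1, b1, w2, b2):
    """Return (rescaled features, per-channel weights)."""
    z = squeeze(features)
    hidden = [max(0.0, h) for h in dense(z, w1, b1)]                       # C -> C/r, ReLU
    weights = [1.0 / (1.0 + math.exp(-s)) for s in dense(hidden, w2, b2)]  # C/r -> C, sigmoid
    scaled = [[[wt * v for v in row] for row in ch] for ch, wt in zip(features, weights)]
    return scaled, weights


def add(a, b):
    """Element-wise sum of two feature maps (the residual skip connection)."""
    return [[[x + y for x, y in zip(ra, rb)] for ra, rb in zip(ca, cb)] for ca, cb in zip(a, b)]


# Hypothetical example: 4 channels of 2x2 values, reduction ratio r = 2.
features = [[[c + 1.0] * 2 for _ in range(2)] for c in range(4)]  # channel c is filled with c + 1
w1 = [[1, 0, 0, 0], [0, 1, 0, 0]]
b1 = [0.0, 0.0]
w2 = [[1, 0], [0, 1], [-1, 0], [0, -1]]
b2 = [0.0, 0.0, 0.0, 0.0]

attended, weights = channel_attention(features, w1, b1, w2, b2)
output = add(features, attended)  # x + attention(x)
print([round(w, 3) for w in weights])
```

With all weights set to zero every channel receives sigmoid(0) = 0.5; in a trained network the weights are learned, so that informative channels can be emphasized and others suppressed.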


Table 1. Parameter settings of each convolutional layer

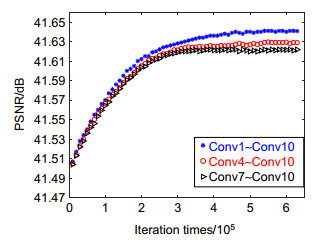

| Name | Number of filters | Kernel size |
|---|---|---|
| Conv1 | 64 | 9×9 |
| Conv2 | 32 | 1×1 |
| Conv3 | 1 | 5×5 |
| Conv4~Conv9 | 64 | 3×3 |
| Conv10 | 1 | 3×3 |
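Table 1 alone does not say how the layers are connected, but under explicit assumptions it supports a quick size check. The sketch below assumes a single-channel (for example, luminance) input, stride-1 undilated convolutions applied strictly one after another, and no channel-attention parameters; all three are assumptions, not statements from the paper, and a different wiring would change the numbers. Under them the stack has a 27×27 receptive field and 193,986 convolution weights and biases.

```python
# (name, number of filters, kernel size) transcribed from Table 1.
LAYERS = (
    [("Conv1", 64, 9), ("Conv2", 32, 1), ("Conv3", 1, 5)]
    + [(f"Conv{i}", 64, 3) for i in range(4, 10)]
    + [("Conv10", 1, 3)]
)


def receptive_field(layers):
    """Receptive field of stride-1, undilated convolutions stacked in sequence."""
    field = 1
    for _, _, kernel in layers:
        field += kernel - 1
    return field


def conv_parameters(layers, in_channels=1):
    """Weights plus biases, assuming each layer consumes only the previous layer's output."""
    total = 0
    for _, filters, kernel in layers:
        total += in_channels * filters * kernel * kernel + filters
        in_channels = filters
    return total


print(receptive_field(LAYERS), conv_parameters(LAYERS))
```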

Table 2. Reconstruction results of 5 methods on 2 datasets (PSNR/SSIM)

| Dataset | Scale | Bicubic | SRCNN | FSRCNN | ESPCN | SESR |
|---|---|---|---|---|---|---|
| Set5 | ×2 | 33.66/0.9299 | 36.66/0.9542 | 37.00/0.9558 | 37.06/0.9559 | 37.39/0.9586 |
| Set5 | ×3 | 30.39/0.8682 | 32.75/0.9090 | 33.16/0.9104 | 33.13/0.9135 | 33.38/0.9142 |
| Set5 | ×4 | 28.42/0.8104 | 30.48/0.8628 | 30.71/0.8657 | 30.90/0.8673 | 31.03/0.8735 |
| Set14 | ×2 | 30.24/0.8688 | 32.42/0.9063 | 32.63/0.9088 | 32.75/0.9098 | 32.94/0.9116 |
| Set14 | ×3 | 27.55/0.7742 | 29.28/0.8209 | 29.42/0.8242 | 29.49/0.8271 | 29.66/0.8298 |
| Set14 | ×4 | 26.00/0.7027 | 27.49/0.7503 | 27.59/0.7535 | 27.73/0.7637 | 27.85/0.7721 |
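The tables report PSNR (in dB) and SSIM. For reference, PSNR = 10·log10(MAX² / MSE), where MAX is the largest possible pixel value (255 for 8-bit images) and MSE is the mean squared error between the reconstruction and the ground truth. The sketch below implements PSNR and a simplified SSIM computed over the whole image as a single window. Standard SSIM instead averages the statistic over local Gaussian windows, and details such as the evaluation channel and border cropping used for these tables are not stated in this section, so the sketch is not expected to reproduce the reported numbers; the sample pixel values are hypothetical.

```python
import math


def _flatten(image):
    """Turn a 2-D list of pixel values into a flat list of floats."""
    return [float(v) for row in image for v in row]


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized grayscale images."""
    a, b = _flatten(reference), _flatten(estimate)
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    return math.inf if mse == 0 else 10.0 * math.log10(max_value ** 2 / mse)


def global_ssim(reference, estimate, max_value=255.0):
    """SSIM evaluated once over the whole image (a simplification of windowed SSIM)."""
    a, b = _flatten(reference), _flatten(estimate)
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((x - mu_a) ** 2 for x in a) / n
    var_b = sum((y - mu_b) ** 2 for y in b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    c1, c2 = (0.01 * max_value) ** 2, (0.03 * max_value) ** 2  # usual SSIM stabilizing constants
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2)
    )


ground_truth = [[52, 55, 61], [59, 79, 61], [85, 76, 62]]
reconstruction = [[54, 55, 60], [58, 80, 63], [84, 75, 62]]
print(f"PSNR = {psnr(ground_truth, reconstruction):.2f} dB, "
      f"SSIM = {global_ssim(ground_truth, reconstruction):.4f}")
```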

Table 3. Reconstruction results of 2 methods on 2 datasets (PSNR/SSIM)

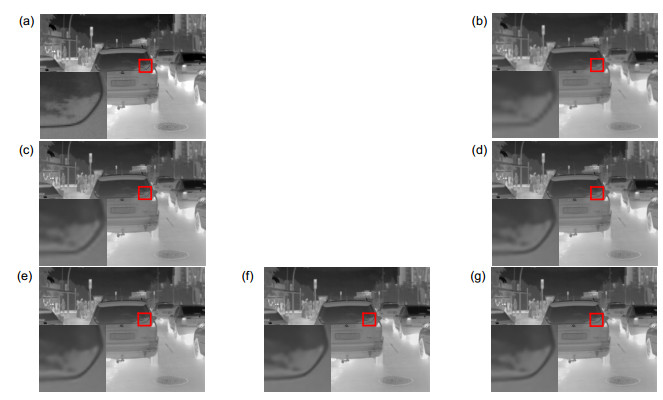

| Dataset | Scale | SESR (no attention) | SESR |
|---|---|---|---|
| Set5 | ×2 | 37.31/0.9576 | 37.39/0.9586 |
| Set5 | ×3 | 33.27/0.9136 | 33.38/0.9142 |
| Set5 | ×4 | 31.02/0.8707 | 31.03/0.8735 |
| Set14 | ×2 | 32.88/0.9108 | 32.94/0.9116 |
| Set14 | ×3 | 29.59/0.8283 | 29.66/0.8298 |
| Set14 | ×4 | 27.86/0.7720 | 27.85/0.7721 |
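The effect of channel attention in Table 3 is easier to read as per-row differences. The short script below only transcribes Table 3 and subtracts the variant without attention from full SESR; it adds no new measurements.

```python
# Table 3 transcribed: (dataset, scale) -> ((PSNR, SSIM) without attention, (PSNR, SSIM) with attention).
TABLE3 = {
    ("Set5", 2): ((37.31, 0.9576), (37.39, 0.9586)),
    ("Set5", 3): ((33.27, 0.9136), (33.38, 0.9142)),
    ("Set5", 4): ((31.02, 0.8707), (31.03, 0.8735)),
    ("Set14", 2): ((32.88, 0.9108), (32.94, 0.9116)),
    ("Set14", 3): ((29.59, 0.8283), (29.66, 0.8298)),
    ("Set14", 4): ((27.86, 0.7720), (27.85, 0.7721)),
}


def attention_gains(table):
    """Per-setting (delta PSNR in dB, delta SSIM) of SESR over SESR without attention."""
    return {
        key: (round(with_att[0] - without[0], 2), round(with_att[1] - without[1], 4))
        for key, (without, with_att) in table.items()
    }


gains = attention_gains(TABLE3)
for (dataset, scale), (d_psnr, d_ssim) in gains.items():
    print(f"{dataset} x{scale}: dPSNR = {d_psnr:+.2f} dB, dSSIM = {d_ssim:+.4f}")
```

The differences show that attention raises SSIM in all six settings and PSNR in five of them; the PSNR gains range from -0.01 dB (Set14, ×4) to 0.11 dB (Set5, ×3).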

Table 4. Reconstruction results of 6 methods on 5 infrared images (PSNR/SSIM)

| Method | Car1 | Car2 | People1 | People2 | Road | Average |
|---|---|---|---|---|---|---|
| Bicubic | 39.21/0.9480 | 37.22/0.9362 | 42.48/0.9558 | 41.02/0.9407 | 40.67/0.9364 | 40.12/0.9434 |
| SRCNN | 40.69/0.9545 | 38.54/0.9456 | 43.11/0.9587 | 41.61/0.9453 | 41.21/0.9413 | 41.05/0.9491 |
| FSRCNN | 41.23/0.9565 | 39.20/0.9497 | 43.27/0.9591 | 41.82/0.9469 | 41.36/0.9422 | 41.38/0.9509 |
| SESR_I | 41.17/0.9561 | 39.13/0.9489 | 43.23/0.9589 | 41.80/0.9463 | 41.33/0.9419 | 41.33/0.9504 |
| SESR | 41.43/0.9576 | 39.44/0.9521 | 43.30/0.9595 | 41.94/0.9484 | 41.34/0.9425 | 41.49/0.9520 |
| SESR_T | 41.75/0.9584 | 39.58/0.9524 | 43.38/0.9598 | 42.01/0.9486 | 41.48/0.9431 | 41.64/0.9525 |

Table 5. Reconstruction results of 3 assessment indicators on 5 infrared images

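| Method | Metric | Car1 | Car2 | People1 | People2 | Road | Average |
|---|---|---|---|---|---|---|---|
| SESR_T | PSNR | 41.75 | 39.58 | 43.38 | 42.01 | 41.48 | 41.64 |
| SESR_T | SSIM | 0.9584 | 0.9524 | 0.9598 | 0.9486 | 0.9431 | 0.9525 |
| SESR_T | NIQE | 5.0882 | 4.6436 | 5.4748 | 4.8767 | 5.2902 | 5.0747 |
| SESR_T+MSDB | PSNR | 40.12 | 38.46 | 42.68 | 41.38 | 40.73 | 40.67 |
| SESR_T+MSDB | SSIM | 0.9554 | 0.9537 | 0.9578 | 0.9463 | 0.9466 | 0.9520 |
| SESR_T+MSDB | NIQE | 4.4907 | 4.3997 | 4.8909 | 4.6168 | 4.9432 | 4.6683 |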
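The multi-scale detail boosting (MSDB) step evaluated in Table 5 comes from [19]. As it is commonly described, it blurs the image I with Gaussians at three scales (B1, B2, B3), takes the detail layers D1 = I - B1, D2 = B1 - B2, D3 = B2 - B3, and adds back D* = (1 - w1·sgn(D1))·D1 + w2·D2 + w3·D3. The pure-Python sketch below follows that description with σ = 1, 2, 4 and w1 = 0.5, w2 = 0.5, w3 = 0.25; these values, the replicated-border handling, and the function names are assumptions, not settings confirmed by this paper.

```python
import math


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, math.ceil(3 * sigma))
    weights = [math.exp(-(i * i) / (2 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def _clamp(index, upper):
    """Clamp an index into [0, upper] (replicates border pixels)."""
    return min(max(index, 0), upper)


def gaussian_blur(image, sigma):
    """Separable Gaussian blur of a 2-D list: horizontal pass, then vertical pass."""
    kernel = gaussian_kernel(sigma)
    r = len(kernel) // 2
    h, w = len(image), len(image[0])
    rows = [[sum(kernel[k + r] * image[y][_clamp(x + k, w - 1)] for k in range(-r, r + 1))
             for x in range(w)] for y in range(h)]
    return [[sum(kernel[k + r] * rows[_clamp(y + k, h - 1)][x] for k in range(-r, r + 1))
             for x in range(w)] for y in range(h)]


def msdb(image, sigmas=(1.0, 2.0, 4.0), w1=0.5, w2=0.5, w3=0.25):
    """Multi-scale detail boosting; sigmas and weights are assumed values."""
    b1, b2, b3 = (gaussian_blur(image, s) for s in sigmas)
    boosted = []
    for y, row in enumerate(image):
        out_row = []
        for x, value in enumerate(row):
            d1 = value - b1[y][x]      # finest detail layer
            d2 = b1[y][x] - b2[y][x]   # intermediate detail layer
            d3 = b2[y][x] - b3[y][x]   # coarsest detail layer
            sign = (d1 > 0) - (d1 < 0)
            out_row.append(value + (1 - w1 * sign) * d1 + w2 * d2 + w3 * d3)
        boosted.append(out_row)
    return boosted


# Hypothetical 9x9 step edge: left part dark (0), right part bright (100).
edge = [[0.0] * 4 + [100.0] * 5 for _ in range(9)]
result = msdb(edge)
print(round(min(map(min, result)), 1), round(max(map(max, result)), 1))
```

On a constant image every detail layer is zero and the output is unchanged; at an edge the filter overshoots on both sides, which sharpens the edge. Because such overshoot moves pixel values away from the ground truth, it may help explain why, in Table 5, adding MSDB lowers the average PSNR from 41.64 dB to 40.67 dB while improving (lowering) the average NIQE from 5.0747 to 4.6683.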

References

[1] Su H, Zhou J, Zhang Z H. Survey of super-resolution image reconstruction methods[J]. Acta Autom Sin, 2013, 39(8): 1202–1213. https://www.cnki.com.cn/Article/CJFDTOTAL-MOTO201308005.htm

[2] Bätz M, Eichenseer A, Seiler J, et al. Hybrid super-resolution combining example-based single-image and interpolation-based multi-image reconstruction approaches[C]//Proceedings of 2015 IEEE International Conference on Image Processing (ICIP), 2015: 58–62.

[3] Kim K I, Kwon Y. Single-image super-resolution using sparse regression and natural image prior[J]. IEEE Trans Pattern Anal Mach Intell, 2010, 32(6): 1127–1133. doi: 10.1109/TPAMI.2010.25

[4] Lian Q S, Zhang W. Image super-resolution algorithms based on sparse representation of classified image patches[J]. Acta Electron Sin, 2012, 40(5): 920–925. https://www.cnki.com.cn/Article/CJFDTOTAL-DZXU201205010.htm

[5] Xiao J S, Liu E Y, Zhu L, et al. Improved image super-resolution algorithm based on convolutional neural network[J]. Acta Opt Sin, 2017, 37(3): 0318011. https://www.cnki.com.cn/Article/CJFDTOTAL-GXXB201703012.htm

[6] Stark H, Oskoui P. High-resolution image recovery from image-plane arrays, using convex projections[J]. J Opt Soc Am A, 1989, 6(11): 1715–1726. doi: 10.1364/JOSAA.6.001715

[7] Irani M, Peleg S. Improving resolution by image registration[J]. CVGIP: Graph Models Image Process, 1991, 53(3): 231–239. doi: 10.1016/1049-9652(91)90045-L

[8] Chang H, Yeung D Y, Xiong Y M. Super-resolution through neighbor embedding[C]//Proceedings of 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2004: 275–282.

[9] Yang J C, Wright J, Huang T S, et al. Image super-resolution via sparse representation[J]. IEEE Trans Image Process, 2010, 19(11): 2861–2873. doi: 10.1109/TIP.2010.2050625

[10] Dong C, Loy C C, He K M, et al. Image super-resolution using deep convolutional networks[J]. IEEE Trans Pattern Anal Mach Intell, 2016, 38(2): 295–307. doi: 10.1109/TPAMI.2015.2439281

[11] Dong C, Loy C C, Tang X O. Accelerating the super-resolution convolutional neural network[C]//Proceedings of the 14th European Conference on Computer Vision, 2016: 391–407.

[12] Shi W Z, Caballero J, Huszár F, et al. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network[C]//Proceedings of 2016 IEEE Conference on Computer Vision and Pattern Recognition, 2016: 1874–1883.

[13] Ledig C, Theis L, Huszár F, et al. Photo-realistic single image super-resolution using a generative adversarial network[C]//Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition, 2017: 4681–4690.

[14] Pan S J, Yang Q. A survey on transfer learning[J]. IEEE Trans Knowl Data Eng, 2010, 22(10): 1345–1359. doi: 10.1109/TKDE.2009.191

[15] Xu Z, Qu C W, He L Q. SAR target super-resolution based on transfer learning[J]. Acta Aeronaut Astronaut Sin, 2015, 36(6): 1940–1952. https://www.cnki.com.cn/Article/CJFDTOTAL-HKXB201506023.htm

[16] Yanai K, Kawano Y. Food image recognition using deep convolutional network with pre-training and fine-tuning[C]//Proceedings of 2015 IEEE International Conference on Multimedia & Expo Workshops, 2015: 1–6.

[17] Du B, Xiong W, Wu J, et al. Stacked convolutional denoising auto-encoders for feature representation[J]. IEEE Trans Cybern, 2017, 47(4): 1017–1027. doi: 10.1109/TCYB.2016.2536638

[18] Hu J, Shen L, Sun G. Squeeze-and-excitation networks[C]//Proceedings of 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2018: 7132–7141.

[19] Kim Y, Koh Y J, Lee C, et al. Dark image enhancement based on pairwise target contrast and multi-scale detail boosting[C]//Proceedings of 2015 IEEE International Conference on Image Processing, 2015: 1404–1408.

[20] Kingma D P, Ba J. Adam: a method for stochastic optimization[Z]. arXiv: 1412.6980, 2014.

[21] Mittal A, Soundararajan R, Bovik A C. Making a "completely blind" image quality analyzer[J]. IEEE Signal Process Lett, 2013, 20(3): 209–212. doi: 10.1109/LSP.2012.2227726

[22] Shao X, Zeng T Y, Wang Z H. No-reference quality assessment method for printed image based on NIQE[J]. Packaging J, 2016, 8(4): 35–39. doi: 10.3969/j.issn.1674-7100.2016.04.007
