Real-time airplane detection algorithm in remote-sensing images based on improved YOLOv3



Abstract

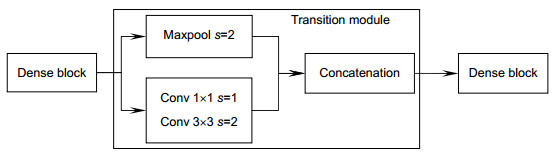

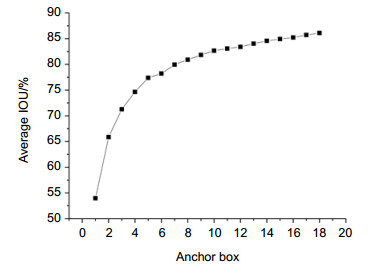

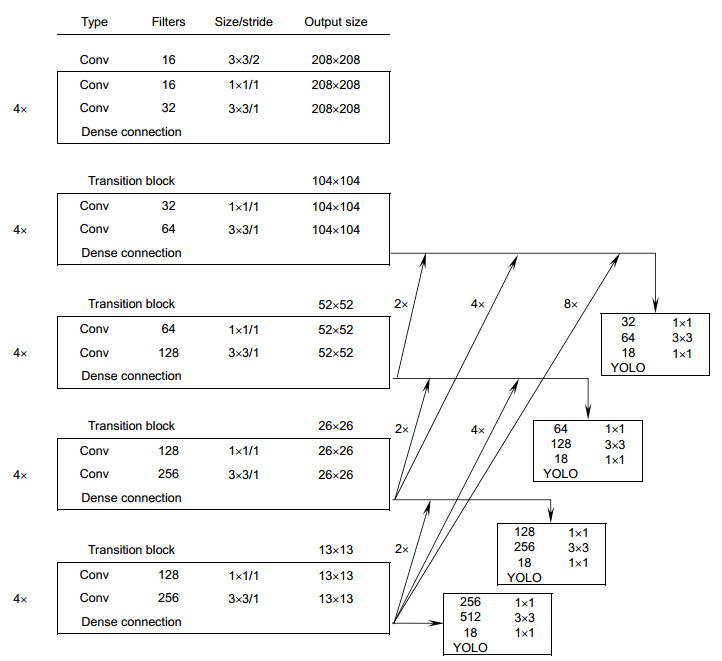

Focusing on airplanes in remote-sensing images, we propose a real-time detection algorithm based on an improved YOLOv3. First, we design a convolutional neural network with 49 convolutional layers specifically for detecting airplanes in remote-sensing images. Second, we apply dense connections to the proposed network and use max pooling to strengthen feature transmission between dense blocks. Finally, because most airplanes in remote-sensing images are small targets, we increase the number of detection scales from three to four and use dense connections to merge feature maps across scales. The algorithm is trained and tested on a purpose-built airplane dataset, and experimental results show that it achieves a precision of 96.26% and a recall of 93.81%.
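The abstract does not spell out how max pooling strengthens the connection between dense blocks. One plausible reading, sketched below in plain Python under stated assumptions, is that the output of a dense block is max-pooled (assumed here to be 2×2 with stride 2) down to the resolution produced by the stride-2 convolution of the following transition module, and the two results are then concatenated along the channel axis, DenseNet-style. The helper names `max_pool_2x2`, `downsample_stub` and `concat_channels` are hypothetical, and `downsample_stub` only stands in for a learned convolution.

```python
from typing import List

Channel = List[List[float]]   # one H x W plane
FeatureMap = List[Channel]    # a list of channels


def max_pool_2x2(fmap: FeatureMap) -> FeatureMap:
    """2x2 max pooling with stride 2, applied to every channel (assumed kernel/stride)."""
    pooled: FeatureMap = []
    for ch in fmap:
        h, w = len(ch), len(ch[0])
        pooled.append([
            [max(ch[i][j], ch[i][j + 1], ch[i + 1][j], ch[i + 1][j + 1])
             for j in range(0, w - 1, 2)]
            for i in range(0, h - 1, 2)
        ])
    return pooled


def downsample_stub(fmap: FeatureMap, out_channels: int) -> FeatureMap:
    """Hypothetical stand-in for the stride-2 3x3 conv of a transition module:
    averages the input channels and keeps every second pixel (no learned weights)."""
    h, w = len(fmap[0]), len(fmap[0][0])
    mean = [[sum(ch[i][j] for ch in fmap) / len(fmap) for j in range(0, w, 2)]
            for i in range(0, h, 2)]
    return [[row[:] for row in mean] for _ in range(out_channels)]


def concat_channels(*fmaps: FeatureMap) -> FeatureMap:
    """Dense connection: stack feature maps of equal spatial size along the channel axis."""
    sizes = {(len(f[0]), len(f[0][0])) for f in fmaps}
    if len(sizes) != 1:
        raise ValueError(f"spatial sizes differ: {sizes}")
    return [ch for f in fmaps for ch in f]


# A toy 3-channel, 8x8 output of a dense block.
block_out = [[[float(c * 100 + i * 8 + j) for j in range(8)] for i in range(8)]
             for c in range(3)]
down = downsample_stub(block_out, out_channels=2)    # 2 channels, 4x4
shortcut = max_pool_2x2(block_out)                   # 3 channels, 4x4
fused = concat_channels(down, shortcut)              # 5 channels, 4x4
print(len(fused), len(fused[0]), len(fused[0][0]))   # 5 4 4
```

Concatenating `block_out` directly with `down` raises `ValueError`, because the stride-2 layer has halved the resolution; in this sketch, the pooling step is what lets features from before the downsampling layer reach the next dense block.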


Overview

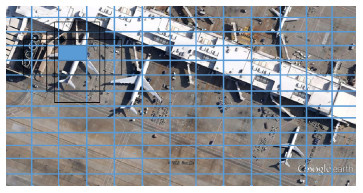

The detection of airplanes in remote-sensing images has important applications in many domains. However, because of the limited performance of traditional machine-learning methods, airplanes in remote-sensing images are difficult to detect. Recently, deep convolutional neural networks have been applied to object detection and have reached excellent accuracy. YOLO is one of the best-known real-time, regression-based object detection algorithms, and compared with other algorithms it generalizes better across domains.

Focusing on airplanes in remote-sensing images, we propose a real-time detection algorithm based on an improved YOLOv3. First, we design a convolutional neural network with 49 convolutional layers specifically for detecting airplanes in remote-sensing images; its transition blocks use 1×1 convolution kernels to further reduce the number of parameters. Second, we apply dense connections to the proposed network and use max pooling to strengthen feature transmission between two dense blocks, so that the feature path interrupted by the downsampling convolutional layer between the blocks is reconnected. The dense connections allow the network to avoid overfitting and reach high accuracy even though it is trained on relatively little data. Finally, because most airplanes in remote-sensing images are small targets, we increase the number of detection scales from three to four and use dense connections to merge feature maps across scales. The anchor boxes are obtained by running k-means clustering on the bounding boxes of the training set. The algorithm is trained and tested on a purpose-built airplane dataset containing 990 remote-sensing images.
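The text states only that the anchors come from k-means on the training-set bounding boxes. The sketch below assumes the 1 − IoU distance popularized by YOLOv2, clusters (width, height) pairs only, and uses made-up box sizes; the number of anchors `k` used in the paper is not given here, and the function names are hypothetical.

```python
import random
from typing import List, Tuple

Box = Tuple[float, float]  # (width, height) of a ground-truth box, in pixels


def iou_wh(a: Box, b: Box) -> float:
    """IoU of two boxes that share the same centre, so only width/height matter."""
    inter = min(a[0], b[0]) * min(a[1], b[1])
    union = a[0] * a[1] + b[0] * b[1] - inter
    return inter / union


def kmeans_anchors(boxes: List[Box], k: int, iters: int = 100, seed: int = 0) -> List[Box]:
    """k-means on (w, h) pairs with distance d = 1 - IoU (assumed, YOLOv2-style)."""
    rng = random.Random(seed)
    centroids = rng.sample(boxes, k)          # initialise with k distinct boxes
    for _ in range(iters):
        clusters: List[List[Box]] = [[] for _ in range(k)]
        for box in boxes:
            # Smallest 1 - IoU is the same as largest IoU.
            nearest = max(range(k), key=lambda i: iou_wh(box, centroids[i]))
            clusters[nearest].append(box)
        # New centroid = mean width and height of its cluster (keep the old one if empty).
        updated = [
            (sum(w for w, _ in c) / len(c), sum(h for _, h in c) / len(c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
        if updated == centroids:              # assignments no longer change
            break
        centroids = updated
    return sorted(centroids, key=lambda wh: wh[0] * wh[1])


# Made-up box sizes: three small and three larger airplanes.
boxes = [(10, 12), (12, 10), (11, 11), (60, 55), (58, 62), (62, 60)]
print(kmeans_anchors(boxes, k=2))   # [(11.0, 11.0), (60.0, 59.0)]
```

Using 1 − IoU instead of Euclidean distance keeps large boxes from dominating the clustering, which matters when, as here, most targets are small.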
Qualitative results show that our algorithm is more robust than existing algorithms and achieves especially high recall on small targets. Quantitatively, it achieves 96.26% precision, 93.81% recall and 89.31% AP, a relative AP improvement of 13.1% over YOLOv3. The proposed detector runs in real time at 58.3 frames per second on a GTX 1070 Ti GPU. This study demonstrates the effectiveness and accuracy of deep convolutional neural networks for detecting airplanes in remote-sensing images, and also shows that the performance of a convolutional neural network depends on both its structure and the amount of training data.
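The 13.1% figure is a relative gain, not a difference in percentage points. A short check using the AP values from the comparison table (89.31% for the proposed detector, 78.97% for YOLOv3) reproduces it; the function name is illustrative only.

```python
def relative_improvement(new: float, baseline: float) -> float:
    """Gain of `new` over `baseline`, expressed as a percentage of the baseline."""
    return (new - baseline) / baseline * 100.0


ap_air, ap_yolov3 = 89.31, 78.97          # AP/% from the comparison table
absolute_gain = ap_air - ap_yolov3         # percentage points
relative_gain = relative_improvement(ap_air, ap_yolov3)
print(f"absolute: {absolute_gain:.2f} points, relative: {relative_gain:.1f}%")
# absolute: 10.34 points, relative: 13.1%
```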


Table 1. The network structure of Darknet49

| Type | Output | Filters | Size |
|---|---|---|---|
| Conv | 208×208 | 16 | 3×3 conv, stride=2 |
| Residual block (1) ×4 | 208×208 | 16 | 1×1 conv, stride=1 |
| | | 32 | 3×3 conv, stride=1 |
| Transition module | 104×104 | 16 | 1×1 conv, stride=1 |
| | | 32 | 3×3 conv, stride=2 |
| Residual block (2) ×4 | 104×104 | 32 | 1×1 conv, stride=1 |
| | | 64 | 3×3 conv, stride=1 |
| Transition module | 52×52 | 32 | 1×1 conv, stride=1 |
| | | 64 | 3×3 conv, stride=2 |
| Residual block (3) ×4 | 52×52 | 64 | 1×1 conv, stride=1 |
| | | 128 | 3×3 conv, stride=1 |
| Transition module | 26×26 | 64 | 1×1 conv, stride=1 |
| | | 128 | 3×3 conv, stride=2 |
| Residual block (4) ×4 | 26×26 | 128 | 1×1 conv, stride=1 |
| | | 256 | 3×3 conv, stride=1 |
| Transition module | 13×13 | 128 | 1×1 conv, stride=1 |
| | | 256 | 3×3 conv, stride=2 |
| Residual block (5) ×4 | 13×13 | 128 | 1×1 conv, stride=1 |
| | | 256 | 3×3 conv, stride=1 |
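The Darknet49 structure in Table 1 can be checked mechanically: one stem convolution, five residual stages of four two-convolution blocks, and four two-convolution transition modules give 1 + 40 + 8 = 49 convolutional layers. The sketch below walks the table assuming a 416×416 input (inferred from the 208×208 output of the first stride-2 convolution) and "same" padding. It also counts predicted boxes per image assuming three anchors per scale (the YOLOv3 default) and a fourth detection scale on the 104×104 map; both are assumptions, not details confirmed in this section.

```python
from typing import Dict, List, Tuple

# (stage name, repeats, [(filters, kernel, stride), ...]) transcribed from Table 1.
DARKNET49: List[Tuple[str, int, List[Tuple[int, int, int]]]] = [
    ("conv", 1, [(16, 3, 2)]),
    ("residual_1", 4, [(16, 1, 1), (32, 3, 1)]),
    ("transition_1", 1, [(16, 1, 1), (32, 3, 2)]),
    ("residual_2", 4, [(32, 1, 1), (64, 3, 1)]),
    ("transition_2", 1, [(32, 1, 1), (64, 3, 2)]),
    ("residual_3", 4, [(64, 1, 1), (128, 3, 1)]),
    ("transition_3", 1, [(64, 1, 1), (128, 3, 2)]),
    ("residual_4", 4, [(128, 1, 1), (256, 3, 1)]),
    ("transition_4", 1, [(128, 1, 1), (256, 3, 2)]),
    ("residual_5", 4, [(128, 1, 1), (256, 3, 1)]),
]


def walk(input_size: int = 416) -> Tuple[int, Dict[str, int]]:
    """Count conv layers and track the feature-map side length after each stage.
    Assumes 'same' padding, so only stride-2 layers change the spatial size."""
    size, n_conv, outputs = input_size, 0, {}
    for name, repeats, convs in DARKNET49:
        for _ in range(repeats):
            for _filters, _kernel, stride in convs:
                n_conv += 1
                size //= stride
        outputs[name] = size
    return n_conv, outputs


def num_predictions(grids: List[int], anchors_per_scale: int = 3) -> int:
    """Boxes predicted per image: one set of anchors for every cell of every grid."""
    return anchors_per_scale * sum(g * g for g in grids)


n_conv, outputs = walk()
print(n_conv)                                          # 49
print(outputs["transition_4"], outputs["residual_5"])  # 13 13
print(num_predictions([13, 26, 52]))                   # 10647 (three YOLOv3 scales)
print(num_predictions([13, 26, 52, 104]))              # 43095 (with an assumed 104x104 scale)
```

Residual shortcuts are additions, not convolutions, so they do not contribute to the layer count. Under these assumptions the extra fine-grained scale quadruples the number of candidate boxes, which is where the small airplanes would be picked up.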

Table 1. Performance comparison of 5 algorithms

| Algorithm | P/% | R/% | F1/% | AP/% | RIOU/% | Speed/(f/s) | Processing time/ms |
|---|---|---|---|---|---|---|---|
| YOLOv3 | 93.56 | 78.9 | 85.61 | 78.97 | 68.80 | 33.2 | 30.1 |
| YOLOv3-tiny | 90.82 | 83.05 | 86.76 | 78.99 | 67.05 | 215.2 | 4.6 |
| YOLOv3-air | 96.26 | 93.81 | 95.02 | 89.31 | 72.46 | 58.3 | 17.2 |
| YOLOv2 | 87.11 | 62.27 | 72.62 | 60.92 | 60.28 | 47.5 | 21.1 |
| YOLOv2-tiny | 67.44 | 54.41 | 60.23 | 46.87 | 45.83 | 207.5 | 4.8 |
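The speed and processing-time columns are two views of the same measurement: processing time per image is roughly 1000 ms divided by the frame rate. Recomputing it from each reported speed and rounding to 0.1 ms reproduces every entry in the last column, as this small check shows.

```python
# (speed in frames/s, processing time in ms per image) from the comparison table
timings = {
    "YOLOv3": (33.2, 30.1),
    "YOLOv3-tiny": (215.2, 4.6),
    "YOLOv3-air": (58.3, 17.2),
    "YOLOv2": (47.5, 21.1),
    "YOLOv2-tiny": (207.5, 4.8),
}

for name, (fps, ms) in timings.items():
    implied_ms = 1000.0 / fps   # one second divided by frames per second
    print(f"{name:12s} 1000/{fps} = {implied_ms:.2f} ms (table: {ms} ms)")
```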

Table 2. Performance comparison of YOLOv3-air with training sets of different sizes

| Model | P/% | R/% | F1/% | AP/% | RIOU/% |
|---|---|---|---|---|---|
| YOLOv3-air | 96.26 | 93.81 | 95.02 | 89.31 | 72.46 |
| YOLOv3-air-500 | 93.47 | 87.25 | 90.25 | 86.53 | 70.74 |
| YOLOv3-air-300 | 92.62 | 74.49 | 82.57 | 78.12 | 67.15 |
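F1 is the harmonic mean of precision and recall, F1 = 2PR / (P + R). Recomputing it from the reported P and R reproduces the F1 column of both comparison tables to two decimal places; AP and RIOU cannot be derived this way because they depend on the full detection results. The function name is illustrative.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; inputs and result in percent."""
    return 2 * precision * recall / (precision + recall)


# (P/%, R/%, reported F1/%) copied from the two comparison tables
reported = {
    "YOLOv3": (93.56, 78.9, 85.61),
    "YOLOv3-tiny": (90.82, 83.05, 86.76),
    "YOLOv3-air": (96.26, 93.81, 95.02),
    "YOLOv2": (87.11, 62.27, 72.62),
    "YOLOv2-tiny": (67.44, 54.41, 60.23),
    "YOLOv3-air-500": (93.47, 87.25, 90.25),
    "YOLOv3-air-300": (92.62, 74.49, 82.57),
}

for name, (p, r, f1_table) in reported.items():
    f1 = f1_score(p, r)
    status = "ok" if abs(f1 - f1_table) < 0.005 else "MISMATCH"
    print(f"{name:15s} computed {f1:.2f}  reported {f1_table:.2f}  {status}")
```

Because F1 is a harmonic mean, it is pulled toward the weaker of the two inputs, which is why the recall drop of the smaller training sets shows up so clearly in their F1 values.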

