-

摘要

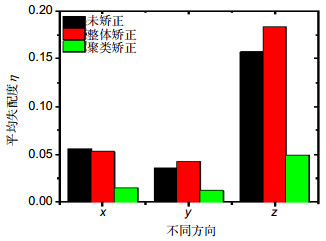

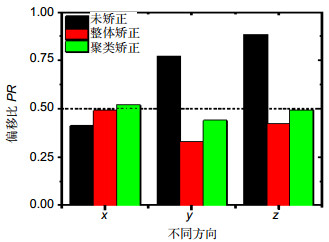

针对单一传感器在动态场景感知问题上的局限性,设计了一种融合激光与视觉的实现系统,并对运动检测中的背景显露区误判问题和融合中不同传感器间点云的失配问题分别提出了改进算法。在运动检测上,首先基于视觉的背景差分算法对激光进行前景点分拣,再以激光前景点为启发信息进行视觉前景聚类。在融合失配问题上,首先基于栅格失配度分别对激光和视觉点云进行聚类分割,再以激光为基准,逐一将对应的视觉点云与之配准,滤除噪声后所得到的矫正点云可用于场景重建进行进一步验证。实验结果表明,改进算法所获得的融合前景对"影子"有更好的鲁棒性;较之整体配准的矫正,改进算法在平均失配度上降低了约75%,在y和z方向上的偏移比收敛了至少5%。

Abstract

To address the limitations of a single sensor in dynamic scene perception, a system that fuses laser and vision was designed. In addition, two improved algorithms were proposed: one for false foreground detection in uncovered background regions during motion detection, and one for the mismatching between point clouds from different sensors during fusion. For motion detection, laser foreground points were first sorted out using a vision-based background subtraction algorithm, and the visual foreground was then clustered using the laser foreground points as heuristic information. For the fusion mismatch, the laser and vision point clouds were first segmented into clusters based on the cell mismatching degree. Then, taking the laser clusters as the reference, each corresponding stereo point cloud cluster was registered to its laser cluster one by one. After noise filtering, the corrected point cloud could be used for scene reconstruction as further verification. The experimental results showed that the final fused foreground was more robust to shadows. Compared with correction by whole registration, the improved algorithm reduced the average mismatching degree by about 75%, and the offset ratios in the y and z directions converged by at least 5%.
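The motion detection steps can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation, and several pieces are assumptions: a 3x4 matrix `P` stands in for the joint calibration matrix, the visual foreground `mask` is taken as given from any background subtraction method, a laser point is treated as foreground only if it projects onto the mask and its range differs from a hypothetical per-point background range `bg_ranges` by more than a hypothetical threshold `min_change`, and 4-connected region growing plays the role of the heuristic foreground clustering.

```python
import math
from collections import deque


def project(P, point):
    """Project a 3D laser point (x, y, z) to an integer pixel (u, v) using a
    3x4 matrix P (assumed stand-in for the joint calibration matrix)."""
    x, y, z = point
    hom = [P[r][0] * x + P[r][1] * y + P[r][2] * z + P[r][3] for r in range(3)]
    if hom[2] <= 0:  # point lies behind the camera
        return None
    return round(hom[0] / hom[2]), round(hom[1] / hom[2])


def sort_laser_foreground(P, points, bg_ranges, mask, min_change=0.1):
    """Return pixel seeds of laser points that land on the visual foreground
    mask AND whose range differs from the (hypothetical) laser background
    range by more than min_change."""
    h, w = len(mask), len(mask[0])
    seeds = []
    for (x, y, z), r_bg in zip(points, bg_ranges):
        uv = project(P, (x, y, z))
        if uv is None:
            continue
        u, v = uv
        r = math.sqrt(x * x + y * y + z * z)
        if 0 <= v < h and 0 <= u < w and mask[v][u] and abs(r - r_bg) > min_change:
            seeds.append((u, v))
    return seeds


def cluster_from_seeds(mask, seeds):
    """Grow 4-connected regions of the visual foreground mask from the laser
    seeds. Foreground blobs containing no seed (e.g. a shadow on a static
    surface, which changes brightness but not range) are discarded."""
    h, w = len(mask), len(mask[0])
    keep = [[False] * w for _ in range(h)]
    queue = deque(seeds)
    for u, v in seeds:
        keep[v][u] = True
    while queue:
        u, v = queue.popleft()
        for du, dv in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nu, nv = u + du, v + dv
            if 0 <= nv < h and 0 <= nu < w and mask[nv][nu] and not keep[nv][nu]:
                keep[nv][nu] = True
                queue.append((nu, nv))
    return keep
```

Seeding the region growing with laser points means a visual blob survives only where the laser also confirms a change, which is one plausible way to reject shadows and uncovered background; the paper's actual clustering rule may differ.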

-

Overview

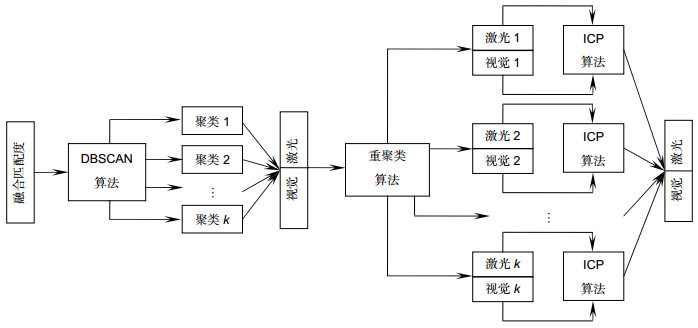

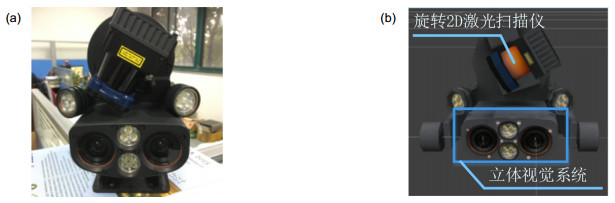

Abstract: Moving object detection and scene reconstruction, core techniques in video surveillance and 3D map building, are the foundations of real-time navigation, obstacle avoidance and path planning. As two important sub-problems of environment perception, they are not only closely connected with the development of many fields, such as robotics, unmanned aerial vehicles, unmanned ground vehicles and motion-sensing games, but are also becoming part of everyday intelligent living. Current research is mainly based on either a single laser sensor or a single vision sensor, which struggles to meet the requirements of real-time, multi-task scenarios because of limitations in field of view, data volume, data richness, real-time performance and anti-interference capability. Exploiting the mutual complementarity and constraint between laser information and visual information, a system that fuses laser and vision was designed. In addition, two improved algorithms were proposed: one for false foreground detection in motion detection, and one for the mismatching between point clouds from different sensors.

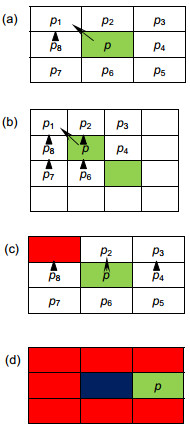

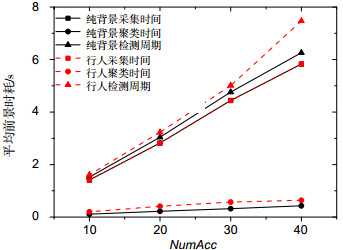

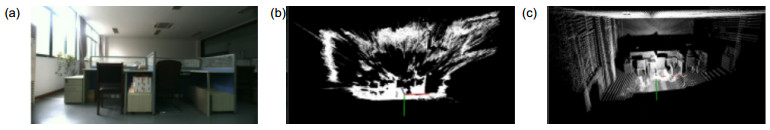

For motion detection, a novel fusion motion detection algorithm based on foreground clustering was proposed to address false detections in uncovered background areas. In this algorithm, laser-based motion detection was performed jointly with the fused vision system. First, the 2D foreground and background of the vision system were detected with a visual background subtraction algorithm. Next, the laser points were mapped onto the visual 2D image through the joint calibration matrix. Finally, the visual foreground was clustered using the laser foreground points as heuristic information.

Registering the point clouds directly through the extrinsic calibration between the sensors introduces noise into the fusion and leaves the point clouds mismatched, so related optimization strategies were proposed for the stage before scene reconstruction. Based on the ICP algorithm, a novel mismatching correction algorithm was proposed. The laser and vision point clouds were first segmented into clusters based on the cell mismatching degree. Then, taking the laser clusters as the reference, the corresponding stereo point clouds were registered to them. After filtering, the corrected point cloud could be used for further verification by reconstructing the scene.
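The cluster-wise correction can be sketched in pure Python as follows. This is an illustration under stated assumptions, not the authors' method: the exact definition of the cell mismatching degree is not given here, so the hypothetical `mismatch_degree` takes it to be the share of occupied grid cells that only one sensor occupies; clusters are assumed to be 26-connected occupied cells; each stereo cluster is paired with the laser cluster whose centroid is nearest (an assumed pairing rule); and a translation-only ICP stands in for full rigid ICP, so rotation is ignored. Noise filtering is omitted.

```python
import itertools
import math


def cell_of(p, size):
    """Integer grid cell containing point p for cubic cells of edge `size`."""
    return tuple(math.floor(c / size) for c in p)


def mismatch_degree(laser, stereo, size):
    """Hypothetical cell mismatching degree: share of occupied cells that
    only one of the two clouds occupies (0.0 means identical occupancy)."""
    a = {cell_of(p, size) for p in laser}
    b = {cell_of(p, size) for p in stereo}
    union = a | b
    return len(a ^ b) / len(union) if union else 0.0


def segment(cloud, size):
    """Split a cloud into clusters whose occupied cells are 26-connected."""
    by_cell = {}
    for p in cloud:
        by_cell.setdefault(cell_of(p, size), []).append(p)
    seen, clusters = set(), []
    for start in by_cell:
        if start in seen:
            continue
        seen.add(start)
        stack, members = [start], []
        while stack:
            cell = stack.pop()
            members.extend(by_cell[cell])
            for d in itertools.product((-1, 0, 1), repeat=3):
                nb = tuple(c + o for c, o in zip(cell, d))
                if nb in by_cell and nb not in seen:
                    seen.add(nb)
                    stack.append(nb)
        clusters.append(members)
    return clusters


def centroid(cluster):
    return tuple(sum(p[i] for p in cluster) / len(cluster) for i in range(3))


def icp_translation(source, target, iters=20, tol=1e-12):
    """Translation-only point-to-point ICP: pair each source point with its
    nearest target point, shift by the mean residual, and repeat."""
    src = [list(p) for p in source]
    for _ in range(iters):
        pairs = [(p, min(target, key=lambda q: math.dist(p, q))) for p in src]
        shift = [sum(q[i] - p[i] for p, q in pairs) / len(pairs) for i in range(3)]
        for p in src:
            for i in range(3):
                p[i] += shift[i]
        if sum(s * s for s in shift) < tol:
            break
    return [tuple(p) for p in src]


def correct_by_cluster(laser, stereo, size):
    """Register each stereo cluster to the laser cluster with the nearest
    centroid, one cluster at a time, with the laser as the reference."""
    laser_clusters = segment(laser, size)
    corrected = []
    for s in segment(stereo, size):
        c = centroid(s)
        target = min(laser_clusters, key=lambda lc: math.dist(c, centroid(lc)))
        corrected.extend(icp_translation(s, target))
    return corrected
```

Because each cluster receives its own shift, two objects whose stereo points are offset by different amounts can both be aligned, which a single whole-cloud translation cannot do; this is the intuition behind comparing clustering correction with whole correction.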

Combining the texture features and real-time performance of vision with the accuracy and robustness of laser, a fusion system for dynamic scene perception was designed. In this multi-sensor fusion system, the laser and visual information could check and complement each other. The experimental results showed that the final fused foreground was more robust to shadows. Compared with correction by whole registration, the average mismatching degree was reduced by about 75%, and the offset ratios in the y and z directions converged by at least 5%.

-


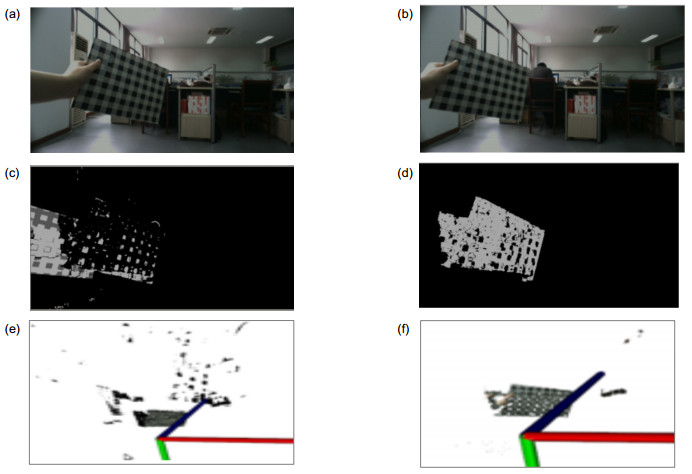

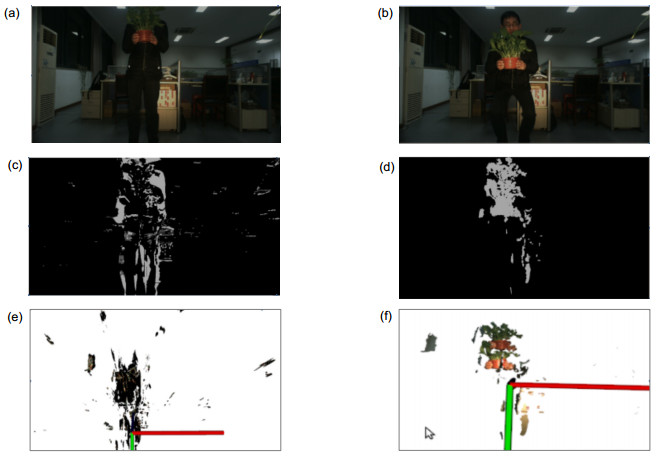


Figure 5. A moving notebook. (a) Colour image of frame k. (b) Colour image of frame k+10. (c) 2D foreground from the comparison algorithm. (d) 2D foreground from the proposed algorithm. (e) 3D foreground from the comparison algorithm. (f) 3D foreground from the proposed algorithm.


Figure 6. A person putting down a flower pot. (a) Colour image of frame k. (b) Colour image of frame k+10. (c) 2D foreground from the comparison algorithm. (d) 2D foreground from the proposed algorithm. (e) 3D foreground from the comparison algorithm. (f) 3D foreground from the proposed algorithm.

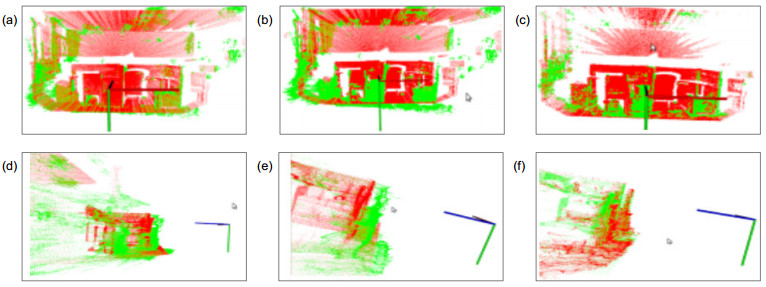


Figure 9. Comparison of point cloud mismatching correction results under different algorithms. (a) Point cloud before correction. (b) Point cloud after whole correction. (c) Point cloud after clustering correction. (d) Detail of the point cloud before correction. (e) Detail of the point cloud after whole correction. (f) Detail of the point cloud after clustering correction.

-

