A laser inertial SLAM approach based on planar expansion and constrained optimization



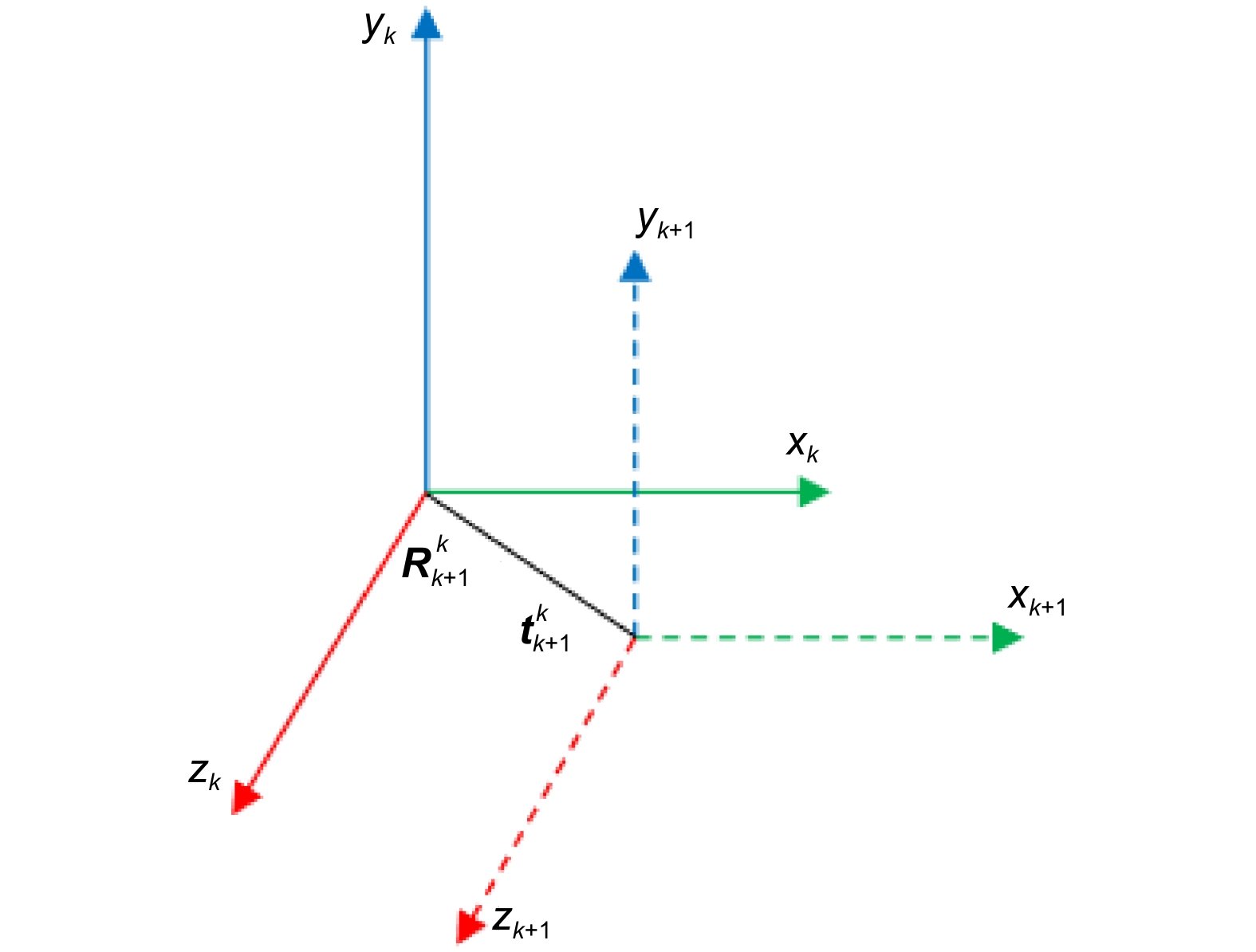

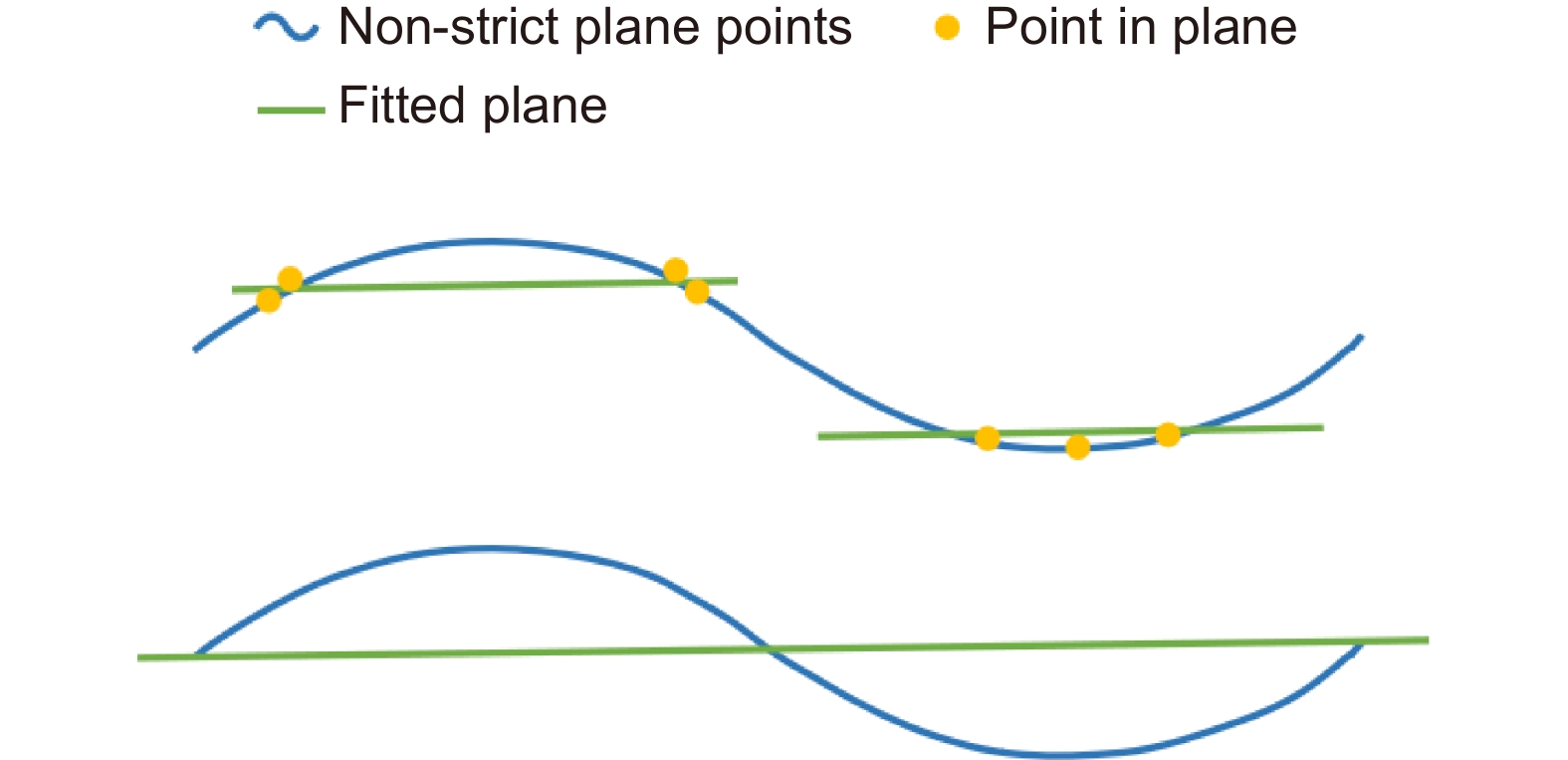

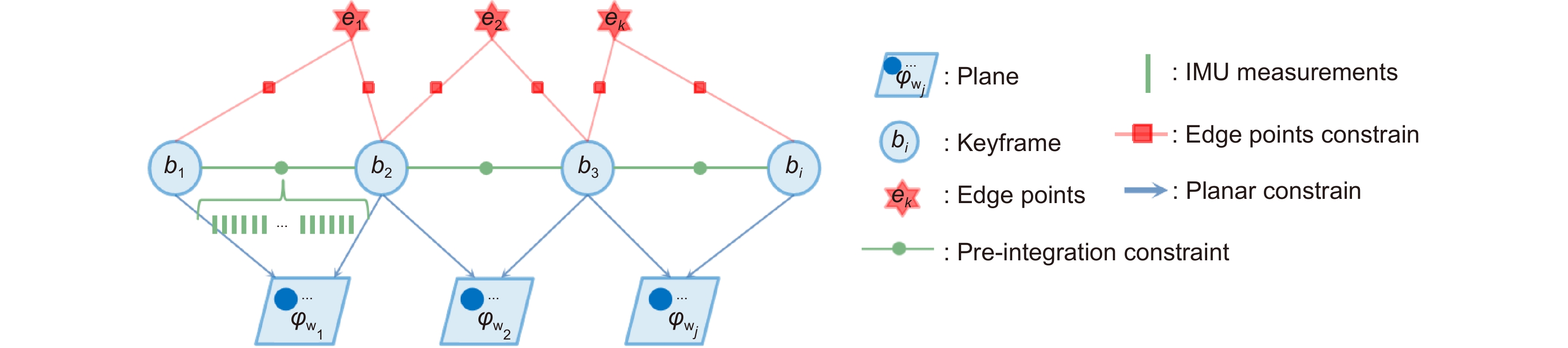

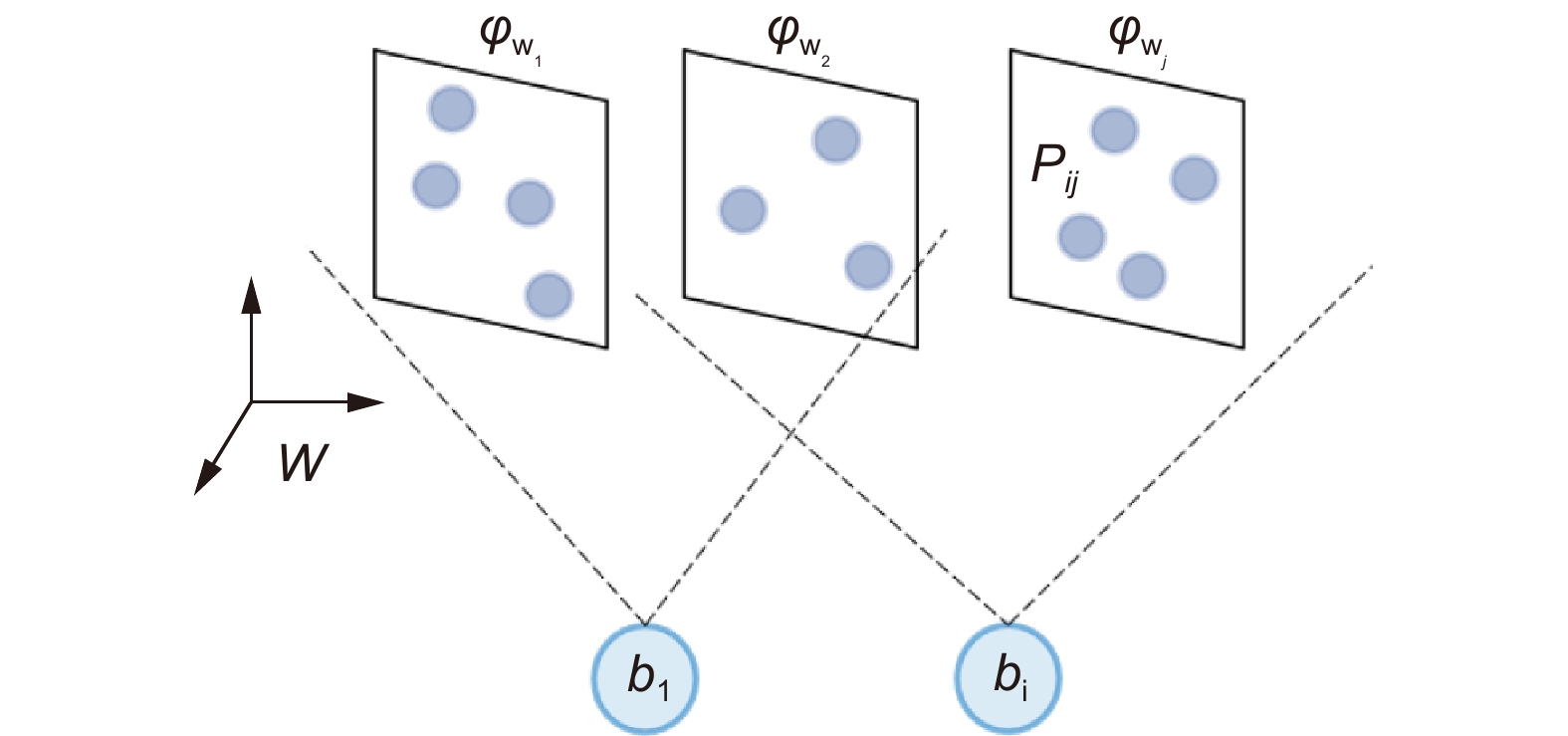

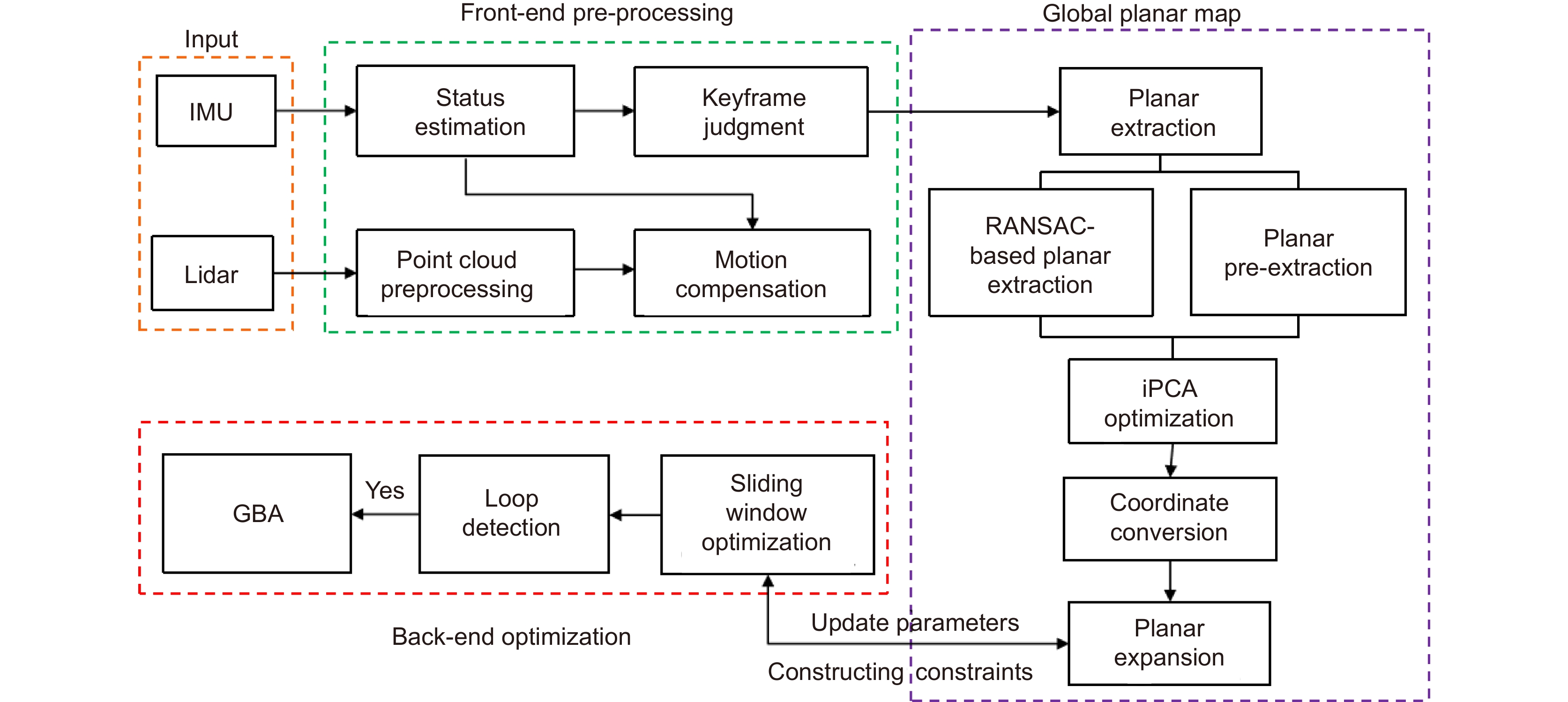

Abstract: To address the low localization accuracy of laser SLAM algorithms in indoor scenes with scarce features and narrow corners, a laser-inertial SLAM method based on planar expansion and constrained optimization is proposed. An IMU is fused into the laser SLAM pipeline, and the IMU state estimate is used to compensate the positions of the laser points and to select keyframes. A global planar map is built: planes are extracted from keyframes with the RANSAC algorithm, a pre-extraction method tracks planar features to reduce the time cost, and the fitting results are refined by iPCA to remove the effect of noise on RANSAC. Point-to-plane distances are used to construct the planar constraint equations, which are fused with the edge-point constraints and pre-integration constraints into a single nonlinear optimization model; solving it yields the optimized plane parameters and keyframe poses. To verify the algorithm, experiments are carried out on the public M2DGR dataset and on a private dataset. The results show that the algorithm performs well on most of the public sequences and that, on the private dataset, it improves localization accuracy by 61.9% over the widely used Faster-LIO algorithm, demonstrating good robustness and real-time performance.


Key words:

- SLAM

- indoor scene

- global planar maps

- pre-extraction

- iPCA optimization

- planar constraints


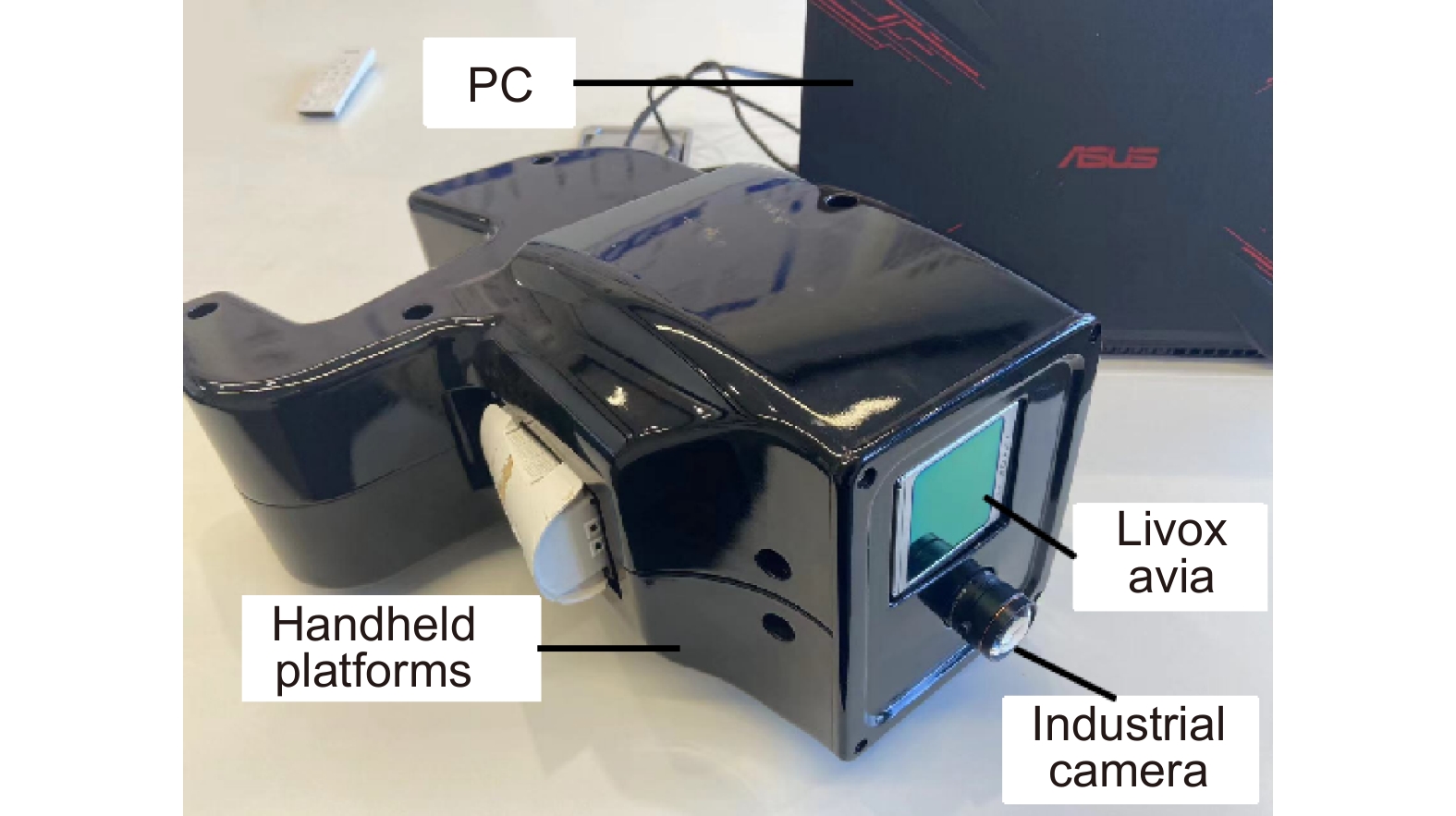

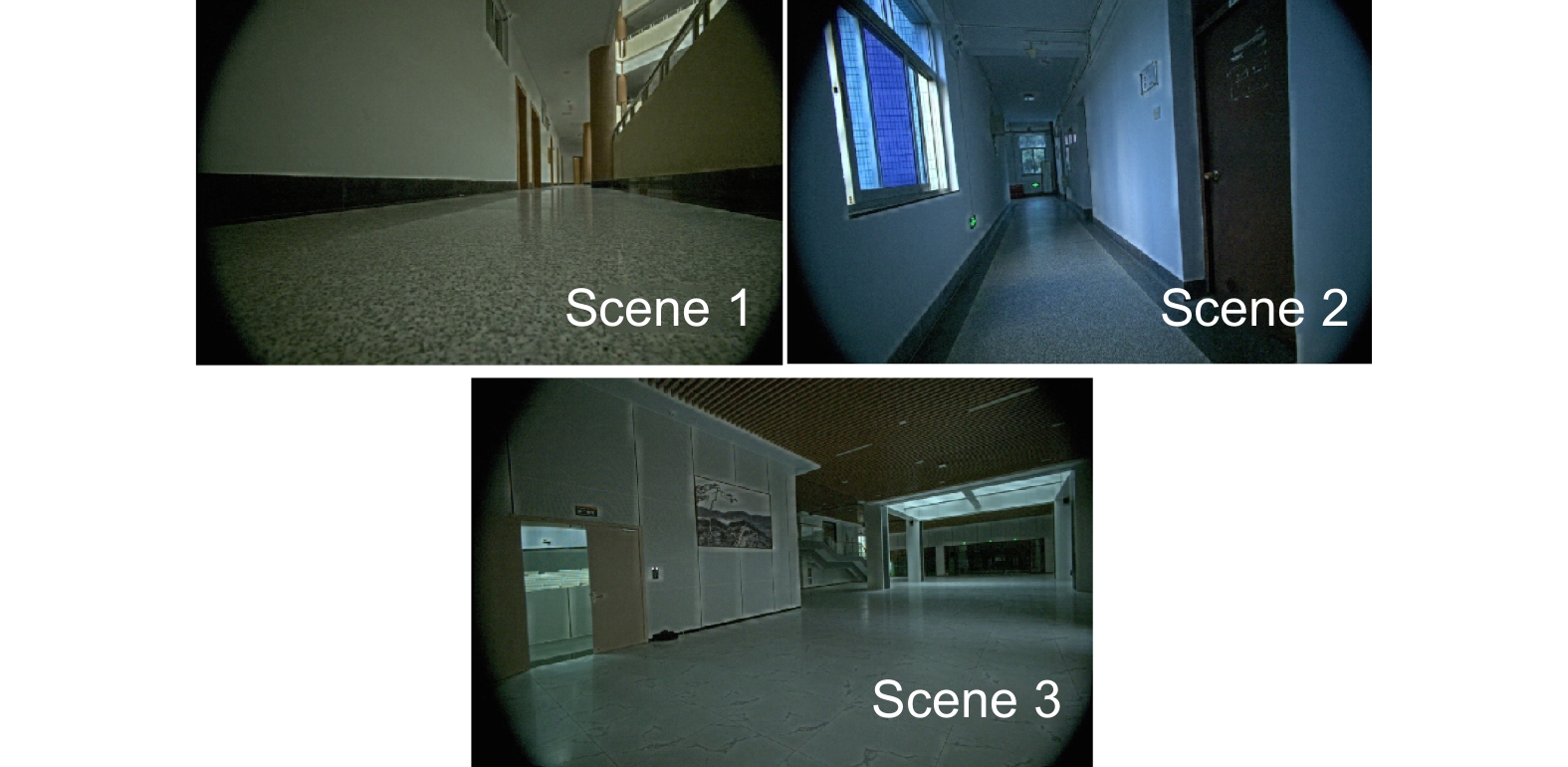

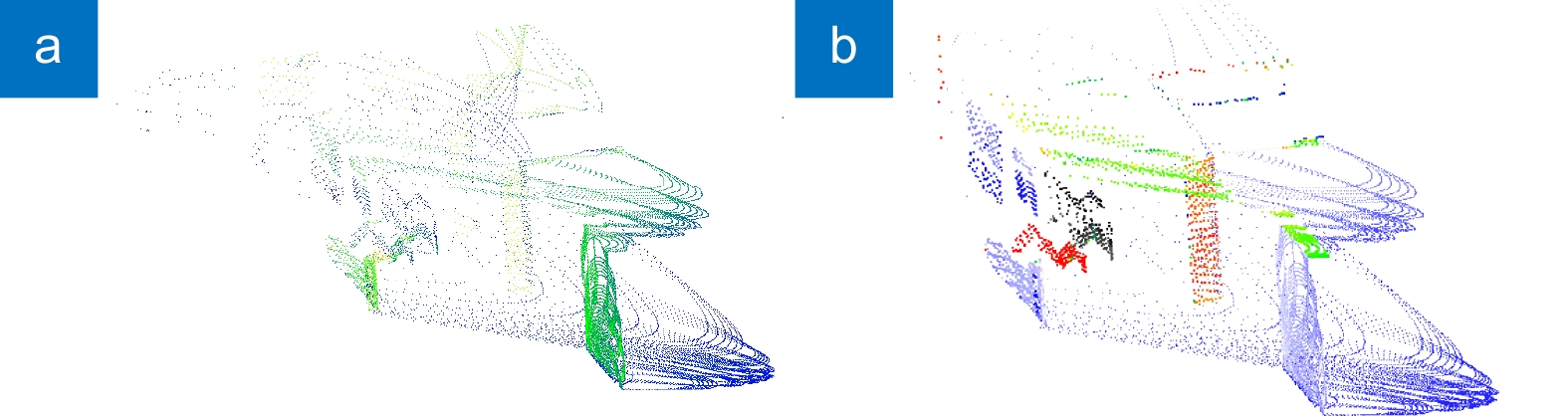

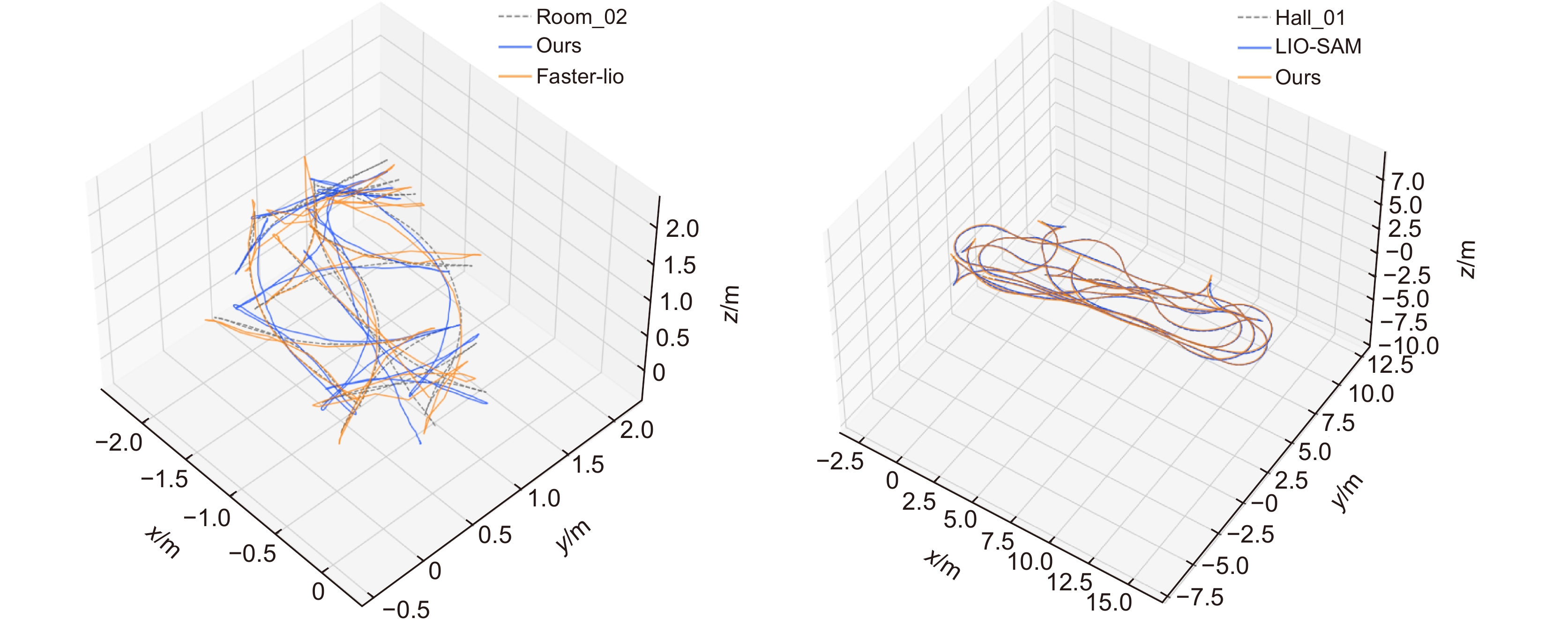

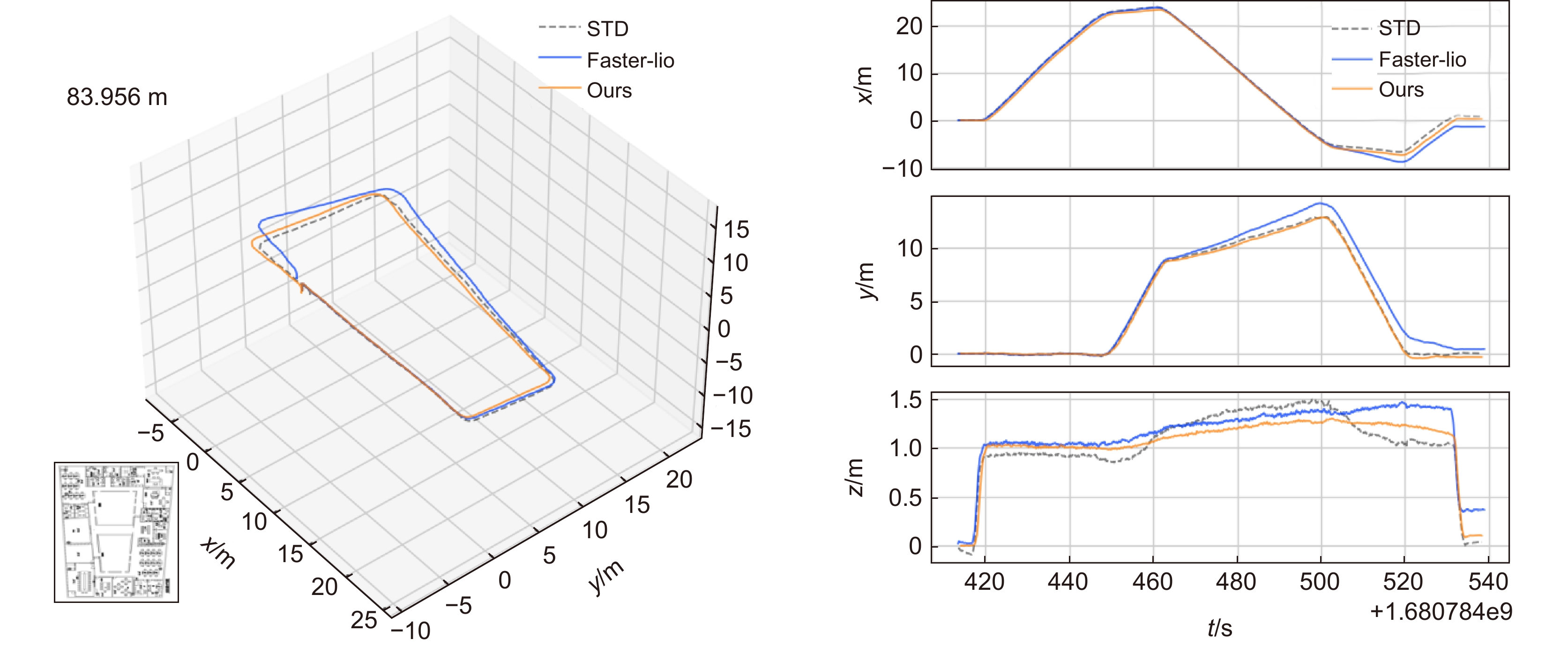

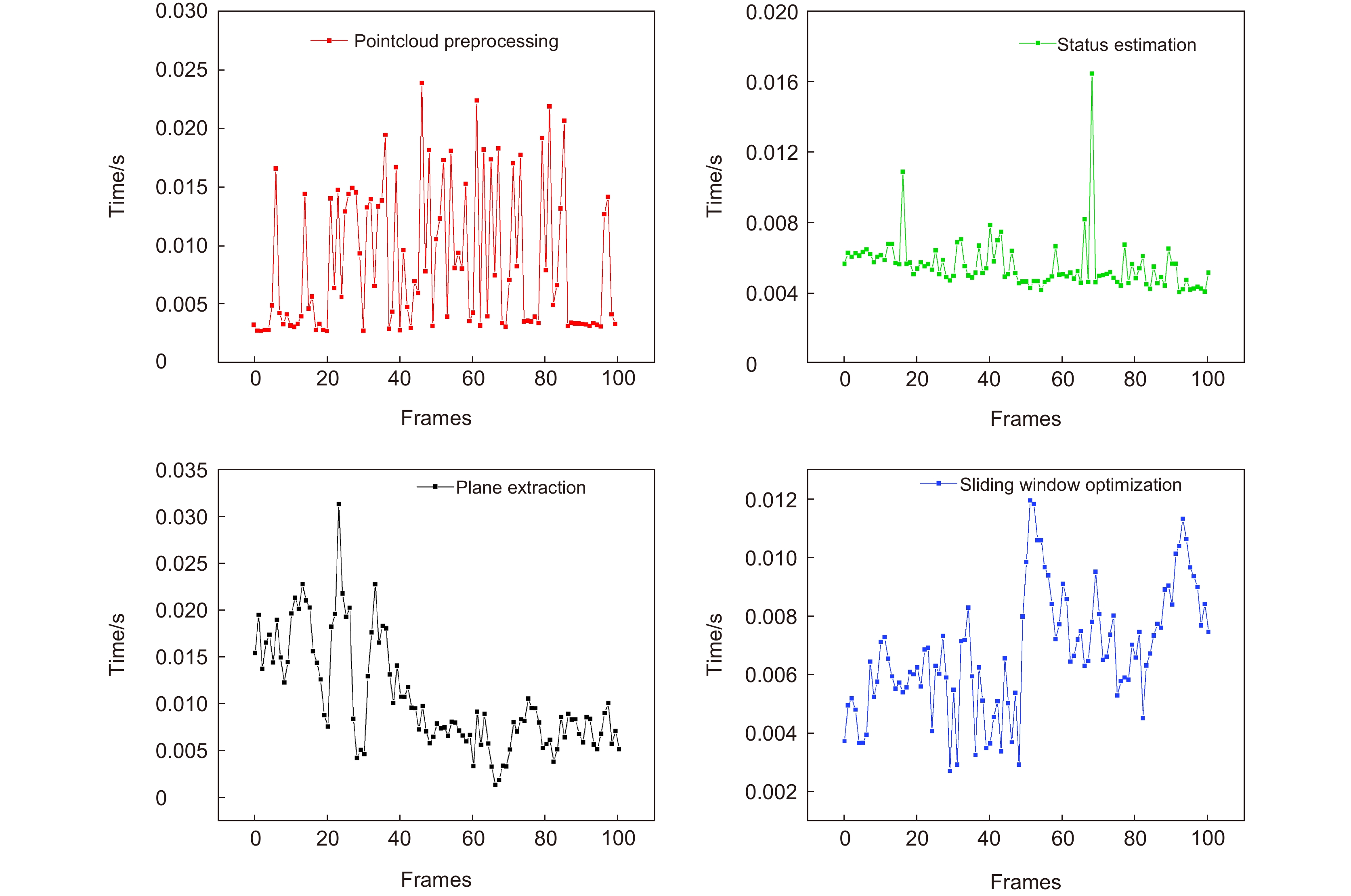

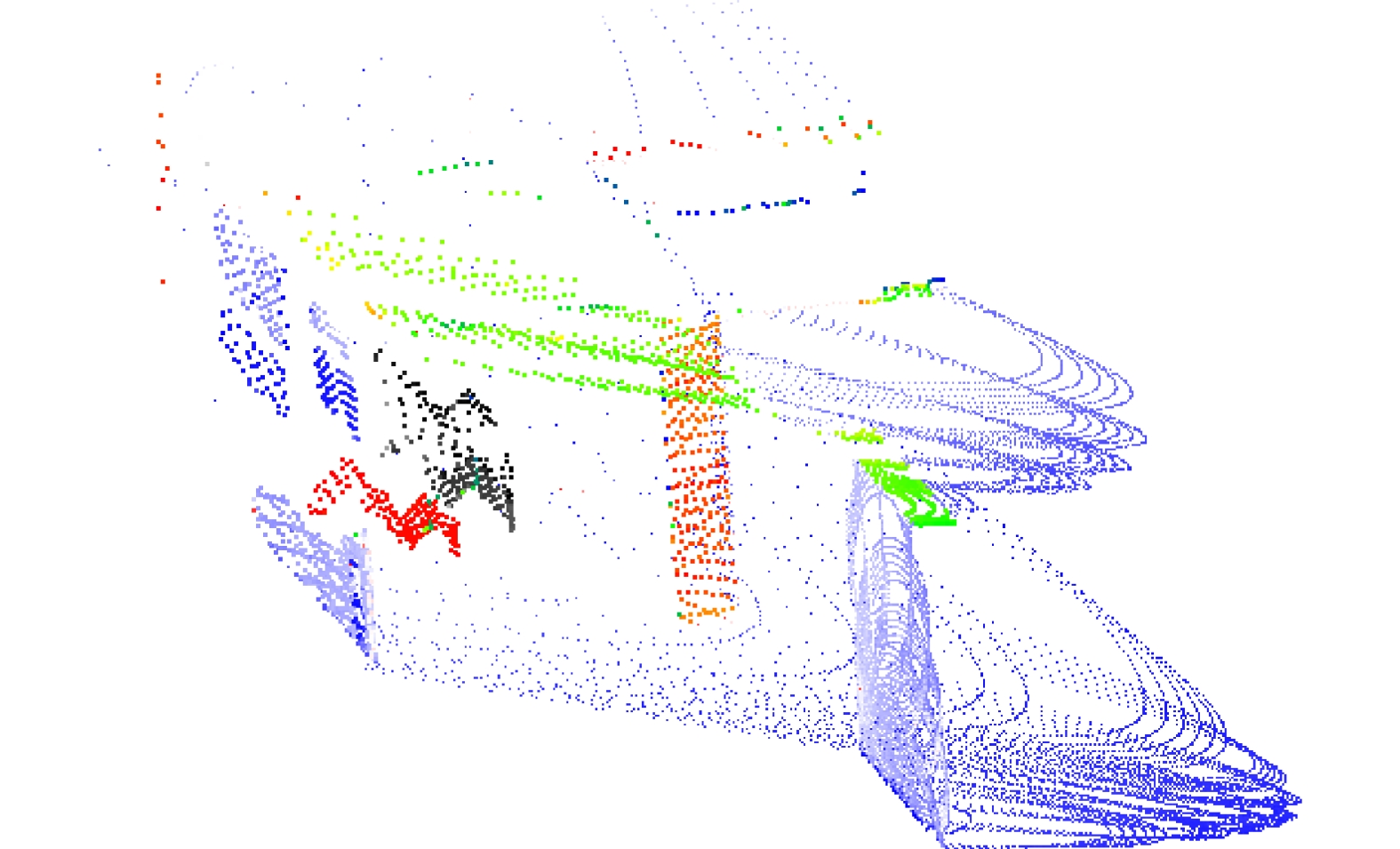

Overview: Scarce features and narrow corners in indoor environments lower the localization accuracy of laser SLAM algorithms and can even cause them to fail. To solve these problems, a laser-inertial SLAM method based on planar expansion and constrained optimization is proposed. An IMU is fused into the laser SLAM pipeline, and the IMU state estimate is used to compensate the positions of the laser points and to select keyframes. A global planar map is built: planes are extracted from keyframes with the RANSAC algorithm, planar features are tracked with a pre-extraction method to reduce the time cost, and the fitting results are refined by iPCA to remove the effect of noise on RANSAC. Point-to-plane distances are used to construct the planar constraint equations, which are fused with the edge-point constraints and pre-integration constraints into a single nonlinear optimization model. Solving this model yields the optimized plane parameters and keyframe poses. To verify the algorithm, experiments are carried out on the public M2DGR dataset and on a private dataset. The plane-extraction results are listed in Table 1: across different scenes and distances, the plane-fitting method that combines RANSAC and iPCA keeps the position error within 10 mm and the attitude error below 2°, which meets the requirements for initial values. Figures 9 and 10 visualize the localization results of this method and of the comparison algorithms. The algorithm performs well on the public dataset, and on the closed-loop sequence "Indoor_01", which has narrow corners and few features, it improves localization accuracy by 61.9% over the comparison algorithm, effectively suppressing the drift caused by corners and feature scarcity (Fig. 10).

The planar pre-extraction method reduces the time cost of RANSAC, and using planar constraints instead of planar-point constraints avoids unnecessary search and fitting (Fig. 11), which makes deployment on mobile devices feasible. The experimental results show that combining planar pre-extraction with the iPCA-based planar optimization scheme eliminates noise and the error caused by surfaces that are not strictly planar, while also saving unnecessary RANSAC fitting iterations. The planar constraints effectively replace the planar-point constraints; after compression they are fused with the edge-point constraints and pre-integration constraints and take part in the optimization. The proposed method improves the localization accuracy of laser SLAM in indoor environments and demonstrates robustness and real-time capability.
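The plane-fitting step described above (RANSAC followed by a PCA-based refinement) can be illustrated with a minimal sketch. This is not the authors' implementation: the page does not define iPCA, so the sketch substitutes an ordinary PCA refit on the RANSAC inliers, and the function names, the 0.02 m threshold, and the iteration count are all assumptions chosen for illustration.

```python
import numpy as np


def fit_plane_pca(points):
    """Least-squares plane through points: unit normal n and offset d with n.x + d = 0."""
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the plane normal.
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]
    return normal, -float(normal @ centroid)


def ransac_plane(points, threshold=0.02, iterations=200, seed=0):
    """RANSAC plane search, then a PCA refit on the inliers (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    best_inliers = None
    for _ in range(iterations):
        sample = points[rng.choice(len(points), size=3, replace=False)]
        normal = np.cross(sample[1] - sample[0], sample[2] - sample[0])
        norm = np.linalg.norm(normal)
        if norm < 1e-9:  # collinear sample, no plane defined
            continue
        normal = normal / norm
        d = -float(normal @ sample[0])
        inliers = np.abs(points @ normal + d) < threshold
        if best_inliers is None or inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers is None:
        raise ValueError("no non-degenerate sample found")
    # Refit on all inliers so noise in the 3-point sample no longer dominates.
    normal, d = fit_plane_pca(points[best_inliers])
    return normal, d, best_inliers


# Synthetic example (assumed data): a noisy plane z = 0.5 plus uniform clutter.
rng = np.random.default_rng(1)
wall = np.column_stack([
    rng.uniform(-2, 2, 300),
    rng.uniform(-2, 2, 300),
    0.5 + rng.normal(0, 0.005, 300),
])
clutter = rng.uniform(-2, 2, (60, 3))
normal, d, inliers = ransac_plane(np.vstack([wall, clutter]))
print(normal, d, int(inliers.sum()))
```

The sign of the returned normal is arbitrary, so a caller that tracks planes across keyframes would need to fix an orientation convention before comparing parameters.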

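The point-to-plane constraint mentioned in the overview can also be sketched in a few lines. The example assumes a plane stored as a unit normal `n` and offset `d` (so that `n·x + d = 0` in the world frame) and a keyframe pose `(R, t)` that maps body-frame points into the world frame; these conventions and both function names are assumptions, not taken from the paper. To stay short, the sketch holds the rotation fixed and solves only for the translation, where the residual is linear; the full method optimizes poses and planes jointly together with edge-point and pre-integration terms.

```python
import numpy as np


def point_to_plane_residuals(R, t, points_body, normal, d):
    """Signed distances of the transformed points to the plane normal.x + d = 0."""
    points_world = points_body @ R.T + t
    return points_world @ normal + d


def solve_translation(R, observations):
    """Least-squares translation with the rotation held fixed.

    observations: list of (points_body, normal, d) tuples, one per plane.
    The residual is linear in t, so one linear solve is enough.
    """
    rows, rhs = [], []
    for points_body, normal, d in observations:
        r0 = point_to_plane_residuals(R, np.zeros(3), points_body, normal, d)
        # Derivative of each residual with respect to t is the plane normal.
        rows.append(np.tile(normal, (len(points_body), 1)))
        rhs.append(-r0)
    t, *_ = np.linalg.lstsq(np.vstack(rows), np.concatenate(rhs), rcond=None)
    return t


# Synthetic example (assumed data): three mutually orthogonal planes.
angle = np.deg2rad(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.3, -0.2, 0.1])
rng = np.random.default_rng(0)
planes = [(np.array([1.0, 0.0, 0.0]), -2.0),   # x = 2
          (np.array([0.0, 1.0, 0.0]), -1.0),   # y = 1
          (np.array([0.0, 0.0, 1.0]), 0.0)]    # z = 0
observations = []
for normal, d in planes:
    world = rng.uniform(-1, 1, (20, 3))
    world -= np.outer(world @ normal + d, normal)  # project onto the plane
    body = (world - t_true) @ R_true               # inverse of x_w = R x_b + t
    observations.append((body, normal, d))
print(solve_translation(R_true, observations))
```

With fewer than three linearly independent plane normals the linear system is rank deficient, and `lstsq` returns only the minimum-norm solution, so the translation component along the unobserved direction is not determined by the planes alone.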

Table 1. Comparison of predicted and true values for planar extraction

| Scene | Parameter | Predicted | | | | | True |
|---|---|---|---|---|---|---|---|
| Scene 1 | Height/mm | 2977 | 2975 | 2972 | 2978 | 2968 | 2970 |
| Scene 1 | Width/mm | 1769 | 1770 | 1764 | 1757 | 1758 | 1760 |
| Scene 1 | Angle_h/° | 0.059 | 0.071 | 0.078 | 0.043 | 0.027 | 0 |
| Scene 1 | Angle_w/° | 0.416 | 0.155 | 0.002 | 0.264 | 0.017 | 0 |
| Scene 2 | Height/mm | 3119 | 3120 | 3117 | 3117 | 3126 | 3125 |
| Scene 2 | Width/mm | 1802 | 1811 | 1813 | 1808 | 1802 | 1810 |
| Scene 2 | Angle_h/° | 0.099 | 0.099 | 0.155 | 0.004 | 0.003 | 0 |
| Scene 2 | Angle_w/° | 1.244 | 0.315 | 0 | 0.093 | 0.051 | 0 |

Table 2. Comparison of absolute trajectory error (RMSE and mean) between this algorithm and the comparison algorithms (unit: m)

| Sequence | Runtime/s | Faster-LIO RMSE | Faster-LIO MEAN | LIO-SAM RMSE | LIO-SAM MEAN | Ours RMSE | Ours MEAN |
|---|---|---|---|---|---|---|---|
| room_01 | 72 | 0.162 | 0.233 | 0.213 | 0.179 | 0.237 | 0.210 |
| room_02 | 75 | 0.336 | 0.313 | 0.428 | 0.335 | 0.354 | 0.322 |
| hall_01 | 351 | 0.241 | 0.192 | 0.229 | 0.189 | 0.109 | 0.095 |
| hall_05 | 402 | 1.062 | 0.980 | 1.225 | 1.122 | 1.061 | 0.952 |
| room_dark_01 | 111 | 0.159 | 0.146 | 0.263 | 0.244 | 0.133 | 0.120 |
| room_dark_05 | 159 | 0.301 | 0.264 | 0.532 | 0.449 | 0.310 | 0.298 |

Table 3. Comparison of absolute trajectory error (RMSE and mean) between this algorithm and the comparison algorithm (unit: m)

| Sequence | Faster-LIO RMSE | Faster-LIO MEAN | Ours RMSE | Ours MEAN |
|---|---|---|---|---|
| Indoor_01 | 0.837 | 0.687 | 0.319 | 0.282 |
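The 61.9% figure quoted in the abstract matches the relative reduction in RMSE on Indoor_01 in Table 3. The short sketch below reproduces that arithmetic and shows how RMSE and mean statistics of this kind are commonly computed from per-pose errors. The function names are illustrative, and `ate_stats` assumes the two trajectories are already time-associated and aligned, which the page does not describe.

```python
import numpy as np


def ate_stats(estimated, ground_truth):
    """RMSE and mean of per-pose translational errors (trajectories assumed aligned)."""
    diff = np.asarray(estimated, dtype=float) - np.asarray(ground_truth, dtype=float)
    errors = np.linalg.norm(diff, axis=1)
    return float(np.sqrt(np.mean(errors ** 2))), float(np.mean(errors))


def relative_improvement(baseline, ours):
    """Fractional error reduction relative to the baseline."""
    return (baseline - ours) / baseline


# Values taken from Table 3 (Indoor_01, unit: m).
rmse_gain = relative_improvement(0.837, 0.319)
mean_gain = relative_improvement(0.687, 0.282)
print(f"RMSE reduction: {rmse_gain:.1%}")  # RMSE reduction: 61.9%
print(f"MEAN reduction: {mean_gain:.1%}")  # MEAN reduction: 59.0%
```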

