
Abstract:

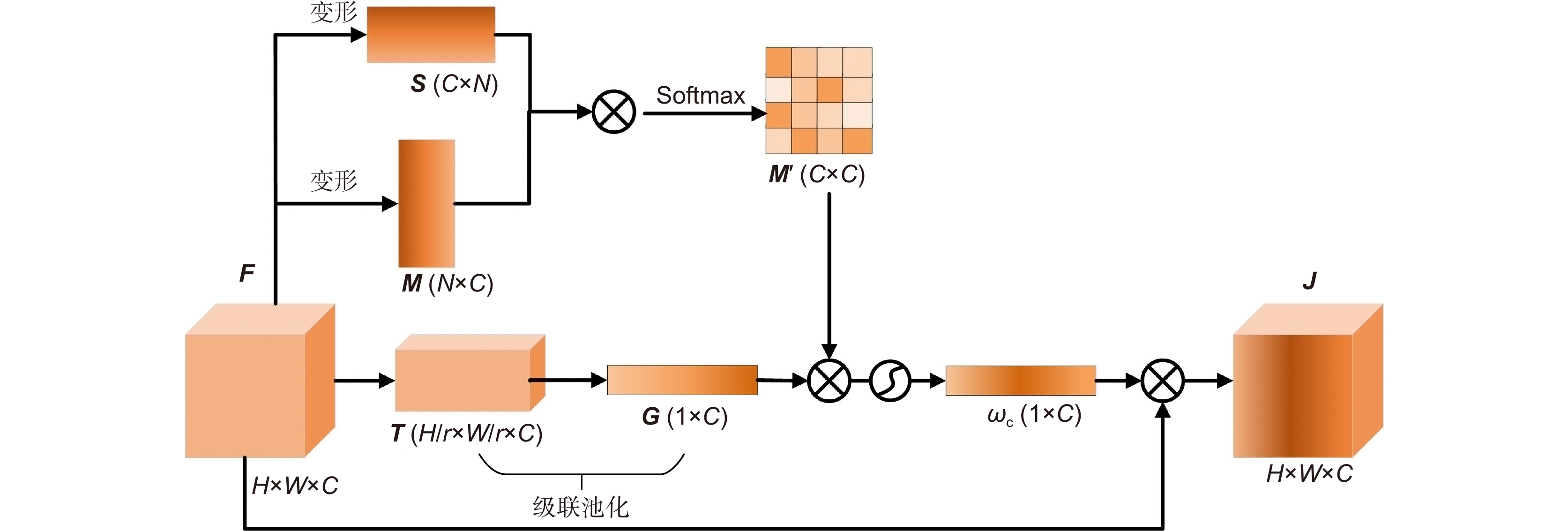

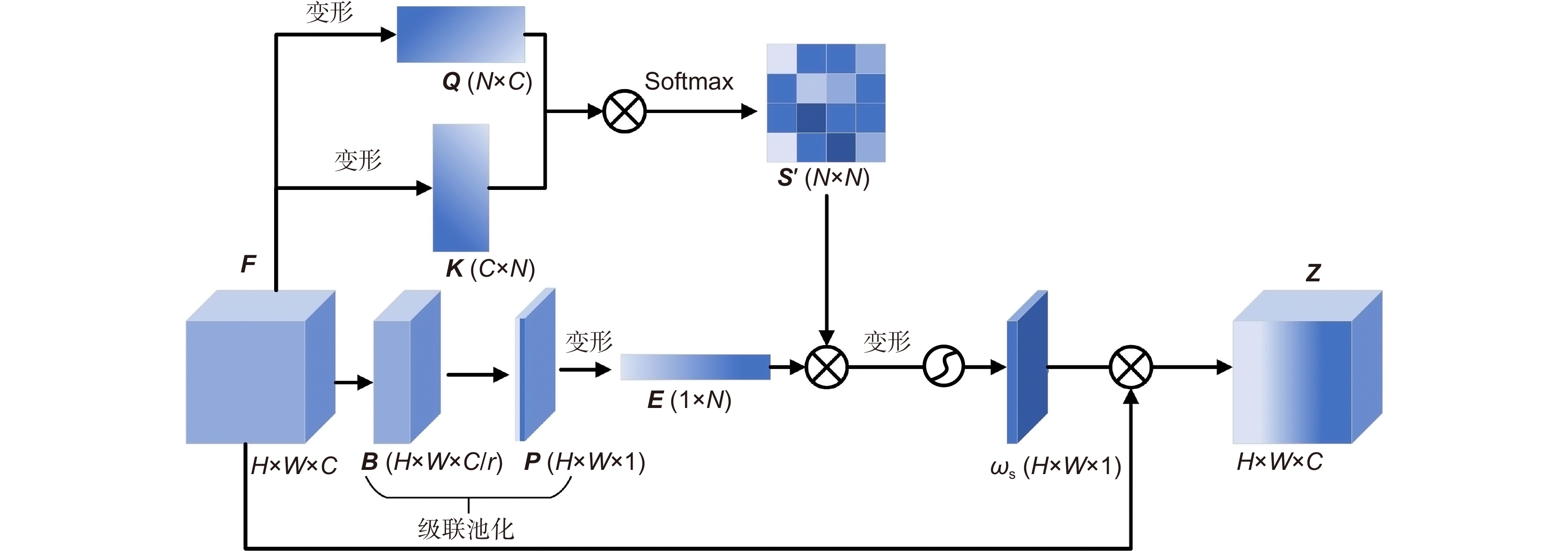

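In high-resolution remote sensing image retrieval, image content is complex and rich in detail, so features extracted by a convolutional neural network struggle to express the salient information of an image. To address this problem, a self-attention module based on cascade pooling is proposed to strengthen the feature representation of convolutional neural networks. First, a cascade pooling self-attention module is designed. Self-attention learns the key salient features of an image by establishing semantic dependencies. Cascade pooling applies max pooling over small regions and then average pooling over the result; used inside the self-attention module, it attends to the salient information of the image while retaining important detail, which strengthens the discriminative power of the features. Next, the cascade pooling self-attention module is embedded in a convolutional neural network to optimize and extract features. Finally, to further improve retrieval efficiency, supervised kernel hashing reduces the dimensionality of the extracted features, and the resulting low-dimensional hash codes are used for remote sensing image retrieval. Experimental results on the UC Merced, AID, and NWPU-RESISC45 datasets show that the proposed method effectively improves retrieval performance.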

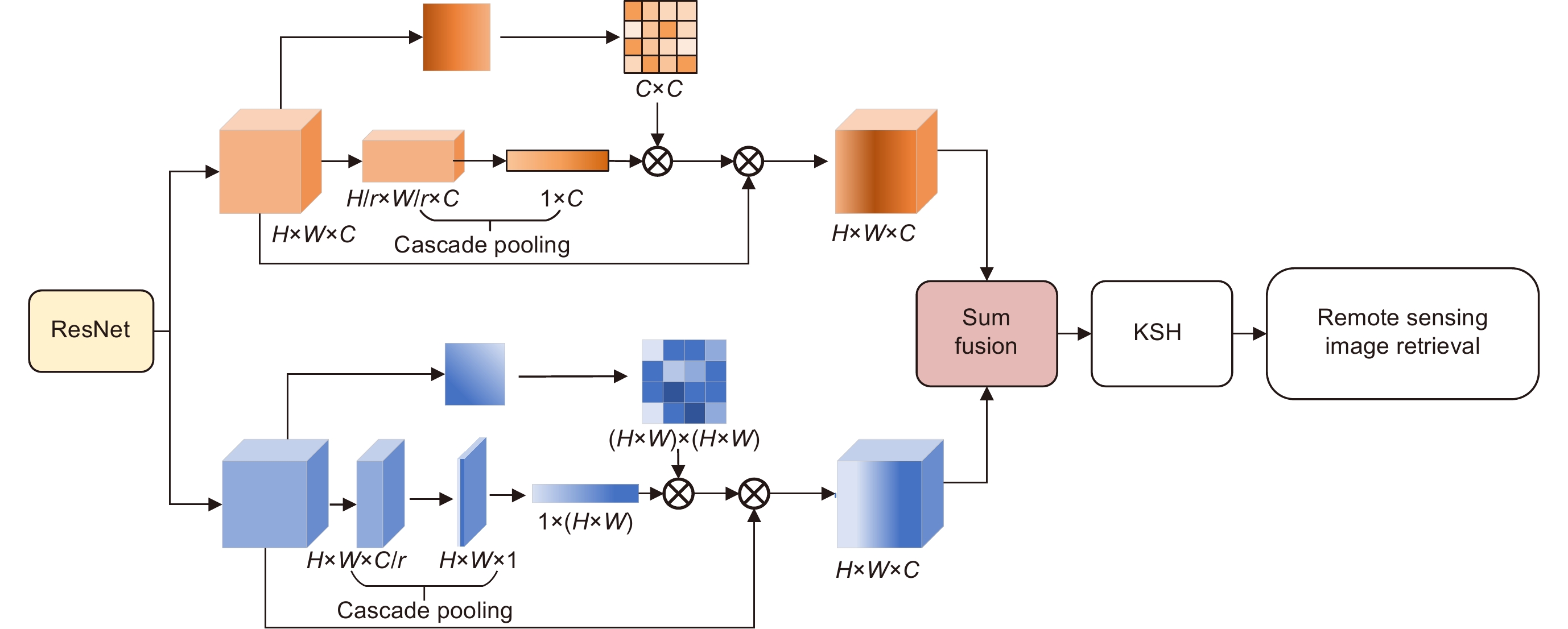

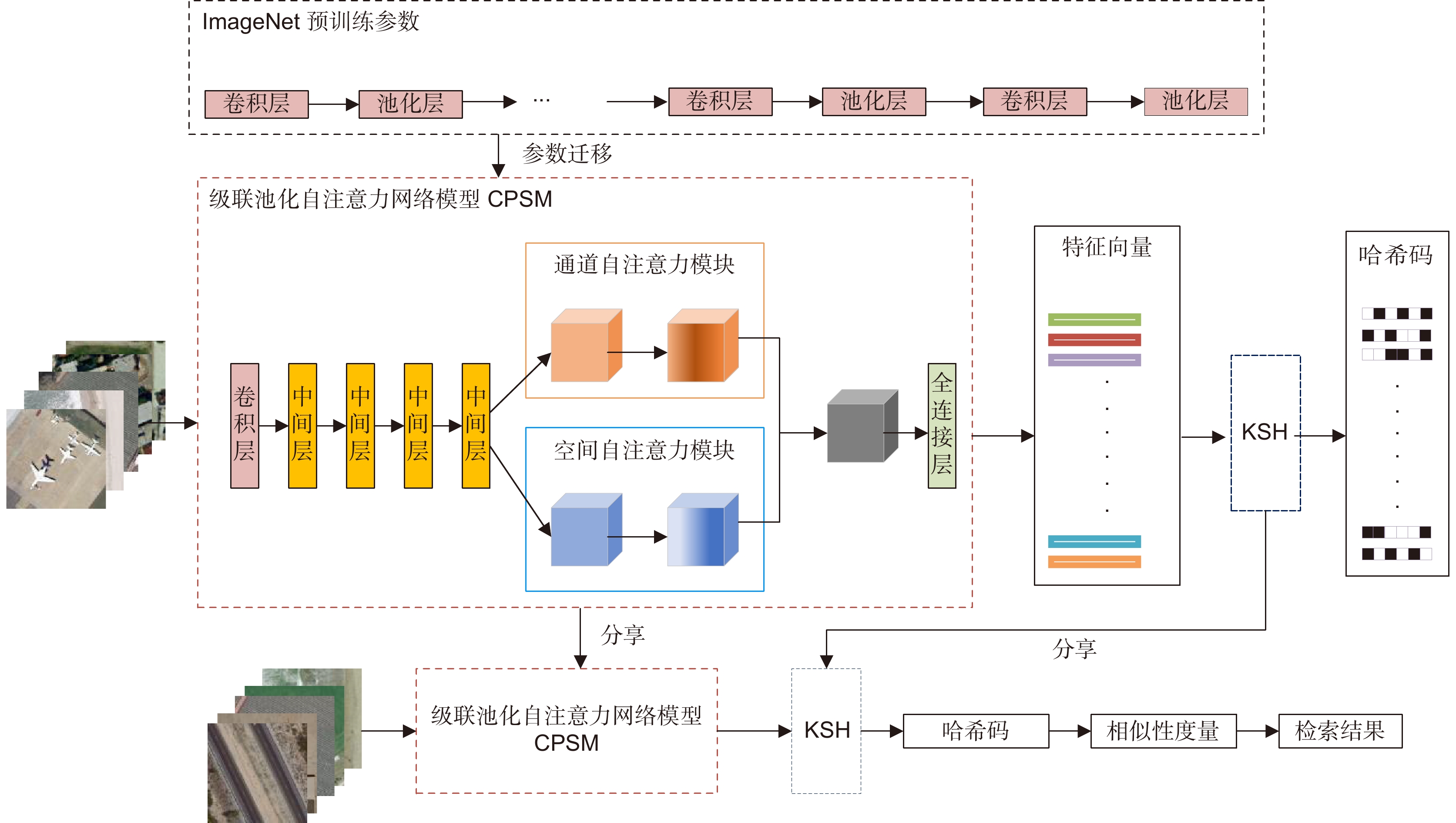

Abstract: In high-resolution remote sensing image retrieval, image content is complex and rich in detail, which makes it difficult for features extracted by a convolutional neural network to express the salient information of an image effectively. To address this issue, a self-attention module based on cascade pooling is proposed to improve the feature representation of convolutional neural networks. First, a cascade pooling self-attention module is designed. Self-attention learns the key salient features of an image by establishing semantic dependencies. Cascade pooling applies max pooling over small regions and then average pooling over the max-pooled feature map. Using cascade pooling inside the self-attention module preserves important image details while attending to salient information, thereby enhancing feature discrimination. Next, the cascade pooling self-attention module is embedded into a convolutional neural network for feature optimization and extraction. Finally, to further improve retrieval efficiency, supervised hashing with kernels is applied to reduce the dimensionality of the features, and the resulting low-dimensional hash codes are used for remote sensing image retrieval. Experimental results on the UC Merced, AID, and NWPU-RESISC45 datasets show that the proposed method effectively improves retrieval performance.
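To make the cascade pooling step concrete, the following is a minimal pure-Python sketch for a single channel: max pooling over small windows, followed by averaging the max-pooled map into one value. The 2×2 window, the stride of 2, the toy feature map, and the function names `max_pool2d` and `cascade_pool` are illustrative assumptions, not values taken from the paper.

```python
def max_pool2d(fmap, size=2, stride=2):
    """Max pooling over small size x size windows of a 2-D feature map."""
    rows, cols = len(fmap), len(fmap[0])
    return [
        [
            max(fmap[r + dr][c + dc] for dr in range(size) for dc in range(size))
            for c in range(0, cols - size + 1, stride)
        ]
        for r in range(0, rows - size + 1, stride)
    ]


def cascade_pool(fmap, size=2, stride=2):
    """Cascade pooling: small-region max pooling, then average pooling."""
    pooled = max_pool2d(fmap, size, stride)
    values = [v for row in pooled for v in row]
    return sum(values) / len(values)


# Toy 4x4 channel (hypothetical values): one strong response and weaker ones.
fmap = [
    [1.0, 2.0, 0.0, 0.0],
    [3.0, 4.0, 0.0, 8.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 2.0, 1.0, 1.0],
]

global_max = max(v for row in fmap for v in row)
global_avg = sum(v for row in fmap for v in row) / 16
print(max_pool2d(fmap))    # [[4.0, 8.0], [2.0, 1.0]]
print(cascade_pool(fmap))  # 3.75
print(global_max, global_avg)  # 8.0 1.5
```

On this toy map, global max pooling keeps only the single strongest response (8.0) and global average pooling is pulled toward the many zeros (1.5), whereas the cascade value (3.75) reflects the strongest response in every small region.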


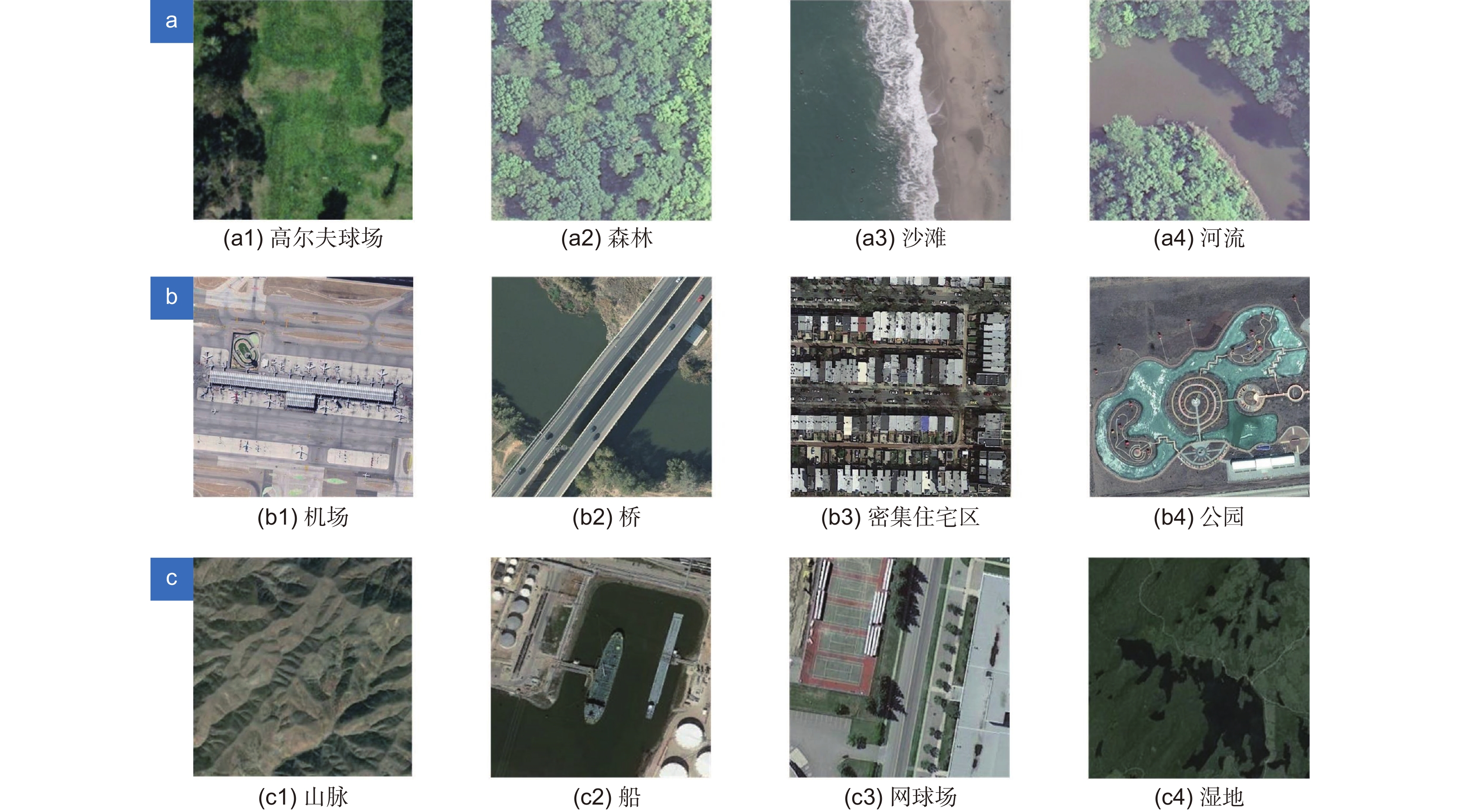

Overview: With the development of remote sensing satellite technology and the growing market for remote sensing images (RSIs), content-based remote sensing image retrieval (RSIR) plays an important role in many fields, such as economic and social development, resource and environmental monitoring, and urban management. However, high-resolution RSIs contain complex content and rich background information, so features extracted by convolutional neural networks struggle to express the salient information of the images. To address this problem in high-resolution RSIR, a self-attention mechanism based on cascade pooling is proposed to enhance the feature representation of convolutional neural networks. First, a cascade pooling self-attention module is designed. Cascade pooling applies max pooling over small regions and then average pooling over the max-pooled feature map. Compared with traditional global pooling, cascade pooling combines the advantages of max pooling and average pooling: it attends to the salient information of the RSIs while retaining crucial detail. Cascade pooling is used inside the self-attention module, which comprises spatial self-attention and channel self-attention. Spatial self-attention combines self-attention with spatial attention based on location correlation. It uses spatial weights to enhance the object regions of interest and to weaken irrelevant background regions, which strengthens the description of spatial features. Channel self-attention combines self-attention with channel attention based on content correlation. It assigns weights to different channels by linking contextual information. Each channel can be regarded as the response to one class of features, and channels that contribute more receive larger weights, which improves the ability to discriminate salient channel features.

By establishing semantic dependencies, the cascade pooling self-attention module learns the crucial salient features of the RSIs. The module is then embedded into a convolutional neural network to extract and optimize features. Finally, to further increase retrieval efficiency, supervised hashing with kernels is applied to reduce the dimensionality of the features, and the resulting low-dimensional hash codes are used for RSIR. Experiments on the UC Merced, AID, and NWPU-RESISC45 datasets yield mean average precisions of 98.23%, 94.96%, and 94.53%, respectively. The results show that the proposed method improves retrieval accuracy over existing retrieval methods. Cascade pooling self-attention and supervised hashing with kernels thus optimize features from two directions, network structure and feature compression, which enhances the feature representation and improves retrieval performance.
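The idea of weighting channels by their mutual affinities can be sketched with a generic dot-product channel self-attention. This is only an illustration under stated assumptions: the overview does not specify where cascade pooling enters the module, so that step is omitted here, and the fixed residual scale `gamma`, the toy input, and the function names are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def channel_self_attention(x, gamma=1.0):
    """x: list of C channels, each a flat list of N spatial responses.

    Returns the C x C attention matrix and the re-weighted features.
    gamma is a fixed residual scale here (assumption); in a trained
    network it would normally be a learned parameter.
    """
    # Channel affinity: dot product between every pair of channels.
    energy = [[sum(a * b for a, b in zip(ci, cj)) for cj in x] for ci in x]
    attn = [softmax(row) for row in energy]
    n = len(x[0])
    out = []
    for i, ci in enumerate(x):
        # Each output channel is an attention-weighted mix of all channels.
        mixed = [sum(attn[i][j] * x[j][p] for j in range(len(x))) for p in range(n)]
        # Residual connection keeps the original response.
        out.append([gamma * m + v for m, v in zip(mixed, ci)])
    return attn, out


# Three toy channels over two spatial positions; channels 0 and 2 respond alike.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
attn, out = channel_self_attention(x)
print(attn[0])
```

In this toy input, channel 0 attends more strongly to channel 2, which responds at the same position, than to channel 1, which illustrates how correlated channels reinforce each other.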


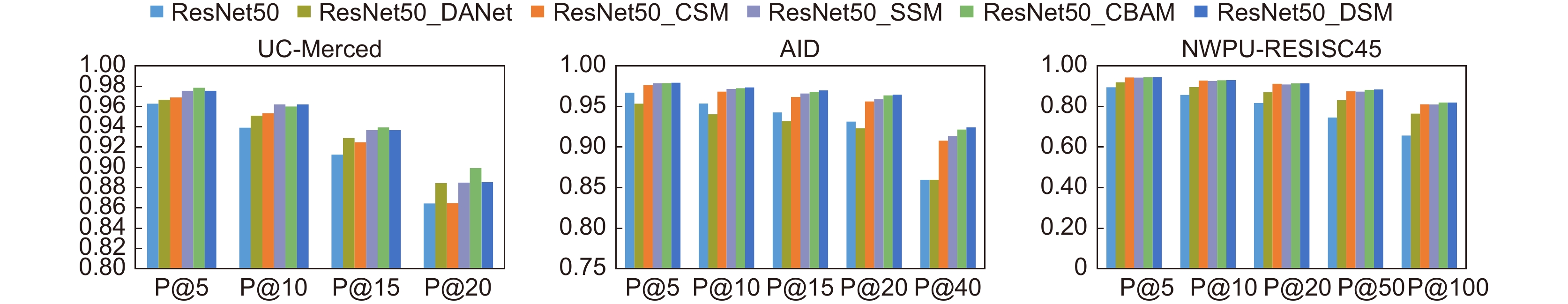

Table 1. mAP values of different attention modules

| Network | UC Merced/% | AID/% | NWPU-RESISC45/% |
| --- | --- | --- | --- |
| ResNet50 | 91.17 | 87.35 | 60.07 |
| ResNet50_DANet | 92.13 | 88.13 | 73.02 |
| ResNet50_CSM | 91.50 | 92.38 | 77.12 |
| ResNet50_SSM | 92.60 | 92.91 | 77.13 |
| ResNet50_CBAM | 93.72 | 93.33 | 78.19 |
| ResNet50_DSM | 92.67 | 93.48 | 78.28 |

Table 2. mAP values of different pooling methods

| Network | UC Merced/% | AID/% | NWPU-RESISC45/% |
| --- | --- | --- | --- |
| ResNet50 | 91.17 | 87.35 | 60.07 |
| ResNet50_CSM | 91.50 | 92.38 | 77.12 |
| ResNet50_SSM | 92.60 | 92.91 | 77.13 |
| ResNet50_DSM | 92.67 | 93.48 | 78.28 |
| ResNet50_CPCSM | 93.35 | 93.23 | 78.17 |
| ResNet50_CPSSM | 93.62 | 93.15 | 77.19 |
| ResNet50_CPSM | 94.12 | 93.76 | 79.00 |

Table 3. Comparison of different dimensionality reduction methods

| Method | mAP/% | Time/ms | Dimension |
| --- | --- | --- | --- |
| - | 94.12 | 24.06 | 100352 |
| PCA | 95.85 | 23.11 | 20 |
| PCA | 93.56 | 23.46 | 64 |
| LDA | 98.07 | 29.67 | 20 |
| KSH | 97.43 | 23.32 | 20 |
| KSH | 98.23 | 23.44 | 64 |
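To illustrate how low-dimensional hash codes are used at retrieval time and how the mAP metric is computed, the sketch below ranks a tiny database by Hamming distance and scores one query with average precision. It does not implement KSH training: the binary codes are assumed to be already learned, and the 4-bit codes, image ids, class labels, and function names are all hypothetical.

```python
def hamming(a, b):
    """Hamming distance between two hash codes stored as integers."""
    return bin(a ^ b).count("1")


def rank_by_hamming(query_code, database):
    """database: list of (image_id, code, label); nearest codes first."""
    return sorted(database, key=lambda item: hamming(query_code, item[1]))


def average_precision(ranked_labels, query_label):
    """Mean of precision@k taken at every rank k that holds a relevant image."""
    hits, precisions = 0, []
    for rank, label in enumerate(ranked_labels, start=1):
        if label == query_label:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


# Hypothetical 4-bit codes; real codes in Table 3 have 20 or 64 bits.
database = [
    ("a", 0b1010, "river"),
    ("b", 0b1011, "river"),
    ("c", 0b0101, "river"),
    ("d", 0b1110, "forest"),
]
ranked = rank_by_hamming(0b1010, database)
ids = [item[0] for item in ranked]
ap = average_precision([item[2] for item in ranked], "river")
print(ids)  # ['a', 'b', 'd', 'c']
print(ap)
```

Here the relevant images land at ranks 1, 2, and 4, so the average precision is (1/1 + 2/2 + 3/4) / 3 = 11/12. The mAP is the mean of this value over all queries.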

Table 4. Comparison of different methods on the UC Merced dataset

Table 5. Comparison of different methods on the AID dataset

Table 6. Comparison of different methods on the NWPU-RESISC45 dataset


