-

摘要:

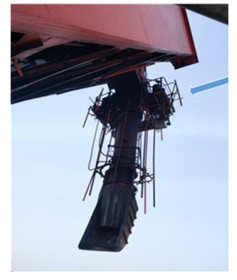

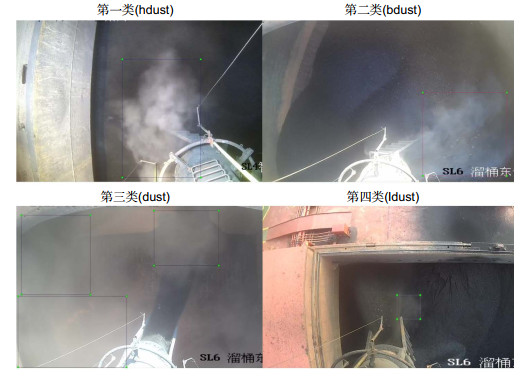

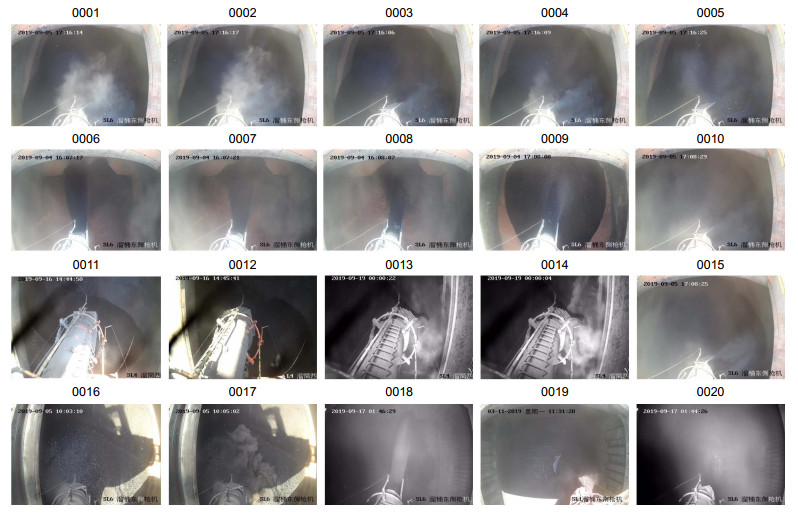

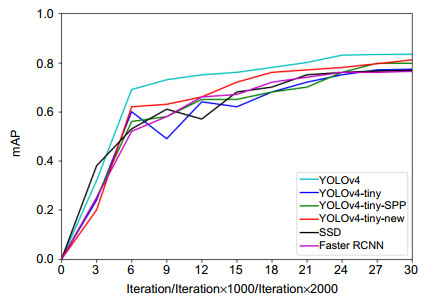

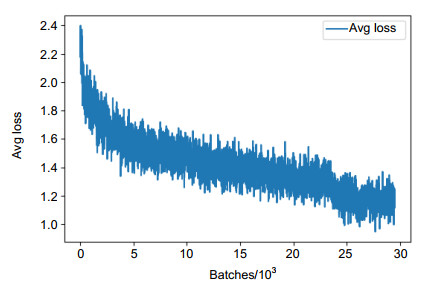

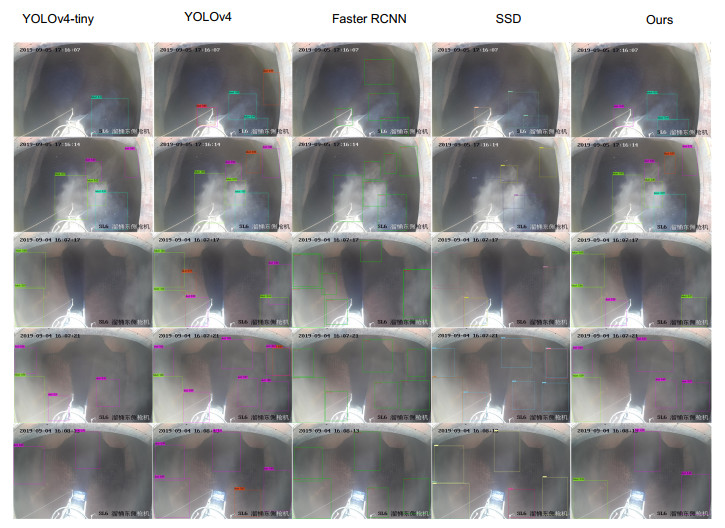

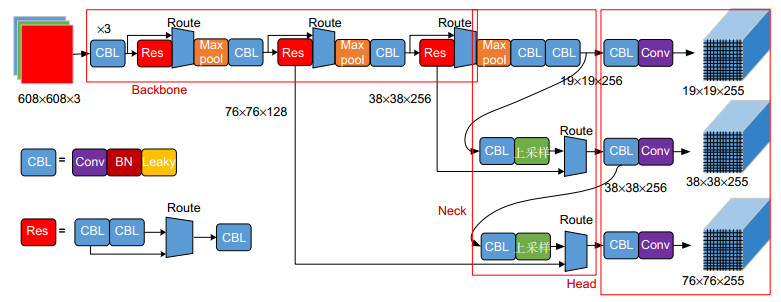

煤炭港在使用装船机的溜筒卸载煤的过程中会产生扬尘,港口为了除尘,需要先对粉尘进行检测。为解决粉尘检测问题,本文提出一种基于深度学习(YOLOv4-tiny)的溜筒卸料煤粉尘的检测方法。利用改进的YOLOv4-tiny算法对溜筒卸料粉尘数据集进行训练和测试,由于检测算法无法获知粉尘浓度,本文将粉尘分为四类分别进行检测,最后统计四类粉尘的检测框总面积,通过对这些数据做加权和计算近似判断粉尘浓度大小。实验结果表明,四类粉尘的检测精度(AP)分别为93.98%、93.57%、80.03%和57.43%,平均检测精度(mAP)为81.27%,接近YOLOv4的83.38%,而检测速度(FPS)为25.1,高于YOLOv4的13.4。该算法较好地平衡了粉尘检测的速率和精度,可用于实时的粉尘检测以提高抑制溜筒卸料产生的煤粉尘的效率。

-

关键词:

- 煤粉尘检测 /

- YOLOv4-tiny /

- 深度学习 /

- 目标检测

Abstract:The coal port will produce dust in the process of unloading coal by the chute of the ship loader. In order to solve the problem of dust detection, this paper proposes a method of coal dust detection based on deep learning (YOLOv4-tiny). The improved YOLOv4-tiny network is used to train and test the dust data set of chute discharge. Because the detection algorithm cannot get the dust concentration, this paper divides the dust into four categories for detection, and finally counts the area of detection frames of the four categories of dust. After that, the dust concentration is approximately judged through the weighted sum calculation of these data. The experimental results show that the detection accuracy (AP) of four types of dust is 93.98%, 93.57%, 80.03% and 57.43%, the average detection accuracy (mAP) is 81.27% (which is close to 83.38% of YOLOv4), and the detection speed (FPS) is 25.1 (which is higher than 13.4 of YOLOv4). The algorithm can balance the speed and accuracy of dust detection, and can be used for real-time dust detection to improve the efficiency of suppressing coal dust generated by chute discharge.

-

Key words:

- coal dust detection /

- YOLOv4-tiny /

- deep learning /

- object detection

-

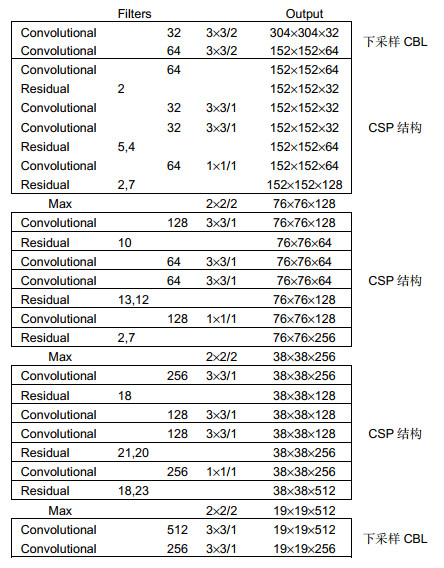

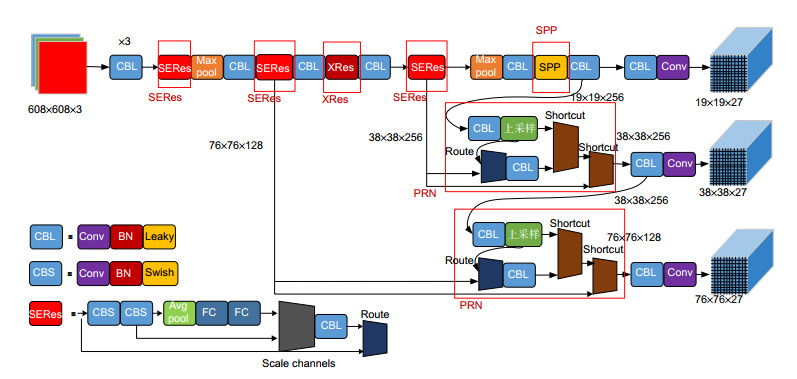

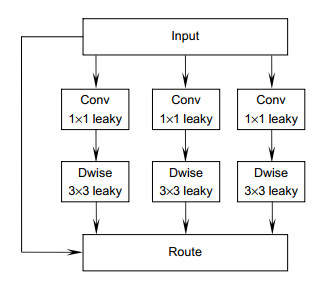

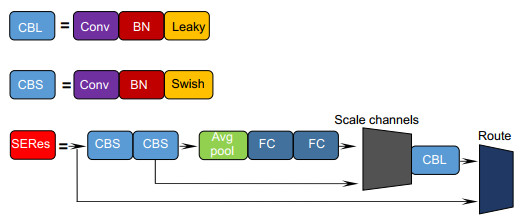

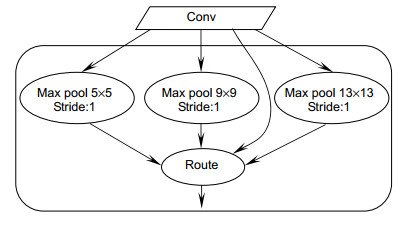

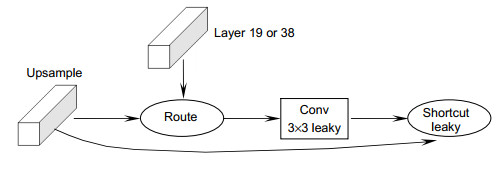

Overview: In recent years, growing public awareness of environmental protection and stricter environmental policies have made it necessary for coal ports to reduce, or quickly suppress, the dust generated during production. Unloading coal into the cargo ship through the chute of the ship loader is the last step of the production process. Earlier steps already use various dust suppression measures, such as windproof dust suppression nets and dry dust removal systems. Although these measures effectively reduce dust generation when the coal falls into the cabin, they do not form a closed control loop with the unloading step and cannot automatically suppress the dust it produces. Separate treatment of unloading dust therefore remains an important part of the overall environmental protection operation.

At present, the main measure for suppressing this kind of dust in domestic coal ports is watering. Because discharge dust occurs only occasionally, leaving the sprinkler dust removal device on all the time leads to excessive watering, which reduces the actual coal load of the cargo ship and hurts economic returns. Having workers control the dust removal device manually on site, on the other hand, works against the construction of unmanned ports. An automatic, real-time method for detecting coal dust from discharge is therefore needed: when dust is detected, an early warning signal is sent so that the dust suppression operation can take corresponding measures.

An improved deep convolutional neural network (YOLOv4-tiny) is trained and tested on the dust data set to learn its internal feature representation. The improvements are as follows: a SERes module is proposed to strengthen the information interaction between network channels; an XRes module is proposed to increase the depth and width of the network; and an SPP module and a PRN module are added to enhance the feature fusion ability of the algorithm. Because the detection algorithm cannot measure dust concentration directly, the dust is divided into four categories that are detected separately, and the total detection-box area of each category is computed. The dust concentration is then approximately judged from a weighted sum of these areas.

The experimental results show that the detection accuracy (AP) for the four dust categories is 93.98%, 93.57%, 80.03%, and 57.43%, respectively. The average detection accuracy (mAP) is 81.27%, close to the 83.38% of YOLOv4, and the detection speed is 25.1 FPS, higher than the 13.4 FPS of YOLOv4. The algorithm balances detection speed and accuracy and can be used for real-time dust detection to improve the efficiency of suppressing coal dust generated by chute discharge.
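The weighted-sum step can be illustrated with a minimal Python sketch. It is not the authors' implementation: the text gives neither the class weights nor the warning threshold, so `CLASS_WEIGHTS` and `threshold` are hypothetical values, and dividing by the image area is an added assumption to make the score resolution-independent. Overlapping boxes are simply summed.

```python
from collections import defaultdict

# Hypothetical weights per dust category (0..3); the source does not state them.
CLASS_WEIGHTS = {0: 1.0, 1: 2.0, 2: 3.0, 3: 4.0}


def box_area(box):
    """Area of an (x1, y1, x2, y2) box in pixels; degenerate boxes count as 0."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def class_areas(detections):
    """Sum detection-box areas per class from (class_id, box) pairs."""
    totals = defaultdict(float)
    for class_id, box in detections:
        totals[class_id] += box_area(box)
    return dict(totals)


def concentration_score(detections, image_width, image_height, weights=CLASS_WEIGHTS):
    """Weighted sum of per-class box areas, normalized by image area (assumption)."""
    image_area = float(image_width * image_height)
    areas = class_areas(detections)
    return sum(weights[c] * a for c, a in areas.items()) / image_area


def needs_suppression(score, threshold=0.05):
    """Hypothetical early-warning rule: trigger when the score reaches the threshold."""
    return score >= threshold


detections = [
    (0, (0, 0, 100, 100)),      # class 0, 100 x 100 px
    (0, (50, 50, 150, 100)),    # class 0, 100 x 50 px
    (3, (200, 200, 300, 400)),  # class 3, 100 x 200 px
]
score = concentration_score(detections, 1000, 1000)
warning = needs_suppression(score)
```

In practice the weights would need to be calibrated against measured dust concentrations, which the text above does not describe.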

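Table 1. Network parameters

| Parameter | Value |
| --- | --- |
| Learning rate (learning rate) | 0.00261 |
| Burn-in (burn_in) | 1000 |
| Iterations (iteration) | 30000 |
| Batch size (batch size) | 64 |
| Subdivisions (subdivisions) | 8 |
| Momentum (momentum) | 0.9 |
| Weight decay (decay) | 0.0005 |
| Saturation (saturation) | 1.5 |
| Exposure (exposure) | 1.5 |
| Hue (hue) | 0.1 |
| Mosaic data augmentation (mosaic) | 1 |
| Jitter factor (jitter) | 0.3 |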
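Two of the Table 1 settings interact in ways that a short calculation makes concrete. The sketch below assumes Darknet-style semantics, which the parameter names suggest but the text does not confirm: each batch is split into `subdivisions` mini-batches, and during the first `burn_in` iterations the learning rate ramps up polynomially. The exponent `power=4.0` is an assumed default, not a value from the table.

```python
def mini_batch_size(batch=64, subdivisions=8):
    """Images processed per forward pass when a batch is split into subdivisions."""
    return batch // subdivisions


def burn_in_lr(iteration, base_lr=0.00261, burn_in=1000, power=4.0):
    """Learning rate at a given iteration with a polynomial warm-up (assumed form).

    Before `burn_in` iterations the rate is base_lr * (iteration / burn_in) ** power;
    afterwards it equals base_lr. Any later step decay is outside this sketch.
    """
    if iteration < burn_in:
        return base_lr * (iteration / burn_in) ** power
    return base_lr


images_per_pass = mini_batch_size()
lr_halfway = burn_in_lr(500)
lr_after_warmup = burn_in_lr(1000)
```

Under these assumptions each forward pass handles 8 images, and the learning rate halfway through warm-up is one sixteenth of its final value.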

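Table 2. Ablation experiment results

| Modules enabled (one √ per module among SPP, XRes, SAM, SERes, PRN) | Class 1 AP/% | Class 2 AP/% | Class 3 AP/% | Class 4 AP/% | mAP/% | FPS |
| --- | --- | --- | --- | --- | --- | --- |
| none | 92.15 | 89.66 | 74.32 | 53.51 | 77.41 | 29.0 |
| √ | 95.14 | 91.15 | 77.81 | 54.85 | 79.74 | 28.8 |
| √ | 93.80 | 89.43 | 75.47 | 52.63 | 77.83 | 27.9 |
| √ | 93.62 | 90.09 | 74.13 | 53.40 | 77.81 | 28.5 |
| √ | 92.19 | 89.07 | 74.07 | 51.71 | 76.26 | 29.5 |
| √ | 93.69 | 91.24 | 76.50 | 55.48 | 79.23 | 28.7 |
| √ √ | 94.99 | 91.13 | 77.26 | 55.14 | 79.63 | 27.3 |
| √ √ | 94.78 | 91.08 | 77.17 | 55.24 | 79.57 | 28.2 |
| √ √ √ | 94.40 | 90.13 | 78.58 | 56.16 | 79.82 | 28.0 |
| √ √ | 94.92 | 90.20 | 79.24 | 56.50 | 80.22 | 28.3 |
| √ √ √ | 94.35 | 90.10 | 80.47 | 56.82 | 80.55 | 28.2 |
| √ √ √ | 93.44 | 91.78 | 78.88 | 56.31 | 80.10 | 28.9 |
| √ √ √ √ | 93.98 | 93.57 | 80.08 | 57.43 | 81.27 | 25.1 |
| √ √ √ √ √ | 95.04 | 89.87 | 81.25 | 57.92 | 81.02 | 23.9 |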
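The mAP column of Table 2 is the arithmetic mean of the four per-class AP values, which a short Python check can confirm for a few rows. The row labels (`baseline`, `four_modules`, `all_five`) are illustrative names introduced here, and the tolerance of 0.01 is an assumption that allows for rounding to two decimals.

```python
# (per-class AP values in %, reported mAP in %) copied from three rows of Table 2.
ABLATION_ROWS = {
    "baseline": ([92.15, 89.66, 74.32, 53.51], 77.41),      # no module enabled
    "four_modules": ([93.98, 93.57, 80.08, 57.43], 81.27),  # row with four check marks
    "all_five": ([95.04, 89.87, 81.25, 57.92], 81.02),      # all five modules enabled
}


def mean_ap(per_class_ap):
    """Mean of the per-class average precision values."""
    return sum(per_class_ap) / len(per_class_ap)


def is_consistent(per_class_ap, reported_map, tolerance=0.01):
    """True when the reported mAP matches the mean of the class APs within tolerance."""
    return abs(mean_ap(per_class_ap) - reported_map) <= tolerance


consistency = {name: is_consistent(aps, reported)
               for name, (aps, reported) in ABLATION_ROWS.items()}
```

All three rows pass the check, so the per-class and mean values in those rows agree with each other.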

Table 3. Comparison experiments

| Model | FPS | mAP/% |
| --- | --- | --- |
| YOLOv4-tiny | 29.0 | 77.41 |
| YOLOv4-tiny-SPP | 28.8 | 79.74 |
| YOLOv4 | 13.4 | 83.38 |
| SSD | 35.1 | 77.17 |
| Faster RCNN | 11.6 | 76.42 |
| YOLOv4-tiny-new | 25.1 | 81.27 |
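The speed and accuracy trade-off in Table 3 can be made explicit by picking the most accurate model that still meets a frame-rate requirement. The sketch below uses the table's values; the 25 FPS real-time threshold is an assumption chosen for illustration, not a figure stated in the text.

```python
# Model name -> (FPS, mAP in %), copied from Table 3.
MODELS = {
    "YOLOv4-tiny": (29.0, 77.41),
    "YOLOv4-tiny-SPP": (28.8, 79.74),
    "YOLOv4": (13.4, 83.38),
    "SSD": (35.1, 77.17),
    "Faster RCNN": (11.6, 76.42),
    "YOLOv4-tiny-new": (25.1, 81.27),
}


def best_model(models, min_fps=25.0):
    """Name of the highest-mAP model whose FPS is at least min_fps, or None."""
    candidates = {name: stats for name, stats in models.items() if stats[0] >= min_fps}
    if not candidates:
        return None
    return max(candidates, key=lambda name: candidates[name][1])


real_time_choice = best_model(MODELS)            # requires at least 25 FPS
unconstrained_choice = best_model(MODELS, 0.0)   # accuracy only
```

With the assumed threshold the improved network is selected, whereas ignoring speed selects the full YOLOv4 model.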

