Anti-occlusion and re-tracking of real-time moving target based on kernelized correlation filter


摘要:

相关滤波算法是通过模板与检测目标的相似性来确定目标位置, 自从将相关滤波概念用于目标跟踪起便一直受到广泛的关注, 而核相关滤波算法的提出更是将这一理念推到了一个新的高度。核相关滤波算法以其高速度、高精度以及高鲁棒性的特点迅速成为研究热点, 但核相关滤波算法在抗遮挡性能上有着严重的缺陷。本文针对核相关滤波在抗遮挡性能上的缺陷对此算法进行改进, 提出了一种融合Sobel边缘二元模式算法的改进KCF算法, 通过Sobel边缘二元模式算法加权融合目标特征, 然后计算目标的峰值响应强度旁瓣值比检测目标是否丢失, 最后将Kalman算法作为目标遮挡后搜索目标的策略。结果显示, 本文方法不仅对抗遮挡有较好的鲁棒性, 而且能够满足实时要求, 准确地对目标进行再跟踪。

Abstract:

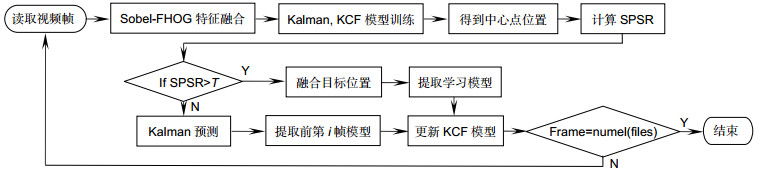

Abstract:The correlation filtering algorithm determines the target position by the similarity between the template and the detection target. Since the related filtering concept is used for target tracking, it has been widely concerned, and the proposal of the kernelized correlation filter is to push this concept to a new height. The kernelized correlation filter has become a research hotspot with its high speed, high precision and high robustness. However, the kernelized correlation filter has serious defects in anti-blocking performance. In this paper, the algorithm for the anti-occlusion performance of kernelized correlation filter is improved. An improved KCF algorithm based on Sobel edge binary mode algorithm is proposed. The Sobel edge binary mode algorithm is used to weight the fusion target feature. The target's peak response intensity sidelobe value is more than the detection target is lost. Finally, the Kalman algorithm is used as the target occlusion strategy. The results show that the proposed method not only has better robustness against occlusion, but also satisfy the real-time requirements and can accurately re-tracks the target.


Key words:

- KCF

- feature fusion

- side lobe ratio

- Kalman prediction


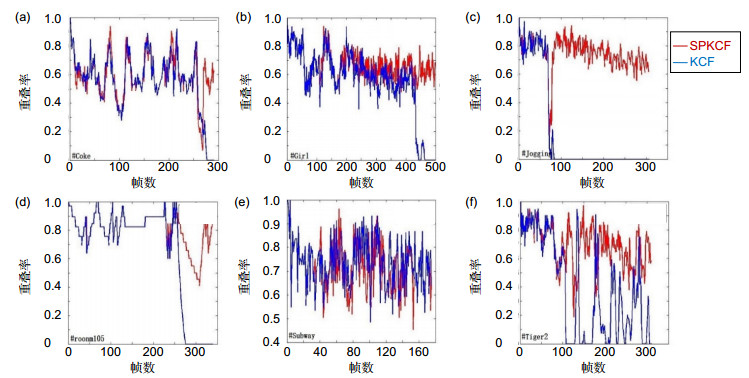

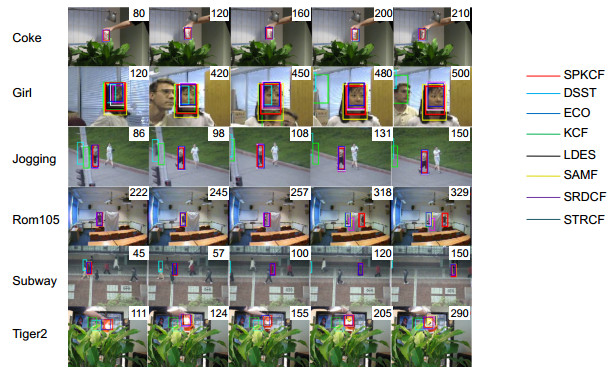

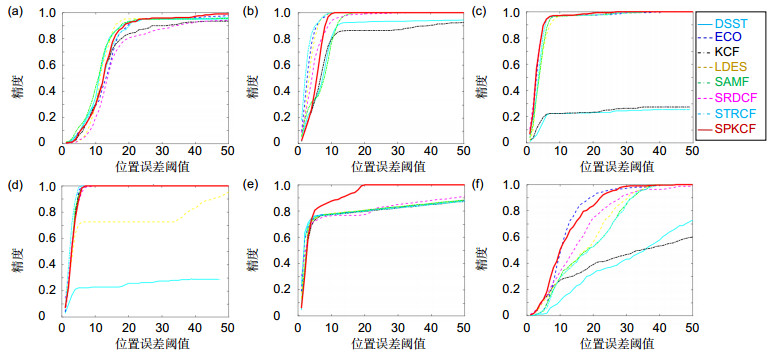

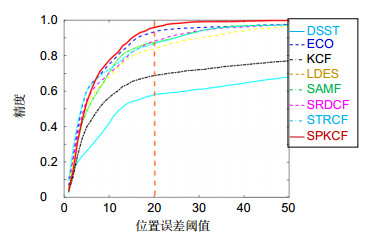

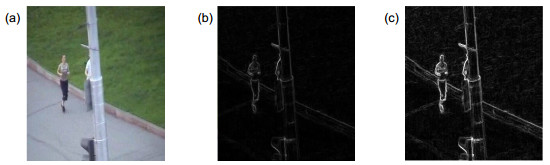

Overview: Target tracking is a widely studied topic in the academic community, with applications spanning monitoring, motion analysis, medical imaging, behavior recognition and human-computer interaction. When the tracked target is occluded, however, the accuracy of current algorithms is low, so target tracking remains an important research topic in computer vision. The kernelized correlation filter (KCF) is one of the most effective tracking methods and has become a research hotspot because of its high speed, high precision and high robustness; many researchers have worked on improving its features to obtain better experimental results. KCF mainly uses the histogram of oriented gradient (HOG) for feature extraction and determines the target position from the similarity between the template and the detection target. However, the inherent nature of the gradient makes the HOG of the target very sensitive to noise, and the algorithm cannot track the target once it is occluded. To overcome these shortcomings, this paper proposes an improved kernelized correlation filter that incorporates the Sobel edge binary mode algorithm. Firstly, the features from the Sobel edge binary mode algorithm and from HOG are fused by weighting, which strengthens the edge information in the HOG features and makes the target information more distinct. Secondly, so that the Kalman prediction algorithm can accurately locate the target after it is occluded, the target position obtained by the kernelized correlation filter during unoccluded tracking is continuously merged with the target position obtained by the Kalman algorithm.
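The merging of KCF and Kalman positions described above can be sketched as the predict/update cycle of a Kalman filter. The following is a minimal Python sketch, not the authors' Matlab implementation: it assumes a constant-velocity motion model applied independently to each image axis, and the class name `AxisKalman` and the noise parameters `q` and `r` are hypothetical choices made for illustration.

```python
class AxisKalman:
    """Constant-velocity Kalman filter for one image axis (assumed motion model).

    State is (position, velocity); only the position is measured.
    """

    def __init__(self, pos, q=1e-2, r=1.0):
        self.p, self.v = float(pos), 0.0
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # state covariance
        self.q, self.r = q, r              # hypothetical process / measurement noise

    def predict(self, dt=1.0):
        """Advance the state by one frame and return the predicted position."""
        (a, b), (c, d) = self.P
        self.p += self.v * dt
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and Q = diag(q, q)
        self.P = [[a + dt * (b + c) + dt * dt * d + self.q, b + dt * d],
                  [c + dt * d, d + self.q]]
        return self.p

    def update(self, z):
        """Merge a measured position z (e.g. the KCF output) into the state."""
        (a, b), (c, d) = self.P
        s = a + self.r            # innovation variance
        k0, k1 = a / s, c / s     # Kalman gain for position and velocity
        y = z - self.p            # innovation
        self.p += k0 * y
        self.v += k1 * y
        # P <- (I - K H) P with H = [1, 0]
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]
        return self.p


# Usage: feed tracker positions while the target is visible, then rely on predictions.
kx = AxisKalman(pos=0.0)
for frame in range(1, 31):
    kx.predict()
    kx.update(2.0 * frame)  # hypothetical KCF x-coordinate moving 2 px per frame
occluded = [kx.predict() for _ in range(5)]  # no measurements during occlusion
```

Running one such filter per axis keeps the sketch short; a full implementation would more commonly use a single four-dimensional state holding both coordinates and both velocities.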
Finally, the ratio of the target's peak response intensity to its sidelobe (the side lobe ratio) is calculated to judge whether the target has been lost. If it has, the Kalman algorithm predicts the target position in the next frame from the state before the loss. Six occlusion test videos from the public visual tracker benchmark database are selected for the experiments. To verify the effectiveness of the proposed algorithm, the authors implement it in Matlab 2018b and compare it with DSST, ECO, KCF, LDES, SRDCF, SAMF and STRCF, all of which perform well. The experimental results show that the proposed method improves tracking accuracy when the target is occluded.
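The loss check described above can be sketched as follows. This is a minimal Python sketch under stated assumptions, not the authors' implementation: it assumes the side lobe ratio takes the peak-to-sidelobe form used by the MOSSE tracker, `(peak - sidelobe mean) / sidelobe standard deviation`, with the sidelobe taken as everything outside a small window around the peak. The function names, the window half-width `exclude` and the threshold value are hypothetical.

```python
import math


def side_lobe_ratio(response, exclude=5):
    """Peak-to-sidelobe ratio of a 2-D response map given as a list of rows."""
    rows, cols = len(response), len(response[0])
    # Locate the peak of the correlation response.
    peak, pr, pc = max(
        (response[r][c], r, c) for r in range(rows) for c in range(cols)
    )
    # Sidelobe: every value outside the (2*exclude+1)^2 window centred on the peak.
    sidelobe = [
        response[r][c]
        for r in range(rows)
        for c in range(cols)
        if abs(r - pr) > exclude or abs(c - pc) > exclude
    ]
    if not sidelobe:
        raise ValueError("response map is too small for the exclusion window")
    mean = sum(sidelobe) / len(sidelobe)
    std = math.sqrt(sum((v - mean) ** 2 for v in sidelobe) / len(sidelobe))
    return (peak - mean) / (std + 1e-12)  # small constant avoids division by zero


def target_lost(response, threshold=7.0):
    """Flag the target as lost when the ratio falls below a hypothetical threshold."""
    return side_lobe_ratio(response) < threshold


# Synthetic responses: a sharp peak (clear target) and a flat map (occluded target).
sharp = [[math.exp(-((r - 16) ** 2 + (c - 16) ** 2) / 8.0)
          + 0.002 * ((7 * r + 3 * c) % 5)
          for c in range(32)] for r in range(32)]
flat = [[0.2 + 0.05 * ((7 * r + 3 * c) % 5) for c in range(32)] for r in range(32)]
```

In a tracking loop, a frame for which `target_lost` returns true would skip the model update and take its position from the Kalman prediction instead; the threshold would need tuning on real response maps.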


Table 1. Results on the test videos

| Video | DSST | ECO | KCF | LDES | SAMF | SRDCF | STRCF | SPKCF |
|---|---|---|---|---|---|---|---|---|
| Coke | 0.931 | 0.911 | 0.838 | 0.952 | 0.935 | 0.808 | 0.897 | 0.921 |
| Girl | 0.928 | 1 | 0.864 | 1 | 1 | 0.992 | 1 | 1 |
| Subway | 0.257 | 1 | 1 | 0.726 | 1 | 1 | 1 | 1 |
| Jogging | 0.231 | 0.974 | 0.235 | 0.977 | 0.974 | 0.977 | 0.977 | 0.99 |
| Rom105 | 0.805 | 0.805 | 0.796 | 0.808 | 0.805 | 0.767 | 0.797 | 1 |
| Tiger2 | 0.323 | 0.900 | 0.39 | 0.584 | 0.526 | 0.748 | 0.533 | 0.839 |
| Average | 0.579 | 0.932 | 0.687 | 0.841 | 0.873 | 0.882 | 0.867 | 0.958 |

Table 2. Coverage of the algorithms on the test videos

| Video | DSST | ECO | KCF | LDES | SAMF | SRDCF | STRCF | SPKCF |
|---|---|---|---|---|---|---|---|---|
| Coke | 0.832 | 0.526 | 0.722 | 0.952 | 0.818 | 0.636 | 0.722 | 0.773 |
| Girl | 0.306 | 0.774 | 0.756 | 0.980 | 0.904 | 0.776 | 0.940 | 0.960 |
| Jogging | 0.225 | 0.958 | 0.225 | 0.971 | 0.967 | 0.971 | 0.971 | 0.971 |
| Rom105 | 0.773 | 0.773 | 0.767 | 0.761 | 0.773 | 0.761 | 0.764 | 0.956 |
| Subway | 0.223 | 1.000 | 0.994 | 0.726 | 0.989 | 0.994 | 1.000 | 0.994 |
| Tiger2 | 0.319 | 0.723 | 0.400 | 0.574 | 0.532 | 0.945 | 0.497 | 0.861 |
| Average | 0.501 | 0.754 | 0.655 | 0.845 | 0.829 | 0.817 | 0.802 | 0.898 |

Table 3. Average center error of the algorithms on the test videos

| | DSST | ECO | KCF | LDES | SAMF | SRDCF | STRCF | SPKCF |
|---|---|---|---|---|---|---|---|---|
| Average | 55.24 | 7.88 | 30.02 | 10.60 | 9.54 | 9.55 | 8.98 | 6.55 |

Table 4. Mean tracking speed (f/s)

| Algorithm | Coke | Girl | Jogging | Rom105 | Subway | Tiger2 | Average |
|---|---|---|---|---|---|---|---|
| DSST | 28.9 | 72.5 | 37.4 | 69.4 | 95.4 | 22.7 | 54.38 |
| ECO | 38.8 | 59.8 | 41.41 | 51.42 | 56.04 | 39.42 | 47.82 |
| KCF | 219.82 | 361.91 | 330.08 | 432.95 | 476.72 | 184.22 | 334.28 |
| LDES | 9.05 | 31.38 | 15.99 | 25.49 | 24.39 | 8.74 | 19.17 |
| SAMF | 11.53 | 48.14 | 26.71 | 32.83 | 35.15 | 10.8 | 27.53 |
| SRDCF | 5.24 | 9.32 | 5.45 | 8.35 | 12.49 | 4.98 | 7.64 |
| STRCF | 14.17 | 16.1 | 14.98 | 16.41 | 25.9 | 14.71 | 17.05 |
| SPKCF | 83.5 | 143.16 | 119.47 | 151.45 | 175.56 | 66.14 | 123.21 |
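The quantities reported in Tables 2 and 3 can be illustrated with the usual benchmark measures. The sketch below is a hedged illustration rather than the evaluation code used in the paper: it assumes boxes in `(x, y, width, height)` form, takes the center error as the Euclidean distance between box centers, and takes coverage as intersection over union. All function names are hypothetical.

```python
import math


def center_error(pred, truth):
    """Euclidean distance between the centers of two (x, y, w, h) boxes."""
    px, py, pw, ph = pred
    tx, ty, tw, th = truth
    return math.hypot((px + pw / 2) - (tx + tw / 2),
                      (py + ph / 2) - (ty + th / 2))


def overlap(pred, truth):
    """Intersection over union of two (x, y, w, h) boxes."""
    px, py, pw, ph = pred
    tx, ty, tw, th = truth
    iw = max(0.0, min(px + pw, tx + tw) - max(px, tx))  # intersection width
    ih = max(0.0, min(py + ph, ty + th) - max(py, ty))  # intersection height
    inter = iw * ih
    union = pw * ph + tw * th - inter
    return inter / union if union > 0 else 0.0


def mean_center_error(preds, truths):
    """Average center error over a sequence of per-frame boxes."""
    errors = [center_error(p, t) for p, t in zip(preds, truths)]
    return sum(errors) / len(errors)


# Usage with made-up boxes for two frames.
preds = [(0, 0, 10, 10), (5, 0, 10, 10)]
truths = [(3, 4, 10, 10), (0, 0, 10, 10)]
avg_error = mean_center_error(preds, truths)
```

Whether the paper averages these values per frame or counts frames that pass a threshold is not stated in this section, so the aggregation here is only one plausible choice.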

