Abstract (Chinese):

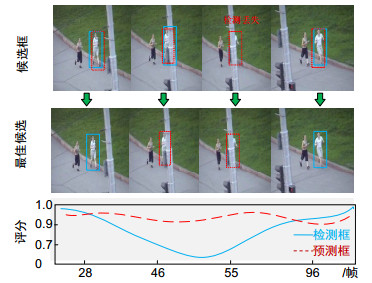

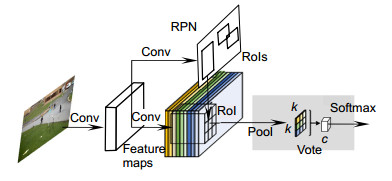

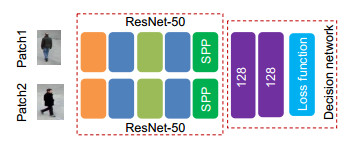

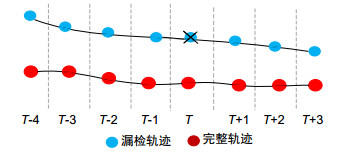

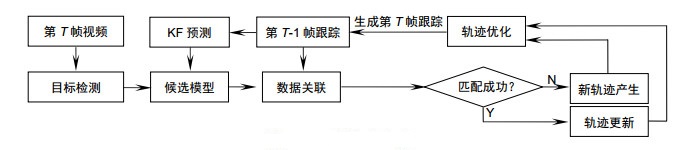

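Online multi-target tracking is an important prerequisite for real-time video sequence analysis. To address the low reliability of target detection, frequent tracking losses, and unsmooth trajectories in online multi-target tracking, an online multi-target tracking model with multi-candidate association based on the R-FCN network framework is proposed. First, an R-FCN-based network selects more reliable candidate boxes from the KF (Kalman filter) predictions and the detection results. Then a Siamese network measures similarity based on appearance features to perform data association between candidates and trajectories. Finally, the RANSAC algorithm optimizes the tracking trajectories. In complex scenes with dense crowds and partially occluded targets, the proposed algorithm shows strong target recognition and tracking ability, greatly reduces missed and false detections, and yields more continuous and smoother trajectories. Experimental results show that, under the same conditions and compared with existing methods, the proposed method improves substantially on several performance metrics, including tracking accuracy (MOTA), number of lost trajectories (ML), and number of false negatives (FN).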

Abstract:

Online multi-target tracking is an important prerequisite for real-time video sequence analysis. To address the low detection reliability, high tracking loss rate, and unsmooth trajectories of online multi-target tracking, an online multi-target tracking model based on the R-FCN (region-based fully convolutional networks) framework is proposed. First, a target evaluation function based on the R-FCN framework selects the more reliable candidates for the next frame from the KF (Kalman filter) predictions and the detection results. Second, a Siamese network measures similarity based on appearance features to match candidates to tracks. Finally, the tracking trajectories are optimized by the RANSAC (random sample consensus) algorithm. In crowded, partially occluded complex scenes, the proposed algorithm recognizes targets more reliably, greatly reduces missed and false detections, and produces more continuous and smoother trajectories. Experimental results show that, under the same conditions and compared with existing methods, the proposed method improves substantially on performance indicators such as tracking accuracy (MOTA), number of lost trajectories (ML), and number of false negatives (FN).

Key words:

- multi-target tracking
- candidate model
- Siamese network
- trajectory estimation

Overview: Multi-target tracking underpins applications such as human behavior recognition, semantic segmentation, and unmanned driving, and is one of the research hotspots in computer vision. Tracking multiple targets stably and accurately in complex scenes requires handling several difficulties, including camera motion, interaction between targets, missed detections, and false detections. With the rapid development of deep learning in recent years, many strong detection-based multi-target tracking algorithms have emerged; they fall mainly into online and offline methods. A detection-based tracking framework works as follows: an offline-trained detector finds the targets, a similarity matching method associates the detections, and the generated trajectories are then continuously matched against new detection results to produce more reliable trajectories. Online methods mainly include Sort, Deep-sort, and SDMT, while offline methods mainly include the network flow model, the conditional random field model, and the generalized association graph model. Offline methods use information from multiple frames when associating trajectories with detection results and can therefore achieve better tracking performance, but they are not suited to real-time applications. Online methods use only single-frame information to associate trajectories with new targets, which is often unreliable; data association for lost targets then fails and the desired tracking performance cannot be obtained.
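
The association step of the detection-based framework described above can be sketched as follows. This is only an illustration under stated assumptions, not the paper's implementation: appearance features are assumed to be plain lists of floats, similarity is assumed to be cosine similarity, and matching is done greedily rather than with an optimal assignment solver. The names `cosine_similarity`, `associate`, and the threshold `min_sim` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def associate(track_feats, cand_feats, min_sim=0.5):
    """Greedily match tracks to candidates in order of decreasing similarity.

    Assumption: greedy matching stands in for whatever association rule a
    real tracker uses. Returns (matches, unmatched_tracks,
    unmatched_candidates); matches holds (track_index, candidate_index).
    """
    pairs = [
        (cosine_similarity(t, c), ti, ci)
        for ti, t in enumerate(track_feats)
        for ci, c in enumerate(cand_feats)
    ]
    pairs.sort(key=lambda p: p[0], reverse=True)

    matches, used_tracks, used_cands = [], set(), set()
    for sim, ti, ci in pairs:
        if sim < min_sim:
            break  # every remaining pair is even less similar
        if ti in used_tracks or ci in used_cands:
            continue  # each track and each candidate is matched at most once
        matches.append((ti, ci))
        used_tracks.add(ti)
        used_cands.add(ci)

    unmatched_tracks = [i for i in range(len(track_feats)) if i not in used_tracks]
    unmatched_cands = [i for i in range(len(cand_feats)) if i not in used_cands]
    return matches, unmatched_tracks, unmatched_cands
```

In a tracker built this way, unmatched candidates would typically start new trajectories and unmatched tracks would be kept alive for a few frames before being dropped; both policies are left out of this sketch.
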
To address the reliability of detection results, an online multi-target tracking method based on the R-FCN framework is proposed. First, a candidate model is devised that combines Kalman filter predictions with detection results, so candidate targets no longer come from the detector alone, which makes the algorithm more robust. Second, a Siamese network measures the similarity of target appearance, and multiple target features are fused to complete data association between targets, which improves discrimination between targets in complex tracking scenes. In addition, because trajectories in complex scenes may contain missed and false detections, the RANSAC algorithm is used to optimize existing trajectories, yielding more complete and accurate trajectory information and more continuous, smoother trajectories. Finally, experimental results indicate that, compared with several existing algorithms, the proposed method performs well in tracking accuracy, number of lost tracks, and number of missed detections.
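
To make the trajectory optimization idea concrete, the following sketch applies RANSAC to one coordinate of a single track. It is a simplified illustration under assumptions that are not taken from the paper: motion over the window is assumed to be linear (x = a * t + b), frame indices are integers, and the tolerance `tol`, the iteration count, and the function names `ransac_line` and `smooth_track` are hypothetical.

```python
import random


def ransac_line(ts, xs, n_iters=100, tol=2.0, seed=0):
    """Fit x = a * t + b with RANSAC; needs at least two points.

    Returns (a, b, inlier_indices) for the model with the most inliers.
    """
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    best = (0.0, 0.0, [])
    n = len(ts)
    for _ in range(n_iters):
        i, j = rng.sample(range(n), 2)  # minimal sample: two observations
        if ts[i] == ts[j]:
            continue  # a vertical line cannot be written as x = a * t + b
        a = (xs[j] - xs[i]) / (ts[j] - ts[i])
        b = xs[i] - a * ts[i]
        inliers = [k for k in range(n) if abs(xs[k] - (a * ts[k] + b)) <= tol]
        if len(inliers) > len(best[2]):
            best = (a, b, inliers)
    return best


def smooth_track(ts, xs, **ransac_kwargs):
    """Replace outliers and fill missing frames using the RANSAC model.

    Inlier observations are kept; outlier frames (likely false detections)
    and frames with no observation (likely missed detections) take the
    value predicted by the fitted line.
    """
    a, b, inliers = ransac_line(ts, xs, **ransac_kwargs)
    kept = {ts[k]: xs[k] for k in inliers}
    return [(t, kept.get(t, a * t + b)) for t in range(min(ts), max(ts) + 1)]
```

For example, a track observed at frames 0, 1, 2, 3, 5, 6 with a spurious position at frame 3 would have frame 3 replaced and the missing frame 4 filled in from the fitted line. A real tracker would apply this to both box-centre coordinates and might use a richer motion model.
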

Table 1. Validation of each module of the algorithm on the MOT16 training set

| Algorithm | S, R | MOTA/(%)↑ | FP↓ | FN↓ | IDSW↓ |
| --- | --- | --- | --- | --- | --- |
| Baseline | | 28.9 | 2493 | 75805 | 686 |
| | √ | 32.8 | 4159 | 69428 | 452 |
| | √ | 37.7 | 10803 | 57430 | 537 |
| Proposed | √ √ | 39.8 | 6131 | 59898 | 328 |

Note: the best performance values are marked in red and the second best in green.
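
The MOTA column in Table 1 is a combination of the three error counts beside it. Under the CLEAR MOT definition, MOTA = 1 - (FN + FP + IDSW) / GT, where GT is the total number of ground-truth boxes over all frames. The short sketch below computes it; the function name `mota` and the counts in the usage note are hypothetical and are not values from the paper.

```python
def mota(fp, fn, idsw, num_gt):
    """Multiple object tracking accuracy from accumulated error counts.

    fp: false positives, fn: false negatives (missed targets),
    idsw: identity switches, num_gt: total ground-truth boxes.
    The result is at most 1.0 and is negative when errors exceed num_gt.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (fp + fn + idsw) / num_gt
```

With made-up counts, `mota(fp=10, fn=35, idsw=5, num_gt=100)` gives 0.5, that is, 50%. Because the three error terms are summed, a module that lowers FN while raising FP can still raise MOTA as long as the total error count falls.
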

Table 2. Comparison of experimental results on the MOT16 test set
