Improved algorithm of Faster R-CNN based on double threshold-non-maximum suppression


摘要

根据目标检测算法中出现的目标漏检和重复检测问题,本文提出了一种基于双阈值-非极大值抑制的Faster R-CNN改进算法。算法首先利用深层卷积网络架构提取目标的多层卷积特征,然后通过提出的双阈值-非极大值抑制(DT-NMS)算法在RPN阶段提取目标候选区域的深层信息,最后使用了双线性插值方法来改进原RoI pooling层中的最近邻插值法,使算法在检测数据集上对目标的定位更加准确。实验结果表明,DT-NMS算法既有效地平衡了单阈值算法对目标漏检问题和目标误检问题的关系,又针对性地减小了同一目标被多次检测的概率。与soft-NMS算法相比,本文算法在PASCAL VOC2007上的重复检测率降低了2.4%,多次检测的目标错分率降低了2%。与Faster R-CNN算法相比,本文算法在PASCAL VOC2007上检测精度达到74.7%,性能提升了1.5%。在MSCOCO数据集上性能提升了1.4%。同时本文算法具有较快的检测速度,达到16 FPS。

Abstract

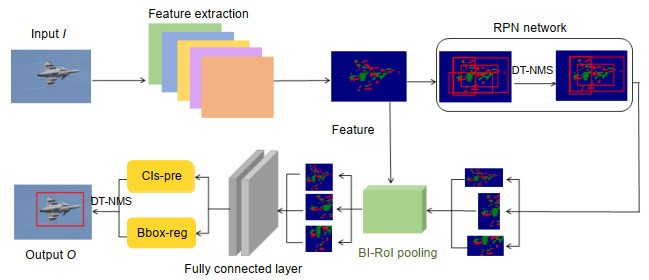

According to the problems of target missed detection and repeated detection in the object detection algorithm, this paper proposes an improved Faster R-CNN algorithm based on dual threshold-non-maximum suppression. The algorithm first uses the deep convolutional network architecture to extract the multi-layer convolution features of the targets, and then proposes the dual threshold-non-maximum suppression (DT-NMS) algorithm in the RPN(region proposal network). The phase extracts the deep information of the target candidate regions, and finally uses the bilinear interpolation method to improve the nearest neighbor interpolation method in the original RoI pooling layer, so that the algorithm can more accurately locate the target on the detection dataset. The experimental results show that the DT-NMS algorithm effectively balances the relationship between the single-threshold algorithm and the target missed detection problem, and reduces the probability of repeated detection. Compared with the soft-NMS algorithm, the repeated detection rate of the DT-NMS algorithm in PASCAL VOC2007 is reduced by 2.4%, and the target error rate of multiple detection is reduced by 2%. Compared with the Faster R-CNN algorithm, the detection accuracy of this algorithm on the PASCAL VOC2007 is 74.7%, the performance is improved by 1.5%, and the performance on the MSCOCO dataset is improved by 1.4%. At the same time, the algorithm has a fast detection speed, reaching 16 FPS.


Overview

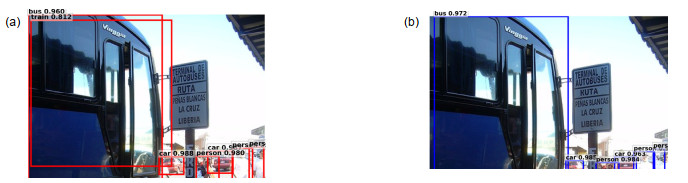

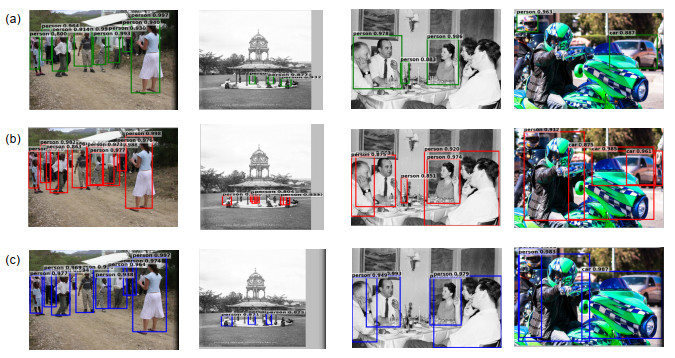

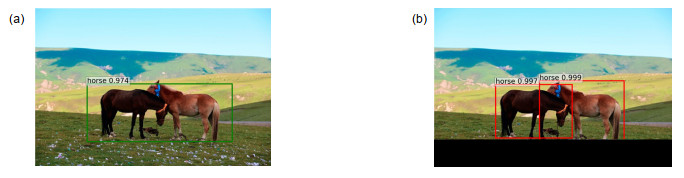

The Faster R-CNN algorithm uses non-maximum suppression (NMS) to filter proposals. NMS follows an all-or-nothing ("either one or zero") rule: for each classified target it keeps only the candidate box with the highest score, which greatly increases the risk of missing a target that heavily overlaps another. The soft-NMS algorithm addresses this with a "weight penalty" strategy, which reduces missed detections to a certain extent. However, tests show that soft-NMS greatly increases the number of proposals. This creates a new problem: the same target is detected repeatedly, and the repeated detections are sometimes misclassified, especially when the image contains multiple targets that overlap heavily.

To address both missed detections and repeated detections, this paper proposes an improved Faster R-CNN algorithm based on dual threshold-non-maximum suppression. The algorithm first uses the VGG-Net-16 deep convolutional network architecture to extract multi-layer convolutional features of the targets. It then applies the proposed dual threshold-non-maximum suppression (DT-NMS) algorithm in the RPN (region proposal network) stage to extract the deep information of the target candidate regions. Finally, it replaces the nearest-neighbor interpolation in the original RoI pooling layer with bilinear interpolation, so that targets are located more accurately on the detection dataset. To highlight how DT-NMS performs on the repeated detection problem, this paper introduces two evaluation metrics: the repeated detection rate and the misclassification rate of repeatedly detected targets.

By simply setting the two thresholds in the DT-NMS algorithm, the trade-off between missed detections and false detections that a single-threshold algorithm faces is effectively balanced, and the probability that the same target is detected multiple times is reduced. The improved Faster R-CNN algorithm readjusts the network training and parameters on the VGG-Net-16 network structure, and extensive experiments are carried out on the PASCAL VOC dataset. The experimental results show that, compared with the soft-NMS algorithm, the repeated detection rate of the proposed algorithm on PASCAL VOC2007 is reduced by 2.4%, and the misclassification rate of repeatedly detected targets is reduced by 2%, indicating that the improved algorithm mitigates the missed detection and repeated detection problems of the traditional algorithms. Compared with the Faster R-CNN algorithm, the detection accuracy of the proposed algorithm on PASCAL VOC2007 reaches 74.7%, an improvement of 1.5%. The algorithm also detects quickly, reaching 16 FPS.
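The text above does not spell out the DT-NMS update rule, so the following Python sketch is only one plausible reading of a two-threshold scheme, not the authors' implementation. It assumes that a box whose IoU with the current best box is below `nt` keeps its score, a box with IoU in `[nt, ni)` receives the linear soft-NMS penalty `1 - IoU`, and a box with IoU at or above `ni` is suppressed outright as a duplicate. The function names, the `score_min` cutoff, and the linear penalty are all assumptions made for illustration; the default thresholds are the (Nt, Ni) = (0.35, 0.95) pair reported in Table 3.

```python
import numpy as np


def iou_one_to_many(box, boxes):
    """IoU between one box and an (N, 4) array of boxes in (x1, y1, x2, y2) format."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def dt_nms(boxes, scores, nt=0.35, ni=0.95, score_min=0.001):
    """Hypothetical dual-threshold NMS; returns kept indices and their final scores."""
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float).copy()
    remaining = np.arange(len(scores))
    keep = []
    while remaining.size > 0:
        # Pick the highest-scoring box that is still a candidate.
        best = remaining[np.argmax(scores[remaining])]
        keep.append(int(best))
        remaining = remaining[remaining != best]
        if remaining.size == 0:
            break
        overlaps = iou_one_to_many(boxes[best], boxes[remaining])
        # Assumed rule: IoU >= ni is removed, nt <= IoU < ni is penalized,
        # IoU < nt is left untouched.
        decay = np.where(overlaps >= ni, 0.0,
                         np.where(overlaps >= nt, 1.0 - overlaps, 1.0))
        scores[remaining] *= decay
        remaining = remaining[scores[remaining] > score_min]
    return keep, scores[keep]


# Four boxes: a reference box, a near-duplicate, a partial overlap, a separate box.
demo_boxes = [[0, 0, 10, 10], [0, 0, 10, 10.2], [0, 0, 10, 20], [20, 20, 30, 30]]
demo_scores = [0.9, 0.8, 0.7, 0.6]
kept, kept_scores = dt_nms(demo_boxes, demo_scores)
print(kept, kept_scores)
```

In this toy input the near-duplicate box is dropped, the partially overlapping box survives with a reduced score, and the separate box is unaffected, which is the behavior the two thresholds are meant to separate.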


Algorithm 1. An improved Faster R-CNN algorithm based on dual threshold-non-maximum suppression

Input: Image

Output: Image O with detected objects

Step one: Extract features through a deep convolutional network to obtain the feature map of the input image.

Step two: Use the DT-NMS algorithm to obtain the proposal information in the RPN.

Step three: Map the deep information of the proposals back to the feature map.

Step four: Apply the BI-RoI pooling method to fix proposals of different sizes to a uniform size.

Step five: Obtain the detected image through two fully connected layers.
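Step four relies on replacing nearest-neighbor interpolation with bilinear interpolation when reading the feature map at non-integer positions. The sketch below, written in Python with NumPy, contrasts the two sampling rules on a single-channel feature map. It illustrates only the sampling primitive under simple assumptions (a 2-D array, coordinates clamped to the map); it is not the paper's BI-RoI pooling layer, and the function names are hypothetical.

```python
import numpy as np


def sample_nearest(feature, y, x):
    """Read the feature map at the grid cell nearest to the fractional point (y, x)."""
    h, w = feature.shape
    yi = min(max(int(np.floor(y + 0.5)), 0), h - 1)
    xi = min(max(int(np.floor(x + 0.5)), 0), w - 1)
    return float(feature[yi, xi])


def sample_bilinear(feature, y, x):
    """Blend the four grid cells surrounding the fractional point (y, x)."""
    h, w = feature.shape
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    # Interpolate along x on the two neighboring rows, then along y.
    top = (1 - dx) * feature[y0, x0] + dx * feature[y0, x1]
    bottom = (1 - dx) * feature[y1, x0] + dx * feature[y1, x1]
    return float((1 - dy) * top + dy * bottom)


# A 4x4 map whose value at (row, col) is 4 * row + col.
feature_map = np.arange(16, dtype=float).reshape(4, 4)
nearest_value = sample_nearest(feature_map, 1.5, 2.25)
bilinear_value = sample_bilinear(feature_map, 1.5, 2.25)
print(nearest_value, bilinear_value)
```

Nearest-neighbor sampling snaps the point to one cell and discards the fractional offset, whereas bilinear sampling keeps it, which is the property that the text credits for more accurate localization.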

Table 1. Comparison of algorithm precision under different threshold combinations

| Ni \ Nt | 0.3 | 0.35 | 0.4 | 0.45 | 0.5 |
|---|---|---|---|---|---|
| 0.8 | 73.95 | 74.05 | 74.01 | 74.10 | 74.19 |
| 0.85 | 74.15 | 74.20 | 74.18 | 74.17 | 74.1 |
| 0.9 | 74.43 | 74.50 | 74.05 | 74.30 | 74.36 |
| 0.95 | 74.62 | 74.72 | 74.66 | 74.58 | 74.44 |

Table 2. Comparison of the results obtained by the proposed algorithm and soft-NMS on the repeated detection problem

| Algorithm | Rtotal | R2 | R3 | Cerror | Rrate/% | Cerror-rate/% |
|---|---|---|---|---|---|---|
| soft-NMS | 592 | 543 | 49 | 64 | 11.9 | 10.8 |
| Ours | 471 | 441 | 30 | 38 | 9.5 | 8.6 |

Table 3. Detection accuracy and speed of each module in the algorithm

| Algorithm | mAP | Detection speed/(FPS) | Threshold | Network |
|---|---|---|---|---|
| Faster R-CNN | 73.2 | 15.3 | Nt=0.6 | VGG16 |
| Faster+soft-NMS | 74.3 | 15.1 | Nt=0.3 | VGG16 |
| Faster+DT-NMS | 74.3 | 16.0 | (Nt, Ni)=(0.35, 0.95) | VGG16 |
| Faster+DT-NMS+BI-RoI pooling | 74.7 | 16.0 | (Nt, Ni)=(0.35, 0.95) | VGG16 |
| Faster R-CNN | 59.9 | 15.8 | Nt=0.6 | AlexNet |
| Faster+soft-NMS | 63.2 | 15.6 | Nt=0.3 | AlexNet |
| Faster+DT-NMS | 63.4 | 15.6 | (Nt, Ni)=(0.35, 0.95) | AlexNet |
| Faster+DT-NMS+BI-RoI pooling | 63.7 | 15.6 | (Nt, Ni)=(0.35, 0.95) | AlexNet |

Table 4. Test results of the proposed algorithm and other algorithms on the PASCAL VOC2007 dataset

| Algorithm | aero | bike | bird | boat | bottle | bus | car | cat | chair | cow | table | dog | horse | motor | person | plant | sheep | sofa | train | tv | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Fast R-CNN | 77.0 | 78.1 | 69.3 | 59.4 | 38.3 | 81.6 | 78.6 | 86.7 | 42.8 | 78.8 | 68.9 | 84.7 | 82.0 | 76.6 | 69.9 | 31.8 | 70.1 | 74.8 | 80.4 | 70.4 | 70.0 |
| A-Fast-RCNN | 75.7 | 83.6 | 68.4 | 58.0 | 44.7 | 81.9 | 80.4 | 86.3 | 53.7 | 76.1 | 72.5 | 82.6 | 83.9 | 77.1 | 73.1 | 38.1 | 70.0 | 69.7 | 78.8 | 73.1 | 71.4 |
| Faster R-CNN | 76.5 | 79.0 | 70.9 | 65.5 | 52.1 | 83.1 | 84.7 | 86.4 | 52.0 | 81.9 | 65.7 | 84.8 | 84.6 | 77.5 | 76.7 | 38.8 | 73.6 | 73.9 | 83.0 | 72.6 | 73.2 |
| RON320 | 75.7 | 79.4 | 74.8 | 66.1 | 53.2 | 83.7 | 83.6 | 85.8 | 55.8 | 79.5 | 69.5 | 84.5 | 81.7 | 83.1 | 76.1 | 49.2 | 73.8 | 75.2 | 80.3 | 72.5 | 74.2 |
| soft-NMS | 76.9 | 81.3 | 74.8 | 64.9 | 60.2 | 81.8 | 86.2 | 85.6 | 55.8 | 80.0 | 67.5 | 82.8 | 82.3 | 79.6 | 81.0 | 43.4 | 77.1 | 71.7 | 79.8 | 73.7 | 74.3 |
| Ours | 76.0 | 81.0 | 74.8 | 64.6 | 62.5 | 81.6 | 85.7 | 87.5 | 56.8 | 81.2 | 68.2 | 84.3 | 82.9 | 78.9 | 81.0 | 43.8 | 78.3 | 70.0 | 80.9 | 73.9 | 74.7 |

Table 5. Test results of the proposed algorithm and one-stage detection algorithms on the PASCAL VOC2007 dataset

| Algorithm | Network | mAP |
|---|---|---|
| YOLO | VGG16 | 63.4 |
| Faster R-CNN | VGG16 | 73.2 |
| YOLOv2 | VGG16 | 73.7 |
| SSD | VGG16 | 74.3 |
| Faster+soft-NMS | VGG16 | 74.3 |
| Ours | VGG16 | 74.7 |

Table 6. Test results of the proposed algorithm and other algorithms on the MSCOCO dataset

| Algorithm | Network | AP(0.5:0.95) | AP@0.5 |
|---|---|---|---|
| YOLOv2 | VGG16 | 21.6 | 44.0 |
| SSD | VGG16 | 23.2 | 41.2 |
| Faster R-CNN | VGG16 | 24.4 | 45.7 |
| Faster+soft-NMS | VGG16 | 25.5 | 46.7 |
| Ours | VGG16 | 25.8 | 47.1 |
| YOLOv3 | Darknet | 33.0 | 57.9 |
| RetinaNet | ResNet101 | 34.4 | 53.1 |

