
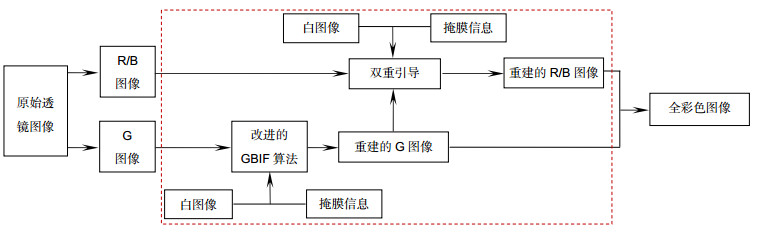

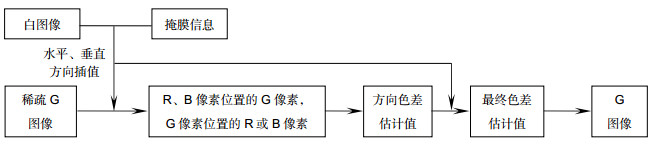

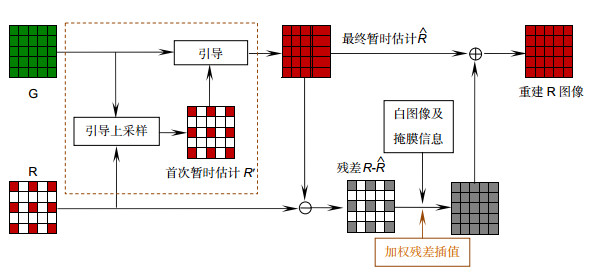

Abstract

To address the poor quality of light field multi-view images caused by the specific lenslet structure of light field cameras and by pixel aliasing at lenslet edges, this paper proposes a light field demosaicing algorithm based on double-guided filtering. First, the G image is reconstructed with the gradient based threshold free (GBTF) algorithm, reweighted using the white image and lenslet mask information. Then, the reconstructed G image is used to double-guide the reconstruction of the R and B images. Finally, the reconstructed R, G, and B images are combined into a full-color image. Experimental results show that, compared with other advanced demosaicing methods, the proposed method improves CPSNR by 1.68% and SSIM by 2%, and that the resulting light field multi-view images have sharp edges and fewer color artifacts.

Key words: demosaicing; light field; microlens array; double-guided filtering

Overview

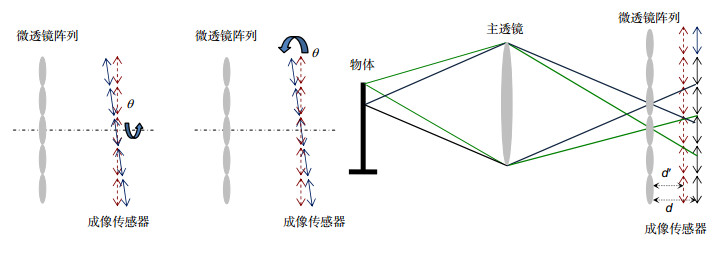

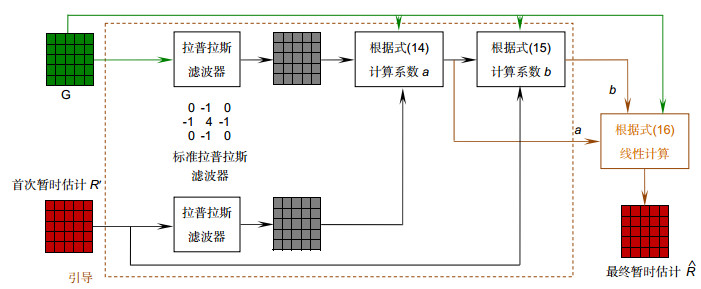

With the development of light field imaging technology, the light field camera has become a popular multi-view imaging device in the computational imaging community. Light field cameras fall into three categories: camera arrays, mask-based cameras, and microlens cameras. Owing to its simple structure and small size, the microlens camera is widely used. Because it captures 3D scene information with a single CCD sensor behind a color filter array (CFA), it samples only one of the R, G, and B values at each pixel. To obtain a high-quality color light field image, the raw sensor data must therefore be demosaiced into a full-color image.

Demosaicing for conventional cameras has been studied for decades, and the corresponding techniques are mature. Unlike regular images, however, each microlens image exhibits aliasing or vignetting at its boundary because of the lenslet structure, so a conventional demosaicing algorithm is not suitable for direct use on microlens images. Many light field demosaicing algorithms proposed in recent years achieve reasonable results when the microlens images are free of aliasing and vignetting. When these effects are present, their performance degrades, and defects such as blurring and color artifacts appear in the full-color images.

To solve this problem, a light field demosaicing algorithm based on double-guided filtering is proposed. Double-guided filtering applies two guided filters in sequence. The first applies a sparse Laplacian to the input image and obtains its output by minimizing the sparse Laplacian energy. The second takes the output of the first as its input, applies a standard Laplacian, and minimizes the standard Laplacian energy, which effectively preserves the structure of the guided image.

The algorithm has three steps. First, the G image is reconstructed with the gradient based threshold free (GBTF) algorithm, reweighted using the white image and lenslet mask information. Then, the reconstructed G image is used to double-guide the reconstruction of the R and B images. Finally, the reconstructed R, G, and B images are combined into a full-color image. Experiments on a synthetic light field dataset and a real-scene light field dataset verify the effectiveness of the proposed algorithm: compared with state-of-the-art methods, CPSNR increases by 1.68% and SSIM by 2%. The full-color light field images obtained by our method have sharp edges and fewer color artifacts.
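The single-sensor CFA sampling that motivates demosaicing can be illustrated with a short sketch. It assumes a 2x2 Bayer layout in RGGB order, which the text does not specify, and the function name `bayer_mosaic` is hypothetical. It shows only how one color value per pixel is kept. It does not model lenslet vignetting or edge aliasing, and it is not part of the proposed algorithm.

```python
import numpy as np

def bayer_mosaic(rgb, pattern="RGGB"):
    """Keep one color sample per pixel of an H x W x 3 image.

    `pattern` lists the colors of the 2x2 CFA tile in row-major order.
    The RGGB default is an assumption made for illustration.
    """
    channel_index = {"R": 0, "G": 1, "B": 2}
    h, w, _ = rgb.shape
    raw = np.zeros((h, w), dtype=rgb.dtype)      # single-channel sensor image
    masks = np.zeros((h, w, 3), dtype=bool)      # where each color was sampled
    for k, letter in enumerate(pattern):
        dy, dx = divmod(k, 2)                    # position inside the 2x2 tile
        c = channel_index[letter]
        raw[dy::2, dx::2] = rgb[dy::2, dx::2, c]
        masks[dy::2, dx::2, c] = True
    return raw, masks

# Small synthetic example: every pixel keeps exactly one of its three values.
rgb = np.arange(4 * 4 * 3, dtype=np.float64).reshape(4, 4, 3)
raw, masks = bayer_mosaic(rgb)
```

With this layout half of the pixels carry G samples and a quarter each carry R and B, which is consistent with reconstructing the densely sampled G image first and then using it to guide the R and B reconstruction.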


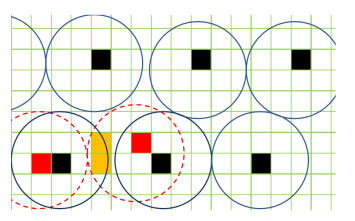

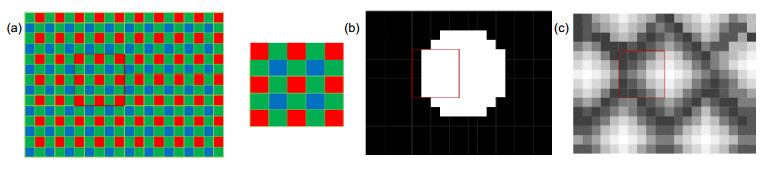

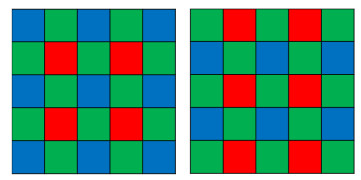

Figure 4. Microlens edge aliasing diagram. Each solid blue circle represents a calibrated microlens image, and the black pixel marks its center. Each dashed red circle represents the true microlens image, and the red pixel marks its center.

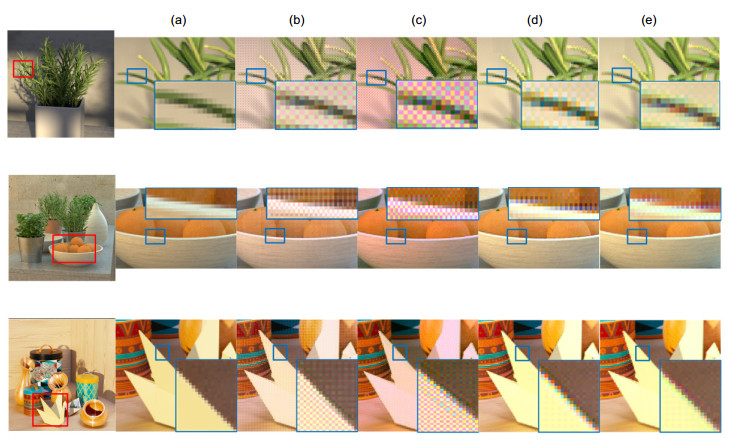

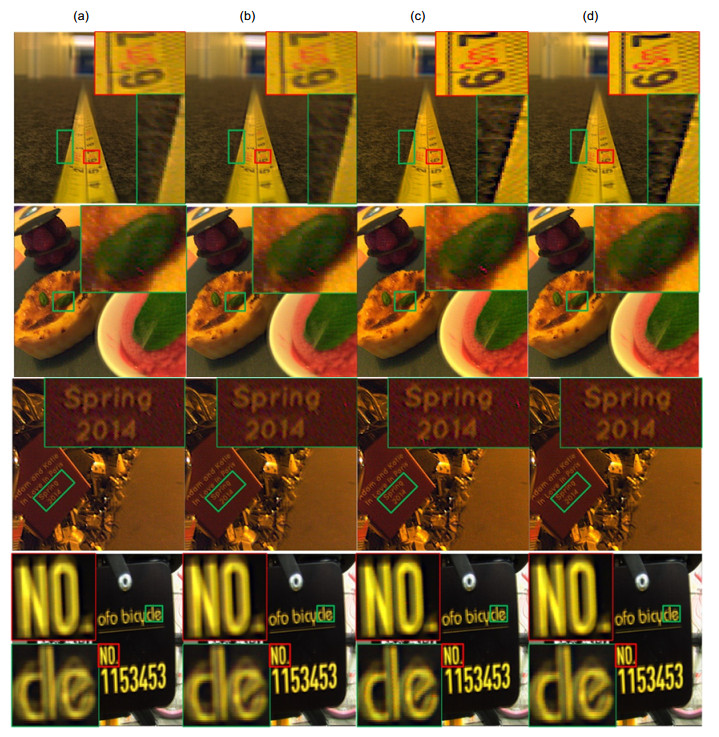

Figure 10. Synthetic light field scenes. (a) Ground truth (GT); (b) method of Ref. [21]; (c) method of Ref. [28]; (d) method of Ref. [17]; (e) our method. The images shown are the (2, 2) view of the light field multi-view images.

Table 1. Quantitative results (CPSNR, in dB) with vignetting and edge aliasing

| Dataset | table | Bicycle | rosemary | backgammon | vinyl | herbs | boxes | sideboard | origami | dishes | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ref. [21] | 39.73 | 37.76 | 37.23 | 43.12 | 39.72 | 40.46 | 40.17 | 35.53 | 40.23 | 36.02 | 38.99 |
| Ref. [28] | 39.32 | 37.56 | 38.36 | 43.48 | 40.47 | 40.93 | 39.54 | 35.62 | 37.95 | 37.48 | 39.07 |
| Ref. [17] | 43.52 | 40.43 | 40.82 | 44.74 | 42.55 | 41.73 | 43.33 | 37.39 | 42.91 | 38.52 | 41.59 |
| Ours | 43.82 | 42.21 | 41.49 | 45.57 | 42.78 | 42.55 | 43.53 | 37.77 | 44.39 | 38.76 | 42.29 |
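For readers unfamiliar with the metric, the sketch below computes CPSNR under its common definition: the PSNR of the mean squared error taken jointly over all pixels and all three color channels. The 8-bit peak value of 255 and the function name `cpsnr` are assumptions; the text does not state the exact evaluation settings, such as border cropping.

```python
import numpy as np

def cpsnr(reference, estimate, peak=255.0):
    """Color PSNR in dB between two H x W x 3 images.

    The squared error is averaged over every pixel and channel at once.
    `peak` is the maximum possible pixel value (255 assumed for 8-bit data).
    """
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    if ref.shape != est.shape:
        raise ValueError("reference and estimate must have the same shape")
    mse = np.mean((ref - est) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)

# An error of exactly one gray level everywhere gives an MSE of 1.
ref = np.zeros((8, 8, 3))
est = ref + 1.0
value = cpsnr(ref, est)
```

Higher values indicate a reconstruction closer to the ground truth.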

Table 2. Quantitative results (SSIM) with vignetting and edge aliasing

| Dataset | table | Bicycle | rosemary | backgammon | vinyl | herbs | boxes | sideboard | origami | dishes | Avg |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Ref. [21] | 0.763 | 0.731 | 0.818 | 0.723 | 0.839 | 0.686 | 0.777 | 0.679 | 0.712 | 0.622 | 0.735 |
| Ref. [28] | 0.703 | 0.712 | 0.809 | 0.676 | 0.806 | 0.674 | 0.768 | 0.716 | 0.705 | 0.652 | 0.722 |
| Ref. [17] | 0.784 | 0.761 | 0.902 | 0.747 | 0.904 | 0.723 | 0.884 | 0.775 | 0.826 | 0.696 | 0.800 |
| Ours | 0.798 | 0.768 | 0.921 | 0.768 | 0.918 | 0.737 | 0.892 | 0.778 | 0.866 | 0.715 | 0.816 |
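The reported gains of 1.68% in CPSNR and 2% in SSIM appear to correspond to the relative improvement of the Avg column over the strongest baseline, Ref. [17]. This reading is inferred from the table values rather than stated in the text. The short check below uses the Avg values from Tables 1 and 2; the helper name `relative_gain_percent` is hypothetical.

```python
def relative_gain_percent(ours, baseline):
    """Relative improvement of `ours` over `baseline`, in percent."""
    return 100.0 * (ours - baseline) / baseline

# Avg column values copied from Table 1 (CPSNR) and Table 2 (SSIM).
cpsnr_avg = {"Ref. [21]": 38.99, "Ref. [28]": 39.07, "Ref. [17]": 41.59, "Ours": 42.29}
ssim_avg = {"Ref. [21]": 0.735, "Ref. [28]": 0.722, "Ref. [17]": 0.800, "Ours": 0.816}

cpsnr_gain = relative_gain_percent(cpsnr_avg["Ours"], cpsnr_avg["Ref. [17]"])
ssim_gain = relative_gain_percent(ssim_avg["Ours"], ssim_avg["Ref. [17]"])

print(f"CPSNR gain over Ref. [17]: {cpsnr_gain:.2f}%")
print(f"SSIM gain over Ref. [17]: {ssim_gain:.2f}%")
```

Measured against Refs. [21] and [28] instead, the relative gains would be larger, since their averages are lower than those of Ref. [17].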

References

[1] Ng R, Levoy M, Brédif M, et al. Light field photography with a hand-held plenoptic camera: CS-TR-2005-02[R]. Stanford: Stanford University, 2005.

[2] Wang L J, Zhang J, Zhang X D, et al. Micro-lens array center calibration via local searching using Lytro cameras[J]. Opto-Electronic Engineering, 2016, 43(11): 19–25. doi: 10.3969/j.issn.1003-501X.2016.11.004

[3] Chan W S, Lam E Y, Ng M K, et al. Super-resolution reconstruction in a computational compound-eye imaging system[J]. Multidimensional Systems and Signal Processing, 2007, 18(2–3): 83–101. doi: 10.1007/s11045-007-0022-3

[4] Bishop T E, Zanetti S, Favaro P. Light field superresolution[C]//Proceedings of 2009 IEEE International Conference on Computational Photography (ICCP), 2009: 1–9.

[5] Georgiev T, Lumsdaine A. Superresolution with plenoptic 2.0 cameras[C]//Signal Recovery and Synthesis 2009, 2009.

[6] Bishop T E, Favaro P. The light field camera: extended depth of field, aliasing, and superresolution[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2012, 34(5): 972–986. doi: 10.1109/TPAMI.2011.168

[7] Mitra K, Veeraraghavan A. Light field denoising, light field superresolution and stereo camera based refocussing using a GMM light field patch prior[C]//Proceedings of 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, 2012.

[8] Boominathan V, Mitra K, Veeraraghavan A. Improving resolution and depth-of-field of light field cameras using a hybrid imaging system[C]//Proceedings of 2014 IEEE International Conference on Computational Photography (ICCP), 2014.

[9] Yoon Y, Jeon H G, Yoo D, et al. Learning a deep convolutional network for light-field image super-resolution[C]//Proceedings of 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), 2015.

[10] Wang Y W, Liu Y B, Heidrich W, et al. The light field attachment: turning a DSLR into a light field camera using a low budget camera ring[J]. IEEE Transactions on Visualization and Computer Graphics, 2017, 23(10): 2357–2364. doi: 10.1109/TVCG.2016.2628743

[11] Deng W, Zhang X D, Xiong W, et al. Light field super-resolution using global and local multi-views[J]. Application Research of Computers, 2019, 36(5): 1549–1554, 1559. doi: 10.19734/j.issn.1001-3695.2017.12.0835

[12] Huang X, Cossairt O. Dictionary learning based color demosaicing for plenoptic cameras[C]//Proceedings of 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2014: 455–460.

[13] Seifi M, Sabater N, Drazic V, et al. Disparity-guided demosaicking of light field images[C]//Proceedings of 2014 IEEE International Conference on Image Processing, 2015.

[14] Yu Z, Yu J Y, Lumsdaine A, et al. An analysis of color demosaicing in plenoptic cameras[C]//Proceedings of 2012 IEEE Conference on Computer Vision and Pattern Recognition, 2012: 901–908.

[15] Xu S, Zhou Z L, Devaney N. Multi-view image restoration from plenoptic raw images[C]//Computer Vision - ACCV 2014 Workshops, 2014, 9009: 3–15.

[16] Cho H, Yoo H. Masking based demosaicking for image enhancement using plenoptic camera[J]. International Journal of Applied Engineering Research, 2018, 13(1): 273–276. http://www.wanfangdata.com.cn/details/detail.do?_type=perio&id=631008e38ecaa7dba9b7305be8a20b10

[17] David P, le Pendu M, Guillemot C. White lenslet image guided demosaicing for plenoptic cameras[C]//Proceedings of the 19th International Workshop on Multimedia Signal Processing (MMSP), 2017.

[18] Adams Jr J E, Hamilton Jr J F. Adaptive color plane interpolation in single sensor color electronic camera: EP0732858A3[P]. 1996-09-18.

[19] Malvar H S, He L W, Cutler R. High-quality linear interpolation for demosaicing of bayer-patterned color images[C]//Proceedings of 2004 IEEE International Conference on Acoustics, Speech, and Signal Processing, 2004.

[20] Zhang L, Wu X L. Color demosaicking via directional linear minimum mean square-error estimation[J]. IEEE Transactions on Image Processing, 2005, 14(12): 2167–2178. doi: 10.1109/TIP.2005.857260

[21] Pekkucuksen I, Altunbasak Y. Gradient based threshold free color filter array interpolation[C]//Proceedings of 2010 IEEE International Conference on Image Processing, 2010.

[22] Zhang L, Wu X L, Li X. Color demosaicking by local directional interpolation and nonlocal adaptive thresholding[J]. Journal of Electronic Imaging, 2011, 20(2): 023016. doi: 10.1117/1.3600632

[23] Chen X D, He L W, Jeon G, et al. Multidirectional weighted interpolation and refinement method for bayer pattern CFA demosaicking[J]. IEEE Transactions on Circuits and Systems for Video Technology, 2015, 25(8): 1271–1282. doi: 10.1109/TCSVT.2014.2313896

[24] Alleysson D, Susstrunk S, Herault J. Linear demosaicing inspired by the human visual system[J]. IEEE Transactions on Image Processing, 2005, 14(4): 439–449. doi: 10.1109/TIP.2004.841200

[25] Dubois E. Frequency-domain methods for demosaicking of Bayer-sampled color images[J]. IEEE Signal Processing Letters, 2005, 12(12): 847–850. doi: 10.1109/LSP.2005.859503

[26] Leung B, Jeon G, Dubois E. Least-squares luma-chroma demultiplexing algorithm for bayer demosaicking[J]. IEEE Transactions on Image Processing, 2011, 20(7): 1885–1894. doi: 10.1109/TIP.2011.2107524

[27] Kiku D, Monno Y, Tanaka M, et al. Residual interpolation for color image demosaicking[C]//Proceedings of 2013 IEEE International Conference on Image Processing, 2013.

[28] Kiku D, Monno Y, Tanaka M, et al. Minimized-laplacian residual interpolation for color image demosaicking[J]. Proceedings of the SPIE, 2014, 9023: 90230L. http://www.wanfangdata.com.cn/details/detail.do?_type=perio&id=CC0214508046

[29] Dansereau D G, Pizarro O, Williams S B. Decoding, calibration and rectification for lenselet-based plenoptic cameras[C]//Proceedings of 2013 IEEE Conference on Computer Vision and Pattern Recognition, 2013.

[30] Wanner S, Meister S, Goldluecke B. Datasets and benchmarks for densely sampled 4D light fields[C]//Vision Modeling and Visualization, 2013: 225–226.

[31] Mousnier A, Vural E, Guillemot C. Lytro dataset[EB/OL].[2017-05-03]. http://www.irisa.fr/temics/demos/lightField/index.html.
