
Abstract

Convolutional neural networks (CNNs) perform well in single-label image classification, but applying them effectively to multi-label image classification remains a significant challenge. Multi-label image classification, a generalization of single-label image classification, aims to assign multiple labels to an image so as to fully express the visual concepts it contains. We propose a CNN-based method that combines an attention mechanism with semantic relevance to solve the multi-label problem. First, a CNN is used to extract features. Second, the attention mechanism maps each label category in the dataset to a channel of the output feature map. Finally, the correlation between channels, which is essentially the semantic dependency between labels, is learned in a supervised manner. Experimental results show that the proposed method effectively learns the semantic dependencies between labels and improves multi-label image classification performance.


Overview

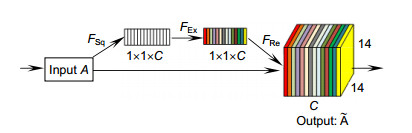

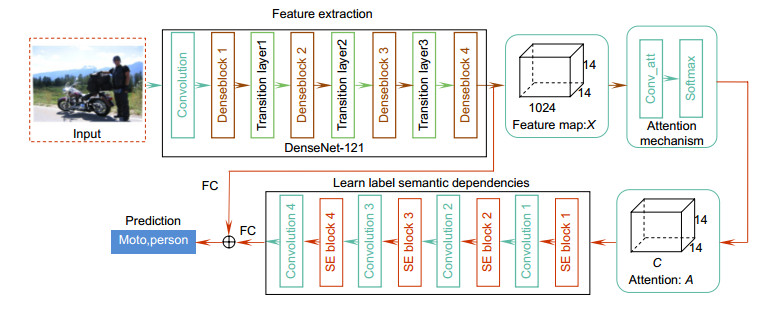

As a fundamental image classification task, single-label image classification has been studied for decades and has made good progress. Multi-label image classification, however, is not only a general and practical problem but also a challenging one, because most real-world images contain rich semantic information, such as multiple objects, scenes, attributes, and actions. In this paper, we propose a method based on convolutional neural networks that combines an attention mechanism with semantic relevance to solve the multi-label problem. First, we use DenseNet-121, a popular recent convolutional neural network, to extract image features. Traditional methods usually preprocess images by extracting hand-crafted features and then train a classifier; however, such hand-crafted features are each designed for particular visual tasks. In contrast, CNN-based methods can extract more discriminative features from images thanks to their powerful feature-learning ability. Second, the attention mechanism, which can capture basic spatial relations, has recently been applied to many computer vision tasks. In multi-label image classification, most images carry diverse semantic information and are tagged with several labels. We therefore use the attention mechanism to focus on the regions that need to be recognized and to make the channels of the feature map correspond to the categories of the dataset, so that the dependencies between labels can be explored more effectively. Specifically, the feature map extracted by the network is fed into the attention mechanism, and a convolution operation learns a preliminary mapping between labels and channels. A softmax function is then applied so that each group of channels in the feature map has a corresponding label response.
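One plausible reading of the attention step above is sketched below in plain Python: a 1×1 convolution projects the C backbone channels to one map per label, a softmax over spatial positions turns each label map into attention weights, and attention-weighted pooling yields one response per label. The 1×1 kernel size, the choice of a spatial (rather than cross-channel) softmax, and all function names and weights are assumptions for illustration, not the authors' exact design.

```python
import math
from typing import List

Channel = List[List[float]]  # one H x W spatial map


def conv1x1(features: List[Channel], weights: List[List[float]]) -> List[Channel]:
    """1x1 convolution: label map k is a weighted sum of the C input channels.

    weights is a hypothetical K x C matrix, one row per label category.
    """
    h, w = len(features[0]), len(features[0][0])
    return [
        [[sum(row[c] * features[c][i][j] for c in range(len(features)))
          for j in range(w)] for i in range(h)]
        for row in weights
    ]


def spatial_softmax(channel: Channel) -> Channel:
    """Softmax over all H*W positions of one map (max-subtracted for stability)."""
    peak = max(max(r) for r in channel)
    exps = [[math.exp(v - peak) for v in r] for r in channel]
    total = sum(sum(r) for r in exps)
    return [[v / total for v in r] for r in exps]


def label_attention_scores(features: List[Channel],
                           weights: List[List[float]]) -> List[float]:
    """One response per label: sum over positions of attention(i, j) * map(i, j)."""
    scores = []
    for label_map in conv1x1(features, weights):
        att = spatial_softmax(label_map)
        scores.append(sum(a * m for a_row, m_row in zip(att, label_map)
                          for a, m in zip(a_row, m_row)))
    return scores
```

With an identity projection such as `[[1.0, 0.0], [0.0, 1.0]]`, each label response is the attention-pooled value of one input channel: a uniform map returns its constant value, while a map with a single strong peak scores much closer to that peak than to its mean, which is the "focus on regions of interest" behavior the paragraph describes.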
The softmax operation may introduce redundant visual features, because the network also learns some negative feature information, that is, responses for labels that do not exist in the image. We therefore use the SE module to suppress this negative feature information. The Squeeze-and-Excitation (SE) block is a structural unit that explicitly models interdependencies between channels; it focuses on informative channels by adaptively recalibrating channel-wise feature responses. Finally, we learn the channel-wise correlation, which essentially encodes the semantic dependencies between labels, by means of supervised learning. Using SE blocks and convolution operations alternately in this way allows the network to learn the dependencies between channels more accurately. Experimental results show that the proposed method can exploit the dependencies between multiple labels to improve multi-label image classification performance.
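The SE block follows the standard squeeze, excitation, and scale steps: global average pooling gives one descriptor z_c per channel, a two-layer bottleneck computes gates s = σ(W2 δ(W1 z)) with δ the ReLU and σ the sigmoid, and each channel is multiplied by its gate. The minimal plain-Python sketch below implements those steps; the weight matrices are hypothetical placeholders for learned parameters, and `alternate_se_conv` only illustrates the idea of alternating SE blocks with convolutions. The paper does not specify the kernel sizes, the reduction ratio, or the order within each SE/convolution pair, so the 1×1 kernels and SE-then-conv order here are assumptions.

```python
import math
from typing import List, Sequence, Tuple

Channel = List[List[float]]          # one H x W spatial map
FeatureMap = List[Channel]           # C channels
Weights = Sequence[Sequence[float]]  # hypothetical learned matrix


def sigmoid(a: float) -> float:
    """Numerically stable logistic function."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    e = math.exp(a)
    return e / (1.0 + e)


def squeeze(x: FeatureMap) -> List[float]:
    """Squeeze: global average pooling gives one descriptor z_c per channel."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]


def excite(z: List[float], w1: Weights, w2: Weights) -> List[float]:
    """Excitation: s = sigmoid(W2 . relu(W1 . z)), W1 is (C/r x C), W2 is (C x C/r)."""
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]
    return [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]


def se_block(x: FeatureMap, w1: Weights, w2: Weights) -> FeatureMap:
    """Scale: multiply every value in channel c by its gate s_c in (0, 1)."""
    gates = excite(squeeze(x), w1, w2)
    return [[[g * v for v in row] for row in ch] for g, ch in zip(gates, x)]


def conv1x1(x: FeatureMap, weights: Weights) -> FeatureMap:
    """1x1 convolution (per-position channel mixing); weights is C_out x C_in."""
    h, w = len(x[0]), len(x[0][0])
    return [
        [[sum(k[c] * x[c][i][j] for c in range(len(x))) for j in range(w)]
         for i in range(h)]
        for k in weights
    ]


def alternate_se_conv(x: FeatureMap,
                      layers: List[Tuple[Weights, Weights, Weights]]) -> FeatureMap:
    """Hypothetical stack alternating an SE block and a 1x1 convolution.

    Each entry of layers is (w1, w2, conv_weights) for one SE + conv pair.
    """
    for w1, w2, conv_w in layers:
        x = conv1x1(se_block(x, w1, w2), conv_w)
    return x
```

Because every gate lies in (0, 1), the SE block can only attenuate a channel relative to the others; this is how it can down-weight channels whose labels are absent from the image, while the interleaved convolutions mix information across the recalibrated channels.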



Table 1. Experimental results (AP, %) on the Pascal VOC2007 dataset

| Method | Plane | Bike | Bird | Boat | Bottle | Bus | Car | Cat | Chair | Cow | Table | Dog | Horse | Motor | Person | Plant | Sheep | Sofa | Train | Tv | mAP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN-SVM | 88.5 | 81.0 | 83.5 | 82.0 | 42.0 | 72.5 | 85.3 | 81.6 | 59.9 | 58.5 | 66.5 | 77.8 | 81.8 | 78.8 | 90.2 | 54.8 | 71.1 | 62.6 | 87.2 | 71.8 | 73.9 |
| CNN-RNN | 96.7 | 83.1 | 94.2 | 92.8 | 61.2 | 82.1 | 89.1 | 94.2 | 64.2 | 83.6 | 70.0 | 92.4 | 91.7 | 84.2 | 93.7 | 59.8 | 93.2 | 75.3 | 99.7 | 78.6 | 84.0 |
| RLSD | 96.4 | 92.7 | 93.8 | 94.1 | 71.2 | 92.5 | 94.2 | 95.7 | 74.3 | 90.0 | 74.2 | 95.4 | 96.2 | 92.1 | 97.9 | 66.9 | 93.5 | 73.7 | 97.5 | 87.6 | 88.5 |
| Very deep | 98.9 | 95.0 | 96.8 | 95.4 | 69.7 | 90.4 | 93.5 | 96.0 | 74.2 | 86.6 | 87.8 | 96.0 | 96.3 | 93.1 | 97.2 | 70.0 | 92.1 | 80.3 | 98.1 | 87.0 | 89.7 |
| DenseNet | 99.1 | 95.4 | 96.6 | 95.4 | 70.6 | 89.0 | 94.3 | 95.9 | 78.8 | 88.9 | 79.9 | 96.8 | 96.2 | 92.4 | 97.8 | 77.4 | 88.1 | 77.7 | 98.3 | 88.2 | 89.9 |
| Proposed | 99.3 | 95.8 | 97.2 | 95.4 | 73.2 | 88.5 | 94.3 | 95.5 | 77.3 | 91.8 | 81.4 | 97.1 | 96.3 | 91.7 | 98.0 | 78.3 | 92.2 | 75.7 | 98.4 | 88.8 | 90.4 |

Table 2. Experimental results (AP) on the MirFlickr25k dataset (38 classes)

| No. | Label | LDA | SVM | DBN | AIACNN | DenseNet | Proposed (38) |
|---|---|---|---|---|---|---|---|
| 1 | Animals | 0.537 | 0.531 | 0.498 | 0.612 | 0.854 | 0.862 |
| 2 | Baby (r1) | 0.285 (0.308) | 0.200 (0.165) | 0.129 (0.134) | 0.487 (0.462) | 0.459 (0.591) | 0.467 (0.587) |
| 3 | Bird (r1) | 0.426 (0.500) | 0.443 (0.520) | 0.184 (0.255) | 0.529 (0.534) | 0.704 (0.902) | 0.725 (0.918) |
| 4 | Car (r1) | 0.297 (0.389) | 0.339 (0.434) | 0.309 (0.354) | 0.502 (0.521) | 0.758 (0.818) | 0.777 (0.827) |
| 5 | Cloud (r1) | 0.651 (0.528) | 0.695 (0.434) | 0.759 (0.691) | 0.667 (0.682) | 0.937 (0.833) | 0.945 (0.840) |
| 6 | Dog (r1) | 0.621 (0.663) | 0.607 (0.641) | 0.342 (0.376) | 0.555 (0.523) | 0.832 (0.902) | 0.864 (0.914) |
| 7 | Female (r1) | 0.494 (0.454) | 0.465 (0.451) | 0.540 (0.478) | 0.623 (0.630) | 0.840 (0.842) | 0.844 (0.855) |
| 8 | Flower (r1) | 0.560 (0.623) | 0.480 (0.717) | 0.593 (0.679) | 0.612 (0.630) | 0.774 (0.886) | 0.775 (0.881) |
| 9 | Food | 0.439 | 0.308 | 0.447 | 0.645 | 0.714 | 0.720 |
| 10 | Indoor | 0.663 | 0.683 | 0.750 | 0.793 | 0.902 | 0.903 |
| 11 | Lake | 0.258 | 0.207 | 0.262 | 0.369 | 0.458 | 0.463 |
| 12 | Male (r1) | 0.434 (0.354) | 0.413 (0.335) | 0.503 (0.406) | 0.623 (0.625) | 0.821 (0.801) | 0.830 (0.814) |
| 13 | Night (r1) | 0.615 (0.420) | 0.588 (0.450) | 0.655 (0.483) | 0.712 (0.709) | 0.760 (0.640) | 0.761 (0.681) |
| 14 | People (r1) | 0.731 (0.664) | 0.748 (0.565) | 0.800 (0.730) | 0.789 (0.787) | 0.967 (0.969) | 0.967 (0.973) |
| 15 | Plant_life | 0.703 | 0.691 | 0.791 | 0.721 | 0.921 | 0.927 |
| 16 | Portrait (r1) | 0.543 (0.541) | 0.480 (0.558) | 0.642 (0.635) | 0.692 (0.698) | 0.911 (0.909) | 0.903 (0.901) |
| 17 | River (r1) | 0.317 (0.134) | 0.158 (0.109) | 0.263 (0.110) | 0.488 (0.246) | 0.462 (0.184) | 0.492 (0.146) |
| 18 | Sea (r1) | 0.477 (0.197) | 0.529 (0.201) | 0.586 (0.259) | 0.526 (0.301) | 0.788 (0.498) | 0.792 (0.499) |
| 19 | Sky | 0.800 | 0.823 | 0.873 | 0.833 | 0.950 | 0.949 |
| 20 | Structures | 0.709 | 0.695 | 0.787 | 0.756 | 0.913 | 0.916 |
| 21 | Sunset | 0.528 | 0.613 | 0.648 | 0.649 | 0.745 | 0.747 |
| 22 | Transport | 0.411 | 0.369 | 0.406 | 0.516 | 0.785 | 0.787 |
| 23 | Tree (r1) | 0.515 (0.342) | 0.559 (0.321) | 0.660 (0.483) | 0.639 (0.388) | 0.852 (0.706) | 0.860 (0.713) |
| 24 | Water | 0.525 | 0.527 | 0.629 | 0.629 | 0.841 | 0.836 |
| 25 | mAP | 0.492 | 0.475 | 0.503 | 0.624 | 0.774 | 0.782 |

For labels marked (r1), the value in parentheses is the result for the r1 version of that label; the 24 labels plus these 14 r1 labels make up the 38 classes.

Table 3. Influence of the attention mechanism and the semantic association classification module on image classification

| Method | Pascal VOC2007 (mAP) | MirFlickr25k, 38 classes (mAP) |
|---|---|---|
| DenseNet | 89.9% | 77.4% |
| DenseNet+att | 90.0% | 77.6% |
| DenseNet+SE | 89.7% | 77.0% |
| Proposed | 90.4% | 78.2% |

Table 4. Influence of the way SE blocks and convolutions are combined on semantic association classification

| Method | Pascal VOC2007 (mAP) | MirFlickr25k, 38 classes (mAP) |
|---|---|---|
| SEblock(1)+4Convs | 90.1% | 77.8% |
| SEblock(1, 3)+4Convs | 90.2% | 77.9% |
| 5SEblock+5Convs | 90.4% | 78.1% |
| Proposed | 90.4% | 78.2% |
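Tables 1 to 4 report per-class average precision (AP) and its mean over classes (mAP). The paper does not state which AP variant it used; the Pascal VOC2007 benchmark itself specified 11-point interpolated AP. As a minimal plain-Python sketch (all function names are hypothetical), both the non-interpolated AP and the 11-point variant can be computed from per-image scores and binary ground-truth labels, then averaged over classes:

```python
from typing import List, Sequence, Tuple


def _ranked_labels(scores: Sequence[float], labels: Sequence[int]) -> List[int]:
    """Ground-truth labels (1 = positive) ordered by descending score."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return [labels[i] for i in order]


def average_precision(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Non-interpolated AP: mean of the precision at the rank of each positive."""
    hits, precisions = 0, []
    for rank, y in enumerate(_ranked_labels(scores, labels), start=1):
        if y:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def ap_11point(scores: Sequence[float], labels: Sequence[int]) -> float:
    """11-point interpolated AP: mean over recall levels 0.0, 0.1, ..., 1.0 of
    the best precision reached at any recall >= that level."""
    n_pos = sum(1 for y in labels if y)
    if n_pos == 0:
        return 0.0
    hits, curve = 0, []  # (recall, precision) after each rank
    for rank, y in enumerate(_ranked_labels(scores, labels), start=1):
        hits += 1 if y else 0
        curve.append((hits / n_pos, hits / rank))
    levels = [i / 10 for i in range(11)]
    return sum(max((p for r, p in curve if r >= t), default=0.0)
               for t in levels) / 11


def mean_average_precision(score_matrix, label_matrix,
                           ap_fn=average_precision) -> Tuple[float, List[float]]:
    """Rows are images, columns are classes; returns (mAP, per-class AP list)."""
    n_classes = len(score_matrix[0])
    aps = [ap_fn([row[k] for row in score_matrix],
                 [row[k] for row in label_matrix])
           for k in range(n_classes)]
    return sum(aps) / n_classes, aps
```

For instance, with scores (0.9, 0.8, 0.7, 0.6) and labels (1, 0, 1, 0), the positives sit at ranks 1 and 3, so the non-interpolated AP is (1/1 + 2/3) / 2 = 5/6. Multiplying by 100 gives the percentage scale of Table 1; Table 2 reports the same quantity as a fraction.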

