An object detection and tracking algorithm based on LiDAR and camera information fusion

Abstract

The environmental perception system is an important part of an intelligent vehicle; it detects the vehicle's surroundings using on-board sensors. To ensure the accuracy and stability of this system, the on-board sensors of an intelligent vehicle must be used to detect and track objects in the passable area. This paper proposes an object detection and tracking algorithm based on LiDAR and camera information fusion. The algorithm clusters LiDAR point cloud data to detect objects in the passable area and projects them onto the image to determine the tracking targets. Once the targets are determined, the algorithm tracks them through the image sequence using color information. Because image-based object tracking is easily affected by illumination, shadows, and background interference, the algorithm uses the LiDAR point cloud to correct the tracking results during tracking. The algorithm is verified and tested on the KITTI dataset. Experiments show that the proposed detection and tracking algorithm achieves an average region overlap of 83.10% for the tracked targets and a tracking success rate of 80.57%. Compared with the particle filter algorithm, the average region overlap is increased by 29.47% and the tracking success rate by 19.96%.
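The projection step that links the two sensors can be illustrated with a pinhole camera model. The sketch below is not the paper's implementation: it assumes a known 3×4 matrix `P` that already combines the camera intrinsics with the LiDAR-to-camera extrinsic calibration, and the helper names `project_point` and `bounding_box` are hypothetical.

```python
from typing import Optional, Sequence, Tuple

# Assumed: a 3x4 matrix combining camera intrinsics and LiDAR-to-camera extrinsics.
Matrix3x4 = Sequence[Sequence[float]]
Pixel = Tuple[float, float]


def project_point(P: Matrix3x4, xyz: Sequence[float]) -> Optional[Pixel]:
    """Project one 3-D point to pixel coordinates (u, v) with a pinhole model.

    Returns None when the point lies behind the camera (depth <= 0).
    """
    x, y, z = xyz
    # Homogeneous image coordinates: [u*w, v*w, w] = P @ [x, y, z, 1]
    uw, vw, w = (row[0] * x + row[1] * y + row[2] * z + row[3] for row in P)
    if w <= 0:
        return None
    return uw / w, vw / w


def bounding_box(P: Matrix3x4, cluster: Sequence[Sequence[float]]
                 ) -> Optional[Tuple[float, float, float, float]]:
    """Image box (u_min, v_min, u_max, v_max) enclosing a projected point cluster."""
    pixels = [px for px in (project_point(P, q) for q in cluster) if px is not None]
    if not pixels:
        return None
    us = [u for u, _ in pixels]
    vs = [v for _, v in pixels]
    return min(us), min(vs), max(us), max(vs)
```

For example, with a hypothetical `P = [[700, 0, 600, 0], [0, 700, 180, 0], [0, 0, 1, 0]]` (points already expressed in camera coordinates, z pointing forward), the point `(1, 0.5, 10)` projects to pixel `(670.0, 215.0)`. Points with non-positive depth are skipped when a cluster's image box is formed, and the resulting box would serve as the initial tracking region.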

Keywords:

- object detection
- object tracking
- intelligent vehicle
- LiDAR point cloud

Overview

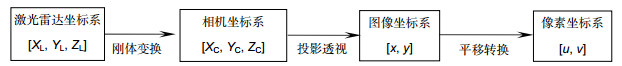

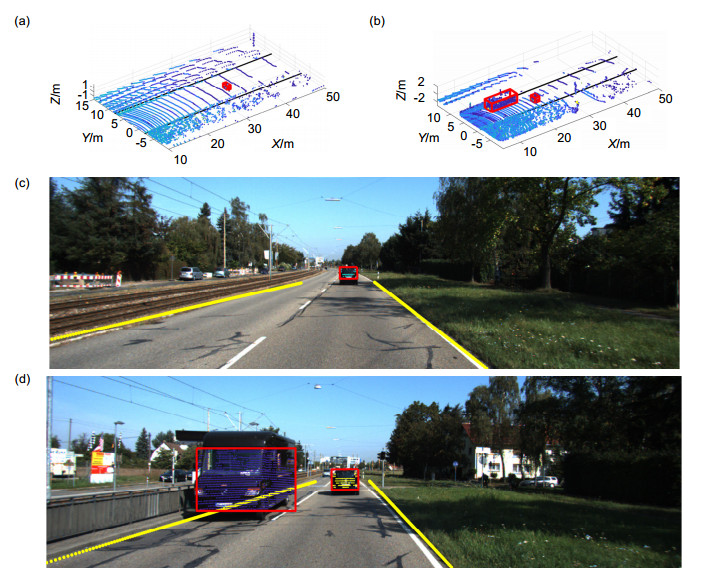

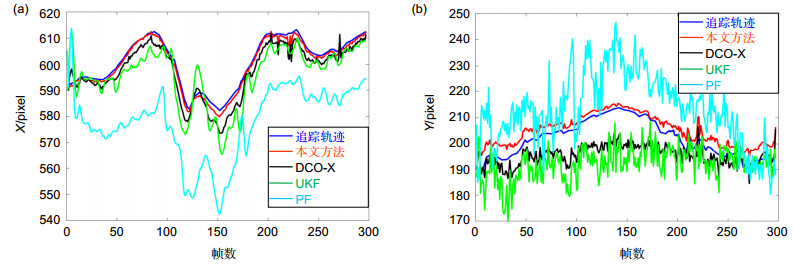

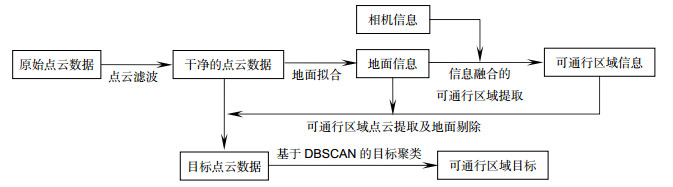

Intelligent vehicles are a new type of car that integrates environmental perception, path planning, decision-making, control, and other technologies. Equipped with advanced on-board sensors, controllers, actuators, and other devices, they can exchange and share information between the car and X (people, vehicles, roads, the cloud, etc.) to achieve safe, efficient, and energy-saving driving. Environmental perception is the technology of detecting information about the vehicle's surroundings with on-board sensors, including vision sensors, LiDAR, millimeter-wave radar, the global positioning system (GPS), the inertial navigation system (INS), and ultrasonic radar. To ensure the accuracy and stability of an intelligent vehicle's environmental perception, its on-board sensors must be used to detect and track objects in the passable area.

This paper proposes an object detection and tracking algorithm based on LiDAR and camera information fusion. First, the algorithm clusters LiDAR point cloud data to detect objects in the passable area and projects them onto the image to determine the tracking targets. The point cloud clustering method consists of filtering the raw point cloud, detecting the ground, extracting the passable area based on point cloud reflectivity, and clustering the data with the DBSCAN algorithm. Once the targets are determined, the algorithm tracks them through the image sequence using color information. Because image-based object tracking is easily affected by illumination, shadows, and background interference, the algorithm uses the LiDAR point cloud to correct the tracking results during tracking.
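The DBSCAN clustering step can be illustrated with a plain implementation of the algorithm. This is a minimal O(n²) sketch over 3-D points, not the paper's MATLAB code; it assumes the filtering, ground-detection, and reflectivity-based passable-area steps have already removed ground and off-road points, and the values of `eps` and `min_pts` are illustrative assumptions.

```python
import math
from typing import List, Sequence

Point = Sequence[float]
NOISE = -1
UNVISITED = None


def region_query(points: List[Point], i: int, eps: float) -> List[int]:
    """Indices of all points within distance eps of points[i] (including i itself)."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]


def dbscan(points: List[Point], eps: float, min_pts: int) -> List[int]:
    """Label each point with a cluster id (0, 1, ...) or NOISE (-1)."""
    labels = [UNVISITED] * len(points)
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not UNVISITED:
            continue
        neighbours = region_query(points, i, eps)
        if len(neighbours) < min_pts:
            labels[i] = NOISE  # may later be relabelled as a border point
            continue
        labels[i] = cluster  # i is a core point: start a new cluster
        seeds = list(neighbours)
        k = 0
        while k < len(seeds):
            j = seeds[k]
            k += 1
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: reachable but not core
            if labels[j] is not UNVISITED:
                continue
            labels[j] = cluster
            j_neighbours = region_query(points, j, eps)
            if len(j_neighbours) >= min_pts:
                seeds.extend(j_neighbours)  # j is also core: keep expanding
        cluster += 1
    return labels
```

For example, two tight groups of three points and one isolated point, clustered with `eps=0.5` and `min_pts=3`, yield labels `[0, 0, 0, 1, 1, 1, -1]`. Each resulting cluster is a candidate object whose points can then be projected onto the image.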
The tracking strategy is as follows. First, N initial particles are placed uniformly at the target position. Second, the similarity between the particles at the current moment and those at the previous moment is calculated using the Bhattacharyya coefficient. Third, the particles are resampled according to this similarity. Finally, since the LiDAR point cloud can be projected onto the image, the object position is calculated by combining the particles with the point cloud.

The algorithm is tested and verified on the KITTI dataset, which was jointly established by the Karlsruhe Institute of Technology in Germany and the Toyota Technological Institute at Chicago in the United States and is currently the largest dataset for evaluating computer vision algorithms in autonomous driving scenarios. The experiments were run on a computer with 4 GB of memory, and the algorithm was implemented in MATLAB R2017b. The particle filter (PF), the unscented Kalman filter (UKF), and the DCO-X algorithm are used as comparison algorithms to verify the effectiveness of the proposed algorithm. Experiments show that the proposed algorithm performs well on all tracking evaluation metrics: the X-direction error, the Y-direction error, the center position error, the region overlap, and the success rate.
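The similarity and resampling steps of the particle-based tracking strategy can be sketched as follows. This is a minimal illustration, not the authors' code: each histogram is assumed to be a normalized color histogram of a particle's image patch, the Gaussian mapping from Bhattacharyya distance to weight (and its `sigma`) is an assumption, and systematic resampling is one common scheme swapped in because the text does not name one. The final LiDAR-based position correction is not reproduced, since its details are not given here.

```python
import math
import random
from typing import List, Sequence


def bhattacharyya(p: Sequence[float], q: Sequence[float]) -> float:
    """Bhattacharyya coefficient of two normalized histograms (1.0 = identical)."""
    return sum(math.sqrt(a * b) for a, b in zip(p, q))


def particle_weights(reference: Sequence[float],
                     candidates: List[Sequence[float]],
                     sigma: float = 0.1) -> List[float]:
    """Turn histogram similarity into normalized particle weights.

    Assumption: Gaussian likelihood on the Bhattacharyya distance d = sqrt(1 - rho).
    """
    raw = []
    for hist in candidates:
        d2 = max(0.0, 1.0 - bhattacharyya(reference, hist))  # guard against rounding
        raw.append(math.exp(-d2 / (2 * sigma ** 2)))
    total = sum(raw)
    return [w / total for w in raw]


def systematic_resample(weights: List[float], rng=random) -> List[int]:
    """Systematic resampling (an assumed choice): indices of particles to keep.

    High-weight particles are duplicated and low-weight ones tend to disappear.
    """
    n = len(weights)
    start = (1.0 - rng.random()) / n  # in (0, 1/n], so zero-weight particles are skipped
    indices, cumulative, i = [], weights[0], 0
    for k in range(n):
        u = start + k / n
        while u > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        indices.append(i)
    return indices
```

With all the weight on one particle, for example `systematic_resample([0.0, 1.0, 0.0])`, every resampled index is `1`. Systematic resampling is often preferred to independent multinomial draws because it needs a single random number per step and introduces less sampling variance.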

Table 1. Performance comparison of four algorithms

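| Algorithm | exa / pixel | eya / pixel | eac / pixel | oar / % | Rs / % |
|---|---|---|---|---|---|
| Proposed method | 1.022 | 3.431 | 3.724 | 83.10 | 80.57 |
| DCO-X | 3.389 | 6.096 | 7.852 | 78.85 | 80.39 |
| UKF | 5.059 | 9.443 | 12.664 | 66.60 | 71.42 |
| PF | 23.022 | 11.884 | 27.806 | 56.63 | 60.61 |

exa, eya: X- and Y-direction errors; eac: center position error; oar: average region overlap; Rs: tracking success rate; UKF: unscented Kalman filter; PF: particle filter.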
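The metrics in Table 1 can be computed per frame from tracked and ground-truth boxes. The text does not define them precisely, so this sketch rests on assumptions: boxes are `(x_min, y_min, x_max, y_max)` in pixels, errors are measured between box centers, region overlap is intersection over union, and a frame counts as a success when the overlap exceeds a hypothetical threshold of 0.5. The function names are illustrative.

```python
import math
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # assumed (x_min, y_min, x_max, y_max) in pixels


def area(r: Box) -> float:
    return (r[2] - r[0]) * (r[3] - r[1])


def overlap(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = area(a) + area(b) - inter
    return inter / union if union > 0 else 0.0


def center(r: Box) -> Tuple[float, float]:
    return (r[0] + r[2]) / 2, (r[1] + r[3]) / 2


def evaluate(tracked: List[Box], truth: List[Box],
             threshold: float = 0.5) -> Dict[str, float]:
    """Average per-frame errors (pixels), overlap (%) and success rate (%).

    The 0.5 success threshold is an assumption, not a value from the paper.
    """
    n = len(truth)
    ex = ey = ec = ov = hits = 0.0
    for t, g in zip(tracked, truth):
        (tx, ty), (gx, gy) = center(t), center(g)
        ex += abs(tx - gx)
        ey += abs(ty - gy)
        ec += math.hypot(tx - gx, ty - gy)
        o = overlap(t, g)
        ov += o
        hits += o > threshold  # counts 1 when the frame is tracked successfully
    return {"exa": ex / n, "eya": ey / n, "eac": ec / n,
            "oar": 100 * ov / n, "Rs": 100 * hits / n}
```

For two frames, one matching the ground truth exactly and one shifted 5 pixels to the right on a 10×10 box, this gives `exa = 2.5`, `eac = 2.5`, an average overlap of about 66.7%, and a success rate of 50%.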
