摘要

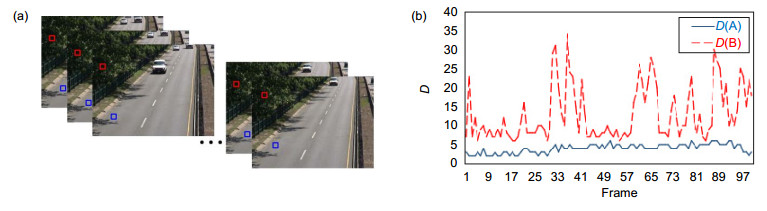

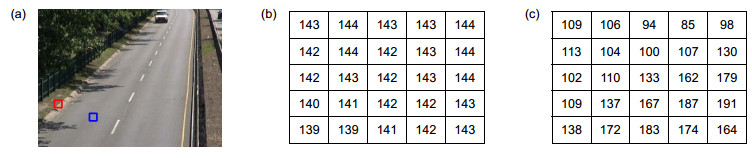

为了构建鲁棒的背景模型和提高前景目标检测的准确性, 综合考虑视频图像在同一位置上像素点的时间相关性和邻域像素的空间相关性, 本文提出一种基于多特征融合的背景建模方法, 用单帧图像中像素的邻域相关性快速建立初始背景模型, 利用视频图像序列像素值、频数、更新时间和自适应敏感度更新背景模型, 有效改善了ghost现象, 减少运动目标的孔洞和假前景。通过多组数据测试, 表明本算法提高了对动态背景、复杂背景的适应性和鲁棒性。

Abstract

In order to build a robust background model and improve the accuracy of detection of foreground objects, the temporal correlation of pixels at the same position of the video image and the spatial correlation of neighboring pixels are considered comprehensively. This paper proposed a background modeling method based on multi-feature fusion. By using the domain correlation of pixels in a single frame image to quickly establish an initial background model whichis updated using pixel values, frequency, update time and sensitivity of the video image sequence, the ghost phenomenon is effectively improved and the holes and false prospects for moving targets are reduced. Through multiple sets of data tests, it shows that the algorithm improves the adaptability and robustness of dynamic background and complex background.

Key words:

- multi-feature joint matching

- motion detection

- background modeling

Overview

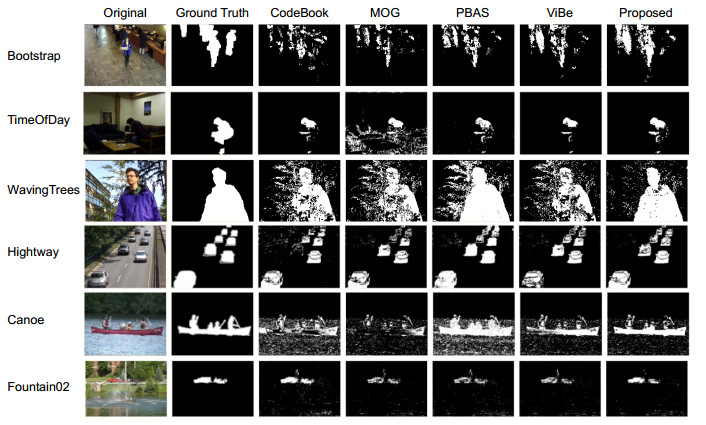

Background modeling for moving targets is one of the research focal points and difficulties in machine vision and intelligent video processing. Its goal is to extract the changed regions from a video sequence and detect moving targets effectively, which plays an important role in follow-up research such as object tracking and target classification, and in higher-level applications such as behavior analysis and behavior understanding.

Commonly used detection methods include the frame difference method, the optical flow method, and the background difference method. The background difference method has low overhead, high speed, high accuracy, and accurate target extraction, and it has become the most common method for detecting moving targets. Its detection performance depends mainly on a robust background model: the algorithms used to establish and update the background model directly affect the final detection result.

To build a robust background model and improve the accuracy of foreground detection, this paper proposes a background modeling method based on multi-feature fusion that jointly considers the temporal correlation of pixels at the same location in the video and the spatial correlation of pixels in the neighborhood. The initial background model is established rapidly from the first frame of the video sequence, which reduces the complexity of sampling during modeling. The background model is then updated using the pixel values, frequency, update time, and adaptive sensitivity of the video image sequence. The adaptive sensitivity uses pixel-level feedback from the background to obtain a sensitivity suited to regions of different complexity. Background regions of high complexity receive a high sensitivity, which avoids generating false foreground; background regions of low complexity receive a lower sensitivity, which reduces the misidentification of background points. By combining multiple features, the algorithm effectively alleviates the ghost phenomenon and reduces both the holes in moving foreground objects and the false foreground caused by pixel drift.

To verify the effectiveness and practicability of the proposed algorithm, four background modeling algorithms (CodeBook, MOG, PBAS, and ViBe) were selected for comparison experiments. The experiments used Bootstrap, TimeOfDay, and WavingTrees from the Microsoft Wallflower dataset and highway, canoe, and fountain02 from the CDNet2014 dataset, grouped into three types of test scene: indoor, outdoor, and complex backgrounds. The test results show that the algorithm improves adaptability and robustness to dynamic and complex backgrounds.
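The single-frame initialization and sample-based classification described above can be illustrated with a minimal sketch. It is not the authors' implementation: it follows the neighborhood-sampling idea popularized by ViBe, and the function names, `n_samples`, `radius`, `min_matches`, and the threshold values are assumptions chosen for illustration. The per-pixel `threshold` array only stands in for the adaptive sensitivity; how the paper derives it from feedback is not specified in this overview.

```python
import numpy as np


def init_background_model(frame, n_samples=20, radius=1, seed=0):
    """Build per-pixel sample sets from a single grayscale frame.

    Each sample of a pixel is copied from a randomly chosen pixel in its
    (2*radius+1) x (2*radius+1) neighborhood, relying on spatial correlation.
    Returns an array of shape (n_samples, height, width).
    """
    rng = np.random.default_rng(seed)
    h, w = frame.shape
    # Edge padding lets border pixels sample a full-size neighborhood.
    padded = np.pad(frame, radius, mode="edge")
    ys, xs = np.mgrid[0:h, 0:w]
    model = np.empty((n_samples, h, w), dtype=frame.dtype)
    for k in range(n_samples):
        # Offsets in [0, 2*radius] index the padded image, which is
        # equivalent to offsets in [-radius, radius] in the original frame.
        dy = rng.integers(0, 2 * radius + 1, size=(h, w))
        dx = rng.integers(0, 2 * radius + 1, size=(h, w))
        model[k] = padded[ys + dy, xs + dx]
    return model


def classify_foreground(frame, model, threshold, min_matches=2):
    """Return a boolean mask that is True where a pixel is foreground.

    A pixel counts as background when at least `min_matches` of its samples
    lie within `threshold` of the current value. `threshold` may be a scalar
    or a per-pixel (height, width) array.
    """
    diff = np.abs(model.astype(np.int32) - frame.astype(np.int32))
    matches = (diff <= threshold).sum(axis=0)
    return matches < min_matches
```

A per-pixel threshold can be passed as `np.full(frame.shape, 20)` and then raised in regions with dynamic background, which is one simple way to mimic region-dependent sensitivity.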


Table 1. Comparison of the processing speed of the five algorithms

| Method | WavingTrees MT | WavingTrees HT | Bootstrap MT | Bootstrap HT | Highway MT | Highway HT | Fountain02 MT | Fountain02 HT |
|---|---|---|---|---|---|---|---|---|
| Codebook | 0.6 | 0.03 | 0.66 | 0.03 | 1.28 | 0.07 | 1.45 | 0.09 |
| MOG | 2.30 | 0.29 | 2.29 | 0.27 | 8.45 | 1.21 | 9.12 | 1.65 |
| ViBe | 0.11 | 0.03 | 0.10 | 0.02 | 0.48 | 0.13 | 0.56 | 0.21 |
| PBAS | 0.09 | 0.37 | 0.10 | 0.35 | 0.33 | 0.56 | 0.48 | 0.63 |
| Proposed | 0.05 | 0.07 | 0.05 | 0.07 | 0.22 | 0.23 | 0.29 | 0.33 |

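Table 2. Accuracy verification in ordinary scenes

| Method | Bootstrap RE | Bootstrap PR | Bootstrap ηPWC | TimeOfDay RE | TimeOfDay PR | TimeOfDay ηPWC | WavingTrees RE | WavingTrees PR | WavingTrees ηPWC | Highway RE | Highway PR | Highway ηPWC |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Codebook | 0.37 | 0.63 | 11 | 0.37 | 1 | 4.3 | 0.77 | 0.73 | 16 | 0.7 | 0.89 | 4.8 |
| MOG | 0.29 | 0.63 | 12 | 0.49 | 0.31 | 11 | 0.7 | 0.69 | 19 | 0.75 | 0.96 | 3.5 |
| PBAS | 0.39 | 0.57 | 12 | 0.4 | 0.98 | 4.1 | 1 | 0.68 | 15 | 0.92 | 0.92 | 2.1 |
| ViBe | 0.4 | 0.53 | 13 | 0.32 | 1 | 4.7 | 0.65 | 0.69 | 20 | 0.71 | 0.94 | 4.3 |
| Proposed | 0.58 | 0.57 | 11 | 0.42 | 1 | 3.9 | 0.96 | 0.88 | 5.3 | 0.81 | 0.98 | 2.6 |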

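Table 3. Accuracy verification in complex scenes

| Method | Canoe RE | Canoe PR | Canoe ηPWC | Fountain02 RE | Fountain02 PR | Fountain02 ηPWC |
|---|---|---|---|---|---|---|
| Codebook | 0.53 | 0.34 | 16 | 0.93 | 0.58 | 1.6 |
| MOG | 0.3 | 0.5 | 11 | 0.61 | 0.71 | 1.3 |
| PBAS | 0.95 | 0.41 | 15 | 0.58 | 0.59 | 1.7 |
| ViBe | 0.71 | 0.66 | 6.9 | 0.61 | 0.68 | 1.4 |
| Proposed | 0.83 | 0.73 | 5.1 | 0.81 | 0.76 | 0.94 |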
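The accuracy columns are not defined in this section. Assuming RE, PR, and ηPWC denote recall, precision, and the percentage of wrong classifications as defined for the CDnet benchmark, they can be computed from a predicted foreground mask and a ground-truth mask as in the sketch below. The function name `detection_metrics` is hypothetical, and returning 0.0 for an undefined ratio is a convention chosen here, not something taken from the paper.

```python
import numpy as np


def detection_metrics(pred, truth):
    """Return (recall, precision, pwc) for two boolean foreground masks.

    recall    = TP / (TP + FN)
    precision = TP / (TP + FP)
    pwc       = 100 * (FP + FN) / (TP + FP + FN + TN)
    """
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))    # foreground correctly detected
    fp = int(np.sum(pred & ~truth))   # background reported as foreground
    fn = int(np.sum(~pred & truth))   # foreground missed
    tn = int(np.sum(~pred & ~truth))  # background correctly rejected
    # Assumed convention: an undefined ratio is reported as 0.0.
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    pwc = 100.0 * (fp + fn) / (tp + fp + fn + tn)
    return recall, precision, pwc
```

Under these definitions, higher RE and PR are better, while lower ηPWC is better.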

